当前位置:网站首页>Hudi 快速体验使用(含操作详细步骤及截图)

Hudi 快速体验使用(含操作详细步骤及截图)

2022-07-03 09:00:00 【小胡今天有变强吗】

Hudi 快速体验使用

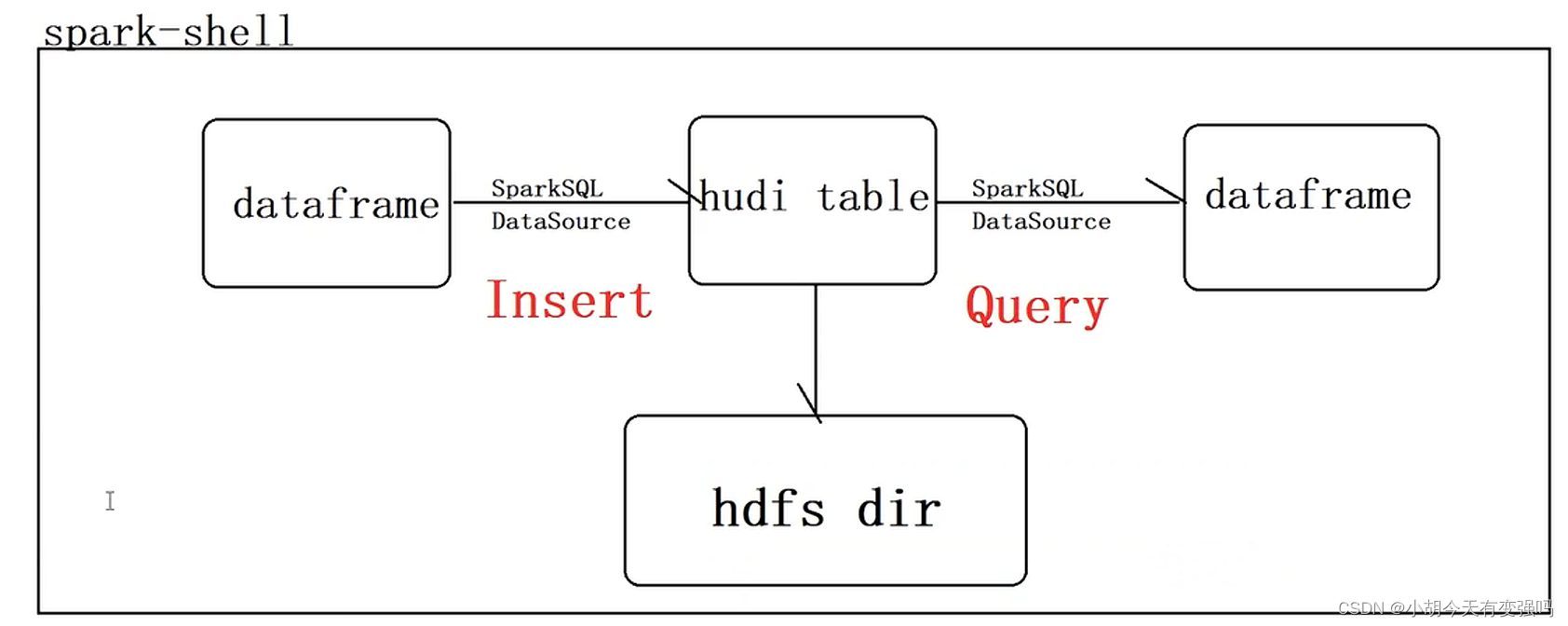

本示例要完成下面的流程:

需要提前安装好hadoop、spark以及hudi及组件。

spark 安装教程:

https://blog.csdn.net/hshudoudou/article/details/125204028?spm=1001.2014.3001.5501

hudi 编译与安装教程:

https://blog.csdn.net/hshudoudou/article/details/123881739?spm=1001.2014.3001.5501

注意只Hudi管理数据,不存储数据,不分析数据。

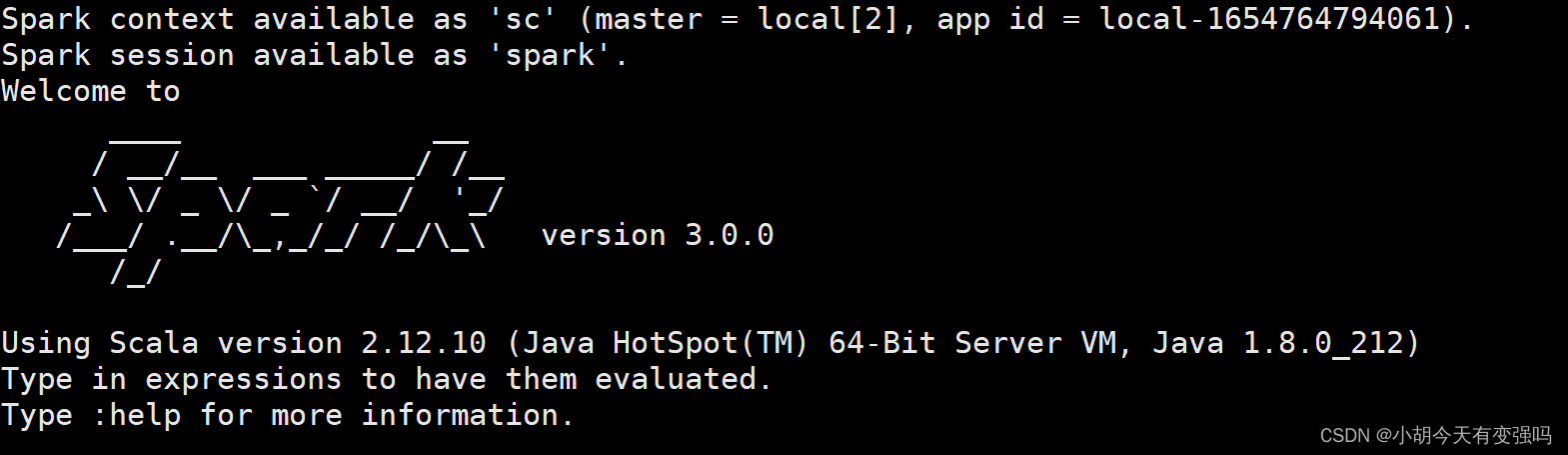

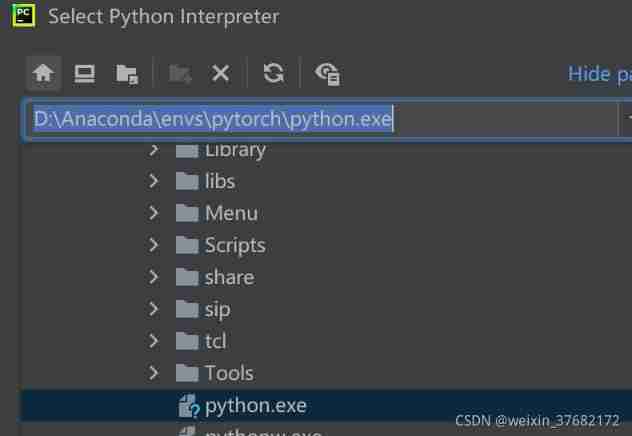

启动 spark-shel l添加 jar 包

./spark-shell \

--master local[2] \

--jars /home/hty/hudi-jars/hudi-spark3-bundle_2.12-0.9.0.jar,\

/home/hty/hudi-jars/spark-avro_2.12-3.0.1.jar,/home/hty/hudi-jars/spark_unused-1.0.0.jar.jar \

--conf "spark.serializer=org.apache.spark.serializer.KryoSerializer"

可以看到三个 jar 包都上传成功:

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-ljXalTIm-1654780395209)(C:\Users\Husheng\Desktop\大数据框架学习\image-20220609165739566.png)]](/img/4e/23f4b3aca8c7a6873cbec44a13e746.png)

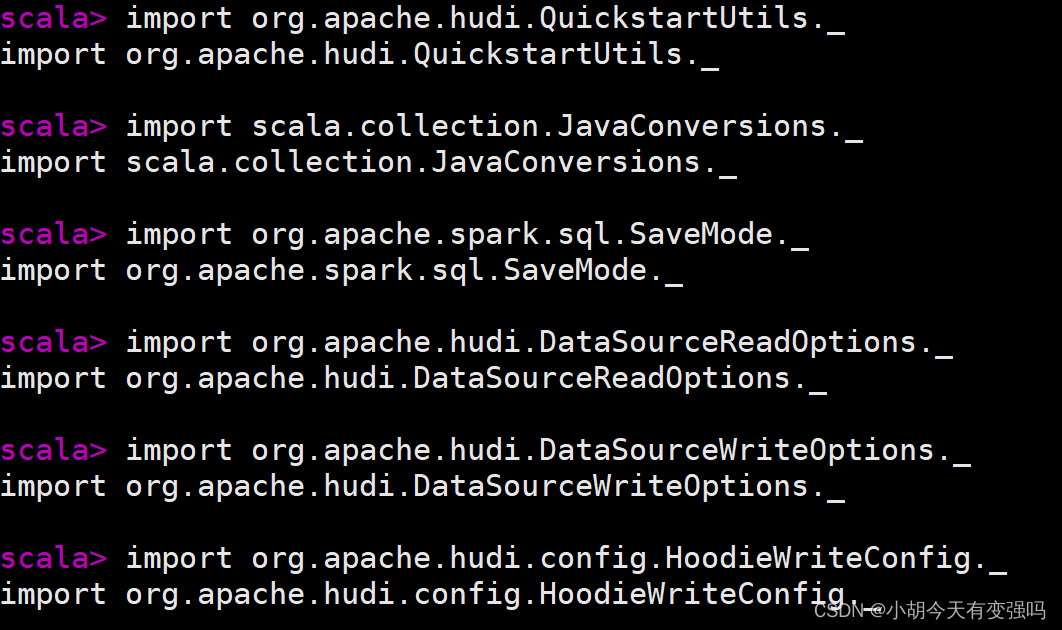

导包并设置存储目录:

import org.apache.hudi.QuickstartUtils._

import scala.collection.JavaConversions._

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.config.HoodieWriteConfig._

val tableName = "hudi_trips_cow"

val basePath = "hdfs://hadoop102:8020/datas/hudi-warehouse/hudi_trips_cow"

val dataGen = new DataGenerator

模拟产生Trip乘车数据

val inserts = convertToStringList(dataGen.generateInserts(10))

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-dd2CZtFP-1654780395209)(C:\Users\Husheng\Desktop\大数据框架学习\image-20220609171909589.png)]](/img/55/8bb7afe823c468b768ef2f5518c245.png)

3.将模拟数据List转换为DataFrame数据集

val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))

4.查看转换后DataFrame数据集的Schema信息

5.选择相关字段,查看模拟样本数据

df.select("rider", "begin_lat", "begin_lon", "driver", "fare", "uuid", "ts").show(10, truncate=false)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-q01Acwo7-1654780395209)(C:\Users\Husheng\Desktop\大数据框架学习\image-20220609172907830.png)]](/img/3e/23e59af23e0446eff5ce2e6d120f01.png)

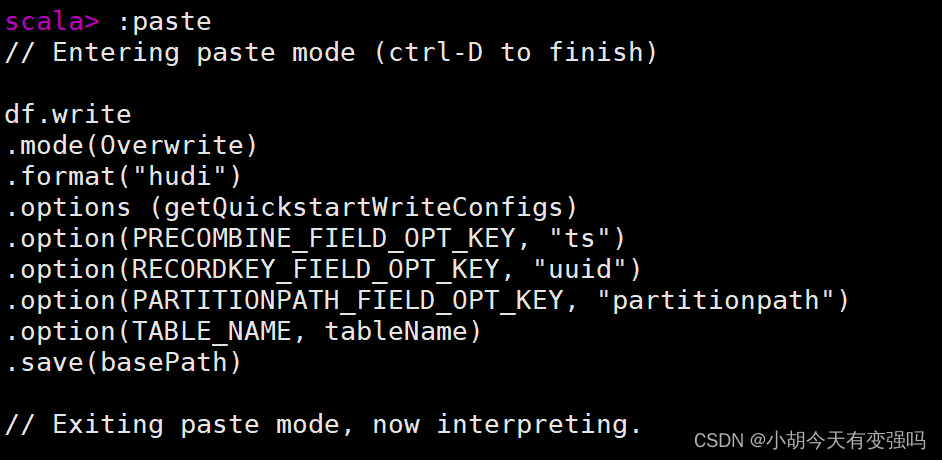

插入数据

将模拟产生Trip数据,保存到Hudi表中,由于Hudi诞生时基于Spark框架,所以SparkSQL支持Hudi数据源,直接通 过format指定数据源Source,设置相关属性保存数据即可。

df.write

.mode(Overwrite)

.format("hudi")

.options (getQuickstartWriteConfigs)

.option(PRECOMBINE_FIELD_OPT_KEY, "ts")

.option(RECORDKEY_FIELD_OPT_KEY, "uuid")

.option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath")

.option(TABLE_NAME, tableName)

.save(basePath)

getQuickstartWriteConfigs,设置写入/更新数据至Hudi时,Shuffle时分区数目

PRECOMBINE_FIELD_OPT_KEY,数据合并时,依据主键字段

RECORDKEY_FIELD_OPT_KEY,每条记录的唯一id,支持多个字段

PARTITIONPATH_FIELD_OPT_KEY,用于存放数据的分区字段

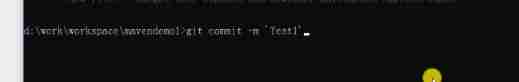

paste模式,粘贴完按ctrl + d 执行。

Hudi表数据存储在HDFS上,以PARQUET列式方式存储的

从Hudi表中读取数据,同样采用SparkSQL外部数据源加载数据方式,指定format数据源和相关参数options:

val tripSnapshotDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

其中指定Hudi表数据存储路径即可,采用正则Regex匹配方式,由于保存Hudi表属于分区表,并且为三级分区(相 当于Hive中表指定三个分区字段),使用表达式://// 加载所有数据。

查看表结构:

tripSnapshotDF.printSchema()

比原先保存到Hudi表中数据多5个字段,这些字段属于Hudi管理数据时使用的相关字段。

将获取Hudi表数据DataFrame注册为临时视图,采用SQL方式依据业务查询分析数据:

tripSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

利用sqark SQL查询

spark.sql("select fare, begin_lat, begin_lon, ts from hudi_trips_snapshot where fare > 20.0").show()

查看新增添的几个字段:

spark.sql("select _hoodie_commit_time, _hoodie_record_key, _hoodie_partition_path, _hoodie_file_name from hudi_trips_snapshot").show()

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-hbKEhmlv-1654780395210)(C:\Users\Husheng\Desktop\大数据框架学习\image-20220609204702530.png)]](/img/95/1074f107be182b59b65c0ee7f35467.png)

这几个新增添的字段就是 hudi 对表进行管理而增添的字段。

参考资料:

边栏推荐

- 2022-2-13 learning xiangniuke project - version control

- What is the difference between sudo apt install and sudo apt -get install?

- Crawler career from scratch (3): crawl the photos of my little sister ③ (the website has been disabled)

- 2022-2-14 learning xiangniuke project - Session Management

- [graduation season | advanced technology Er] another graduation season, I change my career as soon as I graduate, from animal science to programmer. Programmers have something to say in 10 years

- 2022-2-13 learning the imitation Niuke project - home page of the development community

- Jenkins learning (II) -- setting up Chinese

- [point cloud processing paper crazy reading frontier version 10] - mvtn: multi view transformation network for 3D shape recognition

- [point cloud processing paper crazy reading cutting-edge version 12] - adaptive graph revolution for point cloud analysis

- Wonderful review | i/o extended 2022 activity dry goods sharing

猜你喜欢

What are the stages of traditional enterprise digital transformation?

LeetCode 75. 颜色分类

Move anaconda, pycharm and jupyter notebook to mobile hard disk

2022-2-13 learning xiangniuke project - version control

Sword finger offer II 091 Paint the house

LeetCode 30. 串联所有单词的子串

excel一小时不如JNPF表单3分钟,这样做报表,领导都得点赞!

State compression DP acwing 291 Mondrian's dream

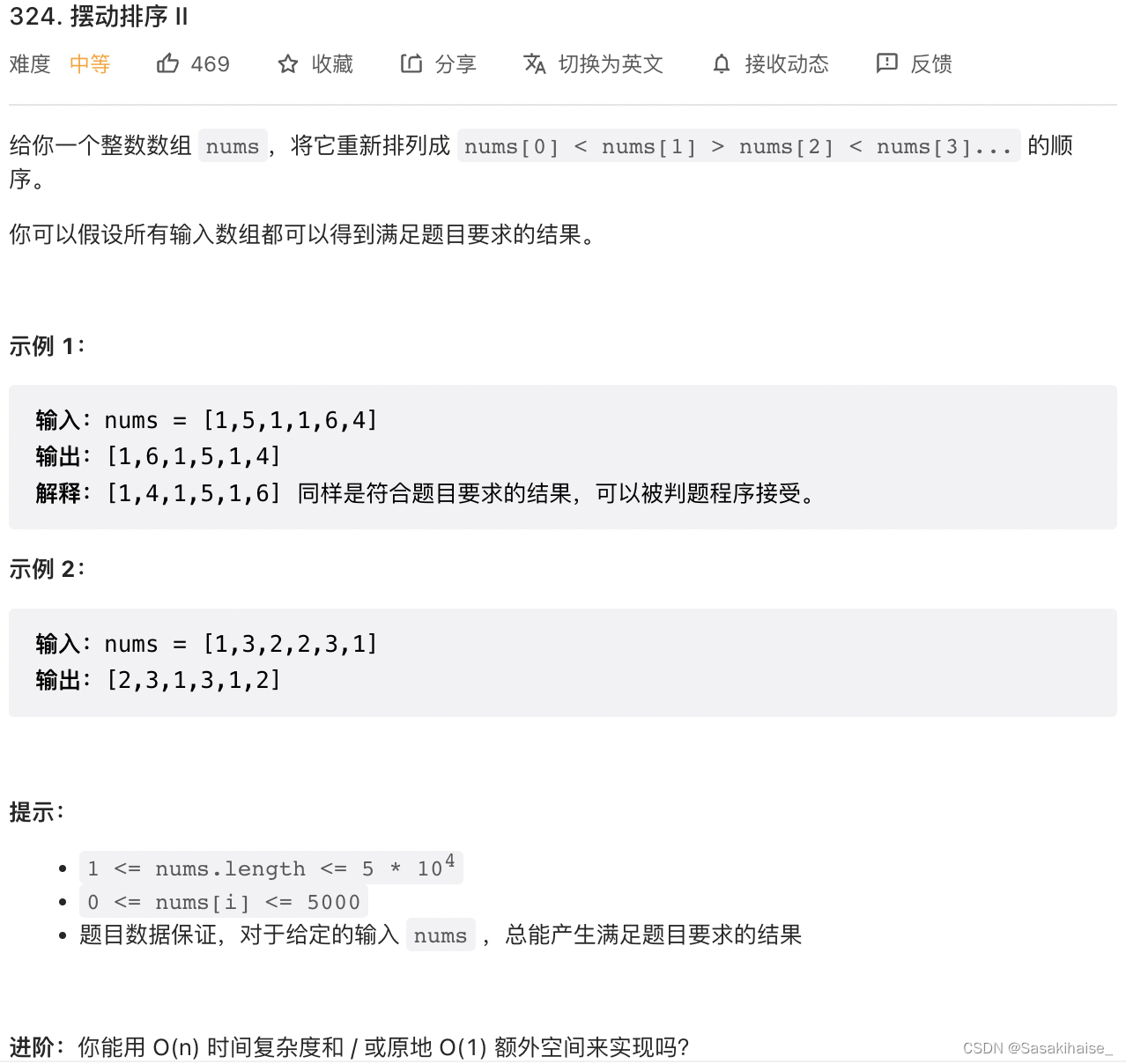

LeetCode 324. Swing sort II

LeetCode 508. 出现次数最多的子树元素和

随机推荐

图像修复方法研究综述----论文笔记

[point cloud processing paper crazy reading frontier edition 13] - gapnet: graph attention based point neural network for exploring local feature

LeetCode 75. 颜色分类

Build a solo blog from scratch

[point cloud processing paper crazy reading classic version 9] - pointwise revolutionary neural networks

State compression DP acwing 91 Shortest Hamilton path

LeetCode 241. Design priorities for operational expressions

低代码起势,这款信息管理系统开发神器,你值得拥有!

【Kotlin学习】运算符重载及其他约定——重载算术运算符、比较运算符、集合与区间的约定

传统办公模式的“助推器”,搭建OA办公系统,原来就这么简单!

干货!零售业智能化管理会遇到哪些问题?看懂这篇文章就够了

剑指 Offer II 029. 排序的循环链表

Data mining 2021-4-27 class notes

Explanation of the answers to the three questions

Overview of database system

LeetCode 324. Swing sort II

With low code prospect, jnpf is flexible and easy to use, and uses intelligence to define a new office mode

Introduction to the usage of getopts in shell

State compression DP acwing 291 Mondrian's dream

The "booster" of traditional office mode, Building OA office system, was so simple!