
TensorFlow: tf.ConfigProto() and Session

2022-08-04 06:06:00 【Rhubarb cat. 1】

Reposted from: TensorFlow tips: tf.ConfigProto() and Session

Here we also build a small neural network with TensorFlow. The code is as follows:

# Complete neural network (written for the TensorFlow 1.x API)
import tensorflow as tf  # import the tensorflow module
import numpy as np  # import the numpy module

BATCH_SIZE = 8  # number of samples fed to the network per training step
seed = 23455  # fixed seed, so the same random numbers are produced on every run

# Create a random number generator based on seed
rng = np.random.RandomState(seed)

# Generate a 32x2 matrix of random numbers: 32 samples with 2 features each, used as input
X = rng.rand(32, 2)

# For each row of X, set the label to 1 if x0 + x1 < 1, otherwise set it to 0.
# These labels are the ground truth (the correct answers) for the dataset.
Y = [[int(x0 + x1 < 1)] for (x0, x1) in X]

print('X:\n', X)
print('Y:\n', Y)

# 1. Define the network's inputs, parameters, and output: two input features x and one label y_
x = tf.placeholder(tf.float32, shape=(None, 2))  # placeholder for a float32 tensor with n rows and 2 columns: n sets of features
y_ = tf.placeholder(tf.float32, shape=(None, 1))  # placeholder for a float32 tensor with n rows and 1 column: n labels
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))  # first layer: 2x3 matrix of normally distributed random numbers, standard deviation 1, random seed 1
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))  # second layer: 3x1 matrix of normally distributed random numbers, standard deviation 1, random seed 1

# 2. Define the forward propagation
a = tf.matmul(x, w1)  # pass x through the first layer: matrix multiplication with w1
y = tf.matmul(a, w2)  # pass a through the second layer: matrix multiplication with w2; y is the output of the last layer

# 3. Define the loss function and the backpropagation method
loss = tf.reduce_mean(tf.square(y - y_))  # mean squared error between prediction and label
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)  # gradient descent optimizer, learning rate 0.001

# 4. Create a session and train for STEPS rounds
sess = tf.Session()
init_op = tf.global_variables_initializer()
sess.run(init_op)

# Print the current (untrained) parameter values
# print('w1:', sess.run(w1))
# print('w2:', sess.run(w2))

# Train the model
STEPS = 5000  # train for 5000 rounds
for i in range(STEPS):
    start = (i * BATCH_SIZE) % 32
    end = start + BATCH_SIZE
    # Take the matching slice of the dataset and labels and feed it into the network
    sess.run(train_step, feed_dict={x: X[start:end], y_: Y[start:end]})
    if i % 500 == 0:  # print the loss every 500 rounds
        total_loss = sess.run(loss, feed_dict={x: X, y_: Y})
        print('After %d training rounds, the loss on all data is: %g' % (i, total_loss))

# Print the parameter values after training
print('Parameter values after training:')
print('w1:\n', sess.run(w1))
print('w2:\n', sess.run(w2))

The output is as follows:

X:
 [[0.83494319 0.11482951]
 [0.66899751 0.46594987]
 [0.60181666 0.58838408]
 [0.31836656 0.20502072]
 [0.87043944 0.02679395]
 [0.41539811 0.43938369]
 [0.68635684 0.24833404]
 [0.97315228 0.68541849]
 [0.03081617 0.89479913]
 [0.24665715 0.28584862]
 [0.31375667 0.47718349]
 [0.56689254 0.77079148]
 [0.7321604  0.35828963]
 [0.15724842 0.94294584]
 [0.34933722 0.84634483]
 [0.50304053 0.81299619]
 [0.23869886 0.9895604 ]
 [0.4636501  0.32531094]
 [0.36510487 0.97365522]
 [0.73350238 0.83833013]
 [0.61810158 0.12580353]
 [0.59274817 0.18779828]
 [0.87150299 0.34679501]
 [0.25883219 0.50002932]
 [0.75690948 0.83429824]
 [0.29316649 0.05646578]
 [0.10409134 0.88235166]
 [0.06727785 0.57784761]
 [0.38492705 0.48384792]
 [0.69234428 0.19687348]
 [0.42783492 0.73416985]
 [0.09696069 0.04883936]]
Y:
 [[1], [0], [0], [1], [1], [1], [1], [0], [1], [1], [1], [0], [0], [0], [0], [0], [0], [1], [0], [0], [1], [1], [0], [1], [0], [1], [1], [1], [1], [1], [0], [1]]
After 0 training rounds, the loss on all data is: 5.13118
After 500 training rounds, the loss on all data is: 0.429111
After 1000 training rounds, the loss on all data is: 0.409789
After 1500 training rounds, the loss on all data is: 0.399923
After 2000 training rounds, the loss on all data is: 0.394146
After 2500 training rounds, the loss on all data is: 0.390597
After 3000 training rounds, the loss on all data is: 0.388336
After 3500 training rounds, the loss on all data is: 0.386855
After 4000 training rounds, the loss on all data is: 0.385863
After 4500 training rounds, the loss on all data is: 0.385186
Parameter values after training:
w1:
 [[-0.69597054  0.8599247   0.0933773 ]
 [-2.3418374  -0.12466972  0.5866561 ]]
w2:
 [[-0.07531644]
 [ 0.8627887 ]
 [-0.05937821]]

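Summary:

The standard recipe for implementing a neural network has four parts: preparation, forward propagation, backpropagation, and iteration.

Preparation:

import the relevant modules

define constants

generate the dataset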
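The preparation step can be sketched with NumPy alone. The seed, the 32x2 shape, and the labeling rule come from the listing above; the function name `make_dataset` and its parameters are hypothetical, introduced only for this illustration.

```python
import numpy as np

def make_dataset(n_samples=32, seed=23455):
    """Hypothetical helper: build the toy dataset used in this post."""
    rng = np.random.RandomState(seed)   # fixed seed gives reproducible data
    X = rng.rand(n_samples, 2)          # n_samples rows, 2 features in [0, 1)
    # Label is 1 when the two features sum to less than 1, otherwise 0.
    Y = [[int(x0 + x1 < 1)] for (x0, x1) in X]
    return X, Y

X, Y = make_dataset()
print(X.shape, len(Y))
```

Wrapping the three preparation lines in a function is a design choice made here, not something the original code does; it simply keeps the constants in one place.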

Forward propagation:

Define the inputs

x =

y_ =

Define the (network layer) parameters:

w1 =

w2 =

Define the inference (forward) computation

a =

y =
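To make the shapes in the forward pass concrete, here is a minimal NumPy sketch of the same two matrix multiplications. It is not the TensorFlow graph itself: the batch of 4 samples and the seed 0 are assumptions chosen for the demonstration, and the weights are not the ones `tf.random_normal` would produce.

```python
import numpy as np

rng = np.random.RandomState(0)   # assumption: any seed works for a shape demo
x = rng.rand(4, 2)               # 4 samples, 2 features each
w1 = rng.randn(2, 3)             # first layer: 2 inputs -> 3 hidden units
w2 = rng.randn(3, 1)             # second layer: 3 hidden units -> 1 output

a = x @ w1                       # hidden layer values, shape (4, 3)
y = a @ w2                       # network output, shape (4, 1)
print(a.shape, y.shape)
```

Because there is no activation function between the layers, the network as written is linear: `y` equals `x @ (w1 @ w2)`.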

Backpropagation:

Define the loss function

loss =

Define the backpropagation method

train_step =
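What the optimizer does for this network can be approximated by hand. The sketch below computes the mean squared error and its gradients for the two-layer linear network in NumPy, then applies one full-batch gradient descent step with the learning rate 0.001 used above. The helper name `loss_and_grads` is hypothetical, and the NumPy initial weights are an assumption: they do not reproduce the values generated by `tf.random_normal(seed=1)`.

```python
import numpy as np

def loss_and_grads(x, y_true, w1, w2):
    """Mean squared error of y = (x @ w1) @ w2 and its gradients."""
    a = x @ w1
    y = a @ w2
    diff = y - y_true
    loss = np.mean(diff ** 2)
    d_y = 2.0 * diff / diff.size      # derivative of the mean of squares w.r.t. y
    grad_w2 = a.T @ d_y               # chain rule through the second layer
    grad_w1 = x.T @ (d_y @ w2.T)      # chain rule through both layers
    return loss, grad_w1, grad_w2

rng = np.random.RandomState(23455)
X = rng.rand(32, 2)
Y = (X.sum(axis=1, keepdims=True) < 1).astype(np.float64)

init = np.random.RandomState(1)       # assumption: not TensorFlow's initial values
w1 = init.randn(2, 3)
w2 = init.randn(3, 1)

lr = 0.001                            # learning rate from the original code
loss_before, g1, g2 = loss_and_grads(X, Y, w1, w2)
w1 -= lr * g1                         # one gradient descent update
w2 -= lr * g2
loss_after, _, _ = loss_and_grads(X, Y, w1, w2)
print(loss_before, loss_after)
```

In the TensorFlow version, `minimize(loss)` derives these gradients automatically from the graph, so none of this needs to be written by hand.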

Iteration: create a session and train for STEPS rounds

sess = tf.Session()
init_op = tf.global_variables_initializer()
sess.run(init_op)

# Train the model
STEPS = 5000  # train for 5000 rounds
for i in range(STEPS):
    start =
    end =
    sess.run(train_step, feed_dict)
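The `start` and `end` expressions in the training loop cycle through the dataset in fixed windows. A small plain-Python sketch shows which slice each step receives; the helper name `batch_bounds` and the constant `N_SAMPLES` are hypothetical, while the arithmetic is the one used in the full listing.

```python
BATCH_SIZE = 8
N_SAMPLES = 32   # number of rows in X

def batch_bounds(step, batch_size=BATCH_SIZE, n_samples=N_SAMPLES):
    """Hypothetical helper: slice bounds for the mini-batch at a given step."""
    start = (step * batch_size) % n_samples   # wraps back to 0 after the last window
    end = start + batch_size
    return start, end

for step in range(6):
    print(step, batch_bounds(step))
```

The windows line up cleanly here because 32 is a multiple of 8. With a dataset size that is not a multiple of the batch size, a slice near the end of the data would come up short.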

边栏推荐

猜你喜欢

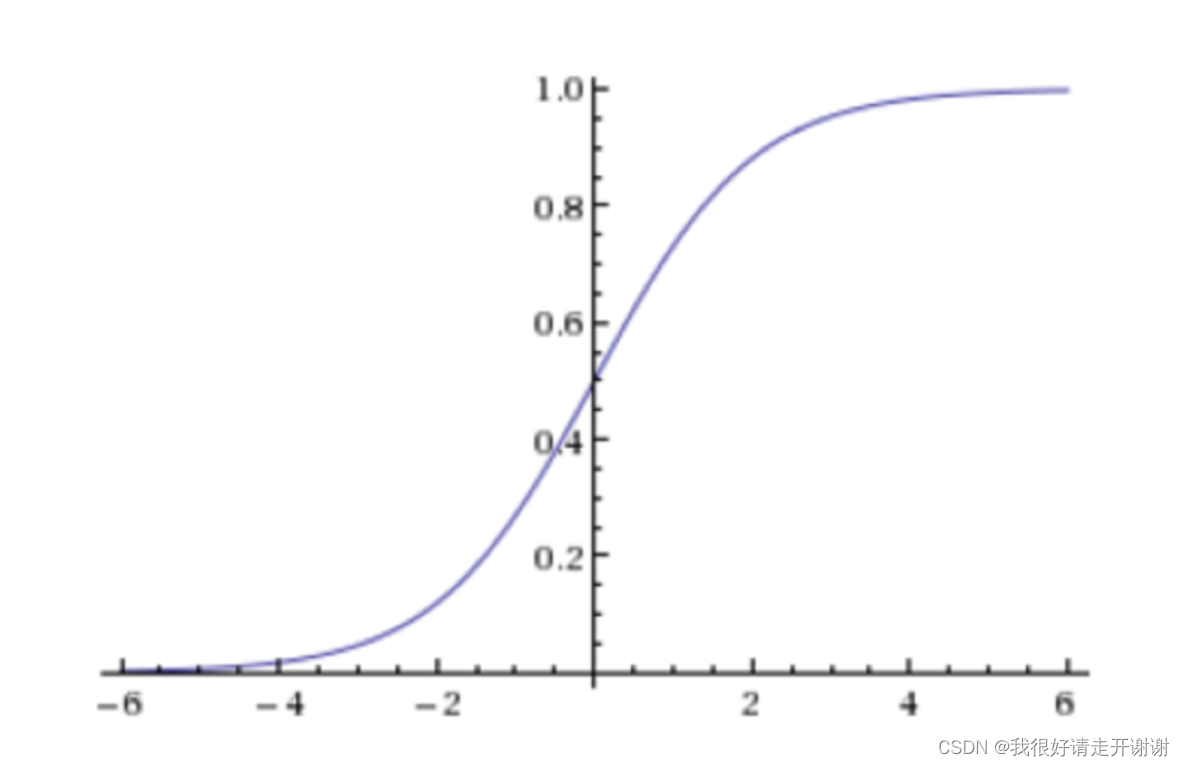

Logistic Regression --- Introduction, API Introduction, Case: Cancer Classification Prediction, Classification Evaluation, and ROC Curve and AUC Metrics

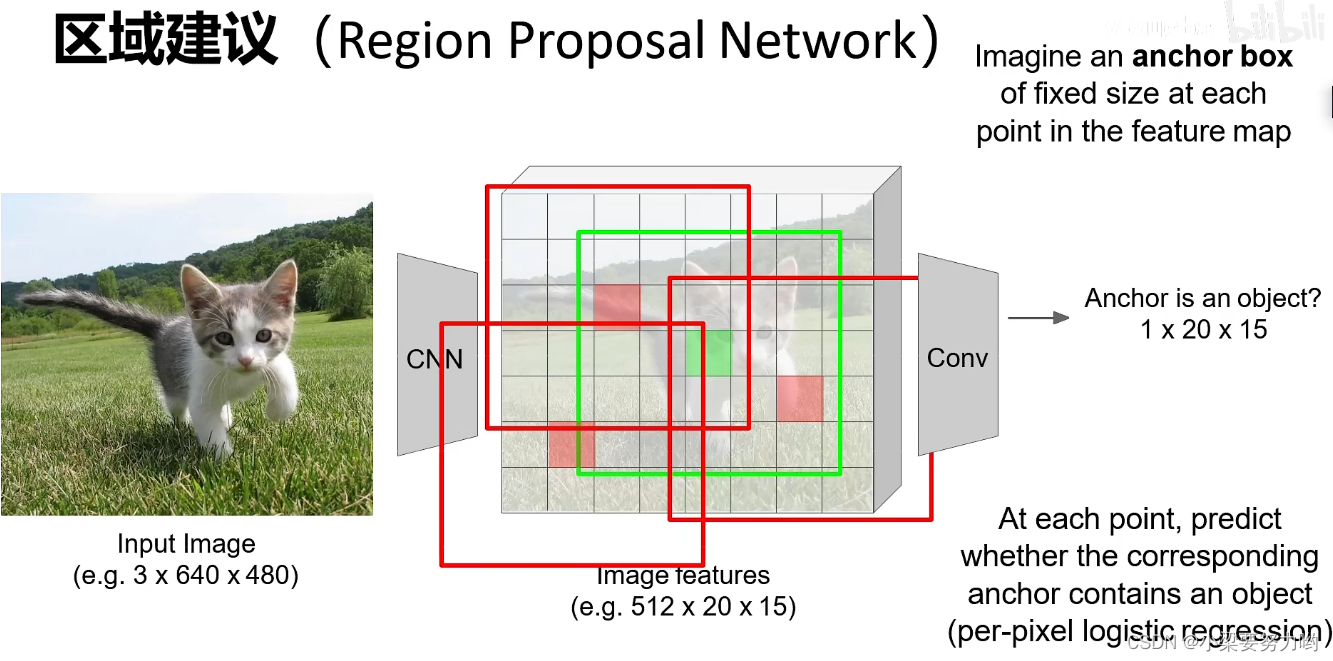

【CV-Learning】目标检测&实例分割

flink-sql大量使用案例

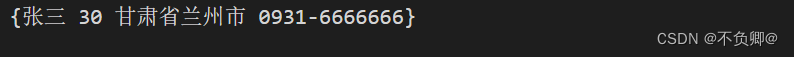

【go语言入门笔记】13、 结构体(struct)

攻防世界MISC———Dift

with recursive用法

win云服务器搭建个人博客失败记录(wordpress,wamp)

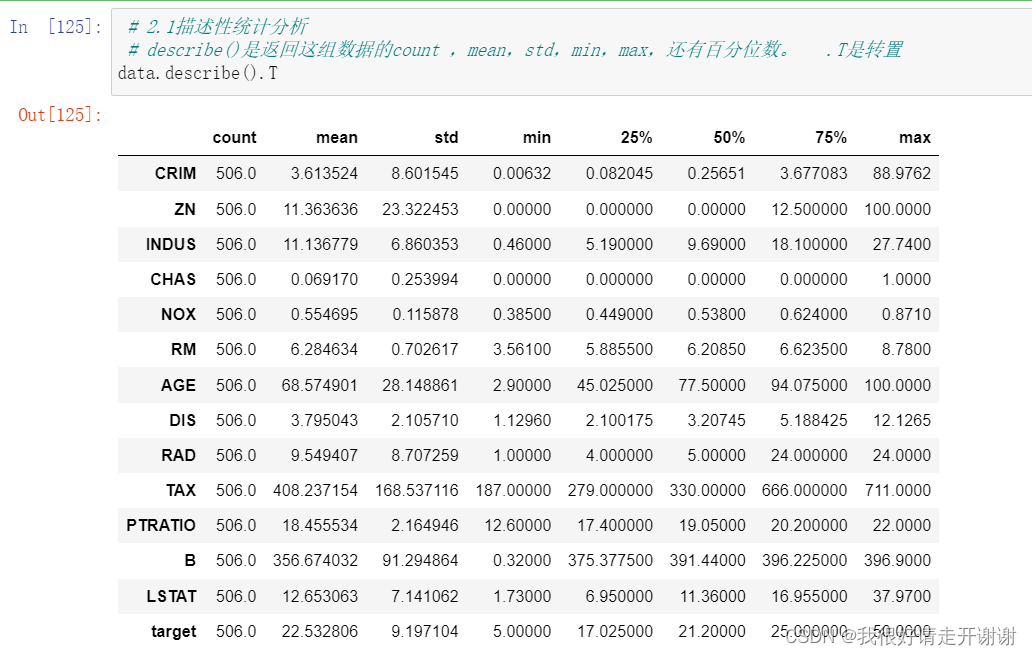

Linear Regression 02---Boston Housing Price Prediction

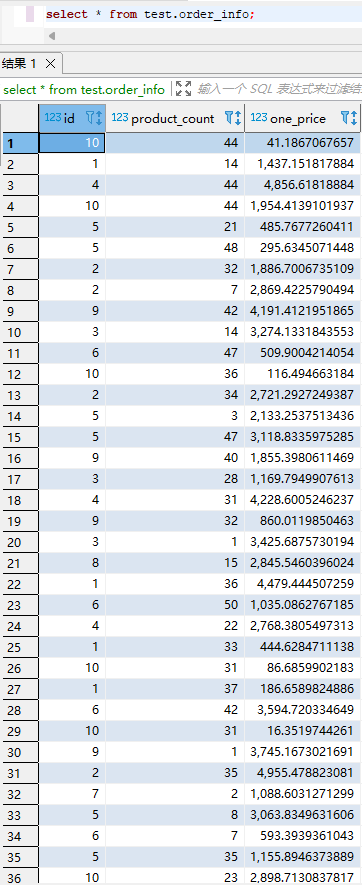

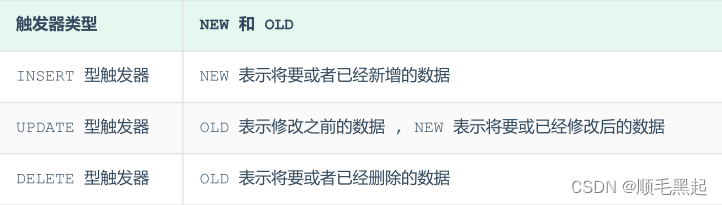

视图、存储过程、触发器

(六)递归

随机推荐

【深度学习21天学习挑战赛】2、复杂样本分类识别——卷积神经网络(CNN)服装图像分类

[Introduction to go language] 12. Pointer

flink-sql查询配置与性能优化参数详解

(九)哈希表

Thoroughly understand box plot analysis

NFT市场以及如何打造一个NFT市场

flink-sql所有数据类型

WARNING: sql version 9.2, server version 11.0. Some psql features might not work.

安卓连接mysql数据库,使用okhttp

[Deep Learning 21-Day Learning Challenge] 3. Use a self-made dataset - Convolutional Neural Network (CNN) Weather Recognition

攻防世界MISC———Dift

读研碎碎念

MySQL最左前缀原则【我看懂了hh】

TensorFlow:tf.ConfigProto()与Session

CAS与自旋锁、ABA问题

postgresql 游标(cursor)的使用

flink sql left join数据倾斜问题解决

Vision Transformer 论文 + 详解( ViT )

TensorFlow2 study notes: 6. Overfitting and underfitting, and their mitigation solutions

数据库根据提纲复习