
Relationship between business policies, business rules, business processes and business master data - modern analysis

2020-11-06 01:19:00 【On jdon】

Data quality is critical to the proper operation of an organization's systems. In most countries and regions, there is a legal obligation to ensure that the quality of data in those systems (especially financial systems) remains at a high level.

For example, paragraph 51 of the Australian Prudential Regulation Authority's [APRA] prudential practice guide CPG 235, "Managing Data Risk", states:

Data validation is the assessment of data against business rules to determine whether it is fit for further processing. It is a key set of controls for ensuring that data meets quality requirements.

In the life of an organization, there are times when a lack of data quality, or a lack of evidence of data quality, can rise to the level of a threat to the organization, for example when:

- Poor data quality harms customers, especially once it becomes public knowledge.

- Regulators take enforcement action.

- The organization engages in M&A activity.

- Core systems are migrated.

- Poor data quality hinders the enterprise's ability to respond to compelling market forces in a timely manner.

So, what is data quality?

Data quality is a measure of how well data meets business rules:

- If the data comes from an external source, by validating it; or,

- If the data is derived internally, by confirming its correctness.

Therefore, you cannot measure data quality unless you have rules that can readily be applied as the standard; and if you do not have "master rules", you cannot have "master data".

However, no organization that we know of has implemented the concept of "master rules" in any practical sense.
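As a minimal sketch of the definition above, the following Python treats data quality as the fraction of rule checks that the data passes. The record layout, the two rules, and the names `Rule`, `quality_score`, and `failures` are hypothetical and exist only for illustration; they are not taken from the original article.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical record layout and rules, invented for illustration only.
Record = dict


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Record], bool]  # returns True when the record satisfies the rule


RULES = [
    Rule("balance_not_negative", lambda r: r["balance"] >= 0),
    Rule("member_id_present", lambda r: bool(r.get("member_id"))),
]


def quality_score(records: list[Record], rules: list[Rule]) -> float:
    """Fraction of (record, rule) checks that pass; 1.0 means full conformance."""
    total = len(records) * len(rules)
    if total == 0:
        # Without rules (or data) there is nothing to measure against.
        raise ValueError("cannot measure quality without both records and rules")
    passed = sum(1 for rec in records for rule in rules if rule.check(rec))
    return passed / total


def failures(records: list[Record], rules: list[Rule]) -> list[tuple[int, str]]:
    """List (record index, rule name) for every failed check."""
    return [
        (i, rule.name)
        for i, rec in enumerate(records)
        for rule in rules
        if not rule.check(rec)
    ]


records = [
    {"member_id": "A1", "balance": 100.0},
    {"member_id": "", "balance": 50.0},
    {"member_id": "A3", "balance": -5.0},
]

score = quality_score(records, RULES)
failed = failures(records, RULES)
```

Note that `quality_score` refuses to return a number when the rule list is empty, which mirrors the point made above: without rules there is no standard to measure against.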

Business rules take precedence over data and business processes

Ten years ago, "Requirements and the Beast of Complexity" was published in Modern Analyst, and its relevance has since been confirmed by more than 40,000 downloads. That paper identified requirements specification as a major weak link in the traditional system development life cycle. It introduced a new approach to requirements gathering and specification, based on analyzing business policy to identify the core business entities and the rules that govern their respective data state changes. These rules are then captured and described as "decision models", to be implemented as executables in front-line systems. (banq's note: DDD models are one example of these.)

This was the opposite of the then (and still) popular data-centric and process-centric approaches to requirements, which typically position rules as dependents of data or process rather than as first-order requirements in their own right. The paper argued that rules are a higher-order requirement than data and processes, because data and process requirements can be derived from rules, but not the other way round.

Business rules are the implementation of business policy, and business policy inherently defines business data quality: if the data is incoming, validate it; if the data is being output, produce it. In either case, rules are the essence of data quality. For the sake of clarity, the rules that implement policy must be executable. A rule that cannot be executed is a description of the rule, not an implementation of it; and if what executes is something other than the description, the description cannot be the "source of truth".

In the ten years since that paper was published, our business has been deeply involved in the audit, remediation, and migration of legacy systems. This work has covered pensions (millions of member accounts), insurance (millions of policies), and payroll (dozens of tier-one corporate and government payrolls).

Our audit, remediation, and migration activities have covered most of the major technology platforms of the past 50 years, including many that predate relational databases. On these platforms, we have recalculated and corrected individual accounts going back as far as 35 years.

Master data management

Master data is a concept as old as computing itself [2]. It can be briefly described as a catalog of computer data. Gartner offers a more formal definition:

"Master data management (MDM) is a technology-enabled discipline in which business and IT work together to ensure the uniformity, accuracy, stewardship, semantic consistency and accountability of the enterprise's official shared master data assets. Master data is the consistent and uniform set of identifiers and extended attributes that describes the core entities of the enterprise including customers, prospects, citizens, suppliers, sites, hierarchies and chart of accounts."

In practice, the authoritative classification and management of data that master data management envisages is usually limited to COBOL copybooks in earlier systems, or to the data definition language [DDL] of relational databases and similar systems. In either case, we can use fully automated methods to extract both the data definitions and the data itself within hours. Across the dozens of systems, spanning 50 years, that our business has worked on, we have never come across a reliable and accurate "master data" description.

Whether or not "master data management" has been implemented effectively, or even attempted, there is an elephant in the room: even given a complete and authoritative "consistent and uniform set of identifiers and extended attributes that describes the core entities of the enterprise", Gartner's definition tells us nothing about how the data conforms to the business policies that govern it. Apart from names, data types, and relative position in a classification scheme, we have nothing.

For example, a project we just completed generated an ontology from multiple different source systems, and ended up with two separate person attributes that both denote a surname. Without looking at the underlying code, there is no way to distinguish between these two attributes, which sometimes hold different values for the same person (hint: they are used differently!).
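The automated extraction of data definitions described in this section can be sketched as follows, using Python's standard `sqlite3` module and SQLite's `PRAGMA table_info`. The `person` table and its column names are hypothetical, chosen to echo the two-surname example; the point is that the catalog gives back only names and declared types, and says nothing about the rules governing each column.

```python
import sqlite3

# Hypothetical schema, invented for illustration only.
DDL = """
CREATE TABLE person (
    person_id INTEGER PRIMARY KEY,
    surname   TEXT NOT NULL,
    last_name TEXT
);
"""


def extract_definitions(conn: sqlite3.Connection) -> dict[str, list[tuple[str, str]]]:
    """Return {table: [(column name, declared type), ...]} read from the catalog."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    definitions = {}
    for table in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        definitions[table] = [(col[1], col[2]) for col in columns]
    return definitions


conn = sqlite3.connect(":memory:")
conn.executescript(DDL)
definitions = extract_definitions(conn)
```

Nothing in the extracted definitions explains why `surname` and `last_name` both exist or how their usage differs; that knowledge lives in the rules, not in the schema.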

Master rule management

Without an equally powerful "master rule management", master data management is not viable; and master rule management is not a concept we have found in practice.

Whenever we audit, remediate, and/or migrate, we need to find the rules that allow us to understand the data as a whole, and its quality in particular. In our view, data quality is in fact measured by the degree to which data conforms to its rules (that is, the validation rules for input data and the calculation rules for derived data). Rule analysis takes a great deal of time and effort. When we look at data in isolation, there is usually a starting point that can be obtained automatically by reading the DDL or other file system definitions, but that is not the case with rules. With rules, it is always back to first principles. All the data can tell us is that there must be some rules somewhere!

Can we extract rules automatically, as we do with data?

The answer is no, although we do have tools that help with the process. More importantly, fully automatic extraction of rules would not solve the problem, not even in part!

Effective rule management was not implemented when these systems were first built. As a result, over a long period, the body of rules has grown organically and without formal structure, like weeds rather than a garden, and it is usually spread across multiple systems and manual processes. This sprawl usually means that the same rule intent is implemented in different or even conflicting ways. Rules are often applied across multiple systems in an ambiguous order. The end result is that the rules we extract from source systems are often not normalized, conflicting, incomplete, and sometimes simply wrong.

Moreover, rules usually have little or no provenance. The context, intent, rationale, and approval of rules are rarely documented, and over time even the oral history is lost. The definition of correctness becomes elusive.

Rules were never regarded as first-order requirements to begin with, so a "rule development lifecycle" was never defined. In short, the rules do not exist in any formal, orderly way, and their actual existence must now be inferred indirectly by looking for their effect on the data.

Therefore, even if we could extract rules automatically, they would still need to go through rigorous analysis, normalization, and refactoring, then be tested, and finally be formally (re)approved. In other words, we need to backfill the "rule development lifecycle" to bring the rules up to the level required for use as "master rules". That cannot be done by automatic extraction, because the required rule metadata does not exist at all.
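One way to picture the backfilled "rule development lifecycle" is as a record that carries the missing metadata and moves through the stages named above. The sketch below is an assumption for illustration only: the stage names follow this section's wording, while `RuleRecord`, its fields, and the approval check are hypothetical rather than the authors' method.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    # Stage names follow the steps listed in the text; the ordering is assumed.
    EXTRACTED = 0
    ANALYZED = 1
    NORMALIZED = 2
    REFACTORED = 3
    TESTED = 4
    APPROVED = 5


@dataclass
class RuleRecord:
    """Hypothetical record of a rule plus the metadata that extraction cannot supply."""
    name: str
    intent: str = ""
    rationale: str = ""
    approved_by: str = ""
    stage: Stage = Stage.EXTRACTED

    def advance(self) -> None:
        """Move to the next stage; approval requires the metadata to be filled in."""
        if self.stage == Stage.APPROVED:
            raise ValueError(f"{self.name} is already approved")
        next_stage = Stage(self.stage + 1)
        if next_stage == Stage.APPROVED and not (
            self.intent and self.rationale and self.approved_by
        ):
            raise ValueError("approval requires intent, rationale, and an approver")
        self.stage = next_stage

    @property
    def is_master_rule(self) -> bool:
        return self.stage == Stage.APPROVED


rule = RuleRecord(name="interest_accrual")
for _ in range(4):
    rule.advance()  # EXTRACTED -> ANALYZED -> NORMALIZED -> REFACTORED -> TESTED
```

At this point `rule.advance()` raises, because an automatically extracted rule carries no intent, rationale, or approver; those have to be supplied by people before the rule can be treated as a master rule.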

In a short and striking article from Sapiens, the author outlines the failures of many companies, failures that were ultimately attributed to "data ignorance, decentralized systems, and the inability to monitor their own processes". From the same Sapiens article: "Moody's recently examined the causes of insurance company failures, and one of the main reasons was that some insurers did not understand their pricing models. Moody's said that, to avoid 'selling a large number of policies at inappropriately low prices, insurers will need to have systems and controls in place to quickly identify and address situations where the pricing algorithm underestimates risk'."

All of this can be mitigated by implementing "master rule management".

Our conclusion: whether driven by regulatory pressure, mergers and acquisitions, or the need to improve business governance and reduce risk, implementing "master rule management" will sooner or later become a priority. By then, it will not be optional.

How to extract business rules ?

Rules are not just important artifacts in their own right; they are the only way you can claim to understand the data and its downstream processing correctly. Understanding the data correctly is what allows you to run the system correctly, thereby fulfilling legal and professional obligations and avoiding the critical threats outlined above.

To build master rules for an existing system, the "master rules" must be able to mirror the implemented system rules for every value of every entity in the system, past, present, and future. (banq's note: this is why DDD entity objects should be rich objects, with behavioral rules encapsulated in the objects' methods.) Whether the goal is audit, remediation, or migration, the master rules must be verified independently. These externally verified "master rules" are executed in parallel with the system's own rules to produce mirror data in real time, and the data is then delta-tested in the stream. In other words, we create two values for each rule-derived attribute and then compute the difference between them (if any).
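The mirroring and delta test described above can be sketched as follows. This is a minimal illustration under stated assumptions: the interest rule, the record fields, and the names `master_interest` and `delta_test` are hypothetical and are not taken from the original article.

```python
from decimal import Decimal
from typing import Callable, Iterable, Iterator


def master_interest(balance: Decimal, rate: Decimal) -> Decimal:
    """Hypothetical master rule: interest is balance times rate, rounded to cents."""
    return (balance * rate).quantize(Decimal("0.01"))


def delta_test(
    records: Iterable[dict],
    master_rule: Callable[[Decimal, Decimal], Decimal],
) -> Iterator[tuple[str, Decimal, Decimal, Decimal]]:
    """Yield (id, system value, mirror value, difference) for every mismatch."""
    for rec in records:
        mirror = master_rule(rec["balance"], rec["rate"])  # independently computed value
        diff = rec["interest"] - mirror  # system value minus mirror value
        if diff != 0:
            yield rec["id"], rec["interest"], mirror, diff


# "interest" is the value the source system stored; the other fields are its inputs.
records = [
    {"id": "A1", "balance": Decimal("1000.00"), "rate": Decimal("0.05"),
     "interest": Decimal("50.00")},
    {"id": "A2", "balance": Decimal("200.00"), "rate": Decimal("0.05"),
     "interest": Decimal("12.00")},
]

mismatches = list(delta_test(records, master_interest))
```

Here the mirror value agrees with the system for account `A1` and differs for `A2`, so only `A2` is reported. Each reported difference is a candidate for investigation: either the system rule or the master rule is wrong, and the discrepancy shows exactly where to look.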

The original article goes on to describe the authors' own method; see the original text for details.


边栏推荐

- 從小公司進入大廠,我都做對了哪些事?

- Real time data synchronization scheme based on Flink SQL CDC

- How to select the evaluation index of classification model

- Swagger 3.0 天天刷屏,真的香嗎?

- High availability cluster deployment of jumpserver: (6) deployment of SSH agent module Koko and implementation of system service management

- H5 makes its own video player (JS Part 2)

- 连肝三个通宵,JVM77道高频面试题详细分析,就这?

- PHP应用对接Justswap专用开发包【JustSwap.PHP】

- Analysis of ThreadLocal principle

- EOS创始人BM: UE,UBI,URI有什么区别?

猜你喜欢

加速「全民直播」洪流,如何攻克延时、卡顿、高并发难题?

In order to save money, I learned PHP in one day!

数据产品不就是报表吗?大错特错!这分类里有大学问

3分钟读懂Wi-Fi 6于Wi-Fi 5的优势

条码生成软件如何隐藏部分条码文字

Filecoin的经济模型与未来价值是如何支撑FIL币价格破千的

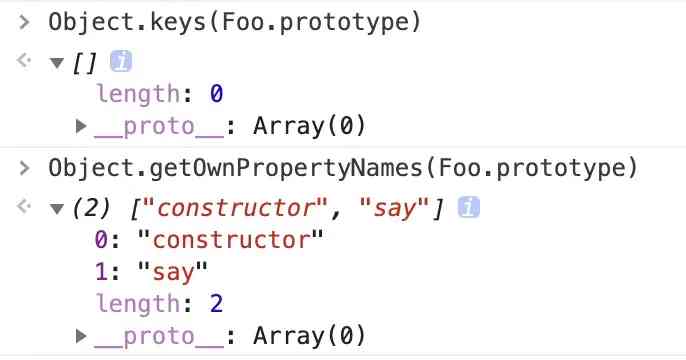

Using Es5 to realize the class of ES6

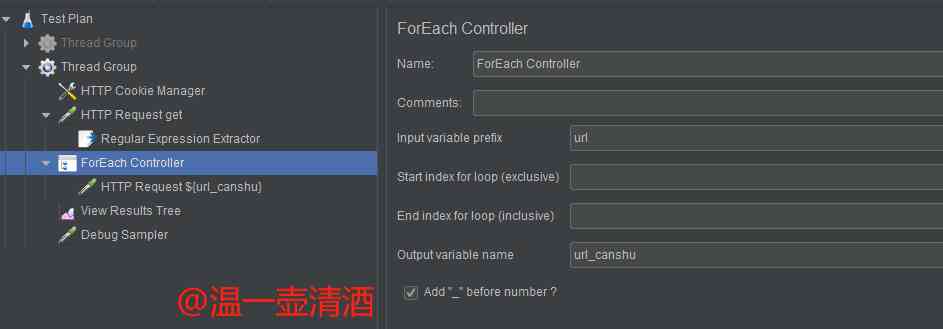

Jmeter——ForEach Controller&Loop Controller

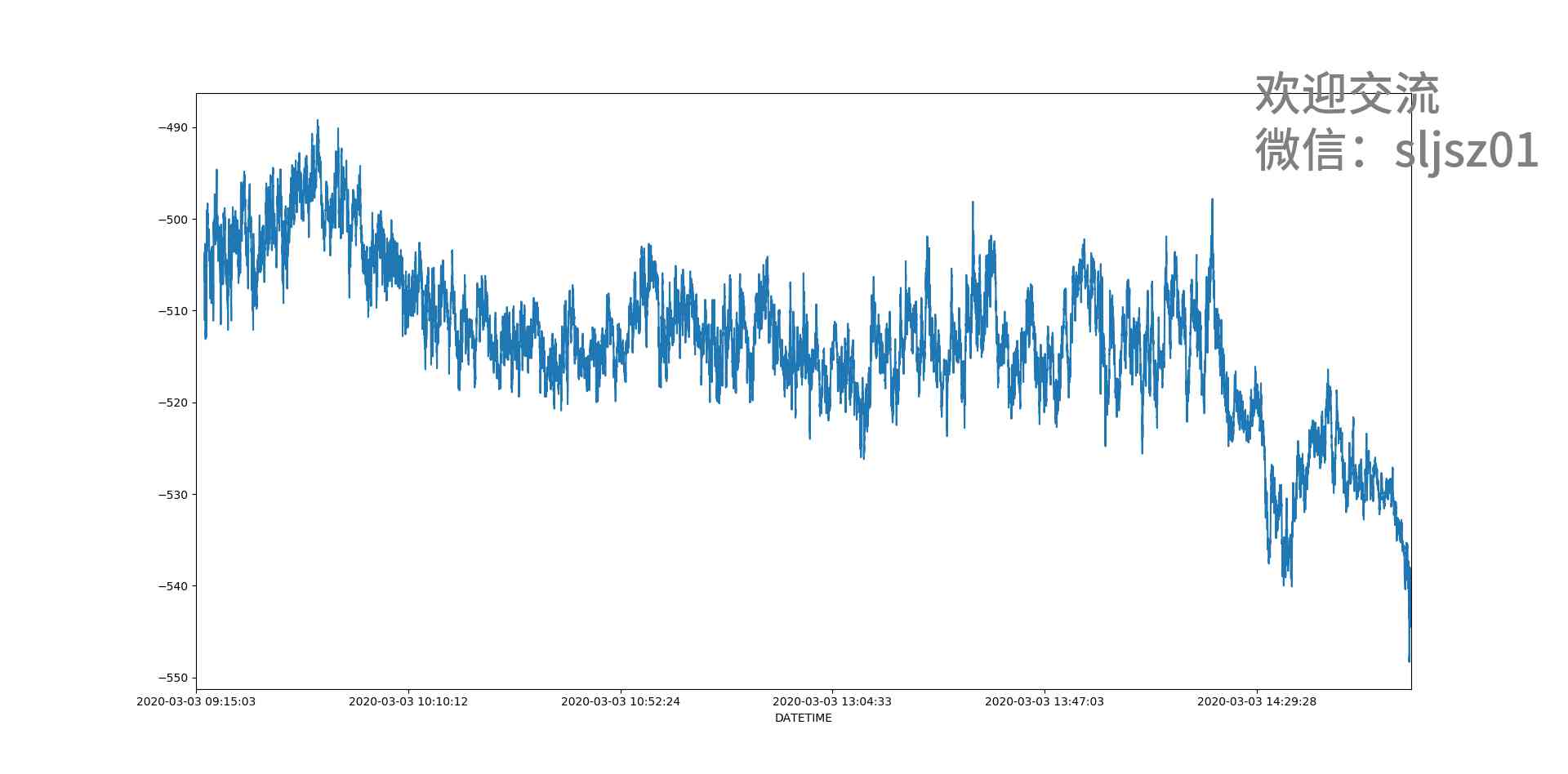

I'm afraid that the spread sequence calculation of arbitrage strategy is not as simple as you think

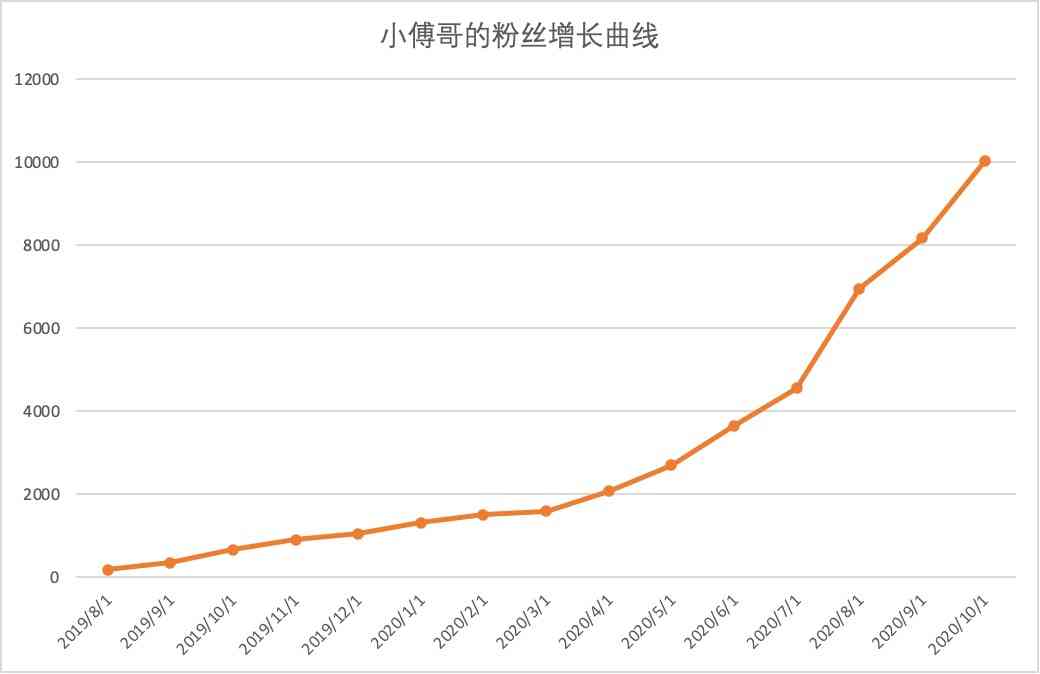

It's so embarrassing, fans broke ten thousand, used for a year!

随机推荐

Top 10 best big data analysis tools in 2020

Filecoin主网上线以来Filecoin矿机扇区密封到底是什么意思

Technical director, to just graduated programmers a word - do a good job in small things, can achieve great things

Elasticsearch 第六篇:聚合統計查詢

深度揭祕垃圾回收底層,這次讓你徹底弄懂她

遞迴思想的巧妙理解

Didi elasticsearch cluster cross version upgrade and platform reconfiguration

采购供应商系统是什么?采购供应商管理平台解决方案

Programmer introspection checklist

阿里云Q2营收破纪录背后,云的打开方式正在重塑

Process analysis of Python authentication mechanism based on JWT

(1) ASP.NET Introduction to core3.1 Ocelot

How to get started with new HTML5 (2)

容联完成1.25亿美元F轮融资

嘗試從零開始構建我的商城 (二) :使用JWT保護我們的資訊保安,完善Swagger配置

条码生成软件如何隐藏部分条码文字

Filecoin最新动态 完成重大升级 已实现四大项目进展!

连肝三个通宵,JVM77道高频面试题详细分析,就这?

High availability cluster deployment of jumpserver: (6) deployment of SSH agent module Koko and implementation of system service management

PHP应用对接Justswap专用开发包【JustSwap.PHP】