Cut 20% of ImageNet's data without hurting model performance! Meta, Stanford, and others propose a new method that uses knowledge distillation to slim down datasets

2022-07-05 09:59:00 【QbitAl】

Ming Min, reporting from Aofei Temple

QbitAI | WeChat official account QbitAI

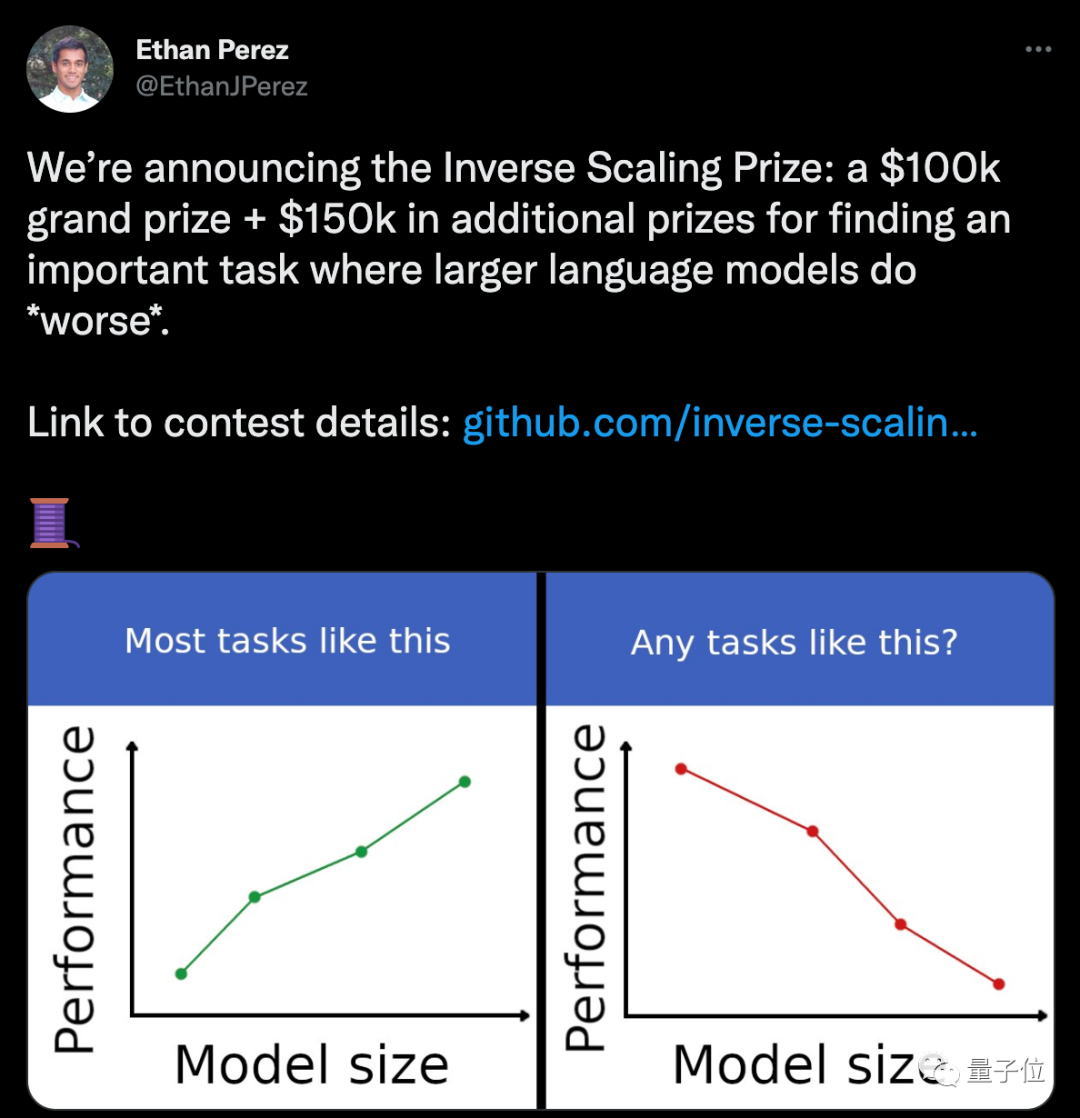

Over the past couple of days, a bounty posted on Twitter has caused quite a stir.

An AI company is offering $250,000 (about 1.67 million RMB) for tasks on which a model performs worse the bigger it gets.

The comments section has seen heated discussion.

This is not just a stunt, though. The aim is to probe large models further.

After all, over the past two years it has become increasingly clear that AI models cannot simply compete on being "big".

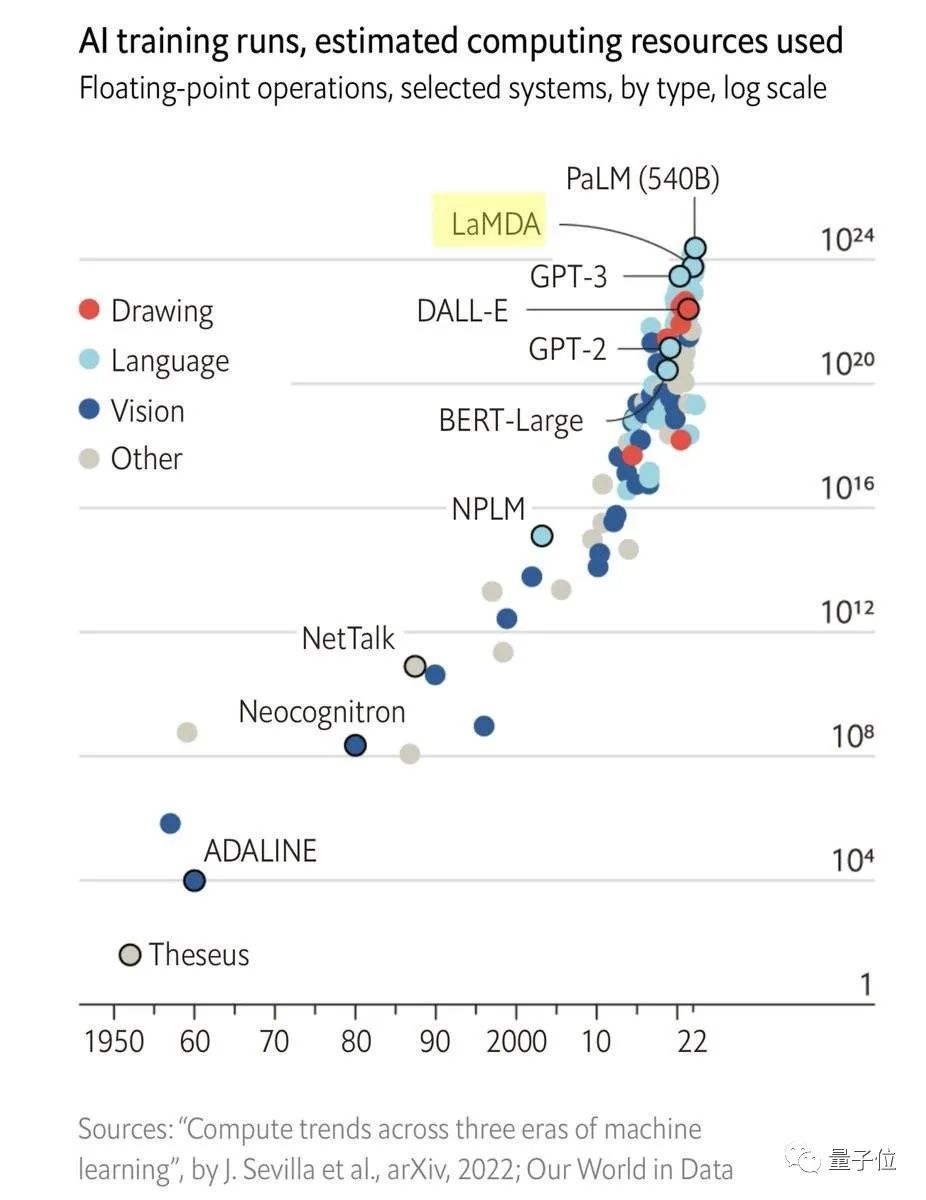

On the one hand, as models scale up, the cost of training begins to grow exponentially;

On the other hand, gains in model performance are gradually hitting a bottleneck: reducing the error by even another 1% requires ever larger increases in data and compute.

For a Transformer, for example, lowering the cross-entropy loss from 3.4 nats to 2.8 nats requires 10 times the original amount of training data.
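As a rough worked example, suppose the loss follows a pure power law in dataset size, L(N) = a · N^(-ν), with no irreducible loss term. That functional form is an assumption made here for illustration, and the function name is hypothetical. Under it, the two figures quoted above imply a very small exponent:

```python
import math

def implied_exponent(loss_before, loss_after, data_multiplier):
    """Exponent nu in L(N) = a * N**(-nu) implied by two loss values.

    Assumes a pure power law with no irreducible loss (illustrative only).
    """
    return math.log(loss_before / loss_after) / math.log(data_multiplier)

# 3.4 nats -> 2.8 nats at the cost of 10x more training data.
nu = implied_exponent(3.4, 2.8, 10)
print(f"implied exponent: {nu:.3f}")

# Under the same assumption, the data multiplier needed to halve the loss.
print(f"data needed to halve the loss: {2 ** (1 / nu):.0f}x")
```

The smaller the exponent, the more data each further reduction in loss costs, which is why slimming the dataset rather than growing it becomes attractive.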

To address these issues, AI researchers have been looking for solutions in many directions.

Researchers from Meta and Stanford recently thought of cutting from the dataset side.

They propose distilling the dataset: making it smaller while keeping model performance from declining.

Experiments show that after cutting 20% of ImageNet's data, ResNets reach an accuracy that differs little from training on the original data.

The researchers say this also opens a new path toward realizing AGI.

Large datasets are not efficient

The method proposed in the paper is, in essence, to optimize and slim down the original dataset.

The researchers say that much earlier work has shown many training examples to be highly redundant, so in theory a dataset can be "cut" smaller.

Recent studies have also proposed metrics that rank training examples by difficulty or importance; data pruning can then be done by keeping some of the difficult examples.
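The ranking-and-keeping idea can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name is hypothetical, the scores are made up, and how a difficulty score is actually computed is left open.

```python
def prune_keep_hardest(scores, keep_fraction):
    """Return sorted indices of the examples kept after pruning.

    scores: one difficulty score per example (higher = harder); how the
    scores are computed is left open here.
    keep_fraction: fraction of the dataset to retain, in (0, 1].
    """
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(scores) * keep_fraction))
    # Rank example indices from hardest to easiest.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])

# Made-up scores for ten examples, purely for illustration.
scores = [0.9, 0.1, 0.5, 0.7, 0.2, 0.8, 0.3, 0.6, 0.4, 0.05]
kept = prune_keep_hardest(scores, keep_fraction=0.8)
print(kept)
```

With `keep_fraction=0.8`, the two lowest-scoring (easiest) examples are dropped and the rest are kept in their original order.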

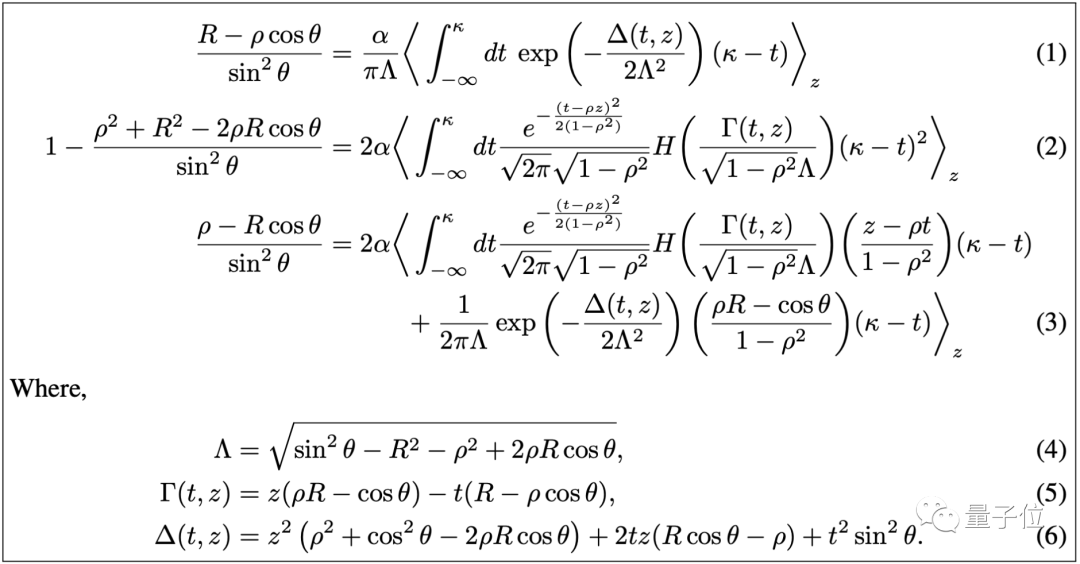

Building on these earlier findings, the researchers went a step further and proposed some concrete methods.

First, they proposed a data-analysis approach under which a model learns from only part of the data yet achieves the same performance.

From this analysis, the researchers reached a preliminary conclusion:

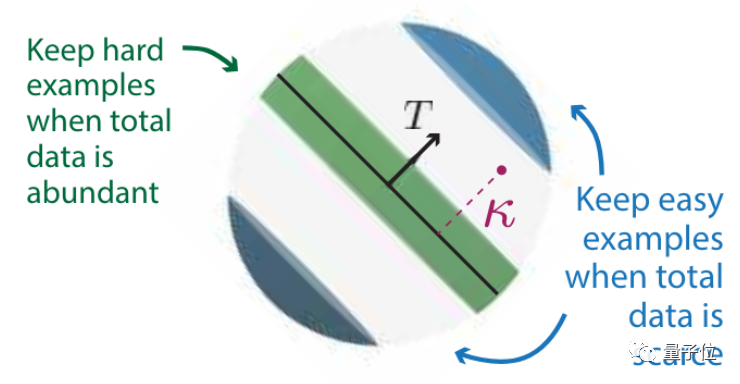

How is a dataset best pruned? That depends on its own size.

The more initial data there is, the more the difficult examples should be kept;

The less initial data there is, the more the easy examples should be kept.
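This size-dependent rule can be sketched as follows. The article gives no numeric boundary between "more" and "less" data, so `large_dataset_threshold` is a hypothetical parameter chosen only for this sketch, as is the function name.

```python
def select_examples(scores, keep_fraction, large_dataset_threshold=10_000):
    """Keep hard examples for large datasets and easy ones for small datasets.

    scores: one difficulty score per example (higher = harder).
    large_dataset_threshold: hypothetical cut-off; the article does not
    say where "more" data ends and "less" data begins.
    """
    n = len(scores)
    n_keep = max(1, round(n * keep_fraction))
    keep_hard = n >= large_dataset_threshold
    # Sort hardest-first for large datasets, easiest-first for small ones.
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=keep_hard)
    return sorted(ranked[:n_keep])

# Ten toy examples whose difficulty grows with the index.
toy_scores = [i / 10 for i in range(10)]
as_large = select_examples(toy_scores, 0.3, large_dataset_threshold=5)
as_small = select_examples(toy_scores, 0.3, large_dataset_threshold=100)
print(as_large, as_small)
```

The same ten examples are treated once as a "large" dataset (the three hardest are kept) and once as a "small" one (the three easiest are kept), just by moving the threshold.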

When pruning keeps the difficult examples, the relationship between model performance and data size can break away from the power law.

The often-cited 80/20 rule is based on a power law.

That is, 20% of the inputs determine 80% of the outcomes.

In this setting, an extreme point under Pareto optimality can also be found.

Pareto optimality here refers to an ideal state of resource allocation.

Given a fixed group of people and a fixed pool of resources, moving from one allocation to another so that at least one person is better off and no one is worse off is a Pareto improvement; an allocation is Pareto optimal when no such improvement remains.

In this paper, adjusting the allocation can be understood as choosing what fraction of the dataset to prune.
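To make the Pareto idea concrete for pruning, the sketch below treats each pruning setting as a pair (fraction of data kept, error rate), where smaller is better on both axes, and keeps only the settings that no other setting dominates. The numbers are invented for illustration and are not results from the paper; the function name is hypothetical.

```python
def pareto_front(points):
    """Return the points not dominated by any other point.

    Each point is (data_fraction, error); smaller is better on both axes.
    A point is dominated if another point is no worse on both axes and
    strictly better on at least one.
    """
    front = []
    for p in points:
        dominated = any(
            q[0] <= p[0] and q[1] <= p[1] and (q[0] < p[0] or q[1] < p[1])
            for q in points
        )
        if not dominated:
            front.append(p)
    return sorted(front)

# Made-up (fraction kept, error rate) pairs, not results from the paper.
candidates = [(1.0, 0.24), (0.8, 0.24), (0.6, 0.27), (0.6, 0.30), (0.4, 0.35)]
front = pareto_front(candidates)
print(front)
```

In this toy data, keeping 100% of the data is dominated by keeping 80%, because the error is the same with less data.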

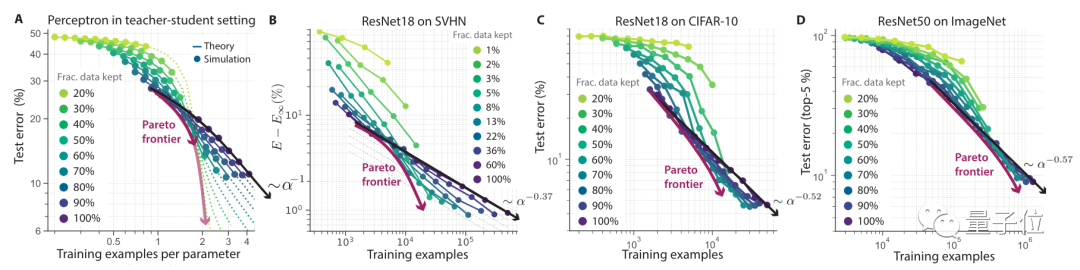

The researchers then ran experiments to verify this theory.

The results show that the larger the dataset, the more pronounced the effect of pruning.

On SVHN, CIFAR-10, and ImageNet, ResNet's error rate is, overall, inversely related to the size of the pruned dataset.

On ImageNet, with 80% of the dataset retained, the error rate is essentially the same as when training on the original dataset.

This curve also approaches Pareto optimality.

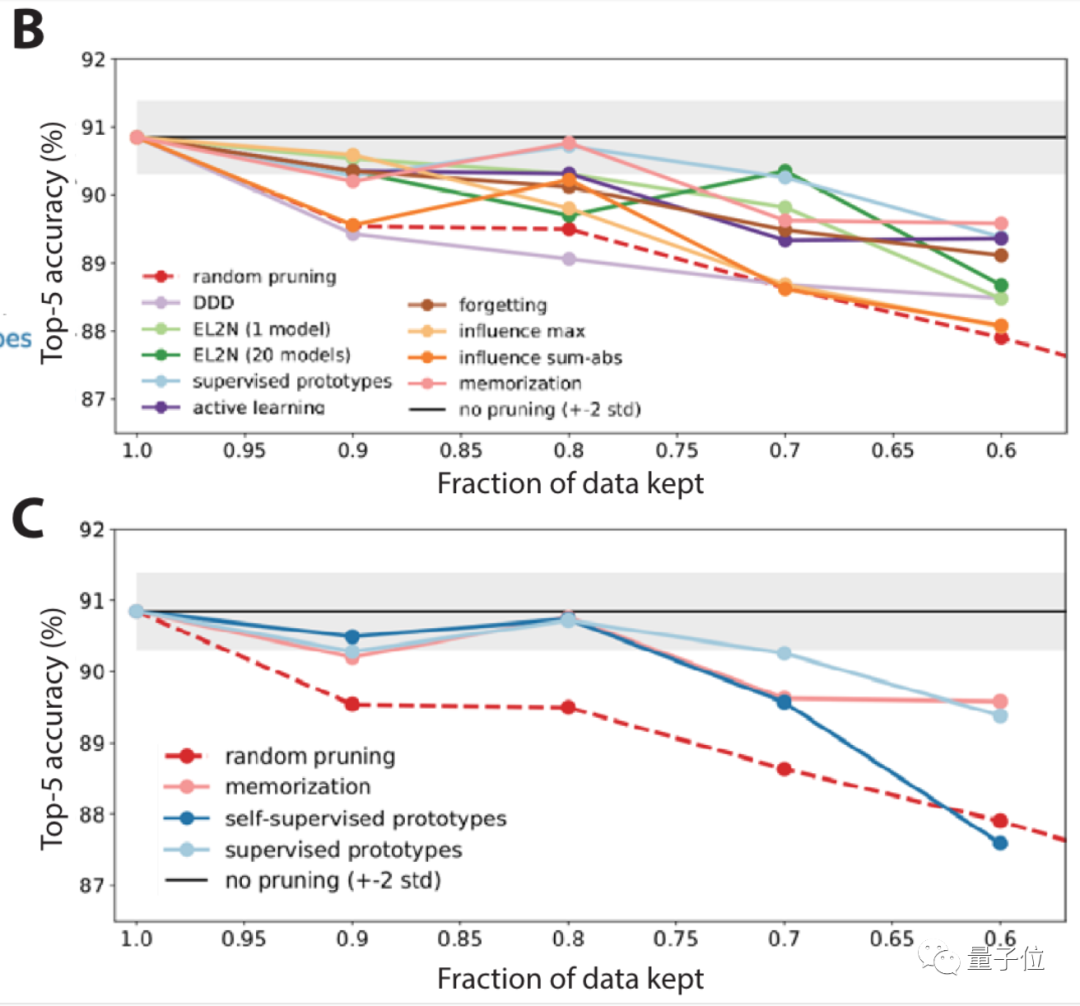

Next, the researchers focused on ImageNet and carried out a large-scale benchmark across 10 different cases.

The results show that random pruning and some existing pruning metrics do not perform well enough on ImageNet.

So, going a step further, the researchers also proposed a self-supervised method for pruning data.

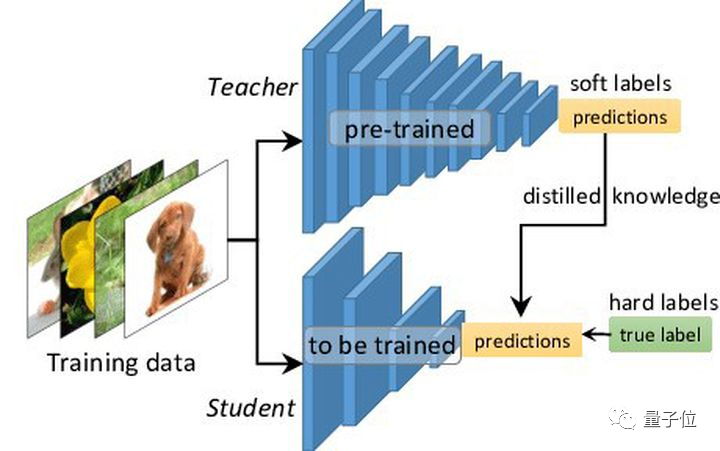

That is, knowledge distillation (the teacher-student model), a common method of model compression.

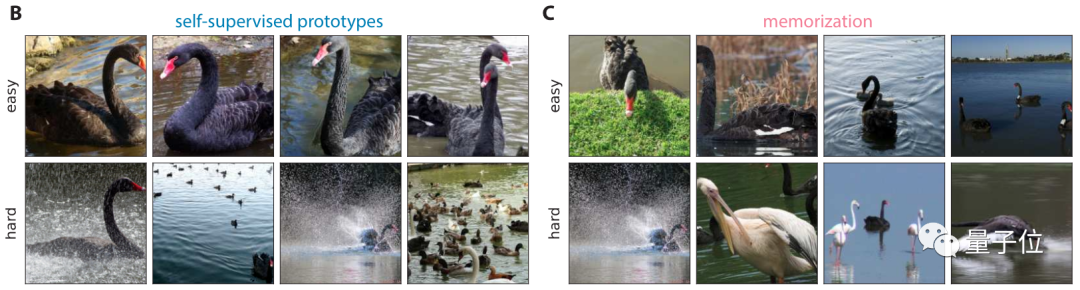

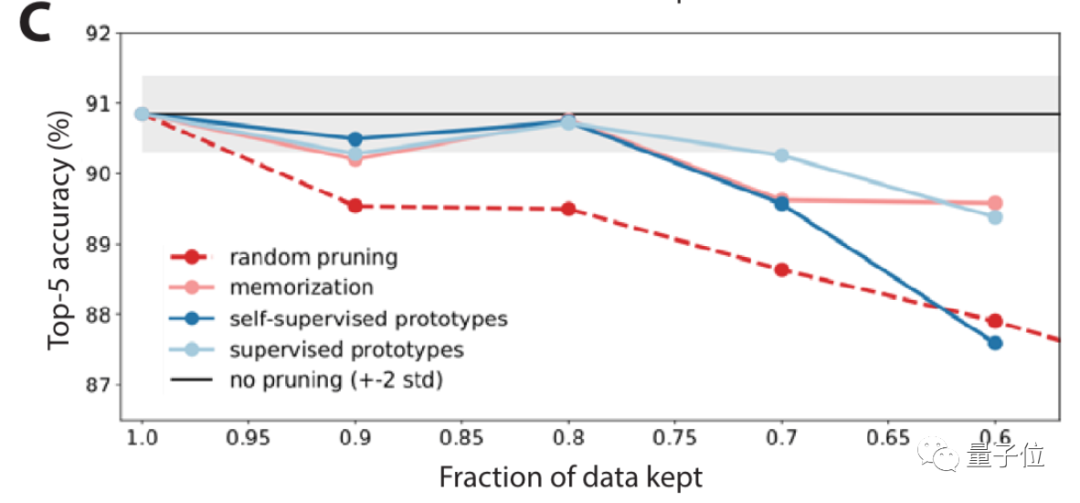

The results show that the self-supervised method performs well at finding the easy and difficult examples in a dataset.

After pruning the data with the self-supervised method, accuracy improves markedly (the light blue line in Figure C).
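The article does not spell out how the self-supervised score is computed. As one possible label-free approach, assumed here purely for illustration, each example can be scored by the distance from its embedding to the nearest cluster centre: examples close to a centre are treated as prototypical (easy), those far away as difficult. The embeddings and centroids below are toy values, and in practice would come from an encoder and a clustering step that are not shown.

```python
import math

def difficulty_scores(embeddings, centroids):
    """Score each example by its Euclidean distance to the nearest centroid.

    embeddings: feature vectors from some label-free encoder (assumed given).
    centroids: cluster centres, e.g. from k-means (assumed given).
    A small distance marks a prototypical (easy) example, a large one a
    difficult example.
    """
    return [min(math.dist(e, c) for c in centroids) for e in embeddings]

# Toy 2-D embeddings and centroids, invented for illustration.
centroids = [(0.0, 0.0), (10.0, 10.0)]
embeddings = [(0.0, 1.0), (10.0, 10.0), (5.0, 5.0), (3.0, 4.0)]
scores = difficulty_scores(embeddings, centroids)

# Indices ordered from most difficult to easiest.
hardest_first = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(hardest_first)
```

No labels are used anywhere in the scoring, which is the point of a self-supervised pruning metric.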

Some issues remain

The researchers also note in the paper that although the method above can prune a dataset without sacrificing performance, some issues still deserve attention.

For example, once the dataset has been reduced, training a model to the same performance may take longer.

Therefore, when pruning a dataset, the reduction in size has to be weighed against the increase in training time.

At the same time, pruning a dataset inevitably drops samples from some groups, which may leave the model deficient in certain respects.

This can easily raise moral and ethical problems.

Research team

One of the paper's authors, Surya Ganguli, is a quantum neural network scientist.

Earlier, as an undergraduate at Stanford, he studied computer science, mathematics, and physics at the same time, and later earned a master's degree in electrical engineering and computer science.

Paper link:

https://arxiv.org/abs/2206.14486

边栏推荐

- How to implement complex SQL such as distributed database sub query and join?

- [sorting of object array]

- 让AI替企业做复杂决策真的靠谱吗?参与直播,斯坦福博士来分享他的选择|量子位·视点...

- Wechat applet - simple diet recommendation (2)

- Solve liquibase – waiting for changelog lock Cause database deadlock

- Principle and performance analysis of lepton lossless compression

- 如何获取GC(垃圾回收器)的STW(暂停)时间?

- 基于模板配置的数据可视化平台

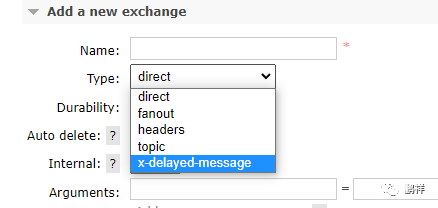

- .Net之延迟队列

- Uncover the practice of Baidu intelligent testing in the field of automatic test execution

猜你喜欢

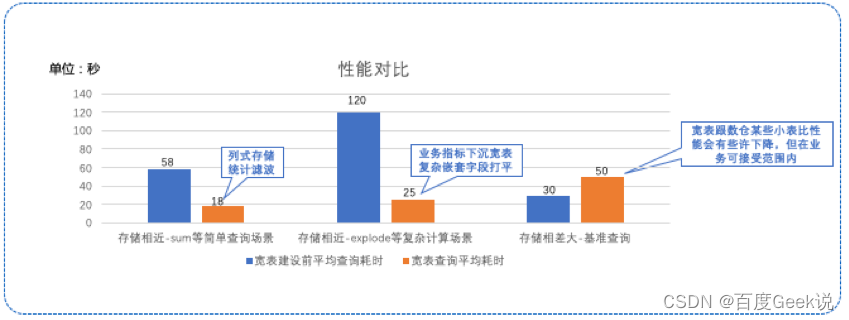

基于宽表的数据建模应用

.Net之延迟队列

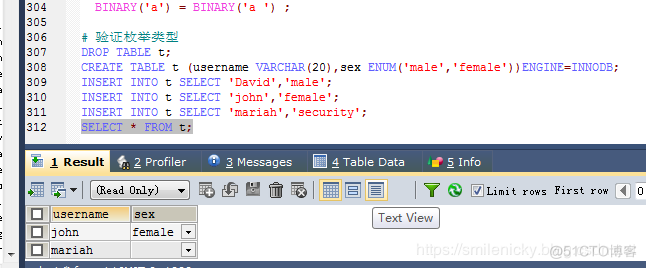

MySQL字符类型学习笔记

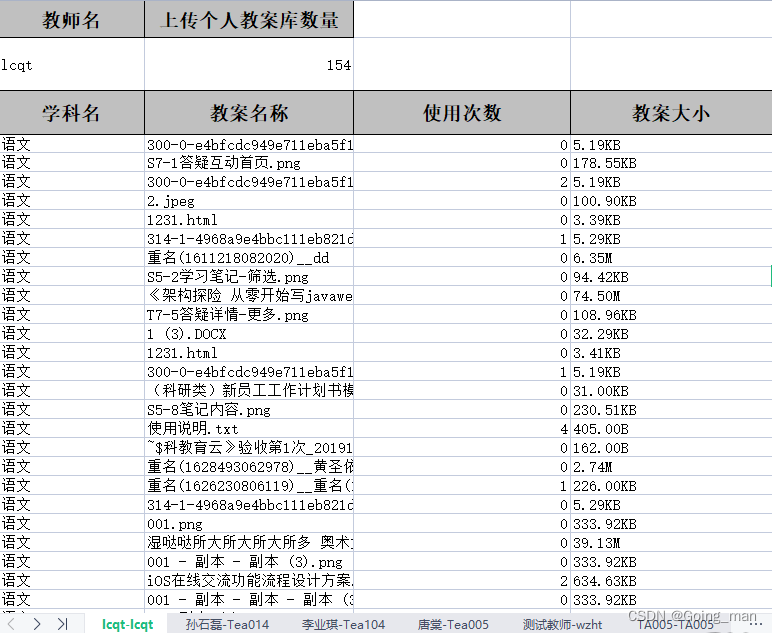

Project practice | excel export function

cent7安装Oracle数据库报错

百度交易中台之钱包系统架构浅析

Wechat applet - simple diet recommendation (2)

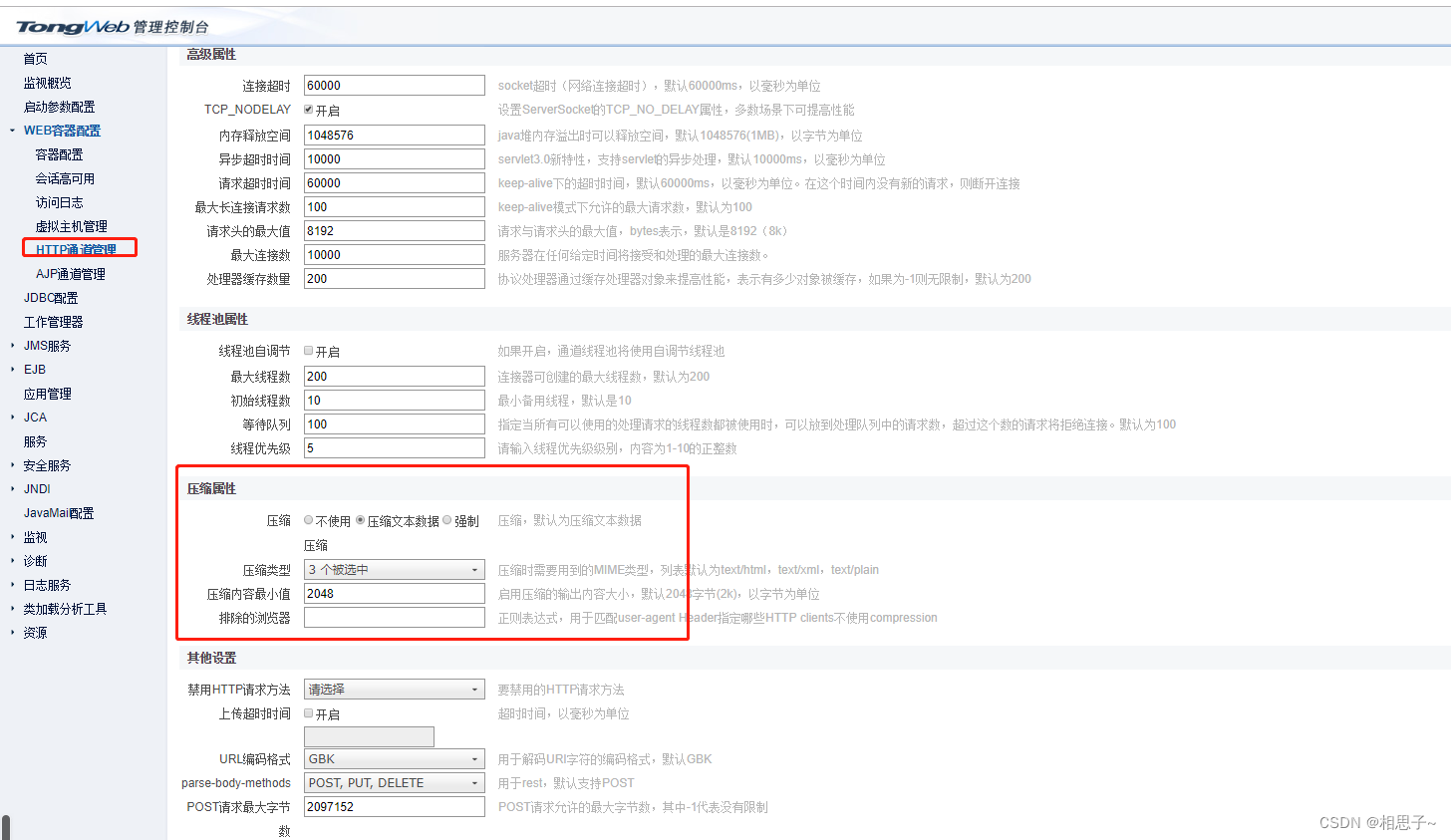

tongweb设置gzip

Community group buying exploded overnight. How should this new model of e-commerce operate?

The popularity of B2B2C continues to rise. What are the benefits of enterprises doing multi-user mall system?

随机推荐

百度评论中台的设计与探索

Tongweb set gzip

Tdengine offline upgrade process

Understand the window query function of tdengine in one article

SQL learning - case when then else

Node の MongoDB Driver

[200 opencv routines] 219 Add digital watermark (blind watermark)

宝塔面板MySQL无法启动

一文读懂TDengine的窗口查询功能

Project practice | excel export function

Solve liquibase – waiting for changelog lock Cause database deadlock

代码语言的魅力

揭秘百度智能测试在测试自动执行领域实践

MySQL installation configuration and creation of databases and tables

Generics, generic defects and application scenarios that 90% of people don't understand

Small program startup performance optimization practice

Officially launched! Tdengine plug-in enters the official website of grafana

基于宽表的数据建模应用

oracle和mysql批量Merge对比

How to choose the right chain management software?