当前位置:网站首页>Dynamic global memory allocation and operation in CUDA

Dynamic global memory allocation and operation in CUDA

2022-07-02 06:28:00 【Little Heshang sweeping the floor】

Dynamic Global Memory Allocation and Operations

Dynamic global memory allocation and operation are only affected by computing power 2.x And later device support .

__host__ __device__ void* malloc(size_t size);

__device__ void *__nv_aligned_device_malloc(size_t size, size_t align);

__host__ __device__ void free(void* ptr);

Dynamically allocate and free memory from a fixed size heap in global memory .

__host__ __device__ void* memcpy(void* dest, const void* src, size_t size);

from src Copy the memory location pointed to size Byte to dest Point to memory location .

__host__ __device__ void* memset(void* ptr, int value, size_t size);

take ptr Of the memory block pointed to size The byte is set to value( Interpreted as an unsigned character ).

CUDA Kernel malloc() Function allocates at least size Bytes , And returns a pointer to the allocated memory , If there is not enough memory to satisfy the request , Then return to NULL. The returned pointer is guaranteed to be the same as 16 Byte boundary alignment .

Kernel CUDA __nv_aligned_device_malloc() Function allocates at least size Bytes , And returns a pointer to the allocated memory , If memory is insufficient to meet the requested size or alignment , Then return to NULL. The address to allocate memory will be align Multiple . align Must be 2 The nonzero power of .

CUDA Kernel free() Function to release ptr Memory pointed to , This memory must be previously paired malloc() or __nv_aligned_device_malloc() The call to return . If ptr by NULL, Ignore the right free() Call to . Use the same ptr Repeated calls to free() Have undefined behavior .

Given CUDA Threads pass through malloc() or __nv_aligned_device_malloc() The allocated memory is CUDA The context remains allocated throughout its lifecycle , Or until you call free() Explicit release . It can be used by any other CUDA Thread usage , This is true even when the subsequent kernel starts . whatever CUDA A thread can release the memory allocated by another thread , However, care should be taken to ensure that the same pointer is not released multiple times .

1. Heap Memory Allocation

The device memory heap has a fixed size , Must be used in any malloc()、__nv_aligned_device_malloc() or free() Specify the size before loading the program into the context . If any program uses without specifying the heap size malloc() or __nv_aligned_device_malloc() , Will be assigned 8 MB Default heap for .

following API Function to get and set the heap size :

cudaDeviceGetLimit(size_t* size, cudaLimitMallocHeapSize)cudaDeviceSetLimit(cudaLimitMallocHeapSize, size_t size)

The granted heap size is at least size Bytes . cuCtxGetLimit() and cudaDeviceGetLimit() Returns the current requested heap size .

When the module is loaded into the context , The actual memory allocation of the heap occurs , Or explicitly through CUDA The driver API( See modular ), Or implicitly through CUDA Runtime API( See CUDA Runtime ). If memory allocation fails , Module loading will produce CUDA_ERROR_SHARED_OBJECT_INIT_FAILED error .

Once module loading occurs , The heap size cannot be changed , And it will not dynamically resize as needed .

Except through the host side CUDA API call ( for example cudaMalloc()) Allocated outside the memory reserved for the device heap .

2. Interoperability with Host Memory API

Through the equipment malloc() or __nv_aligned_device_malloc() The allocated memory cannot be released by the runtime ( namely , By calling any free memory function from device memory ).

Again , Memory allocated by runtime ( namely , By calling any memory allocation function from device memory ) Cannot pass free() Release .

Besides , Call in device code malloc() or __nv_aligned_device_malloc() The allocated memory cannot be used for any runtime or driver API call ( namely cudaMemcpy、cudaMemset etc. ).

3. Examples

3.1. Per Thread Allocation

#include <stdlib.h>

#include <stdio.h>

__global__ void mallocTest()

{

size_t size = 123;

char* ptr = (char*)malloc(size);

memset(ptr, 0, size);

printf("Thread %d got pointer: %p\n", threadIdx.x, ptr);

free(ptr);

}

int main()

{

// Set a heap size of 128 megabytes. Note that this must

// be done before any kernel is launched.

cudaDeviceSetLimit(cudaLimitMallocHeapSize, 128*1024*1024);

mallocTest<<<1, 5>>>();

cudaDeviceSynchronize();

return 0;

}

The above code will output :

Thread 0 got pointer: 00057020

Thread 1 got pointer: 0005708c

Thread 2 got pointer: 000570f8

Thread 3 got pointer: 00057164

Thread 4 got pointer: 000571d0

Notice how each thread encounters malloc() and memset() command , So as to receive and initialize their own assignments . ( The exact pointer value will vary : These are illustrative .)

3.2. Per Thread Block Allocation

#include <stdlib.h>

__global__ void mallocTest()

{

__shared__ int* data;

// The first thread in the block does the allocation and then

// shares the pointer with all other threads through shared memory,

// so that access can easily be coalesced.

// 64 bytes per thread are allocated.

if (threadIdx.x == 0) {

size_t size = blockDim.x * 64;

data = (int*)malloc(size);

}

__syncthreads();

// Check for failure

if (data == NULL)

return;

// Threads index into the memory, ensuring coalescence

int* ptr = data;

for (int i = 0; i < 64; ++i)

ptr[i * blockDim.x + threadIdx.x] = threadIdx.x;

// Ensure all threads complete before freeing

__syncthreads();

// Only one thread may free the memory!

if (threadIdx.x == 0)

free(data);

}

int main()

{

cudaDeviceSetLimit(cudaLimitMallocHeapSize, 128*1024*1024);

mallocTest<<<10, 128>>>();

cudaDeviceSynchronize();

return 0;

}

3.3. Allocation Persisting Between Kernel Launches

#include <stdlib.h>

#include <stdio.h>

#define NUM_BLOCKS 20

__device__ int* dataptr[NUM_BLOCKS]; // Per-block pointer

__global__ void allocmem()

{

// Only the first thread in the block does the allocation

// since we want only one allocation per block.

if (threadIdx.x == 0)

dataptr[blockIdx.x] = (int*)malloc(blockDim.x * 4);

__syncthreads();

// Check for failure

if (dataptr[blockIdx.x] == NULL)

return;

// Zero the data with all threads in parallel

dataptr[blockIdx.x][threadIdx.x] = 0;

}

// Simple example: store thread ID into each element

__global__ void usemem()

{

int* ptr = dataptr[blockIdx.x];

if (ptr != NULL)

ptr[threadIdx.x] += threadIdx.x;

}

// Print the content of the buffer before freeing it

__global__ void freemem()

{

int* ptr = dataptr[blockIdx.x];

if (ptr != NULL)

printf("Block %d, Thread %d: final value = %d\n",

blockIdx.x, threadIdx.x, ptr[threadIdx.x]);

// Only free from one thread!

if (threadIdx.x == 0)

free(ptr);

}

int main()

{

cudaDeviceSetLimit(cudaLimitMallocHeapSize, 128*1024*1024);

// Allocate memory

allocmem<<< NUM_BLOCKS, 10 >>>();

// Use memory

usemem<<< NUM_BLOCKS, 10 >>>();

usemem<<< NUM_BLOCKS, 10 >>>();

usemem<<< NUM_BLOCKS, 10 >>>();

// Free memory

freemem<<< NUM_BLOCKS, 10 >>>();

cudaDeviceSynchronize();

return 0;

}

边栏推荐

- AtCoder Beginner Contest 253 F - Operations on a Matrix // 树状数组

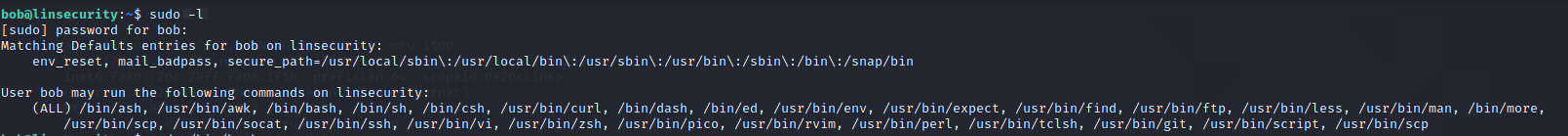

- Sudo right raising

- VLAN experiment of switching technology

- FE - Eggjs 结合 Typeorm 出现连接不了数据库

- 华为MindSpore开源实习机试题

- TensorRT中的循环

- LeetCode 83. Delete duplicate elements in the sorting linked list

- CUDA中的存储空间修饰符

- LeetCode 77. combination

- CUDA and Direct3D consistency

猜你喜欢

Detailed explanation of BGP message

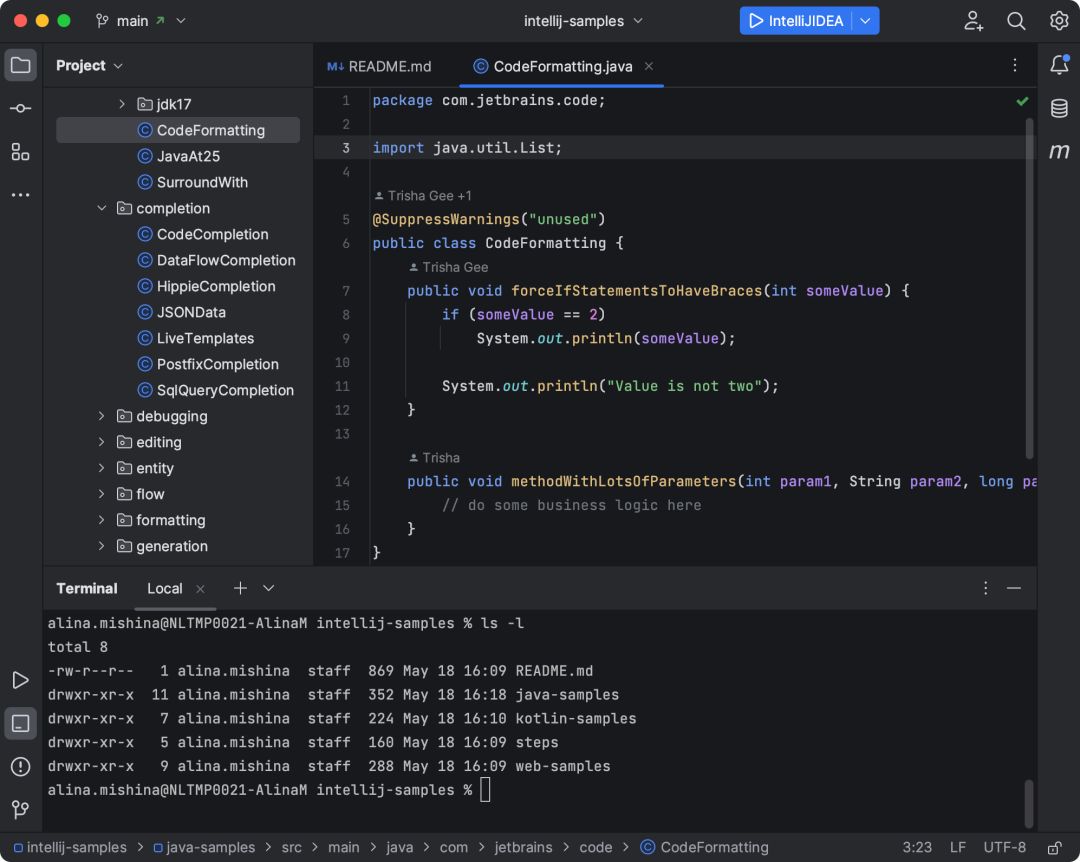

IDEA公布全新默认UI,太清爽了(内含申请链接)

Detailed definition of tensorrt data format

sudo提权

New version of dedecms collection and release plug-in tutorial tool

Support new and old imperial CMS collection and warehousing tutorials

Common means of modeling: combination

FE - 微信小程序 - 蓝牙 BLE 开发调研与使用

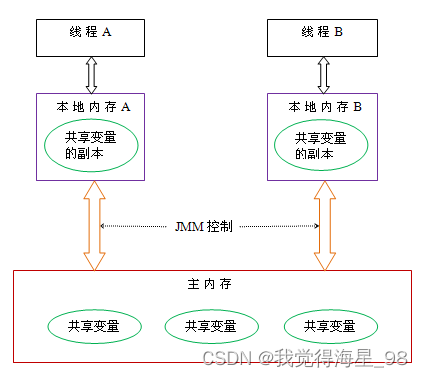

深入了解JUC并发(二)并发理论

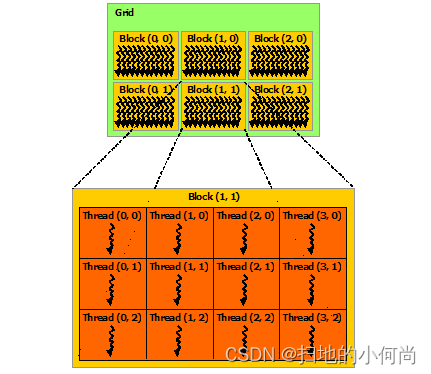

CUDA中的线程层次

随机推荐

New version of dedecms collection and release plug-in tutorial tool

sudo提权

Redis---1. Data structure characteristics and operation

Redis - hot key issues

程序员的自我修养—找工作反思篇

CUDA中的存储空间修饰符

栈(线性结构)

Does the assignment of Boolean types such as tag attribute disabled selected checked not take effect?

CUDA中的Warp matrix functions

Data science [viii]: SVD (I)

AWD学习

深入了解JUC并发(一)什么是JUC

Sublime Text 配置php编译环境

Browser principle mind map

FE - weex 开发 之 使用 weex-ui 组件与配置使用

Redis——热点key问题

数据科学【九】:SVD(二)

ModuleNotFoundError: No module named ‘jieba.analyse‘; ‘jieba‘ is not a package

深入学习JVM底层(五):类加载机制

深入学习JVM底层(二):HotSpot虚拟机对象