How to Deploy a PyTorch Lightning Model to Production

2020-11-08 16:58:00 【InfoQ】

Copyright notice

This article was created by [InfoQ]. Please include a link to the original when reposting. Thank you.

猜你喜欢

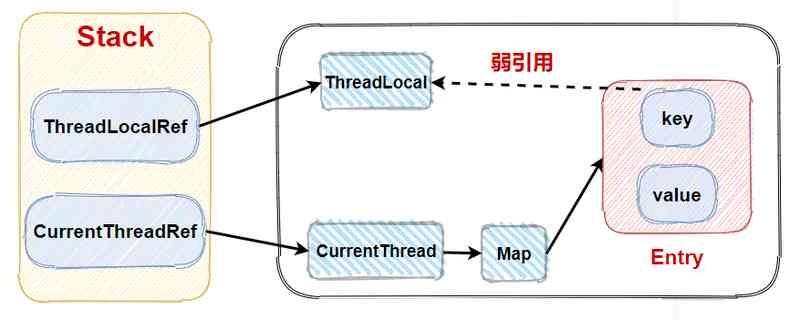

精通高并发与多线程,却不会用ThreadLocal?

Tips and skills of CSP examination

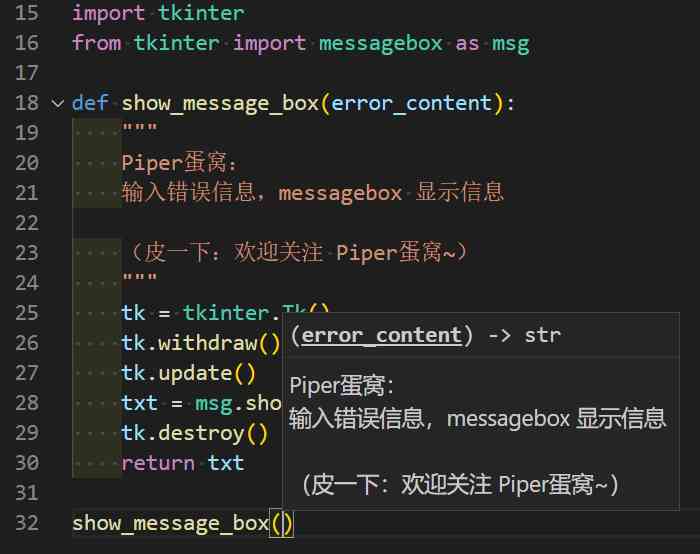

不是程序员,代码也不能太丑!python官方书写规范:任何人都该了解的 pep8

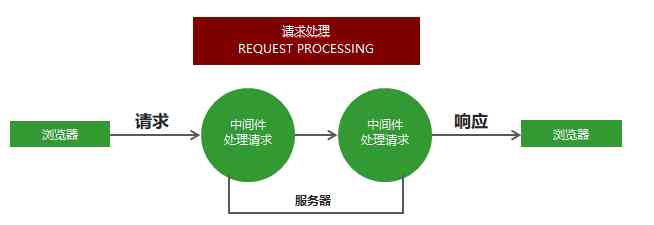

Express框架

总结: 10月海外DeFi新项目,更多资管策略来了!

IT industry salary has been far ahead! Ten years later, is the programmer still a high paying profession?

I used Python to find out all the people who deleted my wechat and deleted them automatically

Don't release resources in finally, unlock a new pose!

Flink's sink: a preliminary study

I used Python to find out all the people who deleted my wechat and deleted them automatically
