How to save a trained neural network model (PyTorch version)

2022-07-05 17:33:00 【Chasing young feather】

One. Saving and loading models

Training a model on data gives you the model you want, but in practice you cannot retrain it every time before using it. So you save the trained model first, then load it when you need it and use it directly. A model is essentially a set of parameters stored in some structure, so there are two ways to save it. One is to save the whole model and later load the whole model directly; this takes more space, and the saved file depends on the model's class definition being available when it is loaded. The other is to save only the model's parameters; later you create a new model with the same structure and load the saved parameters into it.

Two. How to implement the two approaches

(1) Save only the model's parameter dictionary (recommended)

    # Save
    torch.save(the_model.state_dict(), PATH)

    # Load
    the_model = TheModelClass(*args, **kwargs)
    the_model.load_state_dict(torch.load(PATH))

(2) Save the entire model

    # Save
    torch.save(the_model, PATH)

    # Load
    # On PyTorch 2.6 and later, pass weights_only=False here, because the
    # default (weights_only=True) refuses to unpickle a full model object
    the_model = torch.load(PATH)

Three. Save only the model parameters (example)

PyTorch keeps a model's parameters in a dictionary (the state_dict), so all we have to do is save this dictionary and load it again later.
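To make the "dictionary of parameters" concrete, here is a minimal sketch that prints the contents of a state_dict. The tiny `nn.Linear` model is a hypothetical stand-in chosen only because its parameters are easy to read; it is not the model used in this article.

```python
import torch.nn as nn

# Hypothetical tiny model, used only to show what a state_dict contains.
model = nn.Linear(in_features=3, out_features=2)

# state_dict() maps each parameter name to its tensor.
state = model.state_dict()
for name, tensor in state.items():
    print(name, tuple(tensor.shape))
# weight (2, 3)
# bias (2,)
```

Larger models follow the same pattern, with names prefixed by the attribute that holds each layer.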

For example, design an LSTM network (the code uses num_layers=2), train it, and after training save the model's parameter dictionary as the file rnn.pt in the same folder:

    import torch
    import torch.nn as nn

    # Use the GPU when one is available, otherwise fall back to the CPU
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    class LSTM(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers):
            super(LSTM, self).__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
            self.fc = nn.Linear(hidden_size, 1)

        def forward(self, x):
            # Set initial hidden and cell states: (num_layers, batch_size, hidden_size)
            h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
            c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
            # Forward propagate LSTM
            out, _ = self.lstm(x, (h0, c0))
            # out: tensor of shape (batch_size, seq_length, hidden_size)
            out = self.fc(out)
            return out

    rnn = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)
    # Optimize all parameters of the network
    optimizer = torch.optim.Adam(rnn.parameters(), lr=0.001)
    # Mean squared error loss for regression targets
    loss_func = nn.MSELoss()

    # train_tensor and train_labels_tensor are the training inputs and targets,
    # prepared elsewhere and already on `device`
    for epoch in range(1000):
        output = rnn(train_tensor)  # network output
        loss = loss_func(output, train_labels_tensor)  # MSE loss
        optimizer.zero_grad()  # clear gradients for this training step
        loss.backward()  # backpropagation, compute gradients
        optimizer.step()  # apply gradients
        output_sum = output  # keep the most recent output

    # Save the model parameters
    torch.save(rnn.state_dict(), 'rnn.pt')

After saving, use the trained model to process the data:

    # Test the saved model
    m_state_dict = torch.load('rnn.pt')
    new_m = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)
    new_m.load_state_dict(m_state_dict)
    predict = new_m(test_tensor)

Here is an explanation. When saving, rnn.state_dict() is the parameter dictionary of the model rnn. When testing the saved model, first load the parameter dictionary: m_state_dict = torch.load('rnn.pt');

Then instantiate an LSTM object. The arguments passed in must be the same as those used when rnn was instantiated, so that the structure is identical: new_m = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device);

Next, pass the previously loaded parameters into the new model: new_m.load_state_dict(m_state_dict);

Finally, use the model to process the data: predict = new_m(test_tensor)
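The save, re-instantiate, and load steps described above can be checked end to end with a small round trip. This is only a sketch under stated assumptions: `TinyLSTM` is a hypothetical stand-in for the article's LSTM class, the input is random data rather than a real dataset, and the file is written to a temporary directory instead of the working folder.

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyLSTM(nn.Module):
    """Hypothetical stand-in for the article's LSTM class."""

    def __init__(self, input_size, hidden_size, num_layers):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, x):
        out, _ = self.lstm(x)  # initial states default to zeros
        return self.fc(out)


torch.manual_seed(0)
trained = TinyLSTM(input_size=1, hidden_size=10, num_layers=2)
x = torch.randn(4, 5, 1)  # (batch, seq_len, input_size), random stand-in data

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "rnn.pt")
    torch.save(trained.state_dict(), path)

    # Same constructor arguments as the saved model, otherwise loading fails.
    restored = TinyLSTM(input_size=1, hidden_size=10, num_layers=2)
    restored.load_state_dict(torch.load(path, map_location="cpu"))

trained.eval()
restored.eval()
with torch.no_grad():
    same = torch.allclose(trained(x), restored(x))
print(same)
```

If the restored model were built with a different hidden_size or num_layers, load_state_dict would raise an error instead of silently loading mismatched weights, which is why the constructor arguments need to match.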

Four. Save the whole model (example)

    import torch
    import torch.nn as nn

    # Use the GPU when one is available, otherwise fall back to the CPU
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    class LSTM(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers):
            super(LSTM, self).__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
            self.fc = nn.Linear(hidden_size, 1)

        def forward(self, x):
            # Set initial hidden and cell states: (num_layers, batch_size, hidden_size)
            h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
            c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
            # Forward propagate LSTM
            out, _ = self.lstm(x, (h0, c0))  # out: (batch_size, seq_length, hidden_size)
            # print("output_in=", out.shape)
            # print("fc_in_shape=", out[:, -1, :].shape)
            # Decode the hidden state of the last time step
            # out = torch.cat((out[:, 0, :], out[-1, :, :]), axis=0)
            # out = self.fc(out[:, -1, :])  # alternative: use only the last time step
            out = self.fc(out)
            return out

    rnn = LSTM(input_size=1, hidden_size=10, num_layers=2).to(device)
    print(rnn)
    optimizer = torch.optim.Adam(rnn.parameters(), lr=0.001)  # optimize all parameters of the network
    loss_func = nn.MSELoss()  # mean squared error loss for regression targets

    # train_tensor and train_labels_tensor are the training inputs and targets,
    # prepared elsewhere and already on `device`
    for epoch in range(1000):
        output = rnn(train_tensor)  # network output
        loss = loss_func(output, train_labels_tensor)  # MSE loss
        optimizer.zero_grad()  # clear gradients for this training step
        loss.backward()  # backpropagation, compute gradients
        optimizer.step()  # apply gradients
        output_sum = output  # keep the most recent output

    # Save the whole model
    torch.save(rnn, 'rnn1.pt')

After saving, use the trained model to process the data:

    # The LSTM class definition must be available when loading.
    # On PyTorch 2.6 and later, use torch.load('rnn1.pt', weights_only=False),
    # because the default (weights_only=True) refuses to unpickle a full model object
    new_m = torch.load('rnn1.pt')
    predict = new_m(test_tensor)
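As a quick check of the whole-model approach, the following sketch saves and reloads a complete model object and compares the outputs. It rests on a few assumptions: the `nn.Sequential` network is a hypothetical stand-in built from PyTorch's own layers (so no custom class has to be importable at load time), the file name `whole_model.pt` is made up for illustration, and `weights_only=False` is passed because PyTorch 2.6 and later default to `weights_only=True`.

```python
import os
import tempfile

import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical stand-in model made only of built-in layers.
model = nn.Sequential(nn.Linear(1, 10), nn.Tanh(), nn.Linear(10, 1))
x = torch.randn(4, 1)  # random stand-in input

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "whole_model.pt")
    torch.save(model, path)  # pickles the whole module, not just its parameters
    # weights_only=False unpickles arbitrary objects: only use it on files you trust.
    loaded = torch.load(path, weights_only=False)

loaded.eval()
with torch.no_grad():
    matches = torch.allclose(model(x), loaded(x))
print(type(loaded).__name__, matches)
```

No model class is instantiated before loading here, which is the convenience of this approach; the cost is that the file is tied to the code that defined the model, which is one reason the parameter-dictionary approach is the recommended one.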

- 华为云云原生容器综合竞争力,中国第一!

- 2022年信息系统管理工程师考试大纲

- 一口气读懂 IT发展史

- Is it safe and reliable to open futures accounts on koufu.com? How to distinguish whether the platform is safe?

- Read the basic grammar of C language in one article

- WR | Jufeng group of West Lake University revealed the impact of microplastics pollution on the flora and denitrification function of constructed wetlands

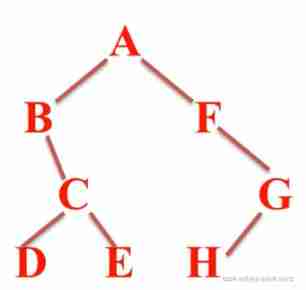

- Three traversal methods of binary tree

- ThoughtWorks global CTO: build the architecture according to needs, and excessive engineering will only "waste people and money"

- Rider 设置选中单词侧边高亮,去除警告建议高亮

- 排错-关于clion not found visual studio 的问题

猜你喜欢

Judge whether a number is a prime number (prime number)

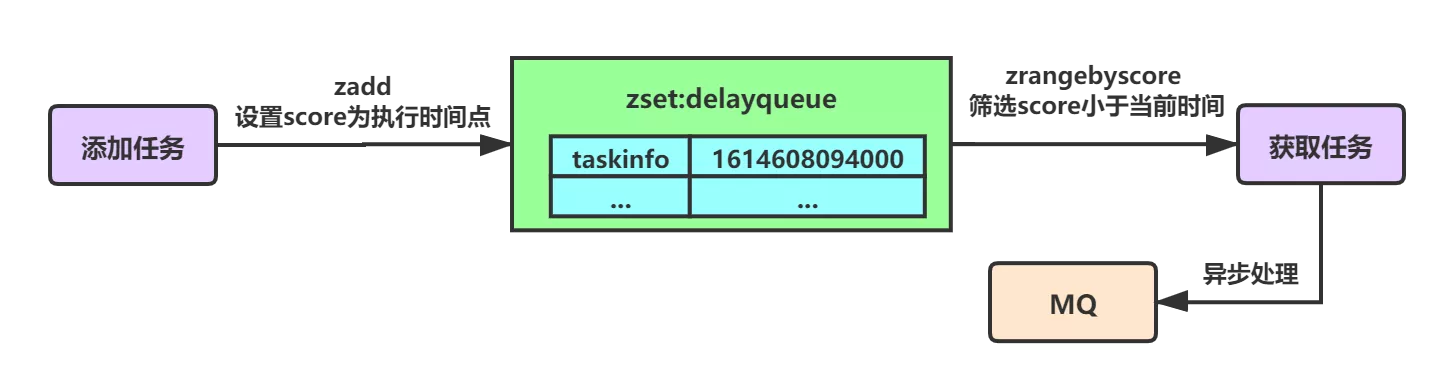

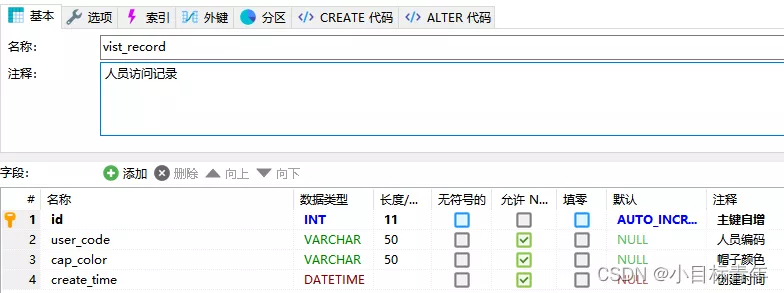

基于Redis实现延时队列的优化方案小结

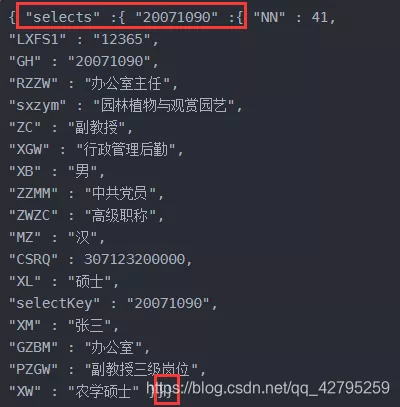

mysql中取出json字段的小技巧

thinkphp3.2.3

IDC报告:腾讯云数据库稳居关系型数据库市场TOP 2!

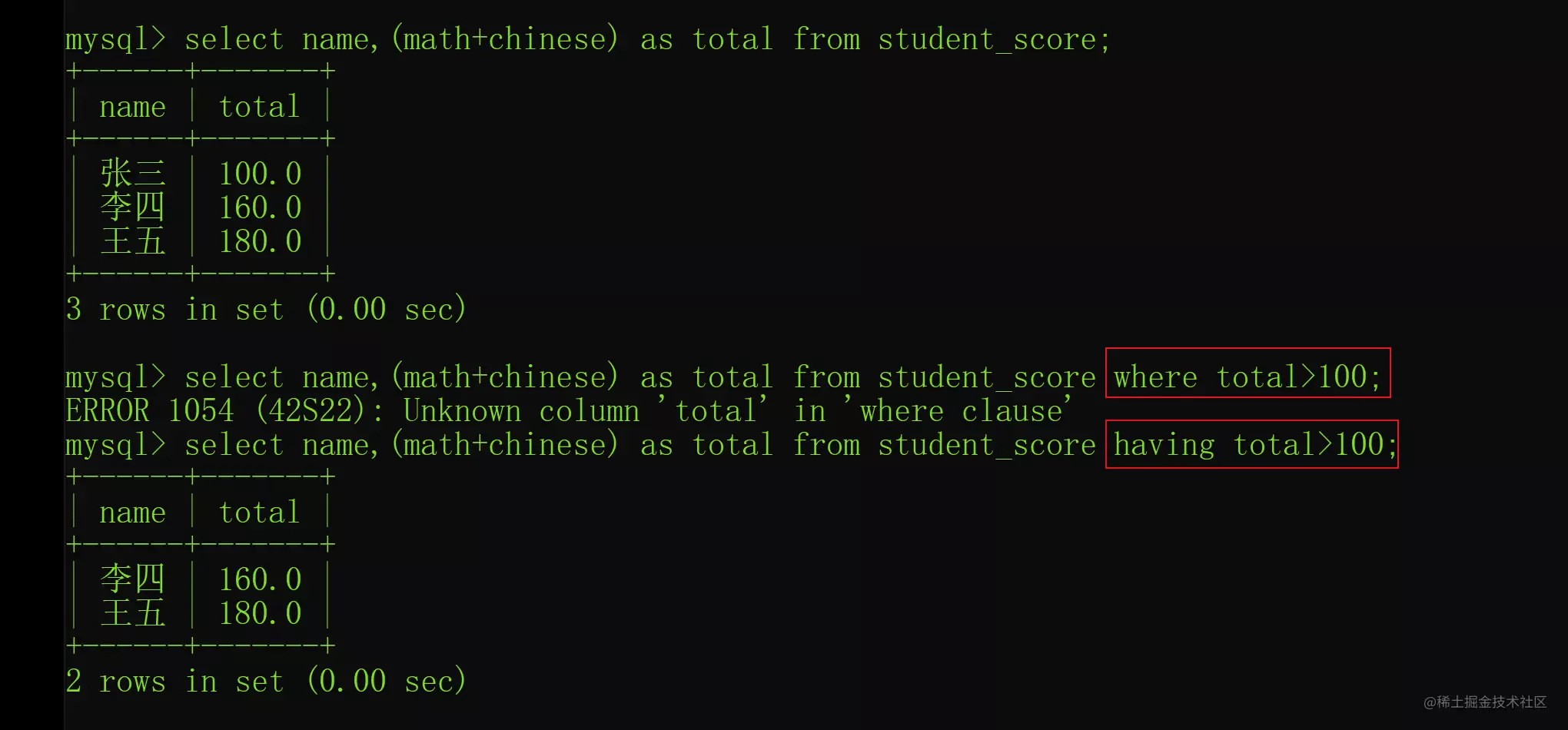

MYSQL group by 有哪些注意事项

MySQL queries the latest qualified data rows

Which is more cost-effective, haqu K1 or haqu H1? Who is more worth starting with?

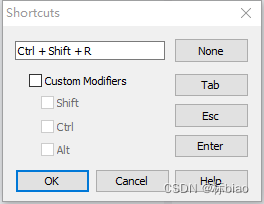

Winedt common shortcut key modify shortcut key latex compile button

Three traversal methods of binary tree

随机推荐

漫画:有趣的海盗问题 (完整版)

Cartoon: interesting [pirate] question

Machine learning 02: model evaluation

Knowledge points of MySQL (6)

统计php程序运行时间及设置PHP最长运行时间

ThoughtWorks global CTO: build the architecture according to needs, and excessive engineering will only "waste people and money"

张平安:加快云上数字创新,共建产业智慧生态

CMake教程Step5(添加系统自检)

ICML 2022 | meta proposes a robust multi-objective Bayesian optimization method to effectively deal with input noise

Compter le temps d'exécution du programme PHP et définir le temps d'exécution maximum de PHP

漫画:寻找无序数组的第k大元素(修订版)

goto Statement

The five most difficult programming languages in the world

Error in compiling libssh2. OpenSSL cannot be found

winedt常用快捷键 修改快捷键latex编译按钮

普通程序员看代码,顶级程序员看趋势

域名解析,反向域名解析nbtstat

Cloud security daily 220705: the red hat PHP interpreter has found a vulnerability of executing arbitrary code, which needs to be upgraded as soon as possible

Embedded-c Language-5

Matery主题自定义(一)黑夜模式