
Geometric deep learning pioneer Michael Bronstein's long-form analysis of 2021

2022-07-06 22:10:00 【wujianming_ one hundred and ten thousand one hundred and sevent】


Editor's note: Geometric machine learning and graph-based machine learning are currently among the most popular research topics, and research in this field developed rapidly over the past year. In this article, geometric deep learning pioneer Michael Bronstein, working with Petar Veličković, interviews many outstanding experts in the field, summarizes the research highlights of the past year, and looks ahead to the trends in this direction for 2022.

This article is compiled from https://towardsdatascience.com/predictions-and-hopes-for-geometric-graph-ml-in-2022-aa3b8b79f5cc#0b34

Author: Michael Bronstein

DeepMind Professor of Artificial Intelligence at the University of Oxford and Head of Graph Machine Learning at Twitter

1. Summary of key points

- Geometry is becoming more and more important in machine learning. Differential geometry and related fields have brought new ideas into machine learning, including new equivariant graph neural network (GNN) architectures that exploit symmetries and graph analogues of curvature, as well as ways of understanding and exploiting uncertainty in deep learning models.

- Message passing is still the dominant paradigm in GNNs. In 2020, the research community came to terms with the shortcomings of message passing GNNs and looked for more expressive architectures beyond this paradigm. In 2021, it became apparent that message passing still has the upper hand, because several works showed that applying GNNs to subgraphs yields better expressive power.

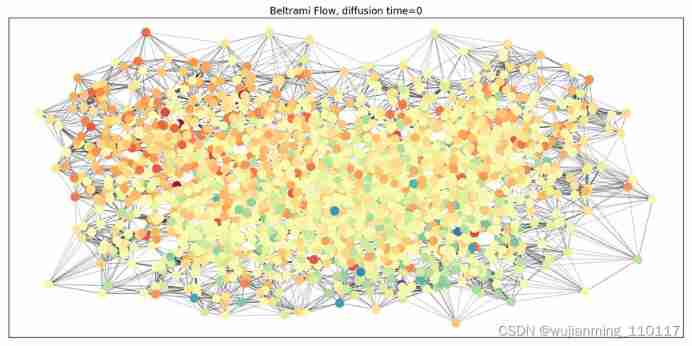

- Differential equations give rise to new GNN architectures. The Neural ODE trend has extended to graph machine learning. Several works showed how GNN models can be formalized as discretizations of continuous differential equations. In the short term, these efforts should lead to new architectures that avoid common GNN problems such as over-smoothing and over-squashing. In the long run, they may give a better understanding of how GNNs work and how to make them more expressive and interpretable.

- Old ideas from signal processing, neuroscience, and physics have been reborn. Many researchers believe that graph signal processing rekindled the recent interest in graph machine learning and gave the field its first set of analytical tools (for example, generalized Fourier transforms and graph convolutions). Representation theory and other fundamental techniques from classical signal processing and physics already led to several important advances in 2021 and still hold great potential.

- Modeling complex systems requires more than just graphs. The 2021 Nobel Prize in Physics was awarded to Giorgio Parisi in recognition of his study of complex systems. Such systems can usually be abstracted as graphs, but sometimes more complex structures, such as non-pairwise relations and dynamic behavior, must be considered. Many works in 2021 dealt with dynamic relational systems and showed how GNNs can be extended to higher-order structures (such as the cellular and simplicial complexes traditionally handled in algebraic topology). Machine learning will likely adopt more ideas from this field.

- In graph machine learning, reasoning, axiomatization, and generalization are still important open problems. This year saw continued progress in GNN architectures inspired by algorithmic reasoning, along with more robust work on out-of-distribution (OOD) generalization in graph-structured tasks. We now have knowledge graph reasoners explicitly aligned with the generalized Bellman-Ford algorithm, and graph classifiers that use explicit causal models of distribution shift. These are promising directions toward more robust and more general GNNs. Many of these topics may make great progress in 2022.

- Graphs are becoming more and more popular in reinforcement learning, but there is probably still a lot of room for exploration. Reinforcement learning problems are full of graphs and symmetries (usually in the structure of the agent or in the representation of the environment). In 2021, several research directions tried to exploit this structure, with varying degrees of success. We now have a better understanding of how to use these symmetries in reinforcement learning, including in multi-agent systems. Modeling agents as graphs, however, does not seem to require strictly retaining the graph structure. Reinforcement learning empowered by graphs and geometry has broad prospects in 2022.

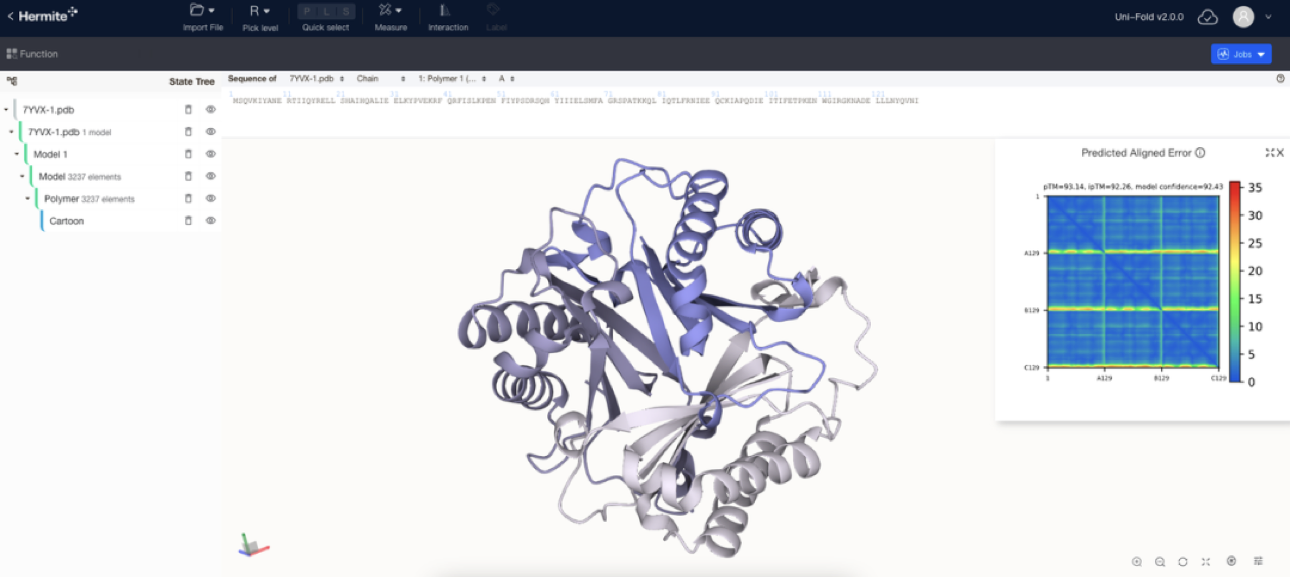

- AlphaFold 2 is a major achievement of geometric machine learning and a paradigm shift in structural biology. In the 1970s, Nobel laureate in Chemistry Christian Anfinsen proposed that the three-dimensional folded structure of a protein could be predicted. This is a very difficult computational task and the "holy grail" of structural biology. In 2021, DeepMind's AlphaFold 2 broke the previous record on this problem, reached an accuracy that convinced experts in the field, and has been widely adopted. At the core of AlphaFold 2 is a geometric architecture based on an equivariant attention mechanism.

- GNNs and their integration with Transformer models have helped drug discovery and design. The origins of GNNs in fact trace back to computational chemistry in the 1990s, so it is no surprise that molecular graph analysis is one of the most popular GNN applications. In 2021, significant progress was made in this area, with dozens of new architectures and several results that surpassed existing benchmarks. Applying Transformers to graph data has been very successful and promises to replicate the key to the Transformer architecture's success in natural language processing: large pre-trained models that generalize across tasks.

- AI-led drug discovery increasingly uses geometric and graph machine learning. The success of AlphaFold 2 and of molecular graph neural networks has brought humanity one step closer to the dream of designing new drugs with artificial intelligence. Alphabet's new company Isomorphic Labs marks the industry's "bet" on this technology. To realize this dream, modeling the interactions between molecules is an important frontier problem that must be solved.

- Graph-based methods also help quantum machine learning. For most machine learning experts, quantum machine learning is still a niche curiosity, but as quantum computing hardware becomes more widely available it is quickly becoming a reality. Recent work from Alphabet X showed the advantages of graph-structured inductive biases in quantum machine learning architectures, combining these two seemingly unrelated fields. Because quantum physical systems usually have rich and deep group symmetries that can be exploited in quantum architecture design, geometry may come to play an even more important role.

In 2021, geometric and graph-based machine learning methods appeared in a series of high-profile applications.

2. Geometry is becoming more and more important in machine learning

If we had to choose one word that pervaded almost every area of graph representation learning in 2021, it would without doubt be "geometry".

Melanie Weber:

"In the past year, we have seen many classical geometric ideas applied to graph machine learning in new ways." (Melanie Weber, Hooke Research Fellow at the Mathematical Institute, University of Oxford)

According to Melanie, notable examples include using symmetry to learn models more efficiently, applying concepts from optimal transport, and using curvature concepts from differential geometry in representation learning.

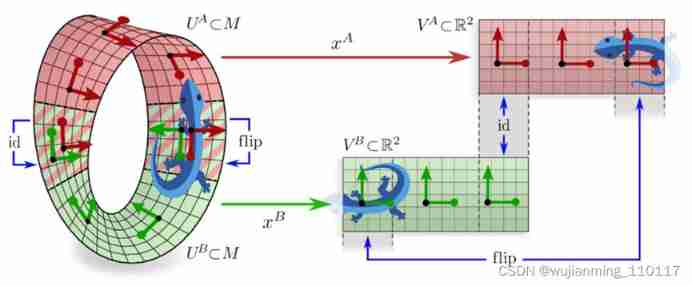

Recently, there has been strong interest in understanding the geometric characteristics of relational data and in using this information to learn good (Euclidean or non-Euclidean) representations. This has given rise to many GNN architectures that encode a specific geometry. A notable example is the hyperbolic GNN model, first proposed at the end of 2019 as a tool for learning efficient representations of hierarchical data. In 2020, a large number of new models and architectures emerged that learn hyperbolic representations more efficiently or capture more complex geometric features. In addition, another line of work exploits geometric information such as equivariance and symmetry.

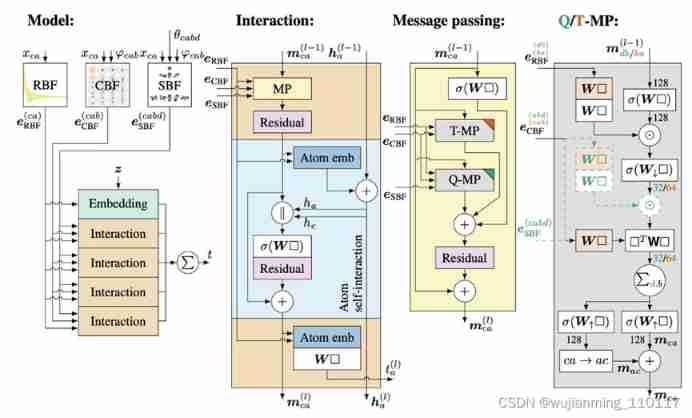

Figure note: In 2021, the field of graph neural networks saw a surge of geometric techniques. For example, equivariant message passing plays a key role in biochemical applications such as small-molecule property prediction and protein folding.

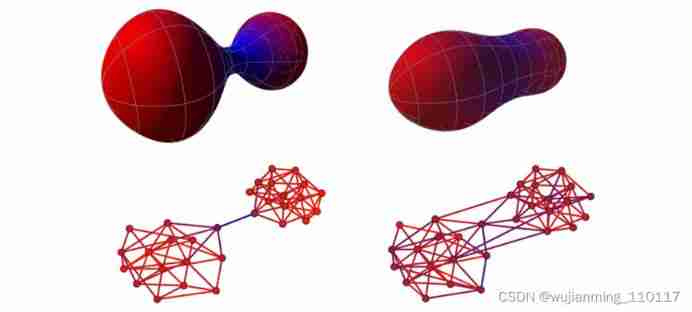

Melanie adds that differential geometry has many potential applications in 2022. Discrete differential geometry (the study of the geometry of discrete structures such as graphs or simplicial complexes) has already been used to analyze GNNs, and discrete notions of curvature are an important tool for characterizing the local and global geometric properties of discrete structures. In the paper "Understanding over-squashing and bottlenecks on graphs via curvature", Topping et al. proposed an important application of curvature in graph machine learning: they studied discrete Ricci curvature in the context of graph rewiring and proposed a new method for alleviating the over-squashing effect in GNNs. Discrete curvature is likely to be connected to other structural and topological problems in graph machine learning as well.

Melanie hopes these topics will continue to influence the field in 2022 and be applied to more graph machine learning tasks. This may drive advances in computation that ease the challenges of implementing non-Euclidean algorithms, a job for which traditional tools designed for Euclidean data are poorly suited. In addition, geometric tools such as discrete curvature are computationally expensive and hard to integrate into large-scale applications. Advances in computing techniques, or the development of dedicated libraries, could make these geometric ideas easier for practitioners to use.
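To make the idea of discrete curvature concrete, here is a minimal sketch using the simplest combinatorial Forman-Ricci curvature of an unweighted graph, F(u, v) = 4 - deg(u) - deg(v). This is an illustrative stand-in chosen for brevity, not the curvature used by Topping et al. (their paper works with a more refined variant), and the toy graph and function name are assumptions made for this example. The most negatively curved edge is the bridge between two dense clusters, which is the kind of bottleneck where over-squashing is expected.

```python
from collections import defaultdict

def forman_curvature(edges):
    """Simplest Forman-Ricci curvature of each edge of an unweighted graph:
    F(u, v) = 4 - deg(u) - deg(v). Illustrative only; triangles are ignored."""
    deg = defaultdict(int)
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return {(u, v): 4 - deg[u] - deg[v] for u, v in edges}

# Hypothetical toy graph: two triangles joined by a single bridge edge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
curv = forman_curvature(edges)

# The most negatively curved edge is a candidate bottleneck.
bridge = min(curv, key=curv.get)
print(curv)
print("candidate bottleneck:", bridge)
```

A rewiring method in this spirit would add or remove edges around the most negatively curved ones; the sketch only computes the curvature and does not rewire anything.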

Pim de Haan:

"Designers of graph neural networks are paying more and more attention to the rich symmetry structure of graphs." (Pim de Haan, PhD student at the University of Amsterdam)

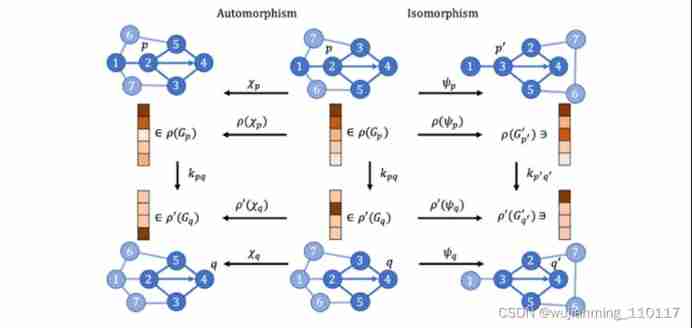

Traditionally, GNNs use permutation-invariant message passing, and later work used group and representation theory to construct equivariant maps between representations of the node permutation group. More recently, by analogy with the local symmetries of manifolds (known as gauge symmetries), researchers have begun to study local symmetries of graphs arising from isomorphic subgraphs. It turns out that some problems on graphs should be analyzed with category theory rather than group theory, and that building symmetry into neural network architectures can improve performance on some graph machine learning tasks (for example, molecular prediction).

Figure note: Graph machine learning researchers exploit the rich symmetry structure of graphs.

Pim predicts: In the new year, I hope to see category theory become a widely used design language for neural networks. It provides a formal language for discussing and exploiting more complex symmetries than before. It can be used to handle local and approximate symmetries of graphs, to combine the geometric and compositional structure of point clouds, and to help study the symmetries of causal graphs.
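The permutation symmetry mentioned above can be checked numerically. The sketch below defines a generic sum-aggregation layer (a hypothetical minimal layer, not any specific published architecture) and verifies that relabeling the nodes before the layer gives the same result as relabeling them after it, while a sum readout over nodes is unaffected by the relabeling.

```python
import numpy as np

def message_passing_layer(A, X, W):
    """One sum-aggregation layer: every node sums its own and its
    neighbours' features, then applies a shared linear map and tanh."""
    return np.tanh((A + np.eye(len(A))) @ X @ W)

rng = np.random.default_rng(0)
n, d = 5, 3
A = np.triu(rng.integers(0, 2, size=(n, n)), 1)
A = A + A.T                                # symmetric adjacency, no self-loops
X = rng.normal(size=(n, d))                # node features
W = rng.normal(size=(d, d))                # shared weights
P = np.eye(n)[rng.permutation(n)]          # random permutation matrix

out = message_passing_layer(A, X, W)
out_perm = message_passing_layer(P @ A @ P.T, P @ X, W)

# Equivariance: relabelling nodes permutes the output rows in the same way.
equivariant = np.allclose(out_perm, P @ out)
# Invariance: a sum readout over nodes ignores the node ordering.
invariant = np.allclose(out_perm.sum(axis=0), out.sum(axis=0))
print(equivariant, invariant)
```

The check works because P(A + I)Pᵀ PX = P(A + I)X and the nonlinearity is applied elementwise, so the permutation passes straight through the layer.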

Francesco Di Giovanni:

"Although graphs are not differentiable, many ideas that have been applied successfully in the analysis of manifolds are gradually emerging in the field of GNNs." (Francesco Di Giovanni, machine learning researcher at Twitter)

Francesco is particularly interested in partial differential equation methods, which were first used to study surfaces and have also been used to process images. He and his colleagues explored the idea of "graph rewiring", which means modifying the underlying adjacency structure and can be seen as an extension of geometric flow methods. A new edge-based notion of curvature was also used to study over-squashing in GNNs and led to a graph rewiring method. Geometry, in the form of preserving and breaking symmetries, is also considered a key factor in applying GNNs to molecules.

Francesco believes research in this area is only just emerging. Graph rewiring techniques may help address some of the main shortcomings of message passing, including performance on heterophilic datasets and the handling of long-range dependencies. He hopes the large conceptual gap between convolution on graphs and convolution on manifolds can be closed quickly, which may lead to the next generation of GNNs. Finally, Francesco would be glad to see geometric variational methods further reveal the internal dynamics of GNNs, and hopes they will provide a more principled way to design new GNN architectures and compare existing ones.

Figure note: Concepts from differential geometry such as Ricci curvature and geometric flows are used in graph machine learning to improve information flow in GNNs.

Aasa Feragen:

"The hope is that mathematical theories such as differential geometry can provide principled solutions to problems where exact formulations run into nonlinear geometry." (Aasa Feragen, assistant professor at the University of Copenhagen)

Aasa believes differential geometry plays a fundamental role in understanding and exploiting uncertainty in deep learning models. For example, model uncertainty can be used to generate a geometric representation of the data that reveals biological information which remains very blurry under a standard Euclidean representation. Another example uses the Riemannian geometry encoded by local directional data to quantify the uncertainty of structural brain connectivity.

Geometric models are usually applied to heavily preprocessed data in order to reveal its geometric structure. Such data is typically estimated from raw data, which contains errors and uncertainty. Aasa hopes that in 2022 more work will begin to assess how the uncertainty of the raw data affects the data that is processed directly, and how this uncertainty should be propagated to the model. Aasa also hopes that measurement error can be incorporated into the analysis of non-Euclidean data, helping to bridge the gap between statistics and deep learning.

3. Message passing is still the dominant paradigm in GNNs

Haggai Maron:

"I hope the research direction of subgraph GNNs and the corresponding reconstruction conjectures will bear rich fruit in the new year." (Haggai Maron, research scientist at NVIDIA)

Because message passing is equivalent to the Weisfeiler-Lehman test, graph machine learning has run into fundamental limitations of this paradigm. In his predictions for 2021, Michael Bronstein wrote that for graph machine learning to keep developing, it would need to move away from the message passing schemes that dominated 2020 and earlier. This prediction has come true to some extent. However, although some more expressive GNN architectures appeared in 2021, most of them still fall within the scope of message passing.

Recently, some researchers have used subgraphs to improve the expressive power of GNNs. Haggai Maron points out that the underlying idea of "subgraph GNNs" is to represent a graph as a collection of its substructures, a theme that can be found in the work of Kelly and Ulam on the graph reconstruction conjecture in the 1960s. The same idea is now used to construct expressive GNNs, and the GNN-related work has in turn inspired new, more refined reconstruction conjectures.
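The limitation referred to here can be seen in a few lines. Below is a minimal sketch of 1-dimensional Weisfeiler-Lehman colour refinement (the helper names and toy graphs are assumptions made for this illustration). A 6-cycle and a pair of disjoint triangles are not isomorphic, yet every node in both graphs has exactly two neighbours, so colour refinement assigns them identical colour histograms. A standard message passing GNN, which is at most as powerful as this test, cannot tell them apart either.

```python
from collections import Counter

def wl_histogram(adj, rounds=3):
    """1-WL colour refinement. Each round, a node's new colour is the pair
    (own colour, sorted multiset of neighbour colours). Returns the final
    histogram of colours, which is comparable across graphs."""
    colors = {v: 0 for v in adj}                       # uniform start colour
    for _ in range(rounds):
        colors = {
            v: (colors[v], tuple(sorted(colors[u] for u in adj[v])))
            for v in adj
        }
    return Counter(colors.values())

def cycle(nodes):
    """Adjacency lists of a cycle through the given nodes."""
    n = len(nodes)
    return {nodes[i]: [nodes[(i - 1) % n], nodes[(i + 1) % n]] for i in range(n)}

hexagon = cycle([0, 1, 2, 3, 4, 5])
two_triangles = {**cycle([0, 1, 2]), **cycle([3, 4, 5])}
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

# Non-isomorphic graphs that 1-WL cannot distinguish.
same = wl_histogram(hexagon) == wl_histogram(two_triangles)
# A graph with different degrees is separated immediately.
different = wl_histogram(hexagon) != wl_histogram(path)
print(same, different)
```

Equal histograms never prove isomorphism; they only mean the test failed to separate the two graphs, which is exactly the gap that more expressive architectures try to close.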

4. Differential equations give rise to new GNN architectures

Figure note: In 2021, several works derived graph neural networks as discretizations of diffusion partial differential equations.

Pierre Vandergheynst:

"This offers a new viewpoint: GNNs can be used to extract meaningful information for downstream machine learning tasks, and the focus shifts from the domain that supports the information to using the graph as the support for computation on the signal." (Pierre Vandergheynst, EPFL, the Swiss Federal Institute of Technology in Lausanne)

Recasting learning on graphs in terms of the dynamics of physical systems described by differential equations was another trend of 2021. Just as ordinary differential equations are a powerful tool for understanding residual neural networks ("Neural ODEs" received a best paper award at NeurIPS 2018), partial differential equations can model information propagation on graphs. Such equations can be solved by iterative numerical schemes, which recovers many standard GNN architectures. In this view, a graph is regarded as a discrete representation of a continuous object.

Pierre believes that in 2022, using the graph as a mechanism for performing local, coherent computation and exchanging information over a given dataset, with attention to the global properties of the data, will become a new trend. This should spark interest in unsupervised and zero-shot learning.
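As a rough illustration of how an iterative numerical scheme resembles a stack of GNN layers, the sketch below takes explicit Euler steps of the graph heat equation dX/dt = -LX, using the random-walk Laplacian L = I - D⁻¹A. Everything here is an assumption made for the example: the step size `tau`, the toy path graph, and the absence of learnable weights. The architectures discussed in the article use learned, feature-dependent diffusivities rather than this fixed one.

```python
import numpy as np

def diffusion_step(A, X, tau=0.1):
    """One explicit Euler step of dX/dt = -(I - D^{-1} A) X.
    Each node moves a fraction tau towards the mean of its neighbours,
    which has the shape of a weight-free graph convolution layer."""
    deg = A.sum(axis=1, keepdims=True)
    return X + tau * (A @ X / deg - X)

# Path graph on 4 nodes; all the "heat" starts at node 0.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X0 = np.array([[1.0], [0.0], [0.0], [0.0]])

X = X0.copy()
for _ in range(200):                      # 200 "layers" with shared dynamics
    X = diffusion_step(A, X)

spread_before = float(X0.max() - X0.min())
spread_after = float(X.max() - X.min())
print(spread_before, spread_after)
```

After many steps the node features become nearly identical. This is the over-smoothing behaviour mentioned in the summary, and it is one reason the continuous view is useful: changing the equation or the discretization changes how quickly features collapse.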

5. Old ideas from signal processing, neuroscience, and physics are reborn

Many modern GNN methods originated in the field of signal processing. Pierre Vandergheynst, one of the fathers of graph signal processing (GSP), offers an interesting perspective on the development of graph machine learning methods:

Graph signal processing extends digital signal processing in two ways. (1) It generalizes the domain that supports the information. Traditional digital signal processing is defined on low-dimensional Euclidean spaces, whereas graph signal processing is defined on much more complex, yet still structured, objects that can be represented by graphs (for example, networks or meshed surfaces). (2) It uses a graph (some kind of nearest-neighbor graph) that expresses the similarity between samples, which makes it possible to drop the structured domain altogether and process a dataset directly. The idea is that the label field inherits some regularity that can be defined by the graph and captured by suitable transforms. The graph therefore supports local computation over the whole dataset. Some interesting ideas in GNNs can be traced back to these early motivations, and several highlights of 2021 continued this trend.

Pierre Vandergheynst:

"Classical linear transforms (for example, the Fourier transform and the wavelet transform) provide a universal latent space with certain mathematical properties (for example, smooth signals have low-frequency Fourier coefficients, and piecewise-smooth signals have sparse, localized wavelet coefficients)." (Pierre Vandergheynst, EPFL, the Swiss Federal Institute of Technology in Lausanne)

In the past, researchers revealed the characteristics of signals by constructing linear transforms. Physicists were particularly advanced in designing transforms that are equivariant to different symmetries based on group actions. Examples include the wavelet transform on the affine group and linear time-frequency analysis on the Weyl-Heisenberg group. Work on coherent states in mathematical physics proposed a general recipe: construct a linear transform by parameterizing functions with a group representation. In 2021, several excellent papers went further by introducing nonlinear and learnable parametric functions, endowing GNNs with symmetries and achieving impressive results on problems in physics and chemistry.

Figure note: Group representations are a traditional tool in signal processing and physics, and they can be used to derive coordinate-independent deep learning architectures that apply to manifolds.

Pierre believes the trend of building structured latent spaces will continue in 2022, driven partly by application demands and partly by the trade-off between adaptability and interpretability (structured transform domains are less adaptable but highly interpretable, and GNNs can strike a good balance between the two).
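The statement that smooth signals concentrate in low-frequency coefficients carries over to graphs through the graph Fourier transform, which projects a signal onto the eigenvectors of the graph Laplacian. The sketch below is an illustration under assumptions of our own (an 8-node ring graph, the combinatorial Laplacian, and hypothetical helper names): a slowly varying signal puts essentially all of its energy into the lowest graph frequencies, while an alternating signal puts none there.

```python
import numpy as np

n = 8
# Ring graph: node i is connected to node i + 1 (mod n).
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0

L = np.diag(A.sum(axis=1)) - A            # combinatorial Laplacian
eigvals, U = np.linalg.eigh(L)            # ascending "graph frequencies"

def gft(x):
    """Graph Fourier transform: coefficients of x in the Laplacian eigenbasis."""
    return U.T @ x

def low_freq_energy(x, k=3):
    """Fraction of signal energy in the k lowest graph frequencies."""
    c = gft(x) ** 2
    return float(c[:k].sum() / c.sum())

smooth = np.cos(2 * np.pi * np.arange(n) / n)   # varies slowly around the ring
rough = (-1.0) ** np.arange(n)                  # flips sign at every node

print(low_freq_energy(smooth), low_freq_energy(rough))
# The transform is orthogonal, so it can be inverted exactly.
reconstructed = U @ gft(smooth)
```

A spectral graph convolution in this setting amounts to rescaling the coefficients returned by `gft` before transforming back with `U`.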

Traditionally, neuroscience has been closely tied to signal processing. Indeed, we learn how animals perceive the world around them by analyzing the electrical signals transmitted in the brain.

Kim Stachenfeld:

"My research background is in computational neuroscience, and I first used graphs in my research because I wanted to represent how animals learn structure." (Kim Stachenfeld, research scientist at DeepMind)

With graphs as the mathematical object, one can analyze how an animal represents related concepts acquired through separate episodes of experience and stitches them into a globally coherent, integrated body of knowledge.

In 2021, several studies combined the local operations of neural networks with latent or intrinsic geometric representations. For example, some work on invariance in GNNs lets GNNs exploit geometry and symmetry beyond the graph structure. In addition, using graph Laplacian eigenvectors as positional encodings for graph Transformers frees GNNs from the constraints of the graph structure and lets them use information about intrinsic, low-dimensional geometric properties.

Kim is very excited about applications of GNNs in neuroscience and in broader fields, especially applications to large-scale real-world data, such as using GNNs to predict traffic, simulate complex physical dynamics, and solve problems on very large graphs. Work applying GNNs to neural data analysis has also emerged. These problems have real-world impact and require models that scale and generalize efficiently while still capturing genuinely complex dynamics. The design goal for GNNs is to balance structure against expressive power.
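A minimal sketch of Laplacian positional encodings follows, assuming the symmetric normalized Laplacian and a hypothetical helper name; published graph Transformers differ in details such as how they handle the sign ambiguity of eigenvectors. Each node receives the entries of the first `k` non-trivial eigenvectors as extra input features, which gives the model a notion of where the node sits in the graph.

```python
import numpy as np

def laplacian_positional_encoding(A, k):
    """Return an (n, k) matrix whose rows are node encodings built from the
    k lowest non-trivial eigenvectors of L = I - D^{-1/2} A D^{-1/2}.
    Assumes a connected graph with no isolated nodes."""
    deg = A.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    L = np.eye(len(A)) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(L)   # eigenvalues in ascending order
    return eigvecs[:, 1:k + 1]             # skip the trivial first eigenvector

# Path graph on 6 nodes.
n = 6
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0

pe = laplacian_positional_encoding(A, k=2)
print(pe.shape)
# The first non-trivial eigenvector separates the two ends of the path.
opposite_ends = pe[0, 0] * pe[-1, 0] < 0
```

Eigenvectors are only defined up to sign, so `pe` and `-pe` are equally valid encodings. One common workaround is to randomly flip signs during training, which the sketch does not do.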

6. Modeling complex systems requires more than just graphs

Tina Eliassi-Rad:

"The 2021 Nobel Prize in Physics was awarded for the study of complex systems. Fundamentally, complex systems consist of entities and the interactions between them. Complex systems are usually represented as complex networks, which provides motivation for graph machine learning." (Tina Eliassi-Rad, professor at Northeastern University)

As graph machine learning matures, we need to analyze carefully the different forms of system dependencies (for example, subset, temporal, and spatial), the common mathematical representations (graphs, simplicial complexes, hypergraphs), and their underlying assumptions. There is no perfect way to represent a complex system, and the modeling decisions made when examining a dataset from one system may not transfer to another system, or even to another dataset from the same system. However, considering the system dependencies associated with the chosen mathematical representation points to new research opportunities for graph machine learning.

Pierre Vandergheynst:

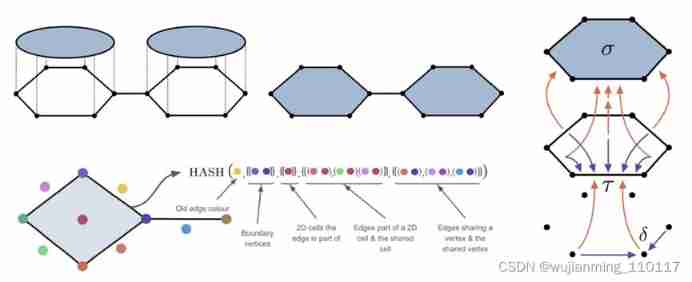

Graphs cannot provide an appropriate model for every complex system, and approaches beyond graphs are needed. In 2021, several excellent papers proposed new structured information domains obtained by generalizing graphs. Building new neural networks with simplicial complexes and other ideas from algebraic topology has improved on GNNs both in theory and in practice. This trend will continue in 2022, with deeper study of the wealth of structured mathematical objects offered by algebraic topology and differential geometry.

Figure note: Extending graphs to cellular or simplicial complexes makes it possible to pass messages over more complex topologies, producing GNN architectures whose expressive power goes beyond the WL test.

Cristian Bodnar:

"We are likely to see the use of more exotic mathematical objects that have so far been little explored. I believe these topological methods can provide a new set of mathematical tools for analyzing and understanding GNNs." (Cristian Bodnar, PhD student at the University of Cambridge)

Cristian Bodnar is keen on the connections between algebraic topology and graph machine learning. In the past year, convolution and message passing models on simplicial and cellular complexes addressed many shortcomings of GNNs (for example, detecting specific substructures, capturing long-range and higher-order interactions, handling higher-order features, and escaping the WL hierarchy). They achieved state-of-the-art results on molecular problems, trajectory prediction, and classification tasks.

In 2022, Cristian expects these methods to extend to exciting new applications such as computational algebraic topology, link prediction, and computer graphics.

Rose Yu:

"I am very excited about the role of graph machine learning in learning spatiotemporal dynamics." (Rose Yu, assistant professor at UCSD)

Spatiotemporal graphs are an important kind of complex network system whose structure evolves over time. Rose notes that applications such as COVID-19 forecasting, traffic forecasting, and trajectory modeling need to capture the complex dynamics of highly structured time-series data. Graph machine learning can capture the interactions between time series, their spatial dependencies, and the correlations in their dynamics.

In 2022, Rose would be happy to see ideas from time series and dynamical systems integrated with graph machine learning. She hopes these ideas will lead to new model designs and training algorithms and to a better understanding of the internal mechanisms of complex dynamical systems. Graph neural networks have permutation symmetry (invariance or equivariance), and symmetry discovery is an important but neglected problem in graph representation learning. This global symmetry may be fundamentally limiting, however, and some excellent work has extended graph neural networks to symmetry groups beyond permutations and to local symmetries. She hopes to see more research on symmetry in graph neural networks.


Reference link :

https://towardsdatascience.com/predictions-and-hopes-for-geometric-graph-ml-in-2022-aa3b8b79f5cc#0b34

https://blog.csdn.net/BAAIBeijing/article/details/122757723

边栏推荐

- What a new company needs to practice and pay attention to

- [Chongqing Guangdong education] Tianjin urban construction university concrete structure design principle a reference

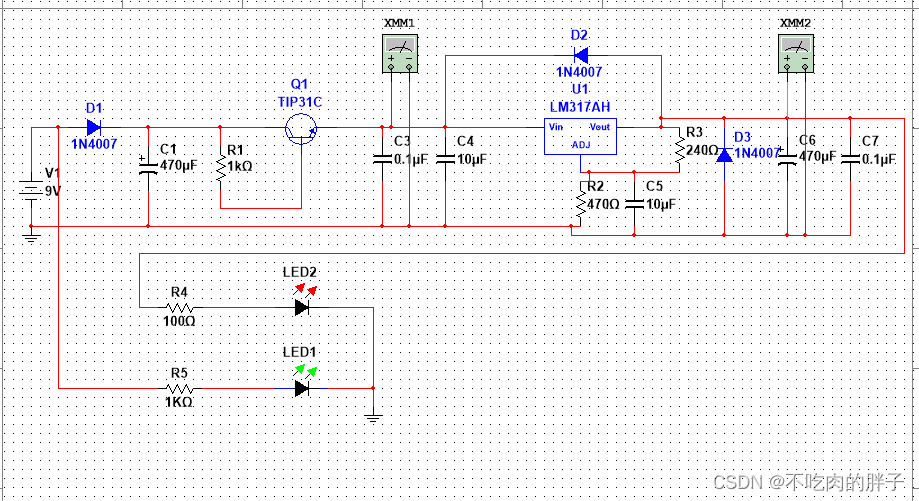

- Adjustable DC power supply based on LM317

- MongoDB(三)——CRUD

- Classic sql50 questions

- Embedded common computing artifact excel, welcome to recommend skills to keep the document constantly updated and provide convenience for others

- VIP case introduction and in-depth analysis of brokerage XX system node exceptions

- HDR image reconstruction from a single exposure using deep CNNs阅读札记

- HDU 2008 数字统计

- 2022年6月国产数据库大事记-墨天轮

猜你喜欢

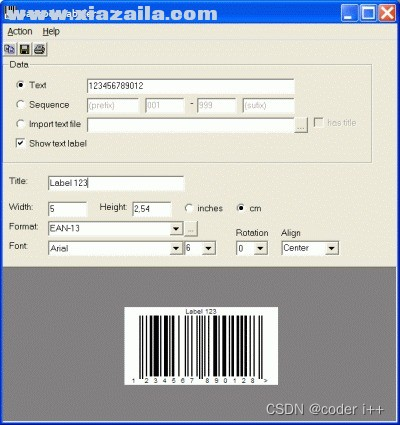

Barcodex (ActiveX print control) v5.3.0.80 free version

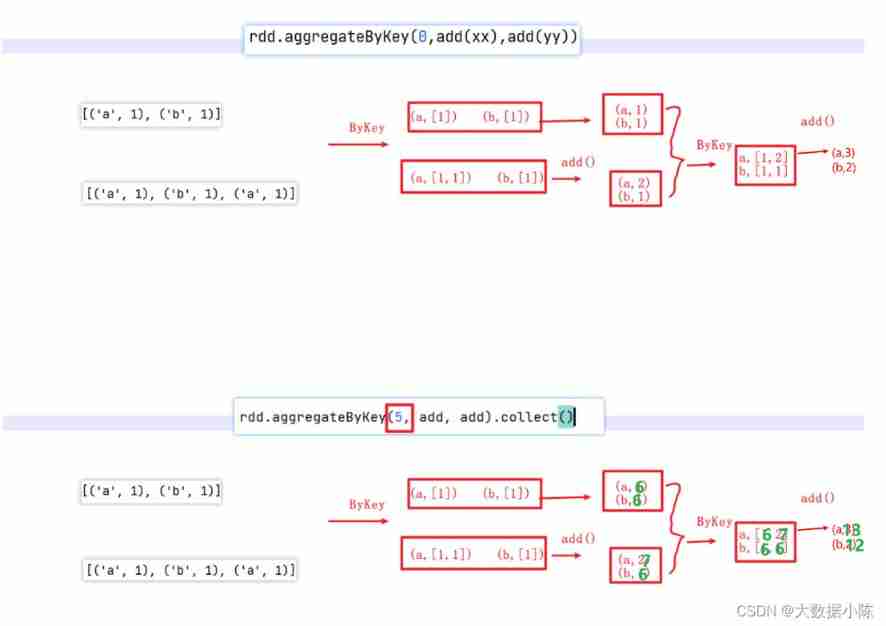

Aggregate function with key in spark

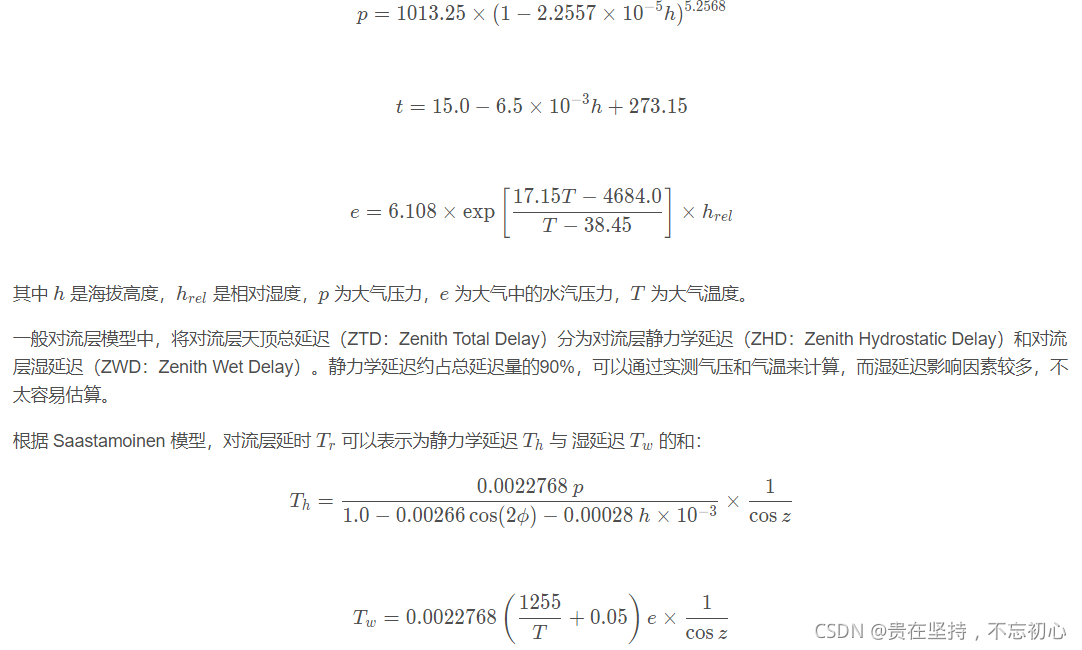

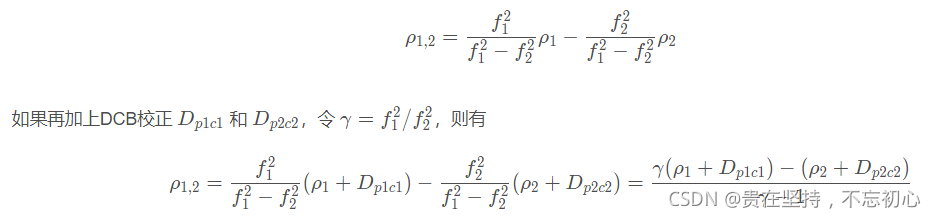

GPS from entry to abandonment (XVII), tropospheric delay

AI enterprise multi cloud storage architecture practice | Shenzhen potential technology sharing

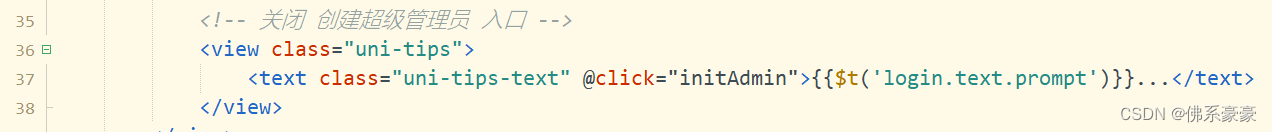

How does the uni admin basic framework close the creation of super administrator entries?

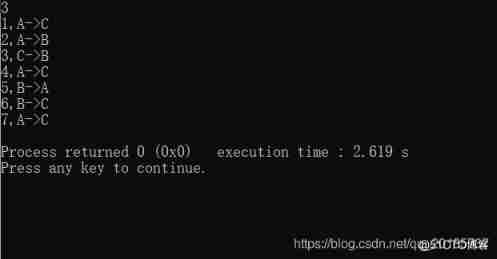

Yyds dry goods inventory C language recursive implementation of Hanoi Tower

GPS from getting started to giving up (XV), DCB differential code deviation

Adjustable DC power supply based on LM317

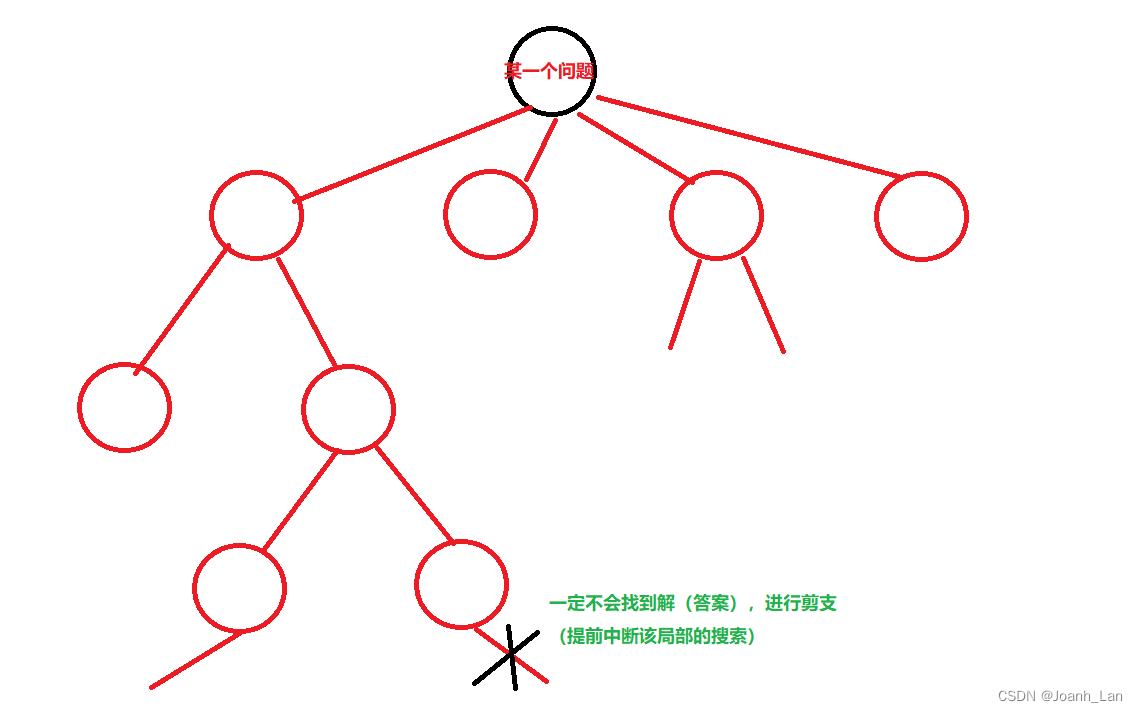

搜素专题(DFS )

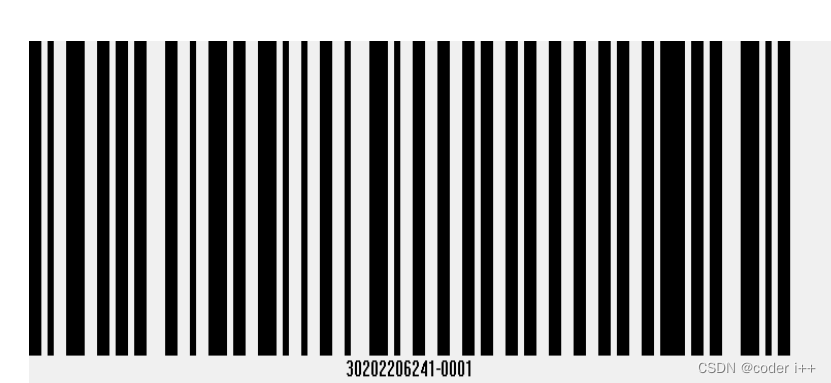

C#實現水晶報錶綁定數據並實現打印4-條形碼

随机推荐

解决项目跨域问题

NPM run dev start project error document is not defined

[sciter]: encapsulate the notification bar component based on sciter

Leveldb source code analysis series - main process

GPS从入门到放弃(十一)、差分GPS

CCNA-思科网络 EIGRP协议

美国科技行业结束黄金时代,芯片求售、裁员3万等哀声不断

【10点公开课】:视频质量评价基础与实践

How does the uni admin basic framework close the creation of super administrator entries?

Checkpoint of RDD in spark

1292_ Implementation analysis of vtask resume() and xtask resume fromisr() in freeros

bat脚本学习(一)

make menuconfig出现recipe for target ‘menuconfig‘ failed错误

C # réalise la liaison des données du rapport Crystal et l'impression du Code à barres 4

LeetCode学习记录(从新手村出发之杀不出新手村)----1

A Mexican airliner bound for the United States was struck by lightning after taking off and then returned safely

Why rdd/dataset is needed in spark

2020 Bioinformatics | GraphDTA: predicting drug target binding affinity with graph neural networks

GPS从入门到放弃(十六)、卫星时钟误差和卫星星历误差

HDU 4912 paths on the tree (lca+)