
[DPDK] DPDK sample source code analysis III: dpdk-l3fwd_001

2022-07-07 03:37:00 【LFTF】

This article walks through the source code of the dpdk-l3fwd sample. By analyzing its logic, it studies what some of the DPDK API functions do and how to use them.

Operating system version: CentOS 8.4

DPDK version: dpdk-20.11.3

For how to create the dpdk-l3fwd project, see: 【DPDK】Compiling the dpdk-l3fwd test case separately

Function module analysis

0、 Introduction to startup parameters

The command to start the dpdk-l3fwd program is:

```
# ./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
```

Parameters before `--` are EAL initialization parameters. Parameters after `--` belong to the application and are used to initialize the ports and queues and to bind them to cores. For the remaining parameters, see:

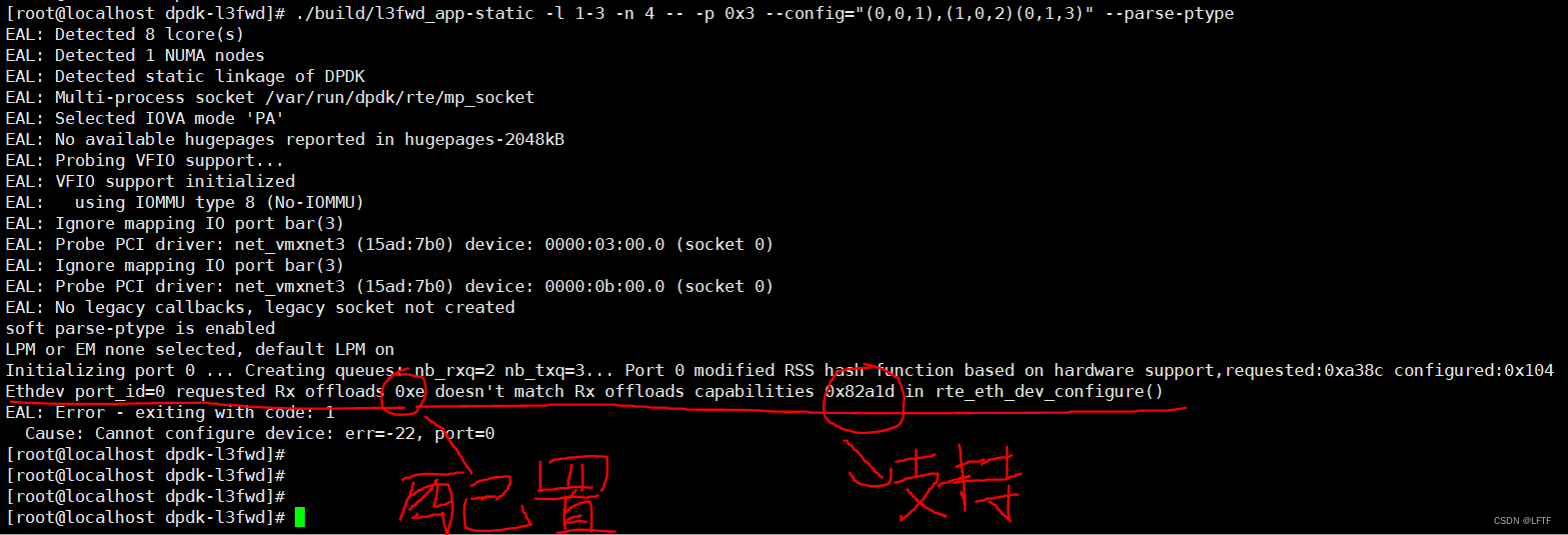
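To make the `--` convention concrete, the standalone sketch below (not DPDK code) finds where a command line splits into its EAL part and its application part. In l3fwd itself, rte_eal_init() returns the number of arguments it consumed, and main() advances argc/argv by that amount before calling parse_args(); `split_at_double_dash` is a hypothetical helper that only mimics the split.

```c
#include <string.h>

/* Hypothetical helper: returns the index of the first "--" in argv,
 * or argc if there is none. Arguments before it are EAL arguments;
 * arguments after it are handled by the application (parse_args()). */
int split_at_double_dash(int argc, char **argv)
{
    for (int i = 1; i < argc; i++) {
        if (strcmp(argv[i], "--") == 0)
            return i;
    }
    return argc;
}
```

For `./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 ...` the function returns 5: argv[1] to argv[4] go to EAL, and everything after argv[5] goes to the application.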

Note: the default NIC model on a VMware virtual machine is e1000, and the following error may appear at startup:

```
# ./build/l3fwd_app-static -l 1-3 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)(0,1,3)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Detected static linkage of DPDK
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-2048kB
EAL: Probing VFIO support...
EAL: VFIO support initialized
EAL: using IOMMU type 8 (No-IOMMU)
EAL: Ignore mapping IO port bar(4)
EAL: Probe PCI driver: net_e1000_em (8086:100f) device: 0000:02:05.0 (socket 0)
EAL: Ignore mapping IO port bar(4)
EAL: Probe PCI driver: net_e1000_em (8086:100f) device: 0000:02:07.0 (socket 0)
EAL: No legacy callbacks, legacy socket not created
soft parse-ptype is enabled
LPM or EM none selected, default LPM on
Initializing port 0 ... Creating queues: nb_rxq=2 nb_txq=3... Port 0 modified RSS hash function based on hardware support,requested:0x104 configured:0
Ethdev port_id=0 nb_rx_queues=2 > 1
EAL: Error - exiting with code: 1
Cause: Cannot configure device: err=-22, port=0
```

The log shows the cause: two RX queues were requested for port 0, but the e1000 driver supports only one (`nb_rx_queues=2 > 1`), so configuring the device fails with err=-22 (-EINVAL). The fix is to change the VM's NIC type to vmxnet3. For configuring vmxnet3 on the virtual machine, see: DPDK - Configuring NIC multi-queue on a virtual machine

Note: after NIC multi-queue is enabled, the NIC driver changes, so the NIC bind and unbind scripts must be updated accordingly.

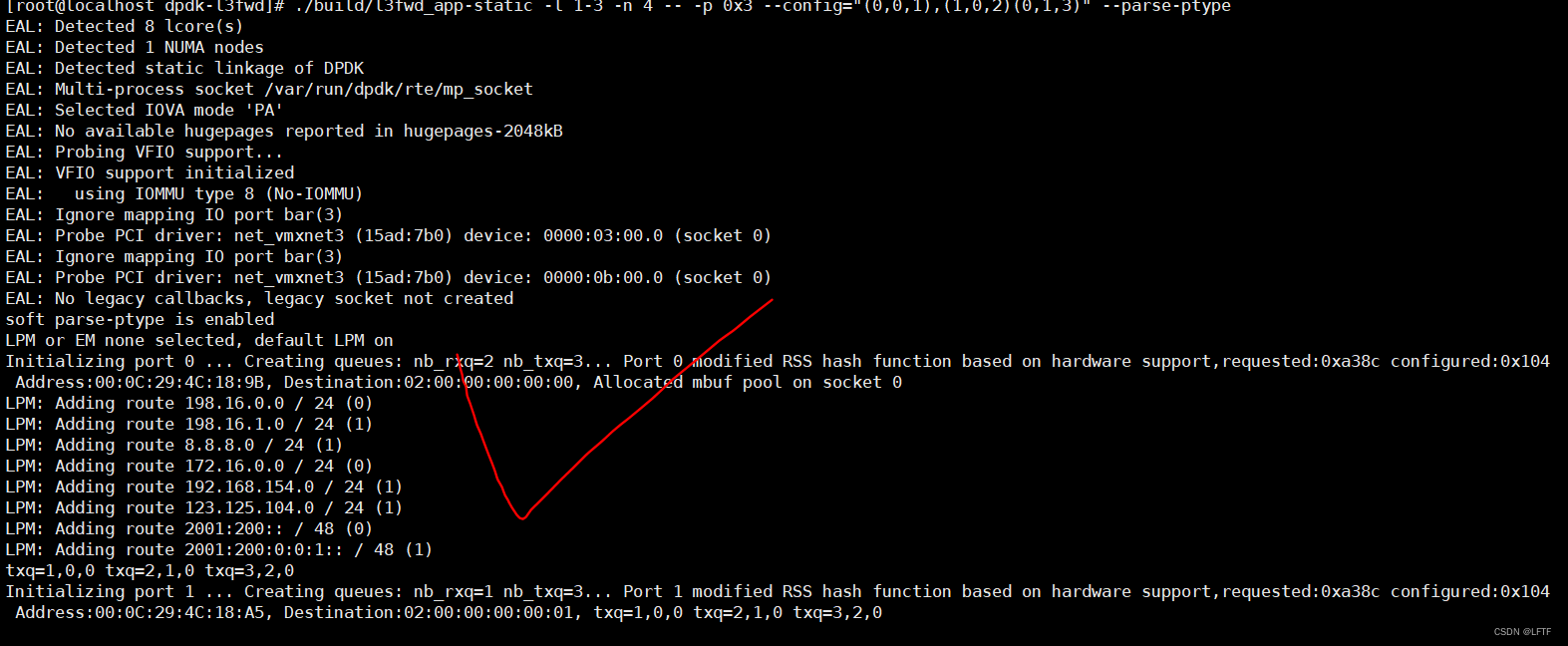
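The `err=-22` in the failing log is `-EINVAL`. The standalone sketch below mimics the kind of check behind the `Ethdev port_id=0 nb_rx_queues=2 > 1` message: the requested queue counts are compared with the maximum the driver reports. `struct port_limits` and `check_queue_counts` are hypothetical names; in DPDK the limits come from `dev_info.max_rx_queues` and `dev_info.max_tx_queues`, and the limit of 1 RX queue is taken from the log.

```c
#include <errno.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-in for the queue limits a driver reports. */
struct port_limits {
    uint16_t max_rx_queues;
    uint16_t max_tx_queues;
};

/* Returns 0 if the requested queue counts fit the limits, otherwise
 * -EINVAL (-22 on Linux), printing a message in the style of the log. */
int check_queue_counts(uint16_t port_id, const struct port_limits *lim,
                       uint16_t nb_rx_q, uint16_t nb_tx_q)
{
    if (nb_rx_q > lim->max_rx_queues) {
        printf("Ethdev port_id=%u nb_rx_queues=%u > %u\n",
               (unsigned)port_id, (unsigned)nb_rx_q,
               (unsigned)lim->max_rx_queues);
        return -EINVAL;
    }
    if (nb_tx_q > lim->max_tx_queues) {
        printf("Ethdev port_id=%u nb_tx_queues=%u > %u\n",
               (unsigned)port_id, (unsigned)nb_tx_q,
               (unsigned)lim->max_tx_queues);
        return -EINVAL;
    }
    return 0;
}
```

With a limit of 1 RX queue, the `--config` used above (two queues on port 0) is rejected before any queue is set up, which matches the failure in the log.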

After this change, the program prints the following at startup:

```
# ./build/l3fwd_app-static -l 1-3 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)(0,1,3)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Detected static linkage of DPDK
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-2048kB
EAL: Probing VFIO support...
EAL: VFIO support initialized
EAL: using IOMMU type 8 (No-IOMMU)
EAL: Ignore mapping IO port bar(3)
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:03:00.0 (socket 0)
EAL: Ignore mapping IO port bar(3)
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:0b:00.0 (socket 0)
EAL: No legacy callbacks, legacy socket not created
soft parse-ptype is enabled
LPM or EM none selected, default LPM on
Initializing port 0 ... Creating queues: nb_rxq=2 nb_txq=3... Address:00:0C:29:4C:18:9B, Destination:02:00:00:00:00:00, Allocated mbuf pool on socket 0
LPM: Adding route 198.16.0.0 / 24 (0)
LPM: Adding route 198.16.1.0 / 24 (1)
LPM: Adding route 8.8.8.0 / 24 (1)
LPM: Adding route 172.16.0.0 / 24 (0)
LPM: Adding route 192.168.154.0 / 24 (1)
LPM: Adding route 123.125.104.0 / 24 (1)
LPM: Adding route 2001:200:: / 48 (0)
LPM: Adding route 2001:200:0:0:1:: / 48 (1)
txq=1,0,0 txq=2,1,0 txq=3,2,0
Initializing port 1 ... Creating queues: nb_rxq=1 nb_txq=3... Address:00:0C:29:4C:18:A5, Destination:02:00:00:00:00:01, txq=1,0,0 txq=2,1,0 txq=3,2,0
Initializing rx queues on lcore 1 ... rxq=0,0,0
Initializing rx queues on lcore 2 ... rxq=1,0,0
Initializing rx queues on lcore 3 ... rxq=0,1,0
Port 0: softly parse packet type info
Port 1: softly parse packet type info
Port 0: softly parse packet type info
Checking link statusdone
Port 0 Link up at 10 Gbps FDX Fixed
Port 1 Link up at 10 Gbps FDX Fixed
L3FWD: entering main loop on lcore 2
L3FWD: -- lcoreid=2 portid=1 rxqueueid=0
L3FWD: entering main loop on lcore 3
L3FWD: -- lcoreid=3 portid=0 rxqueueid=1
L3FWD: entering main loop on lcore 1
L3FWD: -- lcoreid=1 portid=0 rxqueueid=0
```

The probe lines (for example `EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:03:00.0 (socket 0)`) show that the driver has changed to vmxnet3, and the program now starts successfully.

1、 Environment configuration module

1.1、EAL initialization

The call is:

```c
/* init EAL */
ret = rte_eal_init(argc, argv);
```

EAL stands for Environment Abstraction Layer. rte_eal_init() mainly determines how operating-system resources (memory, devices, timers, the console, and so on) are allocated to the application.

1.1.1、CPU core detection

EAL detects the number of logical CPUs in the system and checks whether the cores passed on the command line exceed the available ones; if they do, it returns an error. For example:

```
# ./build/l3fwd_app-static -l 1-10 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: lcore 9 unavailable
EAL: lcore 10 unavailable
EAL: invalid core list, please check specified cores are part of 0-8
...
EAL: FATAL: Invalid 'command line' arguments.
EAL: Invalid 'command line' arguments.
EAL: Error - exiting with code: 1
Cause: Invalid EAL parameters
```

EAL detects 8 lcores, and lcores 9 and 10 in the requested list are unavailable, so it reports an error and exits.
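A minimal sketch of this kind of check: expand a `-l` style core list and reject any lcore that is not in the set of available lcores. `corelist_is_valid` is a hypothetical helper, not EAL's parser (which handles more syntax); it accepts only `A` and `A-B` items joined by commas, and the availability bitmask in the usage below (lcores 0-7) is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: checks a core list such as "1-2" or "1,3,5-7"
 * against a bitmask of available lcores (bit i set = lcore i usable).
 * Reports every unavailable lcore; returns false if any lcore is
 * unavailable or the list is malformed. Handles lcore IDs 0-63 only. */
bool corelist_is_valid(const char *list, uint64_t available)
{
    bool ok = true;
    const char *p = list;

    if (*p == '\0')
        return false;

    while (*p != '\0') {
        char *end;
        long first = strtol(p, &end, 10);
        if (end == p || first < 0 || first > 63)
            return false;

        long last = first;
        if (*end == '-') {                 /* range "A-B" */
            p = end + 1;
            last = strtol(p, &end, 10);
            if (end == p || last < first || last > 63)
                return false;
        }

        for (long id = first; id <= last; id++) {
            if ((available & (UINT64_C(1) << id)) == 0) {
                printf("lcore %ld unavailable\n", id);
                ok = false;
            }
        }

        if (*end == ',')
            end++;                         /* next item */
        else if (*end != '\0')
            return false;
        p = end;
    }
    return ok;
}
```

With lcores 0-7 available (mask 0xff), `"1-2"` passes while `"1-10"` fails and reports lcores 8, 9, and 10.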

1.1.2、Hugepage memory detection

EAL checks whether the hugepage memory configured on the system is usable and returns an error if it is not. Before starting the program, you can check the hugepage configuration with:

```
# cat /proc/meminfo | grep Huge
AnonHugePages: 0 kB
ShmemHugePages: 0 kB
FileHugePages: 0 kB
HugePages_Total: 1
HugePages_Free: 1
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 1048576 kB
Hugetlb: 1048576 kB
```

This shows a single 1 GB hugepage (HugePages_Total: 1, Hugepagesize: 1048576 kB). After the program starts, it prints:

```
# ./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'VA'
EAL: No available hugepages reported in hugepages-2048kB
...
```

The message `EAL: No available hugepages reported in hugepages-2048kB` only means that no 2 MB hugepages are available. That is because the hugepages here were configured with a 1 GB page size; the 1 GB page is used instead, so the program still starts normally.
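To check the same hugepage fields programmatically instead of by eye, a small sketch can parse meminfo-formatted text. `parse_hugepage_info` and `struct hugepage_info` are hypothetical names; the function takes the text as a string so it can be tried on the sample output above (on a real system you would first read /proc/meminfo into a buffer). Total hugepage memory is HugePages_Total × Hugepagesize.

```c
#include <stdio.h>
#include <string.h>

struct hugepage_info {
    unsigned long total;    /* HugePages_Total */
    unsigned long free;     /* HugePages_Free */
    unsigned long size_kb;  /* Hugepagesize, in kB */
};

/* Hypothetical helper: parses meminfo-formatted text.
 * Returns 0 on success, -1 if any of the three fields is missing. */
int parse_hugepage_info(const char *text, struct hugepage_info *out)
{
    const char *p;
    int found = 0;

    if ((p = strstr(text, "HugePages_Total:")) != NULL &&
        sscanf(p, "HugePages_Total: %lu", &out->total) == 1)
        found++;
    if ((p = strstr(text, "HugePages_Free:")) != NULL &&
        sscanf(p, "HugePages_Free: %lu", &out->free) == 1)
        found++;
    if ((p = strstr(text, "Hugepagesize:")) != NULL &&
        sscanf(p, "Hugepagesize: %lu kB", &out->size_kb) == 1)
        found++;
    return found == 3 ? 0 : -1;
}
```

For the output above this yields 1 free page of 1048576 kB, i.e. 1 GB of hugepage memory, and no 2 MB pages, which is why EAL prints the hugepages-2048kB message.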

1.1.3、NIC driver detection

After the specified NICs have been bound with the DPDK script, EAL initialization loads the NIC information into the program at startup. If dpdk-l3fwd is started without any NIC bound, it prints:

```
# ./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-2048kB
EAL: Probing VFIO support...
EAL: Invalid NUMA socket, default to 0
EAL: Invalid NUMA socket, default to 0
EAL: Invalid NUMA socket, default to 0
EAL: No legacy callbacks, legacy socket not created
soft parse-ptype is enabled
LPM or EM none selected, default LPM on
port 0 is not present on the board
EAL: Error - exiting with code: 1
Cause: check_port_config failed
```

After the NICs are bound, starting dpdk-l3fwd prints:

```
# ./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
EAL: Detected 8 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-2048kB
EAL: Probing VFIO support...
EAL: VFIO support initialized
EAL: Invalid NUMA socket, default to 0
EAL: Invalid NUMA socket, default to 0
EAL: using IOMMU type 8 (No-IOMMU)
EAL: Ignore mapping IO port bar(3)
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:03:00.0 (socket 0)
EAL: Invalid NUMA socket, default to 0
EAL: Ignore mapping IO port bar(3)
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:0b:00.0 (socket 0)
EAL: No legacy callbacks, legacy socket not created
soft parse-ptype is enabled
LPM or EM none selected, default LPM on
...
```

`EAL: VFIO support initialized` indicates that the VFIO driver was loaded successfully, and the corresponding NICs are reported as:

```
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:03:00.0 (socket 0)
EAL: Probe PCI driver: net_vmxnet3 (15ad:7b0) device: 0000:0b:00.0 (socket 0)
```

For the steps to bind a NIC to DPDK, refer to the link:

Summary: the cause of an EAL initialization failure can be determined from the error output. Common causes are:

1、Hugepage memory is not configured successfully;

2、The logical cores are configured incorrectly;

3、When cores are specified as a mask with -c, the mask is wrong;

4、The NIC is not bound;

5、The program is started again while an instance is already running.

Note: part of what EAL initialization loads is configured by the user, for example: 1) the core IDs to use (set with the -l parameter); 2) whether the VFIO or UIO driver is used (loaded with insmod before the NIC is bound); 3) the hugepage size (configured by editing the grub configuration); 4) the NICs used by the program (bound with the DPDK script).

1.2、 Application parameter initialization

The application parameters initialize, among other things: the binding between (NIC port, queue ID, processing core); the destination MAC address used when forwarding packets out of each port; the NIC receive mode (whether promiscuous mode is enabled); the lookup mode (LPM or EM); and the mask of NIC ports to use. The call is:

```c
/* parse application arguments (after the EAL ones) */
ret = parse_args(argc, argv);
if (ret < 0)
    rte_exit(EXIT_FAILURE, "Invalid L3FWD parameters\n");
```

The parameters were explained in the previous article and are not repeated here; any that matter later are introduced where they are used. For an explanation of the parameters, see: 【DPDK】dpdk sample source code analysis II: dpdk-helloworld
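As one example of what parse_args() handles, the `-p 0x3` port mask is a hexadecimal bitmask in which bit N enables port N. The sketch below is a simplified stand-in built on strtoul(); `parse_portmask_sketch` and `port_enabled` are hypothetical names, and a return value of 0 is used to signal an invalid mask.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: parses a hex port mask such as "0x3" or "3".
 * Returns 0 if the string is empty or not entirely hexadecimal. */
uint32_t parse_portmask_sketch(const char *portmask)
{
    char *end = NULL;
    unsigned long pm = strtoul(portmask, &end, 16);

    if (portmask[0] == '\0' || end == NULL || *end != '\0')
        return 0;
    return (uint32_t)pm;
}

/* Returns 1 if port `port_id` is enabled in `mask`, else 0. */
int port_enabled(uint32_t mask, unsigned port_id)
{
    return port_id < 32 && ((mask >> port_id) & 1u);
}
```

With `-p 0x3`, ports 0 and 1 are enabled and every other port is ignored.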

1.3、 Network card initialization

1.3.1、 Get driver information

```c
ret = rte_eth_dev_info_get(portid, &dev_info);
if (ret != 0)
    rte_exit(EXIT_FAILURE,
        "Error during getting device (port %u) info: %s\n",
        portid, strerror(-ret));
```

This retrieves the device's default information (including its capabilities), which the following code uses to adjust the port configuration:

```c
// If the driver supports fast mbuf freeing on TX, enable that offload
if (dev_info.tx_offload_capa & DEV_TX_OFFLOAD_MBUF_FAST_FREE)
    local_port_conf.txmode.offloads |=
        DEV_TX_OFFLOAD_MBUF_FAST_FREE;

// Keep only the RSS hash types that the hardware supports
local_port_conf.rx_adv_conf.rss_conf.rss_hf &=
    dev_info.flow_type_rss_offloads;

// If masking changed the requested hash types, report it (informational, not an error)
if (local_port_conf.rx_adv_conf.rss_conf.rss_hf !=
        port_conf.rx_adv_conf.rss_conf.rss_hf) {
    printf("Port %u modified RSS hash function based on hardware support,"
        "requested:%#"PRIx64" configured:%#"PRIx64"\n",
        portid,
        port_conf.rx_adv_conf.rss_conf.rss_hf,
        local_port_conf.rx_adv_conf.rss_conf.rss_hf);
}
```
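The masking step can be tried with the values from the e1000 log earlier (`requested:0x104 configured:0`). In this standalone sketch, `mask_rss_hf` is a hypothetical function that mirrors the `&=` and the comparison in the code above; a supported mask of 0 for the e1000 is an assumption inferred from that log line.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>

/* Keeps only the RSS hash types the device supports and reports a change,
 * mirroring the rss_hf handling above (standalone sketch, not DPDK code). */
uint64_t mask_rss_hf(unsigned port_id, uint64_t requested, uint64_t supported)
{
    uint64_t configured = requested & supported;

    if (configured != requested)
        printf("Port %u modified RSS hash function based on hardware support,"
               "requested:%#" PRIx64 " configured:%#" PRIx64 "\n",
               port_id, requested, configured);
    return configured;
}
```

Note that `%#` prints a zero value as plain `0` without the `0x` prefix, which is why the log shows `configured:0`. The message is only a warning; the e1000 failure itself came from the queue count.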

1.3.2、 Network card driver configuration

rte_eth_dev_configure() sets the number of RX and TX queues of the port together with the port configuration. The last argument also selects the multi-queue mode and provides the parameters used to compute the RSS value, which load-balances traffic across the RX queues and keeps packets of the same flow (same source and destination) on the same queue: the key, the key length, the hash types, and so on. TX queues usually need no extra settings.

```c
ret = rte_eth_dev_configure(portid, nb_rx_queue,
        (uint16_t)n_tx_queue, &local_port_conf);
if (ret < 0)
    rte_exit(EXIT_FAILURE,
        "Cannot configure device: err=%d, port=%d\n",
        ret, portid);
```

The fields of `struct rte_eth_conf port_conf` are used as follows:

```c
static struct rte_eth_conf port_conf = {
    .rxmode = {
        .mq_mode = ETH_MQ_RX_RSS,             // spread RX traffic over multiple queues with RSS
        .max_rx_pkt_len = RTE_ETHER_MAX_LEN,  // maximum received packet length
        .split_hdr_size = 0,
        .offloads = DEV_RX_OFFLOAD_CHECKSUM,  // RX offloads requested from the driver
    },
    .rx_adv_conf = {
        .rss_conf = {
            .rss_key = rss_intel_key,         // key used to compute the RSS hash
            .rss_key_len = 40,                // key length in bytes
            .rss_hf = ETH_RSS_IP,             // packet types/fields included in the hash
        },
    },
    .txmode = {
        .mq_mode = ETH_MQ_TX_NONE,
    },
};
```
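The `rss_key` and `rss_key_len` fields feed the NIC's RSS hash. Many NICs, including Intel ones, compute it with the Toeplitz hash; the sketch below is a plain software version for an IPv4 (source, destination) pair. The contents of `rss_intel_key` are not shown in this article, so the example assumes a 40-byte key of repeating 0x6d 0x5a bytes, a commonly used pattern that makes the hash symmetric: swapping source and destination gives the same value, which keeps both directions of a flow on the same RX queue. Which hash a given NIC actually uses depends on its hardware and driver.

```c
#include <stddef.h>
#include <stdint.h>

/* Software Toeplitz hash: for every set input bit, XOR in the 32-bit
 * window of the key that starts at that bit position. */
uint32_t toeplitz_hash(const uint8_t *key, size_t key_len,
                       const uint8_t *data, size_t data_len)
{
    uint32_t hash = 0;
    uint32_t window = ((uint32_t)key[0] << 24) | ((uint32_t)key[1] << 16) |
                      ((uint32_t)key[2] << 8) | (uint32_t)key[3];
    size_t next_bit = 32; /* next key bit to shift into the window */

    for (size_t i = 0; i < data_len; i++) {
        for (int b = 7; b >= 0; b--) {
            if (data[i] & (1u << b))
                hash ^= window;
            window <<= 1;
            if (next_bit < key_len * 8 &&
                ((key[next_bit / 8] >> (7 - next_bit % 8)) & 1u))
                window |= 1u;
            next_bit++;
        }
    }
    return hash;
}

/* Hash of an IPv4 (src, dst) pair given in host byte order,
 * serialized big-endian as it appears on the wire. */
uint32_t rss_ipv4(const uint8_t *key, size_t key_len,
                  uint32_t src, uint32_t dst)
{
    uint8_t in[8];

    for (int i = 0; i < 4; i++) {
        in[i] = (uint8_t)(src >> (24 - 8 * i));
        in[4 + i] = (uint8_t)(dst >> (24 - 8 * i));
    }
    return toeplitz_hash(key, key_len, in, sizeof(in));
}

/* Assumed symmetric key: 0x6d, 0x5a repeated to 40 bytes. */
void make_symmetric_key(uint8_t key[40])
{
    for (int i = 0; i < 40; i += 2) {
        key[i] = 0x6d;
        key[i + 1] = 0x5a;
    }
}
```

Because the key repeats every 16 bits, the window used for a source-address bit is identical to the window used for the same bit 32 positions later in the destination address, so swapping the two addresses does not change the hash. NICs typically use the low bits of the hash to index a redirection table that selects the RX queue.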

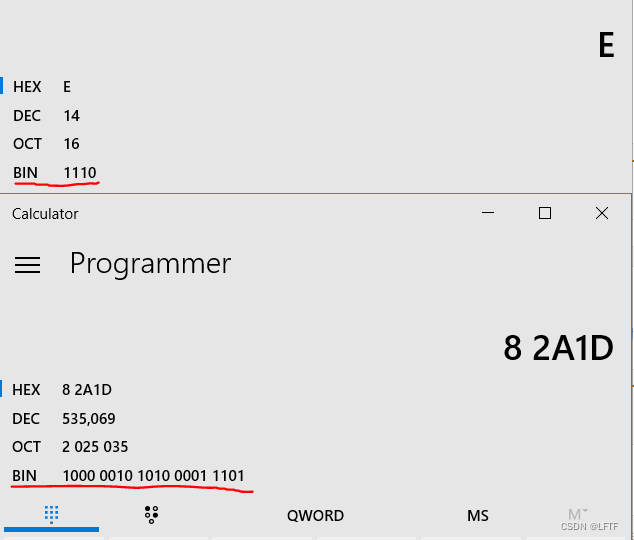

Note: port_conf.rxmode.offloads may only contain offloads that the NIC supports; otherwise rte_eth_dev_configure() reports an error.

The RX offloads configured in the code are 0xe, the value of DEV_RX_OFFLOAD_CHECKSUM, while the NIC supports 0x82a1d.

In binary, 0xe is 0000 0000 0000 0000 1110 and 0x82a1d is 1000 0010 1010 0001 1101.

So the driver does not support bit 1 (1<<1 = 0x2), which is DEV_RX_OFFLOAD_IPV4_CKSUM:

```c
#define DEV_RX_OFFLOAD_IPV4_CKSUM 0x00000002
```

After setting port_conf.rxmode.offloads to DEV_RX_OFFLOAD_UDP_CKSUM | DEV_RX_OFFLOAD_TCP_CKSUM, the program starts normally.

Summary: rxmode.offloads must be set to offloads that the NIC driver supports; otherwise the program exits with an error.
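The bit arithmetic in this section can be checked with a short sketch: the unsupported offloads are the requested bits missing from the supported mask, i.e. `requested & ~supported`. In the application, `supported` would come from `dev_info.rx_offload_capa`; the helper names below are hypothetical, and the constants are the values quoted above.

```c
#include <stdint.h>

/* Requested offload bits that the device does not support. */
uint64_t unsupported_offloads(uint64_t requested, uint64_t supported)
{
    return requested & ~supported;
}

/* One possible fallback: keep only the supported subset of the request. */
uint64_t usable_offloads(uint64_t requested, uint64_t supported)
{
    return requested & supported;
}
```

For 0xe and 0x82a1d, the unsupported part is 0x2 (DEV_RX_OFFLOAD_IPV4_CKSUM) and the usable part is 0xc, which is exactly DEV_RX_OFFLOAD_UDP_CKSUM | DEV_RX_OFFLOAD_TCP_CKSUM, the value that made the program start.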

1.4、 Queue initialization

Start command:

```
# ./build/l3fwd_app-static -l 1-2 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)" --parse-ptype
```

In the startup argument "(0,0,1),(1,0,2)", each tuple is (port, queue, lcore): (0,0,1) means RX queue 0 of port 0 is handled by lcore 1, and (1,0,2) means RX queue 0 of port 1 is handled by lcore 2.
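A standalone sketch of how such a `--config` string can be split into (port, queue, lcore) tuples. It is not l3fwd's own parser; `parse_config_sketch` and `struct rx_binding` are hypothetical names. It scans from each '(' to the next ')', so it also accepts the `(1,0,2)(0,1,3)` form without a separating comma that appears in some commands in this article.

```c
#include <stdio.h>
#include <string.h>

struct rx_binding {
    unsigned port, queue, lcore;
};

/* Parses "(p,q,l),(p,q,l)..." into `out`. Returns the number of tuples,
 * or -1 on a malformed tuple or if more than `max` tuples are present. */
int parse_config_sketch(const char *arg, struct rx_binding *out, int max)
{
    int n = 0;
    const char *p = arg;

    while ((p = strchr(p, '(')) != NULL) {
        const char *close = strchr(p, ')');
        int consumed = 0;

        if (close == NULL || n >= max)
            return -1;
        /* %n is only stored if the closing ')' matched */
        if (sscanf(p, "(%u,%u,%u)%n", &out[n].port, &out[n].queue,
                   &out[n].lcore, &consumed) != 3 || consumed == 0)
            return -1;
        n++;
        p = close + 1;
    }
    return n;
}
```

For `"(0,0,1),(1,0,2)"` this yields two bindings: port 0 queue 0 on lcore 1, and port 1 queue 0 on lcore 2.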

If the NIC is configured to receive on multiple queues, the following startup command can be used:

```
# ./build/l3fwd_app-static -l 1-3 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)(0,1,3)" --parse-ptype
```

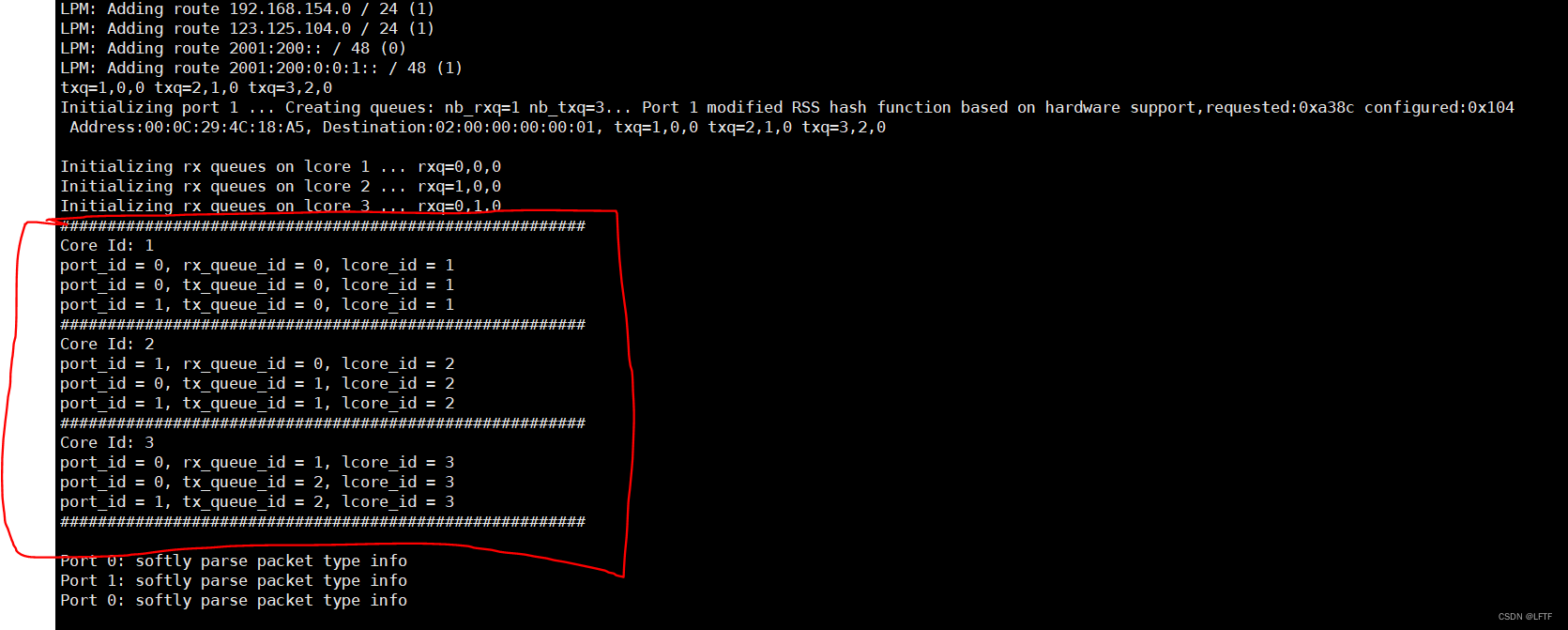

An excerpt of the output, with explanations:

```
# Note: the output is printed from multiple threads, so the actual order may be mixed; the lines below have been reordered.

# Initialize port 0: create 2 RX queues and 3 TX queues. The source MAC is the NIC's own MAC; the destination MAC
# defaults to 02:00:00:00:00:00 (it can be changed with the --eth-dest startup parameter). txq=1,0,0 means lcore_id, queueid, socketid.
Initializing port 0 ... Creating queues: nb_rxq=2 nb_txq=3... Address:00:0C:29:4C:18:9B, Destination:02:00:00:00:00:00,txq=1,0,0 txq=2,1,0 txq=3,2,0

# Initialize port 1: create 1 RX queue and 3 TX queues. The source MAC is the NIC's own MAC; the destination MAC
# defaults to 02:00:00:00:00:01 (it can be changed with the --eth-dest startup parameter). txq=1,0,0 means lcore_id, queueid, socketid.
Initializing port 1 ... Creating queues: nb_rxq=1 nb_txq=3... Address:00:0C:29:4C:18:A5, Destination:02:00:00:00:00:01, txq=1,0,0 txq=2,1,0 txq=3,2,0

# RX queues are bound to cores by the startup parameters; rxq=0,0,0 means portid, queueid, socketid.
# 1. Data on queue 0 of port 0 is processed by lcore 1
Initializing rx queues on lcore 1 ... rxq=0,0,0
# 2. Data on queue 0 of port 1 is processed by lcore 2
Initializing rx queues on lcore 2 ... rxq=1,0,0
# 3. Data on queue 1 of port 0 is processed by lcore 3
Initializing rx queues on lcore 3 ... rxq=0,1,0
```

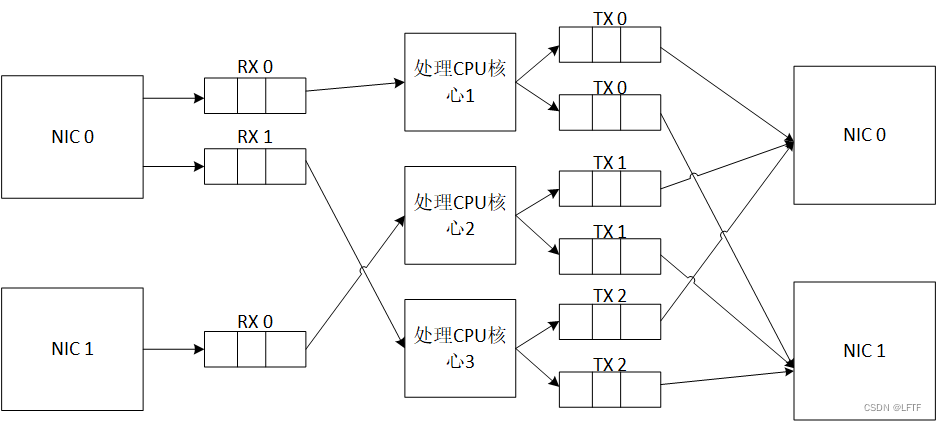

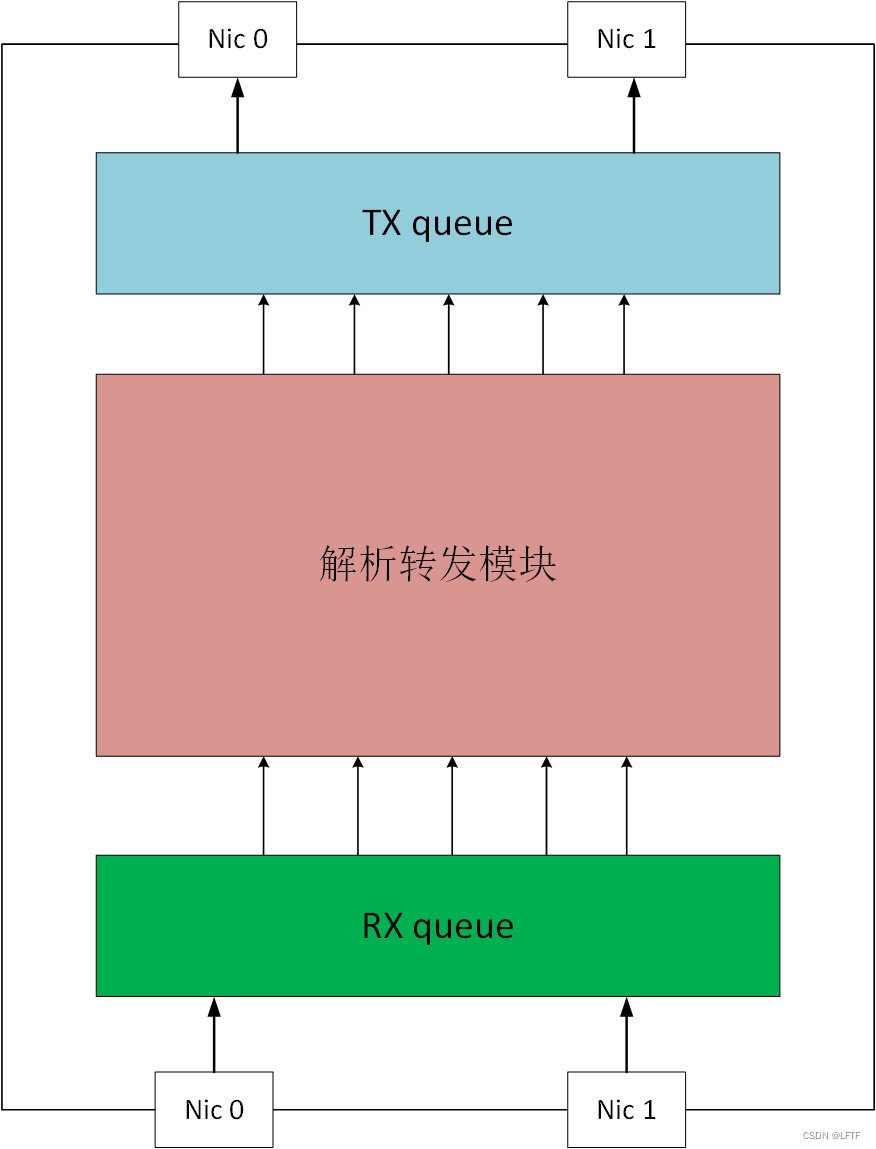

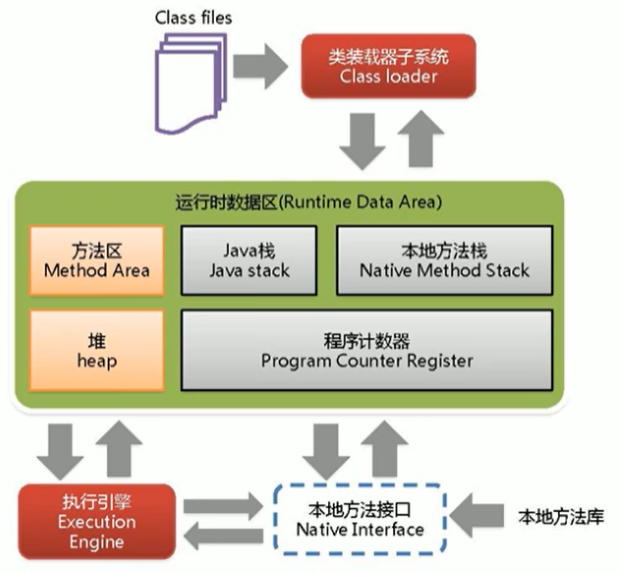

The schematic diagram is shown below.

NIC 0 on the left and NIC 0 on the right are the same NIC, drawn twice only for clarity; the same applies to NIC 1.

There are 3 cores in total. RX queues: NIC 0 has 2 RX queues; packets on queue 0 are processed by core 1 and packets on queue 1 by core 3. NIC 1 has 1 RX queue, whose packets are processed by core 2. TX queues: each NIC has 3 TX queues, one per processing core. The reason is that a packet's output port is known only after the LPM or hash lookup, so every core must be able to send on every port; each core therefore owns one TX queue on each of the two ports.

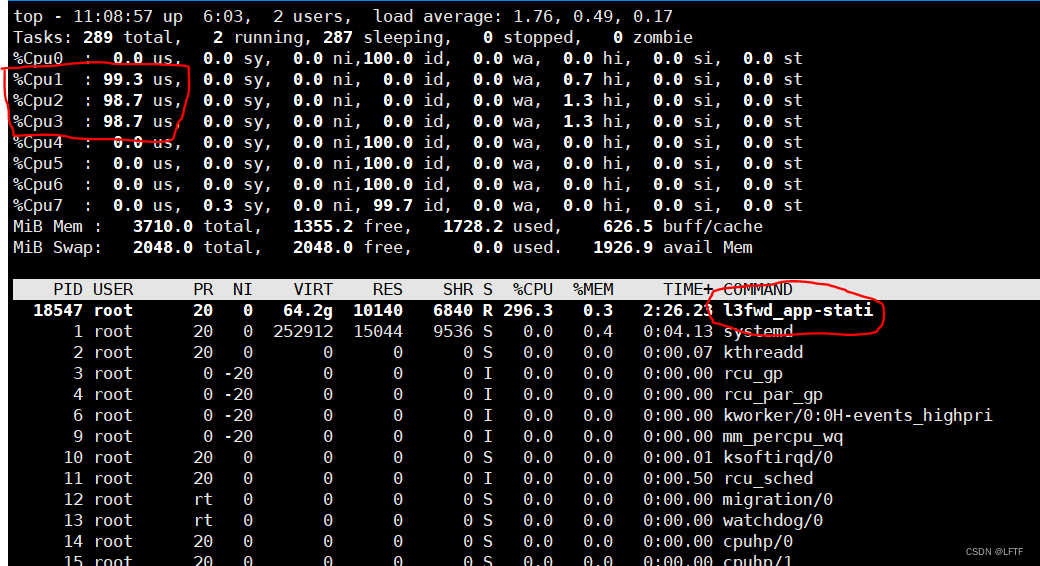

CPU utilization is shown in the figure below:

Note: the mapping between cores and RX queues need not be one-to-one; one core can also serve several queues, for example with the following command:

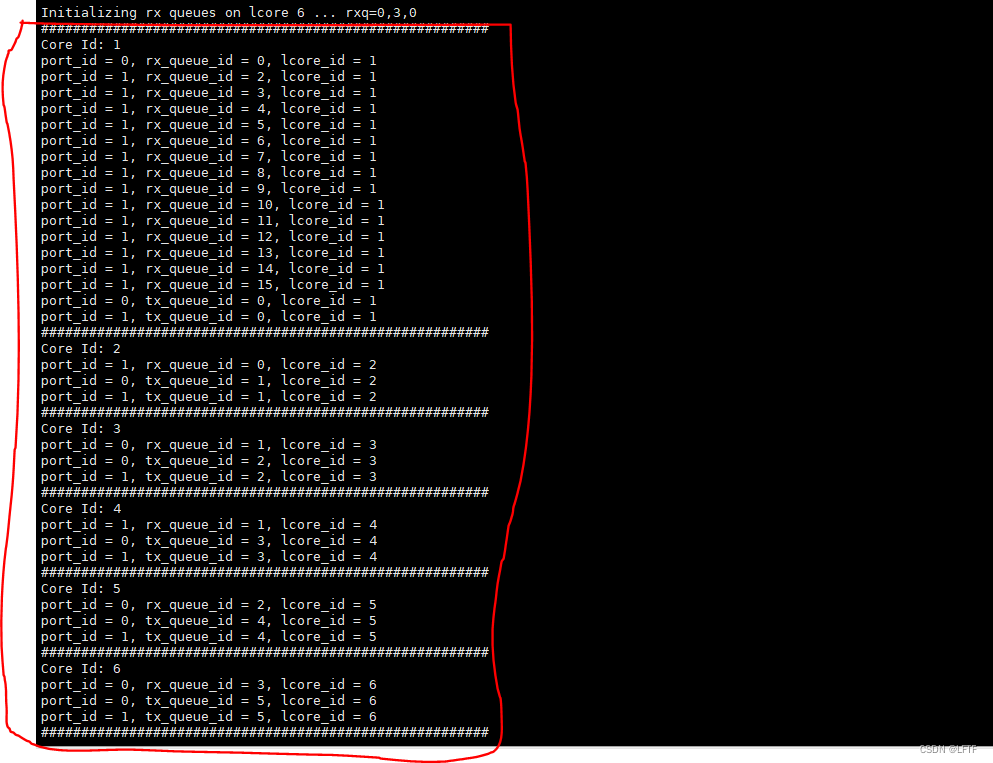

```
# ./l3fwd_app-static -l 1-6 -n 4 -- -p 0x3 -P -L --eth-dest 0,00:0c:29:4c:18:7d --eth-dest 1,52:54:00:9d:ae:51 --config="(0,0,1),(1,0,2),(0,1,3),(1,1,4),(0,2,5),(0,3,6),(1,2,1),(1,3,1),(1,4,1),(1,5,1),(1,6,1),(1,7,1),(1,8,1),(1,9,1),(1,10,1),(1,11,1),(1,12,1),(1,13,1),(1,14,1),(1,15,1)" --parse-ptype
```

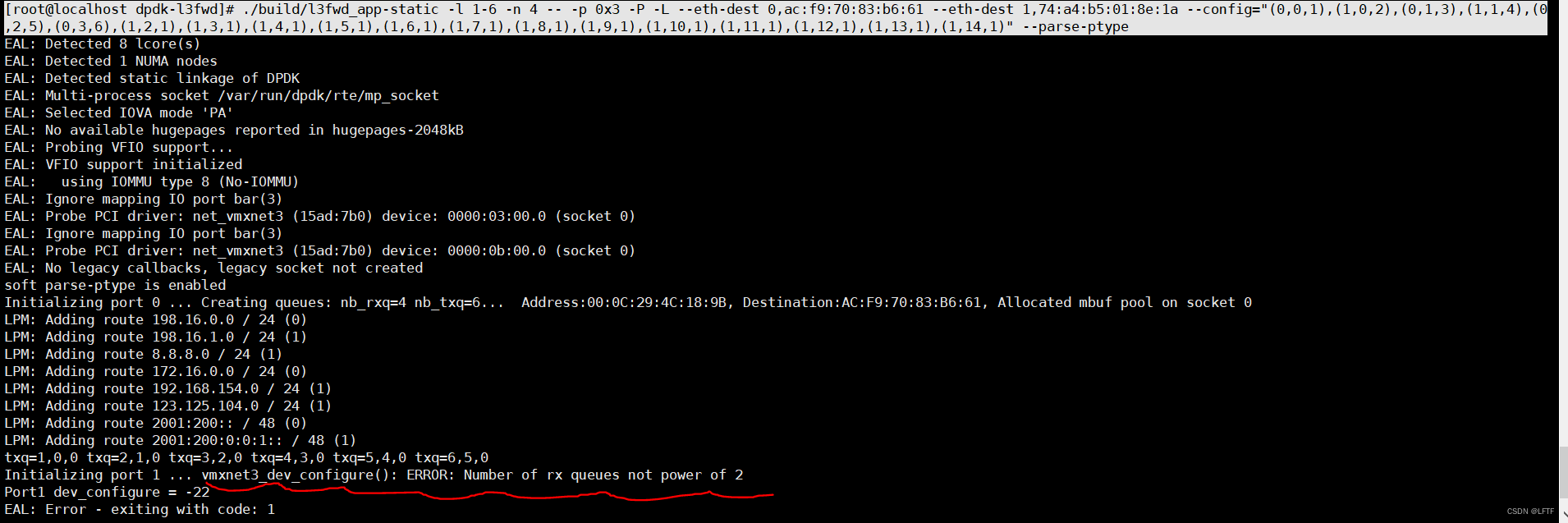

Note: the number of RX queues on a port must be a power of 2; otherwise an error is reported.
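Queue counts can be validated up front with the usual power-of-two bit test: a power of two has exactly one bit set, so `n & (n - 1)` is 0. The helpers below are hypothetical standalone versions (DPDK itself provides rte_is_power_of_2() in rte_common.h). With the command above, port 0 has 4 RX queues and port 1 has 16, both powers of two. The requirement appears to come from the vmxnet3 driver used in this setup, so other drivers may not impose it.

```c
#include <stdbool.h>
#include <stdint.h>

/* True if n is a non-zero power of two (exactly one bit set). */
bool is_power_of_2(uint32_t n)
{
    return n != 0 && (n & (n - 1)) == 0;
}

/* Smallest power of two >= n (for 1 <= n <= 2^31), e.g. to pick a valid
 * queue count when planning a --config. */
uint32_t round_up_pow2(uint32_t n)
{
    uint32_t p = 1;

    while (p < n && p != 0x80000000u)
        p <<= 1;
    return p;
}
```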

1.4.1、 Receive queue initialization

First, look at the RX queue output after starting the program with the following command:

```
# ./l3fwd_app-static -l 1-6 -n 4 -- -p 0x3 -P -L --eth-dest 0,ac:f9:70:83:b6:61 --eth-dest 1,74:a4:b5:01:8e:1a --config="(0,0,1),(1,0,2),(0,1,3),(1,1,4),(0,2,5),(0,3,6),(1,2,1),(1,3,1),(1,4,1),(1,5,1),(1,6,1),(1,7,1),(1,8,1),(1,9,1),(1,10,1),(1,11,1),(1,12,1),(1,13,1),(1,14,1),(1,15,1)" --parse-ptype
```

The RX queue initialization output is:

```
Initializing rx queues on lcore 1 ... rxq=0,0,0 rxq=1,2,0 rxq=1,3,0 rxq=1,4,0 rxq=1,5,0 rxq=1,6,0 rxq=1,7,0 rxq=1,8,0 rxq=1,9,0 rxq=1,10,0 rxq=1,11,0 rxq=1,12,0 rxq=1,13,0 rxq=1,14,0 rxq=1,15,0
Initializing rx queues on lcore 2 ... rxq=1,0,0
Initializing rx queues on lcore 3 ... rxq=0,1,0
Initializing rx queues on lcore 4 ... rxq=1,1,0
Initializing rx queues on lcore 5 ... rxq=0,2,0
Initializing rx queues on lcore 6 ... rxq=0,3,0
# Meaning of the fields: printf("rxq=%d,%d,%d ", portid, queueid, socketid);
```

Lcore 1 sets up 15 RX queues, and each of the other lcores sets up 1. Queue IDs are numbered consecutively per NIC port, for example:

NIC port 0 has 4 RX queues: queue 0 is bound to lcore 1 (its packets are processed by lcore 1), queue 1 to lcore 3, queue 2 to lcore 5, and queue 3 to lcore 6.

NIC port 1 has 16 RX queues: 14 of them (queues 2-15) are bound to lcore 1 (their packets are processed by lcore 1), queue 0 to lcore 2, and queue 1 to lcore 4.
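These per-port and per-lcore counts follow directly from the `--config` tuples: a port needs (highest queue ID bound to it) + 1 RX queues, and an lcore polls one queue per tuple that names it. The sketch below is a simplified standalone illustration of that idea with hypothetical names, not l3fwd's own helpers.

```c
#include <stddef.h>

struct rx_binding {
    unsigned port, queue, lcore;
};

/* RX queues that `port` needs: highest queue ID bound to it, plus one. */
unsigned port_n_rx_queues(const struct rx_binding *b, size_t n, unsigned port)
{
    unsigned count = 0;

    for (size_t i = 0; i < n; i++)
        if (b[i].port == port && b[i].queue + 1 > count)
            count = b[i].queue + 1;
    return count;
}

/* Number of RX queues polled by `lcore`. */
unsigned lcore_n_rx_queues(const struct rx_binding *b, size_t n, unsigned lcore)
{
    unsigned count = 0;

    for (size_t i = 0; i < n; i++)
        if (b[i].lcore == lcore)
            count++;
    return count;
}
```

Fed with the 20 tuples from the command above, this gives 4 queues for port 0, 16 for port 1, 15 queues polled by lcore 1, and 1 for each other lcore, matching the output.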

RX queue initialization, in simplified form:

```c
// Loop over every lcore
for (lcore_id = 0; lcore_id < RTE_MAX_LCORE; lcore_id++) {
    if (rte_lcore_is_enabled(lcore_id) == 0)
        continue;

    if (numa_on)
        socketid = (uint8_t)rte_lcore_to_socket_id(lcore_id);
    else
        socketid = 0;

    qconf = &lcore_conf[lcore_id];

    /* init RX queues */
    // qconf->n_rx_queue is the number of RX queues bound to this lcore
    // (filled in during application parameter initialization)
    for (queue = 0; queue < qconf->n_rx_queue; ++queue) {
        struct rte_eth_rxconf rxq_conf;

        portid = qconf->rx_queue_list[queue].port_id;   // NIC port this queue belongs to
        queueid = qconf->rx_queue_list[queue].queue_id; // queue ID on that port; not necessarily contiguous
        printf("rxq=%d,%d,%d ", portid, queueid, socketid);

        ret = rte_eth_dev_info_get(portid, &dev_info);  // get NIC driver information
        if (ret != 0)
            rte_exit(EXIT_FAILURE,
                "Error during getting device (port %u) info: %s\n",
                portid, strerror(-ret));

        rxq_conf = dev_info.default_rxconf;
        rxq_conf.offloads = port_conf.rxmode.offloads;
        // Set up each RX queue with the previously created pktmbuf_pool
        ret = rte_eth_rx_queue_setup(portid, queueid,
                nb_rxd, socketid,
                &rxq_conf,
                pktmbuf_pool[portid][socketid]);
        if (ret < 0)
            rte_exit(EXIT_FAILURE,
                "rte_eth_rx_queue_setup: err=%d, port=%d\n",
                ret, portid);
    }
}
```

Parameters of rte_eth_rx_queue_setup:

```c
/*
 * @param port_id      NIC port ID
 * @param rx_queue_id  RX queue ID
 * @param nb_rx_desc   Number of RX descriptors (RX ring size)
 * @param socket_id    NUMA node of the lcore on NUMA systems; unconstrained otherwise
 * @param rx_conf      RX queue configuration; dpdk-l3fwd mainly uses it to enable NIC hardware offloads
 * @param mb_pool      Memory pool that supplies the rte_mbufs for packets received by the NIC
 * @return
 *   - 0: Success, receive queue correctly set up.
 *   - -EIO: if device is removed.
 *   - -ENODEV: if *port_id* is invalid.
 *   - -EINVAL: The memory pool pointer is null or the size of network buffers
 *     which can be allocated from this memory pool does not fit the various
 *     buffer sizes allowed by the device controller.
 *   - -ENOMEM: Unable to allocate the receive ring descriptors or to
 *     allocate network memory buffers from the memory pool when
 *     initializing receive descriptors.
 */
int rte_eth_rx_queue_setup(uint16_t port_id, uint16_t rx_queue_id,
        uint16_t nb_rx_desc, unsigned int socket_id,
        const struct rte_eth_rxconf *rx_conf,
        struct rte_mempool *mb_pool);
```

1.4.2、 Send queue initialization

When dpdk-l3fwd initializes the TX queues, the outer loop iterates over NIC ports and the inner loop over the enabled lcores, and the queue ID is incremented for each lcore. This guarantees that every enabled lcore gets its own TX queue on every NIC port, so it can forward to any port without sharing a queue with another lcore. (The TX queue layout is fixed in the code and cannot be configured through startup parameters.)

First, look at the TX queue output after starting the program with the following command:

```
# ./l3fwd_app-static -l 1-6 -n 4 -- -p 0x3 -P -L --eth-dest 0,ac:f9:70:83:b6:61 --eth-dest 1,74:a4:b5:01:8e:1a --config="(0,0,1),(1,0,2),(0,1,3),(1,1,4),(0,2,5),(0,3,6),(1,2,1),(1,3,1),(1,4,1),(1,5,1),(1,6,1),(1,7,1),(1,8,1),(1,9,1),(1,10,1),(1,11,1),(1,12,1),(1,13,1),(1,14,1),(1,15,1)" --parse-ptype
```

The TX queue initialization output is:

```
Port 0, txq=1,0,0 txq=2,1,0 txq=3,2,0 txq=4,3,0 txq=5,4,0 txq=6,5,0
Port 1, txq=1,0,0 txq=2,1,0 txq=3,2,0 txq=4,3,0 txq=5,4,0 txq=6,5,0
# Meaning of the fields: printf("txq=%u,%d,%d ", lcore_id, queueid, socketid);
```

Note: TX queue IDs are numbered consecutively per NIC port, starting from 0 on each port.
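The TX layout above can be reproduced with a small standalone sketch of the same double loop: ports on the outside, enabled lcores on the inside, and a queue counter that restarts at 0 for every port. The `enabled` array stands in for rte_lcore_is_enabled(), and `MAX_LCORES`/`MAX_PORTS` are hypothetical small sizes.

```c
#include <stdbool.h>

#define MAX_LCORES 8   /* hypothetical stand-in for RTE_MAX_LCORE */
#define MAX_PORTS  2

/* Fills tx_queue_id[lcore][port] with the TX queue each enabled lcore uses
 * on each port. Returns the number of TX queues created per port, which
 * equals the number of enabled lcores. */
int assign_tx_queues(const bool enabled[MAX_LCORES], int nb_ports,
                     int tx_queue_id[MAX_LCORES][MAX_PORTS])
{
    int queueid = 0;

    for (int port = 0; port < nb_ports; port++) {
        queueid = 0;                       /* restarts for every port */
        for (int lcore = 0; lcore < MAX_LCORES; lcore++) {
            if (!enabled[lcore])
                continue;
            tx_queue_id[lcore][port] = queueid++;
        }
    }
    return queueid;
}
```

With lcores 1-6 enabled (as with `-l 1-6`), lcore 1 gets queue 0 and lcore 6 gets queue 5 on both ports, which is exactly the `txq=1,0,0 ... txq=6,5,0` output.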

TX queue initialization, in simplified form:

```c
RTE_ETH_FOREACH_DEV(portid) {
    struct rte_eth_conf local_port_conf = port_conf;

    /* NIC initialization code removed */

    /* init one TX queue per couple (lcore,port) */
    queueid = 0;
    for (lcore_id = 0; lcore_id < RTE_MAX_LCORE; lcore_id++) {
        if (rte_lcore_is_enabled(lcore_id) == 0)
            continue;

        if (numa_on)
            socketid = (uint8_t)rte_lcore_to_socket_id(lcore_id);
        else
            socketid = 0;

        printf("txq=%u,%d,%d ", lcore_id, queueid, socketid);

        txconf = &dev_info.default_txconf;
        txconf->offloads = local_port_conf.txmode.offloads;
        // Set up the TX queue
        ret = rte_eth_tx_queue_setup(portid, queueid, nb_txd,
                socketid, txconf);
        if (ret < 0)
            rte_exit(EXIT_FAILURE,
                "rte_eth_tx_queue_setup: err=%d, "
                "port=%d\n", ret, portid);

        // Record which TX queue this lcore uses on this port
        qconf = &lcore_conf[lcore_id];
        qconf->tx_queue_id[portid] = queueid;
        queueid++;

        qconf->tx_port_id[qconf->n_tx_port] = portid;
        qconf->n_tx_port++;
    }
    printf("\n");
}
```

Parameters of rte_eth_tx_queue_setup:

```c
/*
 * @param port_id      NIC port ID
 * @param tx_queue_id  TX queue ID
 * @param nb_tx_desc   Number of TX descriptors (TX ring size)
 * @param socket_id    NUMA node of the lcore on NUMA systems; unconstrained otherwise
 * @param tx_conf      TX queue configuration; if NULL, the default configuration is used
 * @return
 *   - 0: Success, the transmit queue is correctly set up.
 *   - -ENOMEM: Unable to allocate the transmit ring descriptors.
 */
int rte_eth_tx_queue_setup(uint16_t port_id, uint16_t tx_queue_id,
        uint16_t nb_tx_desc, unsigned int socket_id,
        const struct rte_eth_txconf *tx_conf);
```

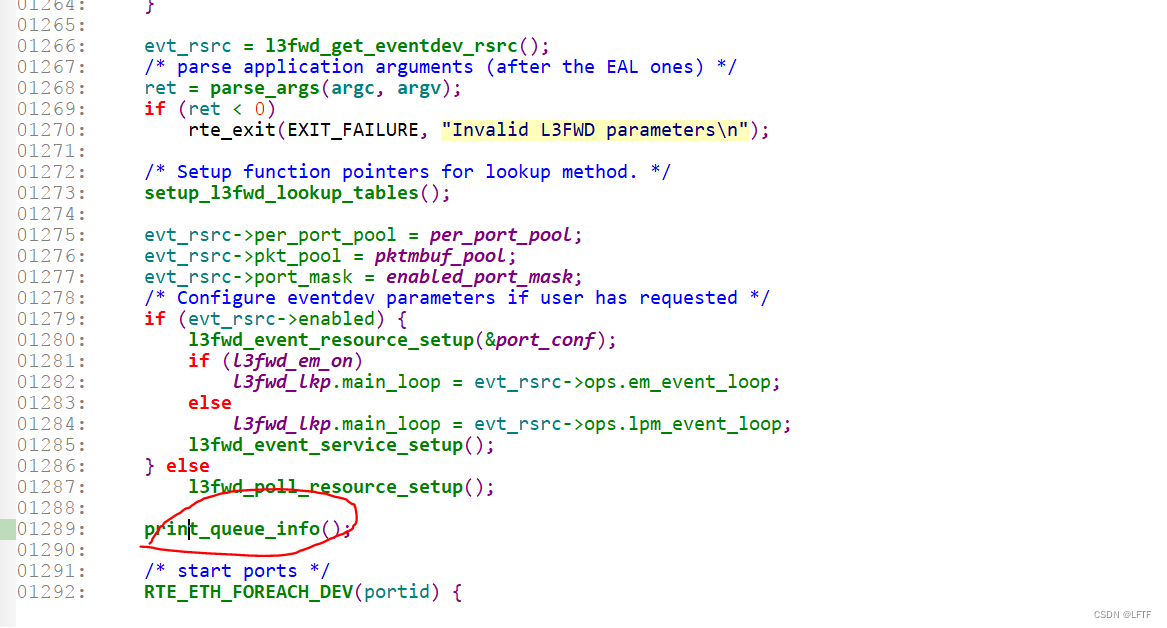

1.5、Adding a queue-printing function

After queue initialization, add a function that prints the NIC port and queue IDs bound to each lcore. The call is added at the following location:

The code is as follows:

```c
static void print_queue_info(void)
{
    unsigned int lcore_id;
    struct lcore_conf *qconf;

    /* print the RX and TX queues of every enabled lcore */
    printf("\n########################################################\n");
    for (lcore_id = 0; lcore_id < RTE_MAX_LCORE; lcore_id++) {
        if (rte_lcore_is_enabled(lcore_id) == 0)
            continue;

        printf("Core Id: %u\n", lcore_id);
        qconf = &lcore_conf[lcore_id];

        for (int i = 0; i < qconf->n_rx_queue; i++) {
            printf("port_id = %u, rx_queue_id = %u, lcore_id = %u\n",
                (unsigned)qconf->rx_queue_list[i].port_id,
                (unsigned)qconf->rx_queue_list[i].queue_id, lcore_id);
        }

        for (int j = 0; j < qconf->n_tx_port; j++) {
            /* tx_queue_id[] is indexed by port ID, not by j */
            uint16_t tx_port = qconf->tx_port_id[j];
            printf("port_id = %u, tx_queue_id = %u, lcore_id = %u\n",
                (unsigned)tx_port,
                (unsigned)qconf->tx_queue_id[tx_port], lcore_id);
        }

        printf("########################################################\n");
    }
}
```

The startup commands used and the resulting output are as follows:

```
# ./build/l3fwd_app-static -l 1-3 -n 4 -- -p 0x3 --config="(0,0,1),(1,0,2)(0,1,3)" --parse-ptype
# ./l3fwd_app-static -l 1-6 -n 4 -- -p 0x3 -P -L --eth-dest 0,ac:f9:70:83:b6:61 --eth-dest 1,74:a4:b5:01:8e:1a --config="(0,0,1),(1,0,2),(0,1,3),(1,1,4),(0,2,5),(0,3,6),(1,2,1),(1,3,1),(1,4,1),(1,5,1),(1,6,1),(1,7,1),(1,8,1),(1,9,1),(1,10,1),(1,11,1),(1,12,1),(1,13,1),(1,14,1),(1,15,1)" --parse-ptype
```

As the output shows, the binding between rx_queue and lcore comes from the startup parameters, whereas on the TX side every lcore is given, by default, as many tx_queues as there are NIC ports (2 here).

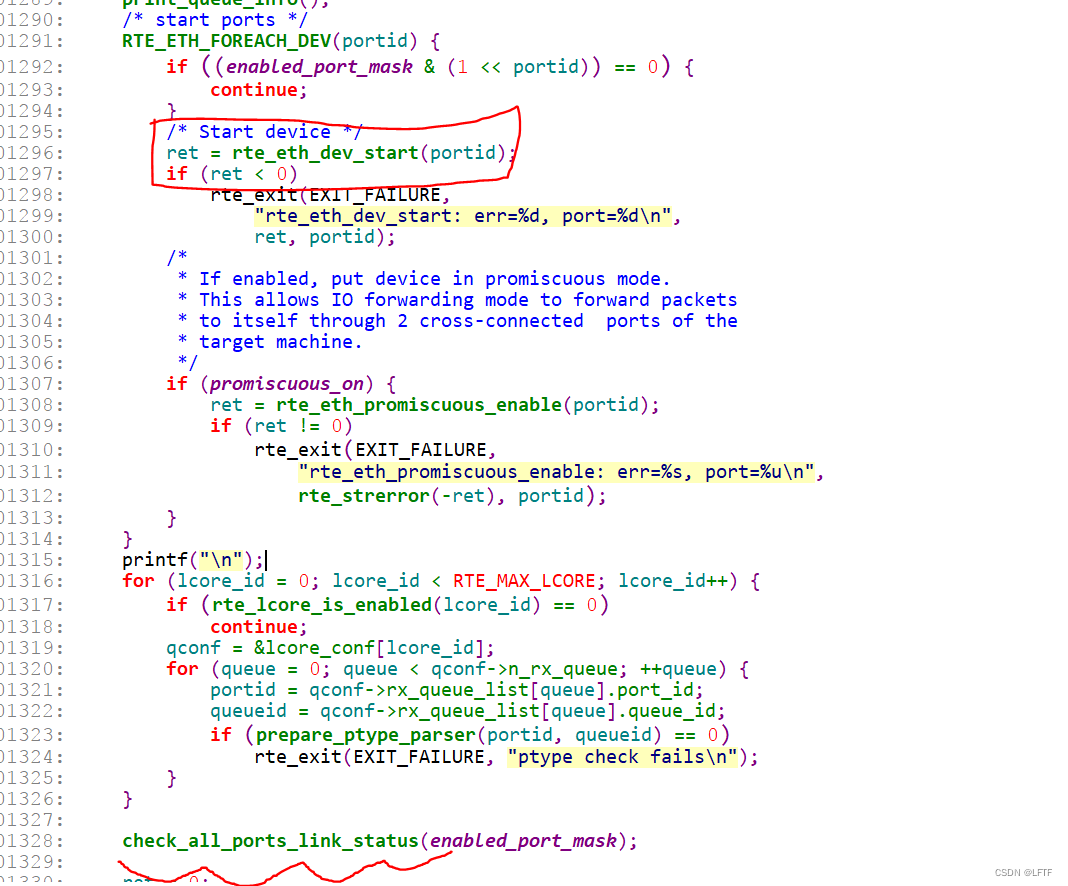

1.6、Starting the NIC

There is no special logic here; the program simply calls the relevant API (rte_eth_dev_start()) for each port.

**Summary:** at this point dpdk-l3fwd initialization is essentially complete. The program then launches the main loop on every lcore, each running in its own thread, to parse, process, and forward packets. The function called is:

```c
/* launch per-lcore init on every lcore */
rte_eal_mp_remote_launch(l3fwd_lkp.main_loop, NULL, CALL_MAIN);
```

main_loop can be either the hash (EM) lookup variant or the LPM lookup variant. They differ only in the matching logic used to decide where a parsed L3 packet is forwarded, so that difference is not the focus here and can be studied later if needed. The rest of this article follows lpm_main_loop.

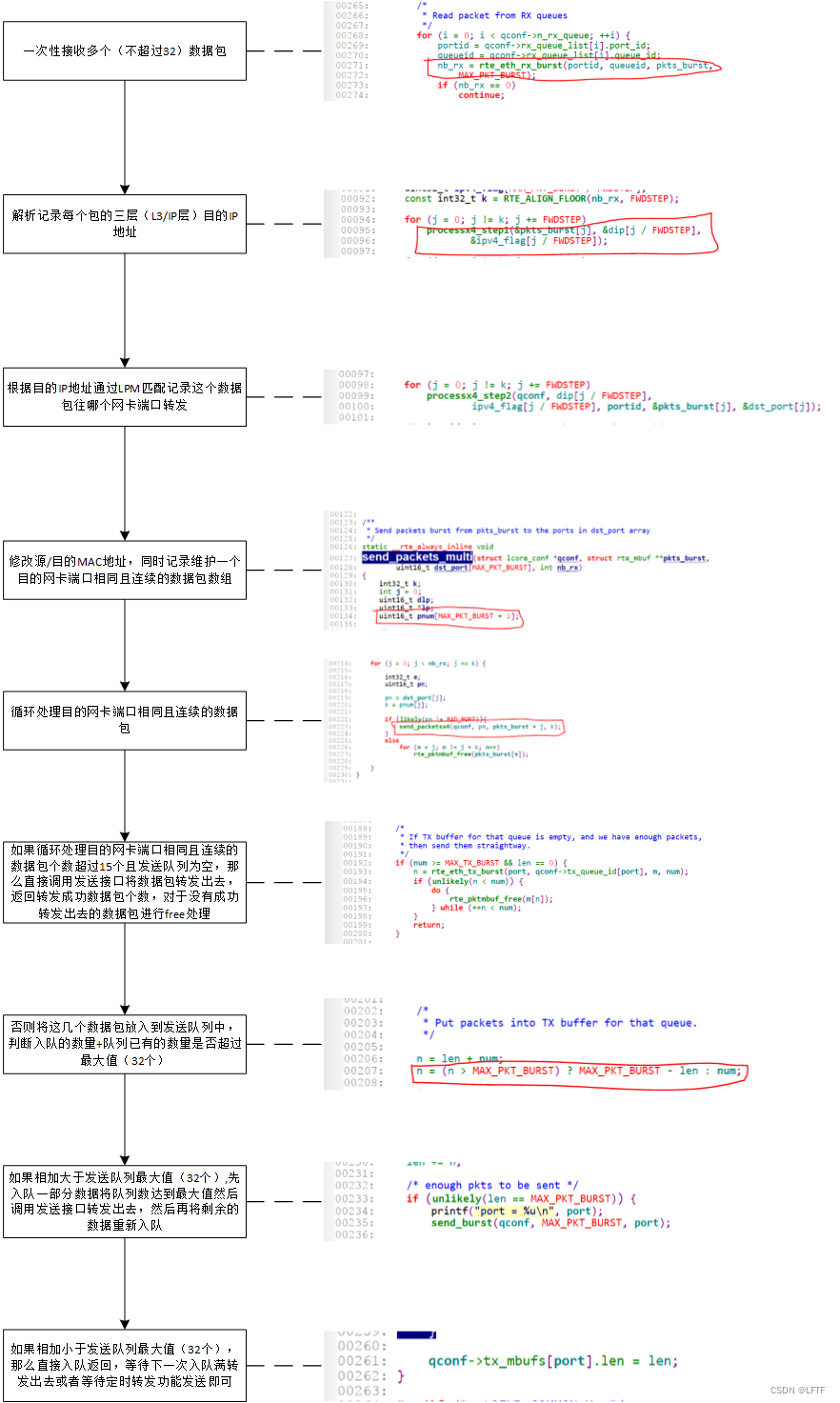

The flow chart shows the overall processing flow of packet parsing and forwarding.

lpm_main_loop source code:

```c
/* main processing loop */
int
lpm_main_loop(__rte_unused void *dummy)
{
    struct rte_mbuf *pkts_burst[MAX_PKT_BURST];
    unsigned lcore_id;
    uint64_t prev_tsc, diff_tsc, cur_tsc;
    int i, nb_rx;
    uint16_t portid;
    uint8_t queueid;
    struct lcore_conf *qconf;
    const uint64_t drain_tsc = (rte_get_tsc_hz() + US_PER_S - 1) /
        US_PER_S * BURST_TX_DRAIN_US;

    prev_tsc = 0;

    lcore_id = rte_lcore_id();
    qconf = &lcore_conf[lcore_id];

    if (qconf->n_rx_queue == 0) {
        RTE_LOG(INFO, L3FWD, "lcore %u has nothing to do\n", lcore_id);
        return 0;
    }

    RTE_LOG(INFO, L3FWD, "entering main loop on lcore %u\n", lcore_id);

    for (i = 0; i < qconf->n_rx_queue; i++) {
        portid = qconf->rx_queue_list[i].port_id;
        queueid = qconf->rx_queue_list[i].queue_id;
        RTE_LOG(INFO, L3FWD,
            " -- lcoreid=%u portid=%u rxqueueid=%hhu\n",
            lcore_id, portid, queueid);
    }

    while (!force_quit) {

        cur_tsc = rte_rdtsc();

        /*
         * TX burst queue drain: periodically send the packets
         * still buffered for each TX port
         */
        diff_tsc = cur_tsc - prev_tsc;
        if (unlikely(diff_tsc > drain_tsc)) {

            for (i = 0; i < qconf->n_tx_port; ++i) {
                portid = qconf->tx_port_id[i];
                if (qconf->tx_mbufs[portid].len == 0)
                    continue;
                send_burst(qconf,
                    qconf->tx_mbufs[portid].len,
                    portid);
                qconf->tx_mbufs[portid].len = 0;
            }

            prev_tsc = cur_tsc;
        }

        /*
         * Read packet from RX queues
         */
        for (i = 0; i < qconf->n_rx_queue; ++i) {
            portid = qconf->rx_queue_list[i].port_id;
            queueid = qconf->rx_queue_list[i].queue_id;
            // Read a burst from the RX queue; the return value is the number of
            // packets received, stored as rte_mbuf pointers in pkts_burst
            nb_rx = rte_eth_rx_burst(portid, queueid, pkts_burst,
                MAX_PKT_BURST);
            // 0 means no packets arrived on this queue
            if (nb_rx == 0)
                continue;

            // The test machine is an x86_64 Linux server (check with the arch command)
#if defined RTE_ARCH_X86 || defined __ARM_NEON || defined RTE_ARCH_PPC_64
            l3fwd_lpm_send_packets(nb_rx, pkts_burst,
                portid, qconf);
#else
            l3fwd_lpm_no_opt_send_packets(nb_rx, pkts_burst,
                portid, qconf);
#endif /* X86 */
        }
    }

    return 0;
}
```

In short, every lcore runs this function in its own thread and loops: whenever more than drain_tsc cycles (about BURST_TX_DRAIN_US microseconds) have elapsed, it walks its TX buffers and sends any pending packets to the corresponding port; it then polls each of its RX queues and passes the received packets to l3fwd_lpm_send_packets for parsing.
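The drain interval is expressed in TSC cycles, and the arithmetic from lpm_main_loop can be checked in isolation: the TSC rate is rounded up to cycles per microsecond and then multiplied by the drain period. In this sketch, `drain_cycles` and `should_drain` are hypothetical names, the 2 GHz TSC is an assumed example, and a drain period of 100 µs is assumed for BURST_TX_DRAIN_US (check l3fwd.h in your DPDK version).

```c
#include <stdint.h>

#define USEC_PER_SEC 1000000ULL  /* same value as DPDK's US_PER_S */

/* drain_tsc formula from lpm_main_loop: cycles per microsecond,
 * rounded up, times the drain period in microseconds. */
uint64_t drain_cycles(uint64_t tsc_hz, uint64_t drain_us)
{
    return (tsc_hz + USEC_PER_SEC - 1) / USEC_PER_SEC * drain_us;
}

/* The loop flushes TX buffers once more than drain_tsc cycles have passed. */
int should_drain(uint64_t cur_tsc, uint64_t prev_tsc, uint64_t drain_tsc)
{
    return cur_tsc - prev_tsc > drain_tsc;
}
```

At 2 GHz and 100 µs this gives 200000 cycles. Rounding up the per-microsecond rate means the interval is never shorter than requested, and the unsigned subtraction in should_drain matches `diff_tsc = cur_tsc - prev_tsc` in the loop.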

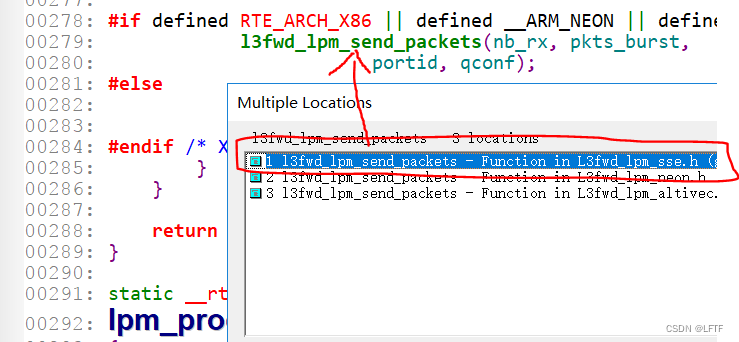

2、Packet parsing and forwarding module

On the receive side, rte_eth_rx_burst() fetches packets (as rte_mbuf *) from the RX queue, and l3fwd_lpm_send_packets() then parses them. Let's step into l3fwd_lpm_send_packets to see how the parsing is done. The function that is called depends on the server architecture: on x86 it is the one in l3fwd_lpm_sse.h, and the architecture-specific helpers below are called from the xxxx_sse.h files, as follows:

For easier understanding, first look at the processing flow after packets enter l3fwd_lpm_send_packets, together with the corresponding code:

Functions involved:

| Function name | Purpose |
|---|---|
| processx4_step1 | Extracts the destination IP address and IP type of 4 packets per iteration |
| processx4_step2 | Runs the LPM lookup for 4 packets per iteration to get the NIC port ID each packet must be forwarded to |
| lpm_get_dst_port | Runs the LPM lookup for a single packet to get its destination NIC port ID |
| processx4_step3 | Rewrites the source and destination MAC addresses of 4 packets per iteration |
| port_groupx4 | Counts consecutive packets with the same destination port ID, 4 packets at a time |
| process_packet | Rewrites the source and destination MAC addresses of a single packet |
| GROUP_PORT_STEP | Updates the count of consecutive same-destination packets for a single packet |
| send_packetsx4 | Sends a run of consecutive packets that share the same destination port ID in one call |

The annotated source of l3fwd_lpm_send_packets:

```c
static inline void
l3fwd_lpm_send_packets(int nb_rx, struct rte_mbuf **pkts_burst,
		uint16_t portid, struct lcore_conf *qconf)
{
	int32_t j;
	uint16_t dst_port[MAX_PKT_BURST];
	__m128i dip[MAX_PKT_BURST / FWDSTEP];
	uint32_t ipv4_flag[MAX_PKT_BURST / FWDSTEP];

	/*
	 * k = nb_rx rounded down to a multiple of FWDSTEP (4), so k <= nb_rx.
	 * E.g. for 30 received packets k = 28: the loops below handle 4
	 * packets per iteration and the remaining 2 are handled one by one,
	 * which improves processing efficiency.
	 */
	const int32_t k = RTE_ALIGN_FLOOR(nb_rx, FWDSTEP);

	/* Parse 4 packets at a time to extract each packet's L3 destination IP. */
	for (j = 0; j != k; j += FWDSTEP)
		processx4_step1(&pkts_burst[j], &dip[j / FWDSTEP],
				&ipv4_flag[j / FWDSTEP]);

	/*
	 * Run the LPM lookup on those 4 destination IPs to find the NIC port
	 * each packet must be forwarded to; the port IDs are returned in dst_port.
	 */
	for (j = 0; j != k; j += FWDSTEP)
		processx4_step2(qconf, dip[j / FWDSTEP],
				ipv4_flag[j / FWDSTEP], portid, &pkts_burst[j], &dst_port[j]);

	/* Classify last up to 3 packets one by one */
	switch (nb_rx % FWDSTEP) {
	case 3:
		dst_port[j] = lpm_get_dst_port(qconf, pkts_burst[j], portid);
		j++;
		/* fall-through */
	case 2:
		dst_port[j] = lpm_get_dst_port(qconf, pkts_burst[j], portid);
		j++;
		/* fall-through */
	case 1:
		dst_port[j] = lpm_get_dst_port(qconf, pkts_burst[j], portid);
		j++;
	}

	/* Send each packet to the port recorded for it in dst_port. */
	send_packets_multi(qconf, pkts_burst, dst_port, nb_rx);
}
```

The annotated source of send_packets_multi:

```c
/*
 * Send packets burst from pkts_burst to the ports in dst_port array
 */
static __rte_always_inline void
send_packets_multi(struct lcore_conf *qconf, struct rte_mbuf **pkts_burst,
		uint16_t dst_port[MAX_PKT_BURST], int nb_rx)
{
	int32_t k;
	int j = 0;
	uint16_t dlp;
	uint16_t *lp;
	/* pnum[j]: number of consecutive packets starting at j with the same destination port */
	uint16_t pnum[MAX_PKT_BURST + 1];

	/************************* START *************************/
	/*
	 * Count runs of consecutive packets with the same destination port,
	 * and at the same time rewrite each packet's source/destination MAC.
	 */

	/*
	 * Finish packet processing and group consecutive
	 * packets with the same destination port.
	 */
	k = RTE_ALIGN_FLOOR(nb_rx, FWDSTEP);
	if (k != 0) {
		__m128i dp1, dp2;

		lp = pnum;
		lp[0] = 1;

		processx4_step3(pkts_burst, dst_port);

		/* dp1: <d[0], d[1], d[2], d[3], ... > */
		dp1 = _mm_loadu_si128((__m128i *)dst_port);

		for (j = FWDSTEP; j != k; j += FWDSTEP) {
			processx4_step3(&pkts_burst[j], &dst_port[j]);

			/*
			 * dp2:
			 * <d[j-3], d[j-2], d[j-1], d[j], ... >
			 */
			dp2 = _mm_loadu_si128((__m128i *)
					&dst_port[j - FWDSTEP + 1]);
			lp = port_groupx4(&pnum[j - FWDSTEP], lp, dp1, dp2);

			/*
			 * dp1:
			 * <d[j], d[j+1], d[j+2], d[j+3], ... >
			 */
			dp1 = _mm_srli_si128(dp2, (FWDSTEP - 1) *
					sizeof(dst_port[0]));
		}

		/*
		 * dp2: <d[j-3], d[j-2], d[j-1], d[j-1], ... >
		 */
		dp2 = _mm_shufflelo_epi16(dp1, 0xf9);
		lp = port_groupx4(&pnum[j - FWDSTEP], lp, dp1, dp2);

		/*
		 * remove values added by the last repeated
		 * dst port.
		 */
		lp[0]--;
		dlp = dst_port[j - 1];
	} else {
		/* set dlp and lp to the never used values. */
		dlp = BAD_PORT - 1;
		lp = pnum + MAX_PKT_BURST;
	}

	/* Process up to last 3 packets one by one. */
	switch (nb_rx % FWDSTEP) {
	case 3:
		process_packet(pkts_burst[j], dst_port + j);
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
		/* fall-through */
	case 2:
		process_packet(pkts_burst[j], dst_port + j);
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
		/* fall-through */
	case 1:
		process_packet(pkts_burst[j], dst_port + j);
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
	}
	/************************** END **************************/

	/*
	 * Send packets out, through destination port.
	 * Consecutive packets with the same destination port
	 * are already grouped together.
	 * If destination port for the packet equals BAD_PORT,
	 * then free the packet without sending it out.
	 */
	for (j = 0; j < nb_rx; j += k) {
		int32_t m;
		uint16_t pn;

		pn = dst_port[j];
		/* k: number of consecutive packets from j on that share destination port pn */
		k = pnum[j];

		if (likely(pn != BAD_PORT)) {
			/* send the whole run of k packets in one call */
			send_packetsx4(qconf, pn, pkts_burst + j, k);
		} else {
			for (m = j; m != j + k; m++)
				rte_pktmbuf_free(pkts_burst[m]);
		}
	}
}
```

**Note:** I did not fully understand how send_packets_multi maintains the pnum array. I extracted the relevant code into the standalone program below, so interested readers can compile it and step through it in a debugger:

```c
// Compile: gcc -o main main.c   (x86_64, where SSE2 is enabled by default)
#include <stdio.h>
#include <stdint.h>
#include <emmintrin.h>   /* SSE2 intrinsics; also provides SSE's _mm_movemask_ps */

#define FWDSTEP 4
#define MAX_PKT_BURST 32
#define BAD_PORT ((uint16_t)-1)

#ifndef likely
#define likely(x) __builtin_expect(!!(x), 1)
#endif /* likely */

/*
 * Macro to align a value to a given power-of-two. The resultant value
 * will be of the same type as the first parameter, and will be no
 * bigger than the first parameter. Second parameter must be a
 * power-of-two value.
 */
#define RTE_ALIGN_FLOOR(val, align) \
	(typeof(val))((val) & (~((typeof(val))((align) - 1))))

/*
 * We group consecutive packets with the same destination port into one burst.
 * To avoid extra latency this is done together with some other packet
 * processing, but after we made a final decision about packet's destination.
 * To do this we maintain:
 * pnum - array of number of consecutive packets with the same dest port for
 * each packet in the input burst.
 * lp - pointer to the last updated element in the pnum.
 * dlp - dest port value lp corresponds to.
 */
#define GRPSZ (1 << FWDSTEP)
#define GRPMSK (GRPSZ - 1)

#define GROUP_PORT_STEP(dlp, dcp, lp, pn, idx) do { \
	if (likely((dlp) == (dcp)[(idx)])) {         \
		(lp)[0]++;                               \
	} else {                                     \
		(dlp) = (dcp)[idx];                      \
		(lp) = (pn) + (idx);                     \
		(lp)[0] = 1;                             \
	}                                            \
} while (0)

static const struct {
	uint64_t pnum; /* prebuild 4 values for pnum[]. */
	int32_t idx;   /* index for new last updated element. */
	uint16_t lpv;  /* add value to the last updated element. */
} gptbl[GRPSZ] = {
	/* 0: a != b, b != c, c != d, d != e */
	{ .pnum = UINT64_C(0x0001000100010001), .idx = 4, .lpv = 0 },
	/* 1: a == b, b != c, c != d, d != e */
	{ .pnum = UINT64_C(0x0001000100010002), .idx = 4, .lpv = 1 },
	/* 2: a != b, b == c, c != d, d != e */
	{ .pnum = UINT64_C(0x0001000100020001), .idx = 4, .lpv = 0 },
	/* 3: a == b, b == c, c != d, d != e */
	{ .pnum = UINT64_C(0x0001000100020003), .idx = 4, .lpv = 2 },
	/* 4: a != b, b != c, c == d, d != e */
	{ .pnum = UINT64_C(0x0001000200010001), .idx = 4, .lpv = 0 },
	/* 5: a == b, b != c, c == d, d != e */
	{ .pnum = UINT64_C(0x0001000200010002), .idx = 4, .lpv = 1 },
	/* 6: a != b, b == c, c == d, d != e */
	{ .pnum = UINT64_C(0x0001000200030001), .idx = 4, .lpv = 0 },
	/* 7: a == b, b == c, c == d, d != e */
	{ .pnum = UINT64_C(0x0001000200030004), .idx = 4, .lpv = 3 },
	/* 8: a != b, b != c, c != d, d == e */
	{ .pnum = UINT64_C(0x0002000100010001), .idx = 3, .lpv = 0 },
	/* 9: a == b, b != c, c != d, d == e */
	{ .pnum = UINT64_C(0x0002000100010002), .idx = 3, .lpv = 1 },
	/* 0xa: a != b, b == c, c != d, d == e */
	{ .pnum = UINT64_C(0x0002000100020001), .idx = 3, .lpv = 0 },
	/* 0xb: a == b, b == c, c != d, d == e */
	{ .pnum = UINT64_C(0x0002000100020003), .idx = 3, .lpv = 2 },
	/* 0xc: a != b, b != c, c == d, d == e */
	{ .pnum = UINT64_C(0x0002000300010001), .idx = 2, .lpv = 0 },
	/* 0xd: a == b, b != c, c == d, d == e */
	{ .pnum = UINT64_C(0x0002000300010002), .idx = 2, .lpv = 1 },
	/* 0xe: a != b, b == c, c == d, d == e */
	{ .pnum = UINT64_C(0x0002000300040001), .idx = 1, .lpv = 0 },
	/* 0xf: a == b, b == c, c == d, d == e */
	{ .pnum = UINT64_C(0x0002000300040005), .idx = 0, .lpv = 4 },
};

static inline uint16_t *
port_groupx4(uint16_t pn[FWDSTEP + 1], uint16_t *lp, __m128i dp1, __m128i dp2)
{
	union {
		uint16_t u16[FWDSTEP + 1];
		uint64_t u64;
	} *pnum = (void *)pn;

	int32_t v;

	/* 16-bit lane i is all ones when d[i] == d[i+1] */
	dp1 = _mm_cmpeq_epi16(dp1, dp2);
	/* widen the low 4 lanes to 32 bits so movemask_ps can read their sign bits */
	dp1 = _mm_unpacklo_epi16(dp1, dp1);
	/* v: bit i set when d[i] == d[i+1], i = 0..3 */
	v = _mm_movemask_ps(_mm_castsi128_ps(dp1));

	/* update last port counter. */
	lp[0] += gptbl[v].lpv;

	/* if dest port value has changed. */
	if (v != GRPMSK) {
		pnum->u64 = gptbl[v].pnum;
		pnum->u16[FWDSTEP] = 1;
		lp = pnum->u16 + gptbl[v].idx;
	}

	return lp;
}

static void send_packets_multi(uint16_t dst_port[MAX_PKT_BURST], int nb_rx)
{
	int32_t k;
	int j = 0;
	uint16_t dlp;
	uint16_t *lp;
	uint16_t pnum[MAX_PKT_BURST + 1];

	/*
	 * Finish packet processing and group consecutive
	 * packets with the same destination port.
	 */
	k = RTE_ALIGN_FLOOR(nb_rx, FWDSTEP);
	if (k != 0) {
		__m128i dp1, dp2;

		lp = pnum;
		lp[0] = 1;

		/* dp1: <d[0], d[1], d[2], d[3], ... > */
		dp1 = _mm_loadu_si128((__m128i *)dst_port);

		for (j = FWDSTEP; j != k; j += FWDSTEP) {
			/* dp2: <d[j-3], d[j-2], d[j-1], d[j], ... > */
			dp2 = _mm_loadu_si128((__m128i *)
					&dst_port[j - FWDSTEP + 1]);
			lp = port_groupx4(&pnum[j - FWDSTEP], lp, dp1, dp2);

			/* dp1: <d[j], d[j+1], d[j+2], d[j+3], ... > */
			dp1 = _mm_srli_si128(dp2, (FWDSTEP - 1) *
					sizeof(dst_port[0]));
		}

		/* dp2: <d[j-3], d[j-2], d[j-1], d[j-1], ... > */
		dp2 = _mm_shufflelo_epi16(dp1, 0xf9);
		lp = port_groupx4(&pnum[j - FWDSTEP], lp, dp1, dp2);

		/* remove values added by the last repeated dst port. */
		lp[0]--;
		dlp = dst_port[j - 1];
	} else {
		/* set dlp and lp to the never used values. */
		dlp = BAD_PORT - 1;
		lp = pnum + MAX_PKT_BURST;
	}

	/* Process up to last 3 packets one by one. */
	switch (nb_rx % FWDSTEP) {
	case 3:
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
		/* fall-through */
	case 2:
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
		/* fall-through */
	case 1:
		GROUP_PORT_STEP(dlp, dst_port, lp, pnum, j);
		j++;
	}

	/* Walk the runs the same way the real send loop does. */
	for (j = 0; j < nb_rx; j += k) {
		uint16_t pn = dst_port[j];

		k = pnum[j];
		/* For the sample input in main() this prints k = 7, k = 2, k = 2 */
		if (likely(pn != BAD_PORT))
			printf("k = %d\n", k);
		else
			printf("j = %d\n", j);
	}
}

int main(int argc, char **argv)
{
	(void)argc;
	(void)argv;

	// Pretend 11 packets were received
	int nb_rx = 11;
	// Destination port IDs of those 11 packets
	uint16_t dst_port[MAX_PKT_BURST] = {1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1};

	send_packets_multi(dst_port, nb_rx);
	return 0;
}
```

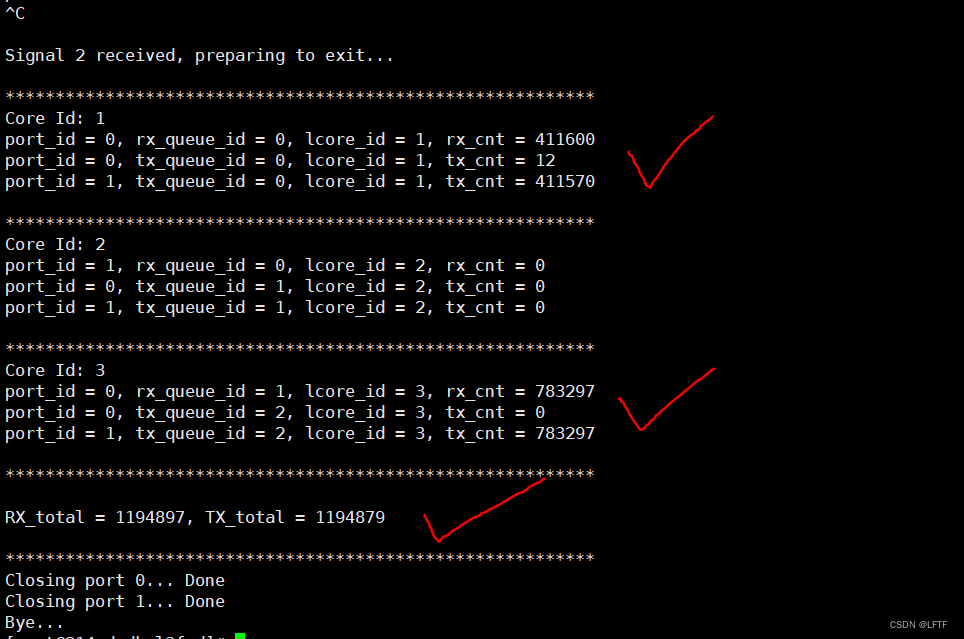

3、 Adding packet counters

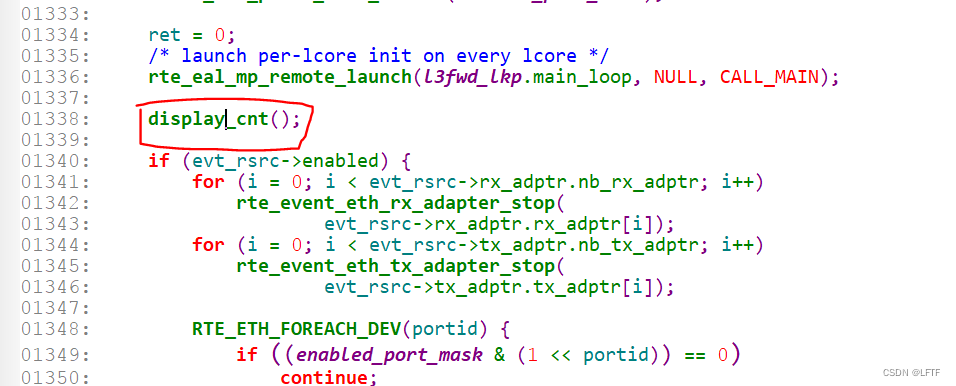

When the program exits, print the number of packets received on each RX queue and forwarded on each TX queue. The print call is added after the loop in main_loop exits, as follows:

Add counter variables to the lcore_conf structure, including its embedded lcore_rx_queue entries. A before/after comparison follows.

The updated structures:

```c
struct lcore_rx_queue {
	uint16_t port_id;
	uint8_t queue_id;
	uint64_t rx_packet_cnts;   /* added: packets received on this RX queue */
} __rte_cache_aligned;

struct lcore_conf {
	uint16_t n_rx_queue;
	struct lcore_rx_queue rx_queue_list[MAX_RX_QUEUE_PER_LCORE];
	uint16_t n_tx_port;
	uint16_t tx_port_id[RTE_MAX_ETHPORTS];
	uint16_t tx_queue_id[RTE_MAX_ETHPORTS];
	uint64_t tx_packet_cnts[RTE_MAX_ETHPORTS];   /* added: packets sent, indexed by port ID */
	struct mbuf_table tx_mbufs[RTE_MAX_ETHPORTS];
	void *ipv4_lookup_struct;
	void *ipv6_lookup_struct;
} __rte_cache_aligned;
```

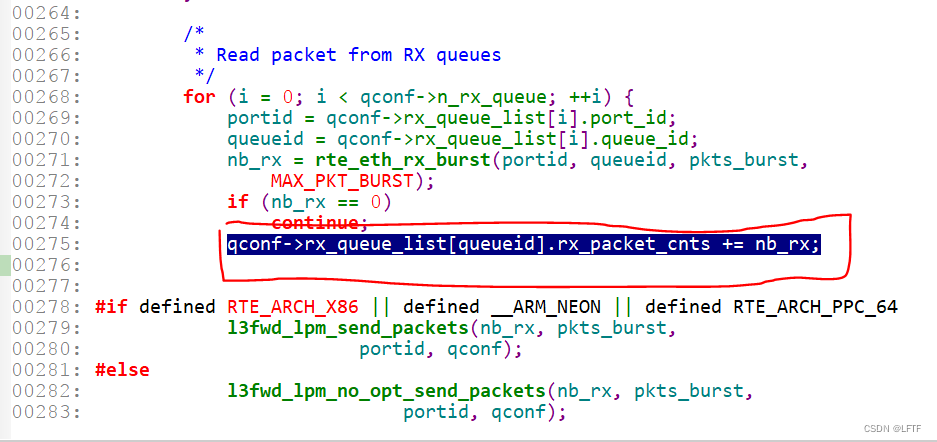

The RX queue counter is incremented at the following location:

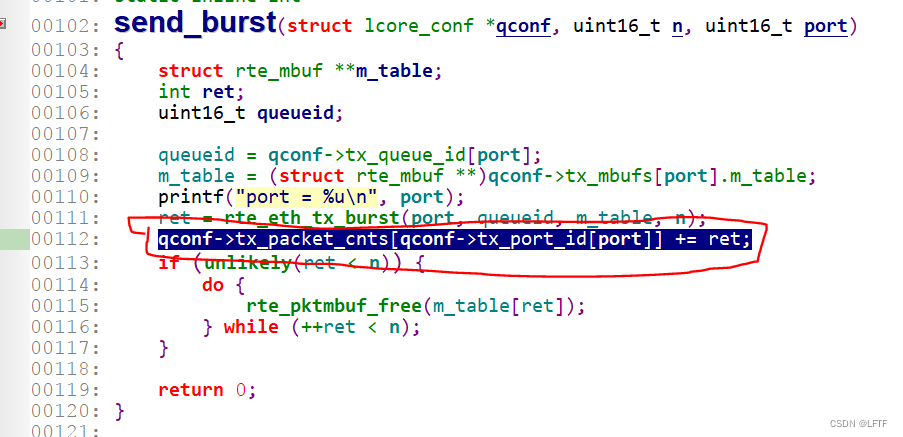

The TX queue counter is incremented at the following location:

The display_cnt code is as follows:

```c
static void display_cnt(void)
{
	unsigned int lcore_id;
	struct lcore_conf *qconf;
	uint64_t rx_total = 0, tx_total = 0;   /* 64-bit, like the per-queue counters */

	/* print the counters of every enabled lcore */
	printf("\n***********************************************************\n");
	for (lcore_id = 0; lcore_id < RTE_MAX_LCORE; lcore_id++) {
		if (rte_lcore_is_enabled(lcore_id) == 0)
			continue;

		printf("Core Id: %u\n", lcore_id);
		qconf = &lcore_conf[lcore_id];

		for (int i = 0; i < qconf->n_rx_queue; i++) {
			/*
			 * Index by list position i; this assumes main_loop increments
			 * qconf->rx_queue_list[i].rx_packet_cnts += nb_rx.
			 */
			printf("port_id = %u, rx_queue_id = %u, lcore_id = %u, rx_cnt = %" PRIu64 "\n",
			       qconf->rx_queue_list[i].port_id,
			       qconf->rx_queue_list[i].queue_id, lcore_id,
			       qconf->rx_queue_list[i].rx_packet_cnts);
			rx_total += qconf->rx_queue_list[i].rx_packet_cnts;
		}

		for (int j = 0; j < qconf->n_tx_port; j++) {
			/* tx_queue_id[] and tx_packet_cnts[] are indexed by port ID */
			uint16_t port = qconf->tx_port_id[j];

			printf("port_id = %u, tx_queue_id = %u, lcore_id = %u, tx_cnt = %" PRIu64 "\n",
			       port, qconf->tx_queue_id[port], lcore_id,
			       qconf->tx_packet_cnts[port]);
			tx_total += qconf->tx_packet_cnts[port];
		}

		printf("\n***********************************************************\n");
	}

	printf("\nRX_total = %" PRIu64 ", TX_total = %" PRIu64 "\n", rx_total, tx_total);
	printf("\n***********************************************************\n");
}
```

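Start the program with:

```shell
./l3fwd_app-static -l 1-3 -n 4 -- -p 0x3 -P -L --config="(0,0,1),(1,0,2),(0,1,3)" --parse-ptype
```

The options work as follows:

- -P puts all ports into promiscuous mode.
- -L selects longest prefix match (LPM) lookup.
- --config assigns (port, queue, lcore) triples: lcore 1 polls port 0 queue 0, lcore 2 polls port 1 queue 0, and lcore 3 polls port 0 queue 1.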

The printed output is as follows:

4、 Summary

This completes the walkthrough of the dpdk-l3fwd source code. The main points covered are the relationship between the DPDK-bound NIC port IDs, lcores, and queue IDs, and the packet parsing and forwarding logic. The analysis stays at the level of how the code implements its features, so it is relatively simple.

边栏推荐

- First understand the principle of network

- Ubuntu20 installation redisjson record

- Sorting operation partition, argpartition, sort, argsort in numpy

- How to customize the shortcut key for latex to stop running

- 2022.6.28

- leetcode

- Principle of attention mechanism

- input_ delay

- QT 项目 表格新建列名称设置 需求练习(找数组消失的数字、最大值)

- 树莓派设置静态ip

猜你喜欢

Not All Points Are Equal Learning Highly Efficient Point-based Detectors for 3D LiDAR Point

Principle of attention mechanism

变量、流程控制与游标(MySQL)

Clock in during winter vacation

Restcloud ETL Community Edition June featured Q & A

Confirm the future development route! Digital economy, digital transformation, data This meeting is very important

图形化工具打包YOLOv5,生成可执行文件EXE

Can the applet run in its own app and realize live broadcast and connection?

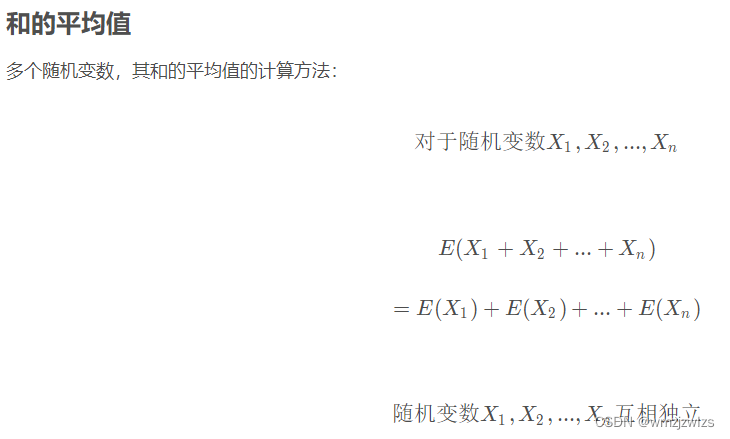

Shangsilicon Valley JVM Chapter 1 class loading subsystem

概率论公式

随机推荐

哈夫曼树基本概念

Not All Points Are Equal Learning Highly Efficient Point-based Detectors for 3D LiDAR Point

QT 项目 表格新建列名称设置 需求练习(找数组消失的数字、最大值)

Basic concepts of Huffman tree

How to replace the backbone of the model

When you go to the toilet, you can clearly explain the three Scheduling Strategies of scheduled tasks

[leetcode] 450 and 98 (deletion and verification of binary search tree)

Jericho is in non Bluetooth mode. Do not jump back to Bluetooth mode when connecting the mobile phone [chapter]

Ubuntu 20 installation des enregistrements redisjson

Set static IP for raspberry pie

Sub pixel corner detection opencv cornersubpix

HMS core machine learning service creates a new "sound" state of simultaneous interpreting translation, and AI makes international exchanges smoother

变量、流程控制与游标(MySQL)

About Tolerance Intervals

[leetcode] 700 and 701 (search and insert of binary search tree)

我的勇敢对线之路--详细阐述,浏览器输入URL发生了什么

VHDL implementation of single cycle CPU design

25. (ArcGIS API for JS) ArcGIS API for JS line modification line editing (sketchviewmodel)

20. (ArcGIS API for JS) ArcGIS API for JS surface collection (sketchviewmodel)

About Tolerance Intervals