
Restore backup data on persistent volumes

2022-07-07 21:24:00 【Tianxiang shop】

This document describes how to restore backup data stored on persistent volumes to a TiDB cluster running in Kubernetes. "Persistent volume" here means any persistent volume type supported by Kubernetes. The examples restore data from a Network File System (NFS) to TiDB.

The restore method in this document is implemented with the CustomResourceDefinition (CRD) of TiDB Operator and uses BR under the hood to restore the data. BR (Backup & Restore) is a command-line tool for distributed backup and restore of TiDB cluster data.

Usage scenarios

Use this document after you have used BR to back up TiDB cluster data to a persistent volume. It shows how to use BR to restore the backed-up SST (key-value pair) files from the persistent volume to a TiDB cluster.

Note

- BR supports only TiDB v3.1 and later versions.

- Data restored by BR cannot be replicated to a downstream cluster. BR imports SST files directly, and the downstream cluster currently has no way to obtain the upstream SST files.
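If you script the restore, a pre-flight check for the version requirement above can catch an unsupported cluster early. The following Python sketch is hypothetical: `parse_version` and `br_supported` are not part of BR or TiDB Operator. It compares a version string such as `v4.0.8` against v3.1.0 and, as a simplifying assumption, ignores pre-release suffixes.

```python
import re

# Minimum TiDB version supported by BR, per the note above.
MIN_BR_TIDB_VERSION = (3, 1, 0)


def parse_version(version: str) -> tuple:
    """Parse 'v4.0.8' or '3.1' into a comparable tuple such as (4, 0, 8).

    Suffixes like '-beta' are ignored (simplifying assumption).
    """
    match = re.match(r"^v?(\d+)\.(\d+)(?:\.(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def br_supported(tidb_version: str) -> bool:
    """Return True if BR supports a cluster running tidb_version."""
    return parse_version(tidb_version) >= MIN_BR_TIDB_VERSION


if __name__ == "__main__":
    for v in ("v3.0.12", "v3.1.0", "v4.0.8"):
        print(v, br_supported(v))
```

Tuples compare element by element, so `(3, 0, 12) < (3, 1, 0)` holds even though 12 > 0.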

Step 1: Prepare the restore environment

Before you use BR to restore the backup data on the PV to TiDB, take the following steps to prepare the restore environment.

- Download the `backup-rbac.yaml` file to the server where you will perform the restore.

- Create the RBAC resources required for the restore in the `test2` namespace: `kubectl apply -f backup-rbac.yaml -n test2`

- Confirm that the NFS server used to store the backup data is accessible from the Kubernetes cluster.
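A quick way to check the NFS reachability step above is to attempt a TCP connection to the NFS server. This is a minimal sketch under stated assumptions: the server listens on the standard NFS port 2049, and you run the script from a temporary Pod inside the cluster, because reachability from the cluster is what matters. The helper name `nfs_reachable` is hypothetical. A successful connection shows only that the port is open. It does not prove that the export permits mounting.

```python
import socket
import sys

NFS_PORT = 2049  # standard NFS port (assumption: your server uses it)


def nfs_reachable(host: str, port: int = NFS_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Pass the NFS server address as the first argument.
    server = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    print(f"{server}:{NFS_PORT} reachable: {nfs_reachable(server)}")
```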

If your TiDB version is earlier than v4.0.8, you must also complete the following steps. If you use TiDB v4.0.8 or later, skip them.

- Make sure that the database user used for the restore has the `SELECT` and `UPDATE` privileges on the `mysql.tidb` table. These privileges are used to adjust the GC time before and after the restore.

- Create the `restore-demo2-tidb-secret` secret: `kubectl create secret generic restore-demo2-tidb-secret --from-literal=user=root --from-literal=password=<password> --namespace=test2`
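You can also express the `kubectl create secret generic` command above as a declarative manifest. The sketch below builds a `v1` `Secret` of type `Opaque` with the same `user` and `password` keys. Kubernetes requires values under `data` to be base64-encoded, which the sketch handles. The helper name `tidb_secret_manifest` is hypothetical, and `<password>` remains a placeholder. The printed JSON can be applied with `kubectl apply -f -`.

```python
import base64
import json


def tidb_secret_manifest(name: str, namespace: str, user: str, password: str) -> dict:
    """Build a Secret with the same keys as the --from-literal flags above."""

    def b64(value: str) -> str:
        # Secret `data` values must be base64-encoded strings.
        return base64.b64encode(value.encode("utf-8")).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {"user": b64(user), "password": b64(password)},
    }


if __name__ == "__main__":
    # "<password>" is a placeholder; substitute the real password.
    manifest = tidb_secret_manifest(
        "restore-demo2-tidb-secret", "test2", "root", "<password>"
    )
    print(json.dumps(manifest, indent=2))
```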

Step 2: Restore data from the persistent volume

Create a `Restore` custom resource (CR) to restore the specified backup data to the TiDB cluster:

    kubectl apply -f restore.yaml

The content of the `restore.yaml` file is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Restore
    metadata:
      name: demo2-restore-nfs
      namespace: test2
    spec:
      # backupType: full
      br:
        cluster: demo2
        clusterNamespace: test2
        # logLevel: info
        # statusAddr: ${status-addr}
        # concurrency: 4
        # rateLimit: 0
        # checksum: true
      # # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      # to:
      #   host: ${tidb_host}
      #   port: ${tidb_port}
      #   user: ${tidb_user}
      #   secretName: restore-demo2-tidb-secret
      local:
        prefix: backup-nfs
        volume:
          name: nfs
          nfs:
            server: ${nfs_server_if}
            path: /nfs
        volumeMount:
          name: nfs
          mountPath: /nfs
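If you generate the `Restore` CR from a script instead of editing `restore.yaml`, you can apply the rule in the YAML comment automatically: include `spec.to` only for TiDB Operator < v1.1.10 or TiDB < v4.0.8. The following is a sketch, not an official generator. The names `restore_manifest` and `needs_to_field` are hypothetical, the cluster, namespace, and secret names are hard-coded from the example, and port 4000 is assumed to be the TiDB default. The printed JSON can be applied with `kubectl apply -f -`.

```python
import json
import re


def parse_version(version: str) -> tuple:
    """Parse 'v1.1.10' or '4.0.8' into a comparable tuple (suffixes ignored)."""
    match = re.match(r"^v?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    return tuple(int(part) for part in match.groups())


def needs_to_field(tidb_version: str, operator_version: str) -> bool:
    """spec.to is only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8."""
    return (parse_version(operator_version) < (1, 1, 10)
            or parse_version(tidb_version) < (4, 0, 8))


def restore_manifest(tidb_version: str, operator_version: str, nfs_server: str,
                     nfs_path: str = "/nfs", mount_path: str = "/nfs",
                     prefix: str = "backup-nfs", tidb_host: str = "",
                     tidb_port: int = 4000, tidb_user: str = "root") -> dict:
    """Build the example demo2-restore-nfs Restore CR as a dict."""
    spec = {
        "br": {"cluster": "demo2", "clusterNamespace": "test2"},
        "local": {
            "prefix": prefix,
            "volume": {"name": "nfs", "nfs": {"server": nfs_server, "path": nfs_path}},
            "volumeMount": {"name": "nfs", "mountPath": mount_path},
        },
    }
    if needs_to_field(tidb_version, operator_version):
        if not tidb_host:
            raise ValueError("tidb_host is required when spec.to is needed")
        spec["to"] = {
            "host": tidb_host,
            "port": tidb_port,
            "user": tidb_user,
            "secretName": "restore-demo2-tidb-secret",
        }
    return {
        "apiVersion": "pingcap.com/v1alpha1",
        "kind": "Restore",
        "metadata": {"name": "demo2-restore-nfs", "namespace": "test2"},
        "spec": spec,
    }


if __name__ == "__main__":
    # Placeholder versions and server address; replace with your own values.
    print(json.dumps(restore_manifest("v5.4.0", "v1.3.0", "<nfs-server-ip>"), indent=2))
```

The sketch keeps `nfs_path` (the export path on the server) separate from `mount_path` (the path inside the BR container), even though both are `/nfs` in the example.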

When you configure `restore.yaml`, note the following:

- In the example above, the backup data stored on NFS under the `local://${.spec.local.volume.nfs.path}/${.spec.local.prefix}/` folder is restored to the TiDB cluster `demo2` in the `test2` namespace. For more persistent volume storage options, refer to Local storage fields.

- Some parameters in `.spec.br` can be omitted, such as `logLevel`, `statusAddr`, `concurrency`, `rateLimit`, `checksum`, `timeAgo`, and `sendCredToTikv`. For detailed descriptions of the `.spec.br` fields, refer to BR fields.

- If you use TiDB v4.0.8 or later, BR automatically adjusts the `tikv_gc_life_time` parameter, so you do not need to configure the `spec.to` field in the `Restore` CR.

- For detailed descriptions of all `Restore` CR fields, refer to Restore CR fields.
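The backup location in the first point above is composed from two fields of the CR. The following sketch makes that composition concrete. The helper name `restore_source_uri` is hypothetical, and it only trims stray slashes so that the parts join cleanly. Because `.spec.local.volume.nfs.path` is absolute, the result contains three slashes after `local:`.

```python
def restore_source_uri(restore_cr: dict) -> str:
    """Compose local://${.spec.local.volume.nfs.path}/${.spec.local.prefix}/."""
    local = restore_cr["spec"]["local"]
    nfs_path = local["volume"]["nfs"]["path"].rstrip("/")
    prefix = local["prefix"].strip("/")
    return f"local://{nfs_path}/{prefix}/"


# The relevant part of the example restore.yaml, expressed as a dict.
EXAMPLE_CR = {
    "spec": {
        "local": {
            "prefix": "backup-nfs",
            "volume": {"name": "nfs", "nfs": {"server": "${nfs_server_if}", "path": "/nfs"}},
        }
    }
}

if __name__ == "__main__":
    print(restore_source_uri(EXAMPLE_CR))  # local:///nfs/backup-nfs/
```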

After you create the `Restore` CR, check the restore status with the following command:

    kubectl get rt -n test2 -owide
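For unattended runs, you can poll the same resource until the restore finishes. This is a sketch under stated assumptions. It runs `kubectl get rt <name> -n <namespace> -o json`. It prefers `status.phase`, which is assumed to exist in newer TiDB Operator releases, and otherwise falls back to the condition with the latest `lastTransitionTime`. It also assumes that `Complete` and `Failed` are the terminal states. Check these field and state names against your TiDB Operator version. The helper names are hypothetical.

```python
import json
import subprocess
import time

# Assumption: states that mean the restore has finished.
TERMINAL_STATES = {"Complete", "Failed"}


def latest_state(restore_obj: dict):
    """Return the current state of a Restore object, or None if unknown."""
    status = restore_obj.get("status") or {}
    if status.get("phase"):
        return status["phase"]
    conditions = status.get("conditions") or []
    if not conditions:
        return None
    # Kubernetes writes timestamps as RFC 3339 UTC strings, which sort
    # chronologically as text.
    newest = max(conditions, key=lambda c: c.get("lastTransitionTime") or "")
    return newest.get("type")


def wait_for_restore(name: str, namespace: str,
                     interval: float = 10.0, timeout: float = 3600.0) -> str:
    """Poll the Restore CR with kubectl until it reaches a terminal state."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        result = subprocess.run(
            ["kubectl", "get", "rt", name, "-n", namespace, "-o", "json"],
            check=True, capture_output=True, text=True,
        )
        state = latest_state(json.loads(result.stdout))
        if state in TERMINAL_STATES:
            return state
        time.sleep(interval)
    raise TimeoutError(f"restore {namespace}/{name} not finished after {timeout}s")
```

For the example in this document, you would call `wait_for_restore("demo2-restore-nfs", "test2")`. Under the assumptions above, it returns either `"Complete"` or `"Failed"`. The function keeps parsing separate from polling, so you can test `latest_state` without a cluster.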

边栏推荐

- Magic weapon - sensitive file discovery tool

- Virtual machine network configuration in VMWare

- FatMouse&#39; Trade(杭电1009)

- 【矩阵乘】【NOI 2012】【cogs963】随机数生成器

- Small guide for rapid formation of manipulator (12): inverse kinematics analysis

- Numerical method for solving optimal control problem (0) -- Definition

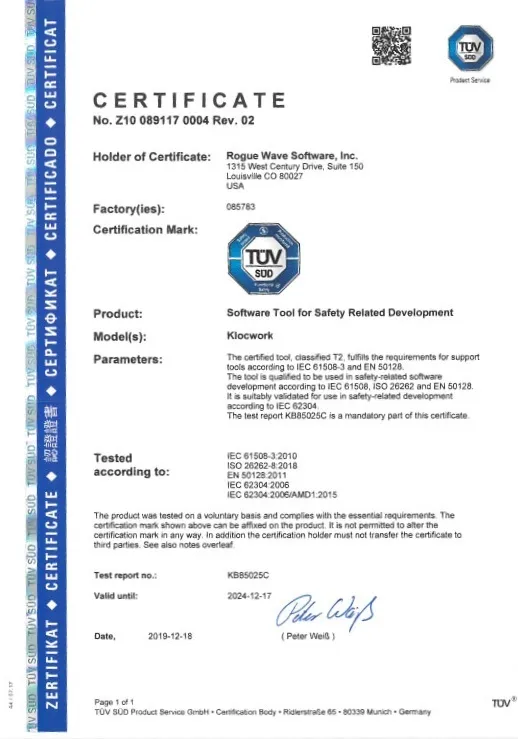

- Klocwork code static analysis tool

- Is private equity legal in China? Is it safe?

- npm uninstall和rm直接删除的区别

- The new version of onespin 360 DV has been released, refreshing the experience of FPGA formal verification function

猜你喜欢

Goal: do not exclude yaml syntax. Try to get started quickly

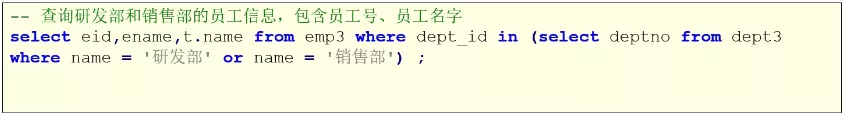

Usage of MySQL subquery keywords (exists)

![[paper reading] maps: Multi-Agent Reinforcement Learning Based Portfolio Management System](/img/76/b725788272ba2dcdf866b28cbcc897.jpg)

[paper reading] maps: Multi-Agent Reinforcement Learning Based Portfolio Management System

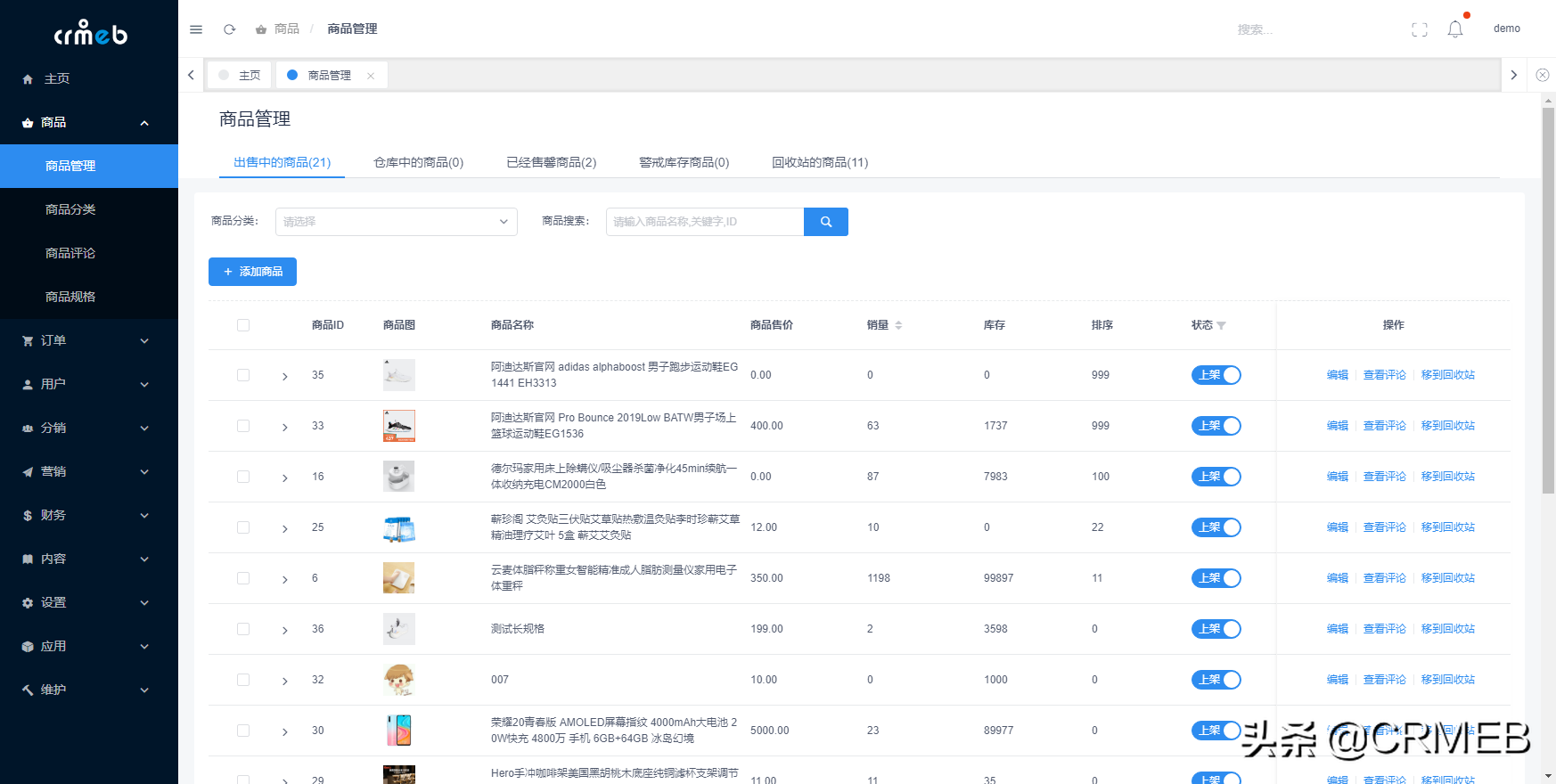

Make this crmeb single merchant wechat mall system popular, so easy to use!

ISO 26262 - considerations other than requirements based testing

Klocwork code static analysis tool

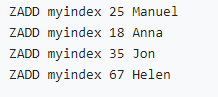

使用高斯Redis实现二级索引

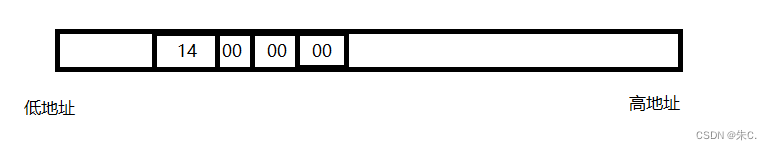

C语言 整型 和 浮点型 数据在内存中存储详解(内含原码反码补码,大小端存储等详解)

The little money made by the program ape is a P!

Onespin | solve the problems of hardware Trojan horse and security trust in IC Design

随机推荐

Numerical method for solving optimal control problem (0) -- Definition

Do you have to make money in the account to open an account? Is the fund safe?

Problems encountered in installing mysql8 for Ubuntu and the detailed installation process

OpenGL super classic learning notes (1) the first triangle "suggestions collection"

Le capital - investissement est - il légal en Chine? C'est sûr?

[C language] advanced pointer --- do you really understand pointer?

Postgresql数据库character varying和character的区别说明

国家正规的股票交易app有哪些?使用安不安全

恶魔奶爸 B3 少量泛读,完成两万词汇量+

AADL inspector fault tree safety analysis module

Cocos2d-x game archive [easy to understand]

Klocwork code static analysis tool

寫一下跳錶

POJ 3140 contents division "suggestions collection"

C language helps you understand pointers from multiple perspectives (1. Character pointers 2. Array pointers and pointer arrays, array parameter passing and pointer parameter passing 3. Function point

Details of C language integer and floating-point data storage in memory (including details of original code, inverse code, complement, size end storage, etc.)

恶魔奶爸 A1 语音听力初挑战

Can Huatai Securities achieve Commission in case of any accident? Is it safe to open an account

Virtual machine network configuration in VMWare

Magic weapon - sensitive file discovery tool