当前位置:网站首页>General process of machine learning training and parameter optimization (discussion)

General process of machine learning training and parameter optimization (discussion)

2022-07-06 02:13:00 【Min fan】

Abstract : In practical machine learning applications , Not only model training , Also control the input parameters . This paper describes the general process , For reference only .

1. Training machine learning models

For an input of m m m Features , Output as a decision indicator , Machine learning models can be built

f : R m → R (1) f: \mathbb{R}^m \to \mathbb{R} \tag{1} f:Rm→R(1)

among R \mathbb{R} R Is a set of real numbers . If different features have their own value range , Then the machine learning model can be expressed as

f : ∏ i = 1 m V i → R (2) f: \prod_{i=1}^m \mathbf{V}_i \to \mathbb{R} \tag{2} f:i=1∏mVi→R(2)

among V i \mathbf{V}_i Vi It's No i i i Value range of features .

Simplicity , Only... Is discussed below (1) Model corresponding to formula .

Given to contain n n n Characteristic matrix of instances X = [ x 1 , … , x n ] T ∈ R n × m \mathbf{X} = [\mathbf{x}_1, \dots, \mathbf{x}_n]^{\mathrm{T}} \in \mathbb{R}^{n \times m} X=[x1,…,xn]T∈Rn×m And the corresponding label vector Y ∈ R n \mathbf{Y} \in \mathbb{R}^n Y∈Rn, The optimization objective of machine learning can generally be expressed as

min f L ( f ( X ) , Y ) + R ( f ) (3) \min_f \mathcal{L}(f(\mathbf{X}), \mathbf{Y}) + R(f) \tag{3} fminL(f(X),Y)+R(f)(3)

among f ( X ) = [ f ( x 1 ) , … , f ( x n ) ] f(\mathbf{X}) = [f(\mathbf{x}_1), \dots, f(\mathbf{x}_n)] f(X)=[f(x1),…,f(xn)] Vector for predicted tags , R ( f ) R(f) R(f) by f f f Regular term of parameter in . If the optimization goal is a convex function , Then the gradient descent method can be used to quickly find the optimal solution . For regular terms :

- If f f f For a linear model , The regular can be 1 norm 、2 norm 、 Kernel norm, etc . Its function is to prevent over fitting .

- If f f f For a neural network model , You can use the dropout And other technologies to prevent over fitting .

2. Parameter optimization method

For some practical problems , Some of the input characteristics are objective , Some are controllable . No loss of generality , Before order m 1 m_1 m1 The first feature is objective , after m 2 m_2 m2 Three features are controllable ( So we also call it parameter ), m 1 + m 2 = m m_1 + m_2 = m m1+m2=m. Suppose a reliable machine learning model has been trained through a large amount of data f f f, And we expect to maximize the decision indicators . Given the objective eigenvector x b ∈ R m 1 \mathbf{x}_b \in \mathbb{R}^{m_1} xb∈Rm1, The objective function of parameter optimization is

arg max x u ∈ R m 2 f ( x b ∥ x u ) (4) \argmax_{\mathbf{x_u} \in \mathbb{R}^{m_2}} f(\mathbf{x}_b \| \mathbf{x}_u)\tag{4} xu∈Rm2argmaxf(xb∥xu)(4)

among ∥ \| ∥ Indicates the vector splicing operation .

- If f f f Each controllable feature is a convex function , Then the optimal parameters can be obtained by gradient descent and other methods .

- If f f f The controllable features are not a convex function , Then some bionic algorithms can be used to optimize the parameters .

- If the controllable features are enumerated, the cardinality of the definition domain is not large , Then the optimal parameters can be obtained directly by the exhaustive method . example : Controllable features include 5 individual , Everyone with a 10 Possible values , From the 1 0 5 10^5 105 The optimal parameter vector is obtained from three parameter combinations , It only takes a few seconds to calculate .

边栏推荐

- MySQL learning notes - subquery exercise

- Jisuanke - t2063_ Missile interception

- leetcode-两数之和

- It's wrong to install PHP zbarcode extension. I don't know if any God can help me solve it. 7.3 for PHP environment

- vs code保存时 出现两次格式化

- Numpy array index slice

- Blue Bridge Cup embedded_ STM32 learning_ Key_ Explain in detail

- Bidding promotion process

- Using SA token to solve websocket handshake authentication

- [solution] every time idea starts, it will build project

猜你喜欢

1. Introduction to basic functions of power query

Audio and video engineer YUV and RGB detailed explanation

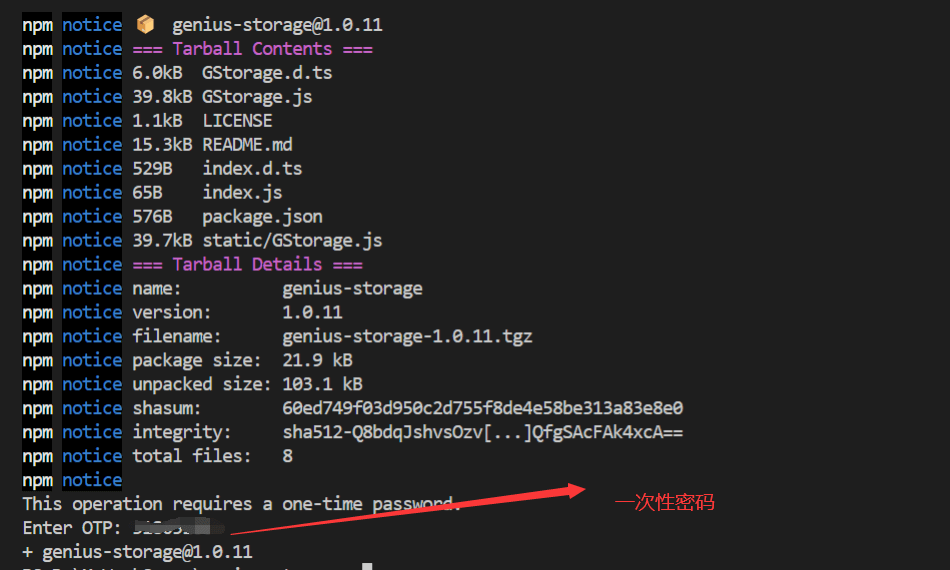

Publish your own toolkit notes using NPM

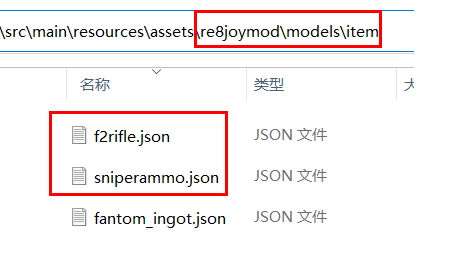

Minecraft 1.18.1、1.18.2模组开发 22.狙击枪(Sniper Rifle)

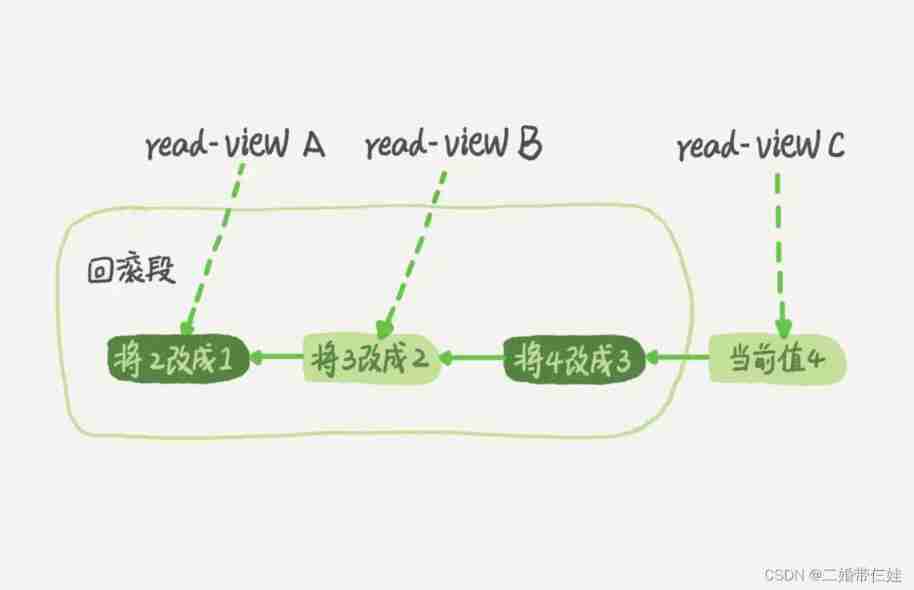

MySQL lethal serial question 1 -- are you familiar with MySQL transactions?

![[flask] official tutorial -part1: project layout, application settings, definition and database access](/img/c3/04422e4c6c1247169999dd86b74c05.png)

[flask] official tutorial -part1: project layout, application settings, definition and database access

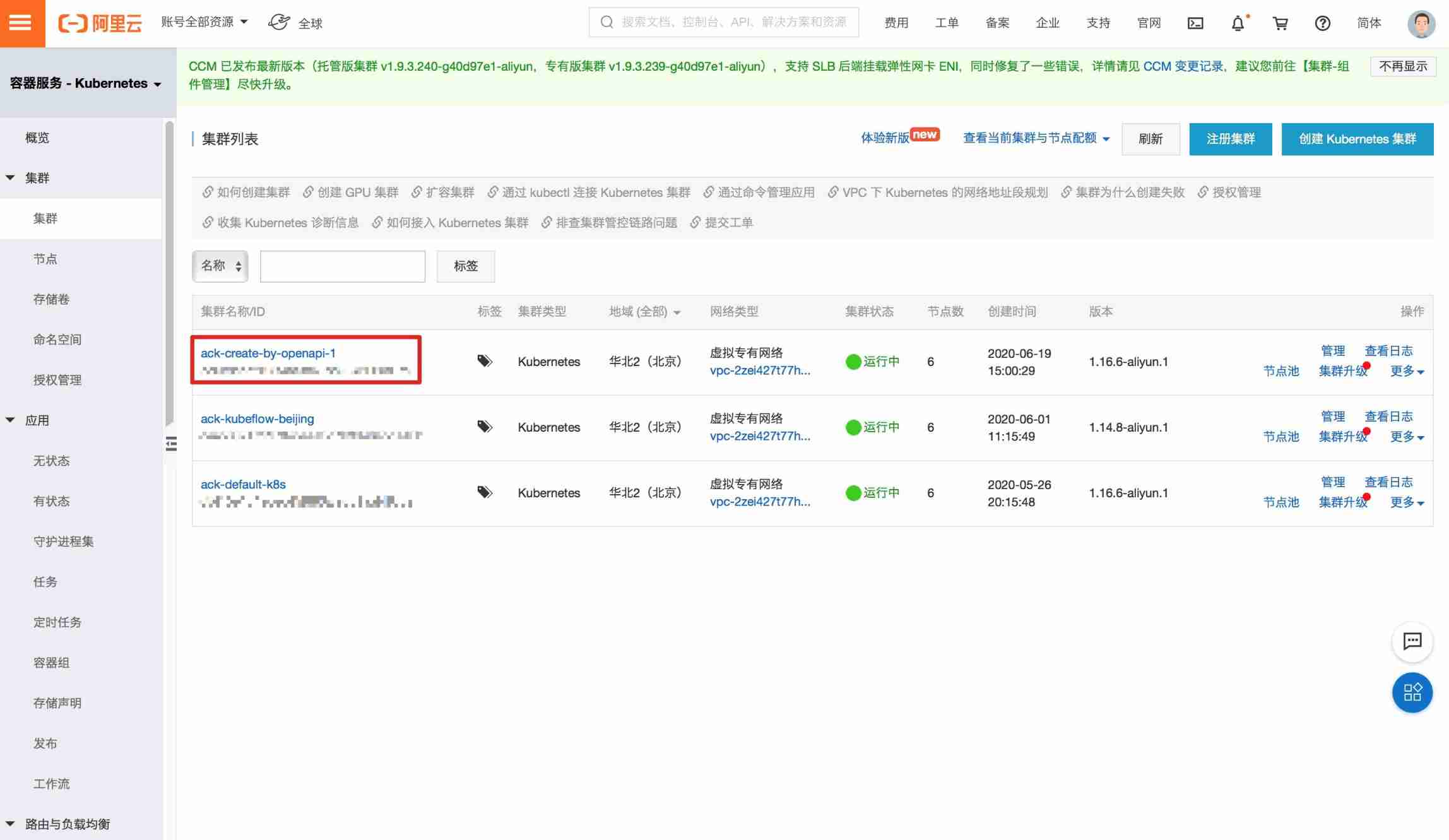

Accelerating spark data access with alluxio in kubernetes

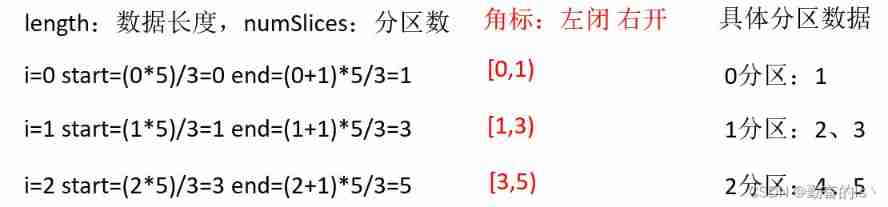

RDD partition rules of spark

NumPy 数组索引 切片

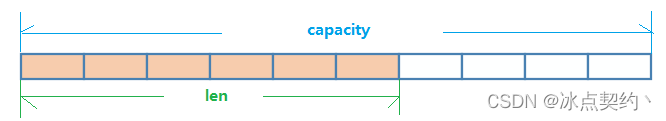

Redis-字符串类型

随机推荐

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

【社区人物志】专访马龙伟:轮子不好用,那就自己造!

PHP campus movie website system for computer graduation design

How does redis implement multiple zones?

使用npm发布自己开发的工具包笔记

Computer graduation design PHP college student human resources job recruitment network

同一个 SqlSession 中执行两条一模一样的SQL语句查询得到的 total 数量不一样

The intelligent material transmission system of the 6th National Games of the Blue Bridge Cup

Gbase 8C database upgrade error

Adapter-a technology of adaptive pre training continuous learning

Open source | Ctrip ticket BDD UI testing framework flybirds

Virtual machine network, networking settings, interconnection with host computer, network configuration

阿裏測開面試題

01.Go语言介绍

[ssrf-01] principle and utilization examples of server-side Request Forgery vulnerability

Text editing VIM operation, file upload

Competition question 2022-6-26

Card 4G industrial router charging pile intelligent cabinet private network video monitoring 4G to Ethernet to WiFi wired network speed test software and hardware customization

Global and Chinese markets hitting traffic doors 2022-2028: Research Report on technology, participants, trends, market size and share

SPI communication protocol