
How to upgrade in place in Kubernetes

2022-07-06 01:30:00 【a_ small_ cherry】

Introduction: In the term "in-place upgrade", "upgrade" is easy to understand: the old version of an application instance is replaced with the new version. But what does "in place" mean in a Kubernetes environment?


I. Concepts

In the term "in-place upgrade", the "upgrade" part is easy to understand: an application instance running the old version is replaced with the new version. But what does "in place" mean in a Kubernetes environment?

Let's first look at how the native Kubernetes workloads release an application. Suppose we need to deploy an application whose Pod contains two containers, foo and bar. The foo container was first deployed with image version v1, and we now need to upgrade it to the v2 image. How is that done?

- If the application is deployed with a Deployment, then during the upgrade the Deployment triggers the new-version ReplicaSet to create Pods and deletes the old-version Pods, as shown in the figure below:

During this upgrade, the original Pod object is deleted and a new Pod object is created. The new Pod is scheduled anew (possibly to another Node) and is assigned a new IP, and the foo and bar containers both have to pull their images and start again on that Node.

- If the application is deployed with a StatefulSet, then during the upgrade the StatefulSet first deletes the old Pod object and, once the deletion completes, creates a new Pod object with the same name, as shown in the figure below:

Note that although the old and new Pods are both named pod-0, they are two entirely different Pod objects (the uid has changed as well). The StatefulSet waits until the original pod-0 object has been completely deleted from the Kubernetes cluster before it submits the new pod-0 object. This new Pod also goes through scheduling, IP allocation, image pulling, and container startup again.

The so-called in-place upgrade mode avoids deleting and recreating the whole Pod object during an application upgrade. Instead, it upgrades the image version of one or more containers on top of the original Pod object.

During an in-place upgrade, we only update the image field of the foo container in the original Pod object, which triggers the foo container to upgrade to the new version. Neither the Pod object, the Node, nor the IP changes, and the bar container even keeps running while the foo container is being upgraded.

Summary: we call this way of upgrading, which updates only the version of one or more containers in a Pod without affecting the Pod object as a whole or the remaining containers, an in-place upgrade in Kubernetes.

II. Benefit analysis

So why introduce the concept and design of in-place upgrade into Kubernetes?

First, in-place upgrade greatly improves the efficiency of application release. According to incomplete statistics, in Alibaba's environment in-place upgrades release at least 80% faster than full-rebuild upgrades. This is easy to understand, because in-place upgrade brings the following optimizations to release efficiency:

1. Scheduling time is saved: the Pod's location and resources do not change;

2. Network allocation time is saved: the Pod keeps using its original IP;

3. The time for allocating and mounting remote disks is saved: the Pod keeps using its original PVs (which are already mounted on the Node);

4. Most of the image pull time is saved: the old image of the application already exists on the Node, so pulling the new version only requires downloading the few layers that changed.

Second, when we upgrade sidecar containers in a Pod (such as log collection or monitoring agents), we do not want to disturb the business container. In this scenario, however, a Deployment or StatefulSet upgrade rebuilds the whole Pod, which inevitably affects the business to some degree. The scope of a container-level in-place upgrade is tightly controlled: only the containers that need upgrading are rebuilt, while the remaining containers, as well as the network and volume mounts, are unaffected.

Finally, in-place upgrade also brings stability and determinism to the cluster. When a large number of applications in a Kubernetes cluster trigger Pod-rebuilding upgrades, the result can be large-scale Pod drift and repeated preemption and migration of low-priority task Pods on the Nodes. These large-scale Pod rebuilds themselves put considerable pressure on central components such as the apiserver, the scheduler, and network/disk allocation, and the latency of those components in turn slows the Pod rebuilds, forming a vicious circle. With in-place upgrade, the whole upgrade process involves only the controller's update operations on the Pod object and the kubelet's rebuilding of the corresponding containers.

III. Technical background

Inside Alibaba, the vast majority of e-commerce applications in the cloud-native environment are released through in-place upgrade, and the controllers that support in-place upgrade live in the OpenKruise open source project.

In other words, Alibaba's internal cloud-native applications are uniformly deployed and managed with the extended workloads in OpenKruise rather than with the native Deployment/StatefulSet.

So how does OpenKruise implement in-place upgrade? Before introducing the implementation principles, let's look at some native Kubernetes features that in-place upgrade depends on:

Background 1: the kubelet's version management of Pod containers

The kubelet on each Node computes a hash value for every container in Pod.spec.containers of all Pods on that machine and records it in the container it actually creates.

If we modify the image field of a container in a Pod, the kubelet detects that the container's hash has changed and no longer matches the hash of the container previously created on the machine. The kubelet then stops the old container and creates a new one from the container definition in the latest Pod spec.

This behavior is in fact the core principle behind in-place upgrade of a single Pod.
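As a rough illustration of the hash comparison described above, the following Python sketch recomputes a digest for each container spec and lists the containers whose digest no longer matches the recorded one. It is only a model: the kubelet is written in Go and has its own way of hashing the container spec, and the names `container_hash` and `containers_to_restart` are hypothetical.

```python
import hashlib
import json


def container_hash(container: dict) -> str:
    """Stable digest of a container spec (illustrative, not the kubelet's algorithm)."""
    canonical = json.dumps(container, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def containers_to_restart(pod_spec: dict, recorded_hashes: dict) -> list:
    """Names of containers whose current spec hash differs from the recorded one."""
    return [
        c["name"]
        for c in pod_spec["containers"]
        if recorded_hashes.get(c["name"]) != container_hash(c)
    ]


old_spec = {"containers": [{"name": "foo", "image": "foo:v1"},
                           {"name": "bar", "image": "bar:v1"}]}
# Hashes recorded when the containers were first created.
recorded = {c["name"]: container_hash(c) for c in old_spec["containers"]}

# Only the image of foo is changed.
new_spec = {"containers": [{"name": "foo", "image": "foo:v2"},
                           {"name": "bar", "image": "bar:v1"}]}

print(containers_to_restart(new_spec, recorded))  # prints ['foo']
```

Only foo is selected for a rebuild; bar keeps its recorded hash and is left running, which matches the behavior described for the foo/bar example earlier.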

Background 2: restrictions on Pod updates

In the native kube-apiserver, update requests for Pod objects go through strict validation logic:

    // validate updateable fields:
    // 1. spec.containers[*].image
    // 2. spec.initContainers[*].image
    // 3. spec.activeDeadlineSeconds

Simply put, for a Pod that has already been created, the only fields in the Pod spec that can be modified are the image fields in containers/initContainers and the activeDeadlineSeconds field. Updates to any other field in the Pod spec are rejected by kube-apiserver.
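The rule above can be modeled in a few lines of Python. This is a simplified sketch of the restriction as the article states it, not the actual kube-apiserver validation code (which is written in Go and may differ between versions); the function name `is_allowed_pod_spec_update` is hypothetical.

```python
import copy


def is_allowed_pod_spec_update(old_spec: dict, new_spec: dict) -> bool:
    """True if the two specs differ only in the mutable fields listed above."""

    def without_mutable_fields(spec: dict) -> dict:
        stripped = copy.deepcopy(spec)
        stripped.pop("activeDeadlineSeconds", None)
        for key in ("containers", "initContainers"):
            for container in stripped.get(key, []):
                container.pop("image", None)
        return stripped

    return without_mutable_fields(old_spec) == without_mutable_fields(new_spec)


old_spec = {"containers": [{"name": "foo", "image": "foo:v1",
                            "env": [{"name": "MODE", "value": "a"}]}]}

# Changing only the image is accepted.
image_change = copy.deepcopy(old_spec)
image_change["containers"][0]["image"] = "foo:v2"
print(is_allowed_pod_spec_update(old_spec, image_change))  # prints True

# Changing an environment variable is rejected.
env_change = copy.deepcopy(old_spec)
env_change["containers"][0]["env"][0]["value"] = "b"
print(is_allowed_pod_spec_update(old_spec, env_change))  # prints False
```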

Background 3: containerStatuses reporting

The kubelet reports containerStatuses in pod.status, reflecting the actual running state of every container in the Pod:

    apiVersion: v1
    kind: Pod
    spec:
      containers:
      - name: nginx
        image: nginx:latest
    status:
      containerStatuses:
      - name: nginx
        image: nginx:mainline
        imageID: docker-pullable://nginx@sha256:2f68b99bc0d6d25d0c56876b924ec20418544ff28e1fb89a4c27679a40da811b

In most cases, spec.containers[x].image and status.containerStatuses[x].image are identical.

But the situation shown above can also occur, where the image reported by the kubelet differs from the one in spec (spec says nginx:latest, but status reports nginx:mainline).

This is because the image reported by the kubelet is actually the image name of the container as obtained through the CRI interface. If several image names on the Node correspond to the same imageID, any one of them may be reported:

    $ docker images | grep nginx
    nginx    latest      2622e6cca7eb    2 days ago    132MB
    nginx    mainline    2622e6cca7eb    2 days ago    132MB

Therefore, a mismatch between the image fields in a Pod's spec and status does not mean that the image version of the container running on the host differs from what is expected.

Background 4: ReadinessGate controls whether a Pod is Ready

Before Kubernetes 1.12, whether a Pod was Ready was determined solely by the kubelet from the state of its containers: if all containers in the Pod were ready, the Pod was Ready.

In practice, however, higher-level operators or users often need to be able to control whether a Pod is Ready. Kubernetes 1.12 therefore provides the readinessGates feature for this scenario, as follows:

    apiVersion: v1
    kind: Pod
    spec:
      readinessGates:
      - conditionType: MyDemo
    status:
      conditions:
      - type: MyDemo
        status: "True"
      - type: ContainersReady
        status: "True"
      - type: Ready
        status: "True"

Currently, the kubelet requires two conditions before it considers a Pod Ready:

1. All containers in the Pod are Ready (which corresponds to the ContainersReady condition being True);

2. If one or more conditionTypes are defined in pod.spec.readinessGates, each of them must have a corresponding entry with status: "True" in pod.status.conditions.

Only when both conditions are met does the kubelet report the Ready condition as True.
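The two conditions can be expressed as a small predicate. The sketch below is a Python model of the decision as described here, operating on a Pod represented as a plain dictionary; `pod_is_ready` is a hypothetical helper, not part of any Kubernetes client library.

```python
def pod_is_ready(pod: dict) -> bool:
    """Model of the Ready decision: ContainersReady plus every readiness gate is True."""
    conditions = {
        c["type"]: c["status"]
        for c in pod.get("status", {}).get("conditions", [])
    }
    # Condition 1: all containers are ready.
    if conditions.get("ContainersReady") != "True":
        return False
    # Condition 2: every declared readiness gate has a matching "True" condition.
    gates = pod.get("spec", {}).get("readinessGates", [])
    return all(conditions.get(g["conditionType"]) == "True" for g in gates)


pod = {
    "spec": {"readinessGates": [{"conditionType": "MyDemo"}]},
    "status": {"conditions": [
        {"type": "MyDemo", "status": "True"},
        {"type": "ContainersReady", "status": "True"},
    ]},
}
print(pod_is_ready(pod))  # prints True

# Flipping the custom condition makes the Pod NotReady even though containers are ready.
pod["status"]["conditions"][0]["status"] = "False"
print(pod_is_ready(pod))  # prints False
```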

IV. Implementation principles

With the four pieces of background above in mind, let's analyze how OpenKruise implements in-place upgrade in Kubernetes.

1. How is a single Pod upgraded in place?

From "Background 1" we know that when a field in spec.containers[x] of an existing Pod is modified, the kubelet detects that the container's hash has changed, stops the corresponding old container, and uses the new container definition to pull the image and then create and start a new container.

From "Background 2" we know that the only modification currently allowed in spec.containers[x] of an existing Pod is to the image field.

This gives the first implementation principle: for an existing Pod object, we can, and can only, modify the spec.containers[x].image field to trigger the corresponding container in the Pod to upgrade to a new image.
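To make the first principle concrete, here is a minimal Python sketch that builds the body of such an update for the foo container from the earlier example. The helper name `image_patch` is hypothetical; the structure is a strategic merge patch in which the container is identified by its name.

```python
import json


def image_patch(container_name: str, new_image: str) -> dict:
    """Patch body that changes only spec.containers[name].image."""
    return {
        "spec": {
            "containers": [
                {"name": container_name, "image": new_image},
            ]
        }
    }


body = image_patch("foo", "foo:v2")
print(json.dumps(body))
```

One way to try this by hand, assuming a Pod named pod-0, is to pass the printed JSON to `kubectl patch pod pod-0 -p '<json>'`. A controller such as the one in OpenKruise would send an equivalent update through the API instead.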

2. How do we determine that a Pod's in-place upgrade succeeded?

The next question: after we change the spec.containers[x].image field in a Pod, how do we know that the kubelet has successfully rebuilt the container?

From "Background 3" we know that comparing the image fields in spec and status is unreliable, because status may well report another image name that exists on the Node (with the same imageID).

This gives the second implementation principle: a comparatively reliable way to judge whether a Pod's in-place upgrade succeeded is to record status.containerStatuses[x].imageID before the upgrade. After updating the image in spec, if we observe that the Pod's status.containerStatuses[x].imageID has changed, we consider that the in-place upgrade has rebuilt the container.

This approach does place one requirement on the images used for in-place upgrade: you cannot upgrade in place to an image whose name (tag) differs but which actually corresponds to the same imageID, otherwise the upgrade may never be judged successful (because the imageID in status does not change).

Of course, this can be optimized further in the future. OpenKruise plans to open-source its image pre-warming capability, which deploys a NodeImage Pod on every Node through a DaemonSet. Through NodeImage we can learn the imageID that corresponds to the image in the pod spec, and comparing it with the imageID in pod status lets us judge accurately whether the in-place upgrade succeeded.
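The imageID comparison from the second principle might look like the following Python sketch. It works on Pods represented as dictionaries; the helper names `record_image_ids` and `in_place_upgrade_done` are hypothetical, the digests are shortened placeholders, and this is not OpenKruise's actual code.

```python
def record_image_ids(pod: dict) -> dict:
    """Map container name -> imageID as currently reported in pod.status."""
    return {
        cs["name"]: cs.get("imageID", "")
        for cs in pod.get("status", {}).get("containerStatuses", [])
    }


def in_place_upgrade_done(recorded: dict, pod: dict, upgraded: list) -> bool:
    """True once every upgraded container reports an imageID different from the recorded one."""
    current = record_image_ids(pod)
    return all(
        current.get(name, "") != "" and current[name] != recorded.get(name)
        for name in upgraded
    )


before = {"status": {"containerStatuses": [
    {"name": "foo", "imageID": "docker-pullable://foo@sha256:aaa"},
    {"name": "bar", "imageID": "docker-pullable://bar@sha256:bbb"},
]}}
recorded = record_image_ids(before)  # taken before spec.containers[x].image is changed

after = {"status": {"containerStatuses": [
    {"name": "foo", "imageID": "docker-pullable://foo@sha256:ccc"},
    {"name": "bar", "imageID": "docker-pullable://bar@sha256:bbb"},
]}}

print(in_place_upgrade_done(recorded, before, ["foo"]))  # prints False
print(in_place_upgrade_done(recorded, after, ["foo"]))   # prints True
```

The sketch also shows the caveat mentioned above: if the new tag resolved to the same imageID, the function would keep returning False.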

3. How do we keep traffic lossless during an in-place upgrade?

In Kubernetes, whether a Pod is Ready indicates whether it can serve traffic. Traffic entry points such as Service therefore decide whether to add a Pod to the endpoints by checking whether the Pod is Ready.

From "Background 4" we know that since Kubernetes 1.12, components such as operators/controllers can also control whether a Pod is available by setting readinessGates and updating the status of a custom type in pod.status.conditions.

This gives the third implementation principle: define a conditionType named InPlaceUpdateReady in pod.spec.readinessGates.

When upgrading in place:

1. First set the InPlaceUpdateReady condition in pod.status.conditions to "False". This triggers the kubelet to report the Pod as NotReady, so that traffic components (such as the endpoint controller) remove the Pod from the service endpoints;

2. Then update the image in the pod spec to trigger the in-place upgrade.

After the in-place upgrade finishes, set the InPlaceUpdateReady condition back to "True" so that the Pod returns to the Ready state.

In addition, between the two steps of an in-place upgrade, after the first step marks the Pod NotReady, the traffic components watch asynchronously and may take some time to observe the change and remove the endpoint. We therefore also provide graceful in-place upgrade: gracePeriodSeconds configures a quiet period between marking the Pod NotReady and actually updating the image to trigger the in-place upgrade.
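The ordering of these steps, including the quiet period, can be sketched as follows. `RecordingPodClient` is a hypothetical in-memory stand-in that only records calls, so the example runs without a cluster; a real controller would issue API requests and watch the Pod status instead.

```python
import time


class RecordingPodClient:
    """Hypothetical stand-in for a Kubernetes API client; it only records calls."""

    def __init__(self):
        self.calls = []

    def set_condition(self, pod_name, condition_type, status):
        self.calls.append(("set_condition", pod_name, condition_type, status))

    def set_image(self, pod_name, container, image):
        self.calls.append(("set_image", pod_name, container, image))

    def wait_for_rebuild(self, pod_name, container):
        # A real controller would watch status.containerStatuses here.
        self.calls.append(("wait_for_rebuild", pod_name, container))


def in_place_update(client, pod_name, container, image,
                    grace_period_seconds=0, sleep=time.sleep):
    # Step 1: mark the Pod NotReady so traffic components remove its endpoint.
    client.set_condition(pod_name, "InPlaceUpdateReady", "False")
    # Quiet period: give asynchronous watchers time to observe the change.
    sleep(grace_period_seconds)
    # Step 2: change the image, which makes the kubelet rebuild the container.
    client.set_image(pod_name, container, image)
    client.wait_for_rebuild(pod_name, container)
    # Afterwards: allow the Pod to become Ready again.
    client.set_condition(pod_name, "InPlaceUpdateReady", "True")


client = RecordingPodClient()
in_place_update(client, "pod-0", "foo", "foo:v2", grace_period_seconds=0)
for call in client.calls:
    print(call)
```

Passing `sleep` as a parameter is a design choice made for this sketch so that the wait can be replaced in tests; it is not something the article prescribes.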

4. Combining with release strategies

Like Pod-rebuilding upgrades, in-place upgrade can be combined with various release strategies:

- partition: if partition is configured for canary release, only replicas-partition Pods are upgraded in place;

- maxUnavailable: if maxUnavailable is configured, in-place upgrades are performed only within the allowed number of unavailable Pods;

- maxSurge: if maxSurge is configured for elasticity, maxSurge additional Pods are scaled out first, and the existing Pods are then still upgraded in place;

- priority/scatter: if a release priority or scatter strategy is configured, Pods are upgraded in place in the order that strategy dictates.
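As a small worked example of how partition and maxUnavailable bound a release, the Python sketch below computes how many more Pods may be upgraded in place right now. The way the two limits are combined here (taking the minimum) is an assumption made for illustration, and `pods_to_update_now` is a hypothetical name, not an OpenKruise function.

```python
def pods_to_update_now(replicas: int, partition: int, updated: int,
                       unavailable: int, max_unavailable: int) -> int:
    """How many more Pods may start an in-place upgrade in this round."""
    # partition keeps that many Pods on the old version.
    target = max(replicas - partition, 0)
    remaining = max(target - updated, 0)
    # maxUnavailable caps how many Pods may be out of service at once.
    budget = max(max_unavailable - unavailable, 0)
    return min(remaining, budget)


# 10 replicas, partition=7: at most 3 Pods get the new version,
# and with maxUnavailable=2 only 2 of them are upgraded at a time.
print(pods_to_update_now(replicas=10, partition=7, updated=0,
                         unavailable=0, max_unavailable=2))  # prints 2
print(pods_to_update_now(replicas=10, partition=7, updated=2,
                         unavailable=0, max_unavailable=2))  # prints 1
```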

V. Summary

As described above, OpenKruise combines native Kubernetes features such as the kubelet's container version management and readinessGates to implement in-place upgrade for Pods.

In-place upgrade also brings substantial gains in release efficiency and stability. Notably, these gains become more pronounced as clusters and applications grow in scale. It is precisely this in-place upgrade capability that, over the past two years, has helped Alibaba smoothly migrate its ultra-large-scale application containers to a Kubernetes-based cloud-native environment, something the native Deployment/StatefulSet could not support in an environment of this kind.

Source: "How to upgrade in place in Kubernetes", Alibaba Cloud developer community

边栏推荐

- MySQL learning notes 2

- PHP error what is an error?

- Force buckle 9 palindromes

- Leetcode study - day 35

- 500 lines of code to understand the principle of mecached cache client driver

- 【Flask】官方教程(Tutorial)-part3:blog蓝图、项目可安装化

- 记一个 @nestjs/typeorm^8.1.4 版本不能获取.env选项问题

- Leetcode 剑指 Offer 59 - II. 队列的最大值

- MUX VLAN configuration

- Huawei converged VLAN principle and configuration

猜你喜欢

![[solved] how to generate a beautiful static document description page](/img/c1/6ad935c1906208d81facb16390448e.png)

[solved] how to generate a beautiful static document description page

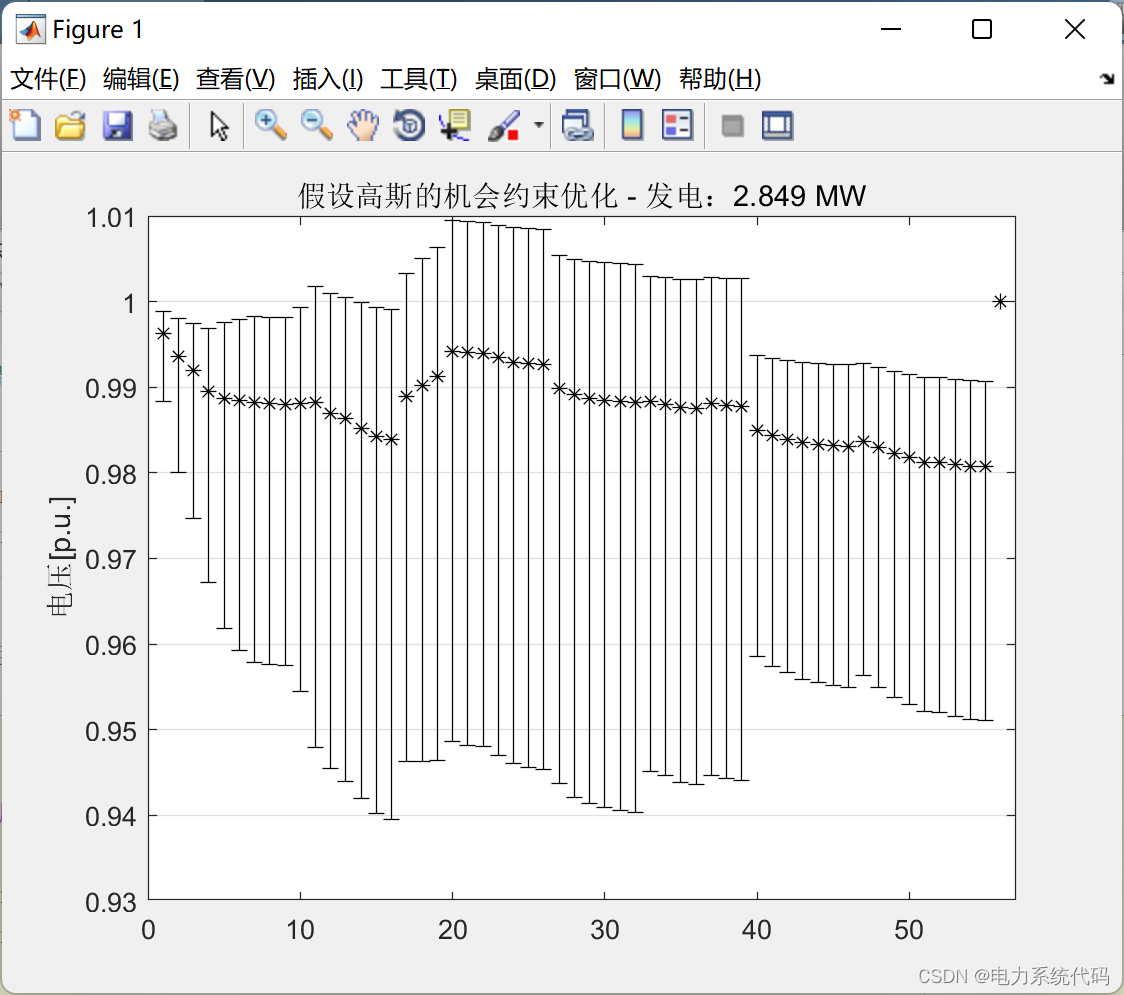

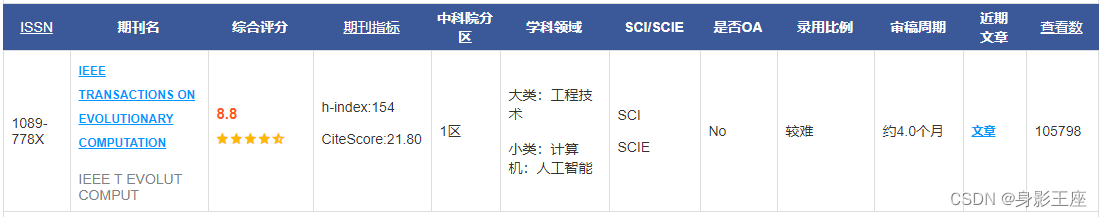

MATLB | real time opportunity constrained decision making and its application in power system

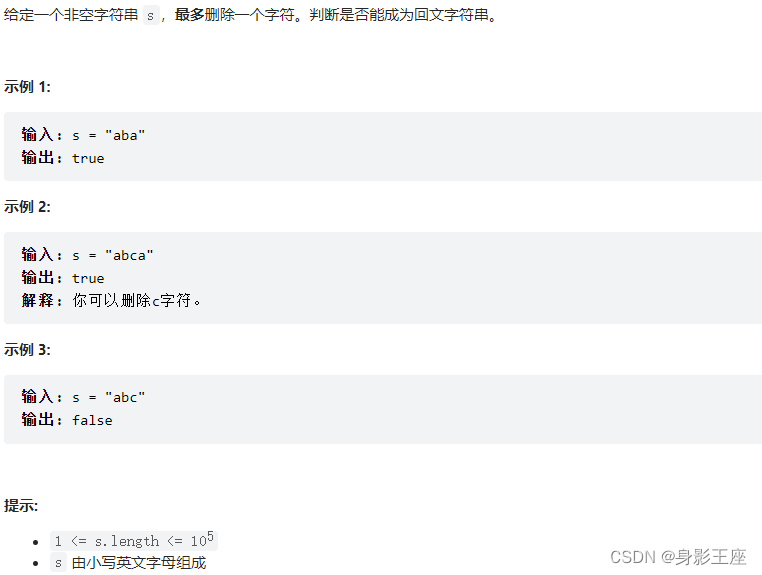

leetcode刷题_验证回文字符串 Ⅱ

MySQL learning notes 2

A Cooperative Approach to Particle Swarm Optimization

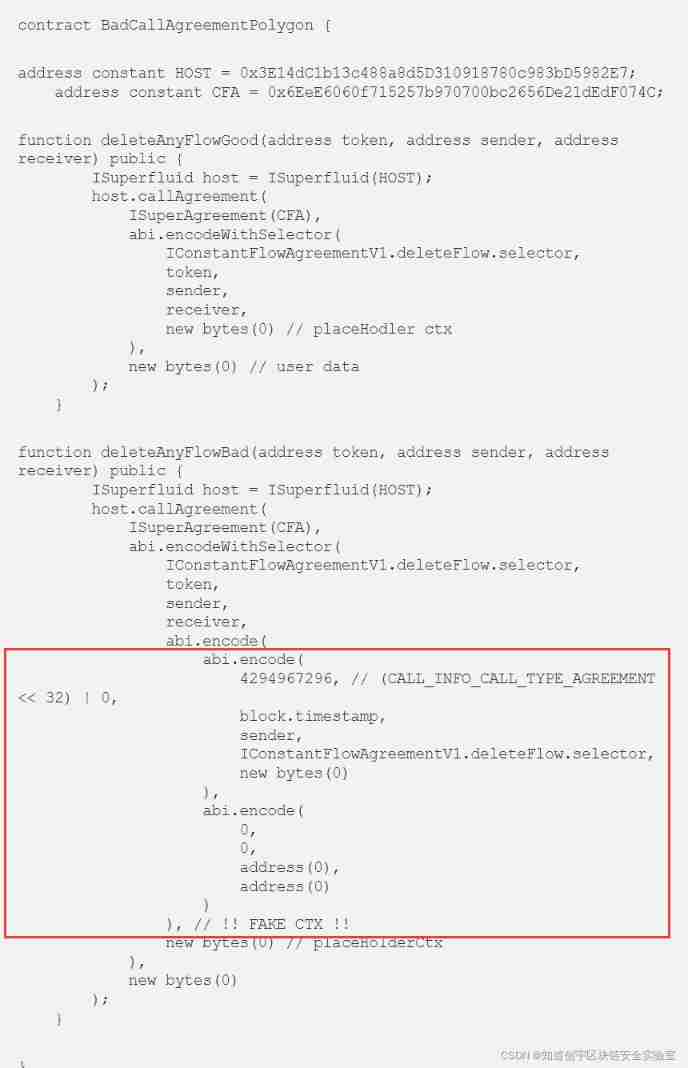

Superfluid_ HQ hacked analysis

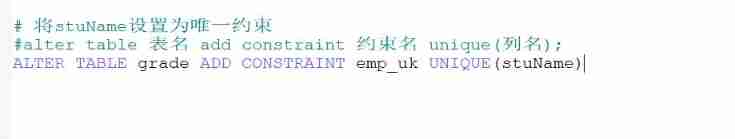

Basic operations of databases and tables ----- unique constraints

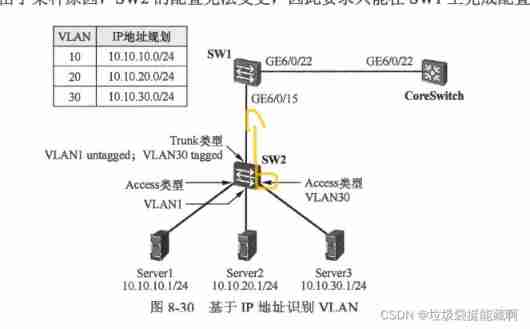

Huawei Hrbrid interface and VLAN division based on IP

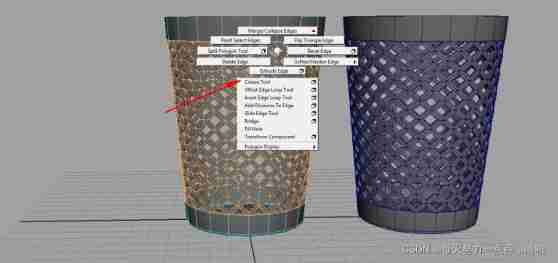

Maya hollowed out modeling

Electrical data | IEEE118 (including wind and solar energy)

随机推荐

黄金价格走势k线图如何看?

[flask] response, session and message flashing

The basic usage of JMeter BeanShell. The following syntax can only be used in BeanShell

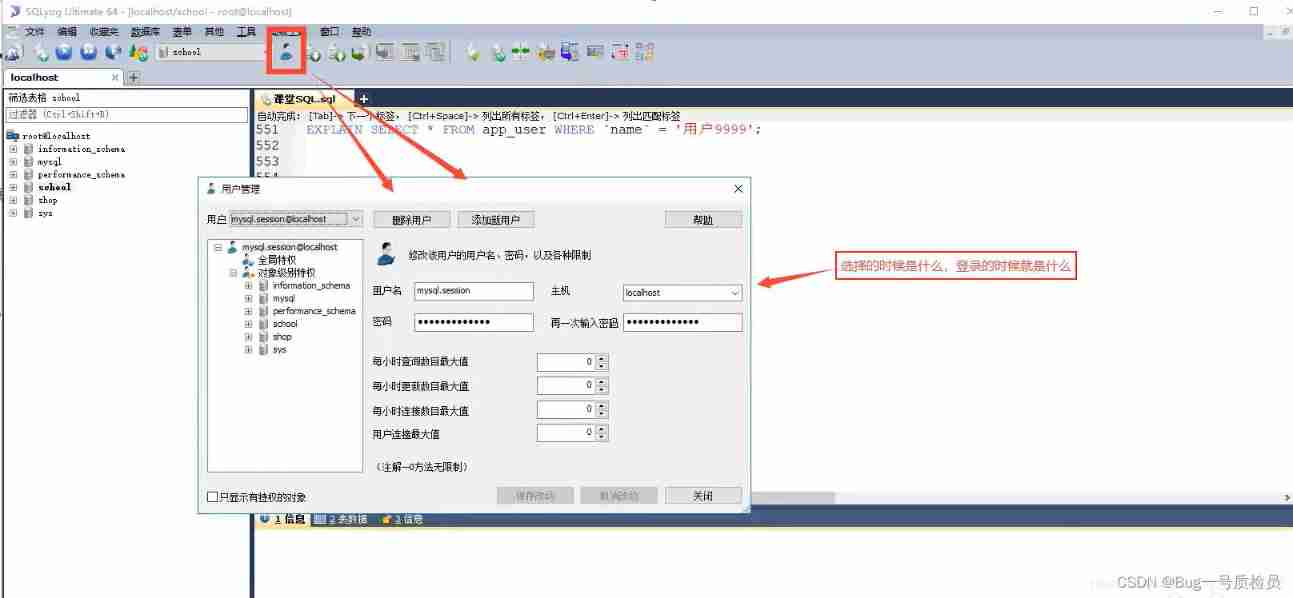

[the most complete in the whole network] |mysql explain full interpretation

Opinions on softmax function

Test de vulnérabilité de téléchargement de fichiers basé sur dvwa

How does the crystal oscillator vibrate?

You are using pip version 21.1.1; however, version 22.0.3 is available. You should consider upgradin

关于softmax函数的见解

A Cooperative Approach to Particle Swarm Optimization

VMware Tools installation error: unable to automatically install vsock driver

Development trend of Ali Taobao fine sorting model

MySQL learning notes 2

VMware Tools安装报错:无法自动安装VSock驱动程序

Huawei Hrbrid interface and VLAN division based on IP

internship:项目代码所涉及陌生注解及其作用

Unity | 实现面部驱动的两种方式

Yii console method call, Yii console scheduled task

一圖看懂!為什麼學校教了你Coding但還是不會的原因...

Unity | two ways to realize facial drive