当前位置:网站首页>The whole process of knowledge map construction

The whole process of knowledge map construction

2022-07-07 03:00:00 【AI Zeng Xiaojian】

One 、 An introduction to the knowledge map

Knowledge map , yes Structured semantic knowledge base , Used to quickly describe The physical world Medium Concept And its interrelation , Through the knowledge map, we can transform Web Information on 、 Data and Link relationships are aggregated into knowledge , Make information resources easier to calculate 、 Understand and evaluate , And can realize the rapid response and reasoning of knowledge .

1.1 Widely used in various fields

At present, knowledge atlas has been widely used in the industrial field , Such as in the search field Google Search for 、 Baidu search , The leading UK economic map in the social field , In the field of enterprise information Tianyan checks the enterprise atlas , In the field of e-commerce Taobao commodity map ,O2O In the field of Meituan knowledge brain , In the medical field Ding Xiangyuan knowledge map , And the knowledge map of industrial manufacturing .

In the early stage of the development of Knowledge Mapping Technology , Many enterprises and scientific research institutions will adopt a top-down approach to build a basic knowledge base , Such as Freebase. With Automatic knowledge extraction With the continuous maturity of processing technology , The current knowledge map mostly adopts Bottom up The way to build , Such as Google Of Knowledge Vault And Microsoft. Satori The knowledge base .

1.2 Build technology classification

The construction technology of knowledge map mainly includes top-down and bottom-up .

- Top down build : With the help of encyclopedia websites Structured data sources , Extract from high-quality data noumenon And mode information , Add to the knowledge base .

- Build from the bottom up : By means of certain technical means , Extract resource patterns from publicly collected data , Select the information with high confidence , Add to the knowledge base .

1.3 “ Entity - Relationship - Entity ” A triple

The following figure is a typical sample diagram of knowledge map . You can see ,“ Manual ” There are a lot of nodes in , If there is a relationship between two nodes , They will Connected by an undirected edge together , This node is called Entity (Entity), The edge between the nodes , We call it Relationship (Relationship).

The basic unit of knowledge map , Namely “ Entity (Entity)- Relationship (Relationship)- Entity (Entity)” The triples formed , This is also the core of the knowledge map .

Two 、 Data types and storage methods

Generally speaking, there are three types of original data of knowledge map ( It's also the three kinds of raw data on the Internet ):

- Structured data (Structed Data), Such as : relational database 、 Linked data

- Semi-structured data (Semi-Structured Data), Such as :XML、JSON、 Encyclopedias

- Unstructured data (Unstructured Data), Such as : picture 、 Audio 、 video

Typical examples of semi-structured data are as follows :

How to store the above three types of data ?

There are generally two options : Can pass RDF( Resource Description Framework ) Such a standard storage format for storage , More commonly used are Jena etc. .

<RDF>

<Description about="https://www.w3.org/RDF/">

<author>HanXinzi</author>

<homepage> http://www.showmeai.tech </homepage>

</Description>

</RDF>Another way is to use Graph database To store , Commonly used Neo4j etc. .

So far , It seems that the knowledge map is mainly a pile A triple , Is it OK to use relational database to store ?

Yes , Technically , Using relational database to store knowledge map ( Especially the knowledge map with simple structure ), No problem at all . But once the knowledge map becomes complex , In the traditional way 「 Relational data storage 」, The query efficiency will be significantly lower than 「 Graph database 」. In some cases, it involves 2,3 Degree related query scenario , Graph database can improve query efficiency thousands or even millions of times .

And graph based storage will be very flexible in design , Generally, only local changes are needed . When your scene data scale is large , It is recommended to directly use the graph database for storage .

3、 ... and 、 The structure of knowledge map

The structure of knowledge map can be divided into :

- Logical architecture

- Technology Architecture

3.1 Logical architecture

Logically , We usually divide the knowledge map into two levels : Data layer and pattern layer .

- Pattern layer : Above the data layer , yes The core of knowledge map , Store refined knowledge , This layer is usually managed through ontology library ( Ontology library can be understood as... In object-oriented “ class ” Such a concept , Ontology database stores the classes of knowledge map ).

- The data layer : Store real data .

Take a look at this example :

Pattern layer : Entity - Relationship - Entity , Entity - attribute - Sex value

The data layer : Wu Jing - Wife - Xie Nan , Wu Jing - The director - Warwolf Ⅱ

3.2 Technology Architecture

The overall structure of the knowledge map is shown in the figure , The part in the dotted box is the construction process of knowledge map , At the same time, it is also the process of updating knowledge map . take it easy , Let's follow this picture to sort out our thoughts .

- First , We have a lot of data , The data may be structured 、 Unstructured and semi-structured ;

- then , We build a knowledge map based on these data , This step is mainly through a series of automatic or semi-automatic technical means , To extract knowledge elements from the original data , That is, a bunch of entity relationships , And store it in the pattern layer and data layer of our knowledge base .

Four 、 Build technology

I've talked about the previous content , There are top-down and bottom-up construction methods of knowledge map , The construction techniques mentioned here are mainly Bottom up Building technology for .

As mentioned earlier , Building a knowledge map is an iterative process , According to the logic of knowledge acquisition , Each iteration consists of three phases :

- Information extraction : Extract entities from various types of data sources 、 Properties and relationships between entities , On this basis, ontology knowledge expression is formed .

- Knowledge fusion : After acquiring new knowledge , It needs to be integrated , To eliminate contradictions and ambiguities , For example, some entities may have multiple expressions , A certain appellation may correspond to many different entities, etc .

- Knowledge processing : For new knowledge that has been integrated , After quality assessment ( Some of them need to be screened manually ), In order to add the qualified part to the knowledge base , To ensure the quality of the knowledge base .

Let's introduce each step in turn .

4.1 knowledge

knowledge (infromation extraction) It's the first... Of the construction of knowledge map 1 Step , The key problem is : How to automatically extract information from heterogeneous data sources to get candidate instruction units ?

Information extraction is an automatic extraction of entities from semi-structured and unstructured data 、 The technology of structured information such as relationships and entity attributes . The key technologies involved include : Entity extraction 、 Relationship extraction and Attribute extraction .

1) Entity extraction

Entity extraction , Also known as named entity recognition (named entity recognition,NER), It refers to the automatic recognition of named entities from the text dataset .

In the figure , Through entity extraction, we can extract four entities :“ Africa ”、“ The Chinese navy ”、“ Leng Feng ”、“ Warwolf ”.

Study history :

◉ Entity extraction from a single domain , Step by step to open domain (Open Domain) Entity extraction .

2) Relationship extraction

After entity extraction of text corpus , What we get is a series of discrete named entities . In order to get semantic information , We also need to extract the relationship between entities from the relevant corpus , Connect entities through relationships , To form a network of knowledge structure . This is what relationship extraction needs to do , As shown in the figure below .

Study history :

◉ Artificially construct grammar and semantic rules ( Pattern matching ).

◉ Statistical machine learning methods .

◉ Supervised learning method based on eigenvector or kernel function .

◉ The focus of research has shifted to semi supervised and unsupervised .

◉ We started to study information extraction methods for open domain .

◉ It combines the information extraction method for open domain with the traditional method for closed domain .

3) Attribute extraction

The goal of attribute extraction is to collect attribute information of specific entities from different information sources , For example, for a public figure , You can get its nickname from the public information on the Internet 、 Birthday 、 nationality 、 Education background, etc .

Study history :

◉ Regarding the attribute of entity as a nominal relationship between entity and attribute value , Transform attribute extraction task into relation extraction task .

◉ Based on rules and Heuristics , Extract structured data .

◉ Based on the semi-structured data of encyclopedia websites , Training corpus is generated by automatic extraction , Used to train entity attribute annotation model , Then it is applied to the entity attribute extraction of unstructured data .

◉ Data mining method is used to mine the relationship pattern between entity attributes and attribute values directly from the text , According to this, we can locate the attribute name and value in the text .

4.2 Knowledge fusion

Through information extraction , We get the entities from the original unstructured and semi-structured data 、 Relationship and attribute information of entity . If we compare the next process to a jigsaw puzzle , So these are pieces of puzzle , It's disorganized, and even fragments from other puzzles 、 It's a piece of error that interferes with our puzzle .

in other words , Puzzle pieces ( Information ) The relationship between them is flat , Lack of hierarchy and logicality ; Puzzle ( knowledge ) There are also a lot of jumbled and wrong pieces of jigsaw ( Information ). So how to solve this problem , It's what we need to do in the process of knowledge integration .

Knowledge fusion includes 2 Part content : Entity link 、 Knowledge merge .

1) Entity link

Entity link (entity linking) It refers to the entity object extracted from the text , The operation of linking it to the corresponding correct entity object in the knowledge base . Its basic idea is first according to the given entity reference , Select a set of candidate entity objects from the knowledge base , Then the reference item is linked to the correct entity object by similarity calculation .

Study history :

◉ Only focus on how to link the entities extracted from the text to the knowledge base , Ignore the semantic relationship between entities in the same document ;

◉ Start to pay attention to the co-occurrence relationship of using entities , Link multiple entities to the knowledge base at the same time . That is, integration entity link (collective entity linking).

The process of entity linking :

- Entity references are extracted from the text .

- Conduct Entity disambiguation and Co refers to digestion , Determine whether the entity with the same name in the knowledge base represents different meanings and whether there are other named entities with the same meaning in the knowledge base .

- After confirming the corresponding correct entity object in the knowledge base , Connect the entity reference necklace to the corresponding entity in the knowledge base .

◉ Entity disambiguation : It is a technology specially used to solve the ambiguity problem of entities with the same name , By entity disambiguation , According to the current context , Establish entity links accurately , The main method of entity disambiguation is clustering . In fact, it can also be seen as the problem of context based classification , Similar to part of speech disambiguation and word sense disambiguation .

◉ Co refers to digestion : It is mainly used to solve the problem that multiple references correspond to the same entity object . In a conversation , Multiple references may refer to the same entity object . Using the common finger digestion technology , You can relate these references to ( Merge ) To the correct entity object , Because this problem has special importance in the fields of information retrieval and natural language processing , Attracted a lot of research efforts . There are other names for coreference resolution , Such as object alignment 、 Entity matching is synonymous with entity .

2) Knowledge fusion

In the previous entity link , We have linked the entity to the corresponding correct entity object in the knowledge base , But it should be noted that , Entity link refers to the data extracted by information extraction from semi-structured data and unstructured data .

Well, in addition to semi-structured data and unstructured data , We also have a more convenient data source ——— Structured data , Such as external knowledge base and relational database . For the processing of this part of structured data , This is the content of our knowledge fusion .

Generally speaking, knowledge fusion can be divided into two types : Merge external knowledge base , It mainly deals with the conflict between data layer and pattern layer ; Merge relational databases , Yes RDB2RDF Other methods .

4.3 Knowledge processing

After a series of steps just now , We have finally reached the stage of knowledge processing ! in front , We have extracted information , The entity is extracted from the original corpus 、 Knowledge elements such as relationship and attribute , And through knowledge fusion , Eliminate the ambiguity between the entity referent and the entity object , Get a basic set of facts to express .

But fact itself is not knowledge . To finally get structured , Network knowledge system , It also needs to go through the process of knowledge processing . Knowledge processing mainly includes 3 Aspect content : Ontology extraction 、 Knowledge reasoning and quality assessment .

1) Ontology extraction

noumenon (ontology) It refers to the concept set of workers 、 Conceptual framework , Such as “ people ”、“ things ”、“ matter ” etc. . Ontologies can be built manually by means of manual editing ( With the help of ontology editing software ), You can also build ontologies in a data-driven, automated way . Because of the huge workload of manual mode , And it's hard to find qualified experts , So the current mainstream global ontology library products , They all start from some existing ontology libraries that are oriented to specific fields , It is gradually expanded by using automatic construction technology .

The automated ontology building process consists of three phases : Similarity calculation of entity juxtaposition relationship → Entity relation extraction → The generation of ontology .

As shown in the figure , When the map of knowledge has just been obtained “ Warwolf Ⅱ”、“ Wandering the earth ”、“ Beijing cultural ” When these three entities , It may be thought that there is no difference among the three . But when it calculates the similarity between three entities , You will find ,“ Warwolf Ⅱ” and “ Wandering the earth ” May be more similar , And “ Beijing cultural ” The difference is bigger .

- The first step is to come down , In fact, there is no concept of upper and lower levels in knowledge map . It still doesn't know ,“ Wandering the earth ” and “ Beijing cultural ” Not belonging to a type , Can't compare .

- So the second step 『 Entity relation extraction 』 Need to do such a job , So as to generate the ontology of the third step .

- When the three steps are over , This map of knowledge may understand ,“ Warwolf 2 And wandering the earth , It is a subdivision entity under the entity of film . They are not the same as Beijing culture ”.

2) Knowledge reasoning

After we have completed the ontology building step , The rudiment of a knowledge map has been built . But maybe at this time , Most of the relationships between knowledge maps are incomplete , The missing value is very serious , So at this point , We can use knowledge reasoning technology , To complete further knowledge discovery .

Of course, the object of knowledge reasoning is not limited to the relationship between entities , It can also be the attribute value of an entity , The concept level relation of ontology .

- Infer attribute values : The birthday attribute of an entity is known , The age attribute of the entity can be obtained by reasoning ;

- The concept of reasoning : It is known that ( The tiger , Families, , Felidae ) and ( Felidae , Objective , Carnivores ) Can be launched ( The tiger , Objective , Carnivores )

The algorithm of this block can be divided into 3 Categories: : Relational reasoning technology based on knowledge expression ; Schematic diagram of relationship reasoning technology based on probability graph model ; Schematic diagram of relationship reasoning technology based on deep learning .

3) Quality assessment

Quality assessment is also an important part of knowledge base construction technology , The significance of this part lies in : The credibility of knowledge can be quantified , The quality of knowledge base is guaranteed by discarding knowledge with low confidence .

4.4 Knowledge update

Logically speaking , The update of knowledge base includes the update of concept layer and data layer .

- Update of concept layer : After adding new data, a new concept is obtained , New concepts need to be automatically added to the concept layer of the knowledge base .

- Data layer update : It is mainly about adding or updating entities 、 Relationship 、 Property value , To update the data layer, we need to consider the reliability of the data source 、 Data consistency ( Whether there are contradictions or miscellaneous problems ) Wait for reliable data sources , And choose the facts and attributes that appear frequently in each data source to join the knowledge base .

There are two ways to update the content of knowledge map :

- Comprehensive update : It refers to the input of all the updated data , Build a knowledge map from scratch . This method is relatively simple , But resource consumption is high , And it takes a lot of human resources to maintain the system ;

- Incremental updating : Take the newly added data as input , Add new knowledge to the existing knowledge map . This way, the consumption of resources is small , But a lot of human intervention is still needed ( Define rules, etc ), So it's very difficult to implement .

The construction of knowledge map is over !

5、 ... and 、 Relevant code implementation reference

obtain 『 natural language processing 』 Industry solutions

official account ShowMeAI research center Reply key 『 natural language processing 』, obtain ShowMeAI Organized Big factory solutions —— Including Tencent 、 Iqiyi 、 Meituan 、 millet 、 Baidu 、 TaoBao 、 Gaode and other project codes 、 Data sets 、 Paper collection and other packaged materials .

Relevant code implementation reference

ShowMeAI The technical experts and partners in the community have also implemented the typical algorithm of knowledge map . Yes 『 Knowledge map construction and practice 』 If you are interested in details , Please go to our GitHub project https://github.com/ShowMeAI-Hub View the implementation code . thank AI Institute of algorithms All technical experts and partners involved in this project , Recommend official account . The collation of data sets and code takes a lot of effort , Welcome to PR and Star!

6、 ... and 、 reference

- 1 Liu Qiao , Li Yang , Duan Hong , etc. . Overview of knowledge map construction technology J. Computer research and development , 2016, 53(3):582-600.

- 2 Strange ant . CSDN. Knowledge map technology skills .

- 3 Ehrlinger L, Wöß W. Towards a Definition of Knowledge GraphsC// Joint Proceedings of the Posters and Demos Track of,

International Conference on Semantic Systems - Semantics2016 and,

International Workshop on Semantic Change & Evolving Semantics. 2016.

- 4 Das R, Neelakantan A, Belanger D, et al. Chains of Reasoning over Entities, Relations, and Text using Recurrent Neural NetworksJ.

边栏推荐

- Analysis of USB network card sending and receiving data

- Redis入门完整教程:客户端常见异常

- 慧通编程入门课程 - 2A闯关

- Metaforce force meta universe fossage 2.0 smart contract system development (source code deployment)

- Code line breaking problem of untiy text box

- What are the applications and benefits of MES management system

- Redis入门完整教程:客户端案例分析

- wzoi 1~200

- Planning and design of double click hot standby layer 2 network based on ENSP firewall

- oracle连接池长时间不使用连接失效问题

猜你喜欢

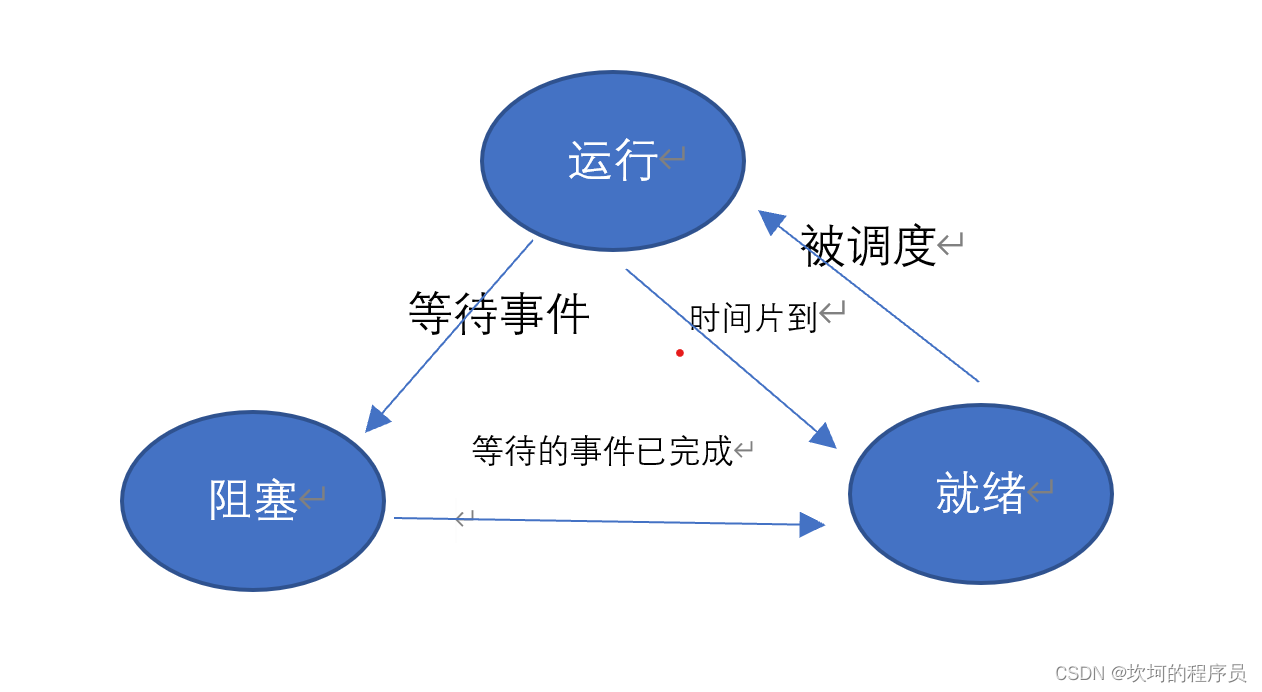

Fundamentals of process management

Niuke programming problem -- double pointer of 101 must be brushed

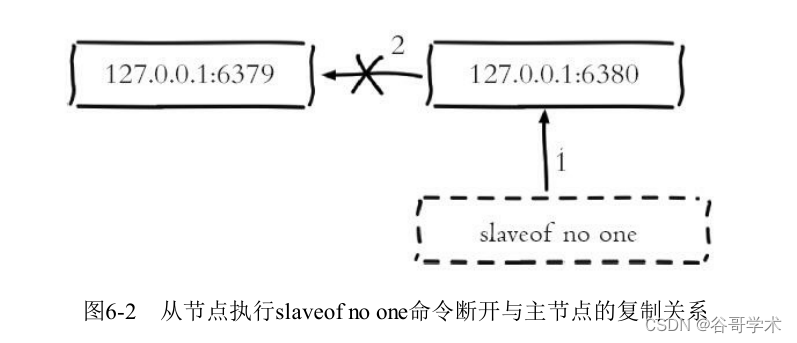

Redis入门完整教程:复制配置

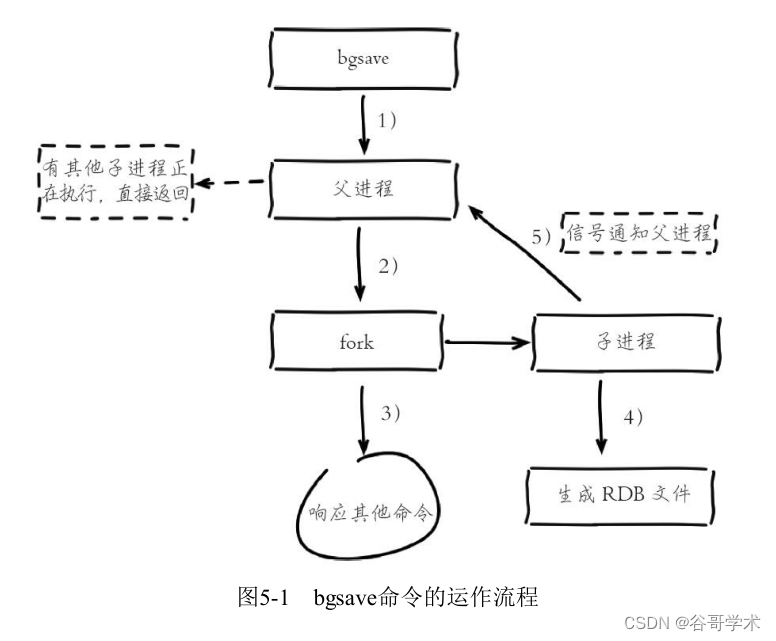

A complete tutorial for getting started with redis: RDB persistence

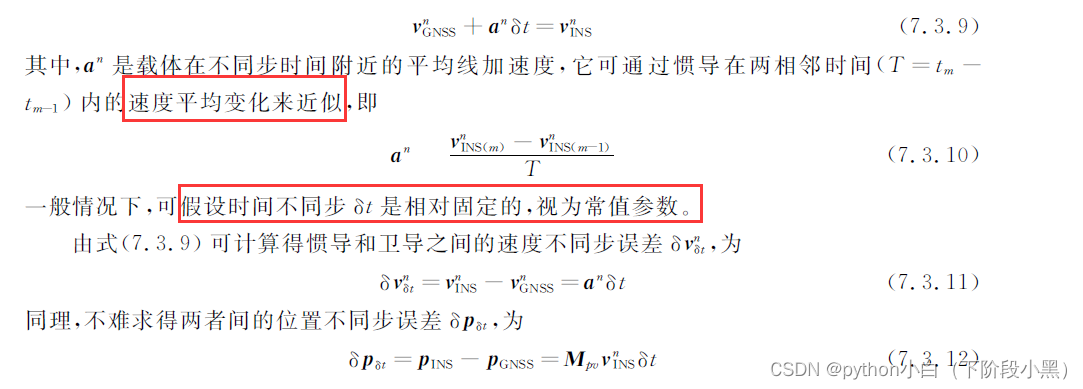

Detailed explanation of 19 dimensional integrated navigation module sinsgps in psins (time synchronization part)

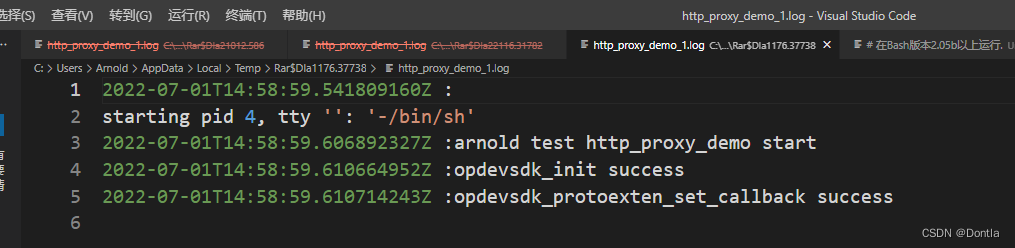

KYSL 海康摄像头 8247 h9 isapi测试

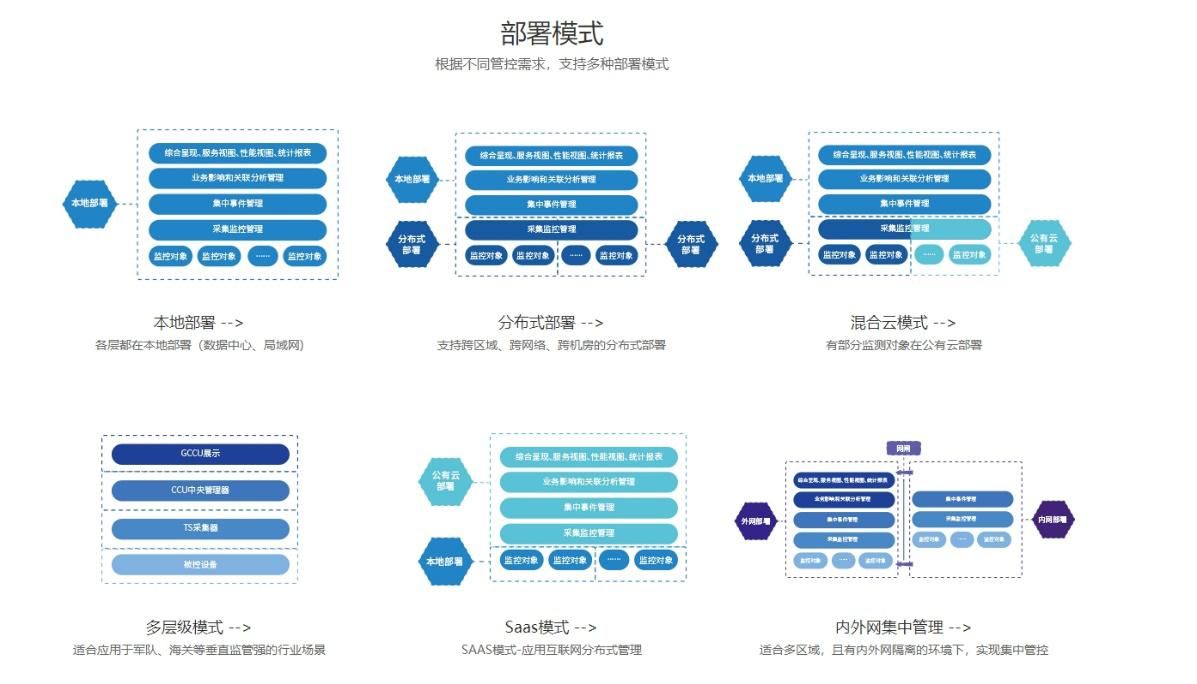

What are the characteristics of the operation and maintenance management system

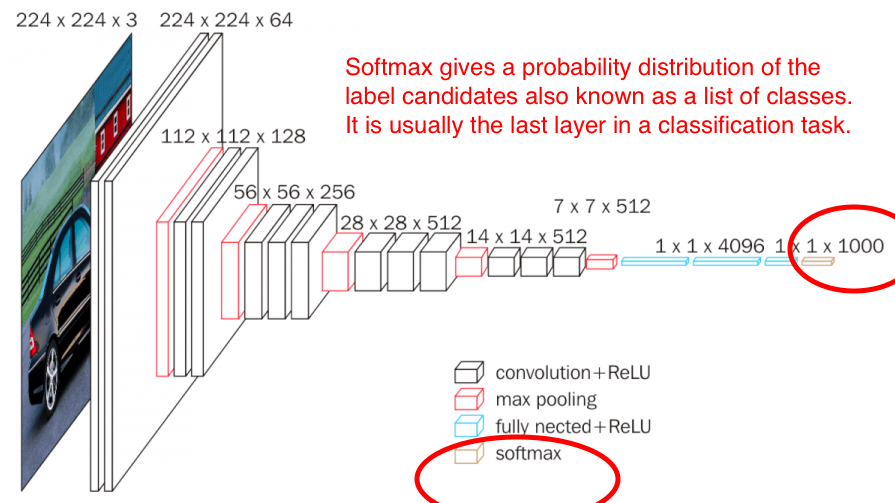

Classify the features of pictures with full connection +softmax

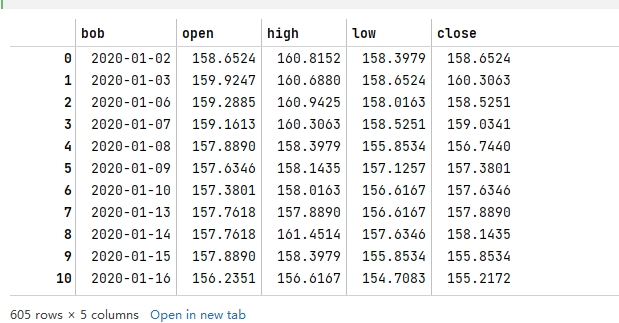

掘金量化:通过history方法获取数据,和新浪财经,雪球同用等比复权因子。不同于同花顺

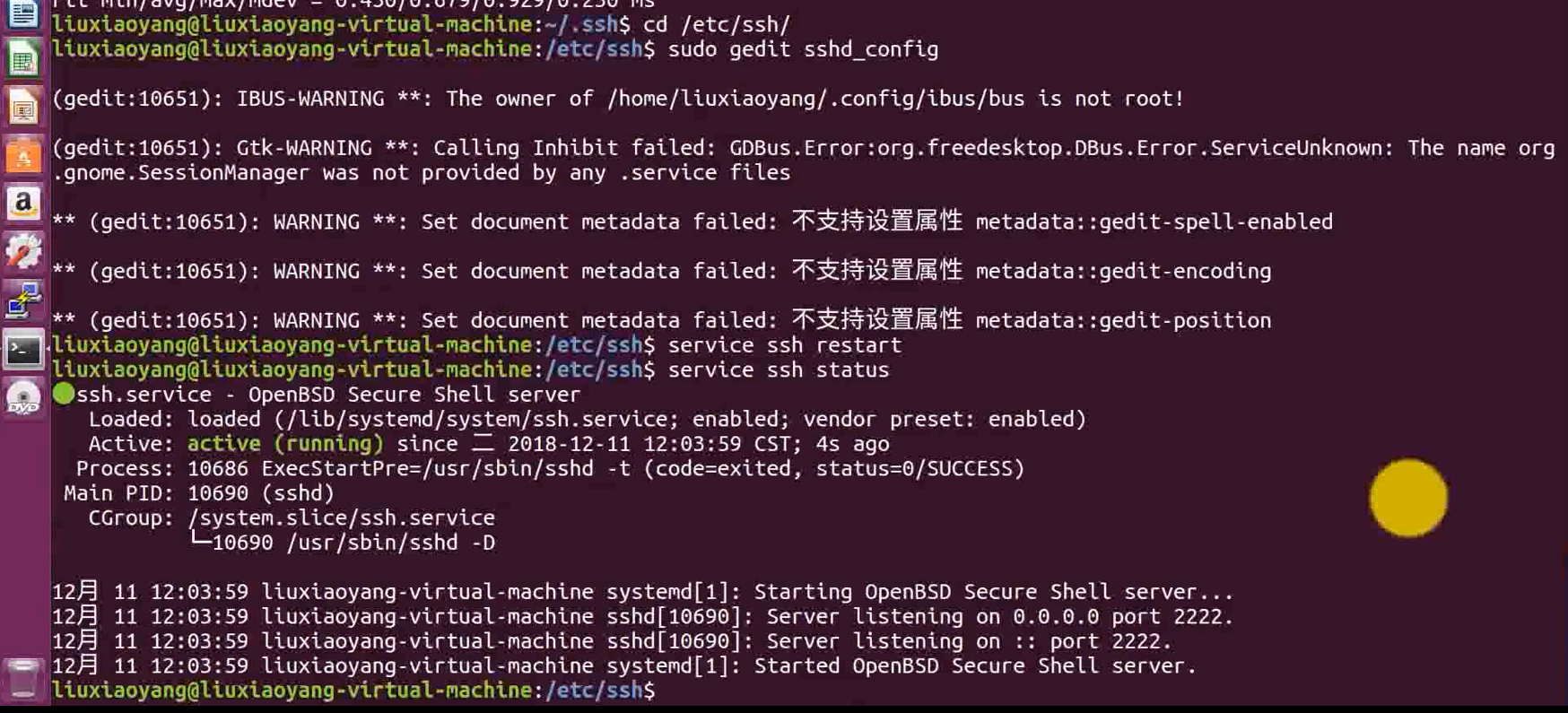

6-6 vulnerability exploitation SSH security defense

随机推荐

[software test] the most complete interview questions and answers. I'm familiar with the full text. If I don't win the offer, I'll lose

商城商品的知识图谱构建

Data analysis from the perspective of control theory

Safety delivery engineer

Kysl Haikang camera 8247 H9 ISAPI test

从零安装Redis

[2022 national tournament simulation] polygon - computational geometry, binary answer, multiplication

S120驱动器基本调试步骤总结

Summary of basic debugging steps of S120 driver

Convert widerperson dataset to Yolo format

掘金量化:通过history方法获取数据,和新浪财经,雪球同用等比复权因子。不同于同花顺

慧通编程入门课程 - 2A闯关

Mmdetection3d loads millimeter wave radar data

Five reasons for clothing enterprises to deploy MES management system

Have fun | latest progress of "spacecraft program" activities

How to find file accessed / created just feed minutes ago

Redis入门完整教程:RDB持久化

Redis introduction complete tutorial: replication principle

牛客编程题--必刷101之双指针篇

MATLB|具有储能的经济调度及机会约束和鲁棒优化