Greenplum 6.x monitoring software setup

2022-07-07 08:46:00 【xcSpark】

One. Download the software

Download address

Search for the package by keyword in the search bar.

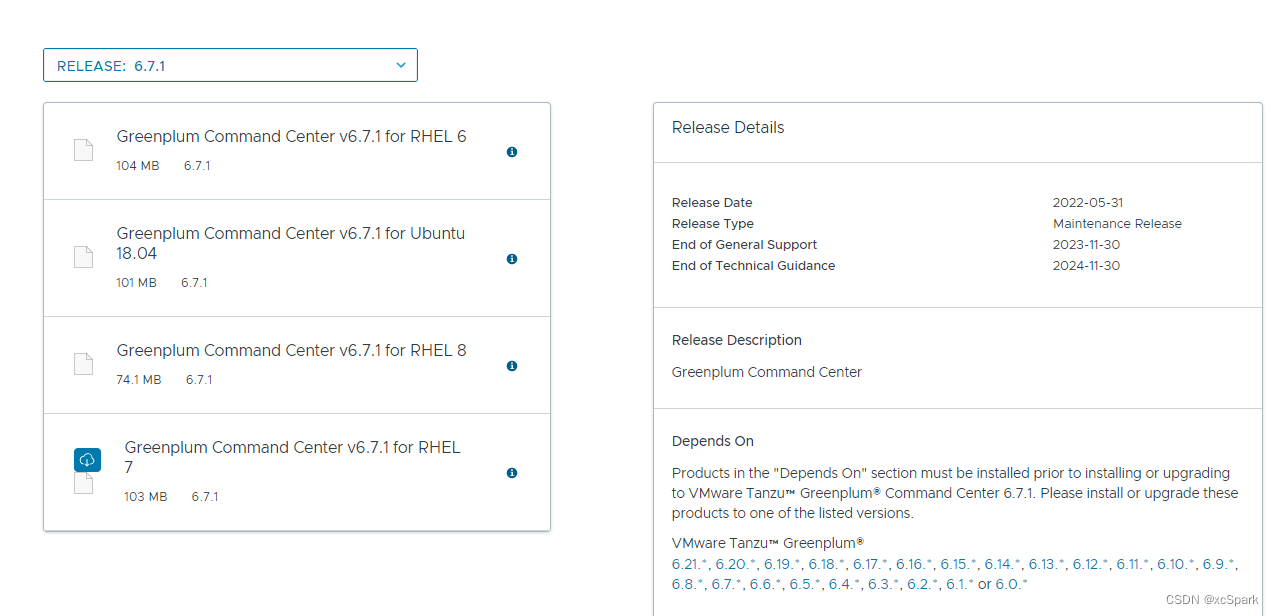

Select the matching version and click to download (login required).

Upload the package to the /opt directory.

Two. Install

Log in to the master host as root.

# Unzip the package

[root@mdw opt]# unzip greenplum-cc-web-6.7.1-gp6-rhel7-x86_64.zip

Archive: greenplum-cc-web-6.7.1-gp6-rhel7-x86_64.zip

creating: greenplum-cc-web-6.7.1-gp6-rhel7-x86_64/

inflating: greenplum-cc-web-6.7.1-gp6-rhel7-x86_64/gpccinstall-6.0.0

[root@mdw opt]# ls

greenplum-cc-web-6.7.1-gp6-rhel7-x86_64 greenplum-cc-web-6.7.1-gp6-rhel7-x86_64.zip greenplum-cc-web-6.7.1-gp6-rhel7-x86_64.rpm rh

[root@mdw opt]#

# Give the gpadmin user ownership of the directory

[root@mdw opt]# chown -R gpadmin:gpadmin /opt/greenplum-cc-web-6.7.1-gp6-rhel7-x86_64

# Switch to the gpadmin user and run the installer

[root@mdw greenplum-cc-web-6.7.1-gp6-rhel7-x86_64]# su - gpadmin

[gpadmin@mdw ~]$ cd /opt/greenplum-cc-web-6.7.1-gp6-rhel7-x86_64/

[gpadmin@mdw greenplum-cc-web-6.7.1-gp6-rhel7-x86_64]$ ./gpccinstall-6.0.0

...

# Accept the license agreement

Do you agree to the Pivotal Greenplum Command Center End User License Agreement? Yy/Nn (Default=Y)

y

# Specify the installation path: /greenplum

Where would you like to install Greenplum Command Center? (Default=/usr/local)

/greenplum

# Press Enter to accept the default

What would you like to name this installation of Greenplum Command Center? (Default=gpcc)

# Press Enter to accept the default

What port would you like gpcc webserver to use? (Default=28080)

# Enter n

Would you like enable SSL? Yy/Nn (Default=N)

n

# Enter 2 to select Chinese

Please choose a display language (Default=English)

1. English

2. Chinese

3. Korean

4. Russian

5. Japanese

2

INSTALLATION IN PROGRESS...

RELOADING pg_hba.conf. PLEASE WAIT ...

********************************************************************************

* *

* INSTALLATION COMPLETED SUCCESSFULLY *

* *

* Source the gpcc_path.sh or add it to your bashrc file to use gpcc command *

* utility. *

* *

* To see the GPCC web UI, you must first start the GPCC webserver. *

* *

* To start the GPCC webserver on the current host, run gpcc start. *

* *

********************************************************************************

To manage Command Center, use the gpcc command-line utility.

Usage:

gpcc [OPTIONS] <command>

Application Options:

-v, --version Show Greenplum Command Center version

--settings Print the current configuration settings

Help Options:

-h, --help Show this help message

Available commands:

help Print list of commands

krbdisable Disables kerberos authentication

krbenable Enables kerberos authentication

start Starts Greenplum Command Center webserver and metrics collection agents

with [-W] option to force password prompt for GPDB user gpmon [optional]

status Print agent status

with [-W] option to force password prompt for GPDB user gpmon [optional]

stop Stops Greenplum Command Center webserver and metrics collection agents

with [-W] option to force password prompt for GPDB user gpmon [optional]

[gpadmin@mdw greenplum-cc-web-6.0.0-rhel7_x86_64]$

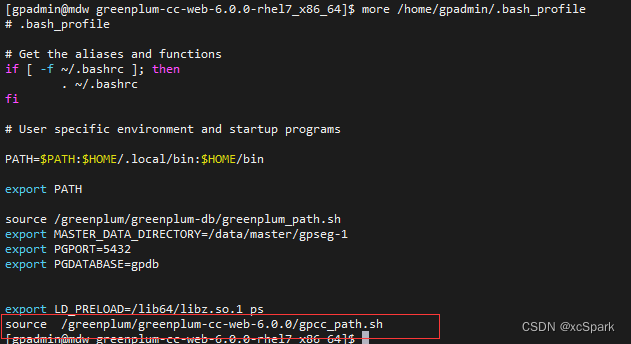

Three. Set the environment variables

Run the following as the gpadmin user on the master host.

cat >> /home/gpadmin/.bash_profile << EOF

source /greenplum/greenplum-cc-web-6.0.0/gpcc_path.sh

EOF

# Apply the environment variables

source /home/gpadmin/.bash_profile

# Create a host file that lists the standby and segment hosts, with no extra spaces or blank lines

[gpadmin@mdw ~]$ vi /tmp/standby_seg_hosts

# Add the following

smdw

sdw1

sdw2

Save and exit

# Distribute .bash_profile to the standby and segment hosts

gpscp -f /tmp/standby_seg_hosts ~/.bash_profile =:~

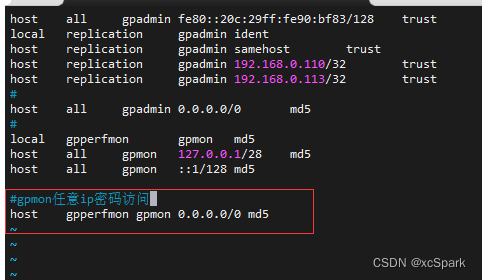
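The host file must contain one host name per line with no blank lines or stray whitespace. A minimal sketch of a pre-flight check follows; `check_hostfile` is a hypothetical helper written for this article, not a Greenplum utility.

```shell
#!/usr/bin/env bash
# Sketch: validate a host file before passing it to gpscp.
# check_hostfile is a hypothetical helper, not part of Greenplum.
check_hostfile() {
    local file="$1"
    # Fail early if the file is missing or unreadable.
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    # Report empty lines and lines that contain spaces, tabs, or carriage returns.
    if grep -nE '^$|[[:space:]]' "$file" >&2; then
        echo "host file $file has blank lines or stray whitespace" >&2
        return 1
    fi
    return 0
}

# Example with the path used in this article:
# check_hostfile /tmp/standby_seg_hosts && gpscp -f /tmp/standby_seg_hosts ~/.bash_profile =:~
```

The function prints the offending line numbers to standard error, so a failed check shows where to clean up the file.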

Four. Modify the configuration

vi /data/master/gpseg-1/pg_hba.conf

# Add the following

host gpperfmon gpmon 0.0.0.0/0 md5

#host all gpmon 0.0.0.0/0 trust

gpscp -f /tmp/hostlist /data/master/gpseg-1/postgresql.conf =:/data/master/gpseg-1/postgresql.conf

[gpadmin@mdw gpseg-1]$ scp postgresql.conf gpadmin@smdw:/data/master/gpseg-1

postgresql.conf 100% 23KB 13.8MB/s 00:00

[gpadmin@mdw gpseg-1]$ scp pg_hba.conf gpadmin@smdw:/data/master/gpseg-1

pg_hba.conf 100% 4956 4.1MB/s 00:00

[gpadmin@mdw gpseg-1]$

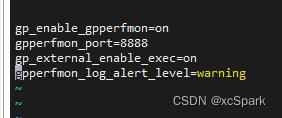
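Adding the entry by hand in vi can leave duplicate lines if the step is repeated. A small sketch of an idempotent append is shown here; `ensure_line` is a hypothetical helper, and the path and entry are the ones used in this article.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if the exact line is not already present.
# ensure_line is a hypothetical helper, not part of Greenplum or PostgreSQL.
ensure_line() {
    local line="$1" file="$2"
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example with the values from this article:
# ensure_line 'host gpperfmon gpmon 0.0.0.0/0 md5' /data/master/gpseg-1/pg_hba.conf
```

Running it twice leaves a single copy of the entry; the change still has to be reloaded with gpstop -u as described later.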

Modify postgresql.conf

cd /data/master/gpseg-1

vi postgresql.conf

# Add the following

gp_enable_gpperfmon=on

gpperfmon_port=8888

gp_external_enable_exec=on

gpperfmon_log_alert_level=warning

Save and exit

gpscp -f /tmp/standby_seg_hosts /data/master/gpseg-1/postgresql.conf =:/data/master/gpseg-1/postgresql.conf
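Before distributing the file, it can help to confirm that all four settings are actually active. The sketch below is a hypothetical check (`missing_settings` is not a Greenplum tool) and assumes each setting is written in the `name = value` form on its own line.

```shell
#!/usr/bin/env bash
# Sketch: print the gpperfmon-related settings that are NOT active in a postgresql.conf.
# missing_settings is a hypothetical helper; it only recognizes "name = value" lines
# and treats commented-out lines as missing.
missing_settings() {
    local conf="$1" name
    for name in gp_enable_gpperfmon gpperfmon_port gp_external_enable_exec gpperfmon_log_alert_level; do
        grep -Eq "^[[:space:]]*${name}[[:space:]]*=" "$conf" || echo "$name"
    done
}

# Example with the path from this article:
# missing_settings /data/master/gpseg-1/postgresql.conf
```

An empty result means every setting listed above is present and uncommented.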

Five. Start

Run the following as the gpadmin user on the master host.

# First reload the configuration so the pg_hba.conf changes take effect

[gpadmin@mdw gpseg-1]$ gpstop -u

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Starting gpstop with args: -u

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7'

20220703:03:28:57:037778 gpstop:mdw:gpadmin-[INFO]:-Signalling all postmaster processes to reload

.

# Start GPCC

[gpadmin@mdw gpseg-1]$ gpcc start

2022-07-03 03:29:00 Starting the gpcc agents and webserver...

2022-07-03 03:29:10 Agent successfully started on 4/4 hosts

2022-07-03 03:29:10 View Greenplum Command Center at http://mdw:28080

[[email protected] gpseg-1]$

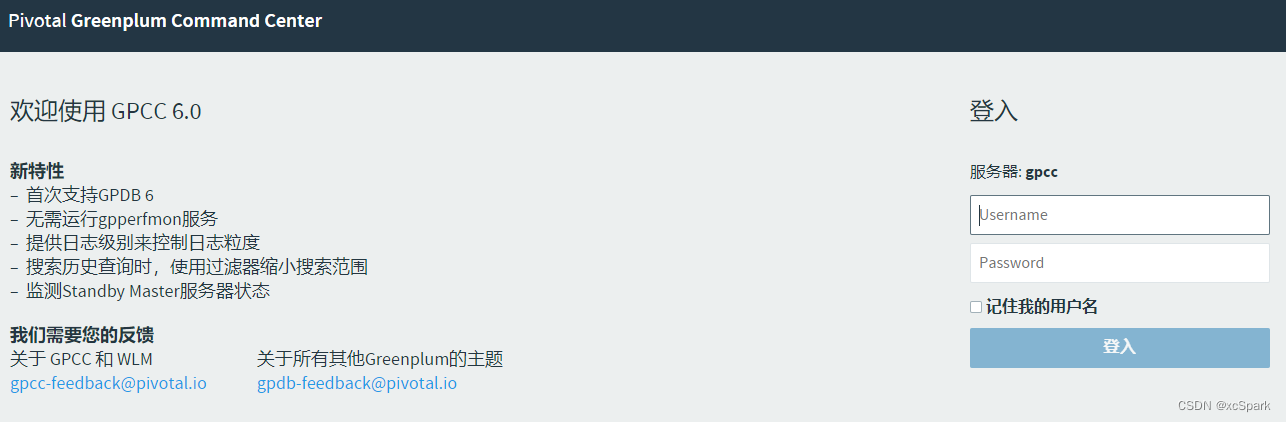

# visit

# take http://mdw:28080 Medium mdw Replace with the actual ip Address , Open with a browser

http://192.168.0.110:28080

gpadmin The user to change gpmon Password

[gpadmin@mdw gpseg-1]$ psql

psql (9.4.24)

Type "help" for help.

gpdb=# alter user gpmon with password 'gpmon';

ALTER ROLE

gpdb=# \q

[gpadmin@mdw gpseg-1]$

Set up the password file

Format:

hostname:port:database:username:password

[gpadmin@mdw gpseg-1]$ more /home/gpadmin/.pgpass

[gpadmin@mdw gpseg-1]$ vi /home/gpadmin/.pgpass

Change the gpmon entry to

*:5432:gpperfmon:gpmon:gpmon

# Distribute the password file

gpscp -f /tmp/hostlist /home/gpadmin/.pgpass =:~

Log in to the web UI with username gpmon and password gpmon.
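The password file can also be updated without opening an editor. The sketch below replaces any existing entry with the same hostname:port:database:username prefix and then restricts the file to mode 600, because libpq ignores a password file that is readable by group or others. `set_pgpass_entry` is a hypothetical helper, and it assumes the password itself contains no colon.

```shell
#!/usr/bin/env bash
# Sketch: add or replace one entry in a .pgpass-style file and enforce mode 600.
# set_pgpass_entry is a hypothetical helper; it assumes the password has no ':' in it.
set_pgpass_entry() {
    local entry="$1" file="${2:-$HOME/.pgpass}" key tmp
    key="${entry%:*}:"          # host:port:database:username: prefix of the entry
    tmp=$(mktemp) || return 1
    if [ -f "$file" ]; then
        # Keep every line that does not start with the same prefix.
        awk -v k="$key" 'index($0, k) != 1' "$file" > "$tmp"
    fi
    printf '%s\n' "$entry" >> "$tmp"
    mv "$tmp" "$file"
    chmod 600 "$file"           # libpq ignores the file if group/others can access it
}

# Example with the values from this article:
# set_pgpass_entry '*:5432:gpperfmon:gpmon:gpmon' /home/gpadmin/.pgpass
```

The entry must match the password set with ALTER USER; otherwise gpcc fails with a password authentication error for gpmon.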

Six. Additional notes

gpdb=# select * from pg_authid;

rolname | rolsuper | rolinherit | rolcreaterole | rolcreatedb | rolcatupdate | rolcanlogin | rolreplication | rolconnlimit | rolpassword | rolvaliduntil | rolresqueue | rolcreaterextgpfd | rolcreaterexthttp | rolcreatewextgpfd | rolresgroup

---------------------+----------+------------+---------------+-------------+--------------+-------------+----------------+--------------+-------------------------------------+---------------+-------------+-------------------+-------------------+-------------------+-------------

gpadmin | t | t | t | t | t | t | t | -1 | md5d115c26178447dc7986c5e67afcd710b | | 6055 | t | t | t | 6438

gpcc_basic | f | t | f | f | f | f | f | -1 | | | 6055 | f | f | f | 6437

gpcc_operator | f | t | f | f | f | f | f | -1 | | | 6055 | f | f | f | 6437

gpcc_operator_basic | f | t | f | f | f | f | f | -1 | | | 6055 | f | f | f | 6437

gpmon | t | t | f | t | t | t | f | -1 | md5271fed4320b7ac3aae3ac625420cf73f | | 6055 | f | f | f | 6438

(5 rows)

gpdb=#

Error 1: password authentication failed for user "gpmon"

[gpadmin@mdw gpseg-1]$ gpcc stop

2022-07-03 10:40:58 Stopping the gpcc agents and webserver...

2022-07-03 10:40:58 pq: password authentication failed for user "gpmon"

[gpadmin@mdw gpseg-1]$

Changing the password inside psql had no effect.

Solution: edit the password file manually with vi /home/gpadmin/.pgpass. After that, the following commands succeed.

[gpadmin@mdw gpseg-1]$ gpcc stop

2022-07-03 10:55:34 Stopping the gpcc agents and webserver...

2022-07-03 10:55:35 GPCC webserver and metrics collection agents have been stopped. Use gpcc start to start them again

[gpadmin@mdw gpseg-1]$

[gpadmin@mdw gpseg-1]$ gpcc start

2022-07-03 10:56:31 Starting the gpcc agents and webserver...

2022-07-03 10:56:35 Agent successfully started on 4/4 hosts

2022-07-03 10:56:35 View Greenplum Command Center at http://mdw:28080

[gpadmin@mdw gpseg-1]$

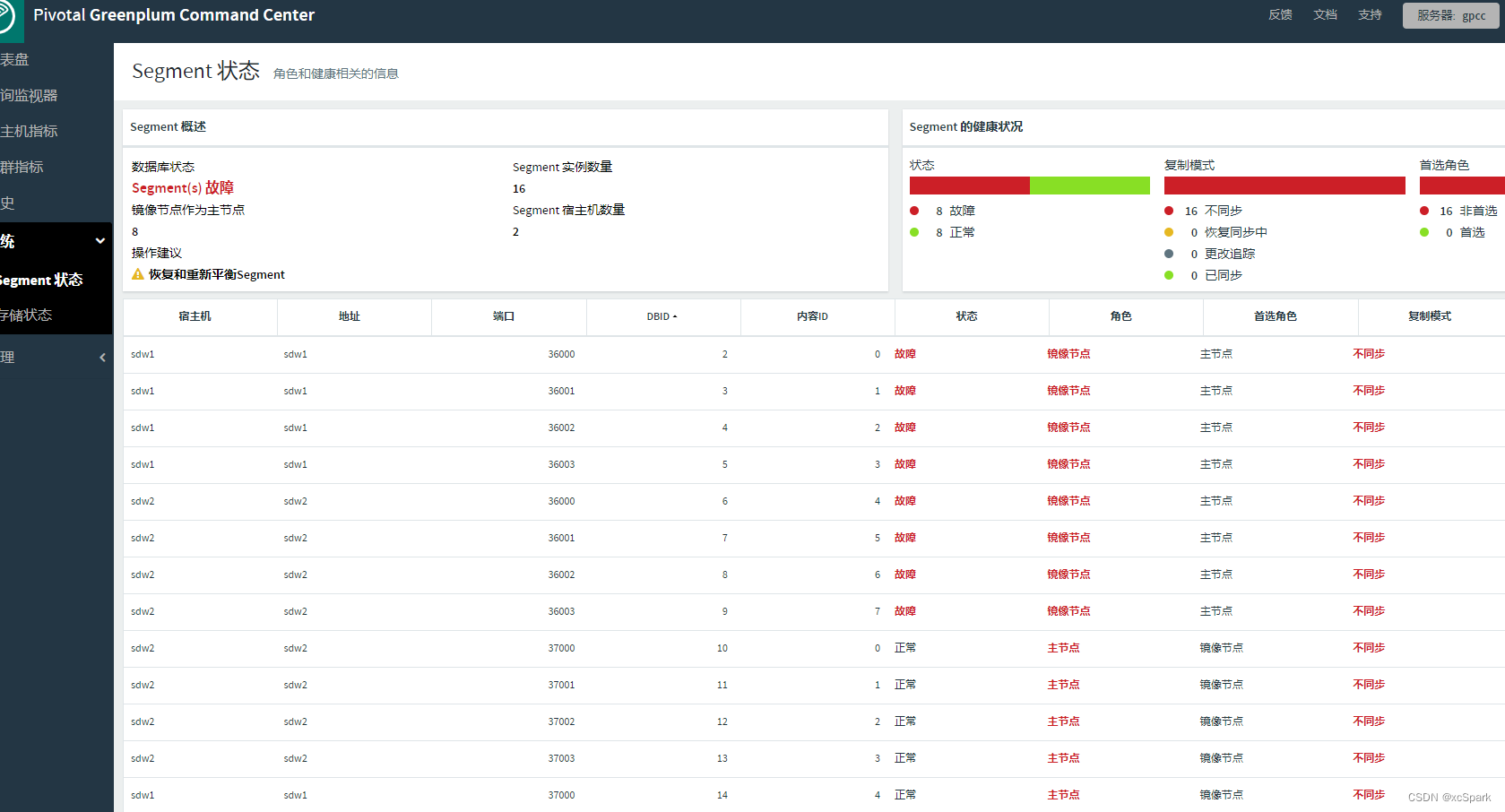

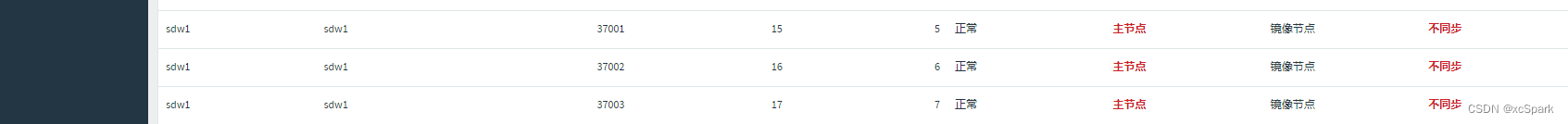

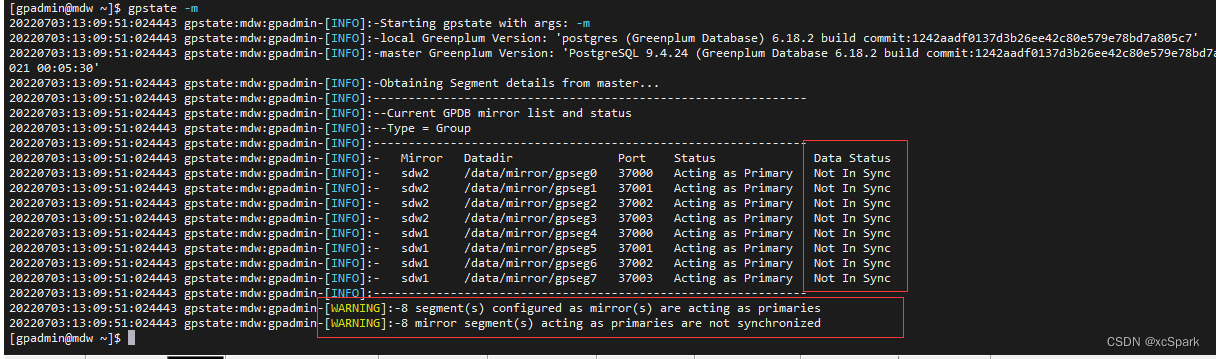

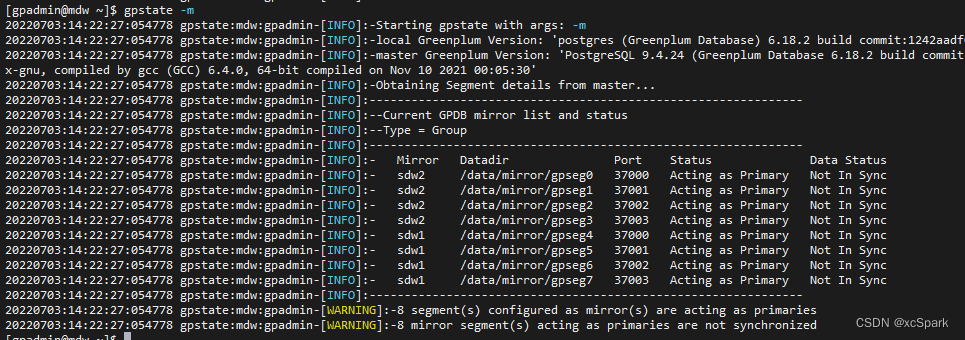

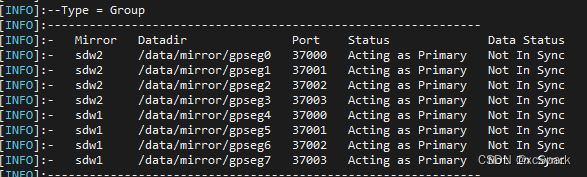

Error 2: mirror segment failure

Check the mirror status

[gpadmin@mdw gpseg-1]$ gpstate -m

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:-Starting gpstate with args: -m

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7'

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Nov 10 2021 00:05:30'

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:--------------------------------------------------------------

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:--Current GPDB mirror list and status

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:--Type = Group

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:--------------------------------------------------------------

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg0 37000 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg1 37001 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg2 37002 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg3 37003 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg4 37000 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg5 37001 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg6 37002 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg7 37003 Acting as Primary Not In Sync

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[INFO]:--------------------------------------------------------------

20220703:13:02:28:020990 gpstate:mdw:gpadmin-[WARNING]:-8 segment(s) configured as mirror(s) are acting as primaries

20220703:13:02:28:020990 gpst

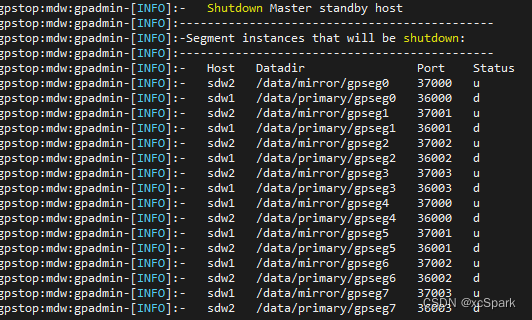
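When the mirror list is long, counting the out-of-sync rows by eye is error-prone. A minimal sketch that counts them from the gpstate -m output is shown below; `count_not_in_sync` is a hypothetical helper that simply matches the "Not In Sync" text seen in the output above.

```shell
#!/usr/bin/env bash
# Sketch: count the lines of gpstate -m output that report "Not In Sync".
# count_not_in_sync is a hypothetical helper; it reads the output on standard input.
count_not_in_sync() {
    # grep -c prints the number of matching lines; it exits non-zero when the
    # count is 0, so "|| true" keeps the function's exit status at 0.
    grep -c 'Not In Sync' || true
}

# Example:
# gpstate -m | count_not_in_sync
```

A result of 0 suggests that no mirror is reported as out of sync; any other value is the number of affected segments.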

Restart the database

gpstop -m -M fast

gpstart

[gpadmin@mdw ~]$ gpstop -m -M fast

20220703:13:05:15:022266 gpstop:mdw:gpadmin-[INFO]:-Starting gpstop with args: -m -M fast

20220703:13:05:15:022266 gpstop:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...

20220703:13:05:15:022266 gpstop:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20220703:13:05:15:022266 gpstop:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220703:13:05:15:022266 gpstop:mdw:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7'

Continue with master-only shutdown Yy|Nn (default=N):

> y

20220703:13:05:20:022266 gpstop:mdw:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='fast'

20220703:13:05:20:022266 gpstop:mdw:gpadmin-[INFO]:-Master segment instance directory=/data/master/gpseg-1

20220703:13:05:20:022266 gpstop:mdw:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process

20220703:13:05:20:022266 gpstop:mdw:gpadmin-[INFO]:-Terminating processes for segment /data/master/gpseg-1

[[email protected] ~]$

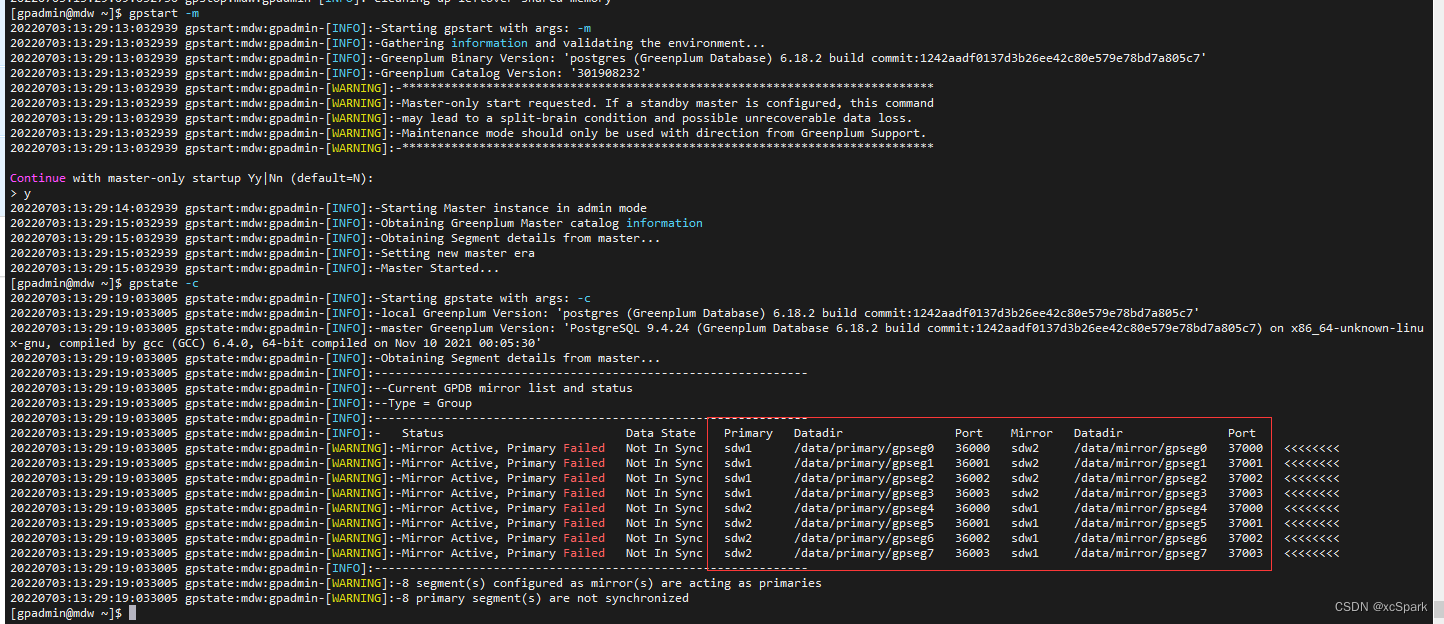

[[email protected] ~]$ gpstart

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Starting gpstart with args:

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7'

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Greenplum Catalog Version: '301908232'

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance in admin mode

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Setting new master era

20220703:13:07:12:023074 gpstart:mdw:gpadmin-[INFO]:-Master Started...

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Shutting down master

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw1 directory /data/primary/gpseg0 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw1 directory /data/primary/gpseg1 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw1 directory /data/primary/gpseg2 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw1 directory /data/primary/gpseg3 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw2 directory /data/primary/gpseg4 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw2 directory /data/primary/gpseg5 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw2 directory /data/primary/gpseg6 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipping startup of segment marked down in configuration: on sdw2 directory /data/primary/gpseg7 <<<<<

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:---------------------------

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Master instance parameters

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:---------------------------

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Database = template1

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Master Port = 5432

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Master directory = /data/master/gpseg-1

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Timeout = 600 seconds

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Master standby start = On

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:---------------------------------------

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:-Segment instances that will be started

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:---------------------------------------

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- Host Datadir Port Role

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg0 37000 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg1 37001 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg2 37002 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw2 /data/mirror/gpseg3 37003 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg4 37000 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg5 37001 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg6 37002 Primary

20220703:13:07:13:023074 gpstart:mdw:gpadmin-[INFO]:- sdw1 /data/mirror/gpseg7 37003 Primary

Continue with Greenplum instance startup Yy|Nn (default=N):

> y

20220703:13:07:23:023074 gpstart:mdw:gpadmin-[INFO]:-Commencing parallel primary and mirror segment instance startup, please wait...

....

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-Process results...

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:- Successful segment starts = 8

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:- Failed segment starts = 0

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-Skipped segment starts (segments are marked down in configuration) = 8 <<<<<<<<

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-Successfully started 8 of 8 segment instances, skipped 8 other segments

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-****************************************************************************

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-There are 8 segment(s) marked down in the database

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-To recover from this current state, review usage of the gprecoverseg

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-management utility which will recover failed segment instance databases.

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[WARNING]:-****************************************************************************

20220703:13:07:27:023074 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance mdw directory /data/master/gpseg-1

20220703:13:07:28:023074 gpstart:mdw:gpadmin-[INFO]:-Command pg_ctl reports Master mdw instance active

20220703:13:07:28:023074 gpstart:mdw:gpadmin-[INFO]:-Connecting to dbname='template1' connect_timeout=15

20220703:13:07:28:023074 gpstart:mdw:gpadmin-[INFO]:-Starting standby master

20220703:13:07:28:023074 gpstart:mdw:gpadmin-[INFO]:-Checking if standby master is running on host: smdw in directory: /data/master/gpseg-1

20220703:13:07:30:023074 gpstart:mdw:gpadmin-[WARNING]:-Number of segments not attempted to start: 8

20220703:13:07:30:023074 gpstart:mdw:gpadmin-[INFO]:-Check status of database with gpstate utility

[[email protected] ~]$

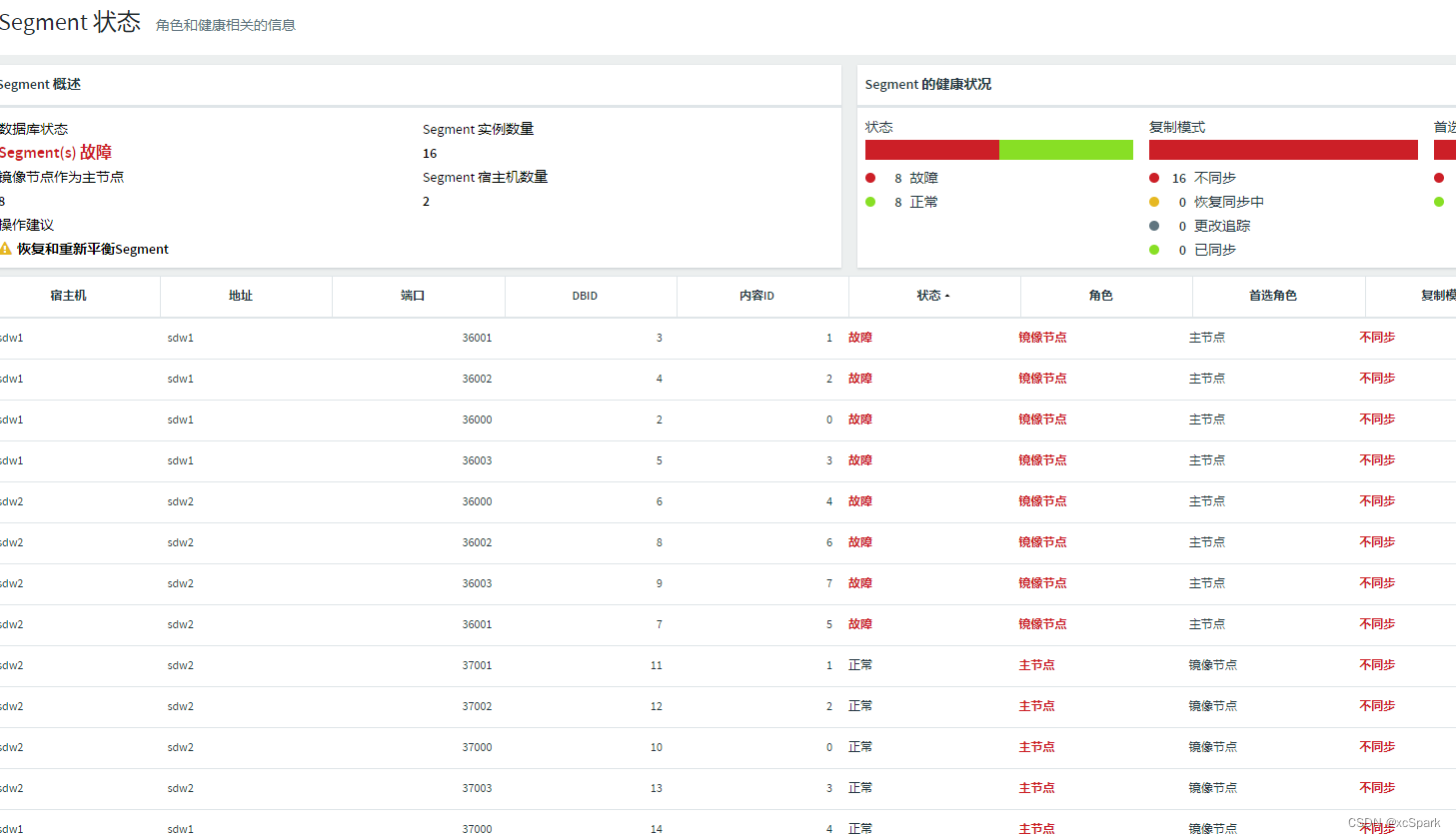

Still failed after restart

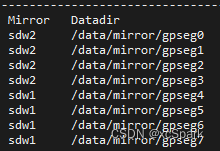

Seven. Repairing failed mirrors after startup

Repair method 1 (did not work)

Repairing the segment nodes requires restarting the Greenplum cluster.

Copy each healthy primary to its corresponding failed mirror.

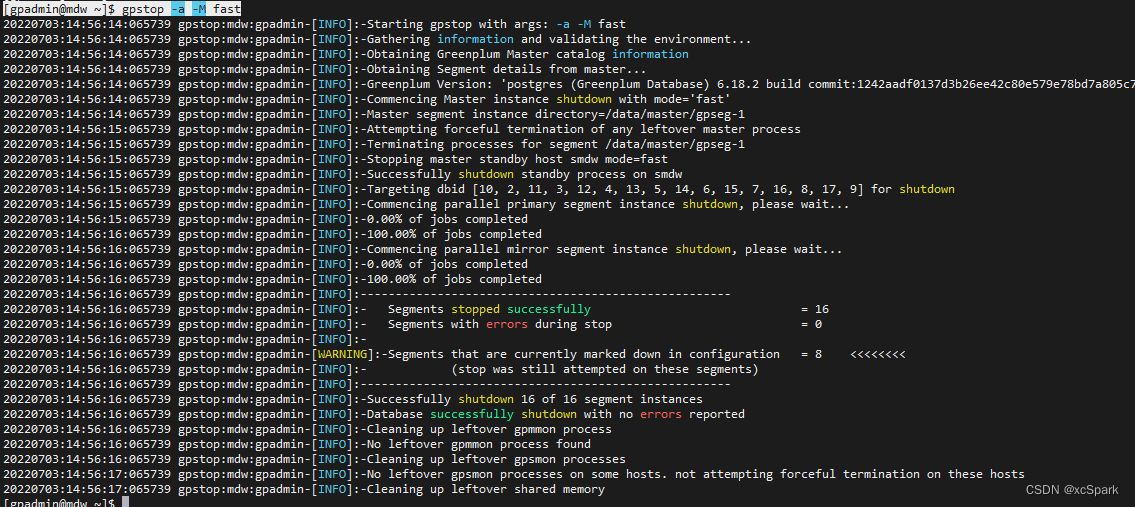

1. Stop the cluster

Run the following as the gpadmin user on the master host.

# Stop the cluster

gpstop -M fast

2. Record node information

# View the segment information

gpstart -m

gpstate -c

3. Stop the cluster and copy the data

# Stop the cluster

gpstop -M fast

Enter y

# Copy each primary's data to its corresponding mirror

# Run the commands one after another

scp -r sdw1:/data/primary/gpseg0 sdw2:/data/mirror

scp -r sdw1:/data/primary/gpseg1 sdw2:/data/mirror

scp -r sdw1:/data/primary/gpseg2 sdw2:/data/mirror

scp -r sdw1:/data/primary/gpseg3 sdw2:/data/mirror

scp -r sdw2:/data/primary/gpseg4 sdw1:/data/mirror

scp -r sdw2:/data/primary/gpseg5 sdw1:/data/mirror

scp -r sdw2:/data/primary/gpseg6 sdw1:/data/mirror

scp -r sdw2:/data/primary/gpseg7 sdw1:/data/mirror

Each segment directory contains many files, so the copy prints a lot of output. The -q option is meant to silence it, but it had no effect here.

On the segment servers, the modification times of the folders under the mirror directory change after the copy.
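The eight copy commands follow a fixed pattern: segments 0 to 3 have their primary on sdw1 and mirror on sdw2, and segments 4 to 7 are the reverse. As a sketch under that assumption (host names and paths are the ones in this article), a loop can generate the same commands as a dry run. `print_copy_commands` is a hypothetical helper; note that this article found that method 1 did not bring the mirrors back in sync.

```shell
#!/usr/bin/env bash
# Sketch: print (not execute) the scp commands used in this step.
# print_copy_commands is a hypothetical helper; the layout (gpseg0-3 primary on sdw1,
# gpseg4-7 primary on sdw2) is taken from this article's cluster.
print_copy_commands() {
    local seg src dst
    for seg in 0 1 2 3 4 5 6 7; do
        if [ "$seg" -lt 4 ]; then
            src=sdw1; dst=sdw2
        else
            src=sdw2; dst=sdw1
        fi
        echo "scp -r ${src}:/data/primary/gpseg${seg} ${dst}:/data/mirror"
    done
}

# Review the list first, then run it deliberately if it looks right:
# print_copy_commands
# print_copy_commands | sh
```

Printing the commands before running them makes it easier to spot a wrong host pairing before any data is overwritten.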

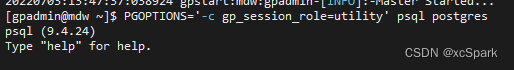

4. Start only the master

# Master-only mode

gpstart -m

[gpadmin@mdw ~]$ PGOPTIONS='-c gp_session_role=utility' psql postgres

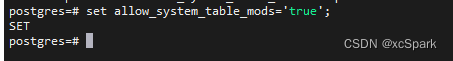

psql (9.4.24)

Type "help" for help.

postgres=# set allow_system_table_mods='true';

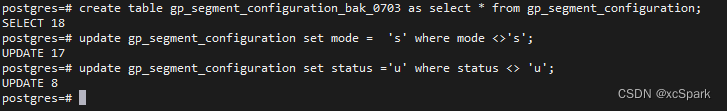

postgres=# create table gp_segment_configuration_bak_0703 as select * from gp_segment_configuration;

update gp_segment_configuration set mode = 's' where mode <>'s';

update gp_segment_configuration set status ='u' where status <> 'u';

\q

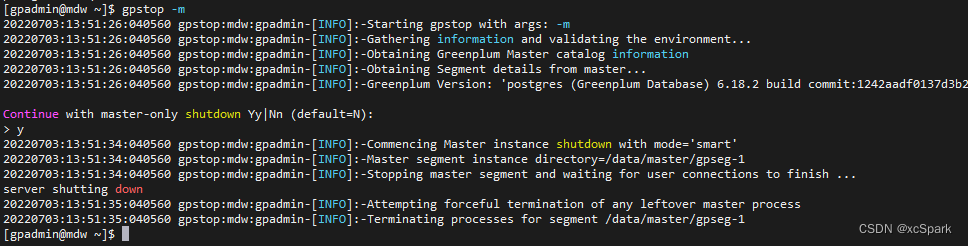

# Stop the master

[gpadmin@mdw ~]$ gpstop -m -M fast

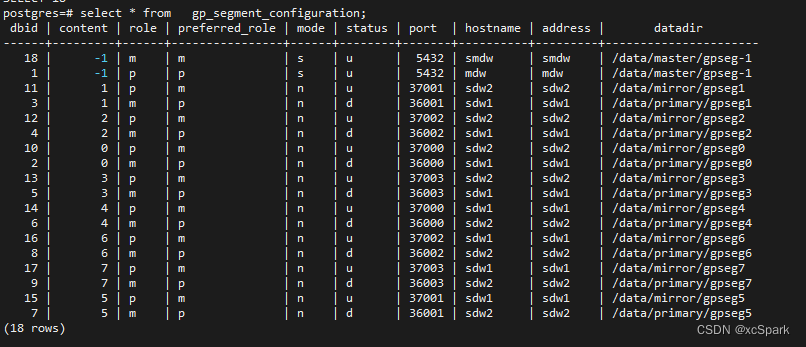

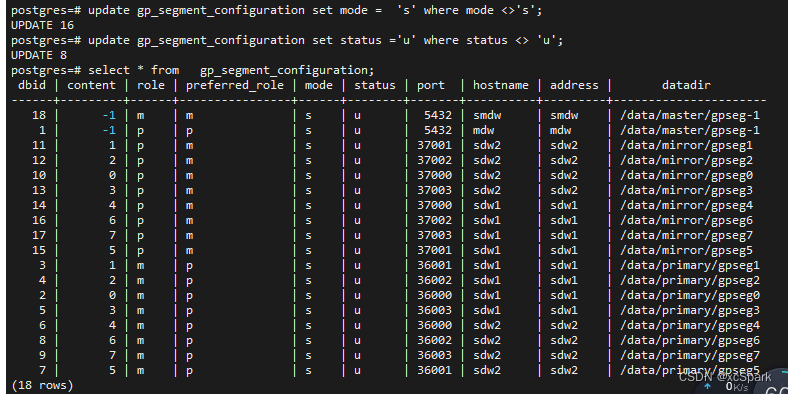

Before the change

After modification

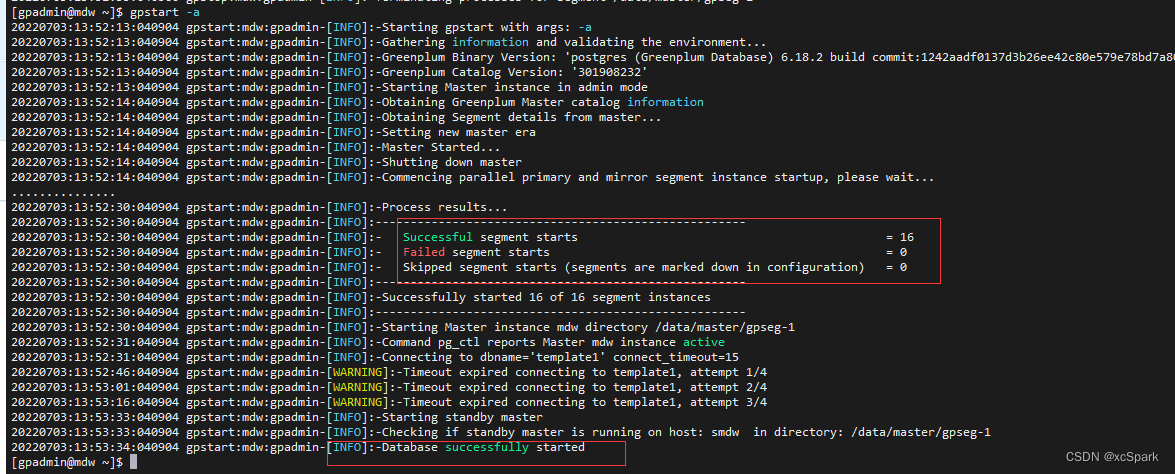

6. Start cluster

# start-up

gpstart -a

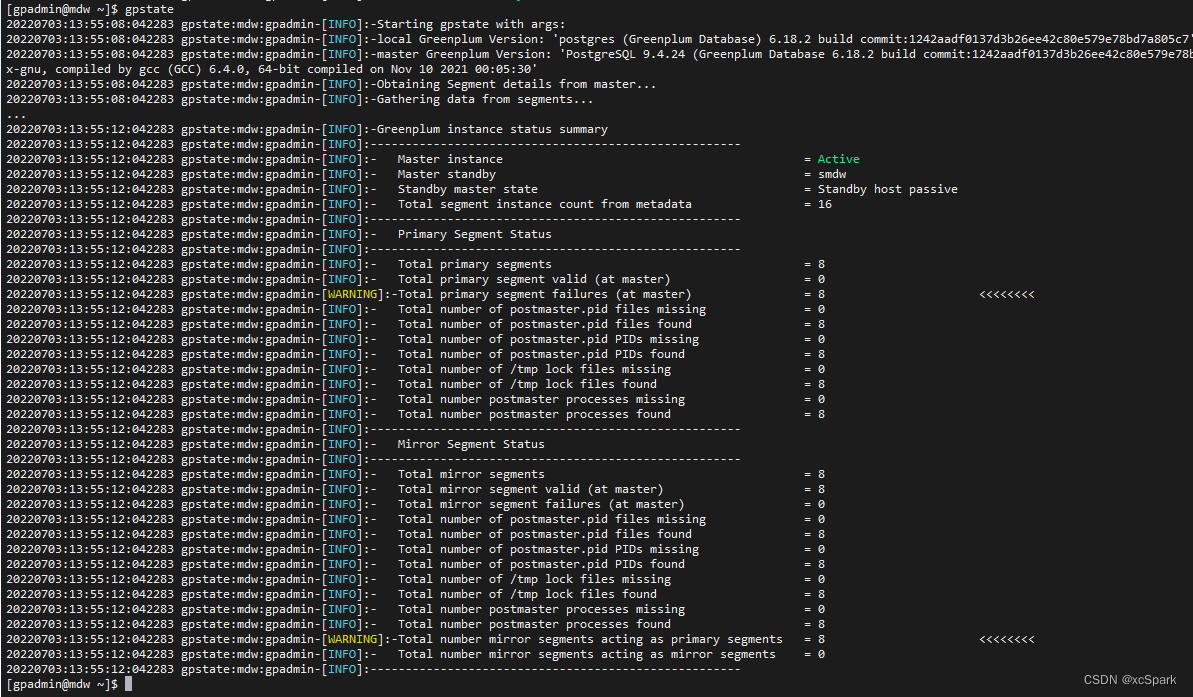

7. Check the status

# Check the status

gpstate

Still out of sync

8. Check monitoring

[gpadmin@mdw ~]$ gpcc stop

2022-07-03 13:56:46 Stopping the gpcc agents and webserver...

2022-07-03 13:56:48 GPCC webserver and metrics collection agents have been stopped. Use gpcc start to start them again

[gpadmin@mdw ~]$ gpcc start

2022-07-03 13:56:51 Starting the gpcc agents and webserver...

2022-07-03 13:56:56 Agent successfully started on 4/4 hosts

2022-07-03 13:56:56 View Greenplum Command Center at http://mdw:28080

[gpadmin@mdw ~]$

GPCC still reports the error.

9. Repair with gprecoverseg

Run the gprecoverseg command

[gpadmin@mdw ~]$ gprecoverseg

20220703:14:23:41:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Starting gprecoverseg with args:

20220703:14:23:41:055380 gprecoverseg:mdw:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7'

20220703:14:23:41:055380 gprecoverseg:mdw:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.18.2 build commit:1242aadf0137d3b26ee42c80e579e78bd7a805c7) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Nov 10 2021 00:05:30'

20220703:14:23:41:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Heap checksum setting is consistent between master and the segments that are candidates for recoverseg

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Greenplum instance recovery parameters

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery type = Standard

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 1 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg0

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36000

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg0

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37000

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 2 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36001

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37001

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 3 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36002

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37002

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 4 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg3

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36003

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg3

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37003

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 5 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg4

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36000

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg4

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37000

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 6 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg5

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36001

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg5

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37001

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 7 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg6

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36002

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg6

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37002

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovery 8 of 8

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Synchronization mode = Incremental

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance host = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance address = sdw2

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance directory = /data/primary/gpseg7

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Failed instance port = 36003

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance host = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance address = sdw1

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance directory = /data/mirror/gpseg7

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Source instance port = 37003

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:- Recovery Target = in-place

20220703:14:23:42:055380 gprecoverseg:mdw:gpadmin-[INFO]:----------------------------------------------------------

Continue with segment recovery procedure Yy|Nn (default=N):

> y

20220703:14:23:51:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Starting to create new pg_hba.conf on primary segments

20220703:14:23:56:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Successfully modified pg_hba.conf on primary segments to allow replication connections

20220703:14:23:56:055380 gprecoverseg:mdw:gpadmin-[INFO]:-8 segment(s) to recover

20220703:14:23:56:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Ensuring 8 failed segment(s) are stopped

20220703:14:23:57:055380 gprecoverseg:mdw:gpadmin-[INFO]:-57638: /data/primary/gpseg0

20220703:14:23:58:055380 gprecoverseg:mdw:gpadmin-[INFO]:-57639: /data/primary/gpseg1

20220703:14:24:00:055380 gprecoverseg:mdw:gpadmin-[INFO]:-57636: /data/primary/gpseg2

20220703:14:24:01:055380 gprecoverseg:mdw:gpadmin-[INFO]:-57633: /data/primary/gpseg3

20220703:14:24:03:055380 gprecoverseg:mdw:gpadmin-[INFO]:-54324: /data/primary/gpseg4

20220703:14:24:04:055380 gprecoverseg:mdw:gpadmin-[INFO]:-54325: /data/primary/gpseg5

20220703:14:24:05:055380 gprecoverseg:mdw:gpadmin-[INFO]:-54310: /data/primary/gpseg6

20220703:14:24:07:055380 gprecoverseg:mdw:gpadmin-[INFO]:-54306: /data/primary/gpseg7

.

20220703:14:24:08:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Ensuring that shared memory is cleaned up for stopped segments

20220703:14:24:08:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Updating configuration with new mirrors

20220703:14:24:08:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Updating mirrors

20220703:14:24:08:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Running pg_rewind on failed segments

sdw1 (dbid 2): no rewind required

sdw1 (dbid 3): no rewind required

sdw1 (dbid 4): no rewind required

sdw1 (dbid 5): no rewind required

sdw2 (dbid 6): no rewind required

sdw2 (dbid 7): no rewind required

sdw2 (dbid 8): no rewind required

sdw2 (dbid 9): no rewind required

20220703:14:24:13:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Starting mirrors

20220703:14:24:13:055380 gprecoverseg:mdw:gpadmin-[INFO]:-era is 8ee3ebb3041964e3_220703142102

20220703:14:24:13:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

......

20220703:14:24:19:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Process results...

20220703:14:24:19:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Triggering FTS probe

20220703:14:24:20:055380 gprecoverseg:mdw:gpadmin-[INFO]:-********************************

20220703:14:24:20:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Segments successfully recovered.

20220703:14:24:20:055380 gprecoverseg:mdw:gpadmin-[INFO]:-********************************

20220703:14:24:20:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Recovered mirror segments need to sync WAL with primary segments.

20220703:14:24:20:055380 gprecoverseg:mdw:gpadmin-[INFO]:-Use 'gpstate -e' to check progress of WAL sync remaining bytes

[gpadmin@mdw ~]$

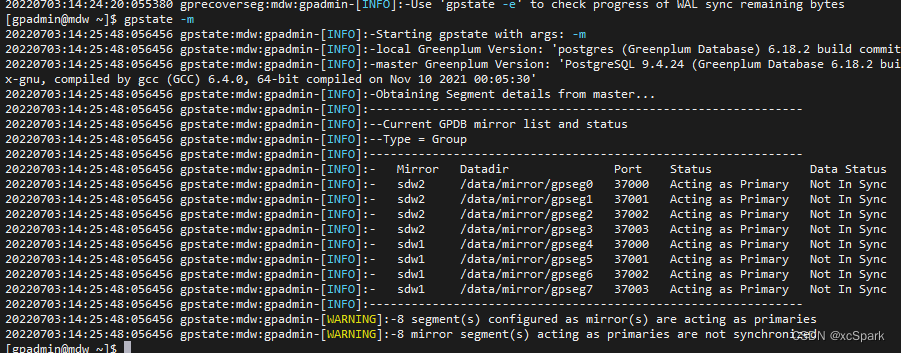

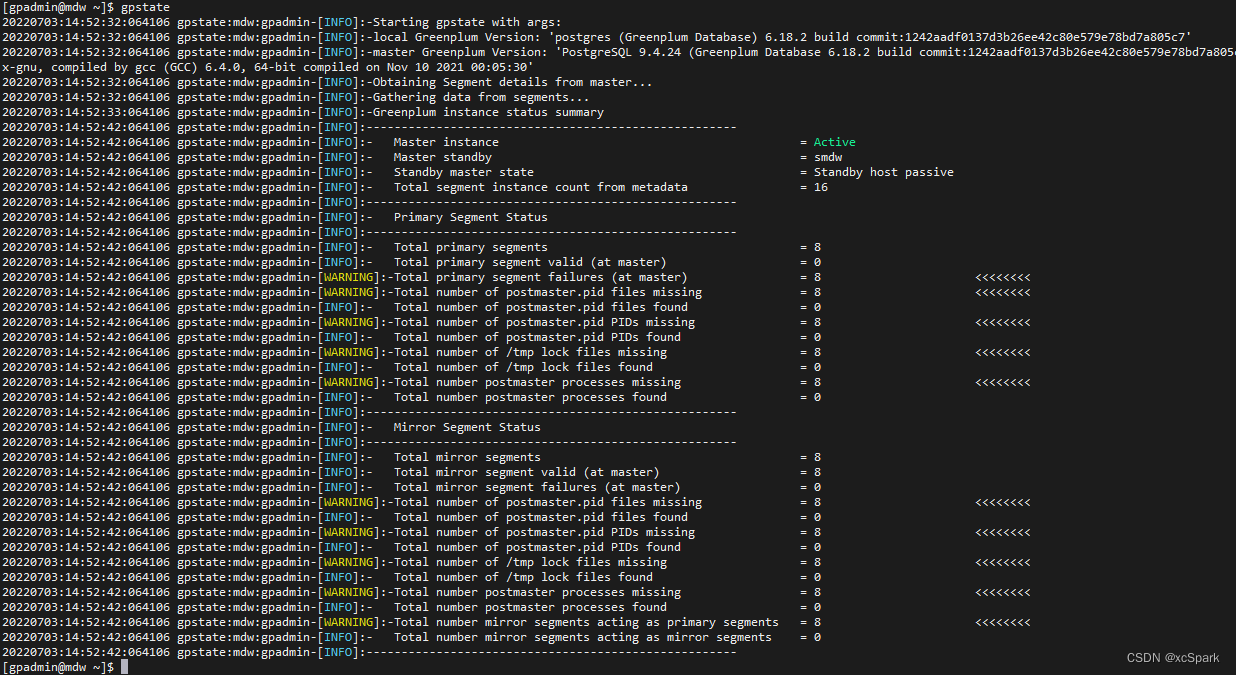

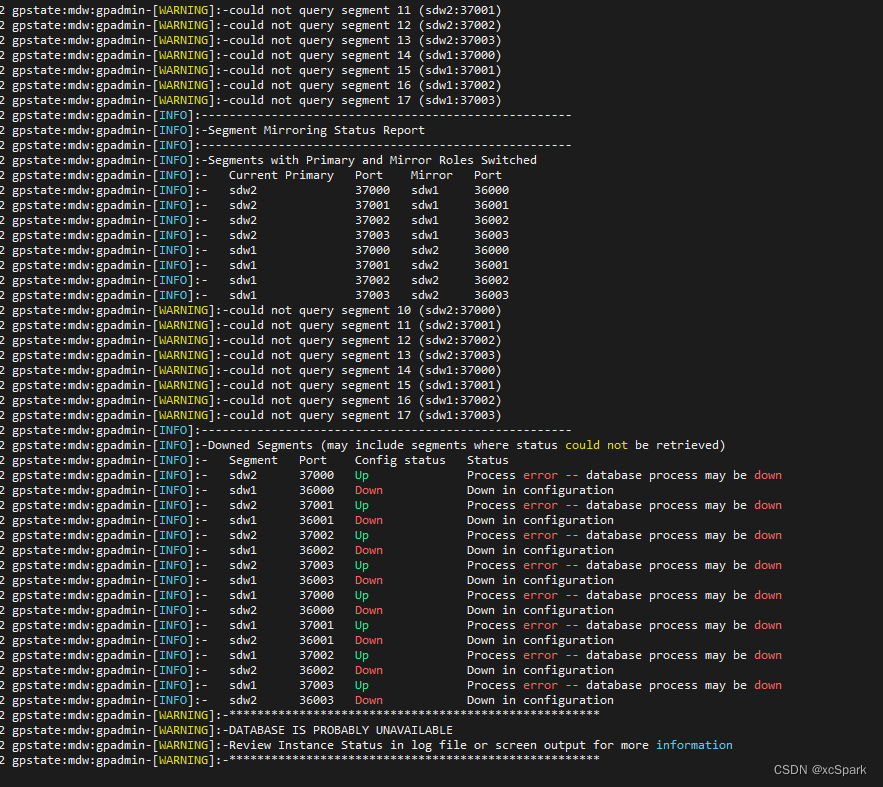

10. Check the status

gpstate -m

The mirrors are still not synchronized at this point, so restart the cluster:

gpstop -M fast

gpstart

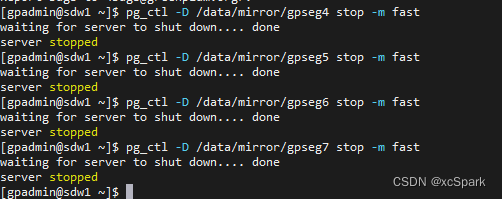

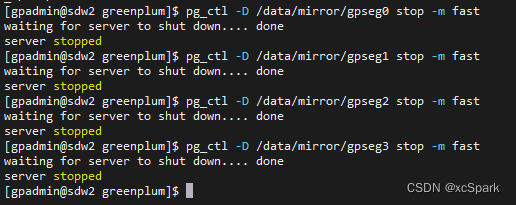

Repair method 2 (did not work)

1. Find the failed segments

gpstate -m

2. Log in to each affected segment host and stop the corresponding instances

For example, log in to sdw1 as gpadmin:

pg_ctl -D /data/mirror/gpseg4 stop -m fast

pg_ctl -D /data/mirror/gpseg5 stop -m fast

pg_ctl -D /data/mirror/gpseg6 stop -m fast

pg_ctl -D /data/mirror/gpseg7 stop -m fast

Then log in to sdw2 as gpadmin:

pg_ctl -D /data/mirror/gpseg0 stop -m fast

pg_ctl -D /data/mirror/gpseg1 stop -m fast

pg_ctl -D /data/mirror/gpseg2 stop -m fast

pg_ctl -D /data/mirror/gpseg3 stop -m fast

3. Check the status

gpstate

gpstate -e

4. Shut down the cluster

[gpadmin@mdw ~]$ gpstop -a -M fast

5. Start the cluster

# Start in restricted mode

gpstart -a -R

6. Recover the failed segments

Note that primary and mirror segments may have swapped roles, so pay attention to which instance is currently acting as primary during recovery.

gprecoverseg -a

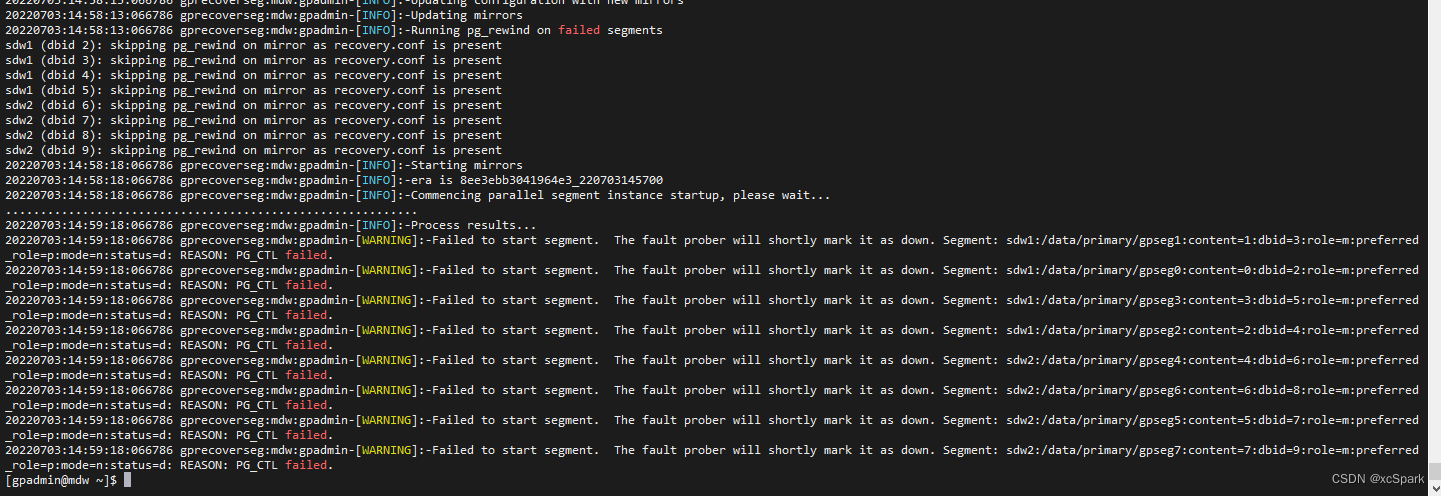

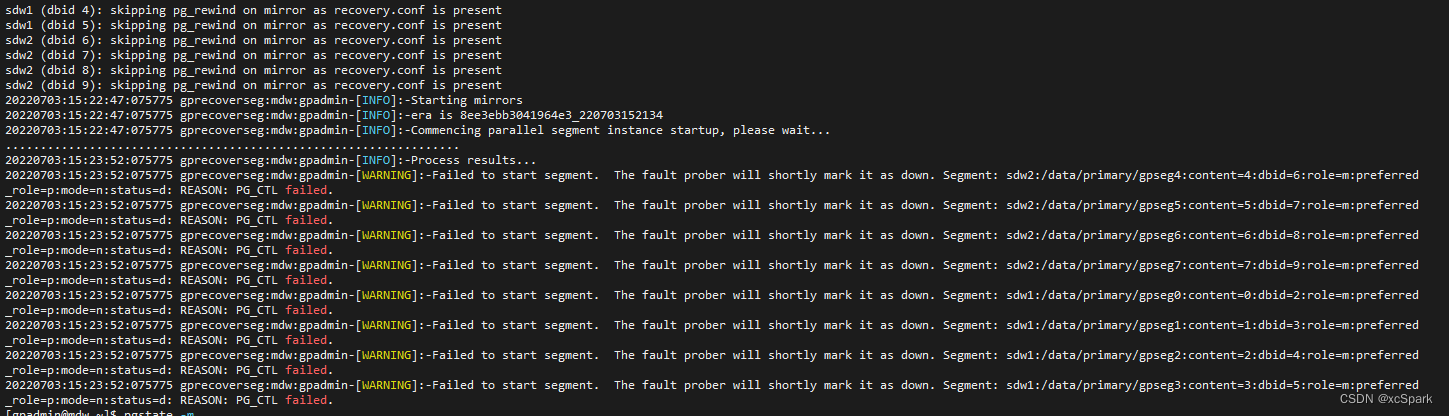

6.1 Error: Failed to start segment

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw1:/data/primary/gpseg2:content=2:dbid=4:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw1:/data/primary/gpseg0:content=0:dbid=2:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw1:/data/primary/gpseg1:content=1:dbid=3:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw1:/data/primary/gpseg3:content=3:dbid=5:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw2:/data/primary/gpseg4:content=4:dbid=6:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw2:/data/primary/gpseg7:content=7:dbid=9:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw2:/data/primary/gpseg6:content=6:dbid=8:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.

20220703:15:10:36:071273 gprecoverseg:mdw:gpadmin-[WARNING]:-Failed to start segment. The fault prober will shortly mark it as down. Segment: sdw2:/data/primary/gpseg5:content=5:dbid=7:role=m:preferred_role=p:mode=n:status=d: REASON: PG_CTL failed.
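To see at a glance which instances failed, the warnings above can be reduced to one line per segment. The following is only a sketch and not part of the original procedure: `failed_segments_from_log` is a hypothetical helper name, and it assumes the warning format shown above (host, data directory, content and dbid separated by colons).

```shell
# Hypothetical helper: read gprecoverseg log lines on stdin and print
# "host datadir content=N dbid=N" for every "Failed to start segment" warning.
# Assumes the colon-separated segment description seen in the log above.
failed_segments_from_log() {
    sed -n 's/.*Failed to start segment\..*Segment: \([^:]*\):\([^:]*\):content=\([0-9-]*\):dbid=\([0-9]*\):.*/\1 \2 content=\3 dbid=\4/p'
}

# Example (recover.log is a placeholder for wherever you saved the output):
#   failed_segments_from_log < recover.log
```

Lines that do not match the warning pattern are silently skipped, so the whole log can be piped through it.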

6.2 Failed to find segment

Check whether a backup_label file exists in the segment data directories. If it does, it can be deleted.

[gpadmin@sdw2 mirror]$ cd /data/mirror

[gpadmin@sdw2 mirror]$ ls gpseg*/backup*

gpseg0/backup_label.old gpseg1/backup_label.old gpseg2/backup_label.old gpseg3/backup_label.old
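The same check can be wrapped in a small function so it is easy to repeat on each segment host. This is a sketch under assumptions: `find_backup_labels` is a hypothetical name, the default root `/data/mirror` is taken from the listing above, and the function only reports files. Whether to delete anything is left to you.

```shell
# Hypothetical helper: list backup_label* files exactly one level below a
# data root (e.g. /data/mirror/gpseg0/backup_label.old).
# It only prints paths; it never deletes anything.
find_backup_labels() {
    root=${1:-/data/mirror}
    find "$root" -mindepth 2 -maxdepth 2 -type f -name 'backup_label*' | sort
}

# Example:
#   find_backup_labels /data/mirror
#   find_backup_labels /data/primary
```

Reviewing the printed paths before removing any file is the safer habit, since a live `backup_label` means something different from a leftover `backup_label.old`.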

7. Restart the cluster and recover the segments

cd ~

gpstop -a -r

gprecoverseg -o ./recover_file

gprecoverseg -i ./recover_file

# After recovery, rebalance the segments back to their preferred roles

gprecoverseg -r

gpstate -m
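Rebalancing with `gprecoverseg -r` is only meaningful once every segment is up and in sync, so it can help to wait for that state before running it. The loop below is a rough sketch, not the author's method: `count_unsynced` and `wait_for_sync` are hypothetical names, and it assumes `psql` can reach the master with the current environment. It relies on the `status` and `mode` columns of `gp_segment_configuration`.

```shell
# Count segment instances (content >= 0) that are down or not in sync.
# Assumption: psql connects to the master using the current environment.
count_unsynced() {
    psql -At -c "SELECT count(*) FROM gp_segment_configuration WHERE content >= 0 AND (status = 'd' OR mode <> 's')"
}

# wait_for_sync [max_tries] [delay_seconds] [counter_command]
# Returns 0 once the counter reports 0, 1 if it never does,
# and 2 if the counter command itself fails.
wait_for_sync() {
    max_tries=${1:-30}
    delay=${2:-10}
    counter=${3:-count_unsynced}
    i=1
    while [ "$i" -le "$max_tries" ]; do
        n=$("$counter") || return 2
        if [ "$n" -eq 0 ]; then
            echo "all segments are up and in sync"
            return 0
        fi
        echo "attempt $i: $n segment instance(s) still down or not in sync"
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}

# Example: wait up to 30 x 10 seconds, then rebalance.
#   wait_for_sync && gprecoverseg -r
```

The third argument exists only so the loop can be tried with a stand-in command when no cluster is available.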

SQL

1. Query gp_segment_configuration

[gpadmin@mdw ~]$ psql -c "SELECT * FROM gp_segment_configuration";

dbid | content | role | preferred_role | mode | status | port | hostname | address | datadir

------+---------+------+----------------+------+--------+-------+----------+---------+----------------------

18 | -1 | m | m | s | u | 5432 | smdw | smdw | /data/master/gpseg-1

1 | -1 | p | p | s | u | 5432 | mdw | mdw | /data/master/gpseg-1

12 | 2 | p | m | n | u | 37002 | sdw2 | sdw2 | /data/mirror/gpseg2

4 | 2 | m | p | n | d | 36002 | sdw1 | sdw1 | /data/primary/gpseg2

10 | 0 | p | m | n | u | 37000 | sdw2 | sdw2 | /data/mirror/gpseg0

2 | 0 | m | p | n | d | 36000 | sdw1 | sdw1 | /data/primary/gpseg0

11 | 1 | p | m | n | u | 37001 | sdw2 | sdw2 | /data/mirror/gpseg1

3 | 1 | m | p | n | d | 36001 | sdw1 | sdw1 | /data/primary/gpseg1

13 | 3 | p | m | n | u | 37003 | sdw2 | sdw2 | /data/mirror/gpseg3

5 | 3 | m | p | n | d | 36003 | sdw1 | sdw1 | /data/primary/gpseg3

14 | 4 | p | m | n | u | 37000 | sdw1 | sdw1 | /data/mirror/gpseg4

6 | 4 | m | p | n | d | 36000 | sdw2 | sdw2 | /data/primary/gpseg4

15 | 5 | p | m | n | u | 37001 | sdw1 | sdw1 | /data/mirror/gpseg5

7 | 5 | m | p | n | d | 36001 | sdw2 | sdw2 | /data/primary/gpseg5

16 | 6 | p | m | n | u | 37002 | sdw1 | sdw1 | /data/mirror/gpseg6

8 | 6 | m | p | n | d | 36002 | sdw2 | sdw2 | /data/primary/gpseg6

17 | 7 | p | m | n | u | 37003 | sdw1 | sdw1 | /data/mirror/gpseg7

9 | 7 | m | p | n | d | 36003 | sdw2 | sdw2 | /data/primary/gpseg7

(18 rows)
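In the output above, every original primary is down (`status = d`) and every mirror is acting as primary (`role` differs from `preferred_role`). With many rows this is easier to spot with a filter. The sketch below assumes pipe-separated input in the column order shown above; `list_problem_segments` is a hypothetical helper name.

```shell
# Hypothetical helper: read pipe-separated rows in the order
# dbid|content|role|preferred_role|mode|status|port|hostname|address|datadir
# and print instances that are down or not running in their preferred role.
list_problem_segments() {
    awk -F'|' '
        $6 == "d" { printf "DOWN dbid=%s content=%s %s:%s\n", $1, $2, $8, $10; next }
        $3 != $4  { printf "SWAPPED dbid=%s content=%s %s:%s\n", $1, $2, $8, $10 }
    '
}

# Example against a live cluster (-A unaligned, -t tuples only):
#   psql -At -F'|' -c "SELECT dbid, content, role, preferred_role, mode, status, port, hostname, address, datadir FROM gp_segment_configuration ORDER BY dbid" | list_problem_segments
```

A healthy, balanced cluster should produce no output at all.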

边栏推荐

- 数据分片介绍

- [kuangbin] topic 15 digit DP

- POJ - 3616 Milking Time(DP+LIS)

- 调用华为游戏多媒体服务的创建引擎接口返回错误码1002,错误信息:the params is error

- Interpolation lookup (two methods)

- 2 - 3 arbre de recherche

- 快速集成认证服务-HarmonyOS平台

- Arm GIC (IV) GIC V3 register class analysis notes.

- Required String parameter ‘XXX‘ is not present

- MySQL partition explanation and operation statement

猜你喜欢

調用華為遊戲多媒體服務的創建引擎接口返回錯誤碼1002,錯誤信息:the params is error

Data type - integer (C language)

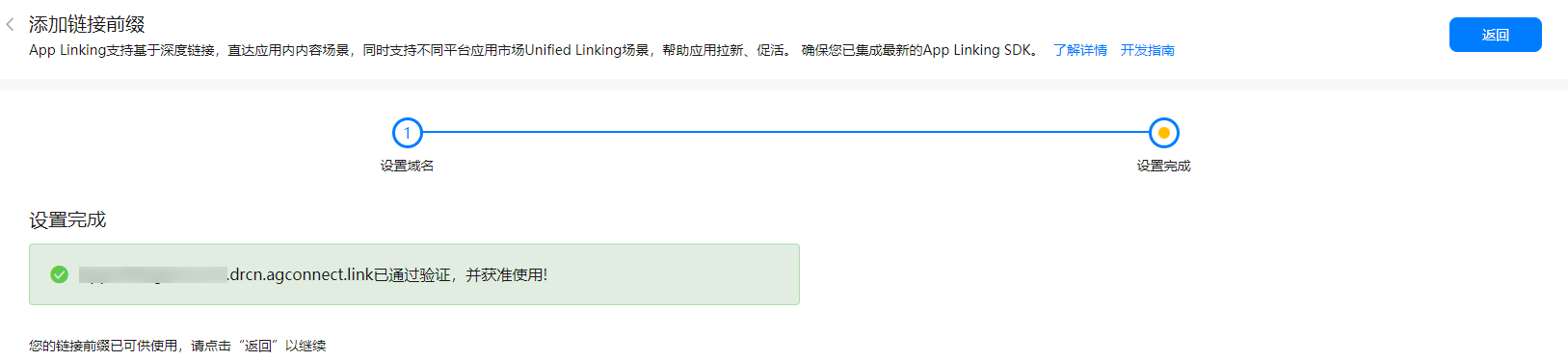

How to integrate app linking services in harmonyos applications

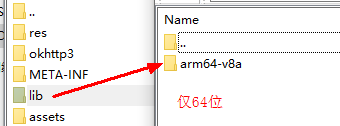

详解华为应用市场2022年逐步减少32位包体上架应用和策略

指针进阶,字符串函数

![[step on the pit] Nacos registration has been connected to localhost:8848, no available server](/img/ee/ab4d62745929acec2f5ba57155b3fa.png)

[step on the pit] Nacos registration has been connected to localhost:8848, no available server

Opencv learning note 4 - expansion / corrosion / open operation / close operation

Input of mathematical formula of obsidan

A method for quickly viewing pod logs under frequent tests (grep awk xargs kuberctl)

Train your dataset with swinunet

随机推荐

Greenplum6.x搭建_安装

【MySQL】数据库进阶之触发器内容详解

mysql分区讲解及操作语句

如何理解分布式架构和微服务架构呢

数据分片介绍

使用AGC重签名服务前后渠道号信息异常分析

Gson converts the entity class to JSON times declare multiple JSON fields named

Required String parameter ‘XXX‘ is not present

字符串操作

Compilation and linking of programs

[paper reading] icml2020: can autonomous vehicles identify, recover from, and adapt to distribution shifts?

快速集成认证服务-HarmonyOS平台

Rapid integration of authentication services - harmonyos platform

[wechat applet: cache operation]

GOLand idea intellij 无法输入汉字

登山小分队(dfs)

POJ - 3616 Milking Time(DP+LIS)

Opencv learning note 3 - image smoothing / denoising

grpc、oauth2、openssl、双向认证、单向认证等专栏文章目录

Database storage - table partition