
Pagination in Scrapy (handling page turning)

2022-07-06 01:07:00 【Keep a low profile】

```python
import scrapy
from bs4 import BeautifulSoup


class BookSpiderSpider(scrapy.Spider):
    name = 'book_spider'
    allowed_domains = ['17k.com']
    start_urls = ['https://www.17k.com/all/book/2_0_0_0_0_0_0_0_1.html']

    # start_requests is overridden on purpose; why is explained in the
    # "Option 2: brute-force pagination" comment inside parse() below.
    def start_requests(self):
        for url in self.start_urls:
            # Unlike the inherited default, these requests keep
            # dont_filter=False, so the duplicate filter records them.
            yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response, **kwargs):
        print(response.url)
        soup = BeautifulSoup(response.text, 'lxml')

        # Skip the first row of the table.
        trs = soup.find('div', attrs={'class': 'alltable'}).find('tbody').find_all('tr')[1:]
        for tr in trs:
            book_type = tr.find('td', attrs={'class': 'td2'}).find('a').text
            book_name = tr.find('td', attrs={'class': 'td3'}).find('a').text
            book_words = tr.find('td', attrs={'class': 'td5'}).text
            book_author = tr.find('td', attrs={'class': 'td6'}).find('a').text
            print(book_type, book_name, book_words, book_author)
            break  # print only the first book of each page to keep the output short

        # The same rows parsed with XPath instead of BeautifulSoup:
        # trs = response.xpath("//div[@class='alltable']/table/tbody/tr")[1:]
        # for tr in trs:
        #     book_type = tr.xpath("./td[2]/a/text()").extract_first()
        #     book_name = tr.xpath("./td[3]/span/a/text()").extract_first()
        #     book_words = tr.xpath("./td[5]/text()").extract_first()
        #     book_author = tr.xpath("./td[6]/a/text()").extract_first()
        #     print(book_type, book_name, book_words, book_author)

        # Option 1: linear pagination. Find the next-page URL and request it.
        # This is the simplest logic: the spider crawls one page after another.
        # next_page_url = soup.find('a', string='下一页')['href']  # '下一页' = "next page"
        # if 'javascript' not in next_page_url:
        #     yield scrapy.Request(
        #         url=response.urljoin(next_page_url),
        #         callback=self.parse,
        #     )

        # Option 2: brute-force pagination.
        # Collect every URL in the pager and simply send the requests. Each request
        # goes through the engine to the scheduler (a set plus a queue), which
        # removes duplicates, then on to the downloader, and the response comes
        # back to the spider. The start URL, however, would be crawled twice: the
        # start_requests inherited from scrapy.Spider builds its requests with
        # dont_filter=True, so the filter never records them. Overriding
        # start_requests (above) so the start requests are filtered by default
        # fixes the duplicate start URL.
        a_list = soup.find('div', attrs={'class': 'page'}).find_all('a')
        for a in a_list:
            href = a.get('href', '')
            # Skip missing hrefs and "javascript:" links (e.g. disabled buttons).
            if href and 'javascript' not in href:
                yield scrapy.Request(url=response.urljoin(href), callback=self.parse)
```
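To see why the start URL gets fetched twice under brute-force pagination, here is a small toy model in plain Python. It is not Scrapy code: a set of URLs stands in for Scrapy's request-fingerprint duplicate filter, a deque stands in for the scheduler queue, and `fake_links` is a hypothetical pager in which every page links to pages 1 to 5 plus one `javascript:` link. The only rule taken from Scrapy is that requests with `dont_filter=True` bypass the filter and are never recorded in it.

```python
from collections import deque
from urllib.parse import urljoin

# Toy model only: real Scrapy compares request fingerprints, not raw URLs.
BASE = "https://www.17k.com/all/book/"


def page_url(n):
    """Absolute URL of list page n, following the start URL's naming pattern."""
    return urljoin(BASE, "2_0_0_0_0_0_0_0_%d.html" % n)


def fake_links(url):
    """Hypothetical pager: every page links to pages 1-5 plus a javascript: link."""
    return ["javascript:void(0)"] + ["2_0_0_0_0_0_0_0_%d.html" % n for n in range(1, 6)]


def crawl(start_urls, start_dont_filter):
    seen = set()     # stands in for the duplicate filter's fingerprint set
    queue = deque()  # stands in for the scheduler queue
    fetched = []     # every URL "downloaded", in order

    def enqueue(url, dont_filter=False):
        # Same rule as the scheduler: only requests with dont_filter=False
        # are checked against, and recorded in, the seen set.
        if not dont_filter:
            if url in seen:
                return
            seen.add(url)
        queue.append(url)

    for url in start_urls:
        enqueue(url, dont_filter=start_dont_filter)

    while queue:
        url = queue.popleft()
        fetched.append(url)
        # Brute-force pagination: follow every non-javascript pager link.
        for href in fake_links(url):
            if "javascript" not in href:
                enqueue(urljoin(url, href))
    return fetched


start = page_url(1)
print(len(crawl([start], start_dont_filter=True)))   # like the inherited start_requests
print(len(crawl([start], start_dont_filter=False)))  # like the overridden start_requests
```

Running it prints 6 and then 5: when the start request skips the filter, page 1 is never recorded, so the pager's link back to page 1 is downloaded a second time; once the start request goes through the filter, each page is downloaded exactly once.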

边栏推荐

- Installation and use of esxi

- MIT博士论文 | 使用神经符号学习的鲁棒可靠智能系统

- Cloud guide DNS, knowledge popularization and classroom notes

- MCU realizes OTA online upgrade process through UART

- Construction plan of Zhuhai food physical and chemical testing laboratory

- A preliminary study of geojson

- C language programming (Chapter 6 functions)

- 1791. Find the central node of the star diagram / 1790 Can two strings be equal by performing string exchange only once

- Idea remotely submits spark tasks to the yarn cluster

- Intensive learning weekly, issue 52: depth cuprl, distspectrl & double deep q-network

猜你喜欢

Differences between standard library functions and operators

![[groovy] XML serialization (use markupbuilder to generate XML data | create sub tags under tag closures | use markupbuilderhelper to add XML comments)](/img/d4/4a33e7f077db4d135c8f38d4af57fa.jpg)

[groovy] XML serialization (use markupbuilder to generate XML data | create sub tags under tag closures | use markupbuilderhelper to add XML comments)

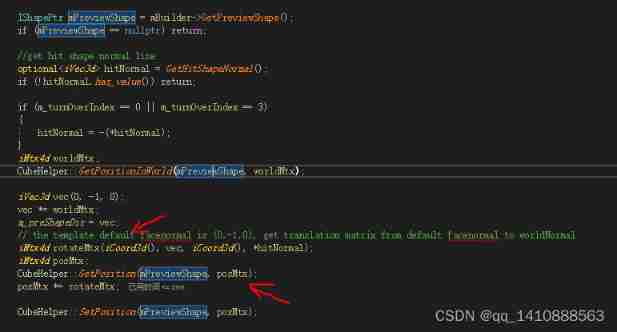

Cve-2017-11882 reappearance

The third season of ape table school is about to launch, opening a new vision for developers under the wave of going to sea

Four dimensional matrix, flip (including mirror image), rotation, world coordinates and local coordinates

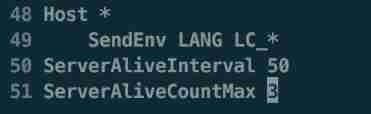

SSH login is stuck and disconnected

Dedecms plug-in free SEO plug-in summary

《强化学习周刊》第52期:Depth-CUPRL、DistSPECTRL & Double Deep Q-Network

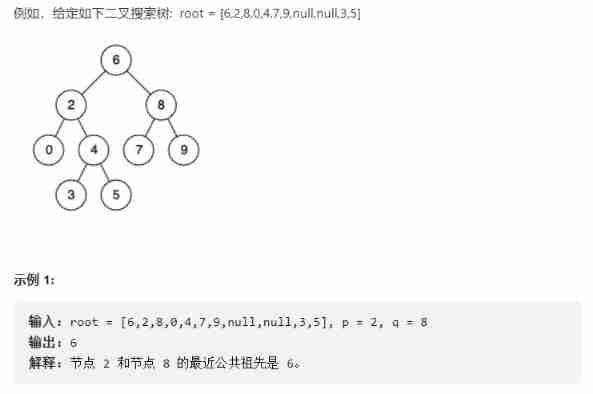

Finding the nearest common ancestor of binary search tree by recursion

For a deadline, the IT fellow graduated from Tsinghua suddenly died on the toilet

随机推荐

【第30天】给定一个整数 n ,求它的因数之和

For a deadline, the IT fellow graduated from Tsinghua suddenly died on the toilet

[groovy] JSON serialization (convert class objects to JSON strings | convert using jsonbuilder | convert using jsonoutput | format JSON strings for output)

How to extract MP3 audio from MP4 video files?

FFT learning notes (I think it is detailed)

Dedecms plug-in free SEO plug-in summary

Vulhub vulnerability recurrence 75_ XStream

The population logic of the request to read product data on the sap Spartacus home page

[groovy] compile time metaprogramming (compile time method interception | find the method to be intercepted in the myasttransformation visit method)

SAP Spartacus home 页面读取 product 数据的请求的 population 逻辑

I'm interested in watching Tiktok live beyond concert

详细页返回列表保留原来滚动条所在位置

程序员成长第九篇:真实项目中的注意事项

[groovy] XML serialization (use markupbuilder to generate XML data | create sub tags under tag closures | use markupbuilderhelper to add XML comments)

Zhuhai's waste gas treatment scheme was exposed

Live broadcast system code, custom soft keyboard style: three kinds of switching: letters, numbers and punctuation

What is the most suitable book for programmers to engage in open source?

ubantu 查看cudnn和cuda的版本

毕设-基于SSM高校学生社团管理系统

Spark SQL null value, Nan judgment and processing