
[Flink] CDH/CDP Flink on YARN Log Configuration

2022-07-06 09:15:00 【kiraraLou】

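Preface

Because most Flink applications are long-running jobs, the jobmanager.log and taskmanager.log files can easily grow to several GB, which can cause problems when you view them in the Flink Dashboard. This article describes how to enable rolling logging for jobmanager.log and taskmanager.log.

The configuration described here is for a CDH/CDP environment, and it applies to both Flink clusters and Flink on YARN.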

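Configuring Log4j

Flink uses Log4j as its default logging framework. The configuration files are as follows:

- log4j-cli.properties: used by the Flink command-line client (for example, flink run)
- log4j-yarn-session.properties: used when the Flink command line starts a YARN session (yarn-session.sh)
- log4j.properties: JobManager / TaskManager logs (both standalone and YARN)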

Cause

By default, the CSA Flink log4j.properties does not configure a rolling file appender.

How to configure

1. Modify the flink-conf/log4j.properties parameter

Go to Cloudera Manager -> Flink -> Configuration -> Flink Client Advanced Configuration Snippet (Safety Valve) for flink-conf/log4j.properties

2. Insert the following content:

monitorInterval=30

# This affects logging for both user code and Flink
rootLogger.level = INFO
rootLogger.appenderRef.file.ref = MainAppender

# Uncomment this if you want to _only_ change Flink's logging
#logger.flink.name = org.apache.flink
#logger.flink.level = INFO

# The following lines keep the log level of common libraries/connectors on
# log level INFO. The root logger does not override this. You have to manually
# change the log levels here.
logger.akka.name = akka
logger.akka.level = INFO
logger.kafka.name = org.apache.kafka
logger.kafka.level = INFO
logger.hadoop.name = org.apache.hadoop
logger.hadoop.level = INFO
logger.zookeeper.name = org.apache.zookeeper
logger.zookeeper.level = INFO
logger.shaded_zookeeper.name = org.apache.flink.shaded.zookeeper3
logger.shaded_zookeeper.level = INFO

# Log all infos in the given file
appender.main.name = MainAppender
appender.main.type = RollingFile
appender.main.append = true
appender.main.fileName = ${sys:log.file}
appender.main.filePattern = ${sys:log.file}.%i
appender.main.layout.type = PatternLayout
appender.main.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n
appender.main.policies.type = Policies
appender.main.policies.size.type = SizeBasedTriggeringPolicy
appender.main.policies.size.size = 100MB
appender.main.policies.startup.type = OnStartupTriggeringPolicy
appender.main.strategy.type = DefaultRolloverStrategy
appender.main.strategy.max = ${env:MAX_LOG_FILE_NUMBER:-10}

# Suppress the irrelevant (wrong) warnings from the Netty channel handler
logger.netty.name = org.jboss.netty.channel.DefaultChannelPipeline
logger.netty.level = OFF
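As a rough sizing aid, the following sketch estimates how much disk space one JobManager or TaskManager log can occupy under the appender settings above. It assumes each rolled file is close to the 100 MB trigger size and that `MAX_LOG_FILE_NUMBER` falls back to 10 when unset. The class `RollingLogSizing` and its methods are hypothetical names used only for illustration; they are not part of Flink or Log4j.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper (not a Flink or Log4j API): mirrors the appender settings above.
final class RollingLogSizing {

    // Mirrors ${env:MAX_LOG_FILE_NUMBER:-10}: use the environment value if present, else 10.
    static int maxRolledFiles(Map<String, String> env) {
        return Integer.parseInt(env.getOrDefault("MAX_LOG_FILE_NUMBER", "10"));
    }

    // File names that filePattern = ${sys:log.file}.%i yields for indexes 1..max.
    static List<String> rolledFileNames(String logFile, int max) {
        List<String> names = new ArrayList<>();
        for (int i = 1; i <= max; i++) {
            names.add(logFile + "." + i);
        }
        return names;
    }

    // Approximate upper bound in MB: the active file plus all rolled files,
    // each assumed to be no larger than the size trigger.
    static long worstCaseMb(int max, long triggerMb) {
        return (max + 1L) * triggerMb;
    }

    public static void main(String[] args) {
        int max = maxRolledFiles(System.getenv());
        System.out.println(rolledFileNames("taskmanager.log", max));
        System.out.println(worstCaseMb(max, 100) + " MB");
    }
}
```

With the defaults this comes to roughly 1100 MB per process. Actual usage may differ somewhat, because a file can slightly exceed the trigger size before it rolls and the startup policy can roll files that are still small.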

3. Deploy the client configuration

Save the configuration, then redeploy the Flink client configuration via CM -> Flink -> Actions -> Deploy Client Configuration.

Notes

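Note 1: With the settings above, jobmanager.log and taskmanager.log roll over every 100 MB (and at each process start), and at most 10 rolled files are kept by default (the value of MAX_LOG_FILE_NUMBER). The configuration as shown does not include age-based or total-size-based deletion (for example, removing logs older than 7 days or once the total exceeds 5000 MB); that would require an additional Delete action in the rollover strategy.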

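Note 2: The log4j.properties above does not control jobmanager.err/out or taskmanager.err/out. If your application explicitly prints results to stdout/stderr, it may fill up the file system after running for a long time. We recommend using the Log4j logging framework to record messages and to print results.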
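If some code in the job still writes to stdout, one possible way to bring that output under the rolling appender is to forward each printed line to a logger. The sketch below is only an illustration under stated assumptions: `LineForwardingStream` and `toSink` are hypothetical names, and the sink is a plain `Consumer<String>` so that the example runs with the JDK alone. In a real job the sink would typically be an SLF4J method reference such as `log::info`.

```java
import java.io.ByteArrayOutputStream;
import java.io.OutputStream;
import java.io.PrintStream;
import java.io.UnsupportedEncodingException;
import java.nio.charset.StandardCharsets;
import java.util.function.Consumer;

// Hypothetical helper: hands every complete line written to the stream to a line consumer.
final class LineForwardingStream extends OutputStream {
    private final ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    private final Consumer<String> sink;

    LineForwardingStream(Consumer<String> sink) {
        this.sink = sink;
    }

    @Override
    public synchronized void write(int b) {
        if (b == '\n') {
            emitLine();           // a newline completes the current line
        } else if (b != '\r') {
            buffer.write(b);      // collect bytes, ignoring carriage returns
        }
    }

    @Override
    public synchronized void close() {
        if (buffer.size() > 0) {
            emitLine();           // forward a trailing line that had no newline
        }
    }

    private void emitLine() {
        String line = new String(buffer.toByteArray(), StandardCharsets.UTF_8);
        buffer.reset();
        sink.accept(line);
    }

    // Wraps the forwarding stream in a PrintStream suitable for System.setOut.
    static PrintStream toSink(Consumer<String> sink) {
        try {
            return new PrintStream(new LineForwardingStream(sink), true, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always supported
        }
    }

    public static void main(String[] args) {
        PrintStream original = System.out;
        // Stand-in sink for the demo; a real job would pass something like log::info.
        System.setOut(toSink(line -> original.println("[captured] " + line)));
        System.out.println("result = 42");
        System.setOut(original);
    }
}
```

This approach only makes sense when the logger writes to a file appender, as in the configuration above; with a console appender the forwarded output could loop back into stdout.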

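Note 3: Although this article describes the change for a CDP environment, we ran into a bug: CDP ships with its own default configuration entries, so the settings conflict even after the modification above. In the end, we edited the /etc/flink/conf/log4j.properties file directly.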

IDEA

Here is also a brief look at the logging configuration for running Flink locally in IDEA.

pom.xml

<!-- Note: Log4j 2 releases before 2.17.1 are affected by known vulnerabilities
     (for example CVE-2021-44228); consider using a newer 2.x release. -->
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-api</artifactId>
    <version>1.7.25</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-slf4j-impl</artifactId>
    <version>2.9.1</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-api</artifactId>
    <version>2.9.1</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-core</artifactId>
    <version>2.9.1</version>
</dependency>

resources

Edit the log4j2.xml file in the resources directory:

<?xml version="1.0" encoding="UTF-8"?>
<configuration monitorInterval="5">
    <Properties>
        <property name="LOG_PATTERN" value="%date{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n" />
        <property name="LOG_LEVEL" value="INFO" />
    </Properties>
    <appenders>
        <console name="Console" target="SYSTEM_OUT">
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <ThresholdFilter level="${LOG_LEVEL}" onMatch="ACCEPT" onMismatch="DENY"/>
        </console>
    </appenders>
    <loggers>
        <root level="${LOG_LEVEL}">
            <appender-ref ref="Console"/>
        </root>
    </loggers>
</configuration>

Demo

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class Main {
    // Create the Logger object
    private static final Logger log = LoggerFactory.getLogger(Main.class);

    public static void main(String[] args) throws Exception {
        // Write a log message
        log.info("-----------------> start");
    }
}

References

- https://github.com/apache/flink/blob/master/flink-dist/src/main/flink-bin/conf/log4j.properties

- https://my.cloudera.com/knowledge/How-to-configure-CSA-flink-to-rotate-and-archive-the?id=333860

- https://nightlies.apache.org/flink/flink-docs-master/zh/docs/deployment/advanced/logging/

- https://cs101.blog/2018/01/03/logging-configuration-in-flink/

边栏推荐

- Codeforces Round #753 (Div. 3)

- Request object and response object analysis

- Solution of deleting path variable by mistake

- [蓝桥杯2017初赛]包子凑数

- Unable to call numpy in pycharm, with an error modulenotfounderror: no module named 'numpy‘

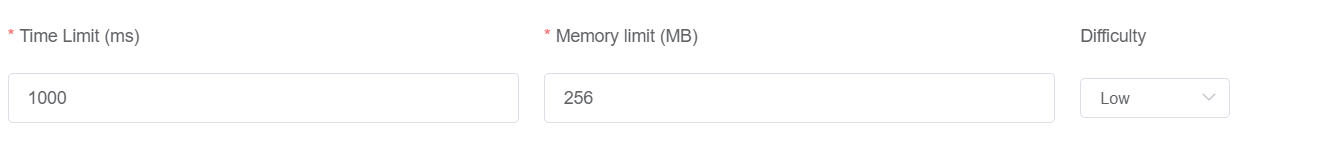

- 保姆级出题教程

- 02-项目实战之后台员工信息管理

- Why can't I use the @test annotation after introducing JUnit

- jS数组+数组方法重构

- 01 project demand analysis (ordering system)

猜你喜欢

Face recognition_ recognition

Machine learning -- census data analysis

Kept VRRP script, preemptive delay, VIP unicast details

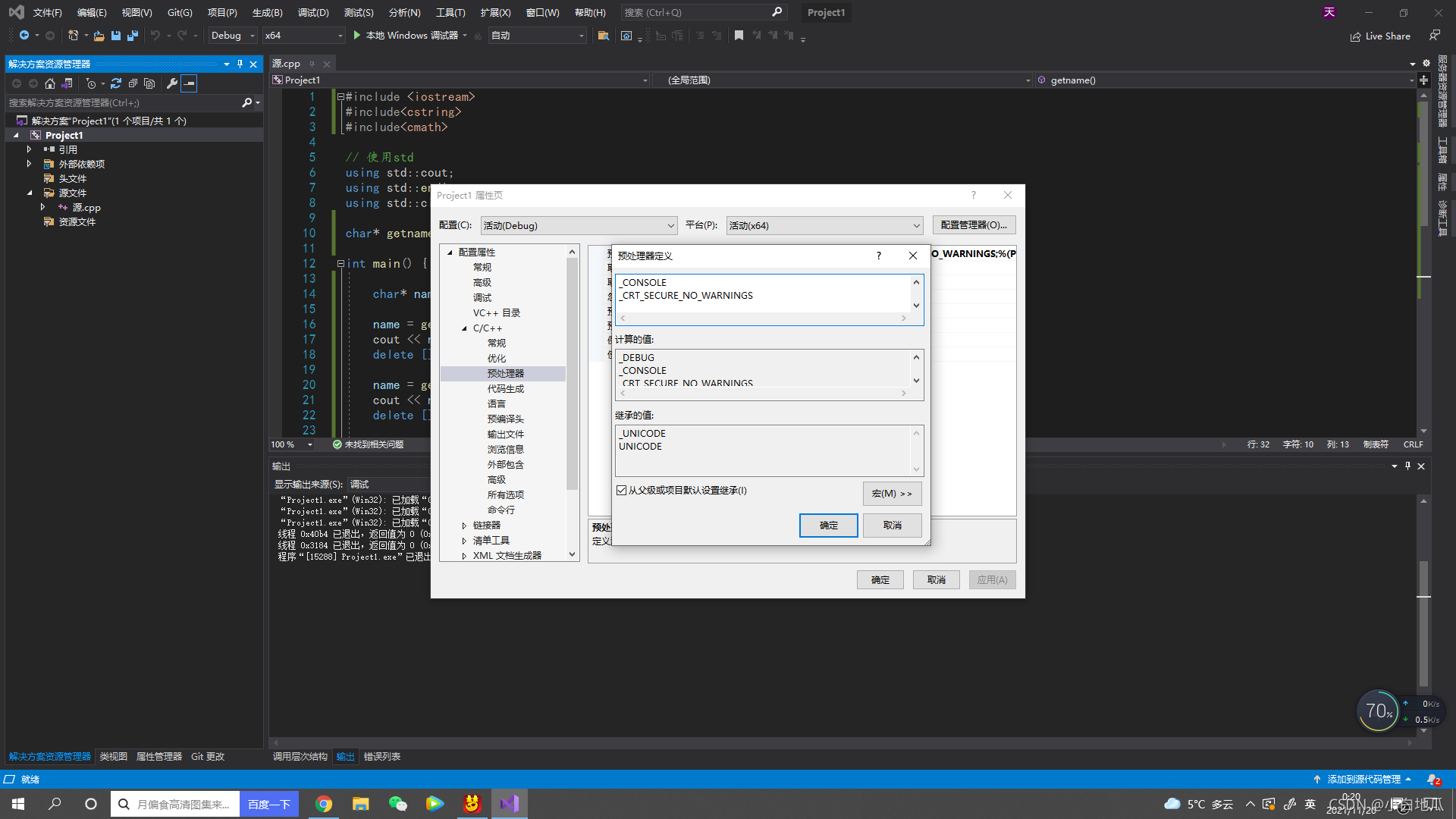

error C4996: ‘strcpy‘: This function or variable may be unsafe. Consider using strcpy_ s instead

Learn winpwn (2) -- GS protection from scratch

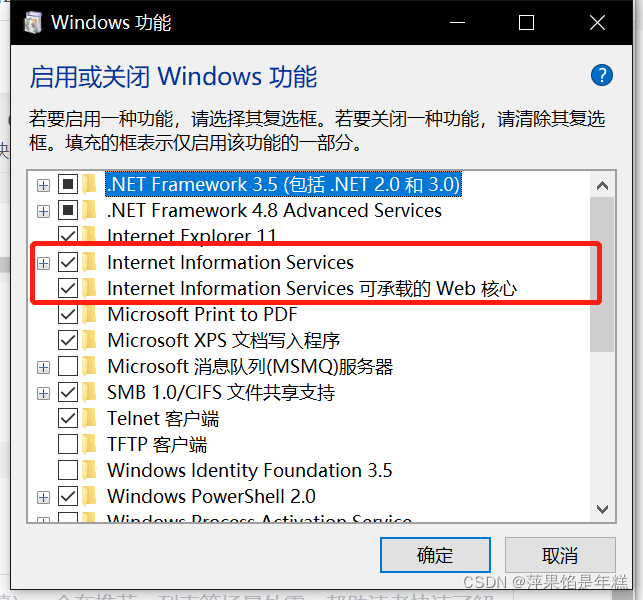

Learning question 1:127.0.0.1 refused our visit

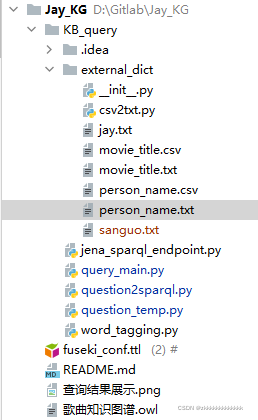

基于apache-jena的知识问答

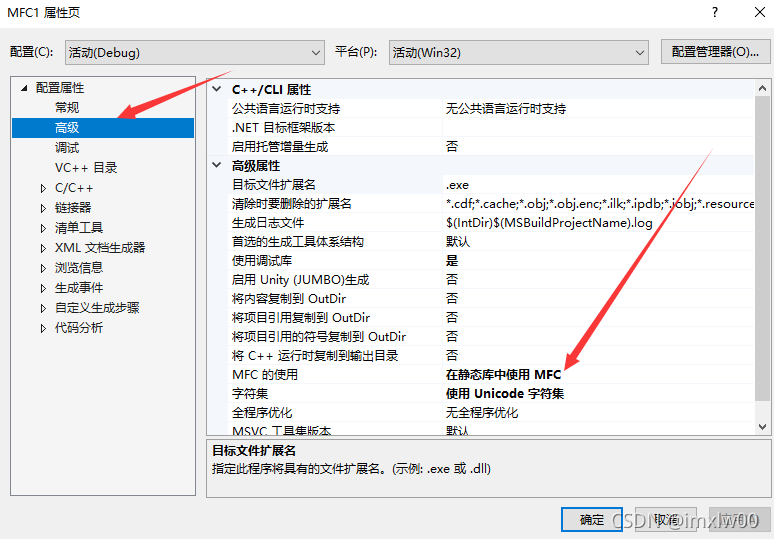

Vs2019 first MFC Application

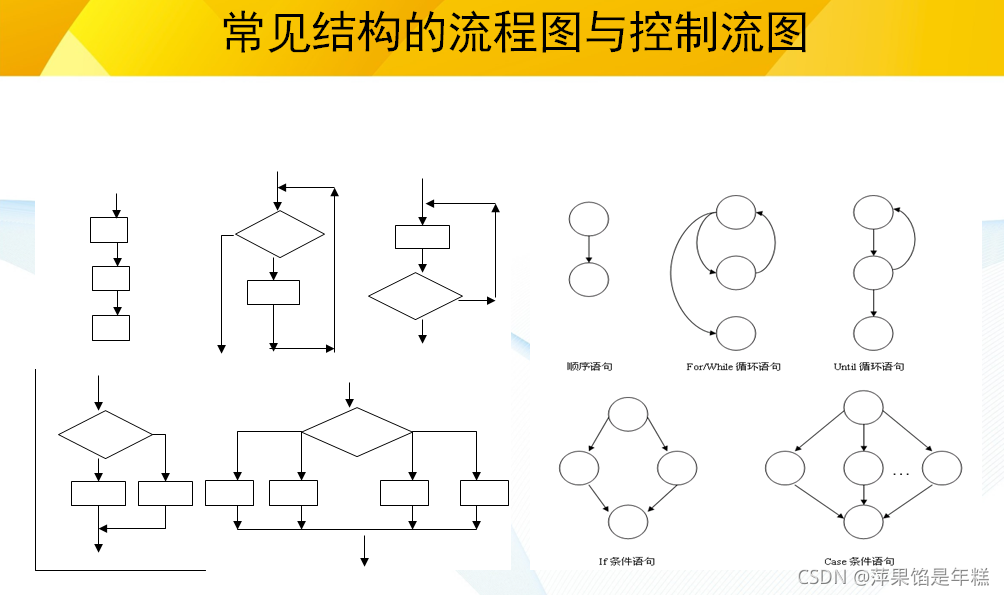

Software testing and quality learning notes 3 -- white box testing

Nanny level problem setting tutorial

随机推荐

Learning question 1:127.0.0.1 refused our visit

[蓝桥杯2017初赛]包子凑数

QT creator support platform

图像识别问题 — pytesseract.TesseractNotFoundError: tesseract is not installed or it‘s not in your path

解决安装Failed building wheel for pillow

AcWing 1298.曹冲养猪 题解

Summary of numpy installation problems

PHP - whether the setting error displays -php xxx When PHP executes, there is no code exception prompt

What does usart1 mean

L2-004 is this a binary search tree? (25 points)

Are you monitored by the company for sending resumes and logging in to job search websites? Deeply convinced that the product of "behavior awareness system ba" has not been retrieved on the official w

[蓝桥杯2017初赛]方格分割

Database advanced learning notes -- SQL statement

[蓝桥杯2020初赛] 平面切分

{一周总结}带你走进js知识的海洋

误删Path变量解决

Deoldify项目问题——OMP:Error#15:Initializing libiomp5md.dll,but found libiomp5md.dll already initialized.

JDBC principle

Why can't STM32 download the program

Request object and response object analysis