A Complete Guide to Processing Spatial Data with PySpark Operators (5): How to Use Spatial Operation Interfaces in PySpark

2022-07-06 17:37:00 【51CTO】

Spark is a distributed computing framework, and PySpark is really Python calling the underlying Spark framework. So how are these layers invoked? The previous article covered spatial operators implemented in Python with the GDAL package. What does the whole call chain look like? Let's explore it today.

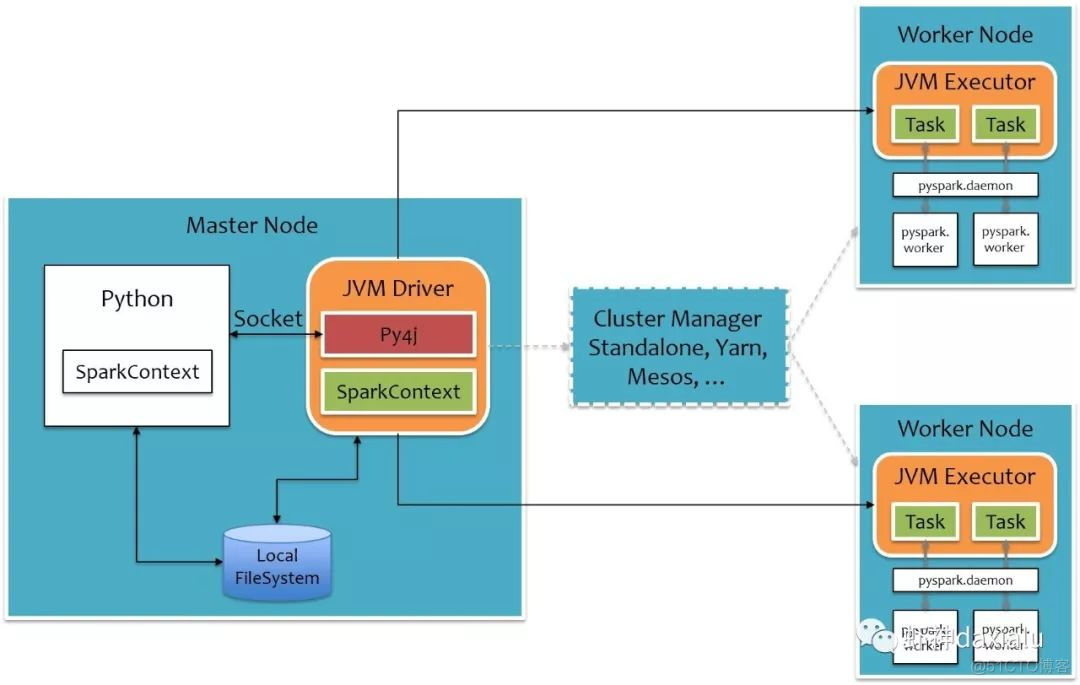

As the first article in this series explained, running PySpark requires the Py4J package, which lets Python call objects inside the Java virtual machine. It works like this:

The Python program sends the task to the Java virtual machine over a socket. The JVM parses it through Py4J and then calls Spark's Worker compute nodes, where the task is restored to its Python implementation. When execution finishes, the results travel back along the same path in reverse.

For the details, see the following article; I won't repeat them here:

http://sharkdtu.com/posts/pyspark-internal.html

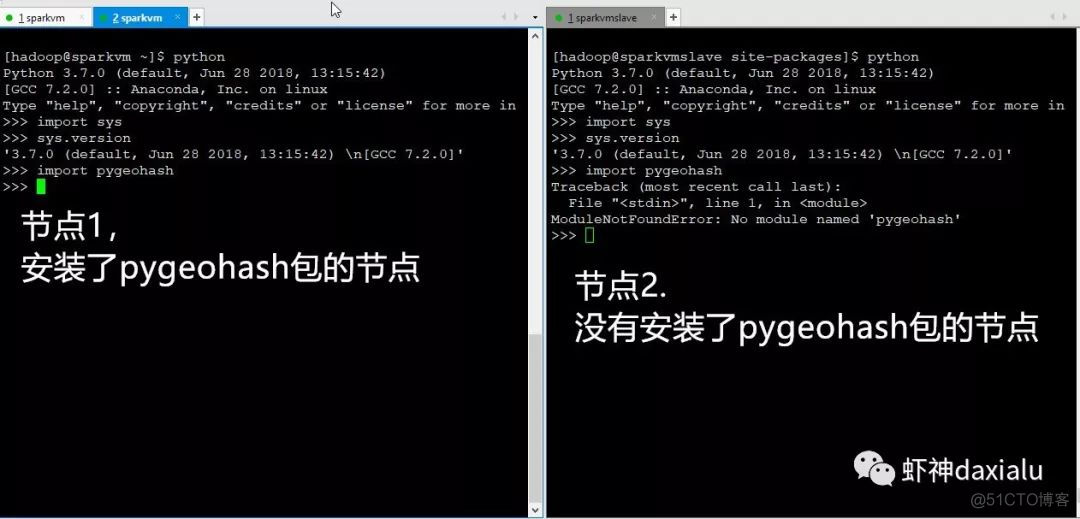

As you can see, what you write in Python is ultimately executed on the Worker side by Python code and packages as well. Let's run an experiment:

Use Python's sys package to check the version of the running Python, and the socket package to check the node's machine name. Both packages are specific to Python, so if PySpark only ran Java, this could not work on the different nodes.

I have two machines here, named sparkvm.com and sparkvmslave.com. sparkvm.com is master + worker, and sparkvmslave.com is only a worker.

The final run shows that different results are returned on different nodes.
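A minimal sketch of this kind of experiment follows. It is not the author's original script: the function names `node_info` and `run_on_cluster` and the partition count are assumptions chosen for illustration.

```python
import socket
import sys


def node_info(_):
    """Return (hostname, Python version) of the process running this function."""
    return socket.gethostname(), sys.version.split()[0]


def run_on_cluster(num_partitions=4):
    """Run node_info on the workers; requires a working PySpark installation."""
    # Imported here so that node_info can also be tried without Spark.
    from pyspark import SparkContext

    sc = SparkContext.getOrCreate()
    # One element per partition, so the tasks can be spread across workers.
    rdd = sc.parallelize(range(num_partitions), num_partitions)
    # Each task reports where it ran; duplicates are collapsed on the driver.
    return sorted(set(rdd.map(node_info).collect()))
```

Calling `run_on_cluster()` from a PySpark session should return one entry per distinct worker host that received a task. How tasks are distributed is up to the scheduler, so a small job may not reach every node.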

The experiment shows that on every compute node, what ultimately runs is the Python algorithm package. So how can a spatial analysis algorithm be used on the different nodes?

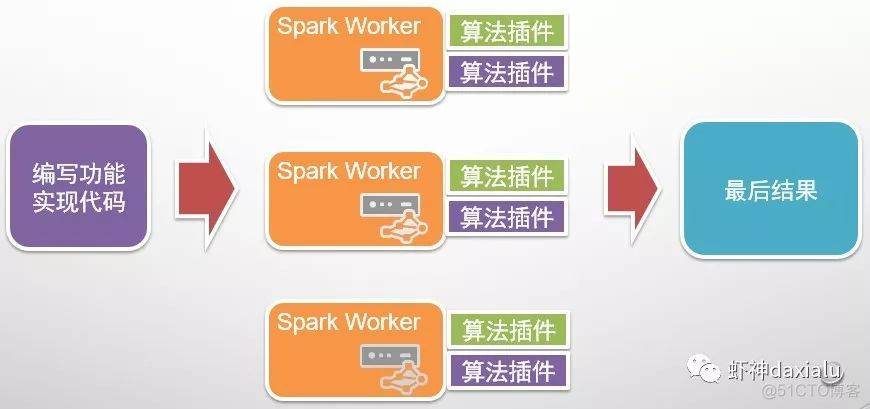

On Spark, this is handled by treating algorithms as plug-ins:

As long as the same Python algorithm package is installed on every node, it works. The key point is to configure the system Python, because PySpark calls the system Python by default.
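One way to verify this requirement is to ask each worker which interpreter it runs and whether a given package can be imported there. The sketch below is an illustration under stated assumptions: `probe_package` and `probe_workers` are hypothetical helper names, and `python_path` is whatever interpreter path applies to your cluster. `PYSPARK_PYTHON` is the environment variable Spark reads to choose the Python executable.

```python
import importlib.util
import os
import socket
import sys


def probe_package(package_name):
    """Return (hostname, interpreter path, importable?) for a top-level package."""
    found = importlib.util.find_spec(package_name) is not None
    return socket.gethostname(), sys.executable, found


def probe_workers(package_name, python_path=None, num_partitions=4):
    """Run probe_package on the workers; requires a working PySpark installation."""
    from pyspark import SparkContext

    if python_path is not None:
        # Must be set before the SparkContext is created; it has no effect
        # on a context that already exists.
        os.environ["PYSPARK_PYTHON"] = python_path

    sc = SparkContext.getOrCreate()
    rdd = sc.parallelize(range(num_partitions), num_partitions)
    return sorted(set(rdd.map(lambda _: probe_package(package_name)).collect()))
```

For example, `probe_workers("pygeohash")` lists, for each host that ran a task, the interpreter in use and whether the package was found there.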

Let's do another experiment :

Then run an example in PySpark:

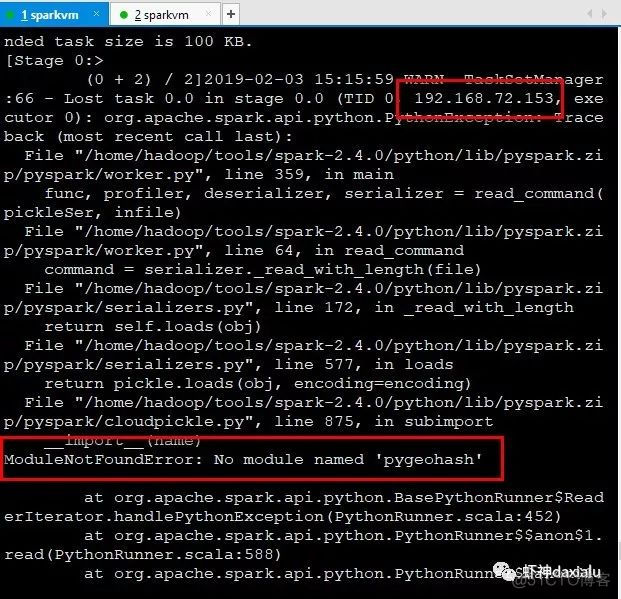

There are two nodes, so why did everything execute on one of them? Take a look at the debug log:

On node 153, an exception was thrown saying that the pygeohash package could not be found.

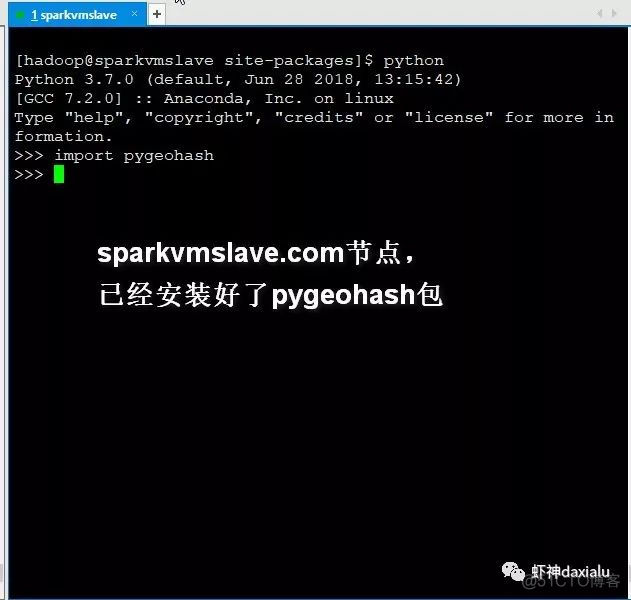

Next, I install the pygeohash package on node 153:

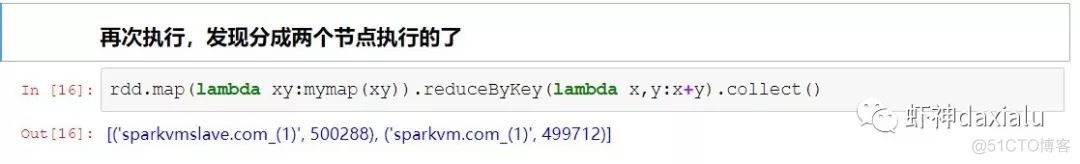

Then run the same job again:

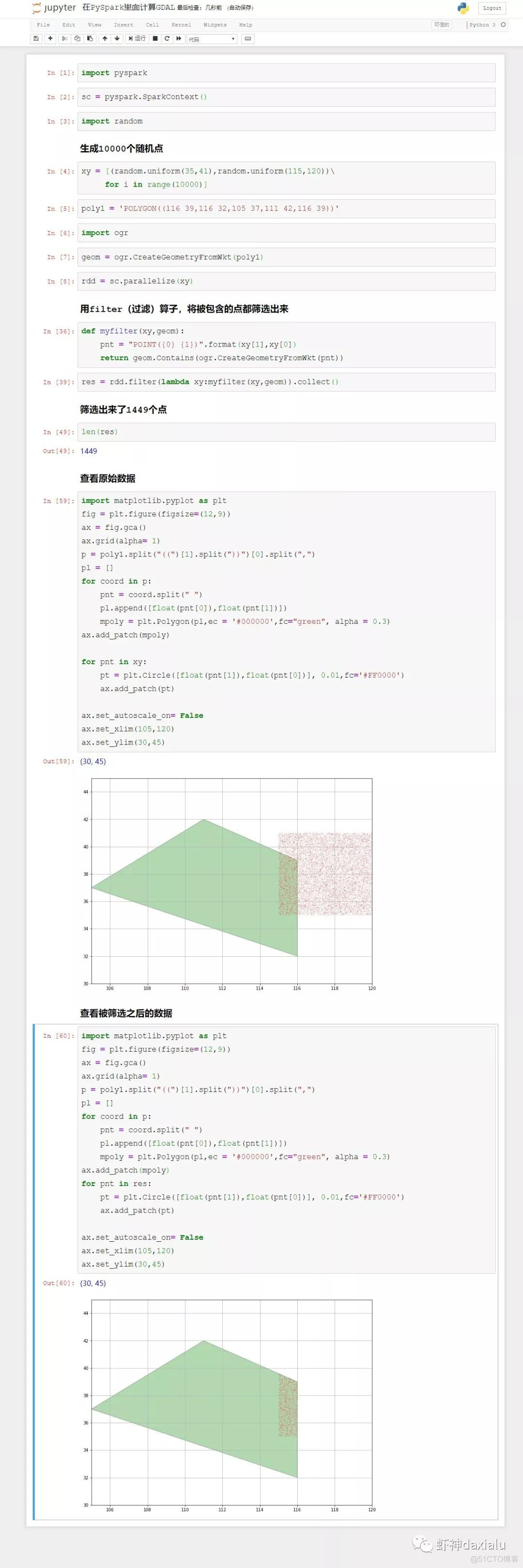
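For reference, a job of this kind could look like the sketch below. It is not the author's code: `encode_partition`, `run_geohash_job`, the sample coordinates, and the precision are assumptions for illustration. Unlike the run described above, this version reports a missing package as `None` instead of raising, which makes it easy to see which node lacks pygeohash.

```python
import socket


def encode_partition(points, precision=8):
    """Geohash the (lat, lon) pairs of one partition, tagged with the host name."""
    host = socket.gethostname()
    try:
        import pygeohash  # must be installed on every worker node
    except ImportError:
        # Report the missing package instead of failing the whole task.
        for lat, lon in points:
            yield host, (lat, lon), None
        return
    for lat, lon in points:
        yield host, (lat, lon), pygeohash.encode(lat, lon, precision=precision)


def run_geohash_job(points, num_partitions=2):
    """Run encode_partition on the workers; requires a working PySpark installation."""
    from pyspark import SparkContext

    sc = SparkContext.getOrCreate()
    return sc.parallelize(points, num_partitions).mapPartitions(encode_partition).collect()
```

Calling `run_geohash_job([(39.9, 116.4), (31.2, 121.5)])` with these hypothetical coordinates returns one `(host, point, geohash)` tuple per input point.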

Finally, an example that uses GDAL's spatial algorithm interface:

To be continued.

The source code can be downloaded from my Gitee or GitHub:

github: https://github.com/allenlu2008/PySparkDemo

gitee: https://gitee.com/godxia/PySparkDemo

边栏推荐

- Solrcloud related commands

- JVM 垃圾回收器之Garbage First

- C#版Selenium操作Chrome全屏模式显示(F11)

- [VNCTF 2022]ezmath wp

- 03 products and promotion developed by individuals - plan service configurator v3.0

- List集合数据移除(List.subList.clear)

- Integrated development management platform

- [VNCTF 2022]ezmath wp

- MySQL advanced (index, view, stored procedure, function, password modification)

- Flink 解析(四):恢复机制

猜你喜欢

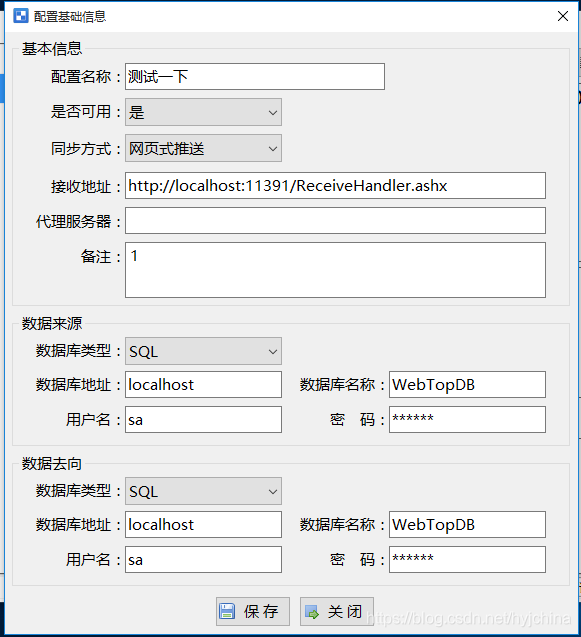

04个人研发的产品及推广-数据推送工具

Vscode matches and replaces the brackets

分布式(一致性协议)之领导人选举( DotNext.Net.Cluster 实现Raft 选举 )

02个人研发的产品及推广-短信平台

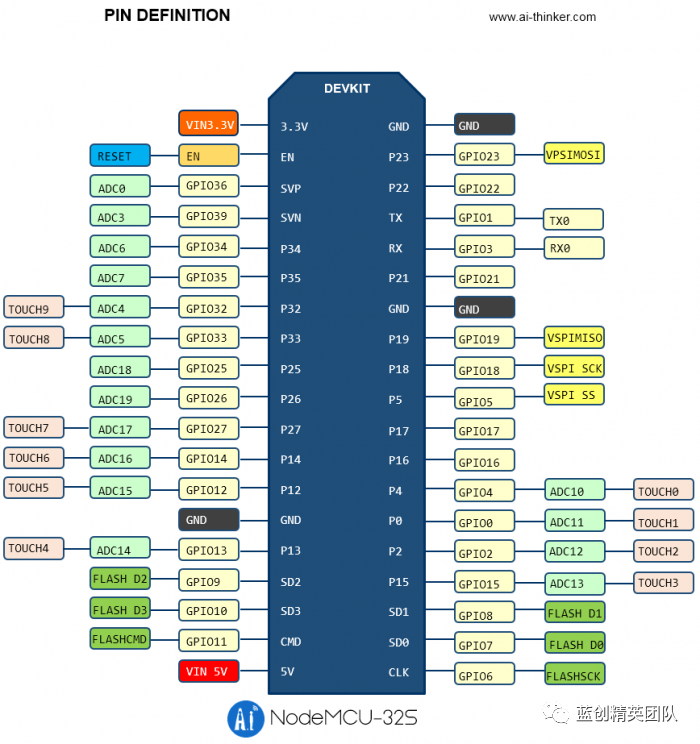

C# NanoFramework 点灯和按键 之 ESP32

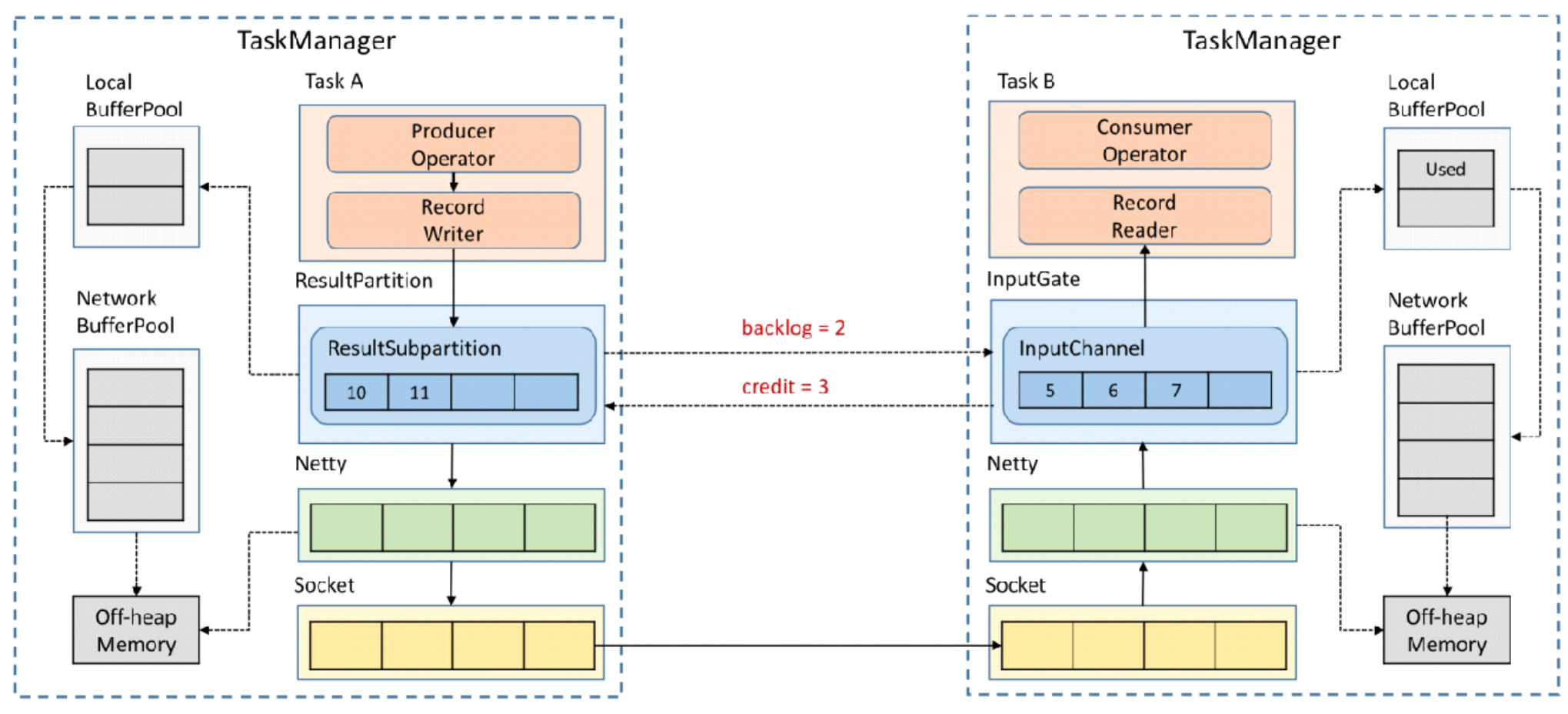

Flink analysis (II): analysis of backpressure mechanism

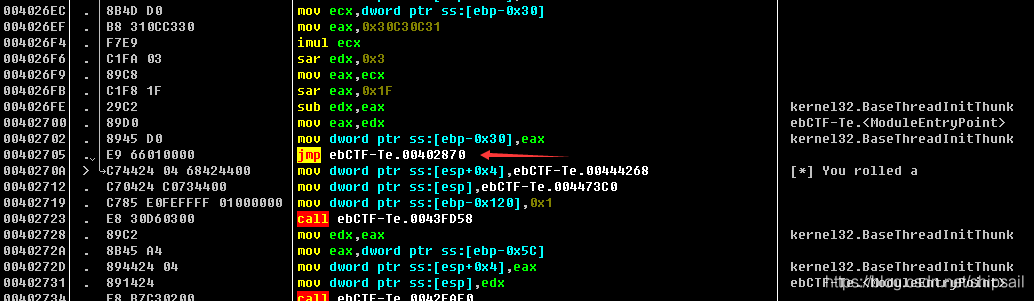

CTF逆向入门题——掷骰子

Akamai anti confusion

PySpark算子处理空间数据全解析(5): 如何在PySpark里面使用空间运算接口

复盘网鼎杯Re-Signal Writeup

随机推荐

Garbage first of JVM garbage collector

网络分层概念及基本知识

05 personal R & D products and promotion - data synchronization tool

Connect to LAN MySQL

06 products and promotion developed by individuals - code statistical tools

Flink analysis (I): basic concept analysis

The NTFS format converter (convert.exe) is missing from the current system

远程代码执行渗透测试——B模块测试

Integrated development management platform

06个人研发的产品及推广-代码统计工具

Uipath browser performs actions in the new tab

Learn the wisdom of investment Masters

The most complete tcpdump and Wireshark packet capturing practice in the whole network

当前系统缺少NTFS格式转换器(convert.exe)

Start job: operation returned an invalid status code 'badrequst' or 'forbidden‘

Vscode replaces commas, or specific characters with newlines

MySQL Advanced (index, view, stored procedures, functions, Change password)

JVM 垃圾回收器之Garbage First

Application service configurator (regular, database backup, file backup, remote backup)

Flink 解析(一):基础概念解析