
Oracle advanced (VI) Oracle expdp/impdp details

2022-07-07 21:57:00 【InfoQ】

1. Oracle Data Pump

Data Pump (expdp/impdp), available since Oracle 10g, offers the following:

- Parallel processing of import and export tasks.

- Pausing and restarting import and export tasks.

- Exporting or importing objects in a remote database through a Database Link.

- Automatically changing the owner, data file, or tablespace of objects during import through the Remap_schema, Remap_datafile, and Remap_tablespace parameters.

- Very fine-grained object control for import and export. With the Include and Exclude parameters, you can specify whether an individual object is included or excluded.

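2. What is a Directory object?

A Directory object names a directory on the database server, and Data Pump (Oracle 10g and later) reads and writes its files through it. Unless the network_link parameter is used, the files generated by expdp are all on the server, in the location the Directory object designates.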

2.1 How to invoke Data Pump

- Command line mode: the simplest way to call, but the parameters that can be written are limited. Using a parameter file is recommended.

- Parameter file: the most commonly used method. Write a parameter file first, specifying the parameters required for the export, and then call it:

expdp user/pwd parfile=xxx.par

Other parameters can be given on the command line together with parfile, before or after it:

expdp user/pwd parfile=xxx.par logfile=a.log

expdp user/pwd logfile=a.log parfile=xxx.par

- Interactive mode: Data Pump import and export tasks support stopping, restarting, and other state operations. For example, if a user interrupts a running import or export task with Ctrl+C (or it is interrupted for some other reason), the task is not cancelled but moved to the background. Run the expdp/impdp command again with the attach parameter to reconnect to the interrupted task and choose what to do next. This is interactive mode.
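The parameter-file and attach calls described above can be sketched in a few lines of Python. This is only an illustration: the helper names `render_parfile` and `datapump_argv`, the directory name `dump_dir`, and the dump and log file names are assumptions, and the job name passed to attach is a placeholder. Nothing is executed; the sketch only builds the text and argument lists.

```python
import shlex


def render_parfile(params):
    """Render one NAME=value line per parameter, the layout used in a parameter file."""
    return "".join(f"{name.upper()}={value}\n" for name, value in params.items())


def datapump_argv(tool, credentials, **options):
    """Build the argument list for expdp or impdp without running it."""
    if tool not in ("expdp", "impdp"):
        raise ValueError("tool must be 'expdp' or 'impdp'")
    return [tool, credentials] + [f"{key}={value}" for key, value in options.items()]


# Hypothetical parameter values, for illustration only.
parfile_text = render_parfile({
    "directory": "dump_dir",
    "dumpfile": "exp_demo_%U.dmp",
    "logfile": "exp_demo.log",
    "schemas": "scott",
})

# Export driven by a parameter file saved as xxx.par.
export_cmd = datapump_argv("expdp", "user/pwd", parfile="xxx.par")

# Reconnect to an interrupted job by its name (placeholder job name).
attach_cmd = datapump_argv("expdp", "user/pwd", attach="SYS_EXPORT_SCHEMA_01")

print(parfile_text, end="")
print(shlex.join(export_cmd))
print(shlex.join(attach_cmd))
```

Keeping the command as a list rather than one string makes it easy to hand to `subprocess.run` later without worrying about shell quoting.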

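2.2 What is the attach parameter?

Each job has a name, which is printed when the job starts, for example:

Starting "BAM"."SYS_EXPORT_SCHEMA_01":  bam/******** parfile=expdp_tbs.par

Here SYS_EXPORT_SCHEMA_01 is the job name, and it is the value to pass to the attach parameter.

3. Operation modes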

- Full database mode: imports or exports the entire database. It corresponds to the Full parameter of the impdp/expdp command. Only users with dba, or exp_full_database and imp_full_database, privileges can run it.

- Schema mode: exports or imports the objects owned by a schema. It corresponds to the Schemas parameter of the impdp/expdp command and is the default mode. A user with dba, or exp_full_database and imp_full_database, privileges can export or import objects in multiple schemas.

- Table mode: exports the specified tables or table partitions (if the table is partitioned) and the objects that depend on them, such as indexes and constraints, provided these objects are in the same schema or the executing user has the corresponding privileges. It corresponds to the Tables parameter of the impdp/expdp command.

- Tablespace mode: exports the contents of the specified tablespaces. It corresponds to the Tablespaces parameter of impdp/expdp and supplements table mode and schema mode.

- Transportable tablespace mode: corresponds to the Transport_tablespaces parameter of impdp/expdp. The most significant difference from the previous modes is that the generated dump file contains no actual row data. Only the metadata of the related objects is exported (that is, the object definitions, which can be thought of as the creation statements of the tables), while the data stays in the data files of the tablespace. The metadata and the data files must both be copied to the target server. This export method is very efficient, and its time cost is mainly the I/O of copying the data files. To export in transportable tablespace mode with expdp, the user must have the exp_full_database role or the DBA role. To import in transportable tablespace mode, the user must have the imp_full_database role or the DBA role.

- Filtering data: filtering data relies mainly on the Query and Sample parameters. Sample applies mainly to the expdp export function.

- Query: similar to the Query function of the exp command, but enhanced in expdp with finer-grained control. Query in expdp also takes a WHERE-like clause to restrict the exported records. The syntax is as follows:

Query = [Schema.][Table_name:] Query_clause

By default, if Schema.table_name is not specified, Query_clause applies to all exported tables. You can also give each table its own Query_clause, for example:

Query=A:"Where id<5",B:"Where name='a'"

If no schema or table name precedes the Where condition, the condition applies to all tables being exported, for example:

Query=Where id <5

- Sample: specifies the percentage of data to export. The value can range from 0.000001 to 99.999999. The syntax is as follows:

Sample=[[Schema_name.]Table_name:]sample_percent
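As a rough illustration of the Query and Sample syntax above, the following Python sketch renders both parameters as text suitable for a parameter file. The helper names `query_param` and `sample_param` are hypothetical, the comma-separated per-table form follows the example given in this article, and the table name `scott.emp` is only a placeholder.

```python
def query_param(clauses):
    """Render QUERY from {table_name_or_None: where_clause}.

    A key of None produces an unqualified clause, which applies to all exported tables.
    """
    parts = []
    for table, clause in clauses.items():
        prefix = f"{table}:" if table else ""
        parts.append(f'{prefix}"{clause}"')
    return "QUERY=" + ",".join(parts)


def sample_param(percent, table=None):
    """Render SAMPLE, checking the percentage against the documented range."""
    if not 0.000001 <= percent <= 99.999999:
        raise ValueError("sample_percent must be between 0.000001 and 99.999999")
    prefix = f"{table}:" if table else ""
    # Fixed-point formatting avoids scientific notation such as 1e-06.
    text = format(percent, "f").rstrip("0").rstrip(".")
    return f"SAMPLE={prefix}{text}"


per_table = query_param({"A": "Where id<5", "B": "Where name='a'"})
all_tables = query_param({None: "Where id <5"})
ten_percent = sample_param(10, table="scott.emp")

print(per_table)
print(all_tables)
print(ten_percent)
```

Writing these lines to a parameter file rather than passing them on the command line sidesteps shell quoting of the double quotes.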

4. Filtering objects

- Exclude: the exclusion rule

- Include: the inclusion rule

Usage of the exclude/include parameters:

EXCLUDE=[object_type]:[name_clause],[object_type]:[name_clause] -- Exclude specific objects

INCLUDE=[object_type]:[name_clause],[object_type]:[name_clause] -- Include specific objects

object_type is the type of object, such as table, sequence, view, procedure, or package. name_clause is an expression that filters object names. If name_clause is omitted and only object_type is given, all objects of that type are filtered.

expdp <other_parameters> SCHEMAS=scott EXCLUDE=SEQUENCE,TABLE:"IN ('EMP','DEPT')"

impdp <other_parameters> SCHEMAS=scott INCLUDE=PACKAGE,FUNCTION,PROCEDURE,TABLE:"='EMP'"

EXCLUDE=SEQUENCE,VIEW -- Filter all SEQUENCE,VIEW

EXCLUDE=TABLE:"IN ('EMP','DEPT')" -- Filter table objects EMP,DEPT

EXCLUDE=SEQUENCE,VIEW,TABLE:"IN ('EMP','DEPT')" -- Filter all SEQUENCE,VIEW And table objects EMP,DEPT

EXCLUDE=INDEX:"= 'INDX_NAME'" -- Filter the specified index object INDX_NAME

INCLUDE=PROCEDURE:"LIKE 'PROC_U%'" -- Include with PROC_U All stored procedures at the beginning (_ The symbol represents any single character )

INCLUDE=TABLE:"> 'E' " -- Include all table objects whose names sort after 'E'

Parameter file:exp_scott.par

DIRECTORY = dump_scott

DUMPFILE = exp_scott_%U.dmp

LOGFILE = exp_scott.log

SCHEMAS = scott

PARALLEL= 2

EXCLUDE = TABLE:"IN ('EMP', 'DEPT')"

On the Windows command line, the double quotes must be escaped:

D:\> expdp system/manager DIRECTORY=my_dir DUMPFILE=exp_tab.dmp LOGFILE=exp_tab.log SCHEMAS=scott INCLUDE=TABLE:\"IN ('EMP', 'DEPT')\"

On a Unix command line, the double quotes, single quotes, and parentheses must all be escaped:

expdp system/manager DIRECTORY=my_dir DUMPFILE=exp_tab.dmp LOGFILE=exp_tab.log SCHEMAS=scott INCLUDE=TABLE:\"IN \(\'EMP\', \'DEPT\'\)\"

A badly formed or incorrectly escaped filter produces errors such as:

- ORA-39001: invalid argument value

- ORA-39071: Value for INCLUDE is badly formed.

- ORA-00936: missing expression

- ORA-39071: Value for EXCLUDE is badly formed.

- ORA-00904: "DEPT": invalid identifier

- ORA-39041: Filter "INCLUDE" either identifies all object types or no object types.

- ORA-39041: Filter "EXCLUDE" either identifies all object types or no object types.

- ORA-39038: Object path "USER" is not supported for TABLE jobs.
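Because hand-written escaping is the usual source of these errors, it can help to generate the filter text and let a library do the quoting. The sketch below is one possible approach, not an Oracle-provided tool: `name_filter` is a hypothetical helper, and `shlex.quote` produces quoting for POSIX shells only, so it does not apply to the Windows command prompt.

```python
import shlex


def name_filter(kind, object_type, names):
    """Render a filter such as INCLUDE=TABLE:"IN ('EMP', 'DEPT')" from a list of names."""
    if kind not in ("INCLUDE", "EXCLUDE"):
        raise ValueError("kind must be 'INCLUDE' or 'EXCLUDE'")
    # Double any single quote inside a name so the SQL string literal stays valid.
    quoted = ", ".join("'" + name.replace("'", "''") + "'" for name in names)
    return f'{kind}={object_type}:"IN ({quoted})"'


# The raw text is what goes into a parameter file, where no shell escaping is needed.
raw = name_filter("INCLUDE", "TABLE", ["EMP", "DEPT"])

# For a POSIX shell command line, quote the whole argument once.
posix_arg = shlex.quote(raw)

# Parsing the quoted form the way a POSIX shell would gives back the raw text.
round_trip = shlex.split(posix_arg)

print(raw)
print(posix_arg)
```

The round trip is the property that matters: whatever quoting style is used, the tool should end up receiving exactly the raw filter text.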

5. Advanced filtering

The CONTENT parameter controls whether object definitions, data, or both are exported or imported:

ALL: export/import both object definitions and data. This is the default value of the parameter.

DATA_ONLY: export/import data only.

METADATA_ONLY: export/import object definitions only.

5.1 Filtering existing data

The Table_exists_action parameter determines what happens when a table being imported already exists. Its values are:

SKIP: skip the table and proceed to the next object. This is the default. Note that if the CONTENT parameter is also set to Data_only, SKIP is not valid and the default is APPEND.

APPEND: add the data to the existing table.

TRUNCATE: truncate the current table, then add the records. Use this value with caution; unless you have confirmed that the data in the current table is really no longer needed, data may be lost.

REPLACE: drop and recreate the table object, then add the data to it. Note that if the CONTENT parameter is also set to Data_only, REPLACE is not valid.

5.2 Redefining the schema or tablespace

The Remap_Schema and Remap_tablespace parameters are used for this.

REMAP_SCHEMA: redefines the schema that owns the object. It works like Fromuser+Touser in IMP and supports converting multiple schemas. The syntax is as follows:

REMAP_SCHEMA=Source_schema:Target_schema[,Source_schema:Target_schema]

For example, Remap_schema=a:b,c:d. The same source schema cannot be mapped to two targets, as in remap_schema=a:b,a:c.

REMAP_TABLESPACE: redefines the tablespace in which the object resides. This parameter remaps the tablespace of the imported objects and supports converting several tablespaces at once, separated by commas. The syntax is as follows:

REMAP_TABLESPACE=Source_tablespace:Target_tablespace[,Source_tablespace:Target_tablespace]
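The two remap syntaxes above share the same source:target shape, so a small helper can render either one. This is a minimal sketch with a hypothetical function name, `remap_param`; taking the mapping as a dict means each source name can appear only once.

```python
def remap_param(name, mapping):
    """Render REMAP_SCHEMA or REMAP_TABLESPACE from a {source: target} dict."""
    if name not in ("REMAP_SCHEMA", "REMAP_TABLESPACE"):
        raise ValueError("name must be 'REMAP_SCHEMA' or 'REMAP_TABLESPACE'")
    if not mapping:
        raise ValueError("at least one source:target pair is required")
    pairs = [f"{source}:{target}" for source, target in mapping.items()]
    return f"{name}=" + ",".join(pairs)


# Placeholder schema and tablespace names, for illustration only.
schema_line = remap_param("REMAP_SCHEMA", {"a": "b", "c": "d"})
tablespace_line = remap_param("REMAP_TABLESPACE", {"users": "users_new"})

print(schema_line)
print(tablespace_line)
```

The resulting lines can be placed in an impdp parameter file alongside the other import parameters.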

5.3 Optimizing import/export efficiency

Oracle Data Pump technology enables Very High-Speed movement of data and metadata from one database to another.

The Parallel parameter is the main way to achieve this.

- For export, parallel is used together with the dump file settings. EXPDP provides the FILESIZE parameter to limit the size of a single dump file, so that several dump files are generated for parallel to work with:

expdp user/pwd directory=dump_file dumpfile=expdp_20100820_%U.dmp logfile=expdp_20100820.log filesize=500M parallel=4

- For import, parallel is specified in the same way:

impdp user/pwd directory=dump_file dumpfile=expdp_20100820_%U.dmp logfile=impdp_20100820.log parallel=10

边栏推荐

- Datatable data conversion to entity

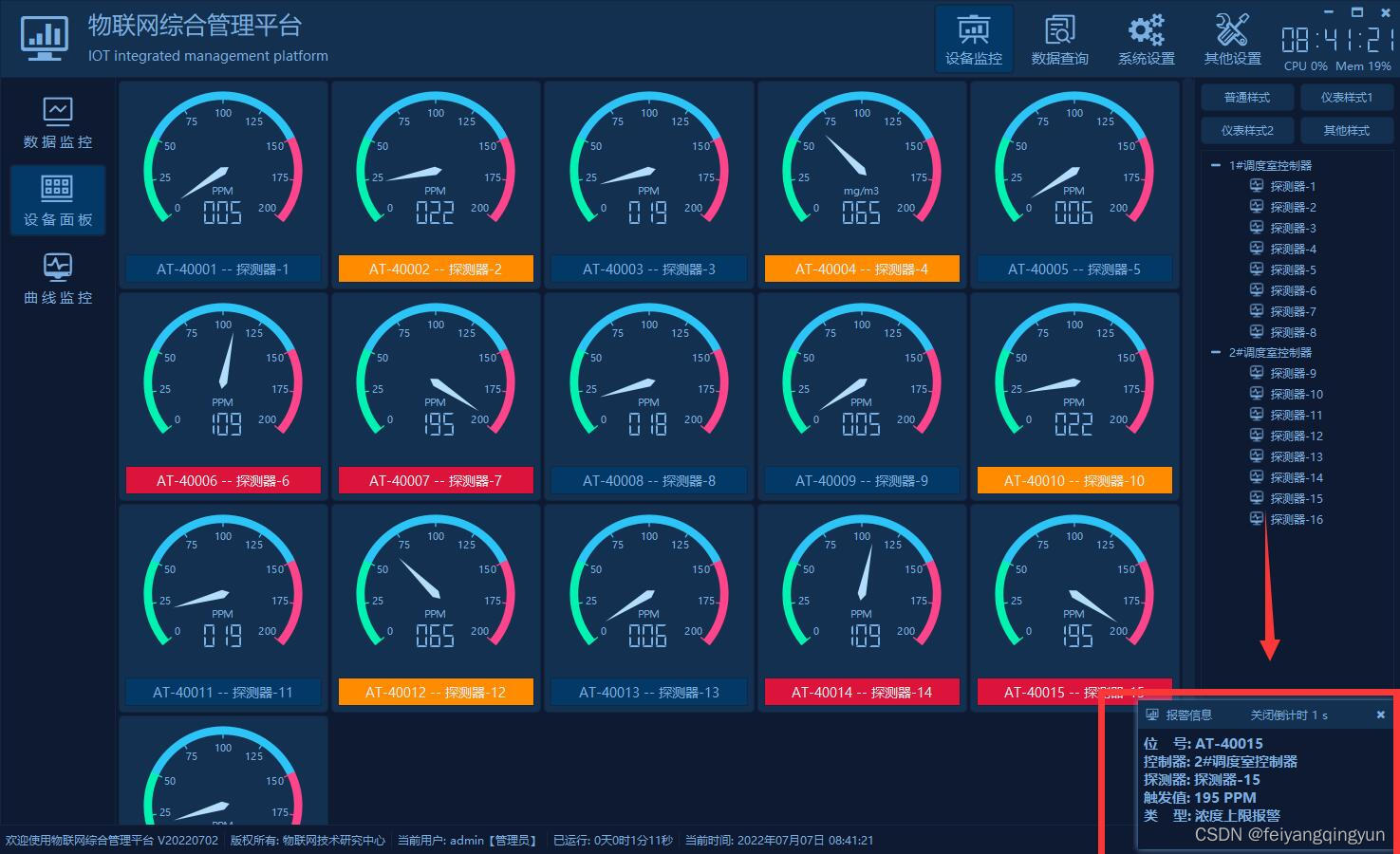

- QT compile IOT management platform 39 alarm linkage

- Goal: do not exclude yaml syntax. Try to get started quickly

- How does win11 unblock the keyboard? Method of unlocking keyboard in win11

- Devil daddy A0 English zero foundation self-improvement Road

- [开源] .Net ORM 访问 Firebird 数据库

- Unity3d 4.3.4f1执行项目

- Solve the problem of uni in uni app Request sent a post request without response.

- Default constraint and zero fill constraint of MySQL constraint

- [C language] advanced pointer --- do you really understand pointer?

猜你喜欢

QT compile IOT management platform 39 alarm linkage

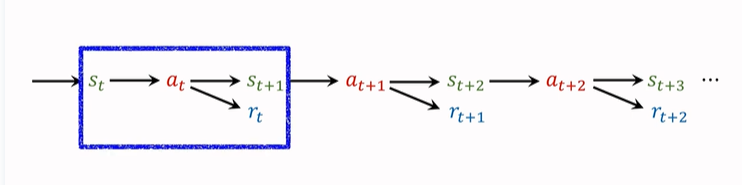

Reinforcement learning - learning notes 9 | multi step TD target

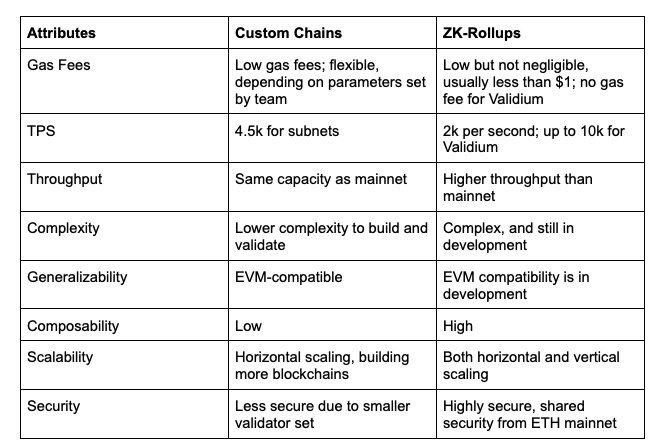

L2: current situation, prospects and pain points of ZK Rollup

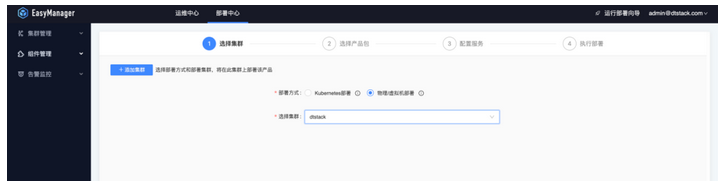

Where is the big data open source project, one-stop fully automated full life cycle operation and maintenance steward Chengying (background)?

![Restapi version control strategy [eolink translation]](/img/65/decbc158f467ab8c8923c5947af535.png)

Restapi version control strategy [eolink translation]

EasyCVR配置中心录像计划页面调整分辨率时的显示优化

![Jerry's initiation of ear pairing, reconnection, and opening of discoverable and connectable cyclic functions [chapter]](/img/14/1c8a70102c106f4631853ed73c4d82.png)

Jerry's initiation of ear pairing, reconnection, and opening of discoverable and connectable cyclic functions [chapter]

Have you ever been confused? Once a test / development programmer, ignorant gadget C bird upgrade

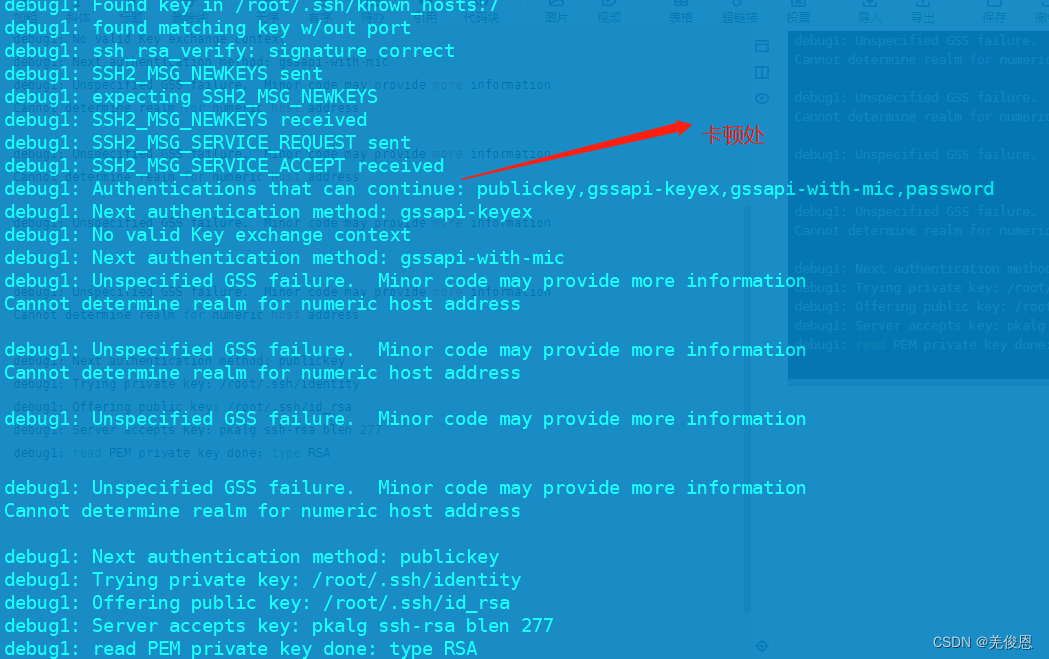

Debugging and handling the problem of jamming for about 30s during SSH login

Open source OA development platform: contract management user manual

随机推荐

Google SEO external chain backlinks research tool recommendation

What stocks can a new account holder buy? Is the stock trading account safe

Jerry's test box configuration channel [chapter]

Time standard library

[开源] .Net ORM 访问 Firebird 数据库

Why can't win11 display seconds? How to solve the problem that win11 time does not display seconds?

Tupu digital twin coal mining system to create "hard power" of coal mining

Feature generation

Deadlock conditions and preventive treatment [easy to understand]

L2:ZK-Rollup的现状,前景和痛点

[colmap] sparse reconstruction is converted to mvsnet format input

Insufficient permissions

The function is really powerful!

三元表达式、各生成式、匿名函数

Jerry's initiation of ear pairing, reconnection, and opening of discoverable and connectable cyclic functions [chapter]

POJ 3140 contents division "suggestions collection"

Ant destination multiple selection

浅解ARC中的 __bridge、__bridge_retained和__bridge_transfer

Demon daddy A3 stage near normal speed speech flow initial contact

ByteDance senior engineer interview, easy to get started, fluent