Paper information

Paper title :Rethinking the Setting of Semi-supervised Learning on Graphs

Author of the paper :Ziang Li, Ming Ding, Weikai Li, Zihan Wang, Ziyu Zeng, Yukuo Cen, Jie Tang

Source of the paper :2022, arXiv

Address of thesis :download

Paper code :download

1 Introduction

This paper mainly studies semi supervised GNNs Overshoot in the model (over-tuning phenomenon), A fair model comparison framework is proposed .

2 The Risk of Over-tuning of Semi-supervised Learning on Graphs

2.1 Semi-Supervised Learning on Graphs

Three commonly used data sets :

2.2 An Analysis of Over-tuning in Current GNNs

Overshoot (over-tuning phenomenon) ubiquitous GNNs in , namely GNN The hyperparametric over fitting validation set of the model .

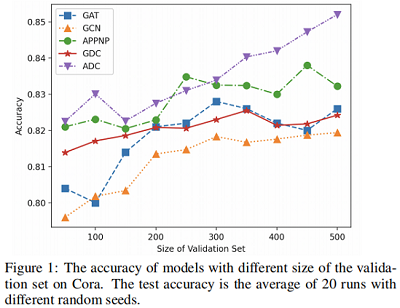

This paper tests 5 It's representative of GNNs frame (GCN, GAT, APPNP, GDC-GCN, ADC) stay Cora Comparison of accuracy rates of different verification set sizes on the data set . In this paper, grid search is used to select the optimal hyperparameter for each model . Change the size of the validation set from 100 To 500. For each validation set , After using the super parameter training model of the best search , Report the results on the test set . The result is as follows Figure1 Shown .

Figure 1 Show ,GNN Models with larger validation sets usually perform better . Because the validation set can only affect the model through hyperparameters , So we can come to a conclusion , The model can benefit from validation tags by using super parameters . If we change the size of the validation set from 100 Add to 500, The accuracy is improved as high as 1%∼3% , This is enough to show that over tuning already exists .

2.3 ValidUtil: Exploring the Limits of Over-tuning

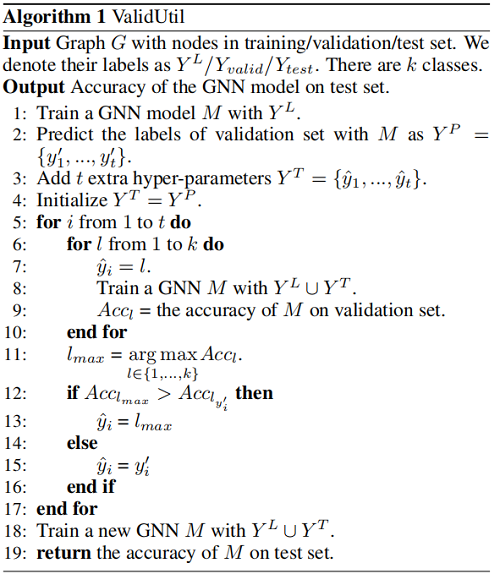

It is usually not possible to add validation sets to training sets , This is considered a data leak . What this article puts forward ValidUtil Such as :

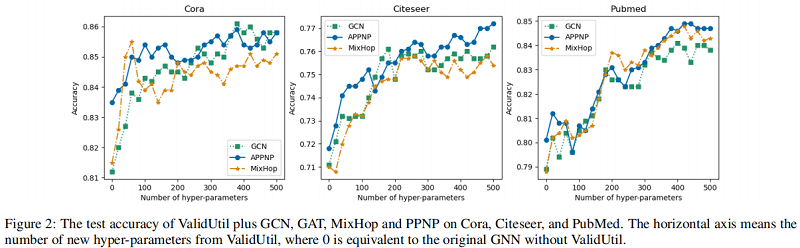

Results found : Only when $\hat{y}_{i}=y_{i}$ when , The model can achieve the best results .Figure 2 Shows hyper-parameters The impact on the experiment :

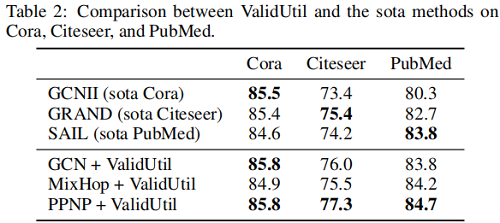

We found that , Even from ValidUtil There are only 20 individual ∼60 A super parameter , It can also bring a leap in performance to some models . When we verify all in the set 500 When adding super parameters to nodes ,PPNP Comparable Table 2 Medium SOTA Method .

remarks : although ValidUtil Work purely by using validation tags , But it is fully effective under the current setting . If we were to GNN+ValidUtil As a black box model , Then the training process is quite normal .ValidUtil In fact, the efficiency of using labels is very low , Because each super parameter can only learn the information of one node —— But this is enough to test our hypothesis . The current setting cannot prevent the validation tag from being used during hyper parameter tuning “ leak ”. We think there are some more effective ways to define influential hyperparameters . These hyperparameters may be entangled with features or model structures , They can get information from multiple authentication tags . according to Figure 1, Such influential hyperparameters may already exist in some models , Not easy to detect . therefore , There is an urgent need to build a new semi supervised learning benchmark on graphs , To avoid over tuning and fairness 、 Compare steadily GNN Model .

3 IGB: An Independent and Identically Distributed Graph Benchmark

3.1 Overview

Two goals of the new benchmark : Avoid over tuning and be more robust .

To avoid over tuning , In this paper, nodes are divided into labeled nodes and unlabeled nodes . You can learn the best model of labeled data sets in any way , And evaluate unlabeled sets ( Test set ) Performance on . If you need to search for super parameters , A part of the marked data set can be regarded as a verification set . Because the verification label has been exposed , Therefore, the problem of over tuning is eliminated . This setting is closer to the real scene , Be able to make a fair comparison between models with different superparameters . In order to easily GNN Migrate to this new setting , We will be in the 3.2 Section introduces a simple and powerful way to create validation sets .

This paper expects that under different random seeds , The performance of the model is stable . A common method of reporting performance in machine learning is : Repeat testing and reporting average performance . So this article expects to be in multiple i.i.d Test the performance of the model on the graph , These are many i.i.d chart It's from sampling .

- 1. Divide the labeled set into training and validation sets.

- 2. Find the best hyper-parameters using grid search on the training and validation sets from the first step.

- 3. Train the model with the best hyper-parameters on the full labeled nodes.

- 4. Test the performance of the model from the third step on the unlabeled (test) sets.

- 5. Repeat the above steps on each graph in a dataset and report the average accuracy

The first two steps aim to find GNN The best hyperparameter of the model . We think this method is applicable to many GNN The model obtains satisfactory hyperparameters . If there are other reasonable methods to determine the optimal hyperparameter with a marked set , They will also be encouraged to replace the first two steps in this pipeline . In this way , You can avoid over tuning by directly exposing all tag information in the validation set in the third step .

3.3 Datasets

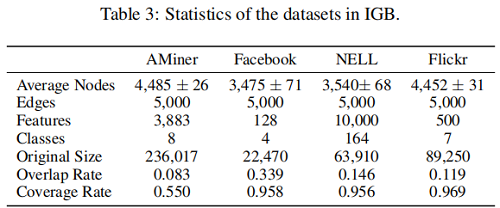

IGB It consists of four data sets :Aminer,Facebook,Nell,Flickr. Each dataset contains 100 An undirected connected graph , According to the first 3.4 The random walk method in the section samples from the original large image . We also report the average node overlap rate of a pair of sampled graphs , That is, the ratio of public nodes to the total size of nodes . Coverage is defined as 100 The ratio of the union of the sampled graph and the original large graph . Low overlap and high coverage are preferred . The statistical data of the data set is shown in table 3.

3.4 Sampling Algorithm

The simplest way to make the node label distribution of a subgraph similar to the original graph is vertex sampling . However , It doesn't meet our expectations , Because it generates disconnected subgraphs . To get close i.i.d. Benchmark of subgraph , We must carefully design sampling strategies and principles . say concretely , We expect the sampling strategy to have the following characteristics :

- 1. The sampled subgraph is a connected graph.

- 2. The distribution of the subgraph’s node labels is close to that of the original graph.

- 3. The distribution of the subgraph’s edge categories (edge category is defined by the combination of its two endpoints’ labels) is close to that of the original graph.

First , Random walk well satisfies the first point , We start from the node $u=n_0$ Start sampling , The following nodes can be selected through the possibility of conversion :

$P_{u, v}=\left\{\begin{array}{ll}\frac{1}{d_{u}}, & \text { if }(u, v) \in E \\0, & \text { otherwise }\end{array}\right.$

among ,$P_{u, v}$ It's from $u$ To $v$ The probability of transfer ,$d_{u}$ Represents the node $u$ Degree .

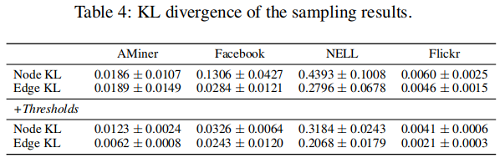

We refuse to adopt a similar sampling method , To ensure the second and third properties . ad locum , We introduced KL The divergence As a measure to measure the difference between two different distributions . In order to get the node label distribution (“Node KL”) And marginal category distribution (“Edge KL”)KL Subgraphs with relatively low divergence , We set a predefined threshold to decide whether to accept the sampling map . The results before and after adding the threshold are compared as Table 4 Shown .

3.5 Benchmarking Results

Results on these four datasets :

3.6 The Stability of IGB

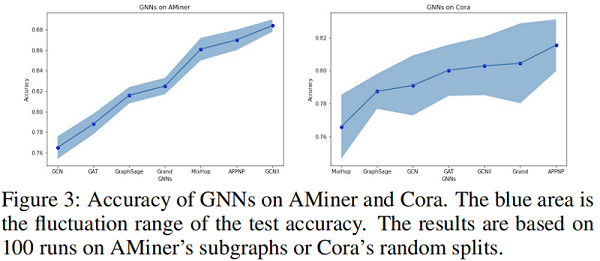

This paper uses two methods to verify IGB The stability of . First , Verify its stability when evaluating the model on different graphs , Because of every IGB Data set containing 100 Close i.i.d. chart . say concretely , We compared 100 individual AMiner Subgraphs and 100 individual Cora Precision variance in random data segmentation .Figure 3 It turns out that , Even if each AMiner The graph uses random data segmentation , Yes IGB Is also better than Cora More stable style .

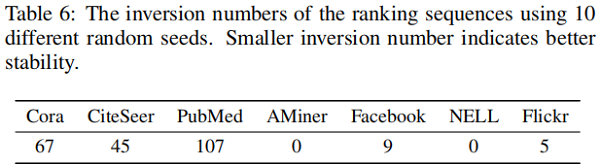

secondly , Focus on IGB Stability in evaluating models with different random seeds . In a stable benchmark , The ranking of different models should not be easily changed when changing random seeds . To test this , We use ranked “inversion number” As a measure .

4 Conclusion

In this paper, we discuss the semi supervised setting on graph again , It also expounds the problem of over optimization , And through VallidUtil Verified his significance . This paper also proposes a new benchmark IGB, A more stable evaluation pipeline . A method based on RW Sampling algorithm to improve the stability of evaluation , This paper hopes to pass IGB It can benefit the society .

Revise history

2022-07-07 Create articles