Welcome to the official account :bin Technology house

Hello everyone , I am a bin, It's time for us to meet every week , My official account is 1 month 10 The first article was published on the th 《 From a kernel perspective IO The evolution of the model 》, In this article, we use a graphic way to C10k The main line is the problem of , From the perspective of kernel 5 Kind of IO The evolution of the model , And two IO Introduction of thread model , Finally, it leads to Netty Network of IO Threading model . Readers and friends feel that the background messages are very hard core , With your support, the current reading volume of this article is 2038, The number of likes is 80, Looking for 32. This is for the trumpet, which has just been born for more than a month , Is a great encouragement . ad locum bin Thank you again for your recognition , Encourage and support ~~

today bin I will bring you a hard core technical article again , In this paper, we will elaborate the object in... From the perspective of computer composition principle JVM How the memory is arranged , And what is memory alignment , If our heads are iron , It's about the consequences of not aligning memory , Finally, the principle and application of compressed pointer are introduced . At the same time, we also introduced in the high concurrency scenario ,False Sharing Causes and performance impact .

I believe that after reading this article , You will gain a lot , Don't talk much , Now let's officially start the content of this article ~~

In our daily work , Sometimes in order to prevent online applications from happening OOM, Therefore, we need to calculate the memory occupation of some core objects in the process of development , The purpose is to better understand the memory occupation of our application .

Then, according to the memory resource limit of our server and the estimated order of object creation, we can calculate the high and low watermark of the memory occupied by the application , If the memory usage exceeds High water level , Then it may happen OOM The risk of .

We can use the estimated in the program High and low water mark , Do something to prevent OOM Processing logic or alarm .

So the core problem is how to calculate a Java The size of the object in memory ??

Before I answer this question for you , Let me first introduce Java Object layout in memory , This is the theme of this paper .

1. Java Memory layout of objects

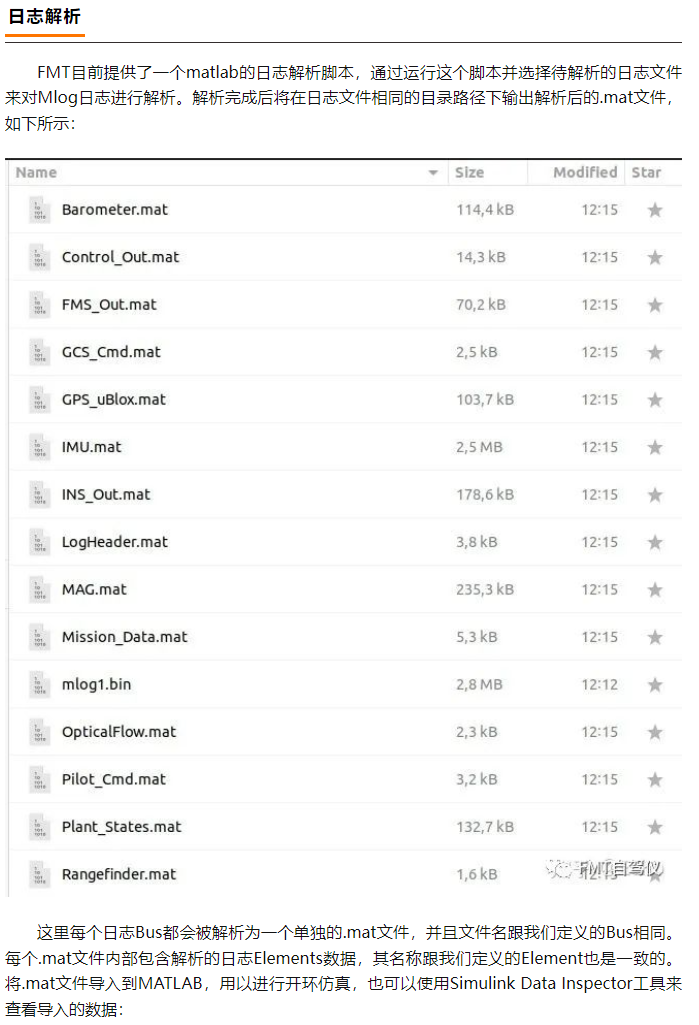

As shown in the figure ,Java The object is JVM Medium used instanceOopDesc Structure represents and Java The object is JVM The memory layout in the heap can be divided into three parts :

1.1 Object head (Header)

Every Java Objects contain an object header , The object contains two classes of information :

MarkWord: stay JVM of usemarkOopDescStructure represents the data used to store the runtime of the object itself . such as :hashcode,GC Generational age , Lock status flag , A lock held by a thread , To the thread Id, Biased timestamps and so on . stay 32 Bit operating system and 64 Bit operating systemMarkWordRespectively take up 4B and 8B Size of memory .Type a pointer:JVM Type pointers in are encapsulated inklassOopDescIn structure , The type pointer points toInstanceKclass object,Java Class in JVM Medium used InstanceKclass Object encapsulated , It contains Java Class , such as : Inheritance structure , Method , Static variables , Constructors, etc .- Without turning on pointer compression (-XX:-UseCompressedOops). stay 32 Bit operating system and 64 In the bit operating system, type pointers occupy 4B and 8B Size of memory .

- With pointer compression on (-XX:+UseCompressedOops). stay 32 Bit operating system and 64 In the bit operating system, type pointers occupy 4B and 4B Size of memory .

If Java If the object is an array type , Then the object header of the array object will also contain a 4B Size attribute used to record the length of the array .

Because the attribute used to record the length and size of the array in the object header only accounts for 4B Of memory , therefore Java The maximum length that the array can apply for is :

2^32.

1.2 The instance data (Instance Data)

Java The instance data area of the object in memory is used to store Java Class , Include instance fields in all parent classes . in other words , Although the subclass cannot access the private instance field of the parent class , Or the instance field of the subclass hides the instance field of the parent class with the same name , However, instances of subclasses still allocate memory for these parent instance fields .

Java Field types in objects fall into two broad categories :

The base type :Java The memory occupation of the basic type defined by the instance field in the instance data area of the class is as follows :

- long | double Occupy 8 Bytes .

- int | float Occupy 4 Bytes .

- short | char Occupy 2 Bytes .

- byte | boolean Occupy 1 Bytes .

Reference type :Java The memory occupation of the reference type of the instance field in the instance data area can be divided into two cases :

- Do not turn on pointer compression (-XX:-UseCompressedOops): stay 32 The memory occupation of reference type in bit operating system is 4 Bytes . stay 64 The memory occupation of reference type in bit operating system is 8 Bytes .

- Turn on pointer compression (-XX:+UseCompressedOops): stay 64 For the operating system , Reference type memory usage becomes 4 Bytes ,32 The memory usage of reference types in bit operating systems continues to be 4 Bytes .

Why? 32 The occupancy of the reference operating system 4 Bytes , and 64 Bit operating system reference type accounts for 8 byte ?

stay Java in , The reference type stores the memory address of the referenced object . stay 32 In the bit operating system, the memory address is determined by 32 individual bit Express , Therefore need 4 Bytes to record the memory address , The virtual address space that can be recorded is 2^32 size , That is, it can only express 4G Size of memory .

And in the 64 In the bit operating system, the memory address is determined by 64 individual bit Express , Therefore need 8 Bytes to record the memory address , But in 64 Only low... Is used in the bit system 48 position , So its virtual address space is 2^48 size , Be able to express 256T Size of memory , Which is low 128T The space is divided into user space , high 128T Divided into kernel space , It can be said to be very big .

After we introduce Java The object is JVM After the memory layout in , So let's see Java How the instance fields defined in the object are arranged in the instance data area :

2. Field rearrangement

Actually, we're writing Java The order of those instance fields defined in the source code file will be changed JVM Reassign permutation , The purpose of this is to align memory , So what is memory alignment , Why memory alignment , With the in-depth interpretation of the article, the author will reveal the answers layer by layer ~~

In this section , The author first introduces JVM Rules for field rearrangement :

JVM The sort order of reassigned fields is affected by -XX:FieldsAllocationStyle The effect of parameters , The default value is 1, The reallocation policy of instance fields follows the following rules :

- If a field occupies

XBytes , So the offset of this fieldOFFSETYou need to align toNX

Offset refers to the memory address of the field and Java The difference between the starting memory addresses of the object . such as long Type field , It takes up memory 8 Bytes , So it's OFFSET Should be 8 Multiple 8N. Insufficient 8N The number of bytes that need to be filled .

When the compression pointer is turned on 64 position JVM in ,Java Of the first field in the class OFFSET You need to align to 4N, When the compressed pointer is turned off, the value of the first field in the class OFFSET You need to align to 8N.

JVM The default assignment field order is :long / double,int / float,short / char,byte / boolean,oops(Ordianry Object Point Reference type pointer ), And the instance variable defined in the parent class will appear before the instance variable of the child class . When setting JVM Parameters

-XX +CompactFieldswhen ( Default ), The memory occupied is less than long / double The field will be allowed to be inserted into the first... In the object long / double In the gap before the field , To avoid unnecessary memory filling .

CompactFields Option parameters are in JDK14 Is marked as expired , And is likely to be deleted in future versions . Details can be viewed issue:https://bugs.openjdk.java.net/browse/JDK-8228750

The three field rearrangement rules above are very important , But it's more brain wracking , It's not easy to understand , The purpose of listing them first is to let everyone have a hazy perceptual understanding , Below, the author gives a specific example to explain in detail , In the process of reading this example, it is also convenient for you to deeply understand these three important field rearrangement rules .

Suppose we now have such a class definition

public class Parent {

long l;

int i;

}

public class Child extends Parent {

long l;

int i;

}

- According to the above

The rules 3We know that the variables in the parent class appear before the variables in the child class , And the field allocation order should be long Type field l, belong int Type field i Before .

If JVM Open the

-XX +CompactFieldswhen ,int Type field is the first field that can be inserted into an object long Type field ( That is to say Parent.l Field ) In the previous gap . If JVM Set up-XX -CompactFieldsbe int This insertion behavior of type field is not allowed .

according to

The rules 1We know long Type field is in the instance data area OFFSET You need to align to 8N, and int Type field OFFSET You need to align to 4N.according to

The rules 2We know that if the compression pointer is turned on-XX:+UseCompressedOops,Child Of the first field of the object OFFSET You need to align to 4N, When the compression pointer is turned off-XX:-UseCompressedOops,Child Of the first field of the object OFFSET You need to align to 8N.

because JVM Parameters UseCompressedOops and CompactFields The existence of , Lead to Child The arrangement order of objects in the instance data area fields can be divided into four cases , Let's combine the three rules extracted above to see the performance of the field arrangement order in these four cases .

2.1 -XX:+UseCompressedOops -XX -CompactFields Turn on compression pointer , Turn off field compression

Offset OFFSET = 8 The location of the is the type pointer , Since the compression pointer is turned on, it takes up 4 Bytes . The object header takes up... In total 12 Bytes :MarkWord(8 byte ) + Type a pointer (4 byte ).

according to

The rules 3:Parent class Parent The field in is to appear in the subclass Child Before the field of and long Type field in int Type field .According to rules 2:When the compression pointer is turned on ,Child The first field in the object needs to be aligned to 4N. here Parent.l Field OFFSET It can be 12 It can also be 16.According to rules 1:long Type field is in the instance data area OFFSET You need to align to 8N, So here Parent.l Field OFFSET Can only be 16, therefore OFFSET = 12 The location of the needs to be filled .Child.l Fields can only be in OFFSET = 32 Store , Can't use OFFSET = 28 Location , because 28 The position of is not 8 Multiples of cannot be aligned 8N, therefore OFFSET = 28 The position of the is filled 4 Bytes .

The rules 1 It also stipulates that int Type field OFFSET You need to align to 4N, therefore Parent.i And Child.i Store separately to OFFSET = 24 and OFFSET = 40 The location of .

because JVM Memory alignment in exists not only between fields, but also between objects ,Java Memory addresses between objects need to be aligned to 8N.

therefore Child The end of the object is filled with 4 Bytes , The object size starts with 44 Bytes are filled into 48 byte .

2.2 -XX:+UseCompressedOops -XX +CompactFields Turn on compression pointer , Turn on field compression

Based on the analysis of the first case , We turned on

-XX +CompactFieldsCompress fields , So lead to int Type Parent.i Fields can be inserted into OFFSET = 12 Location , To avoid unnecessary byte padding .according to

The rules 2:Child The first field of the object needs to be aligned to 4N, Here we seeint typeOf Parent.i The field conforms to this rule .according to

The rules 1:Child All of the objects long Type fields are aligned to 8N, be-all int Type fields are aligned to 4N.

The resulting Child The object size is 36 byte , because Java The memory address between objects needs to be aligned to 8N, So finally Child The end of the object is filled again 4 Bytes eventually become 40 byte .

Here we can see that when the field compression is turned on

-XX +CompactFieldsUnder the circumstances ,Child The size of the object is determined by 48 Bytes become 40 byte .

2.3 -XX:-UseCompressedOops -XX -CompactFields Turn off compression pointer , Turn off field compression

First, close the compression pointer -UseCompressedOops Under the circumstances , The bytes occupied by the type pointer in the object header become 8 byte . This causes the size of the object header to become 16 byte .

according to

The rules 1:long Type variables OFFSET You need to align to 8N. according toThe rules 2:When the compression pointer is turned off ,Child The first field of the object Parent.l You need to align to 8N. So here Parent.l Field OFFSET = 16.because long Type variables OFFSET You need to align to 8N, therefore Child.l Field OFFSET

Need to be 32, therefore OFFSET = 28 The position of the is filled 4 Bytes .

It's calculated like this Child The object size is 44 byte , But considering that Java The memory address of the object and the object needs to be aligned to 8N, So at the end of the object 4 Bytes , Final Child The memory usage of the object is 48 byte .

2.4 -XX:-UseCompressedOops -XX +CompactFields Turn off compression pointer , Turn on field compression

Based on the analysis of the third case , Let's look at the field arrangement of the fourth case :

Because when pointer compression is turned off, the size of the type pointer becomes 8 Bytes , So lead to Child The first field in the object Parent.l There is no gap in front , Just in line 8N, It doesn't need to int Type variable insertion . So even if field compression is turned on -XX +CompactFields, The overall arrangement order of fields remains unchanged .

Pointer compression by default

-XX:+UseCompressedOopsAnd field compression-XX +CompactFieldsIt's all on

3. Alignment filling (Padding)

In the introduction of field rearrangement in the instance data area in the previous section, byte filling caused by memory alignment will not only appear between fields , It also appears between objects .

Previously, we introduced three important rules to be followed for field rearrangement , The rules are 1, The rules 2 Defines the memory alignment rules between fields . The rules 3 It defines the arrangement rules between object fields .

For memory alignment , Between object header and field , And some unnecessary bytes need to be filled between fields .

For example, the first case of field rearrangement mentioned earlier -XX:+UseCompressedOops -XX -CompactFields.

The four cases mentioned above will be filled in the back of the object instance data area 4 Byte size space , The reason is that in addition to the memory alignment between fields , You also need to meet the memory alignment between objects .

Java Memory addresses between objects in the virtual machine heap need to be aligned to 8N(8 Multiple ), If an object takes up less than memory 8N Bytes , Then you must fill in some unnecessary bytes after the object and align them to 8N Bytes .

The memory alignment options in the virtual machine are

-XX:ObjectAlignmentInBytes, The default is 8. That is, how many times does the memory address between objects need to be aligned , It's from this. JVM Parameter controlled .

Let's take the first case above as an example to illustrate : The actual occupation of the object in the figure is 44 Bytes , But it's not 8 Multiple , Then you need to refill 4 Bytes , Align memory to 48 Bytes .

These are between fields for memory alignment purposes , Unnecessary bytes filled between objects , We call it Alignment filling (Padding).

4. Align the filled application

After we know the concept of aligned filling , You may be curious , Why do we need to align and fill , Is there a problem to be solved ?

So let's take this question , Listen to me ~~

4.1 Solve the alignment filling problem caused by pseudo sharing

In addition to the two aligned filled scenes described above ( Between fields , Between objects ), stay JAVA There is also a scene of aligned filling , That is to solve the problem by aligning and filling False Sharing( False sharing ) The problem of .

Introducing False Sharing( False sharing ) Before , Let me first introduce CPU How to read data in memory .

4.1.1 CPU cache

According to Moore's law : The number of transistors in the chip every 18 It will double in a month . Lead to CPU The performance and processing speed of are getting faster and faster , And promotion CPU The running speed of is much easier and cheaper than increasing the running speed of memory , So that's what led to it CPU The speed gap between and memory is getting bigger and bigger .

To make up for it CPU Huge speed difference between and memory , Improve CPU Processing efficiency and throughput , So people introduced L1,L2,L3 Cache integration into CPU in . Of course, L0 That is, registers , Register away from CPU lately , The access speed is also the fastest , Basically no delay .

One CPU It contains multiple cores , When we buy computers, we often see such processor configuration , such as 4 nucleus 8 Threads . It means this CPU contain 4 A physical core 8 Logical core .4 Two physical cores indicate that... Can be allowed at the same time 4 Threads executing in parallel ,8 A logical core indicates that the processor utilizes Hyper threading technology A physical core is simulated into two logical cores , A physical core can only execute one thread at a time , and Hyper threading chip Can achieve fast switching between threads , When a thread is accessing a gap in memory , The hyper threading chip can immediately switch to execute another thread . Because the switching speed is very fast , So the effect is 8 Threads executing at the same time .

In the picture CPU Core refers to the physical core .

We can see from the picture L1Cache It's separation CPU Core recent cache , And then the next thing L2Cache,L3Cache, Memory .

leave CPU The closer the core is, the faster the cache access speed is , The higher the cost , Of course, the smaller the capacity .

among L1Cache and L2Cache yes CPU The physical core is private ( Be careful : This is the physical core, not the logical core )

and L3Cache As a whole CPU Shared by all physical cores .

CPU The logical core shares the physical core to which it belongs L1Cache and L2Cache

L1Cache

L1Cache leave CPU It's the latest , It has the fastest access speed , The capacity is also the smallest .

From the picture we can see L1Cache In two parts , Namely :Data Cache and Instruction Cache. One of them is to store data , One is to store code instructions .

We can go through cd /sys/devices/system/cpu/ Check it out. linux On the machine CPU Information .

stay /sys/devices/system/cpu/ Directory , We can see CPU The number of core , Of course, this refers to the logical core .

The processor on my machine does not use hyper threading technology, so this is actually 4 A physical core .

Now let's enter one of them CPU The core (cpu0) Take a look at L1Cache The situation of :

CPU The cache condition is /sys/devices/system/cpu/cpu0/cache View under directory :

index0 Describe the L1Cache in DataCache The situation of :

level: It means that we should cache What level does information belong to ,1 Express L1Cache.type: Indicates belonging to L1Cache Of DataCache.size: Express DataCache The size is 32K.shared_cpu_list: We mentioned before L1Cache and L2Cache yes CPU Private to the physical core , The logical core simulated by the physical core is share L1Cache and L2Cache Of ,/sys/devices/system/cpu/The information described in the directory is the logical core .shared_cpu_list It describes exactly which logical cores share this physical core .

index1 Describe the L1Cache in Instruction Cache The situation of :

We see L1Cache Medium Instruction Cache So is the size 32K.

L2Cache

L2Cache Information stored in index2 Under the table of contents :

L2Cache The size is 256K, Than L1Cache Be bigger .

L3Cache

L3Cache Information stored in index3 Under the table of contents :

Here we can see L1Cache Medium DataCache and InstructionCache All the same size 32K and L2Cache The size is 256K,L3Cache The size is 6M.

Of course, these values are in different CPU The configuration will be different , But on the whole L1Cache The order of magnitude is tens of KB,L2Cache The order of magnitude is several hundred KB,L3Cache What is the magnitude of MB.

4.1.2 CPU Cache line

We introduced CPU Cache structure , The purpose of introducing cache is to eliminate CPU Speed gap with memory , according to The principle of program locality We know ,CPU The cache must be used to store hot data .

The principle of program locality is : Time locality and space locality . Time locality means that if an instruction in a program is executed , Then the instruction may be executed again soon ; If a piece of data is accessed , The data may be accessed again soon . Spatial locality means that once a program accesses a storage unit , Soon after , Nearby storage units will also be accessed .

So what is the basic unit of accessing data in the cache ??

In fact, hot data is CPU Access in the cache is not in units of individual variables or individual pointers as we think .

CPU The basic unit of data access in the cache is called cache line cache line. The size of cache line access bytes is 2 Multiple , On different machines , The size range of cache rows is 32 Bytes to 128 Between bytes . At present, the size of cache lines in all mainstream processors is 64 byte ( Be careful : The unit here is byte ).

We can see from the picture L1Cache,L2Cache,L3Cache The size of cache lines in the is 64 byte .

That means every time CPU The size of data obtained or written from memory is 64 Bytes , Even if you only read one bit,CPU It will also be loaded from memory 64 Byte data comes in . Same thing ,CPU Synchronizing data from the cache to memory is also in accordance with 64 In bytes .

For example, you visit a long Type of the array , When CPU When the first element in the array is loaded, the following 7 The elements are loaded into the cache together . This speeds up the efficiency of traversing the array .

long Type in the Java To occupy 8 Bytes , A cache line can store 8 individual long Type variable .

in fact , You can traverse any data structure allocated in contiguous blocks of memory very quickly , If the items in your data structure are not adjacent to each other in memory ( such as : Linked list ), So you can't use CPU Advantages of caching . Because data is not stored continuously in memory , Therefore, every item in these data structures may have cache row misses ( The principle of program locality ) The situation of .

Remember when we were 《Reactor stay Netty In the implementation of ( Create an article )》 Described in the Selector The creation of ,Netty Using arrays to achieve custom SelectedSelectionKeySet Type replaced JDK utilize HashSet Type implementation

sun.nio.ch.SelectorImpl#selectedKeys. The purpose is to take advantage of CPU The advantages of caching to improve IO Active SelectionKeys Ergodic performance of collection .

4.2 False Sharing( False sharing )

Let's take a look at such an example first , The author defines an example class FalseSharding, There are two in the class long Type volatile Field a,b.

public class FalseSharding {

volatile long a;

volatile long b;

}

Field a,b They are logically independent , They have nothing to do with each other , They are used to store different data , There is no correlation between the data .

FalseSharding The memory layout between fields in the class is as follows :

FalseSharding Fields in class a,b In memory is adjacent storage , Respectively take up 8 Bytes .

If it happens that the field a,b By CPU Read in the same cache line , At this point, there are two threads , Threads a Used to modify fields a, Simultaneous threads b Used to read fields b.

In this case , The thread will be b What is the impact of the read operation ?

We know the statement volatile keyword Variables can be used in multithreaded processing environment , Ensure memory visibility . The computer hardware layer will ensure the security of being volatile The memory visibility of the shared variable modified by keyword after write operation , And this memory visibility is caused by Lock Prefix instruction as well as Cache consistency protocol (MESI Control agreement ) Jointly guaranteed .

Lock The prefix instruction can make the corresponding cache line data in the processor where the modification thread is located be refreshed back to memory immediately after being modified , And at the same time

lockThe cache line of the modified variable is cached in all processor cores , Prevent multiple processor cores from modifying the same cache line concurrently .Cache consistency protocol is mainly used to maintain the consistency between multiple processor cores CPU Consistency between memory and cache data . Each processor will sniff the memory address prepared to be written by other processors on the bus , If this memory address is cached in its own processor , It will set the corresponding cache line in its processor to

Invalid, The next time you need to read the data in this cache row , You need to access memory to get .

Based on the above volatile Keyword principle , Let's first look at the first impact :

When a thread a The processor core0 Middle alignment field a When making modifications ,

Lock Prefix instructionWill cache the fields in all processors a The corresponding cache line oflock, This leads to threads b The processor core1 You cannot read and modify the fields of your own cached rows in b.processor core0 The modified field a The cache line is flushed back to memory .

From the figure, we can see that the field a The value of is in the processor core0 Changes have taken place in the cache lines and in memory . But the processor core1 Middle field a The value of has not changed , also core1 Middle field a The cache line is in locked , Cannot read or write fields b.

From the above process, we can see that even if the field a,b They are logically independent , They have nothing to do with each other , But threads a To field a Modification of , Caused the thread b Unable to read field b.

The second effect :

When the processor core0 Change the field a When the cache line is flushed back to memory , processor core1 Will sniff the field on the bus a The memory address of is being modified by another processor , So set your cache line to invalid . When a thread b The processor core1 Read fields from b The value of , Found that the cache line has been set to invalid ,core1 You need to read the field from memory again b Even if the field b Nothing has changed .

From the above two effects, we can see that the field a And fields b In fact, there is no sharing , There is no correlation between them , In theory, threads a To field a Any operation of , Should not affect threads b To field b Read or write .

But in fact, threads a To field a The modification of resulted in the field b stay core1 Cache rows in are locked (Lock Prefix instruction ), Thus, the thread b Unable to read field b.

Threads a On the processor core0 Change the field a After the cache line is synchronously flushed back to memory , Cause the field b stay core1 The cache line in is set to invalid ( Cache consistency protocol ), This leads to threads b You need to go back to memory to read the field b The value of cannot be used CPU Advantages of caching .

Due to fields a And field b In the same cache line , Resulting in the field a And field b De facto sharing ( Originally should not be shared ). This phenomenon is called False Sharing( False sharing ).

In a high concurrency scenario , This pseudo sharing problem , It will have a great impact on program performance .

If the thread a To field a Make changes , At the same time threads b To field b Also modified , This situation has a greater impact on performance , Because it can lead to core0 and core1 The corresponding cache lines in the are invalidated with each other .

4.3 False Sharing Solutions for

Since it leads to False Sharing The reason is that the field a And field b In the same cache line , So we have to find a way to make the field a And field b Not in a cache line .

So what can we do to make the field a And field b It must not be allocated to the same cache line ?

Now , The topic byte filling in this section comes in handy ~~

stay Java8 Before, we usually used to be in the field a And field b Before and after filling 7 individual long Type variable ( Cache line size 64 byte ), The purpose is to make the field a And field b One cache line is exclusive to each other to avoid False Sharing.

For example, we modify the initial example code to look like this , Fields can be guaranteed a And field b Each has its own cache line .

public class FalseSharding {

long p1,p2,p3,p4,p5,p6,p7;

volatile long a;

long p8,p9,p10,p11,p12,p13,p14;

volatile long b;

long p15,p16,p17,p18,p19,p20,p21;

}

The layout of the modified object in memory is as follows :

We see that in order to solve False Sharing problem , We will occupy 32 Bytes of FalseSharding The sample object is filled with 200 byte . The consumption of memory is very considerable . Usually for ultimate performance , We will work in some high concurrency frameworks or JDK In the source code of False Sharing Solution scenario . Because in high concurrency scenarios , Any small performance loss, such as False Sharing, Will be magnified infinitely .

But the solution False Sharing At the same time, it will bring huge memory consumption , So even in high concurrency frameworks, such as disrupter perhaps JDK It's just For shared variables that are frequently written in multithreaded scenarios .

What I want to emphasize here is that in our daily work , We can't just because we have a hammer in our hand , It's full of nails , If you see any nail, you want to hammer it twice .

We should clearly distinguish what impact and loss a problem will bring , Whether these impacts and losses are acceptable in our current business stage ? Is it a bottleneck ? At the same time, we should also clearly understand the price we have to pay to solve these problems . Be sure to comprehensively evaluate , Pay attention to an input-output ratio . Although some problems are problems , But in some stages and scenarios, we don't need to invest in solving . Some problems are bottlenecks for our current business development stage , We Cannot but To solve the . We are in architecture design or programming , The plan must Simple , appropriate . And estimate some advance, leaving a certain Evolution space .

4.3.1 @Contended annotation

stay Java8 A new annotation has been introduced in @Contended, For resolution False Sharing The problem of , At the same time, this annotation will also affect Java The fields in the object are arranged .

In the introduction of the previous section , We solved the problem by filling in the fields False Sharing The problem of , But there's also a problem here , Because we need to consider when manually filling in fields CPU The size of the cache row , Because although the cache line size of all mainstream processors is 64 byte , However, the cache line size of some processors is 32 byte , Some even 128 byte . We need to consider many hardware constraints .

Java8 Through the introduction of @Contended Annotations help us solve this problem , We no longer need to fill in fields manually . Let's take a look at @Contended How annotations help us solve this problem ~~

The manual byte filling described in the previous section is filled before and after the shared variable 64 Byte size space , This only ensures that the program has a cache line size of 32 Byte or 64 Bytes of CPU Next exclusive cache line . But if CPU The cache line size of is 128 byte , This still exists False Sharing The problem of .

introduce @Contended Annotations can make us ignore the differences of underlying hardware devices , Achieve Java The original intention of language : Platform independence .

@Contended By default, annotations are only in JDK The interior works , If we need to use... In our program code @Contended annotation , Then you need to turn on JVM Parameters

-XX:-RestrictContendedWill take effect .

@Retention(RetentionPolicy.RUNTIME)

@Target({ElementType.FIELD, ElementType.TYPE})

public @interface Contended {

//contention group tag

String value() default "";

}

@Contended Annotations can be marked on a class or on fields in a class , By @Contended The annotated object will monopolize the cache line , Cache lines are not shared with any variables or objects .

@Contended A label on a class represents... In an object of that class

Instance data as a wholeNeed exclusive cache lines . Cache rows cannot be shared with other instance data .@Contended Marked on a field in the class indicates that the field needs exclusive cache lines .

besides @Contended The concept of grouping is also provided , In the annotations value Attribute representation

contention group. Variables that belong to a unified grouping , They are stored continuously in memory , You can allow shared cache rows . Shared cache rows are not allowed between different groups .

Let's take a look at each of them @Contended How do annotations affect the arrangement of fields in these three usage scenarios .

@Contended Mark on class

@Contended

public class FalseSharding {

volatile long a;

volatile long b;

volatile int c;

volatile int d;

}

When @Contended Marked on FalseSharding When on the sample class , Express FalseSharding In the sample object The entire instance data area Need exclusive cache lines , Cannot share cache rows with other objects or variables .

Memory layout in this case :

As shown in the picture ,FalseSharding The sample class is labeled @Contended after ,JVM Will be in FalseSharding The instance data area of the sample object is filled before and after 128 Bytes , Ensure that the memory between the fields in the instance data area is continuous , And ensure that the entire instance data area has exclusive cache lines , Cache rows are not shared with data outside the instance data area .

Careful friends may have found the problem , We didn't mention that the size of the cache line is 64 Byte ? Why is it filled with 128 Bytes ?

Moreover, the manual filling introduced before is also filled 64 byte , Why? @Contended Annotations will use twice as much Cache line size to fill it ?

In fact, there are two reasons :

- First, the first reason , As we have already mentioned before , At present, most mainstream CPU The cache line is 64 byte , But there are also parts of it CPU The cache line is 32 Byte or 128 byte , If only fill 64 bytes , The cache line size is 32 Byte and 64 Bytes of CPU In, you can monopolize the cache line to avoid FalseSharding Of , But the cache line size is

128 byteOf CPU There will still be FalseSharding problem , here Java Adopted a pessimistic approach , The default is to fill128 byte, Although it is wasteful in most cases , But it shields the differences of the underlying hardware .

however @Contended The size of the annotation fill byte can be determined by JVM Parameters

-XX:ContendedPaddingWidthAppoint , Range of valid values0 - 8192, The default is128.

- The second reason is actually the most core reason , Mainly to prevent CPU Adjacent Sector Prefetch(CPU Adjacent sector prefetching ) Features bring FalseSharding problem .

CPU Adjacent Sector Prefetch:https://www.techarp.com/bios-guide/cpu-adjacent-sector-prefetch/

CPU Adjacent Sector Prefetch yes Intel Processor specific BIOS features , The default is enabled. The main function is to use The principle of program locality , When CPU Request data from memory , And read the cache line of the current request data , Will further prefetch The next cache line adjacent to the current cache line , So when our program processes data sequentially , Will increase CPU Processing efficiency . This also reflects the spatial locality feature in the principle of program locality .

When CPU Adjacent Sector Prefetch Characteristic by disabled When disabled ,CPU It will only get the cache line where the current request data is located , The next cache line will not be prefetched .

So when CPU Adjacent Sector Prefetch Enable (enabled) When ,CPU In fact, two cache lines are processed at the same time , under these circumstances , You need to fill twice the cache line size (128 byte ) To avoid CPU Adjacent Sector Prefetch What it brings is FalseSharding problem .

@Contended Marked on the field

public class FalseSharding {

@Contended

volatile long a;

@Contended

volatile long b;

volatile int c;

volatile long d;

}

This time we will @Contended The annotation is marked in FalseSharding Fields in the sample class a And field b On , The effect of this is that the fields a And field b Exclusive cache lines . In terms of memory layout , Field a And field b The front and back are filled 128 Bytes , To ensure that the field a And field b Cache rows are not shared with any data .

Instead of being @Contended Annotation field c And field d Continuously store... In memory , Cache lines can be shared .

@Contended grouping

public class FalseSharding {

@Contended("group1")

volatile int a;

@Contended("group1")

volatile long b;

@Contended("group2")

volatile long c;

@Contended("group2")

volatile long d;

}

This time we're going to a And fields b Put it in the same place content group Next , Field c And fields d Put it on another content group Next .

So in the same group group1 The fields below a And fields b It's continuously stored in memory , Cache lines can be shared .

In the same group group2 The fields below c And fields d It is also stored continuously in memory , Shared cache rows are also allowed .

However, cache rows cannot be shared between groups , So fill in before and after the field grouping 128 byte , To ensure that variables between groups cannot share cache lines .

5. Memory alignment

From the above we know that Java The instance data area field in the object needs memory alignment, resulting in JVM Will be rearranged and avoided by populating cache rows false sharding The purpose brought about by byte alignment filling .

We also learned that memory alignment does not just happen between objects , It also happens between fields in the object .

So in this section, the author will introduce you to what is memory alignment , Before the content of this section begins, the author will first ask two questions :

Why memory alignment ? If the head is more iron , Just out of memory alignment , What are the consequences ?

Java Why does the starting address of the object in the virtual machine heap need to be aligned to

8Multiple ? Why not all to 4 A multiple of or 16 A multiple of or 32 The multiple of ?

With these two questions , Now let's officially start this section ~~~

5.1 Memory structure

What we usually call memory is also called random access memory (random-access memory) Also called RAM. and RAM There are two kinds of :

One is static RAM(

SRAM), This kind of SRAM Used to introduce CPU Cache L1Cache,L2Cache,L3Cache. It is characterized by fast access speed , The access speed is1 - 30 individualClock cycle , But the capacity is small , High cost .The other is dynamic RAM(

DRAM), This kind of DRAM Used in what we often call main memory , It is characterized by slow access speed ( Relative cache ), The access speed is50 - 200 individualClock cycle , But the capacity is large , It's cheaper ( Relative cache ).

Memory consists of memory modules one by one (memory module) form , They are plugged into the expansion slot of the motherboard . Common memory modules are usually represented by 64 Bit as unit (8 Bytes ) Transfer data to or from the storage controller .

As shown in the figure, the black component on the memory module is the memory module (memory module). Multiple memory modules are connected to the storage controller , It is aggregated into main memory .

As mentioned earlier DRAM chip It's packaged in a memory module , Each memory module contains 8 individual DRAM chip , The serial number is 0 - 7.

And every one DRAM chip The storage structure is a two-dimensional matrix , The elements stored in a two-dimensional matrix are called superelements (supercell), Every supercell The size is one byte (8 bit). Every supercell By a coordinate address (i,j).

i Represents the row address in the two-dimensional matrix , In the computer, the row address is called RAS(row access strobe, Row access strobe ).

j Represents the column address in the two-dimensional matrix , In the computer, the column address is called CAS(column access strobe, Column access strobe ).

Image below supercell Of RAS = 2,CAS = 2.

DRAM chip The information in the flows in and out through the pin DRAM chip . Each pin carries 1 bit The signal of .

In the figure DRAM The chip contains two address pins (addr), Because we're going through RAS,CAS To locate the supercell. also 8 Data pins (data), because DRAM Chip IO The unit is one byte (8 bit), So we need to 8 individual data Pin from DRAM Chip incoming and outgoing data .

Note that this is only to explain the concept of address pin and data pin , The number of pins in the actual hardware is not necessarily .

5.2 DRAM Chip access

Now let's read the coordinate address in the figure above as (2,2) Of supercell For example , To illustrate access DRAM The process of chip .

First, the storage controller stores the row address

RAS = 2Send to... Through the address pinDRAM chip.DRAM Chip based on

RAS = 2Copy all the contents of the second row in the two-dimensional matrix toInternal row bufferin .Next, the storage controller will send... Through the address pin

CAS = 2To DRAM In chip .DRAM The chip from the internal line buffer according to

CAS = 2Copy out the... In the second column supercell And send it to the storage controller through the data pin .

DRAM Chip IO The unit is one supercell, That's one byte (8 bit).

5.3 CPU How to read and write main memory

Earlier, we introduced the physical structure of memory , And how to access... In memory DRAM Chip acquisition supercell Data stored in ( A byte ).

In this section, let's introduce CPU How to access memory .

It's about CPU We are introducing the internal structure of the chip false sharding I've already introduced it in detail when I was here , Here we mainly focus on CPU On the bus architecture between and memory .

5.3.1 Bus structure

CPU The data interaction with memory is through bus (bus) Accomplished , The transmission of data on the bus is completed through a series of steps , These steps are called bus transactions (bus transaction).

Where data is transferred from memory to CPU be called Read business (read transaction), Data from CPU Transfer to memory is called Write business (write transaction).

Signal transmission on the bus : Address signal , Data signals , Control signals . The control signal transmitted on the control bus can synchronize transactions , And can identify the transaction information currently being executed :

- The current transaction is to memory ? Or to the disk ? Or to other IO The equipment ?

- This business is read or write ?

- Address signals transmitted on the bus (

Memory address), Or data signals (data)?.

Remember what we said earlier MESI Cache consistency protocol ? When core0 Modify fields a The value of , other CPU The core will be on the bus

SniffingField a Memory address of , If a field appears on the bus a Memory address of , It means someone is modifying the field a, So other CPU The core willinvalidCache your own fields a Wherecache line.

As shown in the figure above , The system bus is connected CPU And IO bridge Of , The storage bus is to connect IO bridge And main memory .

IO bridge Be responsible for converting the electronic signal on the system bus into the electronic signal on the storage bus .IO bridge The system bus and storage bus will also be connected to IO Bus ( Disks, etc IO equipment ) On . Here we see IO bridge In fact, the function is to convert electronic signals on different buses .

5.3.2 CPU The process of reading data from memory

hypothesis CPU Now I want to change the memory address to A The contents of are loaded into the register for operation .

First CPU In the chip Bus interface A read transaction is initiated on the bus (read transaction). The read transaction is divided into the following steps :

CPU Put the memory address A Put it on the system bus . And then

IO bridgeTransfer the signal to the storage bus .The main memory feels the... On the storage bus

Address signalThe memory address on the storage bus is stored by the storage controller A Read out .Storage controller through memory address A Locate the specific memory module , from

DRAM chipTake out the memory address A Correspondingdata X.What the storage controller will read

data XPut it on the storage bus , And then IO bridge takeStorage busThe data signal on the is converted intoThe system busData signals on the , Then continue to pass... Along the system bus .CPU The chip senses the data signal on the system bus , Copy the data from the register to the system .

That's all CPU The complete process of reading memory data into registers .

But there is also an important process involved , Here we still need to spread out and introduce , That's how the storage controller passes Memory address A Read the corresponding... From main memory data X Of ?

Next, let's combine the memory structure introduced earlier and from DRAM The process of reading data from the chip , Let's generally introduce how to read data from main memory .

5.3.3 How to read data from main memory according to memory address

The previous introduction to , When the storage controller in main memory feels the... On the storage bus Address signal when , The memory address will be read from the storage bus .

Then it will locate the specific memory module through the memory address . Remember the memory module in the memory structure ??

Each memory module contains 8 individual DRAM chip , Number from 0 - 7.

The storage controller converts the memory address to DRAM In chip supercell Coordinate address in two-dimensional matrix (RAS,CAS). And send the coordinate address to the corresponding memory module . The memory module will then RAS and CAS Broadcast to all... In the memory module DRAM chip . Pass... In turn (RAS,CAS) from DRAM0 To DRAM7 Read the corresponding supercell.

We know one supercell Store 8 bit data , Here we are from DRAM0 To DRAM7

Read in turn 8 individual supercell That is to say 8 Bytes , And then 8 Bytes are returned to the storage controller , The storage controller places the data on the storage bus .

CPU Always with word size Read data from memory in , stay 64 In a bit processor word size by 8 Bytes .64 Bit memory can only be processed at a time 8 Bytes .

CPU One at a time

cache lineSize data (64 Bytes), But memory can only throughput at a time8 Bytes.

So in the memory module corresponding to the memory address ,DRAM0 chip Store the first low byte (supercell),DRAM1 chip Store the second byte ,...... By analogy DRAM7 chip Store the last high byte .

The unit of memory read and write at one time is 8 Bytes . And in the eyes of programmers, continuous memory addresses are actually physically discontinuous . Because this continuous

8 BytesIt's actually stored in differentDRAM chipUpper . Every DRAM The chip stores a byte (supercell).

5.3.4 CPU The process of writing data to memory

Let's now assume CPU To put the data in the register X Write to memory address A in . Same thing ,CPU The bus interface in the chip will initiate a write transaction to the bus (write transaction). The steps to write a transaction are as follows :

CPU Memory address to be written A Put it on the system bus .

adopt

IO bridgeSignal conversion , Put the memory address A To the storage bus .The memory controller senses the address signal on the memory bus , Put the memory address A Read it from the storage bus , And wait for the data to arrive .

CPU Copy the data in the register to the system bus , adopt

IO bridgeSignal conversion , Transfer data to the storage bus .The storage controller senses the data signal on the storage bus , Read the data from the storage bus .

Storage controller through memory address A Locate the specific memory module , Finally, write the data into the memory module 8 individual DRAM In chip .

6. Why memory alignment

We learned about the memory structure and CPU After the process of reading and writing memory , Now let's go back to the question at the beginning of this section : Why memory alignment ?

Next, the author introduces the reasons for memory alignment from three aspects :

Speed

CPU The unit of reading data is based on word size To the , stay 64 Bit processor word size = 8 byte , therefore CPU The unit of reading and writing data to memory is 8 byte .

stay 64 Bit memory , Memory IO Unit is 8 Bytes , We also mentioned earlier that the memory module in the memory structure is usually 64 Bit as unit (8 Bytes ) Transfer data to or from the storage controller . Because every time memory IO The read data is contained in the specific memory module where the data is located 8 individual DRAM In the chip with the same (RAM,CAS) Read one byte in turn , Then aggregate in the storage controller 8 Bytes Return to CPU.

Due to this in the memory module 8 individual DRAM Limitations of the physical storage structure composed of chips , Memory can only read data in address order 8 Sequential reading of bytes ----8 Bytes 8 Read data in bytes .

Suppose we now read

0x0000 - 0x0007On this contiguous memory address 8 Bytes . Because the memory is read according to8 BytesRead sequentially in units , The starting address of the memory address we want to read is 0(8 Multiple ), therefore 0x0000 - 0x0007 The coordinates of each address in the are the same (RAS,CAS). So he can 8 individual DRAM Through the same... In the chip (RAS,CAS) Read out at one time .If we read now

0x0008 - 0x0015On this continuous memory 8 Bytes are the same , Because the starting address of the memory segment is 8(8 Multiple ), So every memory address in this memory is DREAM The coordinate address in the chip (RAS,CAS) It's the same , We can also read it at one time .

Be careful :

0x0000 - 0x0007Coordinate address in memory segment (RAS,CAS) And0x0008 - 0x0015Coordinate address in memory segment (RAS,CAS) It's not the same .

- But if we read now

0x0007 - 0x0014On this continuous memory 8 A byte is different , Due to the starting address0x0007stay DRAM In the chip (RAS,CAS) And the back address0x0008 - 0x0014Of (RAS,CAS) inequality , therefore CPU We have to start with0x0000 - 0x0007Read 8 Bytes come out and put them intoResult registerCenter and move left 7 Bytes ( The purpose is to obtain only0x0007), then CPU In from0x0008 - 0x0015Read 8 Bytes come out, put them in the temporary register and move them to the right 1 Bytes ( The purpose is to get0x0008 - 0x0014) Finally, with the result registerOr operations. The resulting0x0007 - 0x0014On the address field 8 Bytes .

From the above analysis process , When CPU When accessing memory aligned addresses , such as 0x0000 and 0x0008 Both start addresses are aligned to 8 Multiple .CPU You can pass once read transaction Read out .

But when CPU When accessing addresses that are not aligned in memory , such as 0x0007 This starting address is not aligned to 8 Multiple .CPU Just twice read transaction To read the data .

Remember the question I asked at the beginning of the section ?

"Java Of objects in the virtual machine heapInitial addressWhy do I need to align to8 Multiple? Why not all to 4 A multiple of or 16 A multiple of or 32 The multiple of ?"

Now can you answer ???

Atomicity

CPU An aligned... Can be manipulated atomically word size memory.64 Bit processor word size = 8 byte .

Try to allocate in one cache line

I'm introducing false sharding When we mentioned the current mainstream processors cache line The size is 64 byte , The starting address of the object in the heap is aligned to... Through memory 8 Multiple , You can assign objects to a cache line as much as possible . An object whose memory start address is not aligned may be stored across cache lines , And that leads to CPU The execution efficiency of is slow 2 times .

One of the important reasons for the memory alignment of fields in objects is to make fields appear only in the same CPU In the cache line of . If the field is not aligned , It is possible to have fields across cached rows . in other words , Reading this field may require two cache rows to be replaced , The storage of this field will also pollute two cached rows at the same time . Both of these are detrimental to the efficiency of program execution .

In addition to 《2. Field rearrangement 》 The three field alignment rules described in this section , This is to ensure that the memory occupied by the instance data area is as small as possible on the basis of field memory alignment .

7. Compression pointer

After introducing the related contents of memory alignment , Let's introduce the compression pointer often mentioned earlier . Can pass JVM Parameters XX:+UseCompressedOops Turn on , Of course, the default is on .

Before starting this section , Let's start with a question , That's why compressed pointers are used ??

Suppose we are now preparing to 32 The bit system switches to 64 Bit system , At first, we might expect the system performance to improve immediately , But this may not be the case .

stay JVM The main reason for performance degradation in is 64 In bit system Object reference . We mentioned earlier ,64 References to objects and type pointers in the bit system 64 bit That is to say 8 Bytes .

This leads to the 64 The memory space occupied by object references in the bit system is 32 Twice the size in a bit system , Therefore, it indirectly leads to 64 More memory consumption and more frequent GC happen ,GC The amount of CPU The more time , So our application takes up CPU The less time you have .

Another is that the reference of the object becomes larger , that CPU There are relatively few cacheable objects , Increased access to memory . Combining the above points leads to the decline of system performance .

On the other hand , stay 64 The addressing space of memory in bit system is 2^48 = 256T, In reality, do we really need such a large addressing space ?? It doesn't seem necessary ~~

So we have new ideas : So should we switch back to 32 What about the bit system ?

If we switch back to 32 Bit system , How can we solve it in 32 There are more than... In the bit system 4G Memory addressing space ? Because now 4G The memory size of is obviously not enough for current applications .

I think the above questions , It was also in the beginning JVM Developers need to face and solve , Of course, they also gave a very perfect answer , That's it Using compressed pointers, you can 64 Use... In a bit system 32 Bit object reference gets more than 4G Memory addressing space .

7.1 How do you compress pointers ?

Remember what we mentioned earlier in the introduction to alignment padding and memory alignment , stay Java The starting address of the object in the virtual machine heap must be aligned to 8 Multiple Do you ?

Since the starting addresses of objects in the heap are aligned to 8 Multiple , So the object reference is when the compressed pointer is turned on 32 The last three bits of a binary are always 0( Because they can always be 8 to be divisible by ).

since JVM Having known the memory addresses of these objects, the last three digits are always 0, So these meaningless 0 There is no need to continue storing in the heap . contrary , We can use storage 0 the 3 position bit Store some meaningful information , So we have more 3 position bit Address space of .

So when storing ,JVM Or in accordance with 32 Bits to store , But the last three bits were originally used to store 0 Of bit Now it is used by us to store meaningful address space information .

When addressing ,JVM Will this 32 Bit object reference Move left 3 position ( The last three make up 0). This leads to when the compression pointer is turned on , We were 32 Bit memory addressing space becomes 35 position . Addressable memory space becomes 2^32 * 2^3 = 32G.

thus ,JVM Although some additional bit operations are performed, it greatly improves the addressing space , And reduce the memory occupied by object references by half , Save a lot of space . Besides, these bit operations are for CPU It is a very easy and lightweight operation

Through the principle of compressing pointers, I found Another important reason for memory alignment Is to align memory to 8 Multiple , We can do it in 64 Compressed pointers are used in bit systems through 32 Promote the address bit of the object to the address space 32G.

from Java7 Start , When maximum heap size Less than 32G When , The compression pointer is turned on by default . But when maximum heap size Greater than 32G When , The compression pointer will close .

So how can we further expand the addressing space when the compressed pointer is turned on ???

7.2 How to further expand the addressing space

As mentioned earlier, we are Java The starting address of objects in the virtual machine heap needs to be adjusted to 8 Multiple , But we can pass this value JVM Parameters -XX:ObjectAlignmentInBytes To change ( The default value is 8). Of course, the value must be 2 Power of power , The value range needs to be in 8 - 256 Between .

Because the object address is aligned to 8 Multiple , There will be more 3 position bit Let's store additional address information , And then 4G Increase the addressing space to 32G.

Same thing , If we were to ObjectAlignmentInBytes The value of is set to 16 Well ?

Object addresses are aligned to 16 Multiple , Then there will be more 4 position bit Let's store additional address information . The address space becomes 2^32 * 2^4 = 64G.

Through the above rules , We can know , stay 64 When the compression pointer is turned on in the bit system , Calculation formula of addressing range :4G * ObjectAlignmentInBytes = Addressing range .

But I don't suggest that you do it rashly , Because it increases ObjectAlignmentInBytes Although it can expand the addressing range , But it may also increase byte padding between objects , As a result, the compressed pointer does not achieve the original effect of saving space .

8. Memory layout of array objects

We are discussing a lot of space ahead Java The layout of ordinary objects in memory , In the last section, let's talk about Java How the array objects in are arranged in memory .

8.1 Memory layout of basic type array

The figure above shows the layout of basic type arrays in memory , The basic type array is in JVM of use typeArrayOop The structure represents , The basic type array type meta information is TypeArrayKlass The structure represents .

The memory layout of arrays is roughly the same as that of ordinary objects , The only difference is that there are more in the header of array type objects 4 Bytes The part used to represent the length of the array .

Let's start pointer compression and close pointer compression respectively , This is illustrated by the following example :

long[] longArrayLayout = new long[1];

Turn on pointer compression -XX:+UseCompressedOops

We can see that the red box is one more in the header of the array type object 4 byte The size is used to represent the length of the array .

Because in our example long Type array has only one element , So the size of the instance data area is only 8 byte . If in our example long Type array becomes two elements , Then the size of the instance data area will become 16 byte , And so on .................

Turn off pointer compression -XX:-UseCompressedOops

When pointer compression is turned off , In the object header MarkWord Or occupy 8 Bytes , But the type pointer starts from 4 Bytes become 8 Bytes . The array length property remains unchanged 4 Bytes .

Here we find that the alignment filling occurs between the instance data area and the object header . Do you remember why ??

We introduced three field rearrangement rules in the field rearrangement section, which continue to apply here :

The rules 1: If a field occupiesXBytes , So the offset of this field OFFSET You need to align toNX.The rules 2: When the compression pointer is turned on 64 position JVM in ,Java Of the first field in the class OFFSET You need to align to4N, When the compressed pointer is turned off, the value of the first field in the class OFFSET You need to align to8N.

Here, the instance data area of the basic array type is long type , When pointer compression is turned off , According to rules 1 And rules 2 You need to align to 8 Multiple , So fill in between it and the object header 4 Bytes , Achieve the purpose of memory alignment , The starting address changes to 24.

8.2 Memory layout of reference type array

The above figure shows the layout of the reference type array in memory , Reference type array in JVM of use objArrayOop The structure represents , The basic type array type meta information is ObjArrayKlass The structure represents .

Similarly, there will also be a in the object header of the reference type array 4 byte The size is used to represent the length of the array .

Let's start pointer compression and close pointer compression respectively , This is illustrated by the following example :

public class ReferenceArrayLayout {

char a;

int b;

short c;

}

ReferenceArrayLayout[] referenceArrayLayout = new ReferenceArrayLayout[1];

Turn on pointer compression -XX:+UseCompressedOops

The biggest difference between the reference array type memory layout and the basic array type memory layout lies in their instance data area . Since the compression pointer is turned on , So the memory occupied by object reference is 4 Bytes , In our example, the reference array contains only one reference element , So there are only... In the instance data area 4 Bytes . The same reason , If the reference array in the example contains two reference elements , Then the instance data area will become 8 Bytes , And so on .......

Finally, because of Java Object needs memory aligned to 8 Multiple , Therefore, the instance data area of the reference array is filled with 4 Bytes .

Turn off pointer compression -XX:-UseCompressedOops

When the compression pointer is turned off , The memory occupied by object reference changes to 8 Bytes , Therefore, the instance data area referring to the array type occupies 8 Bytes .

Rearrange rules according to fields 2, Fill in the middle between the object header of reference array type and the instance data area 4 Bytes To ensure memory alignment .

summary

In this paper, the author introduces in detail Java Memory layout of ordinary objects and array type objects , And the calculation method of memory occupied by related objects .

And three important rules for field rearrangement of instance data area in object memory layout . And the following is led out by the aligned filling of bytes false sharding problem , also Java8 In order to solve false sharding And the introduction of @Contented The principle and usage of annotation .

In order to clarify the underlying principle of memory alignment , The author also spent a lot of space explaining the physical structure of memory and CPU The whole process of reading and writing memory .

Finally, the working principle of compressed pointer is derived from memory alignment . From this, we know four reasons for memory alignment :

CPU Access performance: When CPU When accessing memory aligned addresses , Through a read transaction Read a word long (word size) The size of the data comes out . Otherwise you need two read transaction.Atomicity: CPU An aligned... Can be manipulated atomically word size memory.Make the most of CPU cache: Memory alignment allows objects or fields to be allocated to a cache line as much as possible , Avoid storing across cached rows , Lead to CPU Halve execution efficiency .Increase the memory address space of the compressed pointer :Memory alignment between objects , Can make us in 64 Use... In a bit system 32 Bit object references promote memory addressing space to 32G. It not only reduces the memory occupation of object reference , It also improves the memory addressing space .

In this article, we also introduce several problems related to memory layout JVM Parameters :

- -XX:+UseCompressedOops

- -XX +CompactFields

- -XX:-RestrictContended

- -XX:ContendedPaddingWidth

- -XX:ObjectAlignmentInBytes

Finally, thank you for seeing here , See you next time ~~~

Welcome to the official account :bin Technology house

Heavy hard core | The object of a conversation is JVM Memory layout in , And more related articles on the principle and application of memory alignment and compression pointer

- Java The object is JVM Life cycle in

When you pass new Statement to create a java Object time ,JVM A memory space will be allocated to this object , As long as the object is referenced by a referenced variable , Then the object will always reside in memory , otherwise , It will end the life cycle ,JVM At the right time ...

- Classes and objects are in JVM How is it stored in , Half of them couldn't answer !

Preface This blog is mainly about classes and objects JVM How is it stored in , because JVM It's a very big subject , So I'll divide him into many chapters to elaborate on , The exact number has not yet been decided , Of course it doesn't matter , The point is whether you can learn from the article , Whether the J ...

- Ali P7 Arrangement “ hardcore ” Interview document :Java Basics + database + Algorithm + Frame technology, etc

There are more and more programmers now , Most programmers want to be able to work in large factories , But there is a gap in everyone's ability , So not everyone can step in BATJ. Even so , But we can't slack off in the workplace for a moment , Since yes BATJ Be curious , Then we have to face this ...

- hardcore ! Eight pictures to understand Flink End to end processing semantics exactly once Exactly-once( Deep principle , Recommended collection )

Flink stay Flink There are three locations that need end-to-end processing at a time : Source End : Data goes from the last stage to Flink when , We need to ensure that the information is accurate and consumed at one time . Flink Inner end : We already know about this , utilize Ch ...

- 《 thorough Java Virtual machine learning notes 》- The first 7 Chapter Type of life cycle / The object is JVM Life cycle in

One . The beginning of the type life cycle As shown in the figure Initialization time all Java The virtual machine implementation must be initialized the first time each class or interface is actively used : The following situations meet the requirements of active use : When you create a new instance of a class ( Or by executing in bytecode new Instructions ...

- Hibernate series 06 - The object is JVM Life cycle in

Boot directory : Hibernate Series of tutorials Catalog Java Object passing new Command to create ,Java virtual machine (Java Virtual Machine,JVM) For the new Java Object to open up a new space in memory for storing times ...

- jvm details ——2、Java The object is jvm The size in

Java Size of object The size of the basic data type is fixed , Not much here . For non basic types of Java object , Its size is questionable . stay Java in , An empty Object The size of the object is 8byte, This size is just a storage heap without any ...

- c++ What is the object model , Memory layout and structure of objects

stay c++ The initial stage of invention is for c++ The debate on object model never stopped until the Standards Committee passed the final c++ The object model has become a dust bowl .C++ The object model is probably the last thing to explain , But I have to say it again because c++ The first to get started ...

- 10 Java Memory layout of objects

Java How to create objects 1:new Statement and reflection mechanism creation . This method will call the constructor of the class , Meet many constraints at the same time . If a class has no constructor ,Java The compiler automatically adds a parameterless constructor . The constructor of the subclass needs to call the constructor of the parent class ...

- 【JVM Memory and garbage collection 】 Object instantiation, memory layout and access location

Object instantiation, memory layout and access location In terms of their specific memory allocation new Objects in the heap The type information of the object is placed in the method area The local variables in the method are placed in the stack space this new How to glue the three pieces together That's what this chapter is about ...

Random recommendation

- yum

yum repolist: List all available repo grouplist: List all package groups clean {all|packages|metadata|expire-cache|rpmdb|plugins} : ...

- HDU 4632 Palindrome subsequence(DP)

Topic link I have no choice but to do it , At that time, the thinking was very confused , In a flurry , I had an idea , You can do it , But it needs to be optimized . fuck , The train of thought deviated , Dig your own hole , After closing the list , Just came out of the pit , So many teams , It started so fast , It should not be done in this way ...

- sql server database ' ' There are grammar errors nearby

When I was working on the project yesterday , Encountered a problem with the title , Code trace handle sql sentence The copy cannot be executed in the database , Then write a new one exactly the same , And then in Assign to the code , The same mistake , I just don't know what went wrong , Last hold sql The sentence is written as ...

- sublime text3 Some of the tips for recording ( with gif chart )

Slow update 1. Working on multiple lines of data at the same time . Example : Choose the blocks you need , Then press ctrl+shift+L key , And then according to the end perhaps home key .

- javascript The browser executes a breakpoint

stay javascript There is a statement in the code that can trigger the breakpoint when the browser executes here , The order is debugger useful

- Mysq 5.7l Service failed to start , No errors were reported

The system crashed yesterday , Then reassembled Mysql 5.7 Installation steps, problems encountered and solutions . Go to the official website to download Mysql 5.7 Unzip the package (zip), Unzip to the directory you want to install . My installation directory is :D:\Java\Mysql Installation steps ...

- ASP.NET Core MVC – Tag Helpers Introduce

ASP.NET Core Tag Helpers Series catalog , This is the first one , Five articles in total : ASP.NET Core MVC – Tag Helpers Introduce ASP.NET Core MVC – Caching ...

- Android Basic knowledge of learning 4 -Activity Activities 6 speak ( Experience Activity Life cycle of )

One . Experience the execution of the life cycle of activities Code composition : 1. Three Java class :MainActivity.java.NormalActivity.java.DialogActivity.java 2. Three layout files :ac ...

- HAproxy adopt X-Forwarded-For Get the real user of the upper layer of the agent IP Address

Now there is a scene for us haproxy As a reverse proxy , But we received an anti DDoS equipment . So now haproxy Records of the IP Are all anti DDoS The equipment IP Address , Can't get the user's truth IP such , We are haproxy On ...

- use python Implement a simple one socket Internet chat communication (Linux --py2.7 Platform and windows--py3.6 platform )

windows --> windows The writing methods are py3.6 Client writing import socket client = socket.socket() client.connect(('192 ...