
Spark computation operators and some small details in Linux

2022-07-06 17:39:00 【Bald Second Senior brother】

Spark operators

map operator:

    import org.apache.spark.{SparkConf, SparkContext}

    object Spark01_Oper {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[*]").setAppName("Value")
        val sc = new SparkContext(conf)
        val make = sc.makeRDD(1 to 10)
        // map operator: multiply every element by 2
        val mapRdd = make.map(x => x * 2)
        mapRdd.collect().foreach(println)
        sc.stop()
      }
    }

The map operator applies the function to the data in every partition, one element at a time.
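Here is a way to see what "one element at a time" means without a cluster. The following is a plain-Scala model, not the Spark API, and it rests on two assumptions made for illustration. An RDD is represented as a `Seq` of partitions. The hypothetical helper `mapModel` applies the function to each element of each partition and counts how often the function is called.

```scala
// Plain-Scala model of map; NOT Spark code.
// An "RDD" is modelled as a Seq of partitions, each partition a Seq of elements.
object MapModel {
  type Partitions[T] = Seq[Seq[T]]

  // Hypothetical helper: apply f to every element of every partition, one element at a time.
  def mapModel[T, U](parts: Partitions[T])(f: T => U): Partitions[U] =
    parts.map(partition => partition.map(f))

  def main(args: Array[String]): Unit = {
    var calls = 0
    val parts: Partitions[Int] = Seq(Seq(1, 2, 3, 4, 5), Seq(6, 7, 8, 9, 10))
    val doubled = mapModel(parts) { x => calls += 1; x * 2 }
    println(doubled.flatten.mkString(","))  // 2,4,6,8,10,12,14,16,18,20
    println(s"f called $calls times")       // f called 10 times
  }
}
```

The function runs once per element, so with 10 elements it is called 10 times regardless of how many partitions there are.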

mapPartitions operator

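    import org.apache.spark.{SparkConf, SparkContext}

    object Spark02_OPer {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[*]").setAppName("mapPart")
        val sc = new SparkContext(conf)
        // mapPartitions operator
        val list = sc.makeRDD(1 to 10)
        // datas is an Iterator over all elements of one partition; the function must return an Iterator
        val mapPartRdd = list.mapPartitions(datas => datas.map(data => data * 2))
        mapPartRdd.collect().foreach(println)
        sc.stop()
      }
    }

The mapPartitions operator is similar to map, but it processes the data one partition at a time. Its function is called once per partition. It receives an Iterator over all the elements of that partition and returns an Iterator with the results.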
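The same plain-Scala model can sketch the per-partition behaviour. It is an illustration, not the Spark API. The hypothetical `mapPartitionsModel` hands the function one `Iterator` per partition and counts the calls. With two partitions, the function runs twice, not ten times.

```scala
// Plain-Scala model of mapPartitions; NOT Spark code.
object MapPartitionsModel {
  // Hypothetical helper: f is called once per partition with an Iterator over that
  // partition, and must return an Iterator of results.
  def mapPartitionsModel[T, U](parts: Seq[Seq[T]])(f: Iterator[T] => Iterator[U]): Seq[List[U]] =
    parts.map(partition => f(partition.iterator).toList)

  def main(args: Array[String]): Unit = {
    var calls = 0
    val parts = Seq(Seq(1, 2, 3, 4, 5), Seq(6, 7, 8, 9, 10))
    val doubled = mapPartitionsModel(parts) { datas => calls += 1; datas.map(_ * 2) }
    println(doubled.flatten.mkString(","))  // 2,4,6,8,10,12,14,16,18,20
    println(s"f called $calls times")       // f called 2 times
  }
}
```

Inside the function, `datas.map(_ * 2)` still touches every element, but any per-call setup in the function body runs only once per partition.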

mapPartitionsWithIndex operator

    import org.apache.spark.{SparkConf, SparkContext}

    object Spark03_OPer {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[*]").setAppName("With")
        val sc = new SparkContext(conf)
        // 10 elements split into 2 partitions
        val list = sc.makeRDD(1 to 10, 2)
        // num is the partition index, datas iterates over that partition's elements
        val indexRDD = list.mapPartitionsWithIndex {
          case (num, datas) => datas.map((_, "partition number: " + num))
        }
        indexRDD.collect().foreach(println)
        sc.stop()
      }
    }

The mapPartitionsWithIndex operator is similar to mapPartitions, but func also receives the index of the partition it is processing. This adds one extra Int parameter to func: its type is (Int, Iterator[T]) => Iterator[U] instead of Iterator[T] => Iterator[U].
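The extra index parameter can be modelled in plain Scala as well. This is not the Spark API. The hypothetical `mapPartitionsWithIndexModel` pairs each partition with its position via `zipWithIndex`. The sketch assumes that `makeRDD(1 to 10, 2)` splits the data into 1-5 and 6-10.

```scala
// Plain-Scala model of mapPartitionsWithIndex; NOT Spark code.
object MapPartitionsWithIndexModel {
  // Hypothetical helper: f additionally receives the partition index (0, 1, ...).
  def mapPartitionsWithIndexModel[T, U](parts: Seq[Seq[T]])(
      f: (Int, Iterator[T]) => Iterator[U]): Seq[List[U]] =
    parts.zipWithIndex.map { case (partition, index) => f(index, partition.iterator).toList }

  def main(args: Array[String]): Unit = {
    // Assumed split of 1 to 10 into 2 partitions: 1-5 and 6-10
    val parts = Seq((1 to 5).toList, (6 to 10).toList)
    val tagged = mapPartitionsWithIndexModel(parts) {
      case (num, datas) => datas.map((_, "partition number: " + num))
    }
    // prints (1,partition number: 0) through (10,partition number: 1)
    tagged.flatten.foreach(println)
  }
}
```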

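Possible problems in Spark:

Every computation produces new data. If that data is not released, it keeps accumulating, which can eventually cause a memory overflow (OOM). This risk is most often mentioned for mapPartitions: if the function collects a whole partition into memory, for example by converting its Iterator into a List, none of that partition's data can be freed until the whole partition has been processed.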
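The memory concern comes down to whether a partition's data is streamed or held all at once. The following plain-Scala illustration is not Spark code. It counts how many elements of a simulated 1000-element partition have been processed by the time the first result is read. When the Iterator is mapped lazily, only one element has been processed. Materializing it to a List first forces all 1000 elements into memory.

```scala
// Plain-Scala illustration; NOT Spark code.
// Counts how many elements of a "partition" are processed before the first result
// is available, with and without materializing the partition.
object LazyVsMaterialized {
  def firstResult(materialize: Boolean): (Int, Int) = {
    var processed = 0
    val datas: Iterator[Int] = (1 to 1000).iterator   // one partition's data
    val f: Int => Int = { x => processed += 1; x * 2 }
    val out: Iterator[Int] =
      if (materialize) datas.map(f).toList.iterator   // whole partition held in a List
      else datas.map(f)                               // elements pulled one at a time
    val first = out.next()
    (first, processed)
  }

  def main(args: Array[String]): Unit = {
    println(firstResult(materialize = false))  // (2,1)
    println(firstResult(materialize = true))   // (2,1000)
  }
}
```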

The difference between Driver and Executor

Driver:

The class that creates the Spark context (SparkContext) can be called the Driver. The Driver runs the main program of a Spark Application; it can be understood as the main program of the Spark code we write. It is also responsible for assigning tasks to the Executors. An application has only one Driver.

Executor:

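Executors are the processes in Spark that carry out the actual computation, using the resources (CPU cores and memory) allocated to them. An application can have multiple Executors.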

Difference:

The Driver is like a boss, and the Executors are its workers: the Driver assigns tasks to the Executors, which execute them.
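The boss/worker split can be pictured as a single-process toy model. It is not how Spark actually schedules work. In this sketch, the "driver" turns each partition into one task, hands the tasks to "executors", and gathers the results in partition order, much like `collect()`. `Task`, `Executor` and `runJob` are hypothetical names. The round-robin assignment is a simplifying assumption: Spark's real scheduler also considers free cores and data locality.

```scala
// Toy, single-process model of the Driver/Executor split; NOT how Spark schedules tasks.
object DriverExecutorModel {
  // One task per partition (hypothetical type)
  final case class Task(partitionId: Int, data: Seq[Int])

  // A hypothetical "executor" simply runs the tasks it is handed
  final class Executor(val id: Int) {
    def run(task: Task, f: Int => Int): (Int, Seq[Int]) = {
      println(s"executor $id runs the task for partition ${task.partitionId}")
      (task.partitionId, task.data.map(f))
    }
  }

  // The "driver": splits the job into tasks, assigns them round-robin to executors,
  // and gathers the results back in partition order.
  def runJob(parts: Seq[Seq[Int]], numExecutors: Int, f: Int => Int): Seq[Int] = {
    val executors = (0 until numExecutors).map(i => new Executor(i))
    val tasks = parts.zipWithIndex.map { case (data, i) => Task(i, data) }
    val results = tasks.map(task => executors(task.partitionId % numExecutors).run(task, f))
    results.sortBy(_._1).flatMap(_._2)
  }

  def main(args: Array[String]): Unit = {
    val parts = Seq(Seq(1, 2, 3), Seq(4, 5, 6), Seq(7, 8, 9, 10))
    println(runJob(parts, numExecutors = 2, f = _ * 2).mkString(","))
  }
}
```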

Linux odds and ends

Ways to shut down and restart Linux

1. Shut down: shutdown -h now; restart: shutdown -r now

2. Shut down: init 0; restart: init 6

3. Shut down: poweroff; restart: reboot

The difference between service and systemctl

service:

service can start, stop and restart system services, and it can also display the current status of all system services. It works by finding the corresponding service script in the /etc/init.d directory and starting or stopping it.

systemctl:

systemctl is a systemd tool, mainly responsible for controlling the systemd system and service manager. It combines the functions of the service and chkconfig commands.

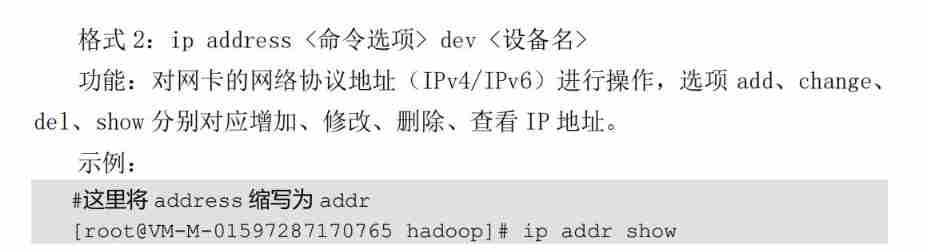

Network device operations:

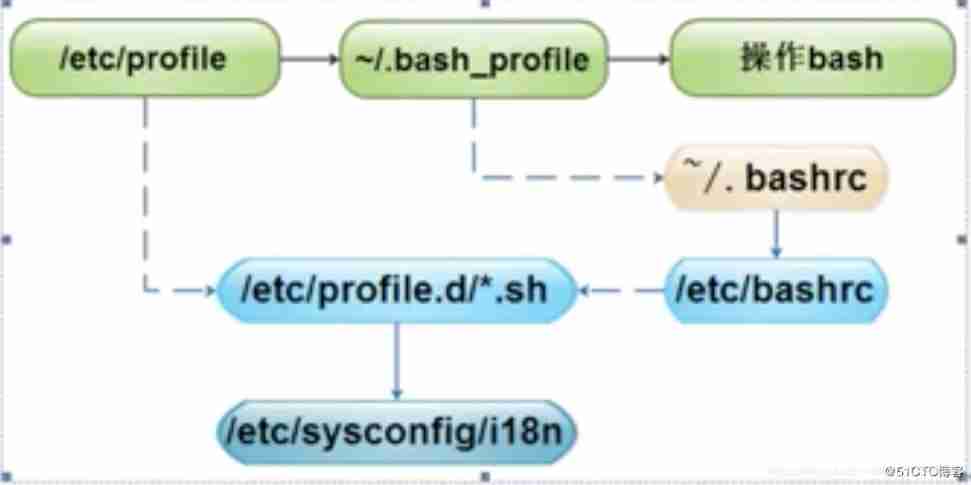

Environment variable loading order

边栏推荐

- Pyspark operator processing spatial data full parsing (5): how to use spatial operation interface in pyspark

- 连接局域网MySql

- Grafana 9 正式发布,更易用,更酷炫了!

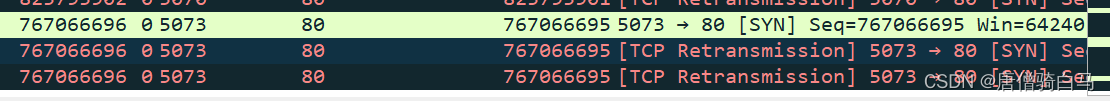

- 全网最全tcpdump和Wireshark抓包实践

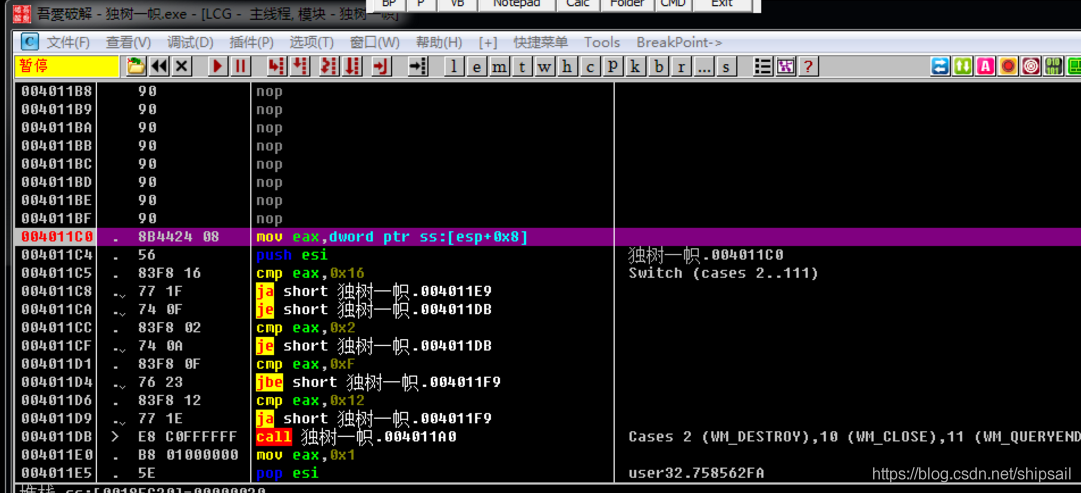

- [reverse intermediate] eager to try

- Vscode matches and replaces the brackets

- Interpretation of Flink source code (I): Interpretation of streamgraph source code

- Redis quick start

- List集合数据移除(List.subList.clear)

- Wu Jun's trilogy experience (VII) the essence of Commerce

猜你喜欢

PyTorch 提取中间层特征?

How does wechat prevent withdrawal come true?

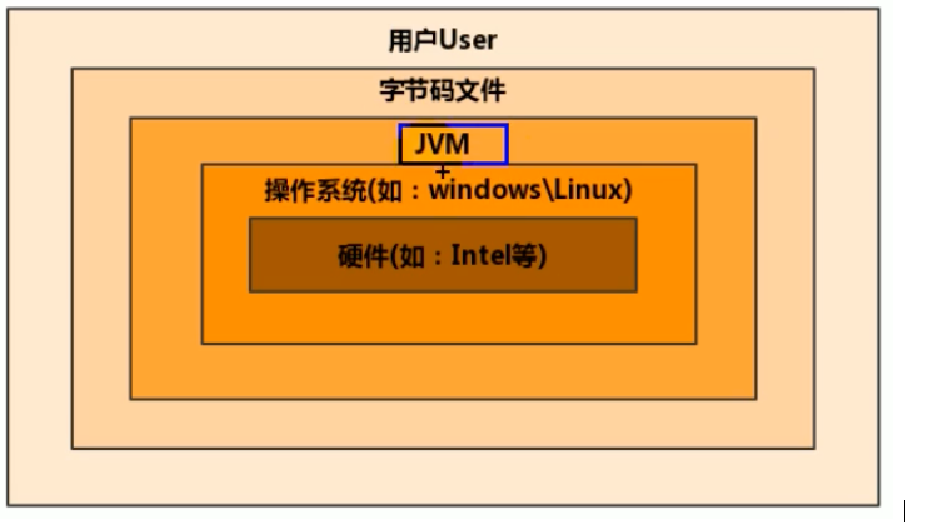

1. Introduction to JVM

![[rapid environment construction] openharmony 10 minute tutorial (cub pie)](/img/b5/feb9c56a65c3b07403710e23078a6f.jpg)

[rapid environment construction] openharmony 10 minute tutorial (cub pie)

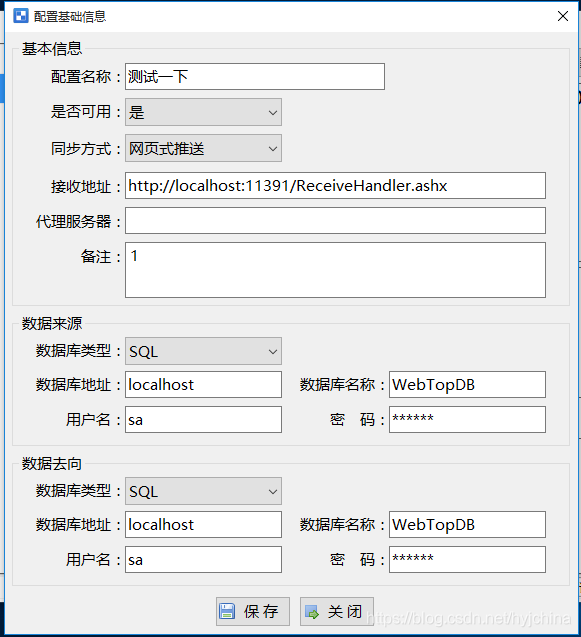

04 products and promotion developed by individuals - data push tool

TCP连接不止用TCP协议沟通

【逆向初级】独树一帜

![Case: check the empty field [annotation + reflection + custom exception]](/img/50/47cb40e6236a0ba34362cdbf883205.png)

Case: check the empty field [annotation + reflection + custom exception]

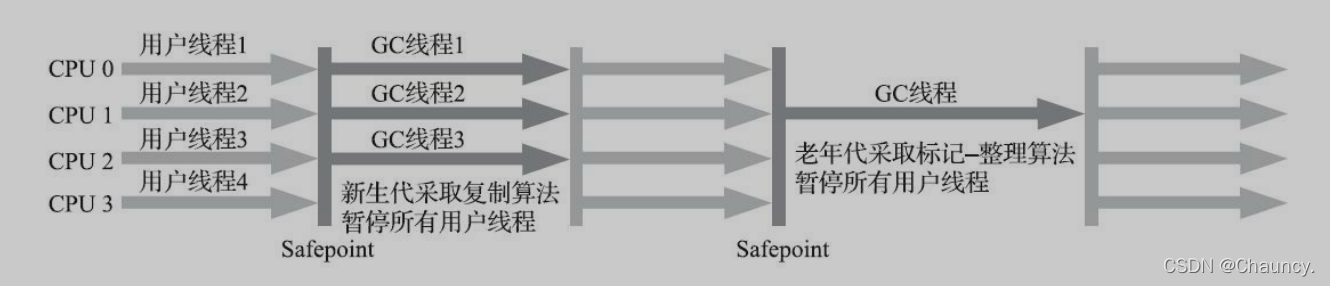

Serial serialold parnew of JVM garbage collector

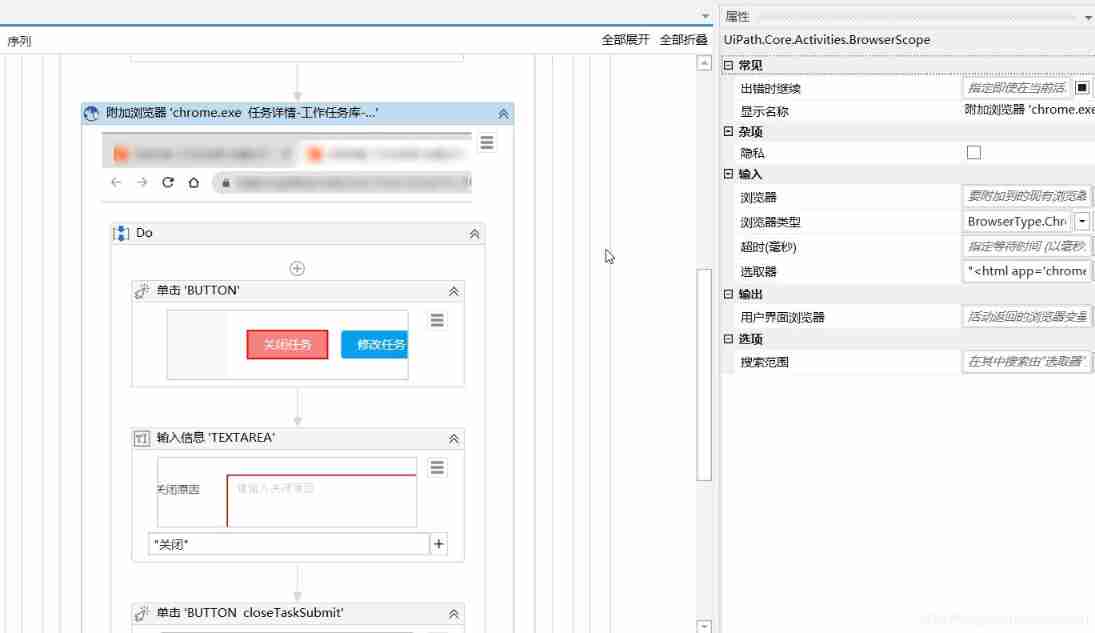

Uipath browser performs actions in the new tab

随机推荐

Based on infragistics Document. Excel export table class

Xin'an Second Edition: Chapter 25 mobile application security requirements analysis and security protection engineering learning notes

Pyspark operator processing spatial data full parsing (5): how to use spatial operation interface in pyspark

About selenium starting Chrome browser flash back

Solrcloud related commands

全网最全tcpdump和Wireshark抓包实践

The art of Engineering (3): do not rely on each other between functions of code robustness

[reverse primary] Unique

学 SQL 必须了解的 10 个高级概念

MySQL advanced (index, view, stored procedure, function, password modification)

[getting started with MySQL] fourth, explore operators in MySQL with Kiko

Flink parsing (V): state and state backend

当前系统缺少NTFS格式转换器(convert.exe)

JVM garbage collector part 1

Detailed explanation of data types of MySQL columns

[CISCN 2021 华南赛区]rsa Writeup

05 personal R & D products and promotion - data synchronization tool

Garbage first of JVM garbage collector

MySQL basic addition, deletion, modification and query of SQL statements

C#WinForm中的dataGridView滚动条定位