
Troubleshooting persistently high Redis memory usage in a production environment

2022-07-05 11:44:00 【We've been on the road】

After a routine release, the server went back online and we found that cached entries were expiring far sooner than the configured 10 minutes.

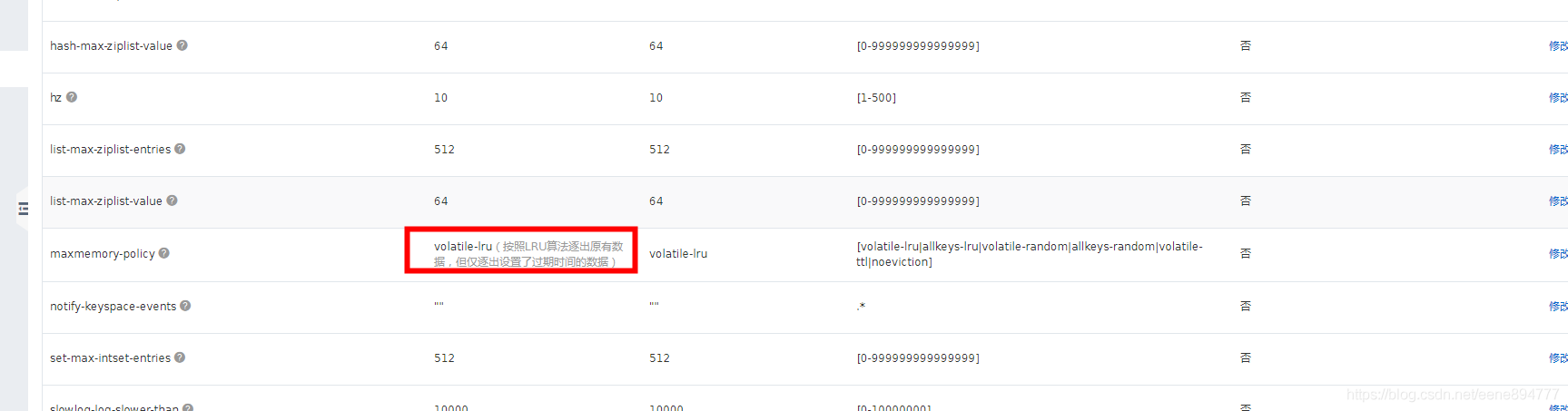

Symptoms: shortly after logging in to the admin backend, the cached session expired within about 1 minute and the backend could no longer be accessed. On the Alibaba Cloud Redis server, the login key turned out to have already expired. Various other strange problems appeared during this period too, such as users logging in successfully and then being inexplicably kicked offline. The Alibaba Cloud console then showed Redis memory usage at 100%. My guess was that some threads were writing large amounts of data to the cache and had filled Redis up. The Redis server is configured with the volatile-lru policy: when memory is full and writes keep arriving, Redis evicts the least recently used keys among those that have an expiration time set.
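The effect of volatile-lru described above can be illustrated with a toy model. This is only a sketch, not how Redis is implemented: it counts keys rather than bytes, it uses exact LRU ordering where Redis samples keys and approximates LRU, and the class name `VolatileLruCache` and all key names are hypothetical. It shows why a login key with a TTL gets evicted once never-expiring data fills the available space, and why later writes then fail.

```python
from collections import OrderedDict


class VolatileLruCache:
    """Toy model of the volatile-lru policy (assumption: capacity is a key count)."""

    def __init__(self, max_keys):
        self.max_keys = max_keys
        # key -> has_ttl flag; insertion order runs from least to most recently used
        self.data = OrderedDict()

    def get(self, key):
        """Return True if the key exists, and mark it as recently used."""
        if key in self.data:
            self.data.move_to_end(key)
            return True
        return False

    def set(self, key, has_ttl):
        """Write a key, evicting least recently used keys that have a TTL if full."""
        if key in self.data:
            self.data[key] = has_ttl
            self.data.move_to_end(key)
            return
        while len(self.data) >= self.max_keys:
            # Only keys with an expiration time are eviction candidates.
            victim = next((k for k, ttl in self.data.items() if ttl), None)
            if victim is None:
                raise MemoryError("OOM: no key with a TTL left to evict")
            del self.data[victim]
        self.data[key] = has_ttl


cache = VolatileLruCache(max_keys=5)
cache.set("session:admin", has_ttl=True)         # login key with an expiration time
for i in range(5):
    cache.set(f"queue:item:{i}", has_ttl=False)  # permanent data keeps arriving

print("session:admin" in cache.data)  # False: the only evictable key was evicted
```

In this model, one more permanent write after the loop raises `MemoryError`, because no key with a TTL remains to be evicted.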

So I downloaded a copy of the current RDB file (a memory dump) from the Redis server to analyze locally which key was being written so heavily. Once that key is identified, the code that uses it can be located in the program and fixed accordingly.

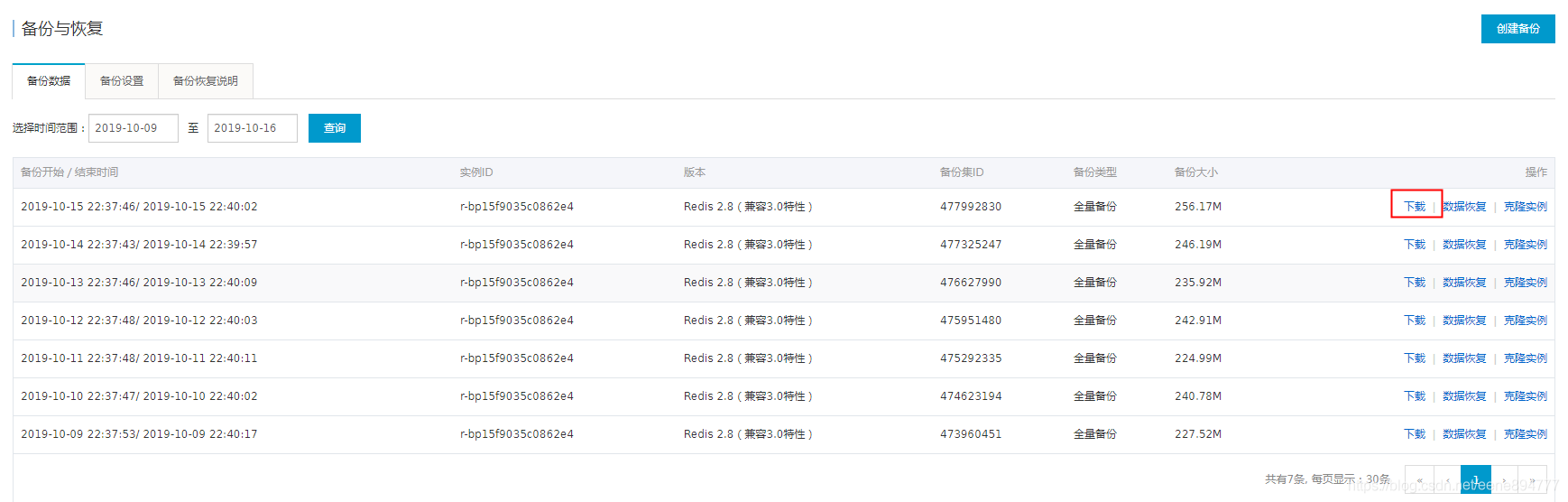

Log in to Alibaba Cloud and go to the latest backup.

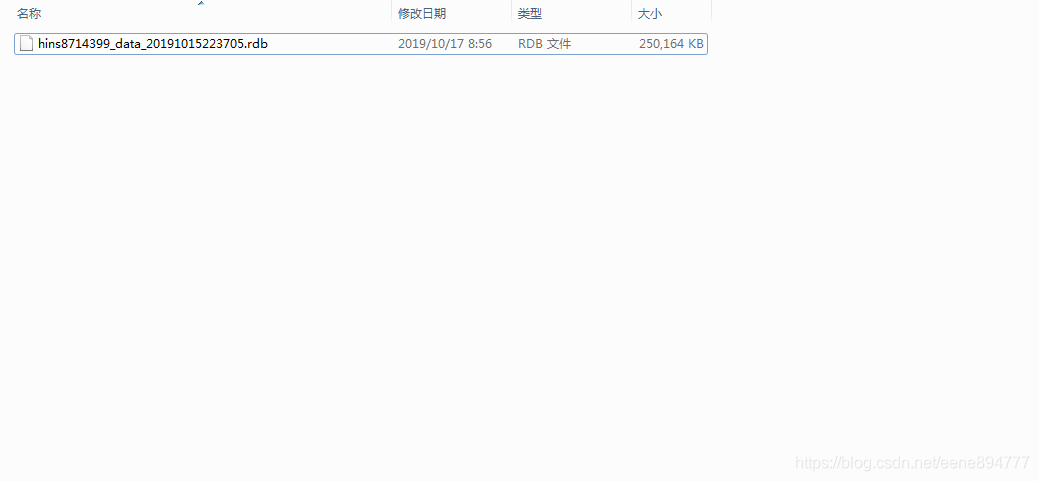

1. Download the RDB file containing the full set of keys.

Then run the following commands to install Python 2.7 and the rdbtools package, and generate the CSV file.

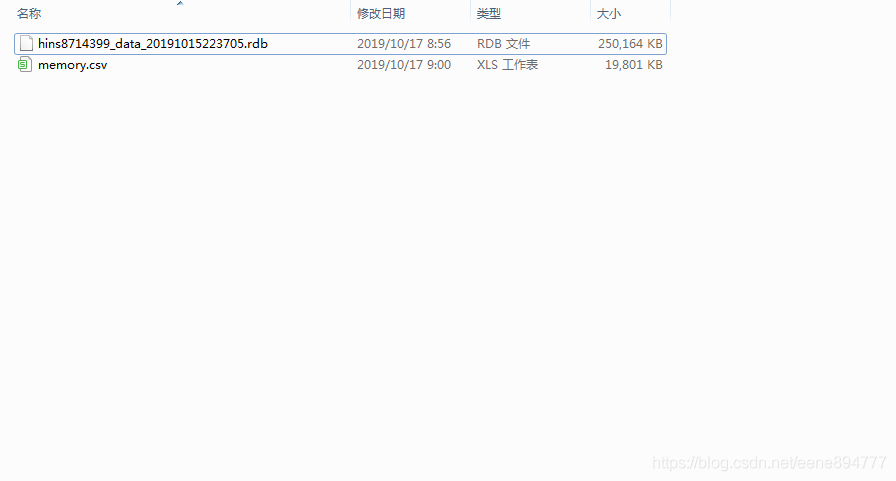

2. Convert the RDB file to a CSV file with rdbtools.

- `pip install rdbtools`: installs rdbtools, which provides the `rdb` command.

- `rdb -c memory hins8714399_data_20191015223705.rdb > memory.csv`: writes a per-key memory report of the Redis snapshot file to a CSV file.

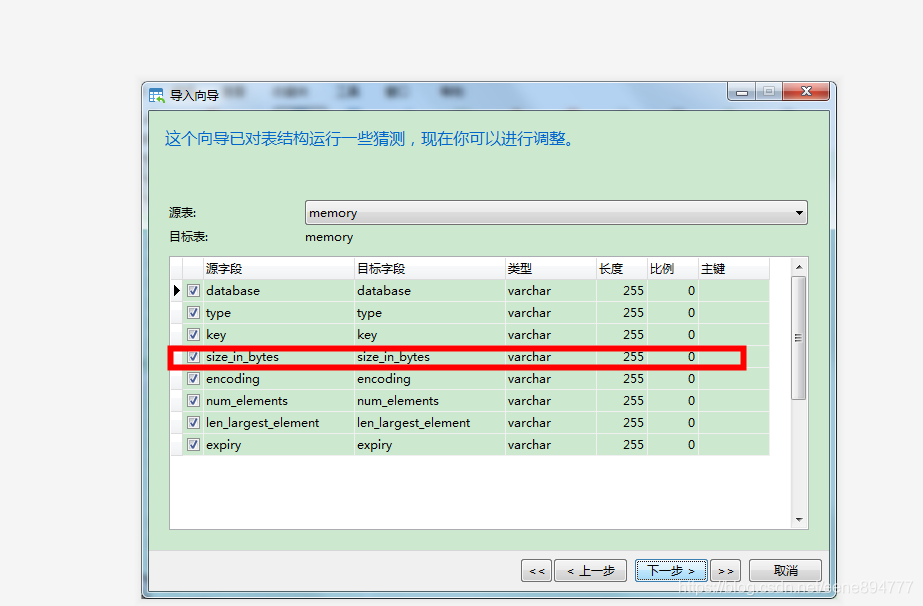

3. Import the CSV file directly into a MySQL database, then use MySQL queries to find the keys that consume the most memory.

Note: when a CSV file is imported into MySQL, the generated columns default to varchar. Change the size column (size_in_bytes) to an int type so that it sorts numerically in queries.

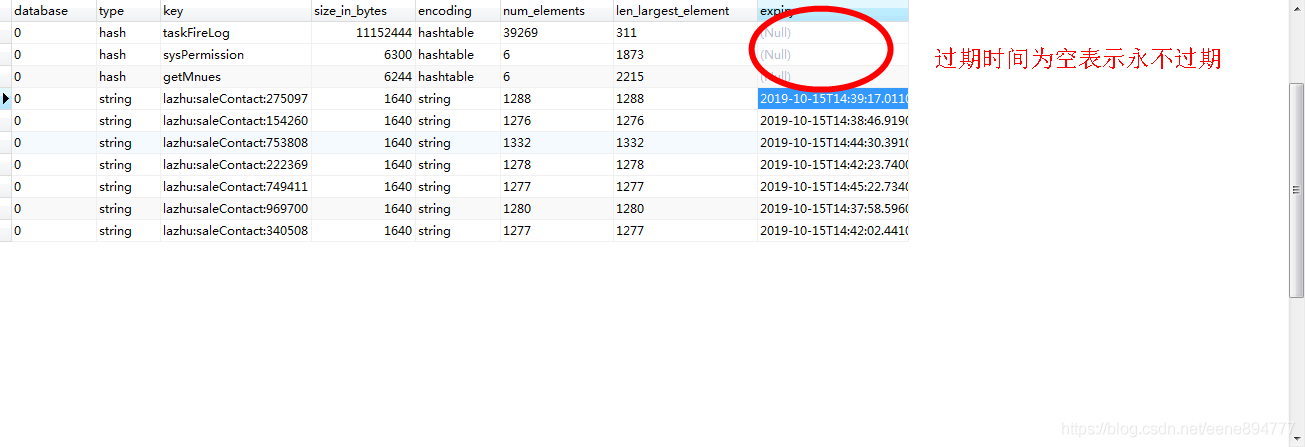

4. MySQL now contains a memory table. Run the following query:

SELECT * from memory ORDER BY size_in_bytes desc LIMIT 0, 10

This sorts the keys by memory usage in descending order and returns the top 10.
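If importing into MySQL is inconvenient, the same top-N ranking can be sketched directly in Python. The example below assumes the CSV has a header row with `key`, `size_in_bytes`, and `expiry` columns; the full column layout and the sample rows are illustrative assumptions, not data from the incident. Converting with `int()` plays the same role as changing the column type from varchar to int.

```python
import csv
import io

# Hypothetical sample; the real input would be the memory.csv written by `rdb -c memory`.
SAMPLE_CSV = """database,type,key,size_in_bytes,encoding,num_elements,len_largest_element,expiry
0,string,session:admin,120,string,8,8,2019-10-15T22:47:05
0,list,queue:orders,52428800,quicklist,400000,128,
0,hash,user:42,2048,hashtable,12,64,
"""


def top_keys_by_size(csv_file, limit=10):
    """Return the `limit` largest rows, sorted by size_in_bytes in descending order."""
    rows = list(csv.DictReader(csv_file))
    # Sort numerically; sorting the raw strings would order "9" after "52428800".
    rows.sort(key=lambda row: int(row["size_in_bytes"]), reverse=True)
    return rows[:limit]


for row in top_keys_by_size(io.StringIO(SAMPLE_CSV), limit=2):
    print(row["key"], row["size_in_bytes"], row["expiry"] or "no expiry")
```

For a real report, pass `open("memory.csv", newline="")` instead of the in-memory sample.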

The top keys all had a null expiry: the code never set an expiration time for them, so they were permanent. Some of them were Redis queues used for consumption. Large amounts of data were being written to these never-expiring queues while only 2 consumer threads were draining them, so items were enqueued faster than they were consumed. On the backend I tried 20, 10, and 5 consumer threads to find the best number: one that keeps CPU usage from getting too high, keeps the queue from backing up, makes full use of the machine, and meets the actual need.
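The imbalance between producers and consumers can be sketched with simple arithmetic. The rates below are hypothetical, and the model assumes throughput scales linearly with the number of threads, which real systems only approximate; that is one reason the thread count was tuned empirically rather than calculated.

```python
import math


def backlog_growth_per_second(produce_rate, per_thread_rate, threads):
    """Items per second by which the queue grows (0 if consumers keep up)."""
    return max(0.0, produce_rate - per_thread_rate * threads)


def min_threads_to_keep_up(produce_rate, per_thread_rate):
    """Smallest thread count whose combined rate matches the producers."""
    return math.ceil(produce_rate / per_thread_rate)


# Hypothetical rates: 900 items/s produced, 100 items/s consumed per thread.
print(backlog_growth_per_second(900, 100, threads=2))  # 700: the queue keeps growing
print(min_threads_to_keep_up(900, 100))                # 9
```

Any thread count below the break-even point lets a never-expiring queue grow without bound, which matches the memory growth seen with 2 consumers.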

Summary: this round of troubleshooting taught me how to parse a Redis RDB snapshot file and analyze how Redis memory is distributed: which keys take up a lot of memory and which take up little. It also clarified how the memory eviction policy works, and why one key is evicted before another.

Further reading 1: if you are interested, here are the other Redis memory eviction policies:

- volatile-lru: evicts the least recently used keys among those with an expiration time set;

- volatile-ttl: evicts the keys closest to expiring among those with an expiration time set;

- volatile-random: evicts random keys among those with an expiration time set;

- allkeys-lru: evicts the least recently used keys among all keys;

- allkeys-random: evicts random keys among all keys;

- noeviction: never evicts data. This is the default policy: once Redis memory reaches maxmemory, writes that need more memory fail with an OOM error.

maxmemory is set with the maxmemory parameter in redis.conf, or changed at runtime with the CONFIG SET command.

The eviction policy is set with the maxmemory-policy parameter in redis.conf, or changed at runtime with the CONFIG SET command.
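As a sketch of the runtime route, the helper below issues `CONFIG SET` through a client object that exposes `config_set` and `config_get`, as the third-party redis-py client does. The function name `set_eviction_settings` and the values in the usage comment are hypothetical, and a managed Redis service may not permit `CONFIG SET` at all.

```python
def set_eviction_settings(client, maxmemory_bytes, policy):
    """Apply maxmemory and maxmemory-policy at runtime and return the values read back."""
    client.config_set("maxmemory", maxmemory_bytes)
    client.config_set("maxmemory-policy", policy)
    settings = {}
    settings.update(client.config_get("maxmemory"))
    settings.update(client.config_get("maxmemory-policy"))
    return settings


# Usage against a real server (assumes redis-py is installed and a local Redis is running):
#   import redis
#   client = redis.Redis(host="localhost", port=6379, decode_responses=True)
#   print(set_eviction_settings(client, 1024 ** 3, "volatile-lru"))
```

Values changed with `CONFIG SET` apply to the running server only; they are not written back to redis.conf unless `CONFIG REWRITE` is run.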

</article>

边栏推荐

- pytorch-多层感知机MLP

- 12. (map data) cesium city building map

- Is it difficult to apply for a job after graduation? "Hundreds of days and tens of millions" online recruitment activities to solve your problems

- POJ 3176-Cow Bowling(DP||记忆化搜索)

- Manage multiple instagram accounts and share anti Association tips

- 【上采样方式-OpenCV插值】

- 一次生产环境redis内存占用居高不下问题排查

- Liunx prohibit Ping explain the different usage of traceroute

- 跨境电商是啥意思?主要是做什么的?业务模式有哪些?

- 汉诺塔问题思路的证明

猜你喜欢

yolov5目标检测神经网络——损失函数计算原理

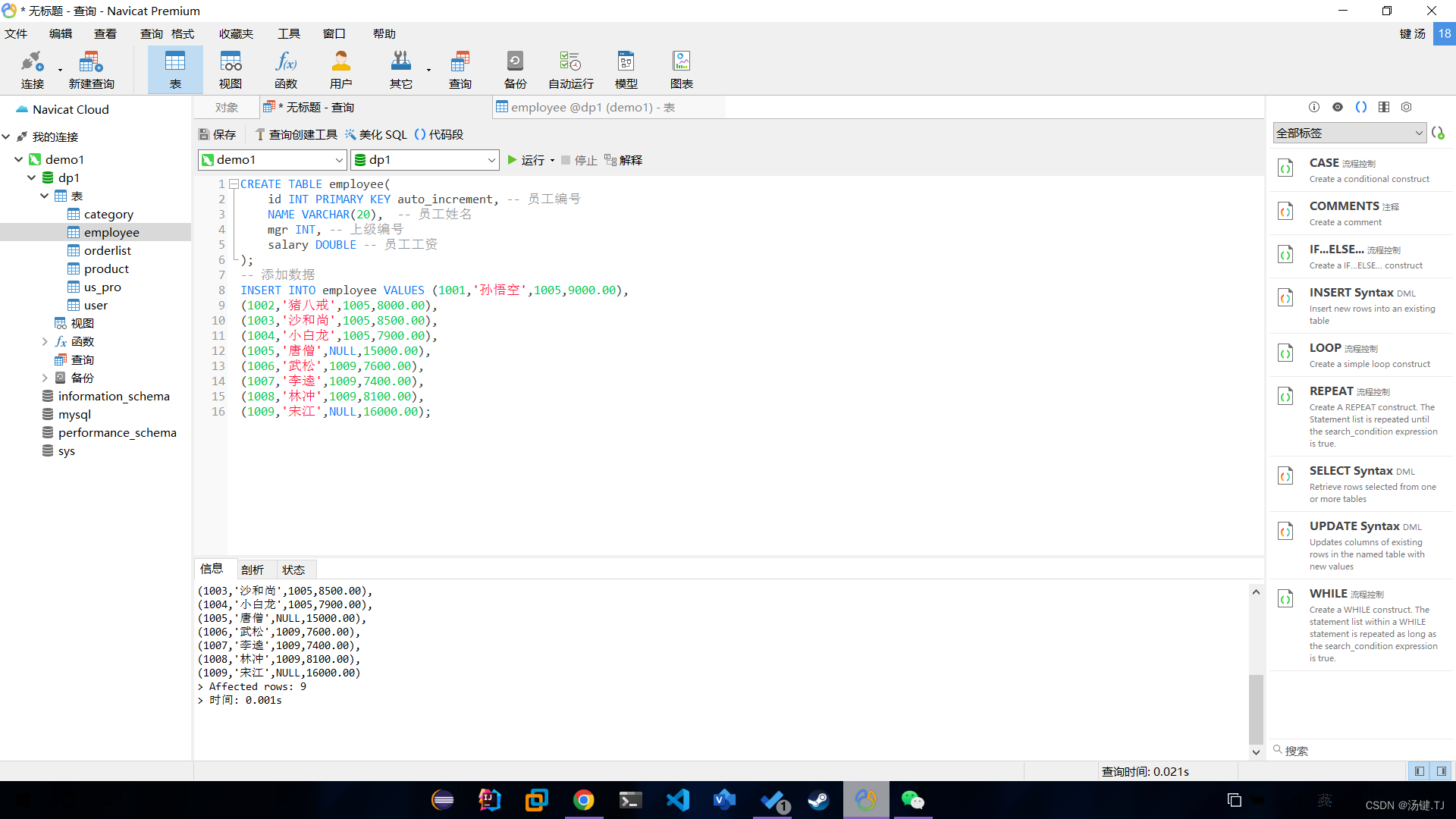

多表操作-自关联查询

How did the situation that NFT trading market mainly uses eth standard for trading come into being?

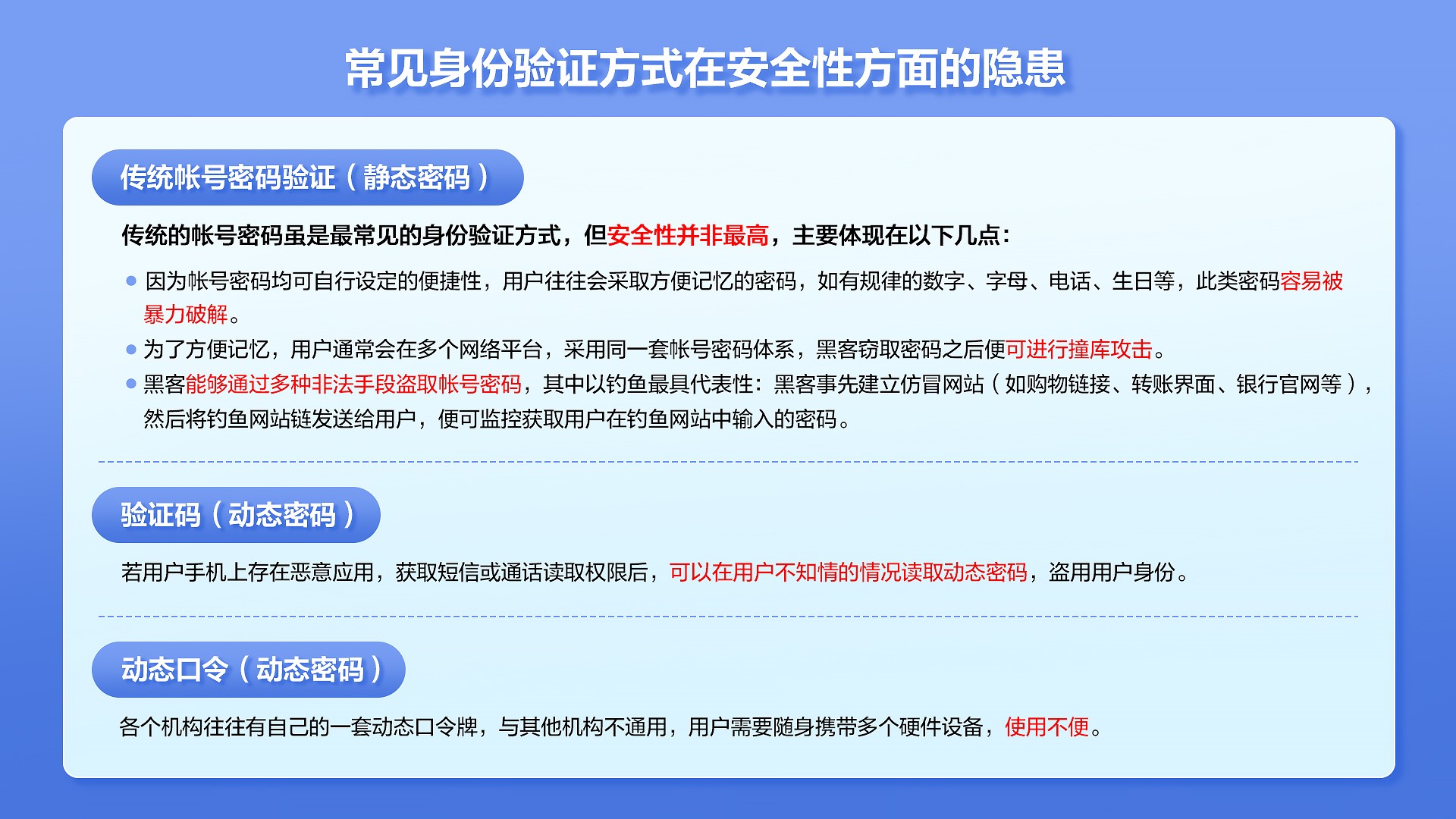

How to protect user privacy without password authentication?

Cdga | six principles that data governance has to adhere to

网络五连鞭

中非 钻石副石怎么镶嵌,才能既安全又好看?

![[yolov5.yaml parsing]](/img/ae/934f69206190848ec3da10edbeb59a.png)

[yolov5.yaml parsing]

pytorch-权重衰退(weight decay)和丢弃法(dropout)

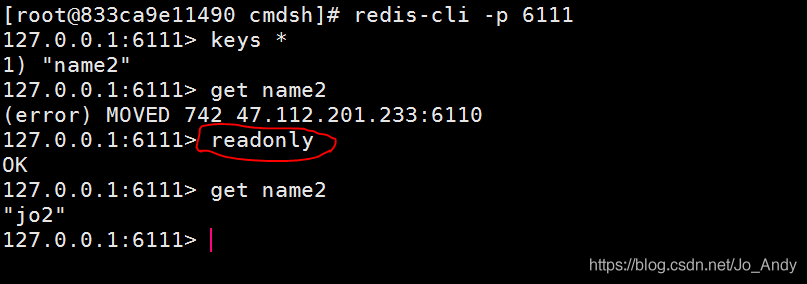

简单解决redis cluster中从节点读取不了数据(error) MOVED

随机推荐

Redis集群的重定向

【云原生 | Kubernetes篇】Ingress案例实战(十三)

【load dataset】

1 plug-in to handle advertisements in web pages

无线WIFI学习型8路发射遥控模块

Evolution of multi-objective sorting model for classified tab commodity flow

XML解析

Programmers are involved and maintain industry competitiveness

redis集群中hash tag 使用

Riddle 1

[crawler] bugs encountered by wasm

项目总结笔记系列 wsTax KT Session2 代码分析

C operation XML file

871. Minimum Number of Refueling Stops

redis 集群模式原理

COMSOL -- three-dimensional graphics random drawing -- rotation

pytorch-权重衰退(weight decay)和丢弃法(dropout)

【yolov3损失函数】

An error is reported in the process of using gbase 8C database: 80000305, host IPS long to different cluster. How to solve it?

简单解决redis cluster中从节点读取不了数据(error) MOVED