Solving RuntimeError: CUDA error: CUBLAS_STATUS_ALLOC_FAILED when calling `cublasCreate(handle)`

2022-07-07 07:05:00 【Go crazy first.】

1. Problem description

I was training a BERT model with the transformers package on the PyTorch framework. The standard bert-base-cased ran normally on other datasets, but with RoBERTa it kept failing with: RuntimeError: CUDA error: CUBLAS_STATUS_ALLOC_FAILED when calling `cublasCreate(handle)`

I struggled for several days without finding the cause. Advice online kept suggesting that the batch_size was too large, but reducing it 16 -> 8 -> 4 -> 2 made no difference.

Comparing against the other datasets, I noticed that I had added a new special token to the tokenizer, and that this might be what triggers the error!

2. Solution

When adding special_tokens to the original tokenizer, I forgot to update the model's vocabulary size to match the tokenizer, and that caused the error!

The complete update procedure is:

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-cased')

# Add the new special token
tokenizer.add_special_tokens({'additional_special_tokens': ["<S>"]})

model = BertModel.from_pretrained("bert-base-cased")

# Important: resize the model's token embeddings to the new vocabulary size!
model.resize_token_embeddings(len(tokenizer))

3. Problem solved

The code now runs without the error, and training can start!
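To catch this kind of mismatch before launching a GPU run, a small sanity check along the following lines may help. This is only a sketch: the helper name `find_out_of_range_ids` and the sample token ids are hypothetical, and the vocabulary size of 28996 is used as the assumed size of bert-base-cased for illustration. The idea is that a newly added special token receives an id just past the end of the old vocabulary, so an embedding table that was never resized has no row for it.

```python
from typing import Iterable, List


def find_out_of_range_ids(input_ids: Iterable[int], num_embeddings: int) -> List[int]:
    """Return the token ids that have no row in an embedding table of the given size."""
    return sorted({i for i in input_ids if i < 0 or i >= num_embeddings})


# Illustrative numbers (assumption): with 28996 vocabulary entries, a newly
# added special token such as "<S>" is assigned id 28996.
old_vocab_size = 28996
batch_ids = [101, 28996, 1188, 102]  # hypothetical encoded sentence containing "<S>"

# Before resize_token_embeddings: the new id points past the last embedding row.
bad_ids = find_out_of_range_ids(batch_ids, old_vocab_size)

# After resize_token_embeddings(len(tokenizer)): the table has one more row.
ok_ids = find_out_of_range_ids(batch_ids, old_vocab_size + 1)

print("missing before resize:", bad_ids)
print("missing after resize:", ok_ids)
```

In a real script, `num_embeddings` could be read from `model.get_input_embeddings().num_embeddings` and the ids taken from the tokenizer output; an empty result means every token id has a matching embedding row.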

边栏推荐

猜你喜欢

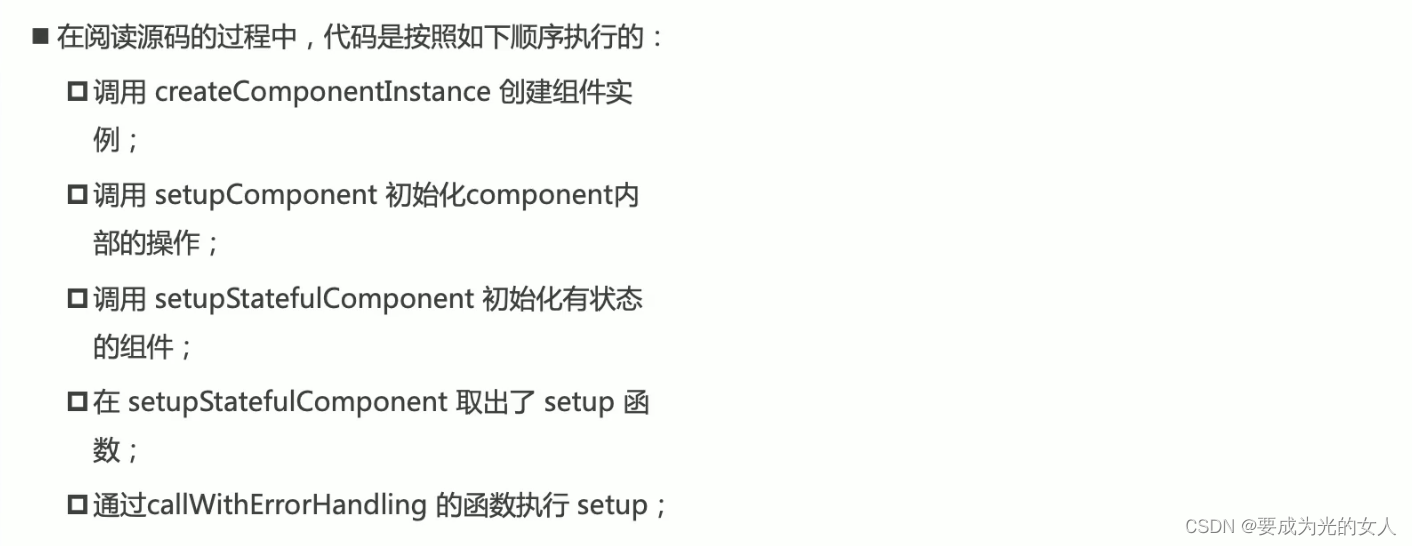

Composition API 前提

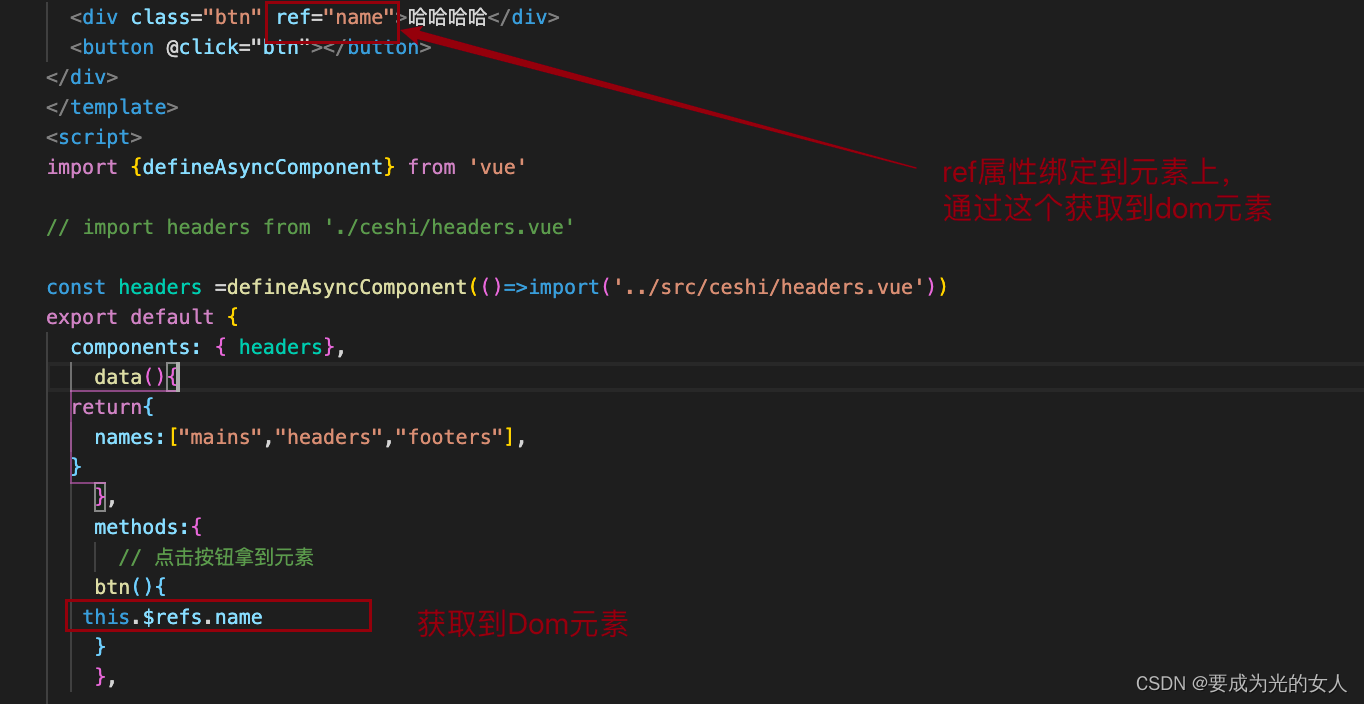

$refs:组件中获取元素对象或者子组件实例:

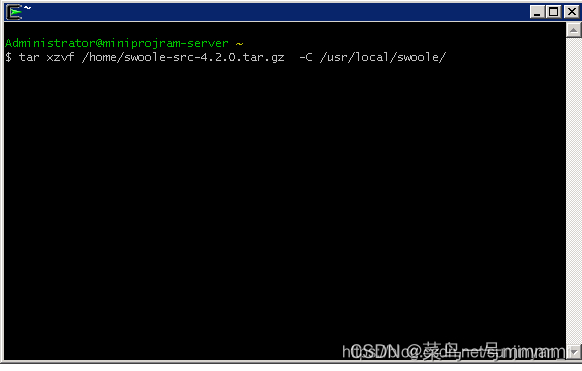

How to install swoole under window

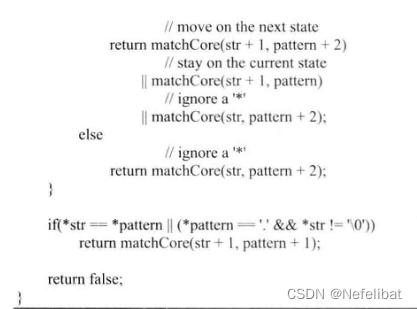

Sword finger offer high quality code

Jetpack compose is much more than a UI framework~

华为机试题素数伴侣

The latest trends of data asset management and data security at home and abroad

健身房如何提高竞争力?

精准时空行程流调系统—基于UWB超高精度定位系统

Leetcode t1165: log analysis

随机推荐

途家、木鸟、美团……民宿暑期战事将起

Please ask a question, flick Oracle CDC, read a table without update operation, and repeatedly read the full amount of data every ten seconds

Learning records on July 4, 2022

Basic process of network transmission using tcp/ip four layer model

Answer to the first stage of the assignment of "information security management and evaluation" of the higher vocational group of the 2018 Jiangsu Vocational College skills competition

请问 flinksql对接cdc时 如何实现计算某个字段update前后的差异 ?

Advantages of using net core / why

Unity3d learning notes

JWT的基础介绍

mysql查看bin log 并恢复数据

impdp的transform参数的测试

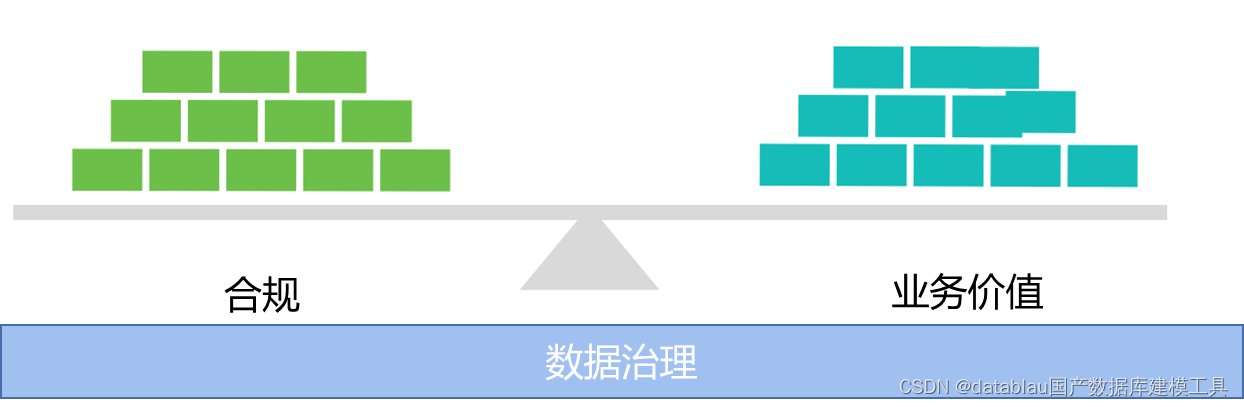

Comment les entreprises gèrent - elles les données? Partager les leçons tirées des quatre aspects de la gouvernance des données

Answer to the second stage of the assignment of "information security management and evaluation" of the higher vocational group of the 2018 Jiangsu Vocational College skills competition

What books can greatly improve programming ideas and abilities?

CompletableFuture使用详解

服装门店如何盈利?

How to do sports training in venues?

企业如何进行数据治理?分享数据治理4个方面的经验总结

The startup of MySQL installed in RPM mode of Linux system failed

Complete process of MySQL SQL