
Design and Exploration of the Baidu Comment Center

2022-07-05 09:46:00 【Baidu geek said】

Reading guide: The Baidu comment center gives products in the Baidu family convenient, consistent access to commenting capability. It is one of the most important foundational capabilities in Baidu's community-atmosphere system, with average daily traffic on the order of 10 billion page views (PV). As the business has grown, the comment center has kept iterating quickly on features while steadily improving performance. This article introduces the overall architecture of the Baidu comment center and uses concrete cases to describe how to build a highly available, high-performance distributed service.

Full text: 6,444 words. Estimated reading time: 17 minutes.

1. Background

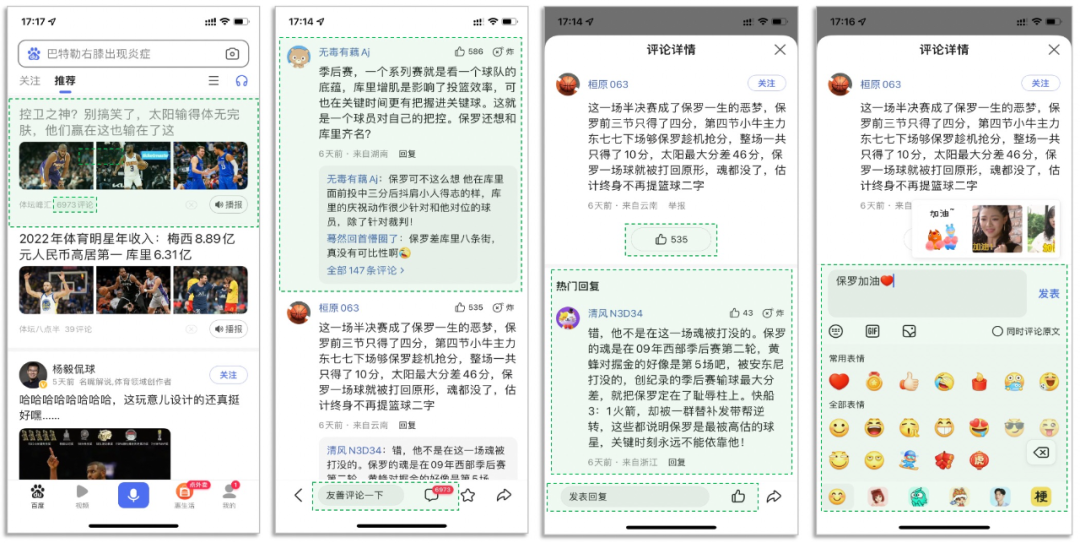

Comments are one of the main ways users actively express emotions, attitudes, and ideas. As a product form, comments build on content (news and information) to create a user-content ecosystem of production, distribution, and browsing, and ultimately foster valuable interaction between content and users, between users, and between users and authors. After reading a piece of content, users often scroll to the comment area to express their feelings, to look for views that resonate, or simply to satisfy an onlooker's curiosity. An engaging comment area meets users' need for interaction, increases product stickiness, and promotes business growth. So how do we build a comment system that supports diverse, continuously iterating product forms on the business side while delivering stable capability on the performance side? The following sections describe the architecture design of the Baidu comment center and some of the challenges and explorations we met as the business grew.

2. Concepts

A comment passes through many stages between production and reading. The following figure is a simplified model of the stages in the comment life cycle:

The production of comment data is triggered by the user. The comment system maintains topic (subject) data associated with each resource and uses the topic information to look up the comments that belong to it. Each comment record carries its publisher, level in the hierarchy, display status, content, like count, reply count, timestamps, and so on; each field serves specific display scenarios and data analysis. While users produce content, the system identifies malicious requests and filters layer by layer along the user, resource, and content dimensions before the data is written. Data is stored in a distributed database and split vertically and horizontally into separate databases and tables according to the query dimension, to relieve the read/write bottlenecks caused by continued business growth. Data-level changes eventually land in the big data center, where they are used to analyze the current state of the business and its future direction.
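As a rough illustration of the data model and horizontal split described above, the sketch below defines a comment record with the listed fields and routes it to a table by topic ID. Every name here (`Comment`, `shardFor`, `tableName`, the `comment_NNN` table pattern) and the choice of the topic ID as the sharding key are assumptions made for the example; the article does not state the actual schema or routing rule.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"time"
)

// Comment mirrors the fields listed in the text. All names are hypothetical.
type Comment struct {
	ID        uint64
	TopicID   uint64 // topic (subject) the comment belongs to
	ParentID  uint64 // 0 for a first-level comment
	AuthorID  uint64 // publisher
	Level     int    // 1 = first-level comment, 2 = reply
	Visible   bool   // display status
	Content   string
	Likes     int
	Replies   int
	CreatedAt time.Time
}

// shardFor maps a topic ID to one of n shards. Hashing on the topic ID
// (an assumption) keeps all comments of a topic in the same shard, so a
// list query touches a single table.
func shardFor(topicID uint64, n uint32) uint32 {
	h := fnv.New32a()
	fmt.Fprintf(h, "%d", topicID)
	return h.Sum32() % n
}

// tableName builds the hypothetical physical table name for a topic.
func tableName(topicID uint64, n uint32) string {
	return fmt.Sprintf("comment_%03d", shardFor(topicID, n))
}

func main() {
	c := Comment{ID: 1, TopicID: 1001, AuthorID: 7, Level: 1, Visible: true,
		Content: "nice article", CreatedAt: time.Now()}
	fmt.Println("comment", c.ID, "is stored in", tableName(c.TopicID, 64))
}
```

Routing by topic keeps list reads local to one table, at the cost of uneven shard sizes when a single topic becomes very hot.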

3. Service Design

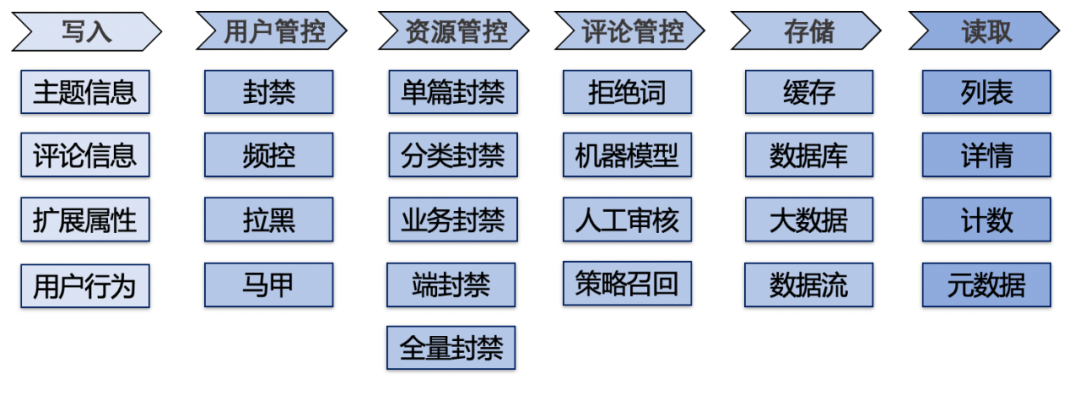

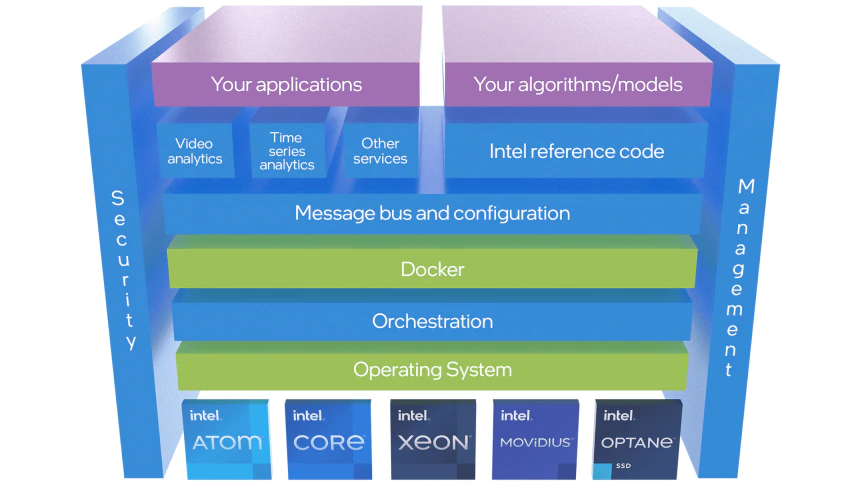

With the concepts of the comment business in place, we now turn to the overall service design. The comment service was originally built on the Baidu App business and provided stable, convenient comment capability for Feed and other content systems. With the company's strategic shift toward a middle-platform architecture, the comment service was repositioned as the comment middle platform, serving every product line in the Baidu family. This change of role led to major changes in the architecture design and brought new technical challenges:

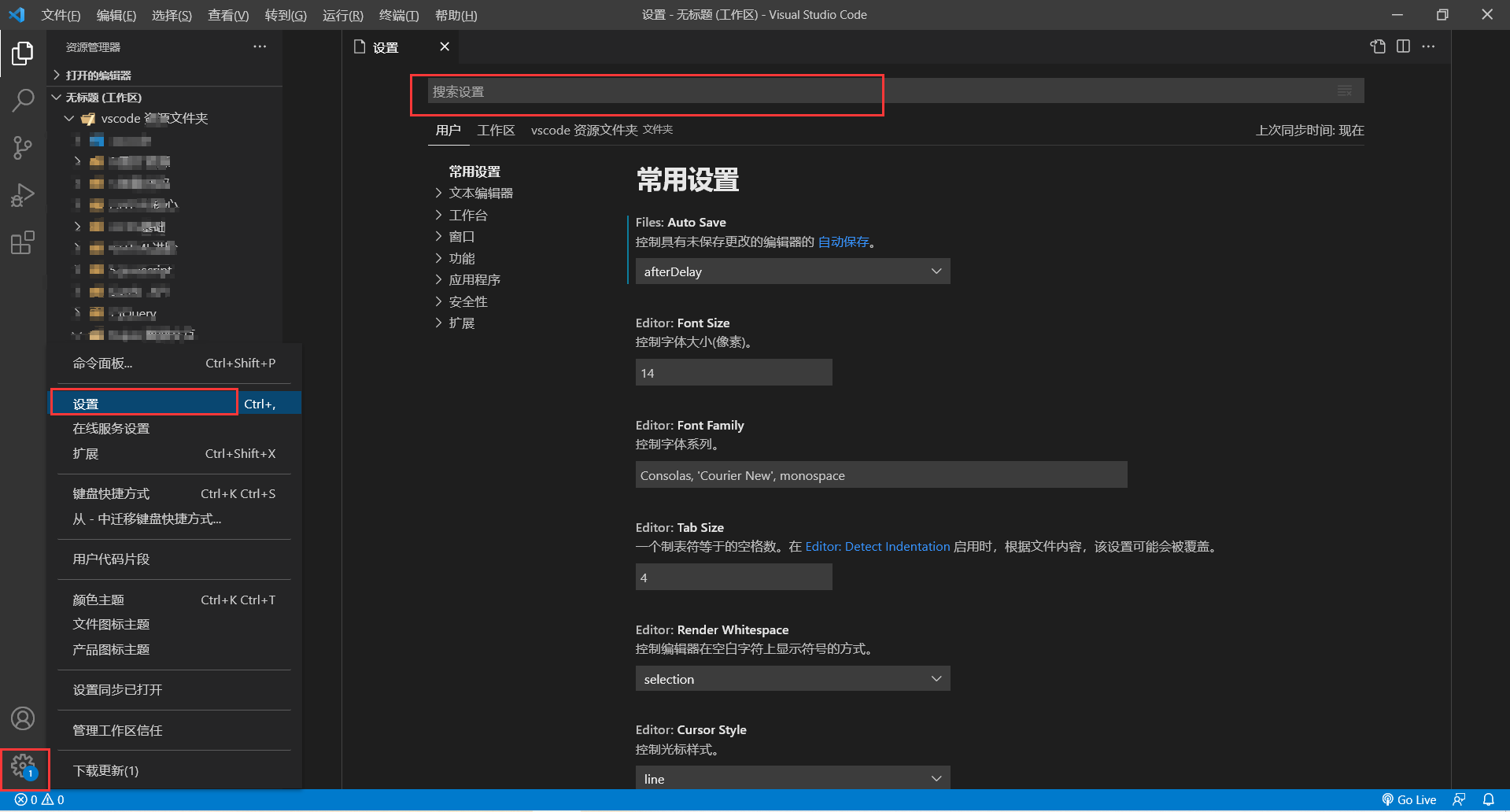

From the service-access perspective: there are many consuming businesses, many requirements, and a high demand for customization, so the middle platform must offer business teams friendly, fast access. To that end, we were the first middle platform to complete service hosting on the Mobile Developer Center (Baidu's mobile development management platform), and we use that platform to manage interface permissions, technical documentation, traffic authentication, service billing, and after-sales consulting, which greatly improves access efficiency. Inside the middle platform, we built a unified access-layer gateway that implements disaster-recovery triggering, traffic coloring, flow control, anomaly detection, and other capabilities as plug-ins. This gives businesses convenient technical support and lets technical changes inside the middle platform go unnoticed by them.

From the capability-output perspective: product lines differ in their business characteristics and therefore in what they need from the basic capabilities. The middle platform has to satisfy these needs while designing its internal architecture for generality, so that a limited middle-platform team stays productive. We therefore keep abstracting interface capabilities, keep the code loosely coupled and highly cohesive, and design component capabilities for different product requirements to address specific pain points. For certain functional scenarios we also provide a friendly development framework and co-build features with business teams in an open-source style, which speeds up delivery, frees up some middle-platform engineers, and has worked well.

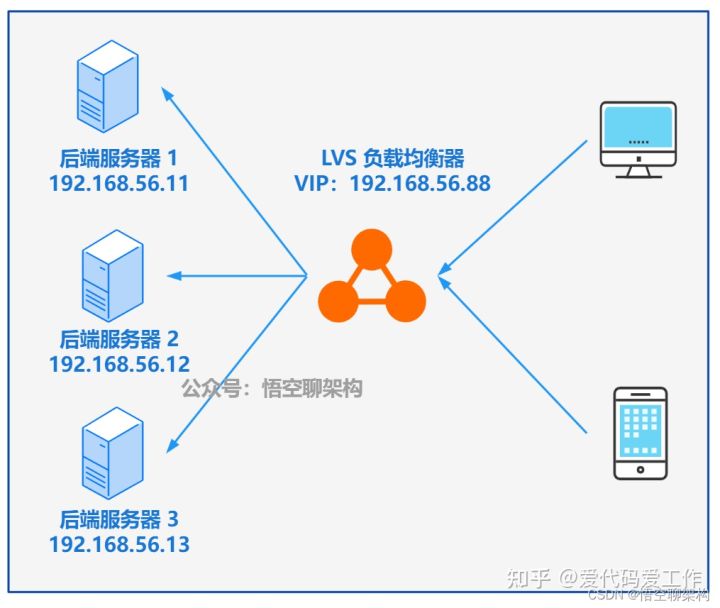

From the system-performance perspective: traffic grows with each newly connected product line and keeps testing the performance of the middle-platform service. We commit to 99.99% service stability for core interfaces, measured from the SLA perspective. Architecturally, we remove single points of failure, form an isolation domain inside each logical data center so that no request crosses regions, and design for extreme scenarios by guarding against cache penetration, cache breakdown, and service avalanche, so that the minimum usable unit of the service is preserved. In terms of capacity, we keep N+1 redundancy at peak hours and can drain a single data center completely. Alerts on core metrics reach operations, development, and testing engineers.

Overall, the approach is layered service governance: keep consolidating the middle platform's general-purpose capabilities, and keep improving the infrastructure as products and technology evolve.

4. Challenges and Exploration

The sections above introduced the architecture of the Baidu comment middle platform as a whole. Below we pick several concrete problems met during business iteration and describe how the comment middle platform explored and implemented technical solutions.

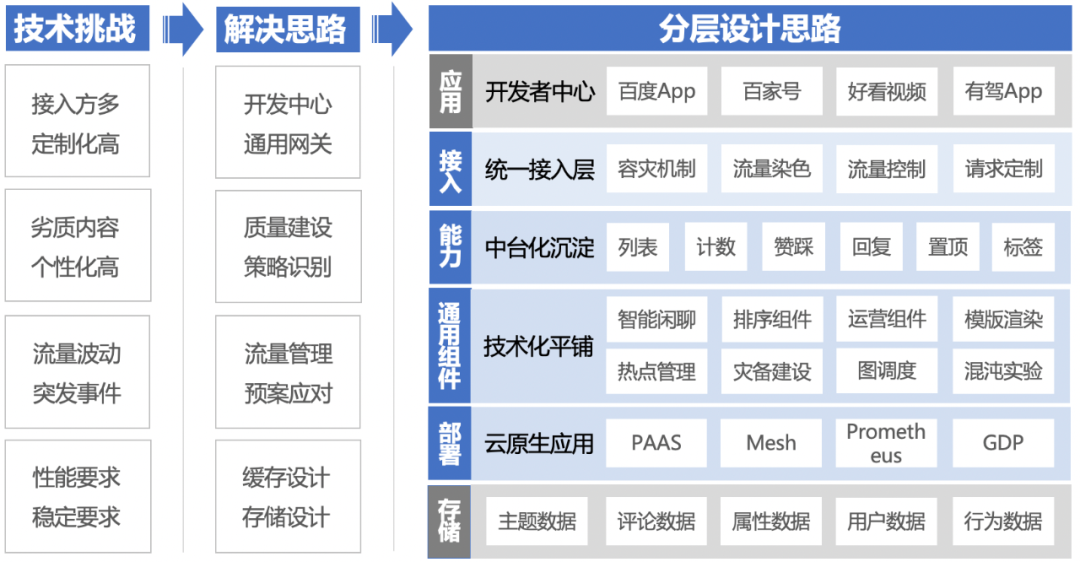

4.1 How do we improve service performance and iteration efficiency?

Background

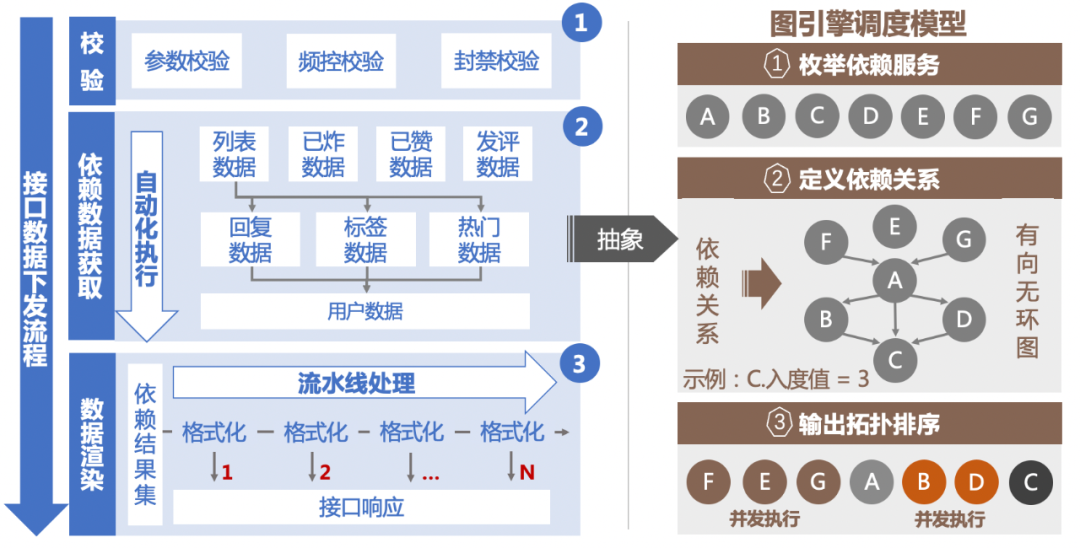

After countless version iterations a system carries more and more functionality, and development costs rise with it: business logic is mixed with historical baggage, code blocks are tangled and redundant, and a small change ripples through the whole system. At that point a technical upgrade of the system itself becomes unavoidable. The comment list interfaces are the core business of the comment service, and almost every feature iteration in its history has touched them, so they exposed these problems sooner than anything else. As the pre-optimization business flow in the figure below shows, the interface definitions were vague and each interface carried many functions with interwoven logic, which made the code hard to read. In the business-processing part, there were many data dependencies, some of which depended on each other, and service calls could only run sequentially, which was inefficient. These problems slowed daily development, made performance unpredictable, and posed hidden risks to system stability.

Approach

Facing these problems, we overhauled the list service along three paths. At the interface level, we split by function and defined single-responsibility interfaces to decouple the logic. At the data-dependency level, we schedule and manage downstream calls so that they run automatically and in parallel. At the data-rendering level, we use pipelined decorators to define the main-line and branch-line business logic. After optimization, each interface has a single function, code boundaries are clear, dependent services are managed by a scheduler, data packaging is flexible, and the service can keep evolving. How did we get there? The specific solution follows.

Solution

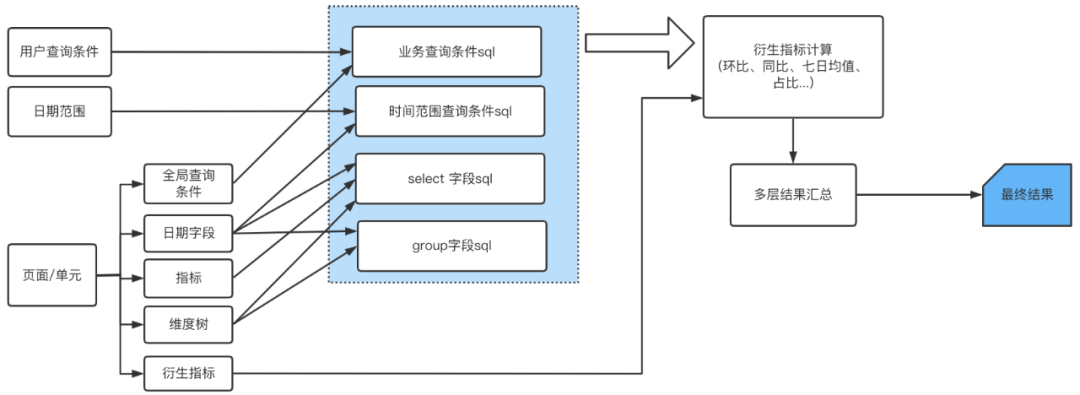

The figure above shows the overall flow by which an interface delivers data. It can be divided into three stages:

Stage 1: pre-checks. The interface validates the basic parameters and filters requests.

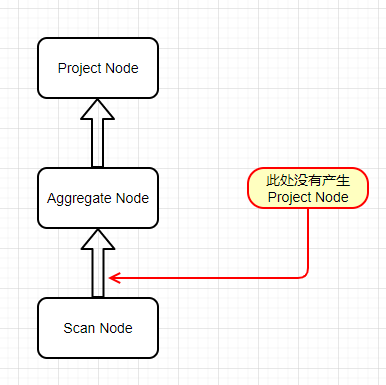

Stage 2: centralized acquisition of dependent data. This is the core of the optimization. Take the first-level comment list interface as an example. The data it delivers includes first-level comments, exposed second-level comments, the total comment count, comments visible in the owner (author) view, tag data, and so on. These data sources depend on one another to some degree. First-level comments, owner-visible comments, and the total comment count have the highest priority, because they can be requested directly without relying on other data sources. Exposed second-level comments use the parent comment list obtained from the first-level comment data as their basic dependency, so their priority comes second. More abstractly, each business interface can sort out its own service dependencies, and the question becomes how to manage and orchestrate them. We use a graph-engine scheduling model for this task:

The model is implemented in three steps:

Step 1: define the nodes. Enumerate every dependent service the business logic needs and map each one to a node. Each node is an atomic unit that performs one data-dependency call and takes no part in interface-level business logic.

Step 2: define the relationships between nodes and generate a directed acyclic graph (DAG). Each node records how many nodes it depends on as its in-degree. In a DAG every edge has a single direction and there are no cycles, which matches the execution logic between business nodes. Dependent service calls are mapped to nodes in the DAG, the in-degree of a node is the number of nodes it depends on, and the starting vertices are the nodes with in-degree 0, which no other node points to.

Step 3: starting from the vertices, concurrently execute all nodes whose in-degree is 0. When a node finishes and is removed, find the nodes that depend on it through the dependency relationships and decrement their in-degrees; any node whose in-degree reaches 0 becomes runnable. The nodes are thus executed in topological order, which lets them run automatically.

In summary, the node relationships of each interface are managed as configuration. When a request arrives, the service reads the interface's node configuration and generates the DAG, and the scheduler manages and executes the node tasks. The output of each node is stored in the scheduler for the current request; when a downstream node runs, it fetches its upstream results from the scheduler as input parameters and completes its own execution logic.
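The three steps can be sketched in Go roughly as follows. This is a minimal sketch, not the comment center's scheduler: `Node`, `Execute`, and the node names are hypothetical, nodes are defined in code rather than read from configuration, and execution proceeds in waves (all nodes that are currently at in-degree 0 run concurrently, then the next wave starts), which is a simplification of a fully event-driven scheduler.

```go
package main

import (
	"fmt"
	"sync"
)

// Node is one atomic data-dependency call. Run receives the outputs of
// the nodes listed in Deps, keyed by node name.
type Node struct {
	Name string
	Deps []string
	Run  func(upstream map[string]any) any
}

// Execute runs the nodes in topological order and returns every output.
func Execute(nodes []Node) (map[string]any, error) {
	byName := make(map[string]Node, len(nodes))
	inDegree := make(map[string]int, len(nodes))
	children := make(map[string][]string)
	for _, n := range nodes {
		byName[n.Name] = n
		inDegree[n.Name] = len(n.Deps)
		for _, d := range n.Deps {
			children[d] = append(children[d], n.Name)
		}
	}

	results := make(map[string]any, len(nodes))
	var mu sync.Mutex
	var ready []string
	for name, deg := range inDegree {
		if deg == 0 {
			ready = append(ready, name) // starting vertices
		}
	}

	executed := 0
	for len(ready) > 0 {
		var wg sync.WaitGroup
		for _, name := range ready {
			wg.Add(1)
			go func(n Node) {
				defer wg.Done()
				mu.Lock()
				in := make(map[string]any, len(n.Deps))
				for _, d := range n.Deps {
					in[d] = results[d]
				}
				mu.Unlock()
				out := n.Run(in) // the downstream call itself runs unlocked
				mu.Lock()
				results[n.Name] = out
				mu.Unlock()
			}(byName[name])
		}
		wg.Wait()
		executed += len(ready)

		// Decrement the in-degree of dependants; zero means runnable.
		var next []string
		for _, name := range ready {
			for _, c := range children[name] {
				inDegree[c]--
				if inDegree[c] == 0 {
					next = append(next, c)
				}
			}
		}
		ready = next
	}
	if executed != len(nodes) {
		return nil, fmt.Errorf("dependency cycle or unknown dependency: ran %d of %d nodes", executed, len(nodes))
	}
	return results, nil
}

func main() {
	nodes := []Node{
		{Name: "first_level", Run: func(map[string]any) any { return []int{101, 102} }},
		{Name: "total", Run: func(map[string]any) any { return 2 }},
		{Name: "exposed_replies", Deps: []string{"first_level"}, Run: func(in map[string]any) any {
			parents := in["first_level"].([]int)
			return fmt.Sprintf("replies for %d parent comments", len(parents))
		}},
	}
	results, err := Execute(nodes)
	if err != nil {
		fmt.Println("schedule failed:", err)
		return
	}
	fmt.Println(results["exposed_replies"])
}
```

Because a cycle leaves some nodes with a non-zero in-degree forever, comparing the number of executed nodes with the number of configured nodes doubles as a cheap configuration check.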

Stage 3: data rendering. Once stage 2 has collected all the dependent data, the results of every node are read from the scheduler. Pipelined formatting decorators, split by function, then modify the response layer by layer, which turns data rendering into a templated process.

After this rework, interface performance improved substantially: both the average response time and the 99th-percentile response time dropped significantly. Thanks to the high performance of Go, physical machine resources were saved, and the refactored code is much more maintainable.
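One way to picture the rendering stage is a list of small functions applied in order to a response object. The sketch below assumes a hypothetical `Response` shape, hypothetical data keys (`first_level`, `total`), and hypothetical decorator names; it illustrates the pipelined-decorator idea and is not the actual rendering code.

```go
package main

import (
	"fmt"
	"strings"
)

// Response is a hypothetical shape for the list interface output.
type Response struct {
	Comments []string
	Total    int
}

// Decorator fills in or adjusts one aspect of the response, using the
// data that the scheduler collected in stage 2.
type Decorator func(resp *Response, data map[string]any)

// Render applies the decorators in order, like stations on a pipeline.
func Render(data map[string]any, pipeline ...Decorator) Response {
	var resp Response
	for _, decorate := range pipeline {
		decorate(&resp, data)
	}
	return resp
}

// withComments is main-line logic: copy the first-level comments.
func withComments(resp *Response, data map[string]any) {
	if list, ok := data["first_level"].([]string); ok {
		resp.Comments = append([]string(nil), list...)
	}
}

// withTotal is main-line logic: attach the total comment count.
func withTotal(resp *Response, data map[string]any) {
	if n, ok := data["total"].(int); ok {
		resp.Total = n
	}
}

// trimContent is branch-line logic: tidy up whitespace for display.
func trimContent(resp *Response, _ map[string]any) {
	for i, c := range resp.Comments {
		resp.Comments[i] = strings.TrimSpace(c)
	}
}

func main() {
	data := map[string]any{"first_level": []string{"  great read ", "agreed"}, "total": 2}
	resp := Render(data, withComments, withTotal, trimContent)
	fmt.Printf("%d comments: %q\n", resp.Total, resp.Comments)
}
```

Each interface can then compose its own pipeline from shared decorators, which keeps feature-specific formatting out of the main flow.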

4.2 How do we build high-performance, low-latency comment sorting?

Background

Comments are a service in which users produce content, and how comment content is operated determines the atmosphere of the whole comment area. High-quality, interesting, or specially flagged comments should get more exposure, while vulgar, abusive, or meaningless comments should get less attention, so that interaction between users forms a positive cycle. This requires the comment service to build a sorting mechanism that meets the product's sorting requirements and, technically, converges sorting data quickly and iterates fast without hurting service performance.

Approach

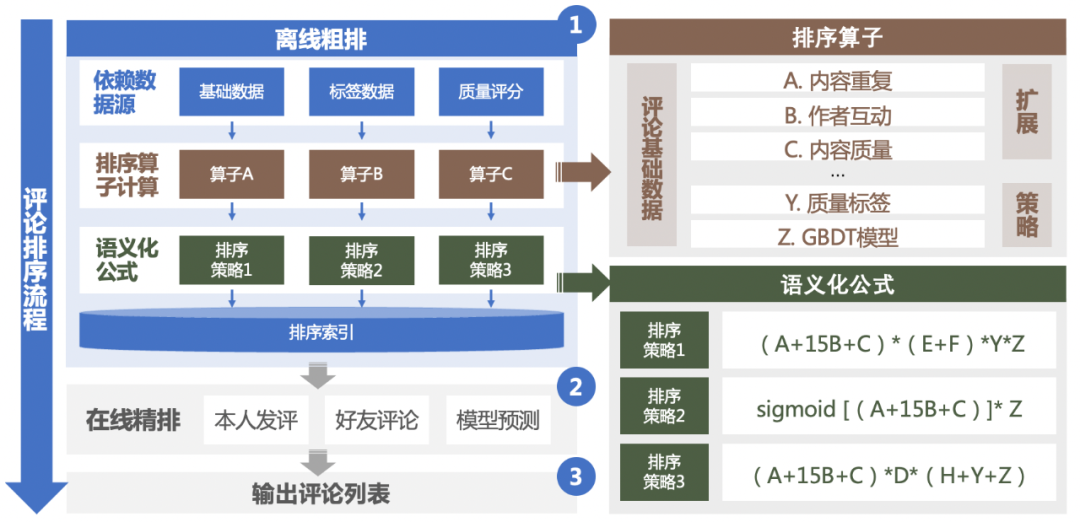

Before designing the technical solution we had to answer a few core questions. First, how should comments be sorted? The most obvious solution is to compute a score for each comment and output the comments under an article in score order. Once every comment has a score, the next step is to define the scoring formula. Along this line we can list many factors that affect a comment's score, such as its like count, reply count, and creation time, assign each factor a weight, and combine them into a total score. We also have to consider the impact of formula iteration on the system. A newly decided formula cannot be rolled out to all traffic right away: it has to be evaluated on a small share of traffic to judge whether its factors and weights are a good combination. Several sets of formulas may therefore coexist, and formula adjustments can be expected to be frequent. Following these ideas, we built a comment-ranking framework that computes comment scores offline and uses configurable formulas, a fan-out concurrency model, and other techniques to implement intelligent comment sorting. The architecture is described in detail below.

Solution

The comment sorting service has two parts: an offline coarse-ranking service and online fine-ranking intervention.

We start with the offline coarse-ranking service. Scoring comments and producing the sorted list are done in an offline module, so that even with large data volumes the offline work does not affect online services. The offline module listens to the comment queue for behaviors that change the comment list, such as comment replies, likes, deletions, and pinning. After consuming the behavior data, the offline sorting service computes the comment score with the strategy formula and stores it in the sort index. The technical points involved are as follows:

Single-comment ranking service: when a comment's data changes, the score of that single comment is recomputed, and the service decides whether a full re-rank needs to be triggered. If so, it writes a full re-rank instruction to the full re-rank queue; otherwise it writes the newly computed score to the sort index.
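To make the idea of a weighted scoring formula concrete, here is a small sketch. The formula itself (log-damped like and reply counts plus an exponentially decaying freshness term), the weight values, and the strategy names are all assumptions chosen for illustration; the article does not disclose the real factors or weights.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// Factors are the raw inputs for one comment.
type Factors struct {
	Likes    int
	Replies  int
	AgeHours float64
}

// Weights is one configurable formula; several can coexist for experiments.
type Weights struct {
	Like          float64
	Reply         float64
	Freshness     float64
	HalfLifeHours float64 // freshness halves every HalfLifeHours
}

// Score combines the factors into a single number. The shape of this
// formula is an assumption made for the example.
func Score(f Factors, w Weights) float64 {
	freshness := 0.0
	if w.HalfLifeHours > 0 {
		freshness = math.Exp(-math.Ln2 * f.AgeHours / w.HalfLifeHours)
	}
	return w.Like*math.Log1p(float64(f.Likes)) +
		w.Reply*math.Log1p(float64(f.Replies)) +
		w.Freshness*freshness
}

// Scored pairs a comment ID with its score.
type Scored struct {
	ID    uint64
	Score float64
}

// Rank scores every comment and returns them in descending score order.
func Rank(comments map[uint64]Factors, w Weights) []Scored {
	out := make([]Scored, 0, len(comments))
	for id, f := range comments {
		out = append(out, Scored{ID: id, Score: Score(f, w)})
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Score > out[j].Score })
	return out
}

func main() {
	comments := map[uint64]Factors{
		1: {Likes: 500, Replies: 20, AgeHours: 48},
		2: {Likes: 3, Replies: 1, AgeHours: 0.5},
	}
	// Two hypothetical strategies that could run side by side on small traffic.
	strategies := map[string]Weights{
		"baseline":   {Like: 1.0, Reply: 0.5, Freshness: 1.0, HalfLifeHours: 24},
		"fresh_bias": {Like: 0.3, Reply: 0.2, Freshness: 8.0, HalfLifeHours: 6},
	}
	for name, w := range strategies {
		fmt.Println(name, Rank(comments, w))
	}
}
```

Keeping the weights in a plain data structure is what makes it cheap to run several formulas at once and to change them without redeploying code.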

Full re-rank service: reads a full re-rank instruction from the queue, gets the comment topic ID, iterates over all comments under that topic ID, re-scores each comment, and writes the results to the sort index. Here we use the fan-out model in Go: the queue is implemented with a channel, and multiple goroutines consume the full re-rank instructions from the channel concurrently, which reduces message backlog.

Sort operators: while scoring a comment, the scheduler concurrently fetches the data that the sorting factors need, such as basic comment information, topic material information, strategy model services, boost and demote word lists, and the duplicate-content library. The scoring is then completed by applying the sorting formula's weights to this metadata.

Semantic formulas: formulas are written as semantic expressions that are parsed from configuration, so a formula can go live as a configuration change. The reasoning is that tuning the online effect requires constantly adjusting operator weights and verifying them on small traffic; configuration-based release makes that verification fast, which benefits both R&D efficiency and product iteration.

Sort index: the sort index uses Redis as its storage medium and maintains the sorted comment list of each article in a zset (sorted set). Different formulas make up different strategies. When several strategy groups are in effect at once, the sort index produces one sorted result per strategy for each article and maintains multiple sets of ID indexes. The online comment service reads the strategy group assigned by small-traffic sampling and hits the corresponding sorted result, which makes A/B experiments convenient.

The offline coarse-ranking service outputs sorted results based on comment attributes. Online, we apply a second, personalized intervention to those results, including boosting the user's own comments, boosting comments from contacts and followed friends, and applying a fine-ranking model. These strategies help us target the preferences of user groups more precisely and make the comments users browse resonate more.
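The fan-out pattern mentioned here can be sketched with a channel and a fixed pool of goroutines. `FullRerank`, `Consume`, and the worker count are hypothetical names and values for the example, and the re-rank function is a stand-in for re-scoring every comment under a topic.

```go
package main

import (
	"fmt"
	"sync"
)

// FullRerank is one instruction on the full re-rank queue.
type FullRerank struct {
	TopicID uint64
}

// Consume fans instructions out from one channel to a fixed number of
// goroutines and returns once the channel is closed and drained.
func Consume(workers int, queue <-chan FullRerank, rerank func(topicID uint64)) {
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for ins := range queue { // each instruction goes to exactly one worker
				rerank(ins.TopicID)
			}
		}()
	}
	wg.Wait()
}

func main() {
	queue := make(chan FullRerank, 16)
	for id := uint64(1); id <= 5; id++ {
		queue <- FullRerank{TopicID: id}
	}
	close(queue)

	var mu sync.Mutex
	processed := 0
	Consume(3, queue, func(topicID uint64) {
		// Stand-in for: load all comments of the topic, re-score them,
		// and write the scores to the sort index.
		mu.Lock()
		processed++
		mu.Unlock()
	})
	fmt.Println("topics re-ranked:", processed)
}
```

The number of workers bounds the load placed on downstream storage, so it is a natural tuning knob when the backlog grows.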
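A possible way to connect small-traffic experiments to per-strategy indexes is to hash the user into a bucket, pick a strategy, and derive the sorted-set key from it. The key pattern `comment:sort:<strategy>:<topicID>`, the bucketing by user ID, and all function names are assumptions for this sketch; in Redis, the offline service would populate such a key with `ZADD` and the online service would read it with a descending range query such as `ZREVRANGE`.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// Experiment assigns a percentage of traffic to a strategy.
type Experiment struct {
	Strategy string
	Percent  uint32 // share of traffic, 0 to 100
}

// pickStrategy hashes the user into one of 100 buckets so that the same
// user consistently sees the same strategy.
func pickStrategy(userID, baseline string, exps []Experiment) string {
	h := fnv.New32a()
	h.Write([]byte(userID))
	bucket := h.Sum32() % 100

	var upper uint32
	for _, e := range exps {
		upper += e.Percent
		if bucket < upper {
			return e.Strategy
		}
	}
	return baseline
}

// sortIndexKey builds the hypothetical sorted-set key that holds the
// comment IDs of one topic, scored by one strategy.
func sortIndexKey(topicID uint64, strategy string) string {
	return fmt.Sprintf("comment:sort:%s:%d", strategy, topicID)
}

func main() {
	exps := []Experiment{{Strategy: "fresh_bias", Percent: 5}}
	for _, user := range []string{"user-1", "user-2", "user-3"} {
		strategy := pickStrategy(user, "baseline", exps)
		fmt.Println(user, "reads", sortIndexKey(1001, strategy))
	}
}
```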

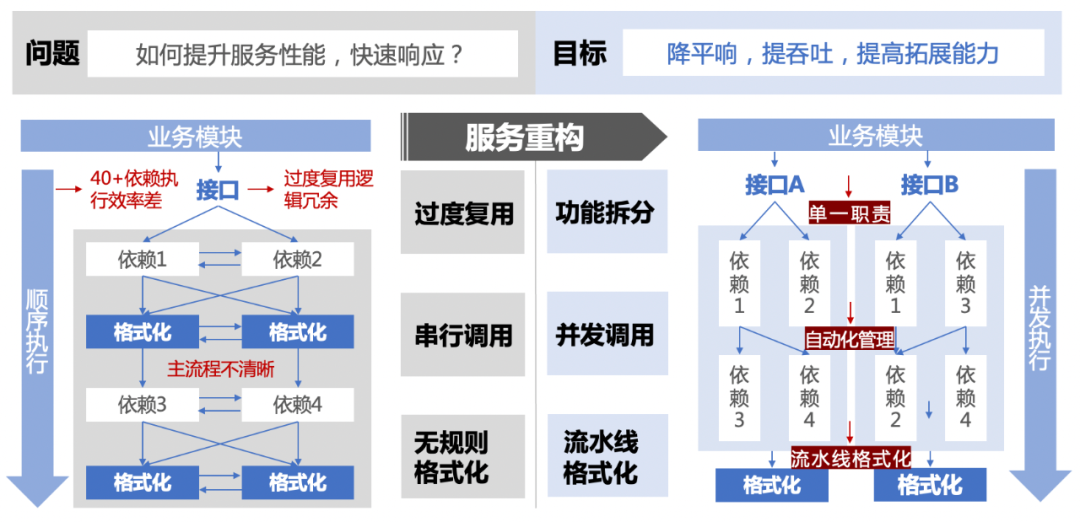

4.3 How do we keep the service continuously stable?

Background

As the system carries more and more business traffic, the comment team has invested a large share of resources and people in stability. On one hand, we keep the service highly available by continuously improving fault tolerance, responding quickly to sudden anomalies, and preserving the minimum usable unit of the service. On the other hand, we keep the service high-performing through iterations of business logic and keep improving the user experience. Stability work is a process of continuous optimization: we keep exploring and researching, then select and implement the technical solutions that fit the comment service. The comment business is consumer-facing, so trending social events affect it directly and cause sudden surges in read and write traffic. A surge in writes puts heavy load on downstream services and storage, and excessive write traffic also tests the cache hit rate of read traffic. Once a strong dependency fails, the whole service becomes unavailable. To address this risk, we work on three fronts, hot spots, caching, and degradation, to keep the service stable. The concrete implementation follows:

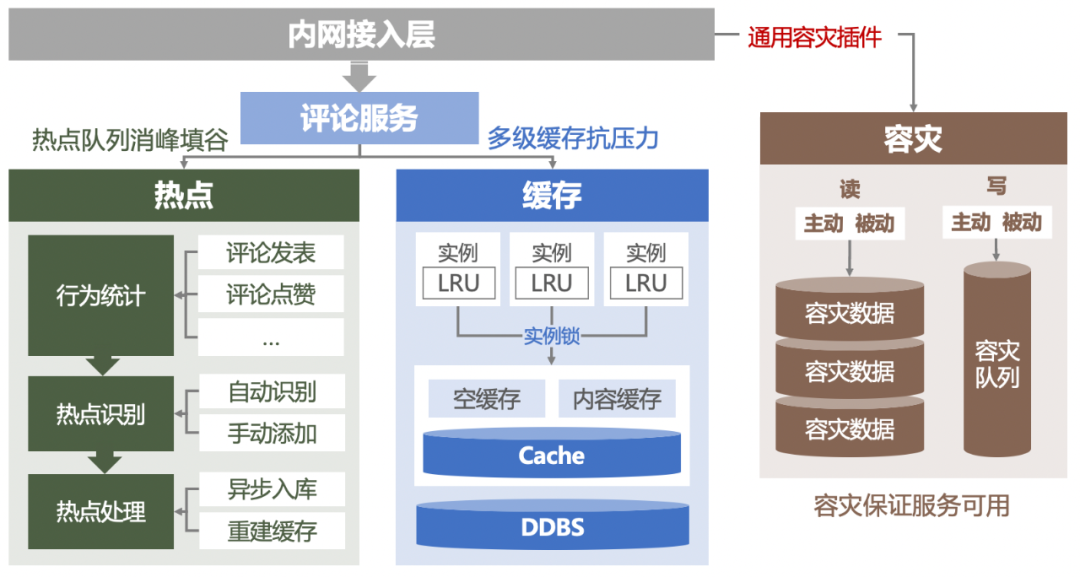

Cache management

We handle read surges by designing a multi-level cache that raises the cache hit rate. Based on the characteristics of the comment business, we designed a comment list cache, a comment count cache, a comment content cache, and so on. We also use an empty-value cache for the scenario in which most resources have no comments, to prevent cache penetration. When a read request arrives, an LRU local cache absorbs the first wave of traffic by checking whether the result is in the in-memory list of the hottest, most recent entries. On a local cache miss, the request goes to the Redis cache. For a sudden hot spot the Redis hit rate is very low, and letting traffic fall through to the database is still very risky, so we add an instance-level lock that limits concurrency per instance: only one request is allowed through to the downstream, and the other requests wait for its result. This prevents cache breakdown.
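The read path described above can be sketched with the standard library alone. In this sketch a plain map stands in for the LRU local cache, function fields stand in for Redis and the database, and the instance-level lock is implemented as a per-key in-flight table so that concurrent requests for the same key share one downstream call. All type and field names are hypothetical, and eviction and expiry are left out.

```go
package main

import (
	"fmt"
	"sync"
)

// call tracks one in-flight downstream request for a key.
type call struct {
	wg  sync.WaitGroup
	val string
}

// Cache is a simplified two-level read path.
type Cache struct {
	mu       sync.Mutex
	local    map[string]string               // stand-in for the LRU local cache
	inflight map[string]*call                // instance-level lock, per key
	remote   func(key string) (string, bool) // stand-in for Redis
	load     func(key string) string         // stand-in for the database
}

// Get returns the value for key, letting only one request per key reach
// the downstream while the others wait for its result.
func (c *Cache) Get(key string) string {
	c.mu.Lock()
	if v, ok := c.local[key]; ok {
		c.mu.Unlock()
		return v
	}
	if cl, ok := c.inflight[key]; ok {
		c.mu.Unlock()
		cl.wg.Wait() // another request is already fetching this key
		return cl.val
	}
	cl := &call{}
	cl.wg.Add(1)
	c.inflight[key] = cl
	c.mu.Unlock()

	v, ok := c.remote(key)
	if !ok {
		v = c.load(key)
	}
	cl.val = v

	c.mu.Lock()
	c.local[key] = v // empty results are cached too, against penetration
	delete(c.inflight, key)
	c.mu.Unlock()
	cl.wg.Done()
	return v
}

func main() {
	dbCalls := 0
	c := &Cache{
		local:    map[string]string{},
		inflight: map[string]*call{},
		remote:   func(string) (string, bool) { return "", false }, // always a miss
		load: func(key string) string {
			dbCalls++ // only the single in-flight request reaches this line
			return "comments of " + key
		},
	}
	var wg sync.WaitGroup
	for i := 0; i < 50; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			c.Get("topic:1001")
		}()
	}
	wg.Wait()
	fmt.Println("database calls:", dbCalls)
}
```

In a real Go service the `golang.org/x/sync/singleflight` package offers the same request-collapsing behavior; the hand-written version is used here only to keep the example free of external dependencies.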

Hotspot mechanism

Hot events often cause sudden traffic surges. A hot event is usually accompanied by push notifications, which concentrate read and write requests on a single resource. Such events occur at random and are hard to predict. A surge in reads and writes on a single resource lowers the cache hit rate or even invalidates the cache entirely, so large numbers of requests hit the database directly and can trigger a service avalanche. To avoid this uncontrollable impact, we designed an automatic hotspot discovery and identification system. It listens on a message queue for comment behaviors at the resource level, such as publishing, replying, liking, and auditing, and counts them to judge whether the resource is hot. When comment activity under a resource keeps growing and reaches a dynamically configurable threshold, the resource is marked as a hot resource and pushed to the hotspot configuration center. For a resource that hits the hotspot list, new comments are written to a hotspot queue and stored asynchronously; in addition, the cache is not cleared after a write but rebuilt, to preserve the hit rate. With this mechanism we have successfully handled a series of hot events that drew heated public discussion, and protected the user experience.

Disaster recovery and degradation
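The counting logic behind hotspot discovery might look like the sketch below. It uses a fixed time window and an in-memory map, both of which are assumptions; `HotspotDetector`, its methods, and the idea of keeping the hot list inside the detector (instead of pushing it to a configuration center) are simplifications for illustration.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// HotspotDetector counts comment behaviors per resource in fixed windows.
type HotspotDetector struct {
	mu            sync.Mutex
	threshold     int
	windowSeconds int64
	windowEnd     int64 // unix seconds at which the current window ends
	counts        map[string]int
	hot           map[string]bool // stand-in for the hotspot configuration center
}

func NewHotspotDetector(threshold int, windowSeconds int64) *HotspotDetector {
	return &HotspotDetector{
		threshold:     threshold,
		windowSeconds: windowSeconds,
		counts:        map[string]int{},
		hot:           map[string]bool{},
	}
}

// SetThreshold lets the threshold be changed at runtime.
func (d *HotspotDetector) SetThreshold(n int) {
	d.mu.Lock()
	d.threshold = n
	d.mu.Unlock()
}

// Record registers one behavior (publish, reply, like, audit) consumed
// from the message queue and reports whether the resource is now hot.
func (d *HotspotDetector) Record(resourceID string, nowUnix int64) bool {
	d.mu.Lock()
	defer d.mu.Unlock()
	if nowUnix >= d.windowEnd { // start a new counting window
		d.counts = map[string]int{}
		d.windowEnd = nowUnix + d.windowSeconds
	}
	d.counts[resourceID]++
	if d.counts[resourceID] >= d.threshold {
		d.hot[resourceID] = true
	}
	return d.hot[resourceID]
}

func main() {
	d := NewHotspotDetector(1000, 60)
	now := time.Now().Unix()
	hot := false
	for i := 0; i < 1200; i++ {
		hot = d.Record("news:42", now)
	}
	fmt.Println("news:42 hot:", hot)
	// A hot resource would then switch to the asynchronous hotspot queue
	// for writes and to rebuild-on-write for its cache.
}
```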

Extreme failures have a large impact on system stability: downstream services that cannot absorb a traffic surge, request timeouts, single points of failure caused by instance problems, or a data center made unavailable by network problems. Our service carries on the order of 10 billion PV per day, so even a few seconds of unavailability causes huge losses. Disaster recovery and degradation capability is therefore an important measure of a service's high availability, and we have taken a series of measures to improve the system's ability to withstand risk and to preserve the minimum usable unit of the business under abnormal conditions. Disaster recovery can be triggered actively or passively. When the business jitters and availability shows glitches, the access layer detects it and automatically enters passive disaster-recovery logic: write requests go asynchronously into a queue, and read requests return disaster-recovery data directly, which raises the utilization of that data. Active disaster recovery is connected to the operations platform and decides by interface, by data center, and by traffic percentage whether the service is degraded to disaster-recovery status. In dependency management, we identify the weak business dependencies, so that a weak dependency can be removed directly once it fails. In chaos engineering, to cope with all kinds of online emergencies, we design faults for the service, inject them, observe how the service responds, draw up corresponding contingency plans, and make fault drills routine, periodically verifying the level of risk the system can withstand.

5. Summary

The Baidu comment middle platform has gone through a repositioning of its role and upgrades of its technical architecture, and keeps exploring innovative applications to create the best possible user experience. Today the comment service provides commenting for more than 20 Baidu products, with peak QPS above 400,000, average daily PV on the order of 10 billion, and interface stability from the SLA perspective above 99.995%. Going forward, the comment middle platform will keep working on service innovation, middle-platform construction, and stability, to help build a high-quality Baidu community atmosphere.
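The passive disaster-recovery behavior (reads served from disaster-recovery data, writes queued asynchronously) can be sketched as a small wrapper around the normal read and write paths. `Service`, its fields, and the single `degraded` flag are hypothetical; the sketch is not safe for concurrent use and ignores the per-interface, per-data-center, and percentage controls mentioned in the text.

```go
package main

import (
	"errors"
	"fmt"
)

// Service wraps the normal comment paths with a degraded mode.
type Service struct {
	degraded   bool                                     // set by the access layer or the operations platform
	primary    func(topicID uint64) ([]string, error)   // normal read path
	snapshot   map[uint64][]string                      // disaster-recovery data
	writeQueue chan string                              // asynchronous write buffer
}

// List serves from the primary path when healthy and falls back to the
// last known snapshot when degraded or when the primary path fails.
func (s *Service) List(topicID uint64) []string {
	if !s.degraded {
		if list, err := s.primary(topicID); err == nil {
			s.snapshot[topicID] = list // keep disaster-recovery data fresh
			return list
		}
	}
	return s.snapshot[topicID]
}

// Post stores a comment directly when healthy and queues it when degraded.
func (s *Service) Post(content string, store func(string) error) error {
	if s.degraded {
		select {
		case s.writeQueue <- content:
			return nil // accepted; a consumer persists it later
		default:
			return errors.New("write queue full")
		}
	}
	return store(content)
}

func main() {
	s := &Service{
		primary:    func(uint64) ([]string, error) { return []string{"first!"}, nil },
		snapshot:   map[uint64][]string{},
		writeQueue: make(chan string, 1024),
	}
	fmt.Println("healthy read:", s.List(1001))

	s.degraded = true // for example, the access layer detected availability glitches
	fmt.Println("degraded read:", s.List(1001))
	fmt.Println("degraded write error:", s.Post("still here", nil))
	fmt.Println("queued writes:", len(s.writeQueue))
}
```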

Recommended reading :

Data visualization platform based on template configuration

How to correctly evaluate the video quality

Small program startup performance optimization practice

How we crossed the low-code "no man's land": key designs in amis and Aisuda

Mobile heterogeneous computing technology: GPU OpenCL programming (basics)

Cloud-native enablement for development and testing

Implementing distributed transaction scheduling based on Saga

边栏推荐

- Kotlin introductory notes (V) classes and objects, inheritance, constructors

- SQL learning - case when then else

- [technical live broadcast] how to rewrite tdengine code from 0 to 1 with vscode

- Tdengine offline upgrade process

- mysql安装配置以及创建数据库和表

- OpenGL - Coordinate Systems

- What should we pay attention to when developing B2C websites?

- Node の MongoDB Driver

- What about wechat mall? 5 tips to clear your mind

- 【技术直播】如何用 VSCode 从 0 到 1 改写 TDengine 代码

猜你喜欢

Community group buying has triggered heated discussion. How does this model work?

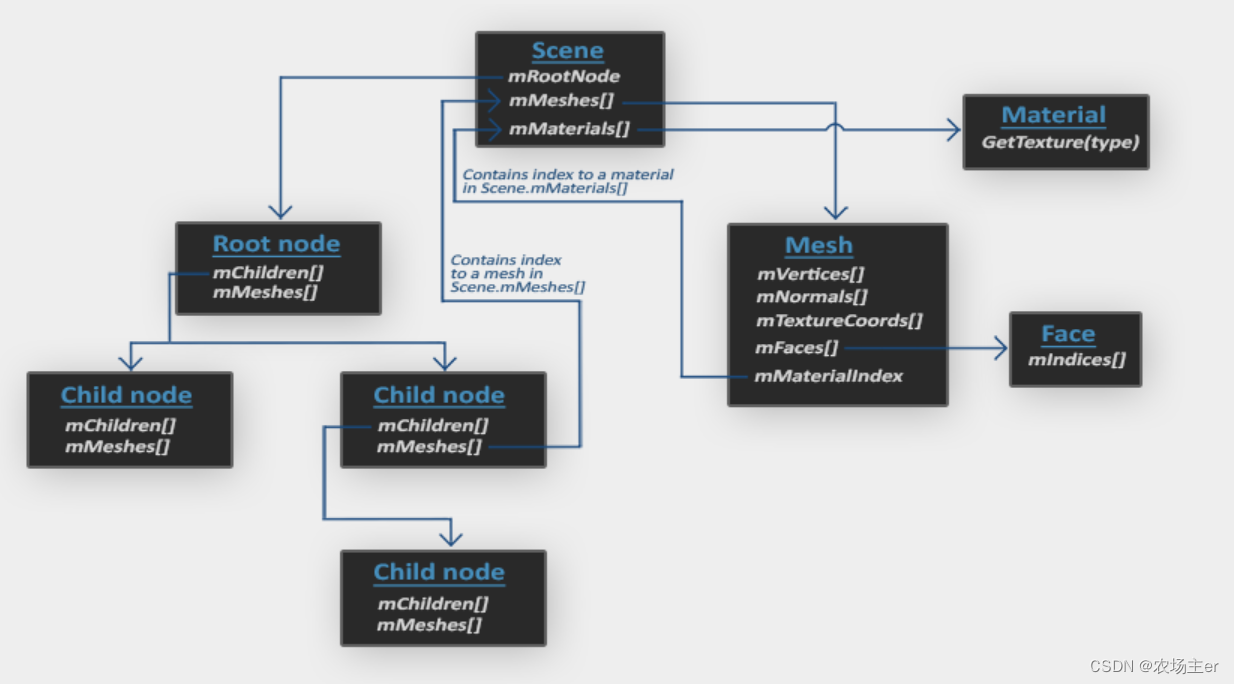

OpenGL - Model Loading

Why do offline stores need cashier software?

VS Code问题:长行的长度可通过 “editor.maxTokenizationLineLength“ 进行配置

一次 Keepalived 高可用的事故,让我重学了一遍它

基于模板配置的数据可视化平台

How to implement complex SQL such as distributed database sub query and join?

Tdengine already supports the industrial Intel edge insight package

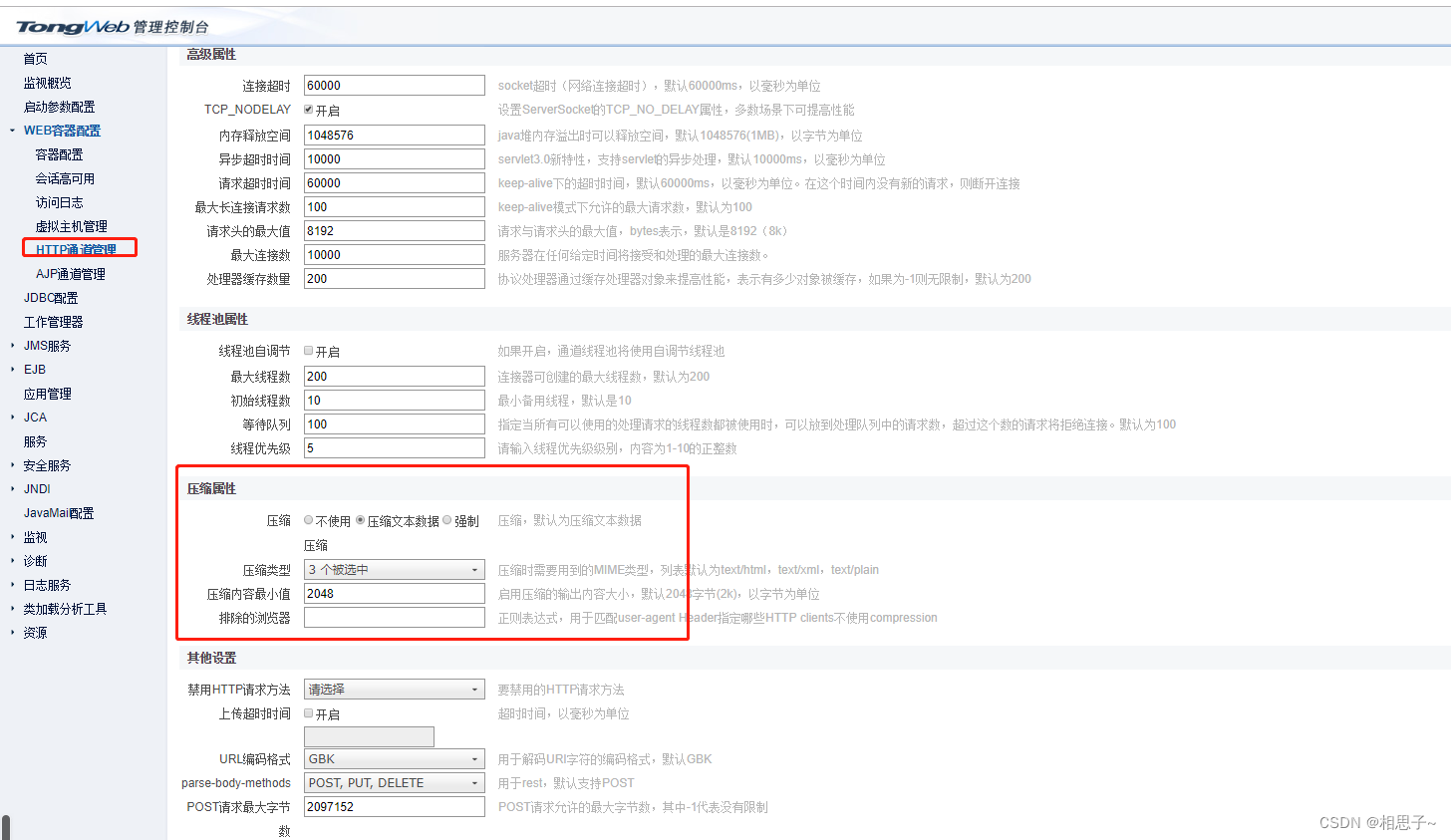

tongweb设置gzip

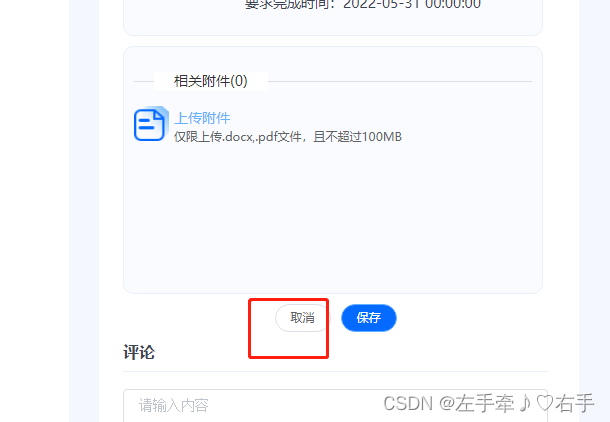

How to empty uploaded attachments with components encapsulated by El upload

随机推荐

What about wechat mall? 5 tips to clear your mind

Kotlin introductory notes (IV) circular statements (simple explanation of while, for)

What are the advantages of the live teaching system to improve learning quickly?

Project practice | excel export function

Viewpager pageradapter notifydatasetchanged invalid problem

Why does everyone want to do e-commerce? How much do you know about the advantages of online shopping malls?

Unity SKFramework框架(二十四)、Avatar Controller 第三人称控制

LeetCode 496. 下一个更大元素 I

观测云与 TDengine 达成深度合作,优化企业上云体验

LeetCode 503. Next bigger Element II

【数组的中的某个属性的监听】

Unity skframework framework (24), avatar controller third person control

Tdengine can read and write through dataX, a data synchronization tool

基于模板配置的数据可视化平台

【技术直播】如何用 VSCode 从 0 到 1 改写 TDengine 代码

LeetCode 556. Next bigger element III

La voie de l'évolution du système intelligent d'inspection et d'ordonnancement des petites procédures de Baidu

Three-level distribution is becoming more and more popular. How should businesses choose the appropriate three-level distribution system?

[how to disable El table]

How do enterprises choose the appropriate three-level distribution system?