Interview question explained: the blood-and-tears history of implementing distributed locks with Redis

2022-07-07 23:56:00 【Ashes collector】

What is a detailed scheme for implementing a distributed lock with Redis?

The short answer is to use the Redisson client. The lock scheme in Redisson is the perfect detailed scheme for a Redis distributed lock.

So why is Redisson's lock scheme perfect?

As it happens, I have a lot of hands-on experience building distributed locks with Redis. In practice I have tried many Redis-based locking schemes, and paid for each of them in blood and tears.

So before explaining why Redisson's lock is perfect, let me first walk through the problems I ran into when building distributed locks with Redis.

I once used a Redis distributed lock to handle users grabbing coupons. The business requirement was: when a user claims a coupon, the number of coupons must decrease by one; once the coupons run out, users may not claim any more.

The implementation first read the number of coupons from the database. If the number was greater than 0, the user was allowed to claim a coupon; the count was then decremented by one and written back to the database.

Because request volume was high at the time, we used three servers to split the traffic.

That is where the problem appeared:

Suppose application A on one server reads the coupon count but, because it is busy with related business logic, does not update the count in the database right away. While A is processing, application B on another server updates the count. When A finally writes its update, it overwrites the count that B wrote.
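
The interleaving above can be replayed deterministically. This is only a sketch: the `db` dictionary is a hypothetical stand-in for the coupon row, and the two "applications" are just consecutive statements rather than real servers.

```python
# Hypothetical in-memory stand-in for the coupon row; not a real database.
db = {"coupons": 10}

# Application A reads the count, then gets held up by other business logic.
a_seen = db["coupons"]

# Meanwhile application B completes a full read, decrement and write.
b_seen = db["coupons"]
db["coupons"] = b_seen - 1

# A finally writes back, using the stale value it read earlier.
db["coupons"] = a_seen - 1

coupons_issued = 2
coupons_left = db["coupons"]
print(coupons_issued, coupons_left)
```

Two coupons were handed out, but the stored count only went down by one, because A's write replaced B's.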

At this point some readers may wonder: why not just use SQL directly?

update coupon_table set coupon_count = coupon_count - 1 where coupon_id = xxx

The reason is that, without a distributed lock to coordinate, the coupon count can go negative. When the count is 1 and two users request a coupon through two different servers at the same time, both requests pass the "greater than 0" check and both execute this SQL statement, so the count drops straight to -1.
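
A minimal sketch of that sequence, assuming the check and the decrement are two separate steps as described. The `db` dictionary and both helper functions are hypothetical stand-ins, not real database calls.

```python
# Hypothetical stand-in for the coupon row, with one coupon left.
db = {"coupons": 1}

def check_available(row):
    # The application-side "greater than 0" check.
    return row["coupons"] > 0

def decrement(row):
    # Equivalent of "set count = count - 1", with no guard in the statement.
    row["coupons"] -= 1

# Both servers run the check before either one runs the update.
server_a_ok = check_available(db)
server_b_ok = check_available(db)

if server_a_ok:
    decrement(db)
if server_b_ok:
    decrement(db)

final_count = db["coupons"]
print(final_count)
```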

Others suggest an optimistic lock, for example with the following SQL:

update coupon_table set coupon_count = coupon_count - 1 where coupon_id = xxx and version = xx

With this approach there is a real chance that, under contention, a request keeps failing to update the row and ends up retrying for a long time.
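
To make the retry cost concrete, here is a rough simulation under a worst-case assumption: every waiting client reads the same version and then all of them attempt the update. The `row`, `try_update` and `run_round` names are hypothetical, and incrementing the version on each successful update is the usual convention rather than something shown in the SQL above.

```python
# Hypothetical stand-in for a coupon row carrying a version column.
row = {"coupons": 100, "version": 0}

def try_update(row, expected_version):
    # Mimics "update ... where coupon_id = xxx and version = expected_version".
    if row["version"] != expected_version:
        return False
    row["coupons"] -= 1
    row["version"] += 1
    return True

def run_round(row, clients):
    # Every client read the same version before any of them wrote.
    seen = row["version"]
    return [c for c in clients if not try_update(row, seen)]

pending = list(range(5))   # five clients competing for the same row
rounds = 0
attempts = 0
while pending:
    attempts += len(pending)
    pending = run_round(row, pending)
    rounds += 1

print(rounds, attempts, row["coupons"])
```

Only one client wins each round, so five clients need five rounds and fifteen attempts in total to issue five coupons.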

So, after weighing the options, we adopted a Redis distributed lock: mutual exclusion prevents multiple clients from updating the coupon count at the same time.

The first thing we thought of was Redis's setnx command, which is short for "set if not exists".

If the key is set successfully the command returns 1; otherwise it returns 0. A successful setnx can therefore be treated as acquiring the lock; a failure means the lock already exists, which counts as failing to acquire it.

setnx lock true

To release the lock, run the del command to delete the key.

del lock

Using this, the system can run setnx against Redis before executing the coupon logic and use the result to decide whether it holds the lock. If it does, it carries on with the business logic and releases the lock with del when finished. If not, it waits for a while and tries to acquire the lock again.
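
The flow can be sketched as follows. `FakeRedis` is a hypothetical in-memory stand-in that only mimics the return values of setnx and del, and `grab_coupon`, its retry count and its wait time are illustrative choices, not part of the original system.

```python
import time

class FakeRedis:
    """Hypothetical in-memory stand-in for the two Redis commands used here."""

    def __init__(self):
        self.data = {}

    def setnx(self, key, value):
        # Returns 1 if the key was set, 0 if it already existed.
        if key in self.data:
            return 0
        self.data[key] = value
        return 1

    def delete(self, key):
        # Returns the number of keys removed, like del.
        return 1 if self.data.pop(key, None) is not None else 0

def grab_coupon(r, stock, retries=3, wait=0.001):
    for _ in range(retries):
        if r.setnx("lock", "true") == 1:
            try:
                # Business logic runs only while the lock is held.
                if stock["coupons"] > 0:
                    stock["coupons"] -= 1
                    return True
                return False
            finally:
                r.delete("lock")
        # Lock is taken: wait a little, then try again.
        time.sleep(wait)
    return False

r = FakeRedis()
stock = {"coupons": 1}
first = grab_coupon(r, stock)
second = grab_coupon(r, stock)

r.setnx("lock", "true")            # someone else is holding the lock
blocked = grab_coupon(r, {"coupons": 5}, retries=2)
```

The first call takes the last coupon, the second finds none left, and the third gives up because the lock never becomes free.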

At first glance nothing is wrong here: setnx does give the mutual exclusion we want.

But that assumes everything in the runtime environment behaves normally. As soon as something goes wrong, problems appear.

Think about it: what happens if the application holding the lock suddenly crashes, or its server goes down?

The result is a deadlock: the application holding the lock can never release it, and no other application ever gets a chance to acquire it. This can cause a major production incident, so the scheme has to be improved.

How? The root cause of the deadlock is that once the lock holder fails, the lock is never released. Following that line of thought, we can give the key in Redis an expiration time.

Then, even if something goes wrong, the key is removed after a while. Does that solve the problem? It does, and it is exactly what everyone does.

However, the setnx command itself cannot set a timeout, so there are usually two ways to do it:

1、 Use a Lua script: after setnx, call expire to set an expiration time on the key.

    if redis.call("SETNX", "lock", "true") == 1 then
        local expireResult = redis.call("expire", "lock", "10")
        if expireResult == 1 then
            return "success"
        else
            return "expire failed"
        end
    else
        return "setnx not null"
    end

2、 Use the set command with the NX option and an expiry (EX in seconds or PX in milliseconds), which sets the lock and the timeout in a single command.

redis.call("SET", "lock", "true", "NX", "PX", "10000")

Either of the two approaches works.
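
The effect of the expiry can be modeled with a manual clock. This is a sketch only: `FakeRedis`, `set_nx_px` and `now_ms` are hypothetical names for an in-memory model of "SET key value NX PX milliseconds", not a real client API.

```python
class FakeRedis:
    """Hypothetical model of SET key value NX PX ms, driven by a manual clock."""

    def __init__(self):
        self.now_ms = 0
        self.data = {}  # key -> (value, expires_at_ms)

    def _purge(self, key):
        # Drop the key if its expiry time has been reached.
        entry = self.data.get(key)
        if entry is not None and entry[1] <= self.now_ms:
            del self.data[key]

    def set_nx_px(self, key, value, ttl_ms):
        self._purge(key)
        if key in self.data:
            return False
        self.data[key] = (value, self.now_ms + ttl_ms)
        return True

r = FakeRedis()
holder_got_lock = r.set_nx_px("lock", "true", 10_000)  # holder acquires, then crashes

r.now_ms = 5_000
blocked = r.set_nx_px("lock", "true", 10_000)          # key has not expired yet

r.now_ms = 10_000
recovered = r.set_nx_px("lock", "true", 10_000)        # key expired, lock is free again
```

The crashed holder never calls del, yet another client can take the lock once the 10 seconds have passed.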

Releasing the lock is the same in both cases: just call Redis's del command.

So far our lock is mutually exclusive, and a failure in the system holding it no longer causes a deadlock. Is that perfect?

Consider this case: an application holds the lock for longer than the timeout we set. When it finally goes to release the lock, one of two things is true:

The key it set in Redis no longer exists

A key for the lock still exists in Redis

The first case is normal. The application overran the timeout, after all, so it is only logical that the key was cleared.

The second case is the frightening one: the key is still there. What does that mean? It means the key that exists now was set by another application.

If the application whose lock timed out now calls del, it deletes a lock that someone else set, which leads directly to business errors.
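
A short replay of that accident, as a sketch. The `store` dictionary and the `setnx` and `delete` helpers are hypothetical stand-ins, and the expiry is simulated by removing the key by hand.

```python
# Hypothetical stand-in for the Redis keyspace.
store = {}

def setnx(key, value):
    if key in store:
        return 0
    store[key] = value
    return 1

def delete(key):
    return 1 if store.pop(key, None) is not None else 0

a_ok = setnx("lock", "true")   # A acquires the lock
store.pop("lock")              # the timeout elapses while A is still working
b_ok = setnx("lock", "true")   # B acquires the now-free lock
a_deleted = delete("lock")     # A finishes late and deletes B's lock
c_ok = setnx("lock", "true")   # C acquires while B is still working
```

B and C now both believe they hold the lock, so mutual exclusion is gone.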

So to fix this we have to keep reworking the Redis scripts... exhausting, I know...

First, when an application acquires the lock, it sets a unique value that only it knows.

With this unique value, the system can tell at release time whether the lock is its own. If it is, it releases the lock by deleting the key; if not, it does nothing.

The scripts are as follows:

    if redis.call("SETNX", "lock", ARGV[1]) == 1 then
        local expireResult = redis.call("expire", "lock", "10")
        if expireResult == 1 then
            return "success"
        else
            return "expire failed"
        end
    else
        return "setnx not null"
    end

or

redis.call("SET", "lock", ARGV[1], "NX", "PX", "10000")

Here ARGV[1] is a parameter passed in by the caller and carries the unique value, for example a UUID known only to the caller, or a unique ID generated with the snowflake algorithm.

The script for releasing the lock becomes:

    if redis.call("get", "lock") == ARGV[1]
    then
        return redis.call("del", "lock")
    else
        return 0
    end

As you can see, from a business point of view our distributed lock now meets the real needs in every case: it is mutually exclusive, it cannot deadlock, it never deletes someone else's lock by mistake, and only the owner can release its own lock.
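
The owner check can be exercised with a small model. This is a sketch under assumptions: `store`, `acquire` and `release` are hypothetical helpers, the expiry is simulated by deleting the key by hand, and in real Redis the compare-and-delete has to run inside one Lua script to stay atomic.

```python
import uuid

# Hypothetical stand-in for the Redis keyspace.
store = {}

def acquire(key):
    # Mirrors SET key token NX: returns the token on success, None otherwise.
    if key in store:
        return None
    token = uuid.uuid4().hex
    store[key] = token
    return token

def release(key, token):
    # Mirrors the get, compare, del release script.
    if store.get(key) == token:
        del store[key]
        return 1
    return 0

token_a = acquire("lock")
del store["lock"]                        # A's key expires while A is still working
token_b = acquire("lock")                # B takes the lock

late_release = release("lock", token_a)  # A's late release is ignored
b_still_holds = store.get("lock") == token_b
own_release = release("lock", token_b)   # B releases its own lock
```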

Everything looks wonderful!

Unfortunately, one hidden danger remains that we have not ruled out: Redis itself.

Bear in mind that the Lua scripts run against a single Redis instance. If that instance fails, the distributed lock stops working, and that seriously disrupts the business, which is unacceptable.

So Redis has to be made highly available, and the usual way to do that is a master-slave cluster.

But a master-slave cluster introduces a new problem: master-slave data synchronization is delayed. The delay creates an edge case: the lock has been created on the master, but before the lock data is synchronized to the slave, the master goes down. The slave is then promoted to master, and it has none of the lock data set on the old master. The lock is simply lost.
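
The failover window can be sketched with two dictionaries. Everything here is a hypothetical model: `master`, `replica` and the `pending` queue stand in for asynchronous replication and are not how Redis is actually driven.

```python
master = {}
replica = {}
pending = []  # writes accepted by the master but not yet applied to the replica

def set_nx(node, key, value):
    if key in node:
        return False
    node[key] = value
    return True

# Client A acquires the lock on the master; replication is still queued.
a_ok = set_nx(master, "lock", "client-A")
pending.append(("lock", "client-A"))

# The master crashes before the queue is applied, and the replica is promoted.
pending.clear()
master = replica

# Client B asks the new master and finds no lock there.
b_ok = set_nx(master, "lock", "client-B")
```

Both clients were told they hold the lock, even though each individual node behaved correctly.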

At last I can introduce Redisson (an open-source Redis client) and look at how it implements a Redis distributed lock.

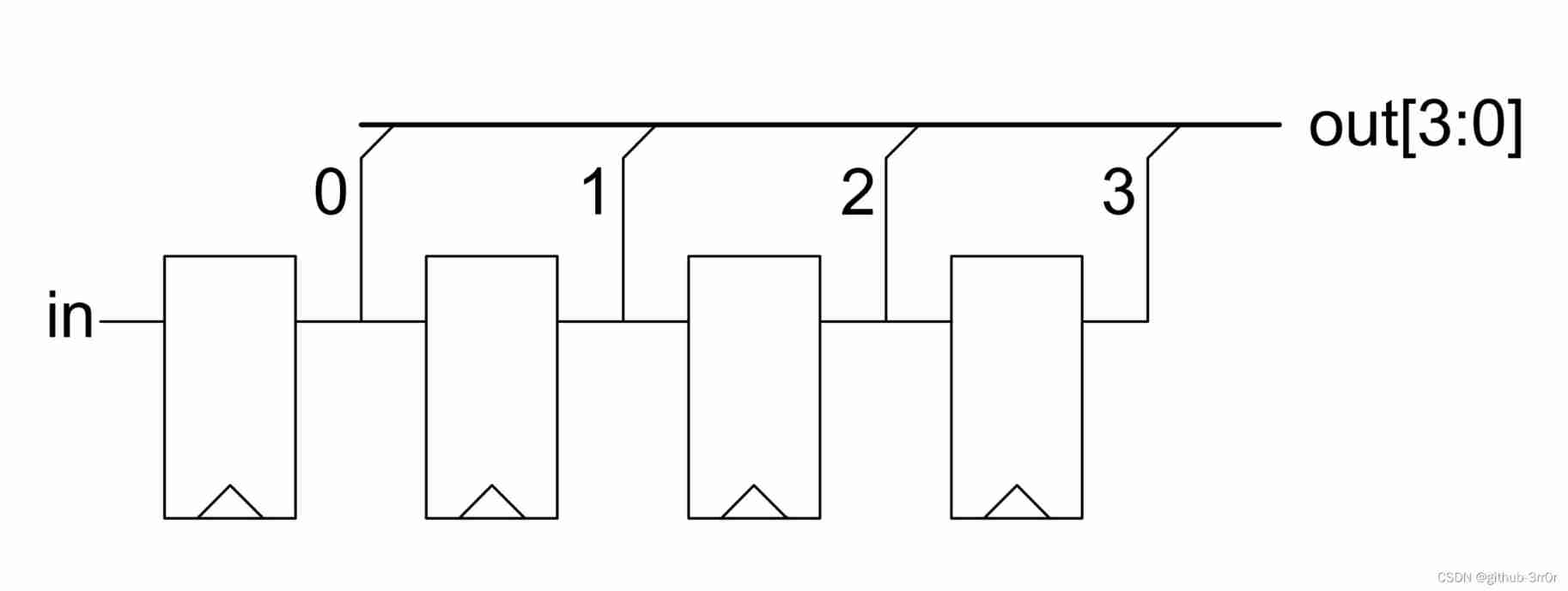

Redisson's idea for implementing the distributed lock is very simple: whether it is a master-slave cluster or a Redis Cluster, it runs the lock-setting script against each Redis node in the cluster, one by one, so that every node holds the lock data.

Let's go through an example.

Suppose the Redis cluster has 5 machines and, after evaluation, a lock timeout of 10 seconds is judged appropriate.

Step 1: calculate the total waiting time for the cluster, which is 5 seconds (the lock timeout of 10 seconds / 2).

Step 2: divide the 5 seconds by the 5 machines, giving 1 second. This 1 second is the acceptable waiting time for connecting to each Redis node.

Step 3: connect to the 5 Redis nodes in turn, run the Lua script to set the lock on each, and then decide:

If all 5 machines return a result within 5 seconds and more than half of them (that is, at least 3) set the lock successfully, the lock is considered acquired; otherwise acquisition fails.

If the 5 seconds are exceeded, acquisition is considered failed no matter how many machines set the lock successfully. For example, if the first 4 machines took 3 seconds in total but the last one has still returned nothing after 2 seconds, the total waiting time has used up the 5-second limit, and this counts as a failure even though more than half succeeded.
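
The three steps can be condensed into a small decision function. This is a sketch of the rules as described above, not Redisson's code: `budgets` and `lock_acquired` are hypothetical names, and a node that gave no result is represented as `None`.

```python
def budgets(lock_ttl_s, node_count):
    # Step 1: total wait is half the lock timeout.
    total = lock_ttl_s / 2
    # Step 2: acceptable wait per node.
    per_node = total / node_count
    return total, per_node

def lock_acquired(results, total_budget_s):
    """results: (set_ok, seconds_spent) per node, in the order contacted.

    set_ok is True/False for a node that answered, None for no result.
    """
    quorum = len(results) // 2 + 1
    elapsed = 0.0
    successes = 0
    for set_ok, seconds in results:
        elapsed += seconds
        # Step 3: a missing result or an overrun fails the whole attempt.
        if set_ok is None or elapsed > total_budget_s:
            return False
        if set_ok:
            successes += 1
    return successes >= quorum

total, per_node = budgets(10, 5)

majority = lock_acquired([(True, 0.5)] * 3 + [(False, 0.5)] * 2, total)
minority = lock_acquired([(True, 0.5)] * 2 + [(False, 0.5)] * 3, total)
# The case from the text: four nodes take 3 seconds, the fifth never answers.
no_answer = lock_acquired([(True, 0.75)] * 4 + [(None, 2.0)], total)
too_slow = lock_acquired([(True, 1.2)] * 5, total)
```

In this sketch `per_node` is only computed; in a real client it would serve as the timeout for each individual connection.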

One more thing: in a lot of business logic a lock timeout is not really wanted.

Take batch jobs that run in the early morning: a distributed lock may be needed to make sure a job does not run twice, but it is unclear how long the job will take. Setting a lock timeout in this case makes little sense; yet without a timeout, the deadlock problem comes back.

The usual solution is for each client holding a lock to start a background thread that runs a specific Lua script to keep refreshing the key's timeout in Redis, so the key is not removed before the task finishes.

The script is as follows:

    if redis.call("get", "lock") == ARGV[1]
    then
        return redis.call("expire", "lock", "10")
    else
        return 0
    end

Here ARGV[1] is a parameter passed in by the caller: the unique value identifying the system that holds the lock. In other words, only the client holding the lock can refresh the key's timeout.
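
The effect of the refresh can be shown with a single-threaded simulation in which a manual clock takes the place of the background thread. `FakeRedis`, `run_task` and the 3-second renewal interval are hypothetical choices made for illustration.

```python
class FakeRedis:
    """Hypothetical in-memory model with expiry, driven by a manual clock."""

    def __init__(self):
        self.now = 0
        self.data = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self.data.get(key)
        if entry is None or entry[1] <= self.now:
            self.data.pop(key, None)
            return None
        return entry[0]

    def set_nx_ex(self, key, value, ttl):
        if self.get(key) is not None:
            return False
        self.data[key] = (value, self.now + ttl)
        return True

    def renew(self, key, token, ttl):
        # Mirrors the get, compare, expire refresh script.
        if self.get(key) == token:
            self.data[key] = (token, self.now + ttl)
            return 1
        return 0

def run_task(r, token, task_seconds, ttl=10, renew_every=None):
    r.set_nx_ex("lock", token, ttl)
    for second in range(1, task_seconds + 1):
        r.now = second
        # Stand-in for the background thread waking up periodically.
        if renew_every and second % renew_every == 0:
            r.renew("lock", token, ttl)
    # Does the task still own the lock when it finishes?
    return r.get("lock") == token

with_refresh = run_task(FakeRedis(), "client-A", 25, renew_every=3)
without_refresh = run_task(FakeRedis(), "client-A", 25)
```

A 25-second task keeps its 10-second lock only when the timeout is refreshed along the way.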

Only at this point is a complete distributed lock in place. The scheme can be summarized as follows:

Use the set command to set the lock flag, always with a timeout, so that the lock is released even if the client crashes;

For cases where no fixed timeout fits, run a thread that keeps refreshing the lock timeout;

The value each client sets in Redis when acquiring the lock must be unique, so that it identifies which client set the lock;

In a distributed cluster, set the same timeout and lock flag directly on every machine;

To keep the cluster-wide lock from timing out because of network problems, choose the network waiting time and the lock timeout carefully.

This distributed lock satisfies the following four conditions:

Only one client can hold the lock at any time;

No deadlock can occur: if a client fails while holding the lock and never unlocks, other clients can still acquire the lock;

Locking and unlocking must be done by the same client: a lock can only be released by the client that set it;

As long as most Redis nodes are healthy, clients can use the lock normally.

In Redisson's own scripts, the Lua is modified further to make the lock reentrant. The complete Lua scripts are given below.

The Lua script for acquiring the lock:

    if (redis.call('exists', KEYS[1]) == 0) then
        redis.call('hincrby', KEYS[1], ARGV[2], 1);
        redis.call('pexpire', KEYS[1], ARGV[1]);
        return nil;
    end;
    if (redis.call('hexists', KEYS[1], ARGV[2]) == 1) then
        redis.call('hincrby', KEYS[1], ARGV[2], 1);
        redis.call('pexpire', KEYS[1], ARGV[1]);
        return nil;
    end;
    return redis.call('pttl', KEYS[1]);

The corresponding script for refreshing the lock timeout:

    if (redis.call('hexists', KEYS[1], ARGV[2]) == 1) then
        redis.call('pexpire', KEYS[1], ARGV[1]);
        return 1;
    end;
    return 0;

The corresponding script for releasing the lock:

    if (redis.call('hexists', KEYS[1], ARGV[3]) == 0) then
        return nil;
    end;
    local counter = redis.call('hincrby', KEYS[1], ARGV[3], -1);
    if (counter > 0) then
        redis.call('pexpire', KEYS[1], ARGV[2]);
        return 0;
    else
        redis.call('del', KEYS[1]);
        redis.call('publish', KEYS[2], ARGV[1]);
        return 1;
    end;
    return nil;
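
The reentrancy logic of the acquire and release scripts can be traced with a plain dictionary standing in for the Redis hash. This is a simplified sketch: the `locks` dictionary and the `lock` and `unlock` helpers are hypothetical, expiry and the publish notification are left out, and `lock` returns a boolean where the real script returns nil or the remaining TTL.

```python
# lock name -> {owner id: hold count}; stands in for a Redis hash per lock.
locks = {}

def lock(name, owner):
    holders = locks.get(name)
    if holders is None:
        # Like the 'exists' branch: nobody holds the lock yet.
        locks[name] = {owner: 1}
        return True
    if owner in holders:
        # Like the 'hexists' branch: same owner re-enters, count goes up.
        holders[owner] += 1
        return True
    return False

def unlock(name, owner):
    holders = locks.get(name)
    if holders is None or owner not in holders:
        return None            # not the owner: nothing to release
    holders[owner] -= 1
    if holders[owner] > 0:
        return 0               # still held by an outer call
    del locks[name]
    return 1                   # fully released

first = lock("lock", "client-A")
reentered = lock("lock", "client-A")
other = lock("lock", "client-B")

stranger = unlock("lock", "client-B")
inner = unlock("lock", "client-A")
outer = unlock("lock", "client-A")
after_release = lock("lock", "client-B")
```

The lock is only removed when the owner has unlocked as many times as it locked.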

That completes the detailed scheme for a Redis distributed lock.

I have written up this bumpy journey step by step, describing each problem and how it was solved; I hope you get something out of it.

One last reminder: using a Redis cluster for distributed locks is somewhat controversial, so in practice you still need to weigh the trade-offs against your actual situation.

边栏推荐

- Magic fast power

- 第四期SFO销毁,Starfish OS如何对SFO价值赋能?

- One click installation with fishros in blue bridge ROS

- LinkedBlockingQueue源码分析-新增和删除

- Install sqlserver2019

- Pycharm basic settings latest version 2022

- 神奇快速幂

- Dataguard 主备清理归档设置

- Cmake learning notes (1) compile single source programs with cmake

- P2141 [noip2014 popularization group] abacus mental arithmetic test

猜你喜欢

快速回复二极管整流特性

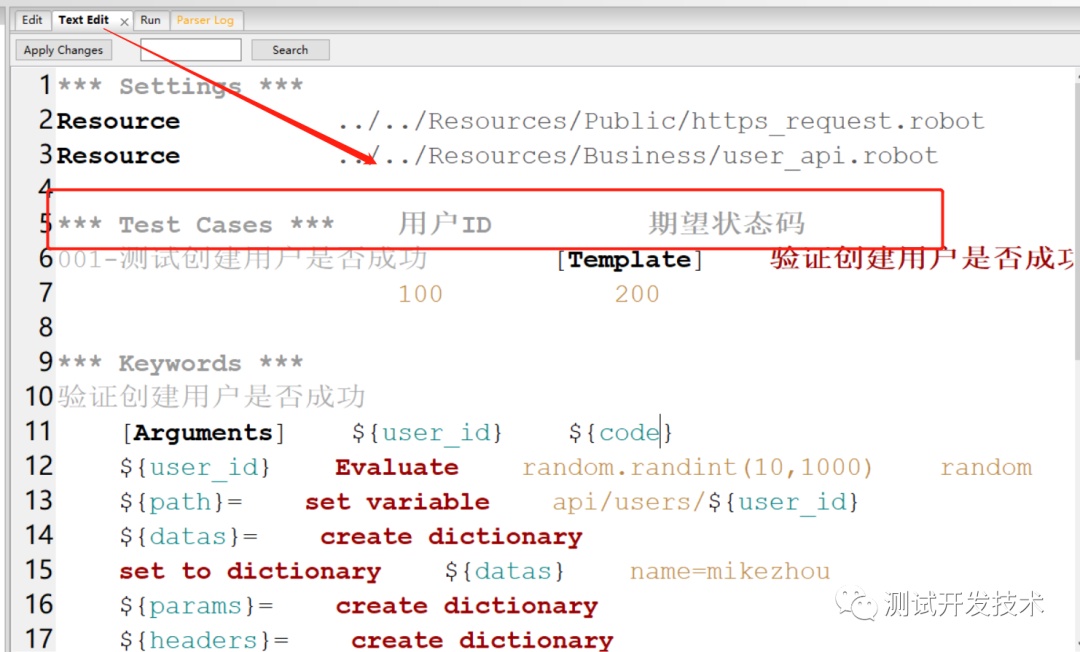

自动化测试:Robot FrameWork框架90%的人都想知道的实用技巧

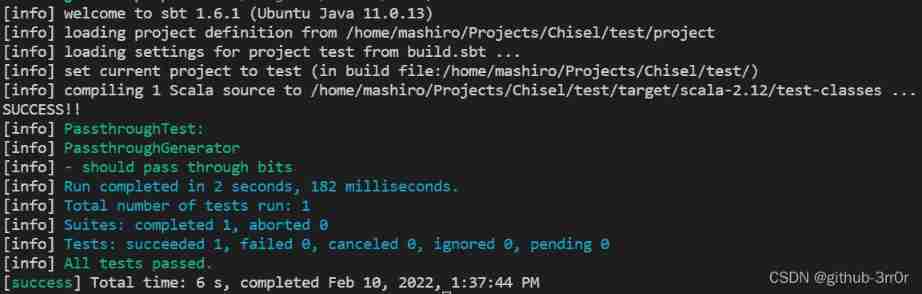

Chisel tutorial - 02 Chisel environment configuration and implementation and testing of the first chisel module

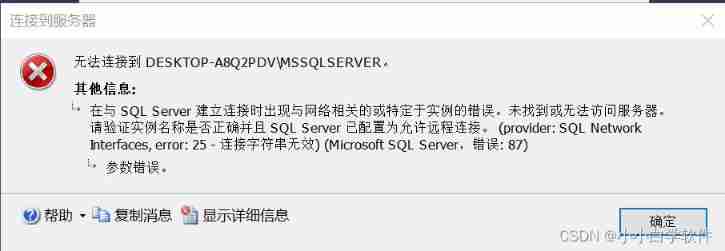

SQL connection problem after downloading (2)

一份假Offer如何盗走了「Axie infinity」5.4亿美元?

HB 5469民用飞机机舱内部非金属材料燃烧试验方法

Les mots ont été écrits, la fonction est vraiment puissante!

Chisel tutorial - 05 Sequential logic in chisel (including explicit multi clock, explicit synchronous reset and explicit asynchronous reset)

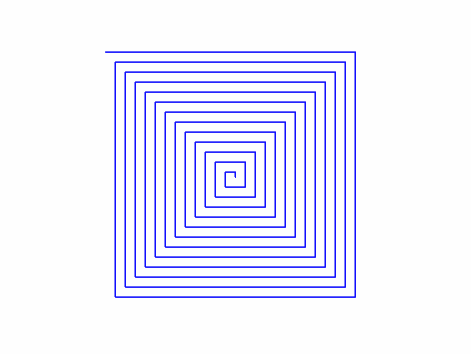

【编程题】【Scratch二级】2019.03 绘制方形螺旋

一鍵免費翻譯300多頁的pdf文檔

随机推荐

Rock-paper-scissors

P1055 [noip2008 popularization group] ISBN number

Database interview questions + analysis

SQL uses the in keyword to query multiple fields

面试题详解:用Redis实现分布式锁的血泪史

QT creator add JSON based Wizard

One click free translation of more than 300 pages of PDF documents

Anaconda+pycharm+pyqt5 configuration problem: pyuic5 cannot be found exe

Pypharm uses, and the third-party library has errors due to version problems

光流传感器初步测试:GL9306

【leetcode】day1

【编程题】【Scratch二级】2019.09 绘制雪花图案

数据库查询——第几高的数据?

postgres timestamp转人眼时间字符串或者毫秒值

第四期SFO销毁,Starfish OS如何对SFO价值赋能?

An example analysis of MP4 file format parsing

Where are you going

Introduction to programming hardware

【路径规划】使用垂距限值法与贝塞尔优化A星路径

Enumeration, simulation, and sorting