MDM mass data synchronization test verification

2022-07-03 20:21:00 【Digital unobstructed connection】

A master data governance plan is currently being implemented. The master data includes personnel master data, which mainly records the natural persons in the province and involves tens of millions of records. Because of the huge data volume, the synchronization interfaces and integration processes of the MDM master data management platform and the ESB enterprise service bus need to be tested to verify whether mass data synchronization is supported.

This test covers five data volumes: 1,000, 10,000, 100,000, 1 million, and 10 million records. Volumes of 10,000 records or fewer are synchronized in a single batch, while volumes above 10,000 records are synchronized in cyclic batches. This article mainly explains the test method, the optimization process, and the analysis of the test results.
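
The rule above (single batch up to 10,000 records, cyclic batches beyond that) can be sketched as a small planning helper. This is only an illustration: the function name `plan_sync` and the mode labels are made up here, and the batch size of 10,000 is taken from the text.

```python
import math

BATCH_SIZE = 10_000  # threshold and batch size as described in the text


def plan_sync(total_records, batch_size=BATCH_SIZE):
    """Return (mode, number_of_batches) for a given data volume."""
    if total_records <= 0:
        raise ValueError("total_records must be positive")
    if total_records <= batch_size:
        return ("single-batch", 1)
    # Above the threshold, the data is sent in repeated batches.
    return ("cyclic-batch", math.ceil(total_records / batch_size))


for volume in (1_000, 10_000, 100_000, 1_000_000, 10_000_000):
    mode, batches = plan_sync(volume)
    print(f"{volume:>10,} records -> {mode}, {batches} batch(es)")
```

With a batch size of 10,000, the 1-million-record case needs 100 iterations and the 10-million-record case needs 1,000.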

1 Overall description

This chapter describes the main content of the test, the implementation of the ESB application integration process under test, and how the problems encountered were handled.

1.1 Test ideas

The test covers the 1,000, 10,000, 100,000, 1 million, and 10 million record levels. The work breaks down into the following steps:

1. Optimize the environment: tune the CPU and memory of the master data platform and the ESB, and also tune Redis, the JVM, CentOS, and Nginx;

2. Test the ESB layer at each order of magnitude: first construct the corresponding input parameters in code, then use the data insertion component of the ESB data adapter and record the synchronization time for each order of magnitude;

3. Copy the original integration process, add the master data scheduling interface for data synchronization, record the synchronization time, and compare it with the time for direct batch insertion into the database to check whether the synchronization interface slows synchronization down;

4. Summarize the test results, feed the problems found back to the product owner for optimization, and retest.

1.2 Testing process

The test process mainly measures the ESB's data insertion performance at the different data volumes, focusing on write tests of 1 million (100W) and 10 million (1000W) records. The specific process is as follows:

1. Batch processing:

a) Initialize and record the start time;

b) Query 1,000 or 10,000 records and record them;

c) Insert the data directly into the database in one batch;

d) Record the end time and calculate the elapsed time.

2. Cyclic batch processing:

a) Initialize and record the start time;

b) Use a Java transform node to construct 10,000 records;

c) Insert the data directly into the database as a batch;

d) Increment the index and loop;

e) Construct the integration log parameters and record the process execution time.
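
The cyclic batch steps a) to e) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the ESB process itself: an in-memory SQLite database stands in for the target database, and the table names `person` and `sync_log`, the row layout, and the function names are all hypothetical.

```python
import sqlite3
import time


def build_batch(start, size):
    """Construct one batch of made-up rows whose ids continue from `start`."""
    return [(start + i, f"person_{start + i}") for i in range(size)]


def run_cyclic_insert(conn, total, batch_size=10_000):
    """Insert `total` rows batch by batch; return (loops, rows_inserted, elapsed_seconds)."""
    conn.execute("CREATE TABLE IF NOT EXISTS person (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE IF NOT EXISTS sync_log (rows_inserted INTEGER, elapsed_seconds REAL)")
    started = time.perf_counter()                      # a) record the start time
    index = 0
    inserted = 0
    while inserted < total:
        size = min(batch_size, total - inserted)
        rows = build_batch(inserted, size)             # b) construct one batch
        with conn:                                     # one transaction per batch
            conn.executemany(
                "INSERT INTO person (id, name) VALUES (?, ?)", rows
            )                                          # c) batch insert
        inserted += size
        index += 1                                     # d) increment the index and loop
    elapsed = time.perf_counter() - started
    with conn:                                         # e) log the execution time
        conn.execute("INSERT INTO sync_log VALUES (?, ?)", (inserted, elapsed))
    return index, inserted, elapsed


conn = sqlite3.connect(":memory:")
loops, rows, seconds = run_cyclic_insert(conn, total=25_000)
print(f"{loops} loops, {rows} rows, {seconds:.3f} s")
```

Committing once per batch rather than once per row is usually what makes batch insertion fast; the timings printed by this sketch say nothing about the real ESB or MySQL environment.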

1.3 Result verification

Analyze the execution results and feed specific optimization points back to the developers. Because master data involves not only data storage but also data display and analysis, the personnel data management pages need to be viewed and checked, and functions such as personnel data inspection and personnel data analysis need to be verified, to confirm that neither the system nor Redis crashes.

2 Performance tuning

Performance tuning mainly covers the CPU, memory, Redis, the JVM, CentOS, and Nginx. The specific tuning process is as follows.

2.1 Memory tuning

Tuning mainly uses UMC to expand the CPU and memory of the master data platform, the ESB, and other applications, adjusting the memory to 4G-8G. For the master data platform:

The ESB is adjusted in the same way:

2.2 System tuning

Tune CentOS by adjusting the sysctl.conf file.

Add the specific parameters, with their descriptions:

Next, make the configuration take effect (for example by running sysctl -p).

2.3 Thread pool tuning

Adjust the thread pool in the server.xml file. Parameter description:

1. maxThreads: the maximum number of threads. Under high concurrency, this is the maximum number of threads Tomcat can create to process requests; requests beyond it are queued in the request queue. The default value is 200;

2. minSpareThreads: the minimum number of idle threads, that is, the number of threads kept alive in any case; they are not reclaimed even after exceeding the maximum idle time. The default value is given here as 4, but it depends on the Tomcat version (recent versions document a Connector default of 10).
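
The queuing behavior described for maxThreads can be illustrated with an analogy. The sketch below uses Python's standard `ThreadPoolExecutor`, not Tomcat: `MAX_THREADS` plays the role of maxThreads, and the function name `handle_request` and the sleep-based workload are made up for the demonstration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_THREADS = 2  # plays the role of maxThreads; kept tiny for the demo

active = 0
peak = 0
lock = threading.Lock()


def handle_request(request_id):
    """Simulate handling one request and track how many run concurrently."""
    global active, peak
    with lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.05)  # pretend to do some work
    with lock:
        active -= 1
    return request_id


# Six requests arrive at once; only MAX_THREADS run at a time, the rest wait in the queue.
with ThreadPoolExecutor(max_workers=MAX_THREADS) as pool:
    results = list(pool.map(handle_request, range(6)))

print(f"handled {len(results)} requests, peak concurrency {peak}")
```

All six requests complete, but the observed peak concurrency never exceeds the pool size, which is the same trade-off maxThreads controls: a larger pool handles more requests in parallel at the cost of more memory and context switching.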

2.4 Redis tuning

Tune Redis by adjusting its configuration file.

Add or modify the following configuration:

The Redis instance node needs to be shut down; the changes take effect after a restart.

Verification method:

Run the "info" command and check that the memory limit has been changed to 5G and that the allkeys-lru policy is enabled.
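
The verification step can also be automated by parsing the text that "info" returns. The sketch below is an assumption-laden illustration: the helper names are made up, and the sample text is hand-written in the shape of the INFO memory section (fields `maxmemory` and `maxmemory_policy`) rather than captured from a real server. Whether "5G" means 5368709120 bytes or 5000000000 bytes depends on whether the configuration used the `gb` or the `g` unit.

```python
def parse_info(text):
    """Parse `key:value` lines of Redis INFO output into a dict, skipping section headers."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        info[key] = value
    return info


def check_memory_settings(info, expected_bytes, expected_policy):
    """Return a list of mismatch messages; an empty list means the settings match."""
    problems = []
    if int(info.get("maxmemory", "0")) != expected_bytes:
        problems.append(f"maxmemory is {info.get('maxmemory')}, expected {expected_bytes}")
    if info.get("maxmemory_policy") != expected_policy:
        problems.append(
            f"maxmemory_policy is {info.get('maxmemory_policy')}, expected {expected_policy}"
        )
    return problems


# Hand-written sample, NOT output from the environment described in this article.
SAMPLE = """# Memory
used_memory:1048576
maxmemory:5368709120
maxmemory_human:5.00G
maxmemory_policy:allkeys-lru
"""

FIVE_GB = 5 * 1024 ** 3  # assumes the limit was configured as 5gb
print(check_memory_settings(parse_info(SAMPLE), FIVE_GB, "allkeys-lru"))
```

In practice the text would come from redis-cli or a client library instead of the `SAMPLE` constant.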

2.5 Nginx tuning

Nginx tuning switches to the epoll event model and adjusts the maximum number of connections, the timeouts, the request header buffer, the request body buffer, and so on.

3 Implementation steps

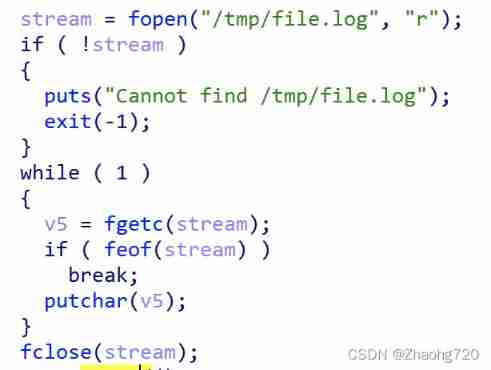

First, create a virtual table to simulate business data. Next, fetch the data page by page through the database reading component. Then insert the data in batches in a loop.

3.1 Simulated data

First, use a MySQL function to insert 10 million records into the database.

When the script is executed and the number of records to insert is entered, the corresponding data is inserted into the database above. The implementation is as follows:

Passing the desired count as max_num inserts that number of records into the database. See the annex for details.
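
The original MySQL function is only available in the annex, so the following is not a reconstruction of it. It is a hedged Python sketch of the same idea (generate max_num made-up rows and load them), using an in-memory SQLite table as a stand-in; the table name `sim_person`, its columns, and the function names are hypothetical.

```python
import random
import sqlite3


def generate_people(max_num, seed=0):
    """Yield `max_num` synthetic (id, name, age) rows; all values are made up."""
    rng = random.Random(seed)
    for i in range(1, max_num + 1):
        yield (i, f"person_{i:08d}", rng.randint(18, 90))


def load_simulated_data(conn, max_num):
    """Create the stand-in table, fill it with generated rows, and return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sim_person (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)"
    )
    with conn:  # commit all rows in one transaction
        conn.executemany("INSERT INTO sim_person VALUES (?, ?, ?)", generate_people(max_num))
    return conn.execute("SELECT COUNT(*) FROM sim_person").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load_simulated_data(conn, max_num=1_000))
```

Using a generator keeps memory use flat regardless of max_num, which matters once the count reaches millions of rows.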

3.2 ESB verification

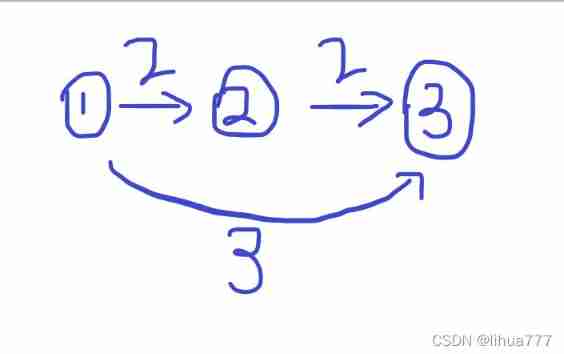

Following the test ideas above, first verify the ESB's data writing capability. The data batch insertion flow chart (taking 1 million records, 100W, as the example):

The process is explained as follows:

1. Data initialization: set an index variable and record the start time of the process;

2. Loop to construct the parameters for 10,000 records;

3. Data insertion component: insert 10,000 records each time;

4. Index+1: increment the index and set the loop condition on the connecting line; looping 1,000 times inserts 10 million records (the 1-million-record case needs 100 iterations);

5. Record the execution time and insert it into the database; the process ends.

Finally, invoke each process directly to execute it.

3.3 Interface validation

Interface verification mainly checks whether the master data synchronization interface supports synchronizing large volumes of data and, if so, how efficient the synchronization is. To verify the master data batch synchronization interface, the process simply replaces the data construction step with a data query component and the data insertion component with the data interface. The specific process is as follows:

The process is explained as follows:

1. Data initialization: set an index variable and record the start time of the process;

2. Query 10,000 (1W) records;

3. Call the master data bulk synchronization interface imp-all-fields;

4. Index+1: increment the index and set the loop condition on the connecting line; looping 1,000 times inserts 10 million records;

5. Record the execution time and insert it into the database; the process ends.

Finally, invoke each process directly to execute it.
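
The query-then-call loop above can be sketched as follows. The real imp-all-fields interface is not shown in this article, so the sketch takes a `send_batch` callable as a stand-in for it; the table name `source_person`, the function names, and the in-memory SQLite source are likewise assumptions for illustration.

```python
import sqlite3
import time


def read_page(conn, index, page_size):
    """Fetch one page of source rows, ordered by id so that pages do not overlap."""
    cur = conn.execute(
        "SELECT id, name FROM source_person ORDER BY id LIMIT ? OFFSET ?",
        (page_size, index * page_size),
    )
    return cur.fetchall()


def sync_all(conn, send_batch, page_size=10_000):
    """Page through the source table and hand each page to `send_batch`."""
    started = time.perf_counter()   # record the start time
    index = 0
    total = 0
    while True:
        rows = read_page(conn, index, page_size)   # query one page of records
        if not rows:
            break
        send_batch(rows)   # stand-in for the call to the bulk synchronization interface
        total += len(rows)
        index += 1         # increment the index and loop
    return index, total, time.perf_counter() - started


# Demo with an in-memory table and a stub that only collects what it receives.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_person (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO source_person VALUES (?, ?)",
    ((i, f"person_{i}") for i in range(2_500)),
)
received = []
pages, total, seconds = sync_all(conn, received.extend, page_size=1_000)
print(f"{pages} pages, {total} rows, {seconds:.3f} s")
```

LIMIT/OFFSET paging is the simplest form to show, but it tends to slow down as the offset grows; on tables with tens of millions of rows, paging by the last seen id is a common alternative.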

3.4 Result comparison

The test results show that calling the master data interface slows data synchronization down, and that the number of database fields also affects synchronization speed. Synchronization efficiency can therefore be improved by optimizing the data synchronization interface.
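
One way to make such a comparison concrete is to convert the recorded times into throughput and a slowdown factor. The helpers below are a sketch with made-up names, and the numbers in the demo lines are placeholders chosen only to show the calculation; they are not measurements from this test.

```python
def throughput(records, seconds):
    """Records processed per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return records / seconds


def interface_overhead(direct_seconds, interface_seconds):
    """How many times slower the interface path is than direct batch insertion."""
    if direct_seconds <= 0:
        raise ValueError("direct_seconds must be positive")
    return interface_seconds / direct_seconds


# Placeholder timings for illustration only; NOT results from the test described here.
direct_s, interface_s = 2.0, 5.0
print(throughput(10_000, direct_s))
print(throughput(10_000, interface_s))
print(interface_overhead(direct_s, interface_s))
```

Running the same calculation for tables with different field counts would separate the cost of the interface call from the cost of wider rows.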

4 Experience

This batch data synchronization test improved my performance tuning skills to a certain extent and also yielded some lessons, summarized below in terms of ways of working, mindset, and technical accumulation.

4.1 How to do things

Recent work has made me realize that you should communicate and interact actively with company leaders and colleagues. When difficulties arise, face them, look for a solution, and solve the problem actively, improving your own technical ability along the way.

4.2 Mindset

Reviewing the existing documents on Nginx, Redis, and so on showed that some of their content needs to be improved. As professional knowledge accumulates, understanding improves as well. When you look back at your earlier work documents, you will find many gaps, and at that point the documents need to be revised again. In this process, comparing your understanding when you first learned the topic with your current understanding shows what your shortcomings were and how to improve your reasoning. Technology and understanding are constantly updated, and so are working documents; through this continuous iteration, your knowledge points gradually connect into a line.

4.3 Technology accumulation

This data synchronization verification improved my Linux system tuning skills to a certain extent. It also exposed problems caused by careless changes to configuration files. Carelessness is not acceptable when writing code, and the same holds for daily work; such problems should be avoided in subsequent study and work.

Linux is the most commonly used system on the server side, and the importance of learning it well is self-evident. As a mainstream system operated mainly through the command line, Linux is not easy to learn well, and mastering it requires a great deal of effort. Only through continuous learning and accumulation can we make our knowledge system more complete.

边栏推荐

- 2022 Xinjiang latest road transportation safety officer simulation examination questions and answers

- Research Report on the overall scale, major manufacturers, major regions, products and application segmentation of rotary tablet presses in the global market in 2022

- Global and Chinese market of liquid antifreeze 2022-2028: Research Report on technology, participants, trends, market size and share

- Implementation of stack

- [raid] [simple DP] mine excavation

- Micro service knowledge sorting - cache technology

- 6006. Take out the minimum number of magic beans

- Global and Chinese markets for medical temperature sensors 2022-2028: Research Report on technology, participants, trends, market size and share

- 2.2 integer

- MySQL dump - exclude some table data - MySQL dump - exclude some table data

猜你喜欢

Shortest path problem of graph theory (acwing template)

![How to read the source code [debug and observe the source code]](/img/0d/6495c5da40ed1282803b25746a3f29.jpg)

How to read the source code [debug and observe the source code]

Wargames study notes -- Leviathan

Example of peanut shell inner net penetration

1.4 learn more about functions

2.3 other data types

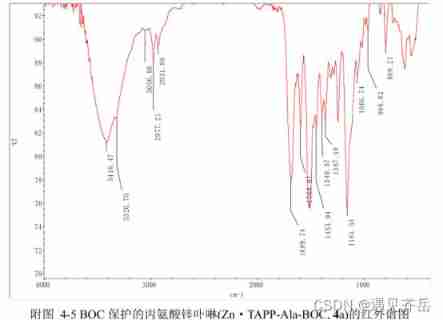

BOC protected alanine zinc porphyrin Zn · TAPP ala BOC / alanine zinc porphyrin Zn · TAPP ala BOC / alanine zinc porphyrin Zn · TAPP ala BOC / alanine zinc porphyrin Zn · TAPP ala BOC supplied by Qiyu

Based on laravel 5.5\5.6\5 X solution to the failure of installing laravel ide helper

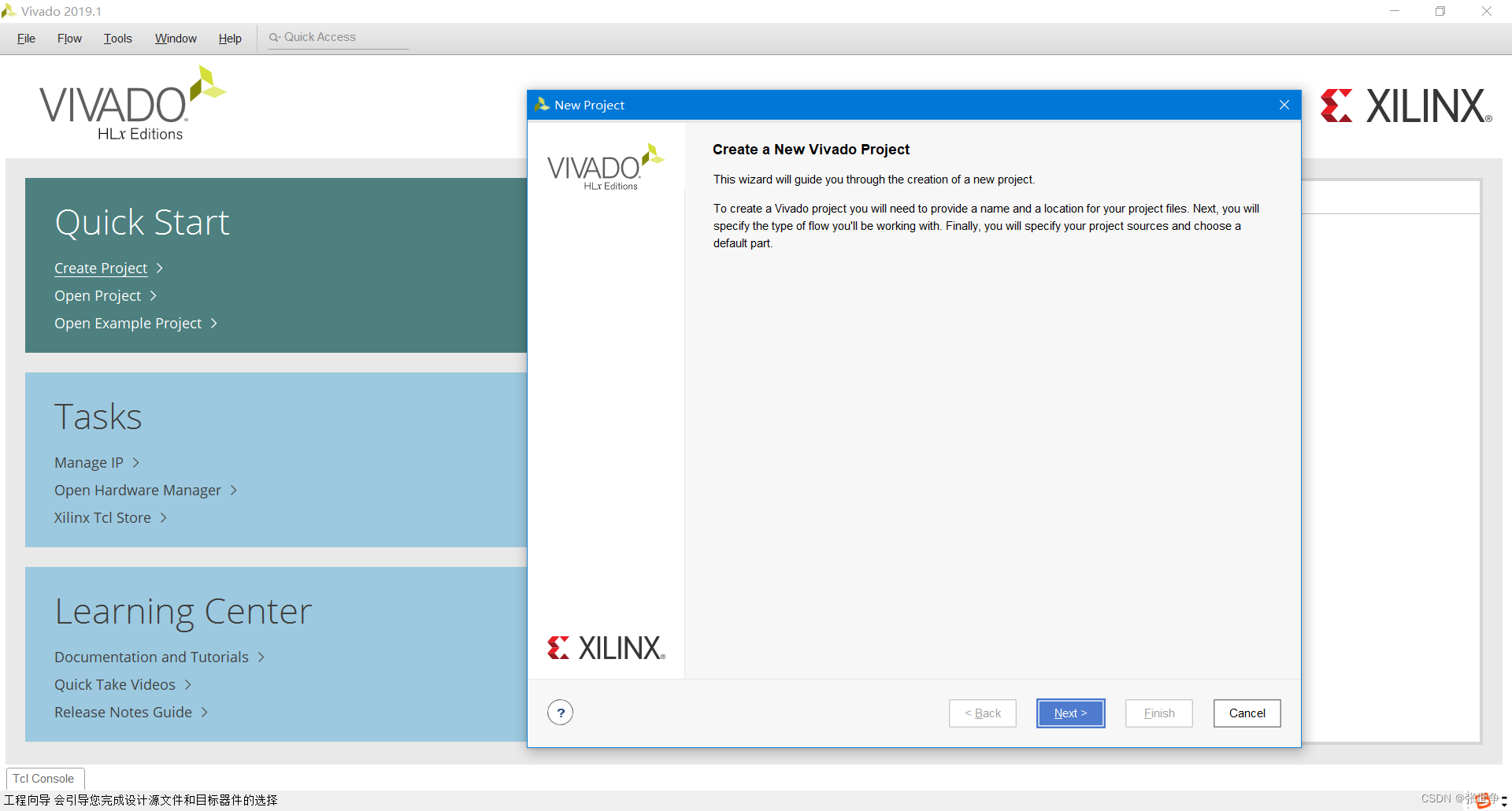

FPGA learning notes: vivado 2019.1 project creation

HCIA-USG Security Policy

随机推荐

PR notes:

unittest框架基本使用

Print linked list from end to end

How can the outside world get values when using nodejs to link MySQL

Based on laravel 5.5\5.6\5 X solution to the failure of installing laravel ide helper

Parental delegation mechanism

1.4 learn more about functions

Upgrade PIP and install Libraries

Titles can only be retrieved in PHP via curl - header only retrieval in PHP via curl

Global and Chinese markets of active matrix LCD 2022-2028: Research Report on technology, participants, trends, market size and share

Deep search DFS + wide search BFS + traversal of trees and graphs + topological sequence (template article acwing)

Promethus

Battle drag method 1: moderately optimistic, build self-confidence (1)

Don't be afraid of no foundation. Zero foundation doesn't need any technology to reinstall the computer system

The simplicity of laravel

Rad+xray vulnerability scanning tool

Global and Chinese market of two in one notebook computers 2022-2028: Research Report on technology, participants, trends, market size and share

Global and Chinese market of rubidium standard 2022-2028: Research Report on technology, participants, trends, market size and share

Q&A:Transformer, Bert, ELMO, GPT, VIT

Virtual machine installation deepin system