Do not insert the attention mechanism directly inside the original backbone network, since that would disrupt the pretrained parameters. It is best to add it after the backbone.

When adding it, pay attention to the number of input channels: the attention module has to be built for the channel count of the feature map it receives.
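A minimal PyTorch sketch of both points, under stated assumptions: the post does not name a specific attention module or backbone, so the `SEBlock` (a squeeze-and-excitation style channel attention), the `BackboneWithAttention` wrapper, and the small `toy_backbone` are all hypothetical stand-ins. In practice the backbone would be a pretrained network, and `out_channels` would be whatever its last feature map produces.

```python
import torch
import torch.nn as nn


class SEBlock(nn.Module):
    """Squeeze-and-excitation style channel attention (one possible choice)."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d(1)  # squeeze: one value per channel
        self.fc = nn.Sequential(
            nn.Linear(channels, channels // reduction),
            nn.ReLU(inplace=True),
            nn.Linear(channels // reduction, channels),
            nn.Sigmoid(),  # per-channel weights in (0, 1)
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, _, _ = x.shape
        weights = self.fc(self.pool(x).view(b, c)).view(b, c, 1, 1)
        return x * weights  # rescale each channel of the feature map


class BackboneWithAttention(nn.Module):
    """Applies attention AFTER the backbone; the backbone's layers are untouched."""

    def __init__(self, backbone: nn.Module, out_channels: int, num_classes: int):
        super().__init__()
        self.backbone = backbone  # pretrained weights keep their names and shapes
        # The attention's channel count must equal the backbone's output channels.
        self.attention = SEBlock(out_channels)
        self.head = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Linear(out_channels, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        feats = self.backbone(x)
        feats = self.attention(feats)
        return self.head(feats)


# Hypothetical stand-in for a pretrained backbone; it outputs 64 channels.
toy_backbone = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),
    nn.ReLU(inplace=True),
    nn.Conv2d(32, 64, kernel_size=3, stride=2, padding=1),
    nn.ReLU(inplace=True),
)

model = BackboneWithAttention(toy_backbone, out_channels=64, num_classes=10)
logits = model(torch.randn(2, 3, 32, 32))
print(logits.shape)  # torch.Size([2, 10])
```

Because the attention block sits outside `self.backbone`, a pretrained checkpoint can still be loaded into the backbone without key or shape mismatches. Building `SEBlock` with a channel count different from the backbone's output would fail at the first linear layer.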


Applying attention to images: a personal understanding

2022-07-06 11:03:00 【Marilyn_ Manson】

边栏推荐

- Global and Chinese market for intravenous catheter sets and accessories 2022-2028: Research Report on technology, participants, trends, market size and share

- Deoldify项目问题——OMP:Error#15:Initializing libiomp5md.dll,but found libiomp5md.dll already initialized.

- Breadth first search rotten orange

- Ansible practical Series II_ Getting started with Playbook

- API learning of OpenGL (2003) gl_ TEXTURE_ WRAP_ S GL_ TEXTURE_ WRAP_ T

- 解决扫描不到xml、yml、properties文件配置

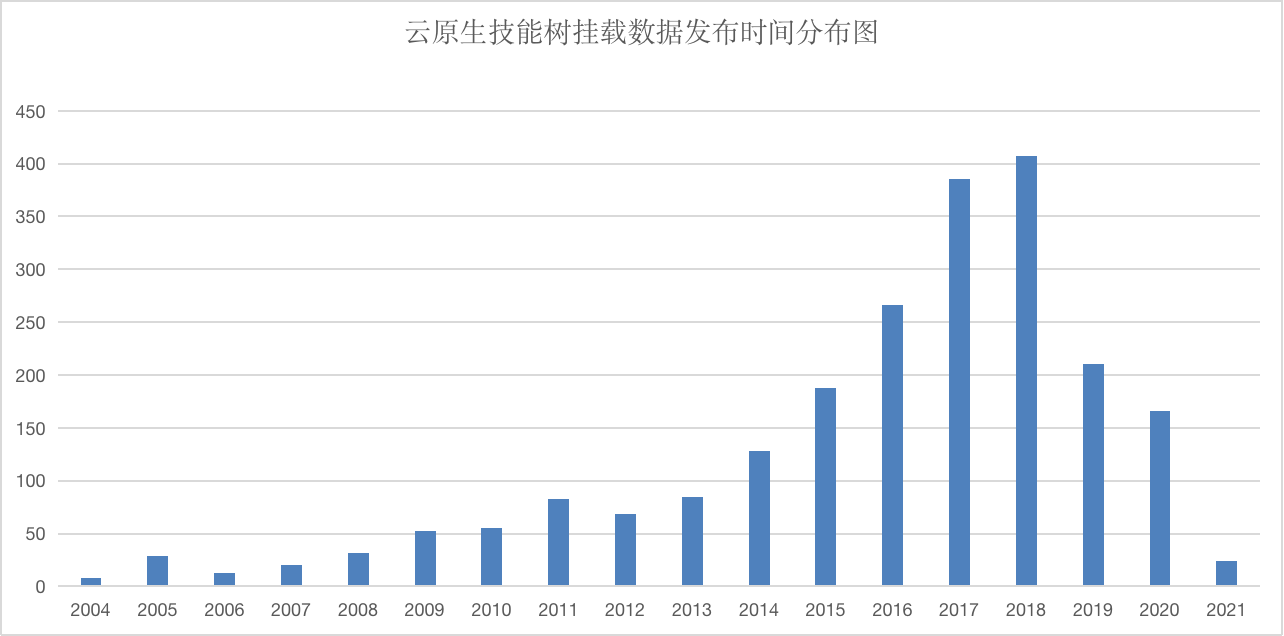

- CSDN问答标签技能树(五) —— 云原生技能树

- Global and Chinese market of thermal mixers 2022-2028: Research Report on technology, participants, trends, market size and share

- Data dictionary in C #

- @controller,@service,@repository,@component区别

猜你喜欢

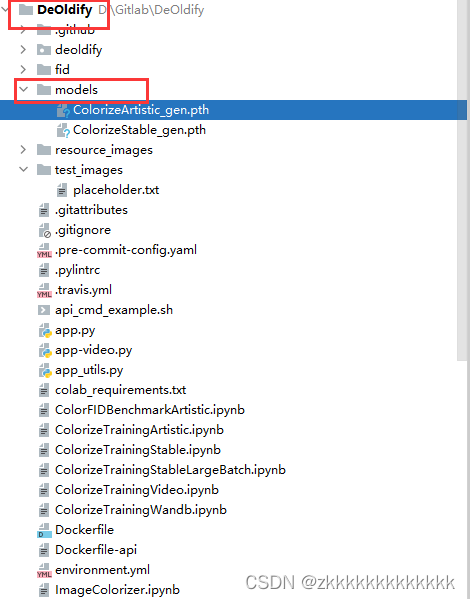

图片上色项目 —— Deoldify

打开浏览器的同时会在主页外同时打开芒果TV,抖音等网站

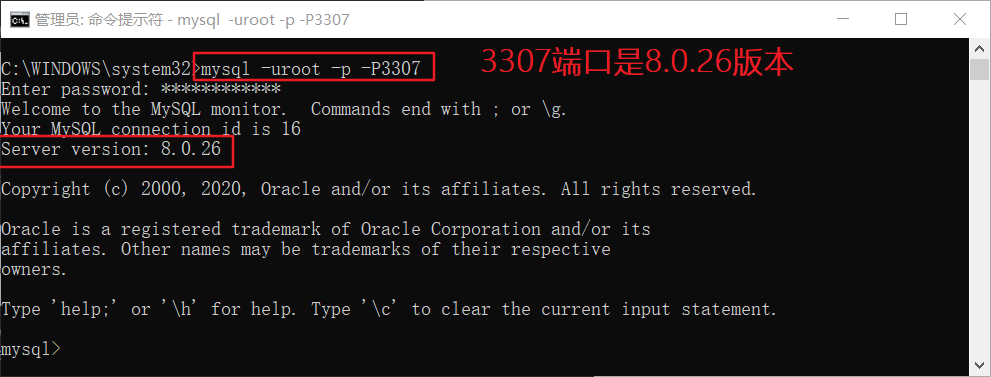

Install mysql5.5 and mysql8.0 under windows at the same time

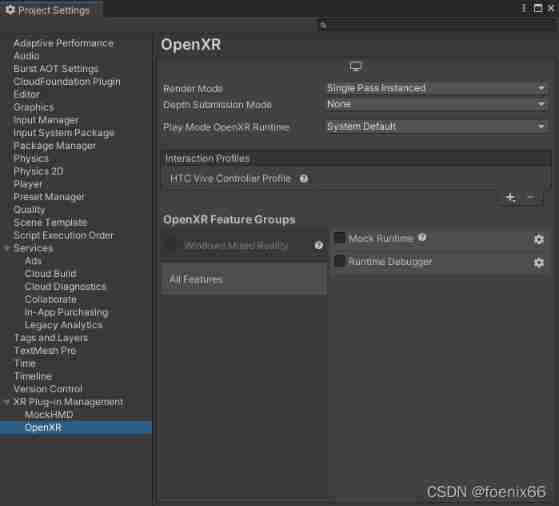

Some problems in the development of unity3d upgraded 2020 VR

CSDN问答标签技能树(五) —— 云原生技能树

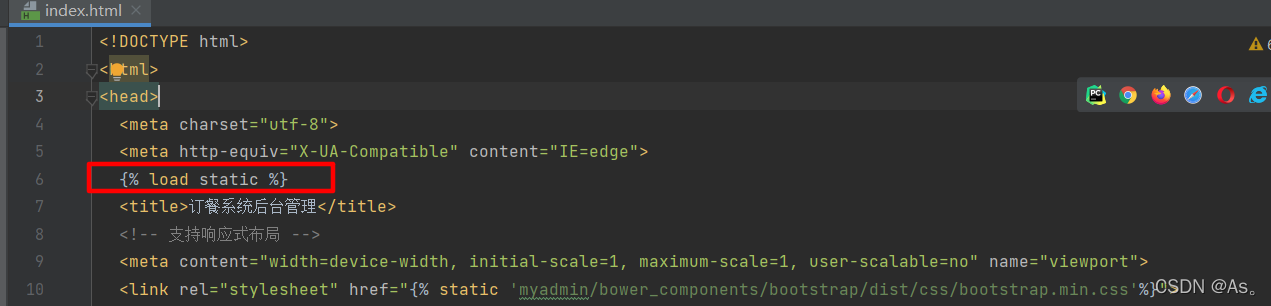

Did you forget to register or load this tag 报错解决方法

【博主推荐】asp.net WebService 后台数据API JSON(附源码)

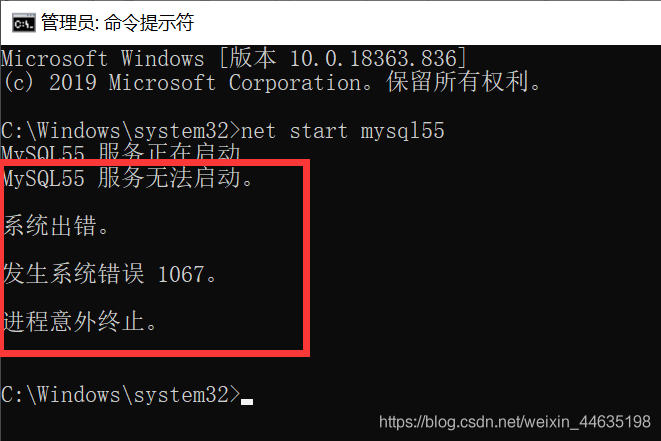

windows无法启动MYSQL服务(位于本地计算机)错误1067进程意外终止

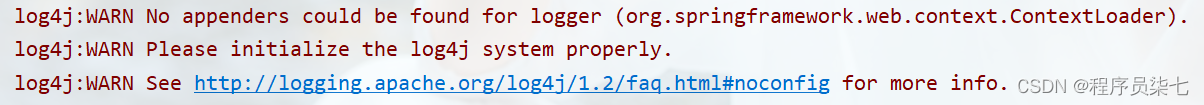

解决:log4j:WARN Please initialize the log4j system properly.

MySQL master-slave replication, read-write separation

随机推荐

JDBC原理

[untitled]

CSDN问答模块标题推荐任务(二) —— 效果优化

CSDN Q & a tag skill tree (V) -- cloud native skill tree

[C language foundation] 04 judgment and circulation

导入 SQL 时出现 Invalid default value for ‘create_time‘ 报错解决方法

Mysql 其他主机无法连接本地数据库

基于apache-jena的知识问答

Mysql26 use of performance analysis tools

SSM integrated notes easy to understand version

Some notes of MySQL

LeetCode #461 汉明距离

【博主推荐】asp.net WebService 后台数据API JSON(附源码)

Csdn-nlp: difficulty level classification of blog posts based on skill tree and weak supervised learning (I)

A trip to Macao - > see the world from a non line city to Macao

CSDN markdown editor

Breadth first search rotten orange

MySQL master-slave replication, read-write separation

February 13, 2022-3-middle order traversal of binary tree

CSDN question and answer module Title Recommendation task (II) -- effect optimization