
EMQTT distributed cluster and node bridge setup

2022-07-06 15:01:00 【gmHappy】

Contents

Setting up node [email protected]

Setting up node [email protected]

Leaving the cluster

Node discovery and automatic clustering

manual: creating a cluster manually

static: automatic clustering from a static node list

mcast: automatic clustering via multicast

dns: automatic clustering via DNS A records

etcd: automatic clustering via etcd

k8s: automatic clustering via Kubernetes

Network partition (split brain) and autoheal

Automatic removal of cluster nodes

Cross-node sessions (Session)

NGINX Plus -> EMQ cluster

EMQ node bridge configuration

Distributed cluster

Suppose a cluster is deployed on two servers, s1.emqtt.io and s2.emqtt.io:

| Node name | Host name (FQDN) | IP address |
|---|---|---|
| [email protected] or [email protected] | s1.emqtt.io | 192.168.0.10 |
| [email protected] or [email protected] | s2.emqtt.io | 192.168.0.20 |

Warning

Node name format: [email protected], where Host must be an IP address or an FQDN (hostname.domain).
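The naming rule above can be checked before a node is started. The following is a minimal Python sketch that is not part of EMQ; the function name `is_valid_node_name`, the example node names, and the reading of "FQDN" as "at least two dot-separated labels" are all assumptions made for illustration.

```python
import ipaddress
import re

# One DNS label: letters, digits and hyphens, not starting or ending with a hyphen.
_LABEL = re.compile(r"^[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?$")


def is_valid_node_name(node: str) -> bool:
    """Hypothetical check of the Name@Host rule: Host must be an IP address or an FQDN."""
    name, sep, host = node.partition("@")
    if not sep or not name or not host:
        return False
    try:
        ipaddress.ip_address(host)  # accepts IPv4 or IPv6 literals
        return True
    except ValueError:
        pass
    labels = host.split(".")
    # Assumption: an FQDN here means hostname.domain, i.e. at least two labels.
    return len(labels) >= 2 and all(_LABEL.match(label) for label in labels)
```

For example, `is_valid_node_name("mynode@s1.emqtt.io")` and `is_valid_node_name("mynode@192.168.0.10")` return `True`, while a bare host name such as `mynode@localhost` is rejected.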

Setting up node [email protected]

emqttd/etc/emq.conf:


You can also use an environment variable:

export [email protected] && ./bin/emqttd start


Warning

Once a node has started and joined the cluster, its node name cannot be changed.

Setting up node [email protected]

emqttd/etc/emq.conf:


Joining nodes to the cluster

After both nodes have started,

run on [email protected]:

$ ./bin/emqttd_ctl cluster join [email protected]

Join the cluster successfully.

Cluster status: [{running_nodes,['[email protected]','[email protected]']}]


Or, run on [email protected]:

$ ./bin/emqttd_ctl cluster join [email protected]

Join the cluster successfully.

Cluster status: [{running_nodes,['[email protected]','[email protected]']}]


Query the cluster status on any node:

$ ./bin/emqttd_ctl cluster status

Cluster status: [{running_nodes,['[email protected]','[email protected]']}]
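When the cluster status is checked from a script, the `running_nodes` list can be pulled out of the command output. This is a minimal Python sketch that assumes the output format shown above (each node printed as a single-quoted Erlang atom); `running_nodes` is a hypothetical helper name, and the node names in the example are placeholders.

```python
import re
from typing import List


def running_nodes(status_line: str) -> List[str]:
    """Extract node names from the running_nodes entry of `emqttd_ctl cluster status` output.

    Assumes the format shown above, e.g. [{running_nodes,['a@host1','b@host2']}].
    """
    match = re.search(r"\{running_nodes,\[(.*?)\]\}", status_line)
    if match is None:
        return []
    # Each element looks like 'name@host'; collect the text between the quotes.
    return re.findall(r"'([^']*)'", match.group(1))
```

For example, `running_nodes("Cluster status: [{running_nodes,['n1@s1.emqtt.io','n2@s2.emqtt.io']}]")` returns `['n1@s1.emqtt.io', 'n2@s2.emqtt.io']`.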


Leaving the cluster

There are two ways for a node to leave the cluster:

- leave: the local node leaves the cluster

- remove: remove another node from the cluster

[email protected] leaves the cluster on its own:

$ ./bin/emqttd_ctl cluster leave


Or, on node [email protected], remove node [email protected] from the cluster:

$ ./bin/emqttd_ctl cluster remove [email protected]


Node discovery and automatic clustering

EMQ R2.3 supports automatic cluster discovery (Autocluster) based on the Ekka library. Ekka is a cluster management library for Erlang/OTP applications. It supports Erlang node discovery (Discovery), automatic clustering (Autocluster), automatic healing of network partitions (Network Partition Autoheal), and automatic removal of down nodes (Autoclean).

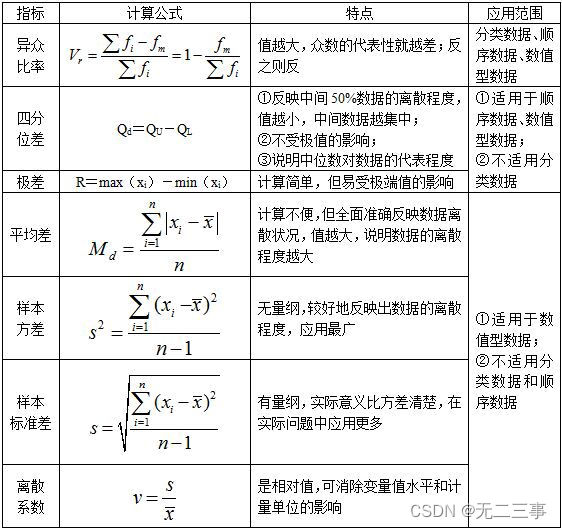

EMQ R2.3 supports several strategies for discovering nodes and creating a cluster automatically:

| Strategy | Description |
|---|---|
| manual | Create the cluster manually with commands |
| static | Automatic clustering from a static node list |
| mcast | Automatic clustering via UDP multicast |
| dns | Automatic clustering via DNS A records |
| etcd | Automatic clustering via etcd |
| k8s | Automatic clustering via Kubernetes services |

manual: creating a cluster manually

By default the cluster is created manually, and nodes join it with the ./bin/emqttd_ctl cluster join <Node> command:

cluster.discovery = manual


static: automatic clustering from a static node list

Configure a fixed list of nodes; the nodes discover each other and form the cluster automatically:

cluster.discovery = static

##--------------------------------------------------------------------

## Cluster with static node list

cluster.static.seeds = [email protected],[email protected]
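Conceptually, every node reads the same seed list and tries to join the other nodes in it. The Python sketch below only illustrates that selection step under this assumption; it is not Ekka's code, and `parse_seeds` and `peers_to_join` are hypothetical helper names.

```python
from typing import List


def parse_seeds(value: str) -> List[str]:
    """Split a comma-separated cluster.static.seeds value into node names."""
    return [seed.strip() for seed in value.split(",") if seed.strip()]


def peers_to_join(seeds_value: str, self_node: str) -> List[str]:
    """Hypothetical helper: the seed nodes this node would try to join (everything except itself)."""
    return [node for node in parse_seeds(seeds_value) if node != self_node]
```

With placeholder names, `peers_to_join("a@s1.emqtt.io, b@s2.emqtt.io", "a@s1.emqtt.io")` returns `['b@s2.emqtt.io']`.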


mcast: automatic clustering via multicast

Discover nodes and create the cluster automatically via UDP multicast:

cluster.discovery = mcast

##--------------------------------------------------------------------

## Cluster with multicast

cluster.mcast.addr = 239.192.0.1

cluster.mcast.ports = 4369,4370

cluster.mcast.iface = 0.0.0.0

cluster.mcast.ttl = 255

cluster.mcast.loop = on
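To show what the `ttl` and `loop` settings mean at the socket level, here is a minimal Python sketch that configures a UDP socket the same way. It is an illustration only: Ekka's multicast discovery is written in Erlang, `make_discovery_socket` is a hypothetical name, and the ports and interface settings are not covered.

```python
import ipaddress
import socket


def make_discovery_socket(group: str = "239.192.0.1", ttl: int = 255, loop: bool = True) -> socket.socket:
    """Create a UDP socket configured like cluster.mcast.ttl / cluster.mcast.loop (illustrative only)."""
    if not ipaddress.ip_address(group).is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # TTL limits how many router hops a multicast datagram may cross.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    # Loopback controls whether this host also receives its own multicast datagrams.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_LOOP, 1 if loop else 0)
    return sock
```

A TTL of 255 lets discovery packets cross many routed hops; in a single LAN segment a small TTL is usually enough.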


dns: automatic clustering via DNS A records

Discover nodes and create the cluster automatically from DNS A records:

cluster.discovery = dns

##--------------------------------------------------------------------

## Cluster with DNS

cluster.dns.name = localhost

cluster.dns.app = ekka
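A minimal Python sketch of the idea behind `cluster.dns.name` and `cluster.dns.app`, assuming (as the `app` setting suggests) that each IPv4 address in the A records is combined with the app name into an `app@IP` node name. This is not Ekka's code, and `dns_node_names` is a hypothetical helper.

```python
import socket
from typing import List


def dns_node_names(name: str, app: str) -> List[str]:
    """Resolve IPv4 (A record) addresses for `name` and turn each into an app@ip node name.

    Illustrative sketch of the cluster.dns.name / cluster.dns.app idea, not Ekka's code.
    """
    infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
    # getaddrinfo may return duplicates; keep first-seen order while de-duplicating.
    ips = list(dict.fromkeys(info[4][0] for info in infos))
    return [f"{app}@{ip}" for ip in ips]
```

Under that assumption, with `cluster.dns.name = localhost` resolving to 127.0.0.1 and `cluster.dns.app = ekka`, the result would be `['ekka@127.0.0.1']`.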


etcd: automatic clustering via etcd

Discover nodes and create the cluster automatically through etcd:

cluster.discovery = etcd

##--------------------------------------------------------------------

## Cluster with Etcd

cluster.etcd.server = http://127.0.0.1:2379

cluster.etcd.prefix = emqcl

cluster.etcd.node_ttl = 1m
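The `node_ttl` setting suggests that each node registers itself under the configured prefix with a time-to-live, so a node that stops refreshing its key disappears from the list. The Python sketch below simulates that behaviour in memory; it uses no real etcd client, and the class name `TtlRegistry` and the key layout `{prefix}/nodes/{node}` are hypothetical.

```python
from typing import Dict, List


class TtlRegistry:
    """In-memory stand-in for etcd keys with a TTL (hypothetical; no real etcd client is used)."""

    def __init__(self, prefix: str, ttl_seconds: float) -> None:
        self.prefix = prefix
        self.ttl = ttl_seconds
        self._expires: Dict[str, float] = {}

    def register(self, node: str, now: float) -> None:
        # Each (re-)registration pushes the key's expiry forward by one TTL.
        self._expires[f"{self.prefix}/nodes/{node}"] = now + self.ttl

    def alive_nodes(self, now: float) -> List[str]:
        # Keys whose TTL has passed are treated as deleted, as etcd would do.
        key_prefix = f"{self.prefix}/nodes/"
        return sorted(
            key[len(key_prefix):]
            for key, expires in self._expires.items()
            if expires > now
        )
```

With `TtlRegistry("emqcl", 60)` (matching `node_ttl = 1m`), a node registered at t=0 and never refreshed is gone from `alive_nodes` at t=70, while a node that re-registered at t=50 is still listed.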


k8s: automatic clustering via Kubernetes

Discover nodes and create the cluster automatically through Kubernetes:

cluster.discovery = k8s

##--------------------------------------------------------------------

## Cluster with k8s

cluster.k8s.apiserver = http://10.110.111.204:8080

cluster.k8s.service_name = ekka

## Address Type: ip | dns

cluster.k8s.address_type = ip

## The Erlang application name

cluster.k8s.app_name = ekka
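A minimal Python sketch of how node names could be derived with `address_type = ip`, assuming discovery reads the Endpoints object of the service named by `cluster.k8s.service_name` from the API server. That assumption, and the helper name `k8s_node_names`, are illustrative; fetching the object from the API server is not shown.

```python
from typing import Any, Dict, List


def k8s_node_names(endpoints: Dict[str, Any], app_name: str) -> List[str]:
    """Build app_name@ip node names from a decoded Kubernetes Endpoints object (address_type = ip).

    Only subsets[].addresses[].ip is read; everything else in the object is ignored.
    """
    names = []
    for subset in endpoints.get("subsets", []):
        for address in subset.get("addresses", []):
            names.append(f"{app_name}@{address['ip']}")
    return names
```

For an Endpoints object whose addresses are 10.0.0.5 and 10.0.0.6, and `cluster.k8s.app_name = ekka`, this yields `['ekka@10.0.0.5', 'ekka@10.0.0.6']`.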


Network partition (split brain) and autoheal

EMQ R2.3 officially supports automatic recovery from network partitions, also called split brain (Network Partition Autoheal):

cluster.autoheal = on


Autoheal process after a network partition:

- A node confirms the partition 3 seconds after receiving an inconsistent_database event from the Mnesia database;

- After confirming the partition, the node reports it to the Leader node (the node that started earliest in the cluster);

- After a delay, once all nodes are online, the Leader node creates a view of the partition (SplitView);

- The Leader node picks a Coordinator node for the heal from the majority partition;

- The Coordinator node restarts the nodes in the minority partition so that they rejoin the cluster.
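The majority/minority decision in the last two steps can be sketched as follows. This is a toy Python model, not Ekka's algorithm: the tie-breaking rule (largest partition, then the lexicographically smaller node list) and the choice of the alphabetically first node as Coordinator are hypothetical, and `plan_autoheal` is an illustrative name.

```python
from typing import List, Tuple


def plan_autoheal(split_view: List[List[str]]) -> Tuple[str, List[str]]:
    """Return (coordinator, nodes_to_restart) for a partitioned cluster.

    Hypothetical rules for illustration: the largest partition is the majority
    (ties broken by the sorted node list), the coordinator is its
    alphabetically first node, and every node outside the majority is restarted.
    """
    partitions = [sorted(p) for p in split_view if p]
    if not partitions:
        raise ValueError("split view is empty")
    majority = sorted(partitions, key=lambda p: (-len(p), p))[0]
    coordinator = majority[0]
    minority = sorted(node for p in partitions if p is not majority for node in p)
    return coordinator, minority
```

For a split view of `[["n3@h", "n1@h"], ["n2@h"]]`, the sketch picks `n1@h` as Coordinator and restarts `n2@h`.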

Automatic removal of cluster nodes

EMQ R2.3 supports automatically removing down nodes from the cluster (Autoclean):

cluster.autoclean = 5m
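Reading `cluster.autoclean = 5m` as "remove a node once it has been down for 5 minutes", the check can be sketched in Python as below. The duration units accepted by `parse_duration` (s, m, h, d) and the helper names are assumptions for illustration, not EMQ's parser.

```python
import re
from typing import Dict, List

_UNITS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def parse_duration(value: str) -> int:
    """Convert a duration such as '5m' or '30s' into seconds (assumed unit set: s, m, h, d)."""
    match = re.fullmatch(r"\s*(\d+)\s*([smhd])\s*", value)
    if match is None:
        raise ValueError(f"unsupported duration: {value!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]


def nodes_to_clean(down_since: Dict[str, float], now: float, autoclean: str = "5m") -> List[str]:
    """Return nodes that have been down for at least the autoclean interval."""
    limit = parse_duration(autoclean)
    return sorted(node for node, since in down_since.items() if now - since >= limit)
```

For example, at `now=300` a node down since t=0 is selected, while one down since t=200 is kept.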


Cross-node sessions (Session)

In EMQ cluster mode, persistent MQTT sessions (Session) work across nodes.

For example, take two load-balanced cluster nodes, node1 and node2. An MQTT client first connects to node1, which creates a persistent session. When the client disconnects and reconnects to node2, the MQTT connection lives on node2 while the persistent session remains on node1:

                                     node1
                                  -----------
                              |-->| session |
                              |   -----------
                 node2        |
             --------------   |
    client-->| connection |<--|
             --------------

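The split between "where the connection is" and "where the session is" can be modelled with two lookup tables. This Python toy model is illustrative only and does not reflect EMQ's internal data structures; the class name `Cluster` and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Cluster:
    """Toy model of a session registry shared by all nodes (illustrative, not EMQ's data structures)."""

    # client_id -> name of the node that holds the persistent session
    session_owner: Dict[str, str] = field(default_factory=dict)
    # client_id -> name of the node that currently holds the connection
    connection_node: Dict[str, str] = field(default_factory=dict)

    def connect(self, client_id: str, node: str) -> str:
        """Attach a client to `node` and return the node that owns its persistent session."""
        self.connection_node[client_id] = node
        # The first connection creates the session locally; later connections reuse it wherever it is.
        return self.session_owner.setdefault(client_id, node)
```

Connecting a client to node1 and then to node2 returns `node1` both times, while `connection_node` records node2 for the second connection, mirroring the diagram.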

Firewall settings

If there is a firewall between cluster nodes, it must allow port 4369 and a range of TCP ports. Port 4369 is used by the epmd port mapper service; the TCP port range is used to establish connections and exchange traffic between nodes.

Once the firewall is set up, configure EMQ to use the same port range in the emqttd/etc/emq.conf file:

## Distributed node port range

node.dist_listen_min = 6369

node.dist_listen_max = 7369
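A small Python sketch that turns the settings above into firewall rules, assuming iptables is the firewall in use. The generated rules accept traffic from any source on the INPUT chain; restricting them to the cluster subnet is a deployment choice left out here, and `firewall_rules` is a hypothetical helper.

```python
from typing import List

EPMD_PORT = 4369  # epmd port mapper, as described above


def firewall_rules(dist_min: int = 6369, dist_max: int = 7369) -> List[str]:
    """Build iptables rules that open epmd and the distributed-node TCP port range.

    A sketch only: chain, interfaces and source restrictions are deployment choices.
    """
    if not 0 < dist_min <= dist_max <= 65535:
        raise ValueError("invalid port range")
    return [
        f"iptables -A INPUT -p tcp --dport {EPMD_PORT} -j ACCEPT",
        f"iptables -A INPUT -p tcp --dport {dist_min}:{dist_max} -j ACCEPT",
    ]
```

The range passed in must match `node.dist_listen_min` and `node.dist_listen_max`, otherwise nodes pick ports the firewall blocks.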


Consistent hashing and DHT

Distributed designs in the NoSQL database field mostly use consistent hashing or a DHT. The EMQ message server cluster architecture can support tens of millions of routes; larger clusters can partition the routing table using consistent hashing, a DHT, or sharding.
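To make "partition the routing table with consistent hashing" concrete, here is a generic consistent-hash ring in Python with virtual nodes. It is a textbook sketch, not EMQ's router; the class name `HashRing`, the MD5-based hash, and the choice of 100 virtual nodes per node are assumptions.

```python
import bisect
import hashlib
from typing import List, Tuple


class HashRing:
    """Minimal consistent-hash ring with virtual nodes (a generic sketch, not EMQ's router)."""

    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self._ring: List[Tuple[int, str]] = []
        for node in nodes:
            for i in range(vnodes):
                self._ring.append((self._hash(f"{node}#{i}"), node))
        self._ring.sort()
        self._keys = [h for h, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        # Stable 64-bit hash taken from MD5 so placement is identical across processes.
        return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")

    def node_for(self, topic: str) -> str:
        """Return the node responsible for `topic`: the first ring point clockwise from its hash."""
        if not self._ring:
            raise ValueError("ring is empty")
        idx = bisect.bisect(self._keys, self._hash(topic)) % len(self._ring)
        return self._ring[idx][1]
```

The property that matters for scaling: when a node is added, only the topics whose ring position now falls on one of the new node's points move, and they all move to the new node; every other route stays where it was.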

Load balancing

HAProxy -> EMQ cluster

HAProxy acts as the load balancer (LB) in front of an EMQ cluster and terminates SSL connections:

- Create the EMQ cluster nodes, for example:

| Node | IP address |
|---|---|
| emq1 | 192.168.0.2 |
| emq2 | 192.168.0.3 |

- Configure /etc/haproxy/haproxy.cfg, for example:

    listen mqtt-ssl
        bind *:8883 ssl crt /etc/ssl/emqttd/emq.pem no-sslv3
        mode tcp
        maxconn 50000
        timeout client 600s
        default_backend emq_cluster

    backend emq_cluster
        mode tcp
        balance source
        timeout server 50s
        timeout check 5000
        server emq1 192.168.0.2:1883 check inter 10000 fall 2 rise 5 weight 1
        server emq2 192.168.0.3:1883 check inter 10000 fall 2 rise 5 weight 1
        source 0.0.0.0 usesrc clientip
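`balance source` hashes the client's source address so that the same client keeps landing on the same EMQ node. The Python sketch below shows the idea with a SHA-1 based modulo mapping; HAProxy's actual hash function differs, and `pick_backend` is a hypothetical name.

```python
import hashlib
import ipaddress
from typing import List


def pick_backend(client_ip: str, backends: List[str]) -> str:
    """Map a client IP to one backend so the same client always lands on the same node.

    Illustrates the idea behind `balance source`; HAProxy's actual hash function differs.
    """
    if not backends:
        raise ValueError("no backends")
    packed = ipaddress.ip_address(client_ip).packed
    digest = int.from_bytes(hashlib.sha1(packed).digest()[:4], "big")
    return backends[digest % len(backends)]
```

With a plain modulo mapping like this one, changing the number of backends remaps most clients, which is why source hashing is often paired with consistent hashing.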

Official documentation: http://cbonte.github.io/haproxy-dconv/1.8/intro.html

NGINX Plus -> EMQ cluster

NGINX Plus acts as the load balancer (LB) for an EMQ cluster and terminates SSL connections:

- Register for an NGINX Plus trial and install it on Ubuntu: https://cs.nginx.com/repo_setup

- Create the EMQ node cluster, for example:

| Node | IP address |
|---|---|
| emq1 | 192.168.0.2 |
| emq2 | 192.168.0.3 |

- Configure /etc/nginx/nginx.conf, for example:

    stream {
        # Example configuration for TCP load balancing
        upstream stream_backend {
            zone tcp_servers 64k;
            hash $remote_addr;
            server 192.168.0.2:1883 max_fails=2 fail_timeout=30s;
            server 192.168.0.3:1883 max_fails=2 fail_timeout=30s;
        }
        server {
            listen 8883 ssl;
            status_zone tcp_server;
            proxy_pass stream_backend;
            proxy_buffer_size 4k;
            ssl_handshake_timeout 15s;
            ssl_certificate /etc/emqttd/certs/cert.pem;
            ssl_certificate_key /etc/emqttd/certs/key.pem;
        }
    }

Official documentation: https://cs.nginx.com/repo_setup

Node bridging (Bridge)

The EMQ message server supports interconnecting multiple nodes in bridge mode:

                      ---------                     ---------                     ---------
    Publisher --> | Node1 | --Bridge Forward--> | Node2 | --Bridge Forward--> | Node3 | --> Subscriber
                      ---------                     ---------                     ---------

Bridging differs from clustering: bridged nodes do not replicate the topic tree or routing table; they only forward the MQTT messages that match the bridge rules.
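Whether a message is forwarded depends on whether its topic matches the bridge's topic filter (for example `sensor/#`). The Python sketch below implements standard MQTT topic-filter matching with the `+` and `#` wildcards as defined in the MQTT specification; it is a generic illustration, not EMQ's matching code.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches an MQTT topic filter with + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Topics starting with '$' (such as $SYS/...) are not matched by a leading wildcard.
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' must be the last level; it matches the parent level and everything below it.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)
```

With the bridge rule `sensor/#`, messages on `sensor/test` (and on `sensor` itself) are forwarded, while `device/test` is not.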

EMQ Node bridging configuration

Suppose you create two EMQ nodes and a bridge that forwards all messages on sensor topics (sensor/#):

| Directory | Node | MQTT port |
|---|---|---|
| emqttd1 | | 1883 |
| emqttd2 | | 2883 |

Start the emqttd1 and emqttd2 nodes:

cd emqttd1/ && ./bin/emqttd start

cd emqttd2/ && ./bin/emqttd start


On the emqttd1 node, create a bridge to emqttd2:

$ ./bin/emqttd_ctl bridges start [email protected] sensor/#

bridge is started.

$ ./bin/emqttd_ctl bridges list

bridge: [email protected]/#-->[email protected]


Test the emqttd1 --sensor/#--> emqttd2 bridge:

#A connects to the emqttd2 node and subscribes to the sensor/# topic

#B connects to the emqttd1 node and publishes to the sensor/test topic

#A receives the message on sensor/test


Stop (delete) the bridge:

./bin/emqttd_ctl bridges stop [email protected] sensor/#


边栏推荐

- STC-B学习板蜂鸣器播放音乐

- Face and eye recognition based on OpenCV's own model

- Expanded polystyrene (EPS) global and Chinese markets 2022-2028: technology, participants, trends, market size and share Research Report

- Wang Shuang's detailed learning notes of assembly language II: registers

- Global and Chinese markets of electronic grade hexafluorobutadiene (C4F6) 2022-2028: Research Report on technology, participants, trends, market size and share

- 王爽汇编语言学习详细笔记一:基础知识

- 1.支付系统

- If the position is absolute, touchablehighlight cannot be clicked - touchablehighlight not clickable if position absolute

- Keil5 MDK's formatting code tool and adding shortcuts

- Global and Chinese markets for complex programmable logic devices 2022-2028: Research Report on technology, participants, trends, market size and share

猜你喜欢

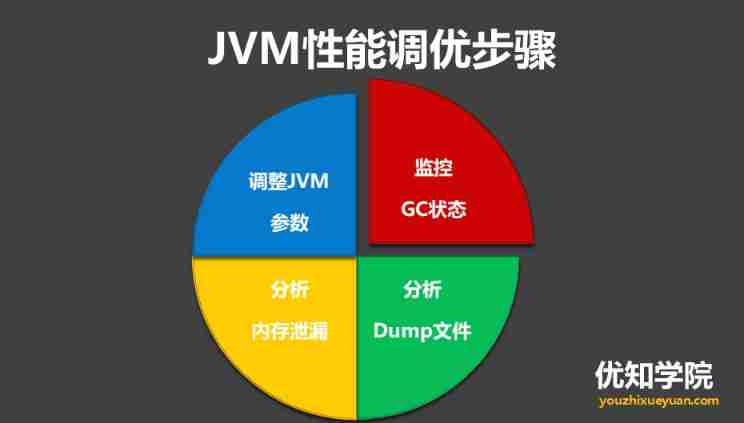

High concurrency programming series: 6 steps of JVM performance tuning and detailed explanation of key tuning parameters

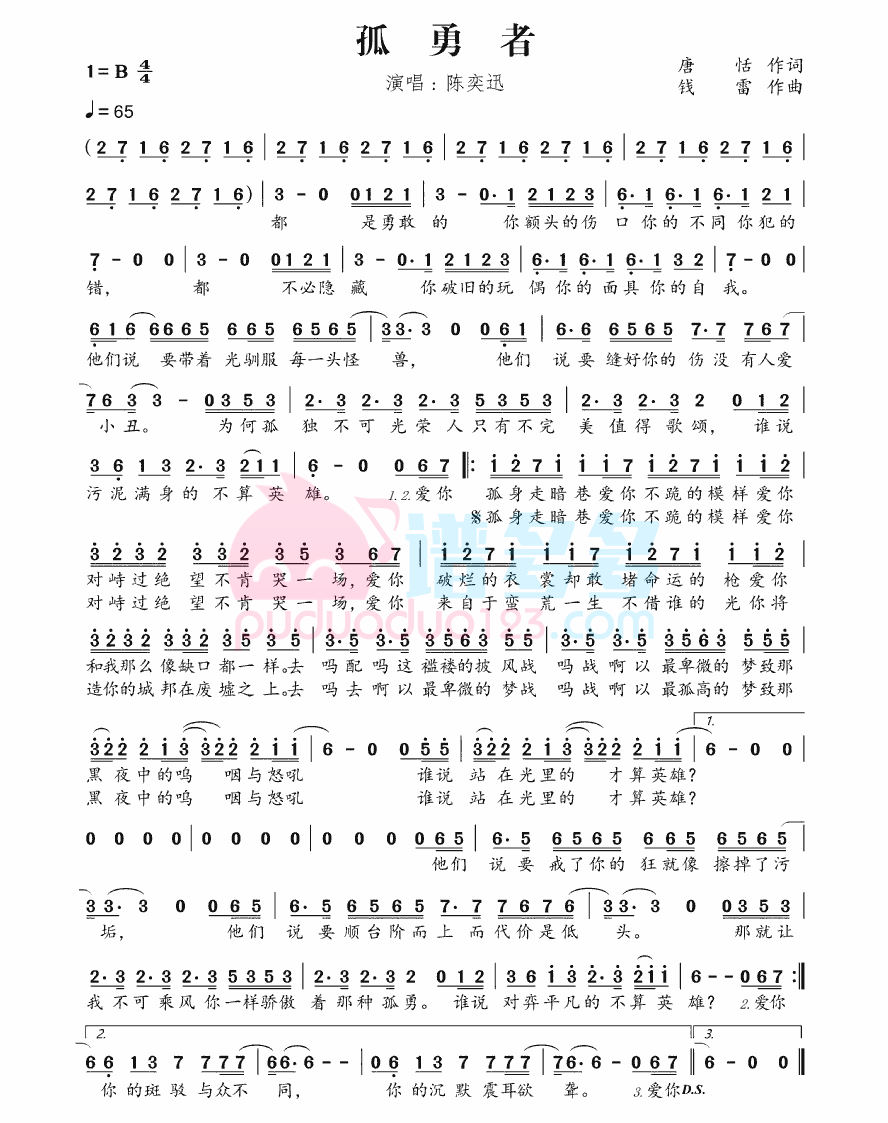

STC-B学习板蜂鸣器播放音乐

Don't you even look at such a detailed and comprehensive written software test question?

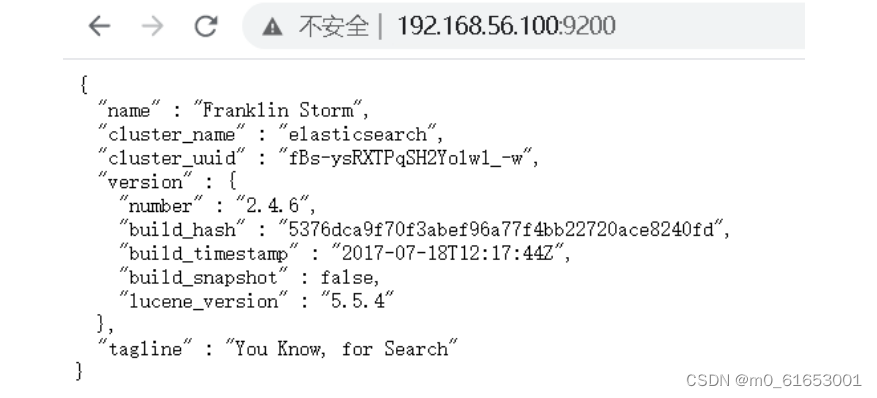

Es full text index

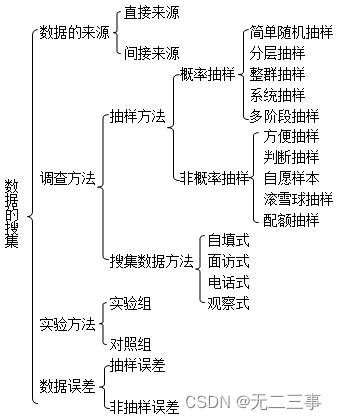

Statistics 8th Edition Jia Junping Chapter 2 after class exercises and answer summary

Statistics 8th Edition Jia Junping Chapter 4 Summary and after class exercise answers

STC-B学习板蜂鸣器播放音乐2.0

CSAPP家庭作業答案7 8 9章

MySQL中什么是索引?常用的索引有哪些种类?索引在什么情况下会失效?

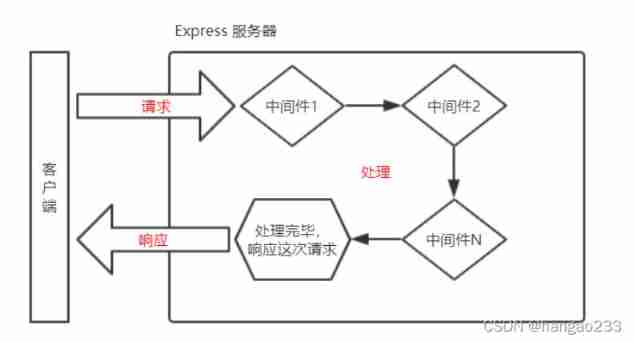

Express

随机推荐

Function: string storage in reverse order

"If life is just like the first sight" -- risc-v

关于交换a和b的值的四种方法

5分钟掌握机器学习鸢尾花逻辑回归分类

Fundamentals of digital circuit (IV) data distributor, data selector and numerical comparator

JDBC 的四种连接方式 直接上代码

Transplant hummingbird e203 core to Da Vinci pro35t [Jichuang xinlai risc-v Cup] (I)

[pointer] the array is stored in reverse order and output

Pointer -- output all characters in the string in reverse order

[pointer] counts the number of times one string appears in another string

指针 --按字符串相反次序输出其中的所有字符

Query method of database multi table link

基于485总线的评分系统双机实验报告

C language learning summary (I) (under update)

The common methods of servlet context, session and request objects and the scope of storing data in servlet.

5 minutes to master machine learning iris logical regression classification

Mysql的事务是什么?什么是脏读,什么是幻读?不可重复读?

Réponses aux devoirs du csapp 7 8 9

Global and Chinese markets of Iam security services 2022-2028: Research Report on technology, participants, trends, market size and share

Global and Chinese market of barrier thin film flexible electronics 2022-2028: Research Report on technology, participants, trends, market size and share