当前位置:网站首页>GFS distributed file system

GFS distributed file system

2022-07-05 02:29:00 【No such person found 0330】

Catalog

1、GlusterFS brief introduction

Scalability and high performance

Glusterd( Background management process )

7、GlusterFS The type of volume

Distributed volumes (Distribute volume)

Distributed replication volumes

8、 Deploy GlusterFS to cluster around

Turn off firewall ( all node node )

Disk partition , And mount ( all node node )

Modify hostname , To configure /etc/hosts file ( all node node )

install 、 start-up GlusterFS( all node Operation on node )

Add nodes to the storage trust pool ( stay node1 Operation on node )

At every Node View cluster status on node

Create distributed striped volumes

Create a distributed replication volume

View a list of all current volumes

Deploy Gluster client (192.168.239.50)

View the Striped volume file distribution

View the distribution of replication volumes

View the distributed striped volume distribution

View the distributed replication volume distribution

1、GlusterFS brief introduction

- GlusterFs Is an open source distributed file system .

- By the storage server 、 Client and NFS/Samba Storage gateway ( Optional , Choose to use as needed ) form .

- No metadata server component , This helps to improve the performance of the whole system 、 Reliability and stability .

MFS

Traditional distributed file systems mostly store metadata through meta servers , Metadata contains directory information on the storage node 、 Directory structure, etc . This design has a high efficiency in browsing the directory , But there are also some defects , For example, single point of failure . Once the metadata server fails , Even if the node has high redundancy , The entire storage system will also crash .

GlusterFs

GlusterFs Distributed file system is based on the design of no meta server , Strong horizontal data expansion ability , High reliability and storage efficiency .

GlusterFs It's also scale-out( Horizontal scaling ) Storage solutions Gluster At the heart of , It has strong horizontal expansion ability in storing data , It can support numbers through extension PB Storage capacity and handling of thousands of clients .

GlusterFs Support with the help of TCP/IP or InfiniBandRDMA The Internet ( A technology that supports multiple concurrent links , With high bandwidth 、 Low latency 、 Features of high scalability ) Bring together physically dispersed storage resources , Provide unified storage services , And use a unified global namespace to manage data .

2、GlusterFS characteristic

Scalability and high performance

GlusterFs Leverage dual features to provide high-capacity storage solutions .

scale-out The architecture allows for Simply add storage nodes to improve storage capacity and performance ( disk 、 The calculation and I/o Resources can be increased independently ), Support 10GbE and InfiniBand High speed internet connection .

Gluster Elastic Hash (ElasticHash) It's solved GlusterFS Dependency on metadata server , Improved single point of failure and performance bottlenecks , The parallel data access is realized .Gluste rFS The elastic hash algorithm can intelligently locate any data fragment in the storage pool ( Store the data in pieces on different nodes ), There is no need to view the index or query the metadata server .

High availability

GlusterFS You can copy files automatically , Such as mirror image or multiple copies , To ensure that data is always accessible , Even in case of hardware failure, it can be accessed normally .

When the data is inconsistent , The self-healing function can restore the data to the correct state , Data repair is performed in the background in an incremental manner , Almost no performance load is generated .

GlusterFS Can support all storage , Because it did not design its own private data file format , Instead, it uses the mainstream standard disk file system in the operating system ( Such as EXT3、XFS etc. ) To store files , Therefore, data can be accessed in the traditional way of accessing disk .

Global unified namespace

Distributed storage , Integrate the namespaces of all nodes into a unified namespace , Form the storage capacity of all nodes of the whole system into a large virtual storage pool , The front-end host can access these nodes to complete data reading and writing operations .

Elastic volume management

GlusterFs By storing data in logical volumes , The logical volume is obtained by independent logical partition from the logical storage pool .

Logical storage pools can be added and removed online , No business interruption . Logical volumes can grow and shrink online as needed , And load balancing can be realized in multiple nodes .

File system configuration can also be changed and applied online in real time , It can adapt to changes in workload conditions or online performance tuning .

Based on standard protocol

Gluster Storage services support NES、CIFS、HTTP、FTP、SMB And Gluster Native protocol , Completely with POSIX standard ( Portable operating system interface ) compatible .

Existing applications can be modified without any modification Gluster To access the data in , You can also use dedicated API Visit .

3、GlusterFS The term

Brick( Memory block )

It refers to the private partition provided by the host for physical storage in the trusted host pool , yes GlusterFs Basic storage unit in , It is also the storage directory provided externally on the server in the trusted storage pool . The format of the storage directory consists of the absolute path of the server and directory , The expression is SERVER:EXPORT, Such as 192.168.239.10:/data/mydir/.

Volume( Logic volume )

A logical volume is a set of Brick Set . A volume is a logical device for data storage , Be similar to LvM Logical volumes in . Days section Gluster Management operations are performed on volumes .

FUSE

It's a kernel module , Allow users to create their own file systems , There is no need to modify the kernel code .

VES

The interface provided by kernel space to user space to access disk .

Glusterd( Background management process )

Run on each node in the storage cluster .

4、 Modular stack architecture

GlusterFs Use modularity 、 Stack architecture .

Through various combinations of modules , To achieve complex functions . for example Replicate The module can implement RAID1,Stripe The module can implement RAID0, Through the combination of the two, we can realize RAID10 and RAID01, At the same time, higher performance and reliability .

5、GlusterFS workflow

- Client or application through GlusterFs The mount point of access data .

- linux The system kernel passes through VFS API Receive requests and process .

- VFS Submit the data to FUSE Kernel file system , And register an actual file system with the system FUSE, and FUSE File systems pass data through /dev/fuse The equipment documents were submitted to GlusterFS client End . Can be FUSE The file system is understood as a proxy .

- GlusterFS client After receiving the data ,client Process the data according to the configuration of the configuration file .

- after GlusterFs client After processing , Transfer data over the network to the remote GlusterFs server, And write the data to the server storage device .

6、 elastic HASH Algorithm

elastic HASH The algorithm is Davies-Meyer The specific implementation of the algorithm , adopt HASH The algorithm can get a 32 Bit integer range hash value , Suppose there are... In the logical volume N Units of storage Brick, be 32 The integer range of bits will be divided into N A continuous subspace , Each space corresponds to a Brick.

When a user or application accesses a namespace , By evaluating the namespace HASH. value , According to the HASH Value corresponding to 32 Bit integer where the spatial positioning data is located Brick.

elastic HASH The advantages of the algorithm

Make sure that the data is evenly distributed in each Brick in .

Solved the dependency on metadata server , And then solve the single point of failure and access bottlenecks .

7、GlusterFS The type of volume

GlusterFS Support 7 Seed roll

- Distributed volumes

- Strip roll

- Copy volume

- Distributed striped volume

- Distributed replication volumes

- Strip copy volume

- Distributed striped replication volumes

Distributed volumes (Distribute volume)

- File by HAS The daily algorithm is distributed to all Brick Server On , This kind of roll is GlusterFS The default volume of ; In document units according to HASH The algorithm hashes to different Brick, In fact, it just expands the disk space , If a disk is damaged , Data will also be lost , File level RAIDO, No fault tolerance .

- In this mode , The file is not partitioned , The file is stored directly in some Server Node .

- Due to the direct use of the local file system for file storage , So access efficiency has not improved , On the contrary, it will be reduced due to network communication .

Example principle

File1 and File2 Store in Server1, and File3 Store in server2, Files are stored randomly , A file ( Such as File1) Either in server1 On , Either in Server2 On , Can't be stored in pieces at the same time Server1 and Server2 On .

Features of distributed volumes

- Files are distributed on different servers , No redundancy .

- Expand the size of the volume more easily and cheaply .

- A single point of failure can cause data loss .

- Rely on the underlying data protection .

Create distributed volumes

Create a file called dis-volume Distributed volume of , The document will be based on HASH Distribution in server1:/dir1、server2:/dir2 and server3:/dir3 in

gluster volume create dis-volume server1:/dirl server2:/dir2 server3:/dir3

Strip roll

- Divide the file into N block (N Strip nodes ), Polling is stored in each Brick Server node

- When storing large files , The performance is particularly outstanding

- No redundancy , similar Raid0

characteristic

- The data is divided into smaller pieces and distributed to different stripe areas in the block server cluster

- Distribution reduces load and smaller files speed up access

- No data redundancy

Create a striped roll

Created a new one called Stripe-volume Strip roll of , The files will be stored in the Server1:/dir1 and Server2:/dir2 Two Brick in

gluster volume create stripe-volume stripe 2 transport tcp server1:/dirl server2:/dir2

Copy volume (Replica volume)

- Synchronize files to multiple Brick On , Make it have multiple copies of files , It belongs to file level RAID1, Fault tolerance . Because the data is scattered in multiple Brick in , So the read performance has been greatly improved ,, But write performance drops .

- Replication volumes are redundant , Even if one node is damaged , It does not affect the normal use of data . But because you want to save a copy , So disk utilization is low .

Example principle

File1 At the same time Server1 and Server2,File2 So it is with , amount to server2 The document in is Server1 A copy of the file in .

Replication volume features

- All servers in the volume keep a complete copy .

- The number of copies of a volume can be determined when the customer creates , However, the number of copies must be equal to Brick Number of storage servers included .

- At least two block servers or more .

- Redundancy .

Create replication volume

Create a rep-volume Copy volume of , The file will store two copies at the same time , Respectively in server1:/dirl and Server2:/dir2 Two Brick in

gluster volume create rep-volume replica 2 transport tcp serverl:/dirl server2:/dir2

Distributed striped volume

- Take into account the functions of distributed volume and striped volume

- Mainly used for large file access processing

- At least... Is needed 4 Servers

Create distributed striped volumes

Created a dis-stripe Distributed striped volume , When configuring distributed striped volumes , In the volume Brick The number of storage servers included must be a multiple of the number of stripes (>=2 times )

gluster volume create dis-stripe stripe 2 transport tcp server1:/dir1 server2:/dir2 server3:/dir3 server4:/dir4

Distributed replication volumes

- Both distributed and replicated volumes

- Used when redundancy is required

Create a distributed replication volume

Create a dis-rep Distributed striped volume , An error occurred while configuring a distributed replication volume , In the volume Brick The number of storage servers included must be a multiple of the number of stripes (>=2 times )

gluster volume create dis-rep replica 2 transport tcp server1:/dir1 server2:/dir2 server3:/dir3 server4:/dir4

8、 Deploy GlusterFS to cluster around

Node1 node :node1/192.168.52.110 disk :/dev/sdb1 Mount point :/data/sdb1

/dev/sdc1 /data/sdc1

/dev/sdd1 /data/sdd1

/dev/sde1 /data/sde1

Node2 node :node2/192.168.52.120 disk :/dev/sdb1 Mount point :/data/sdb1

/dev/sdc1 /data/sdc1

/dev/sdd1 /data/sdd1

/dev/sde1 /data/sde1

Node3 node :node3/192.168.52.130 disk :/dev/sdb1 Mount point :/data/sdb1

/dev/sdc1 /data/sdc1

/dev/sdd1 /data/sdd1

/dev/sde1 /data/sde1

Node4 node :node4/192.168.52.140 disk :/dev/sdb1 Mount point :/data/sdb1

/dev/sdc1 /data/sdc1

/dev/sdd1 /data/sdd1

/dev/sde1 /data/sde1

Client node :192.168.52.50

Prepare the environment ( all node Operation on node )

Add disks and refresh

echo "---">/sys/class/scsi host/hoste/scan

echo "---">/sys/class/scsi host/host1/scan

echo "---">/sys/class/scsi host/host2/scanTurn off firewall ( all node node )

systemctl stop firewalld

setenforce 0

Disk partition , And mount ( all node node )

vim fdisk.sh

#!/bin/bash

NEWDEV=`ls /dev/sd* | grep -o 'sd[b-z]' | uniq`

for VAR in $NEWDEV

do

echo -e "n\np\n\n\n\nw\n" | fdisk /dev/$VAR &> /dev/null

mkfs.xfs /dev/${VAR}"1" &> /dev/null

mkdir -p /data/${VAR}"1" &> /dev/null

echo "/dev/${VAR}"1" /data/${VAR}"1" xfs defaults 0 0" >> /etc/fstab

done

mount -a &> /dev/null

chmod +x ./fdisk.sh

cd /opt/

./fdisk.sh

Modify hostname , To configure /etc/hosts file ( all node node )

# With Node1 Node as an example

hostnamectl set-hostname node1

su

echo "192.168.52.110 node1" >> /etc/hosts

echo "192.168.52.120 node2" >> /etc/hosts

echo "192.168.52.130 node3" >> /etc/hosts

echo "192.168.52.140 node4" >> /etc/hosts

install 、 start-up GlusterFS( all node Operation on node )

# take gfsrepo Software uploaded to /opt Under the table of contents

cd /etc/yum.repos.d/

mkdir repo.bak

mv *.repo repo.bak

vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

yum clean all && yum makecache

#yum -y install centos-release-gluster # If official YUM Source installation , It can point directly to the Internet warehouse

yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

systemctl start glusterd.service

systemctl enable glusterd.service

systemctl status glusterd.service

Add nodes to the storage trust pool ( stay node1 Operation on node )

# Just on one Node Add other nodes to the node

gluster peer probe node1

gluster peer probe node2

gluster peer probe node3

gluster peer probe node4

At every Node View cluster status on node

gluster peer status

Create a volume

According to the plan, create the following volume

| Volume name | Volume type | Brick |

|---|---|---|

| dis-volume | Distributed volumes | node1(/data/sdb1)、node2(/data/sdb1) |

| stripe-volume | Strip roll | node1(/data/sdc1)、node2(/data/sdc1) |

| rep-volume | Copy volume | node3(/data/sdb1)、node4(/data/sdb1) |

| dis-stripe | Distributed striped volume | node1(/data/sdd1)、node2(/data/sdd1)、node3(/data/sdd1)、node4(/data/sdd1) |

| dis-rep | Distributed replication volumes | node1(/data/sde1)、node2(/data/sde1)、node3(/data/sde1)、node4(/data/sde1) |

Create distributed volumes

# Create distributed volumes , No type specified , Distributed volumes are created by default

gluster volume create dis-volume node1:/data/sdb1 node2:/data/sdb1 force

# View volume list

gluster volume list

# Start the new distributed volume

gluster volume start dis-volume

# View information about creating distributed volumes

gluster volume info dis-volume

Create a striped roll

# The specified type is stripe, Values for 2, And followed by 2 individual Brick Server, So you're creating a striped volume

gluster volume create stripe-volume stripe 2 node1:/data/sdc1 node2:/data/sdc1 force

gluster volume start stripe-volume # Start new striped volume

gluster volume info stripe-volume # View information about creating striped volumes

Create replication volume

# The specified type is replica, Values for 2, And followed by 2 individual Brick Server, So what is created is a copy volume

gluster volume create rep-volume replica 2 node3:/data/sdb1 node4:/data/sdb1 force

gluster volume start rep-volume # Start replication volume

gluster volume info rep-volume # View replication volume information

Create distributed striped volumes

# The specified type is stripe, Values for 2, And followed by 4 individual Brick Server, yes 2 Twice as many , So you create a distributed striped volume

gluster volume create dis-stripe stripe 2 node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1 force

gluster volume start dis-stripe

gluster volume info dis-stripe

Create a distributed replication volume

# The specified type is replica, Values for 2, And followed by 4 individual Brick Server, yes 2 Twice as many , So you create a distributed replication volume

gluster volume create dis-rep replica 2 node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1 force

gluster volume start dis-rep

gluster volume info dis-rep

View a list of all current volumes

gluster volume list

Deploy Gluster client (192.168.239.50)

Install client software

# take gfsrepo Software uploaded to /opt Suborder

cd /etc/yum.repos.d/

mkdir repo.bak

mv *.repo repo.bak

vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

yum clean all && yum makecache

yum -y install glusterfs glusterfs-fuse

Create mount directory

mkdir -p /test/{dis,stripe,rep,dis_stripe,dis_rep}

ls /test

To configure /etc/hosts file

echo "192.168.52.110 node1" >> /etc/hosts

echo "192.168.52.120 node2" >> /etc/hosts

echo "192.168.52.130 node3" >> /etc/hosts

echo "192.168.52.140 node4" >> /etc/hosts

mount Gluster file system

# To mount temporarily

mount.glusterfs node1:dis-volume /test/dis

mount.glusterfs node1:stripe-volume /test/stripe

mount.glusterfs node1:rep-volume /test/rep

mount.glusterfs node1:dis-stripe /test/dis_stripe

mount.glusterfs node1:dis-rep /test/dis_rep

df -Th

Permanently mount

vim /etc/fstab

node1:dis-volume /test/dis glusterfs defaults,_netdev 0 0

node1:stripe-volume /test/stripe glusterfs defaults,_netdev 0 0

node1:rep-volume /test/rep glusterfs defaults,_netdev 0 0

node1:dis-stripe /test/dis_stripe glusterfs defaults,_netdev 0 0

node1:dis-rep /test/dis_rep glusterfs defaults,_netdev 0 0

mount -a

test Gluster file system

Write files to the volume , Client operation

cd /opt

dd if=/dev/zero of=/opt/demo1.log bs=1M count=40

dd if=/dev/zero of=/opt/demo2.log bs=1M count=40

dd if=/dev/zero of=/opt/demo3.log bs=1M count=40

dd if=/dev/zero of=/opt/demo4.log bs=1M count=40

dd if=/dev/zero of=/opt/demo5.log bs=1M count=40

ls -lh /opt

cp /opt/demo* /test/dis

cp /opt/demo* /test/stripe/

cp /opt/demo* /test/rep/

cp /opt/demo* /test/dis_stripe/

cp /opt/demo* /test/dis_rep/

View file distribution

# View distributed file distribution

[[email protected] opt]# ls -lh /data/sdb1 # The data is not fragmented

[[email protected] ~]# ls -lh /data/sdb1

View the Striped volume file distribution

[[email protected] ~]# ls -lh /data/sdc1 # The data is sliced 50% No copy No redundancy

[[email protected] ~]# ll -h /data/sdc1 # The data is sliced 50% No copy No redundancy

View the distribution of replication volumes

[[email protected] ~]# ll -h /data/sdb1 # The data is not fragmented There are copies There's redundancy

[[email protected] ~]# ll -h /data/sdb1 # The data is not fragmented There are copies There's redundancy

View the distributed striped volume distribution

[[email protected] ~]# ll -h /data/sdd1 # The data is sliced 50% No copy No redundancy

[[email protected] ~]# ll -h /data/sdd1

[[email protected] ~]# ll -h /data/sdd1

[[email protected] ~]# ll -h /data/sdd1

View the distributed replication volume distribution

[[email protected] ~]# ll -h /data/sde1 # The data is not fragmented There are copies There's redundancy

[[email protected] ~]# ll -h /data/sde1

[[email protected] ~]# ll -h /data/sde1

[[email protected] ~]# ll -h /data/sde1

Destructive testing

Hang up node2 Node or close glusterd Service to simulate failure

[[email protected] ~]# systemctl stop glusterd.service

Check whether the file is normal on the client

Distributed volume data viewing

[[email protected] ~]# ll /test/dis/ # Found missing... On the client demo5.log file , This is in node2 Upper Strip roll

[[email protected] ~]# cd /test/stripe/ # cannot access , Striped volumes are not redundant

[[email protected] stripe]# ll

Distributed striped volume

[[email protected] ~]# ll /test/dis_stripe/ # cannot access , Distributed striped volumes are not redundant

Distributed replication volumes

[[email protected] test]# ll /test/dis_rep/ # You can visit , Distributed replication volumes are redundant

Hang up node2 and node4 node , Check whether the file is normal on the client

systemctl stop glusterd.service

Test whether the replicated volume is normal

[[email protected] ~]# ls -l /test/rep/ # Test normal on the client The data are

Test whether the distributed stripe volume is normal

[[email protected] ~]# ll /test/dis_stripe/ # There is no data to test on the client

Total usage 0

Test whether the distributed replication volume is normal

[[email protected] ~]# ll /test/dis_rep/ # Test normal on the client There's data

Other maintenance commands

1. see GlusterFS volume

gluster volume list

2. View information for all volumes

gluster volume info

3. View the status of all volumes

gluster volume status

4. Stop a volume

gluster volume stop dis-stripe

5. Delete a volume , Be careful : When deleting a volume , You need to stop the volume first , And no host in the trust pool is in the down state , Otherwise, the deletion will not succeed

gluster volume delete dis-stripe

6. Set the access control for the volume

# Just refuse

gluster volume set dis-volume auth.reject 192.168.239.100

# Only allowed

gluster volume set dis-rep auth.allow 192.168.239.* # Set up 192.168.239.0 All of the segments IP The address can be accessed dis-rep volume ( Distributed replication volumes )

边栏推荐

- [Digital IC hand tearing code] Verilog edge detection circuit (rising edge, falling edge, double edge) | topic | principle | design | simulation

- Can you really learn 3DMAX modeling by self-study?

- The steering wheel can be turned for one and a half turns. Is there any difference between it and two turns

- Problem solving: attributeerror: 'nonetype' object has no attribute 'append‘

- Serious bugs with lifted/nullable conversions from int, allowing conversion from decimal

- d3js小记

- 100 basic multiple choice questions of C language (with answers) 04

- "C zero foundation introduction hundred knowledge and hundred cases" (72) multi wave entrustment -- Mom shouted for dinner

- Prometheus monitors the correct posture of redis cluster

- Uniapp navigateto jump failure

猜你喜欢

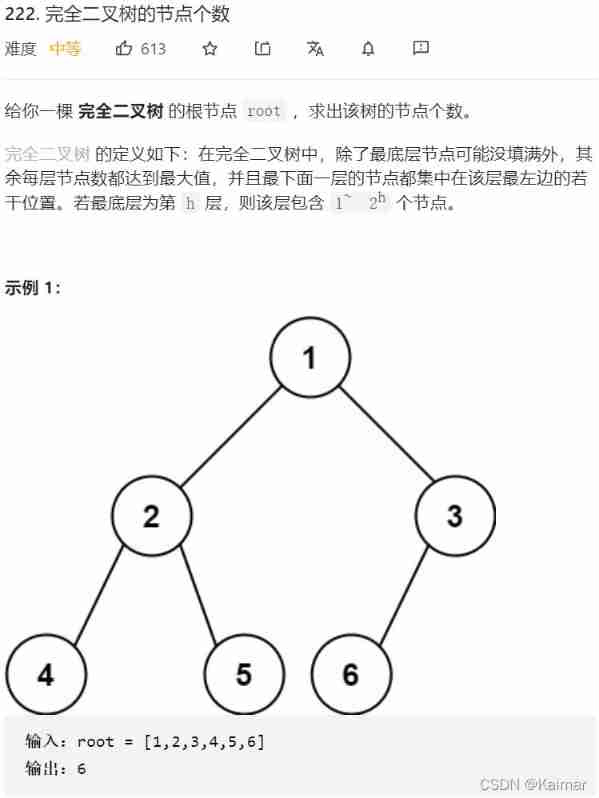

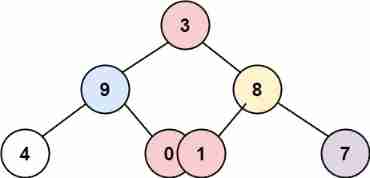

【LeetCode】222. The number of nodes of a complete binary tree (2 mistakes)

Official announcement! The third cloud native programming challenge is officially launched!

JVM - when multiple threads initialize the same class, only one thread is allowed to initialize

丸子百度小程序详细配置教程,审核通过。

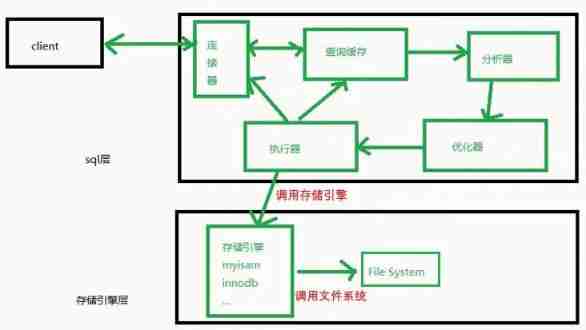

Practical case of SQL optimization: speed up your database

The steering wheel can be turned for one and a half turns. Is there any difference between it and two turns

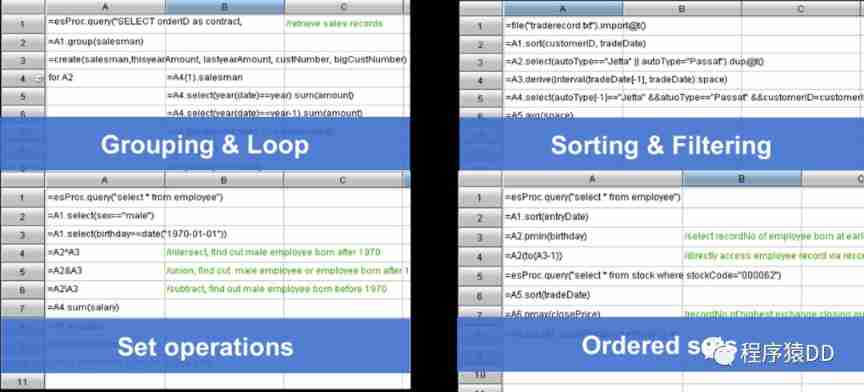

Open source SPL optimized report application coping endlessly

官宣!第三届云原生编程挑战赛正式启动!

LeetCode 314. Binary tree vertical order traversal - Binary Tree Series Question 6

Asynchronous and promise

随机推荐

Problem solving: attributeerror: 'nonetype' object has no attribute 'append‘

Comment mettre en place une équipe technique pour détruire l'entreprise?

[illumination du destin - 38]: Ghost Valley - chapitre 5 Flying clamp - one of the Warnings: There is a kind of killing called "hold Kill"

【LeetCode】404. Sum of left leaves (2 brushes of wrong questions)

Pytest (4) - test case execution sequence

Go RPC call

RichView TRVStyle MainRVStyle

【LeetCode】110. Balanced binary tree (2 brushes of wrong questions)

Use the difference between "Chmod a + X" and "Chmod 755" [closed] - difference between using "Chmod a + X" and "Chmod 755" [closed]

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

Kotlin - coroutine

RichView TRVStyle MainRVStyle

Bert fine tuning skills experiment

Li Kou Jianzhi offer -- binary tree chapter

JVM - when multiple threads initialize the same class, only one thread is allowed to initialize

Prometheus monitors the correct posture of redis cluster

Hmi-31- [motion mode] solve the problem of picture display of music module

RichView TRVUnits 图像显示单位

Marubeni Baidu applet detailed configuration tutorial, approved.

openresty ngx_ Lua execution phase