Exploration of short text analysis in the field of medical and health (I)

2022-07-05 02:03:00 【Necther】

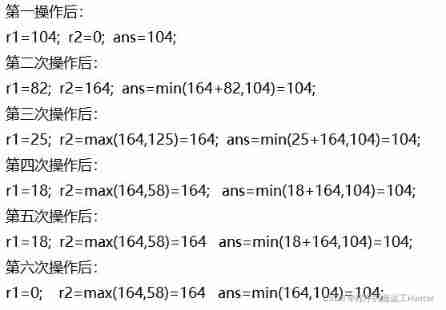

Preface

A brief recap: last time we discussed phrase mining, which extracts high-quality noun phrases from unstructured text and compensates for the lack of specialized dictionaries in professional domains. We covered Professor Jiawei Han's trilogy: TopMine, SegPhrase, and AutoPhrase. Their main approach is to mine frequent patterns based on part-of-speech tags, classify candidates using features such as PMI and KL divergence, and rank them by the classification result. After trying these methods, we added some common features of our own. For example, we built an n-gram language model from a set of entity words we had curated earlier and constructed classification features from its scores. The extraction results improved, with better boundary handling and higher accuracy.

A Deeper Look at Phrase Mining

For structuring text, phrase mining is only the first step, but it is also the most important one: the more accurate the phrases, the better the downstream knowledge graph construction and attribute extraction. The features used in phrase mining come from two main sources.

- Contextual features, for example Is-A patterns, context co-occurrence statistics, and contextual syntactic structure.

- Features internal to the phrase, at the word or character level, for example one-hot encoding, part-of-speech tags, POS-tag template frequencies, PMI statistics, and word or character vectors.

The simplest way to use contextual features is to match against high-quality patterns [1]. In 2017 Professor Jiawei Han's group published MetaPAD, which explains in detail how to use the rich context around entities to determine pattern boundaries and extract content. Consider the following example:

Take the sentence "President Barack Obama's government of United States reported that...". After entity replacement it becomes "President [&Politician] government of [&Country] reported that", from which the pattern "[&Politician] government of [&Country]" is extracted. Pattern statistics are collected over a large corpus, and the generated patterns are filtered with a pattern quality function so that only high-quality patterns are kept [2]. Entity extraction with this pattern approach is fairly precise. A high-quality pattern gives us accurate entity information, and accurate entity information combined with a good quality-assessment method gives us more high-quality patterns. This is a bootstrapping process that iterates continuously and keeps acquiring new data.
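The entity replacement and pattern counting described above can be sketched as follows. This is a minimal illustration and not MetaPAD itself: the `ENTITY_TYPES` lookup, the second example sentence, and the regular expression that defines a candidate pattern are all assumptions made for the example. A real system would take typed entities from NER or a knowledge base and score patterns with a learned quality function.

```python
import re
from collections import Counter

# Hypothetical typed entity dictionary (assumption for this sketch).
ENTITY_TYPES = {
    "Barack Obama": "&Politician",
    "Angela Merkel": "&Politician",
    "United States": "&Country",
    "Germany": "&Country",
}

def replace_entities(sentence, entity_types=ENTITY_TYPES):
    """Replace each known entity mention with its typed placeholder."""
    # Longest mentions first so longer names win over shorter substrings.
    for mention in sorted(entity_types, key=len, reverse=True):
        sentence = sentence.replace(mention, "[" + entity_types[mention] + "]")
    # Drop the possessive marker that may follow a placeholder.
    return sentence.replace("]'s", "]")

# Candidate pattern: two placeholders joined by one to three lowercase words.
PATTERN_RE = re.compile(r"\[&\w+\](?: [a-z]+){1,3} \[&\w+\]")

def count_patterns(sentences):
    """Count candidate patterns over a corpus of raw sentences."""
    counts = Counter()
    for sentence in sentences:
        counts.update(PATTERN_RE.findall(replace_entities(sentence)))
    return counts

corpus = [
    "President Barack Obama's government of United States reported that ...",
    "Chancellor Angela Merkel's government of Germany announced that ...",
]
pattern_counts = count_patterns(corpus)
print(pattern_counts.most_common())
```

Both sentences collapse to the same pattern, `[&Politician] government of [&Country]`. In a real pipeline such counts would be only one input to the pattern quality function, and the surviving patterns would be applied back to the corpus to find new entities.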

For phrase-internal features, the simplest options are one-hot word vectors and n-gram statistics. In practice, phrase-internal features also carry some contextual information. Conventional word2vec, built with CBOW or skip-gram, is really the hidden-layer weights learned while predicting the current word or its context. POS tagging uses contextual information to build a hidden Markov model, labels the text as a sequence, and decides each part of speech. Word or character n-grams can also be used to compute information entropy, PMI, and similar statistics. For example, in 2012 Matrix67's article "Sociolinguistics in the Internet Age: Text Data Mining Based on SNS" used character-level statistics to compute information entropy and PMI for new word discovery. On a large corpus, however, there are too many character combinations; by n = 7 the amount of information and computation is already enormous. Using POS patterns in place of word or character n-gram statistics keeps the computation acceptable, and it has shown clear benefits in AutoPhrase and SegPhrase.
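As a rough sketch of the statistics mentioned above, the following computes PMI-style cohesion and left/right neighbor entropy for character n-grams. It is an illustration under simplifying assumptions, not the exact procedure of any cited work: probabilities are approximated by dividing counts by the text length, and the function names are made up for this example.

```python
import math
from collections import Counter, defaultdict

def ngram_stats(text, max_n=3):
    """Count character n-grams and the characters seen to their left and right."""
    counts = Counter()
    left = defaultdict(Counter)
    right = defaultdict(Counter)
    for n in range(1, max_n + 1):
        for i in range(len(text) - n + 1):
            gram = text[i:i + n]
            counts[gram] += 1
            if i > 0:
                left[gram][text[i - 1]] += 1
            if i + n < len(text):
                right[gram][text[i + n]] += 1
    return counts, left, right

def entropy(counter):
    """Shannon entropy (natural log) of a neighbor distribution."""
    total = sum(counter.values())
    if total == 0:
        return 0.0
    return -sum(c / total * math.log(c / total) for c in counter.values())

def cohesion_pmi(gram, counts, total_chars):
    """Minimum PMI over all two-way splits of a candidate of length >= 2."""
    p_gram = counts[gram] / total_chars
    return min(
        math.log(p_gram / ((counts[gram[:k]] / total_chars) * (counts[gram[k:]] / total_chars)))
        for k in range(1, len(gram))
    )
```

A candidate is usually kept only when its cohesion is high and both neighbor entropies are high, meaning the characters stick together and the string appears in varied contexts. The thresholds are corpus-dependent and are left out here.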

Here are a few common examples of these two approaches from the Dingxiangyuan search logs:

As the figure above shows, phrase mining mainly considers two aspects: pattern extraction, and the arrangement and combination of internal structure. These are very common methods that have worked well in industry and yield large numbers of high-quality patterns and phrases. We will come back to the application of internal features later.

Automating Extraction and Evaluation

SegPhrase mentions labeling 300 high-quality samples, but this is troublesome for two reasons:

- Annotation requires domain expertise, and its dependence on high-quality data makes it inefficient.

- The notion of "high quality" is not clearly defined. It should mean covering every possible data distribution, so it depends heavily on feature selection, feature distributions, and word segmentation results.

AutoPhrase later improved on this by bringing in domain topic words from Wikipedia as external knowledge.

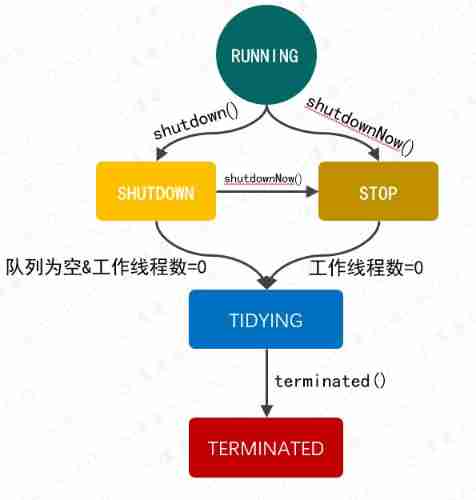

In 2019 Tencent published a paper on interpretable recommendation [3-4]. It builds a Concept Mining System in three ways and implements an end-to-end process of automatic extraction and evaluation.

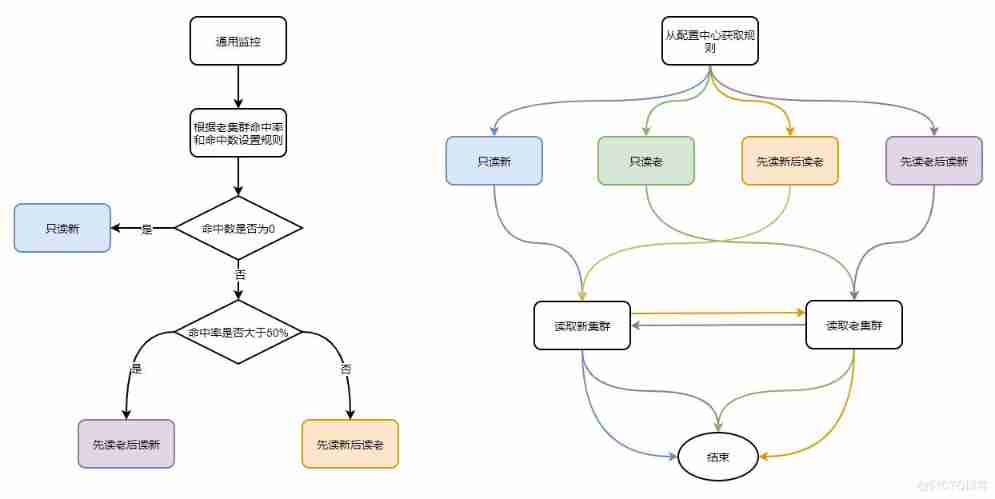

- Bootstrapping: Start from a few high-quality seed patterns (Pre-defined/Seed Patterns) and extract candidates from user queries and clicked post titles. A trained Discriminator then classifies, scores, and filters the candidates. The filtered concepts are used to generate new patterns, which are filtered by rules; the high-quality ones are added to the pre-defined patterns for the next iteration.

- Query Click N-gram: Extract n-grams, after word segmentation, from the queries in the user log and the titles of the clicked posts. First enumerate all n-grams of the query, then check each query n-gram against the title's n-grams: whether it is contained in them, and whether its first and last words match those in the title.

- Sequence Labeling: There are two cases. If a batch of high-quality annotated data is available, the classifier is not really needed. Early on, you can try distant supervision to build a batch of data and then add the classifier as a constraint, at the cost of some recall.
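The Query Click N-gram step above can be sketched as follows. This is a simplified reading, not the implementation from [3-4]: it keeps only query n-grams that occur contiguously in the clicked title, which trivially satisfies the matching first and last word condition. The function names, the `min_n` cutoff, and the example tokens are assumptions.

```python
def ngrams(tokens, max_n=5):
    """All contiguous n-grams (as tuples) up to length max_n."""
    return {
        tuple(tokens[i:i + n])
        for n in range(1, max_n + 1)
        for i in range(len(tokens) - n + 1)
    }

def query_title_candidates(query_tokens, title_tokens, max_n=5, min_n=2):
    """Query n-grams that also occur contiguously in the clicked title."""
    title_grams = ngrams(title_tokens, max_n)
    shared = [
        gram for gram in ngrams(query_tokens, max_n)
        if len(gram) >= min_n and gram in title_grams
    ]
    # Longest candidates first, then alphabetical for a stable order.
    return sorted(shared, key=lambda gram: (-len(gram), gram))

# Hypothetical, already segmented query and clicked title.
query = ["infant", "viral", "pneumonia", "treatment"]
title = ["how", "to", "care", "for", "viral", "pneumonia", "at", "home"]
print(query_title_candidates(query, title))
```

The candidates returned here would still go through the Discriminator before being accepted as concepts.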

The Concept Mining System built early in this paper mainly extracts upper-level concepts, as shown in the figure below:

Interpretable recommendations are made by connecting the searched concepts and entities. To automate extraction, the Concept Mining System needs a Discriminator classifier; the paper says it uses 300 samples as training data and classifies with GBDT.

I ran into many pitfalls when reproducing this paper. The biggest problem was building the classifier: the three extraction methods produce very different results, and many details come up when passing candidates through the classifier. For example, if N = 5 is fixed for n-gram extraction, what should happen when bootstrapping extracts a result with N > 5? Patterns can also be derived from the results of n-gram extraction and sequence labeling; can those patterns be fed back into bootstrapping? How do we keep costs down once the whole extraction system runs in production? And how should a concept be defined? For example, pneumonia looks like an entity, but when building a hierarchy, acquired pneumonia is a lower-level instance of pneumonia, and treatment of pneumonia is an attribute of pneumonia, so does pneumonia count as a concept? All of these had to be considered when reproducing the paper.

After hitting many pitfalls and adding some improvements, the basic reproduction of the Concept Mining System is complete. Here are some extraction results; results scoring above 0.6 are acceptable:

The main purpose of this paper is interpretable recommendation, that is, assigning concepts to a post. If you are interested, feel free to follow, bookmark, and like. I will go into the related details in a follow-up post on short query understanding.

We just mentioned phrase-internal features. How should they be applied? Take a simple example. A tricky concept in the medical domain is the symptom. A symptom is actually composed of atomic words that each carry an entity type, for example:

Symptom word: The pain in feet and legs is obvious in the morning /symptom

From a compositional point of view, the symptom can be split into:

In the morning /situation foot /body and /conjunction Leg /body Pain /atomsymptom obvious /severity

In other words, coarse-grained concept entity words are split into the finest granularity possible, and the fine-grained results can then be recombined, according to templates and segmentation results, into new coarse-grained entity words. What are the benefits? On one hand, it improves symptom extraction: when a new symptom word appears, it can be mapped cleanly to its body part, state, and other information. On the other hand, entity linking on mined noun phrases turns up new words that have no attributes. For example, 'Upper sternum' is an entity that does not exist in the database, and it is easily linked by mistake to the symptom word 'Hyposternal fissure'. Atomic word segmentation solves this problem. The simplest way is to compare internal templates: Upper sternum should correspond to a Body part, and upper part, as a Locative word, has no effect on the original label. Another example: a user searches for Cardiac function test, which happens not to exist in the database; only Lung function test does. If we returned Lung function test to that user, they would be furious. Atomic splitting can solve this problem as well; we will discuss how later.
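The internal template comparison mentioned above might look like the sketch below. The segmentations are hypothetical (in particular the split of the fissure symptom is an assumption made for illustration), and treating only Locative as a type that does not affect the label follows the example in the text rather than any published rule.

```python
# Component types that do not change what a phrase refers to (assumption).
IGNORED_TYPES = ("Locative",)

def core(segments, ignore=IGNORED_TYPES):
    """Keep only the (token, type) pairs that define the phrase."""
    return [(token, tag) for token, tag in segments if tag not in ignore]

def template(segments, ignore=IGNORED_TYPES):
    """Sequence of component types, with ignored types dropped."""
    return tuple(tag for _, tag in core(segments, ignore))

def compare(mention, entity):
    """Explain whether a mined mention may be linked to a known entity."""
    if template(mention) != template(entity):
        return "template mismatch"
    if core(mention) != core(entity):
        return "component mismatch"
    return "match"

# Hypothetical segmentations of the examples from the text.
upper_sternum = [("sternum", "Body"), ("upper", "Locative")]
sternal_fissure = [("sternum", "Body"), ("fissure", "Atomsymptom")]
cardiac_test = [("heart", "Body"), ("function test", "Head")]
lung_test = [("lung", "Body"), ("function test", "Head")]

print(compare(upper_sternum, sternal_fissure))  # a body part is not a symptom
print(compare(cardiac_test, lung_test))         # same template, different organ
```

A string-similarity linker would happily connect both pairs, while the template view rejects the first because the component types differ and the second because the Body component differs.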

Moving On to More Advanced Methods

We have just covered some rule-based extraction methods that are used in production. When you are building something from nothing, these are the roads you have to travel. Of course, if you have deep pockets, you can hire a group of domain experts, set a "small goal" of labeling 100 million examples first, and train a model that generalizes well; that is definitely the best approach. (Note that the quality of the annotators' labels has to be guaranteed. Labeling is heavy and tedious work, so it is understandable that mistakes creep in and lead to missed and wrong labels; for example, "Sword in hand" was wrongly labeled as Surgical instruments.)

In 2018, researchers from East China University of Science and Technology published a paper on structuring electronic medical record data [5]. It breaks symptom words down into atomic symptoms, body parts, head words, and so on. The content is straightforward: it mainly uses POS features and a bidirectional LSTM-CRF to split symptoms as a sequence labeling task. The data divides the components of compound symptom words into 11 classes:

Atomsymptom: atomic symptom words, that is, symptom words that cannot be split further, e.g. pain, soreness

Body: body part words

Head: head words, that is, target words other than body parts, e.g. blood pressure

Conjunction: conjunctions, e.g. and

Negative: negation words, e.g. no, none, not

Severity: words describing the severity of a symptom, e.g. slight, severe

Situation: state words, e.g. postpartum, in the morning

Locative: location words, e.g. upper part, rear side, above

Sensation: sensation words, e.g. burning sensation, cold

Feature: feature words, e.g. acquired, occasional, type I/II, central type

Modifier: modifiers, e.g. falling, rising

This paper mainly describes how to split symptoms in electronic medical records, using fine-grained splitting to structure them. The model is a standard sequence labeling model, and the paper says little about applications.
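To make the sequence labeling setup concrete, the sketch below turns a segmented symptom into per-character labels over the 11 classes. The BIO tagging scheme, the function name, and the Chinese example phrase are assumptions for illustration; the text does not say which tagging scheme [5] uses.

```python
COMPONENT_TYPES = {
    "Atomsymptom", "Body", "Head", "Conjunction", "Negative", "Severity",
    "Situation", "Locative", "Sensation", "Feature", "Modifier",
}

def to_bio(segments):
    """Expand (text, type) segments into per-character BIO labels."""
    chars, labels = [], []
    for text, tag in segments:
        if tag not in COMPONENT_TYPES:
            raise ValueError("unknown component type: " + tag)
        for i, ch in enumerate(text):
            chars.append(ch)
            # First character of a segment opens it, the rest continue it.
            labels.append(("B-" if i == 0 else "I-") + tag)
    return chars, labels

# Hypothetical example: "slight" + "head" + "pain".
segments = [("轻微", "Severity"), ("头部", "Body"), ("疼痛", "Atomsymptom")]
chars, labels = to_bio(segments)
for ch, label in zip(chars, labels):
    print(ch, label)
```

Pairs of `chars` and `labels` like these are the kind of training data a BiLSTM-CRF tagger consumes; at prediction time the label sequence is decoded back into typed segments.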

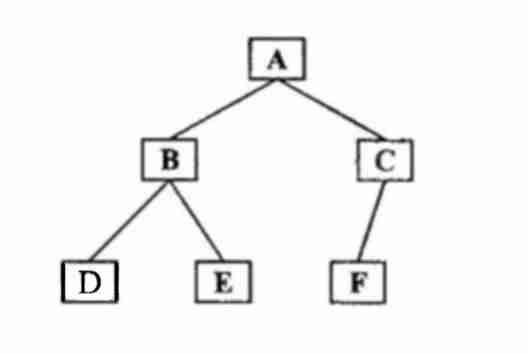

Another paper from the same group [6] is interesting; it effectively extends the application of the previous one. It uses a knowledge graph to build hypernym-hyponym relations between symptom words and to normalize symptom data.

Given two symptom words, a left one and a right one, first normalize the candidates. The normalization process includes fine-grained segmentation, fine-grained normalized substitution (for example, head is replaced with the standard form Head), stop-word removal, and similar operations. Have a headache is normalized to <Head, Pain>, and There is paroxysmal headache on the left side of the head can be normalized to <Head, Paroxysmal, Pain>. Because of the inclusion relation between them, Have a headache can be treated as the hypernym of There is paroxysmal headache on the left side of the head. Only three fine-grained types are used here: Atomsymptom, Body, and Head. The strength of this paper is that it offers a scheme for aligning new symptom words found during entity linking: even if a word does not exist in the database, we can still infer user intent from its hypernym. Segmentation structures the data at a fine granularity, and the hypernym-hyponym relations are stored in the knowledge graph for reasoning. This method also helps with the problems mentioned earlier, Upper sternum —> Suprasternal fissure and Cardiac function test —> Lung function test.
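The inclusion test described above reduces to a proper-subset check once each symptom has been normalized to a set of components. The sketch below assumes normalization has already been done; the dictionary of normalized symptoms and the function names are made up for the example.

```python
def is_hypernym(general, specific):
    """True when every component of `general` appears in `specific`, plus more."""
    return frozenset(general) < frozenset(specific)

def hypernym_edges(normalized):
    """List (hypernym, hyponym) name pairs among normalized symptoms."""
    names = sorted(normalized)
    return [
        (g, s)
        for g in names
        for s in names
        if g != s and is_hypernym(normalized[g], normalized[s])
    ]

# Hypothetical normalized symptoms, following the example in the text.
normalized = {
    "headache": {"Head", "Pain"},
    "paroxysmal headache on the left side of the head": {"Head", "Paroxysmal", "Pain"},
    "chest pain": {"Chest", "Pain"},
}
print(hypernym_edges(normalized))
```

Each edge can be stored in the knowledge graph, so a symptom missing from the database can still be routed to its nearest stored hypernym.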

A brief aside: at ACL 2019, Professor Liu Zhiyuan's team [7] discussed the minimum semantic unit, the sememe. A sememe is the smallest semantic unit that cannot sensibly be divided further. The paper applies sememe knowledge as external knowledge and demonstrates its effectiveness in semantic compositionality experiments. By comparison, atomic word segmentation is essentially segmentation into the smallest semantic units of a vertical domain. Later we will consider using sememe knowledge as external features to improve our extraction and linking results. Interested readers can also look at the team's 2017 paper on using sememe knowledge for word representation learning [8].

Since fine-grained segmentation is theoretically good for entity extraction and linking, let's happily get started. Then we turn around and look at what NER has extracted: hundreds of thousands of symptoms... hundreds of thousands of diseases...

Fine-grained segmentation is a long-term iterative process that needs continual updating, optimization, and completion, so there is no getting fat in one bite. Still, some general things can be done. For example, pick high-frequency symptoms and diseases by their search frequency in the user logs and do a simple split on those.

More Pitfalls

Structuring electronic medical records is currently a fairly specialized research direction. We just covered using sequence labeling and normalizing through hypernym-hyponym relations in a knowledge graph. Representation learning [9] can also be used for normalization.

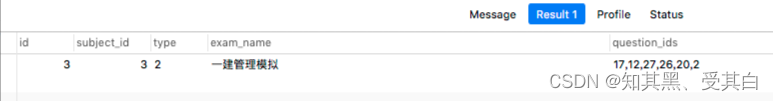

First, we need to understand the International Classification of Diseases, 10th Revision (ICD-10). This dataset classifies diseases by rules according to their characteristics and represents them with codes. It contains more than 20,000 diseases, as shown in the figure below.

In 2018 Yizhou Zhang proposed the multi-view attention based denoising auto-encoder (MADAE) model [9]. The structure of the model is as follows:

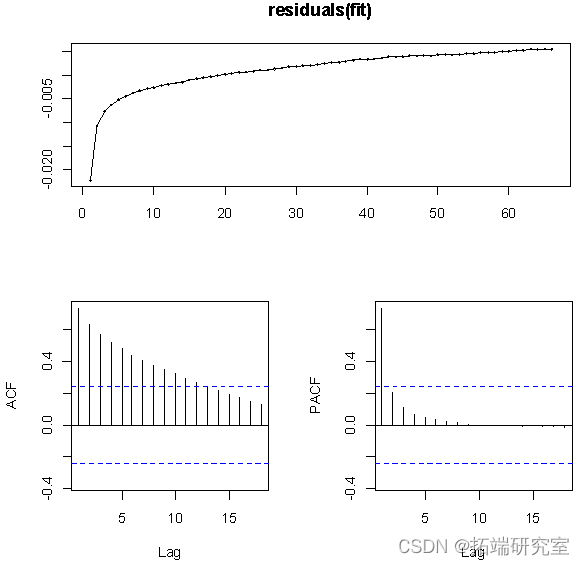

A denoising auto-encoder based on a multi-view attention network is used to normalize medical record data. To learn more robust features, the DAE injects random noise at the network's embedding layer; in other words, it corrupts the input and then reconstructs the original data, so the learned features are more robust. The multi-view encoders take the noised text embedding, represent it with three vectors (character, word, and pronunciation), pass the result through an attention layer, and then the decoder reconstructs the original input. This differs from the splitting-based normalization described earlier, as shown in the figure below:

For C2a, C2b, and C2c, for example, standardized splitting would segment each into <Unstable, Angina pectoris>, from which we can infer that C2a, C2b, and C2c describe the same thing. In the MADAE model, by contrast, one form is taken as the standard: C2a is the standard form of Unstable angina in the knowledge base, while C2b and C2c are its aliases or mentions. We compute the similarity between the vector output by the MADAE encoder layer and the standard forms stored in the knowledge base, which handles some homophone problems and inconsistent writing structure. The Denoising Network Embedding, which captures context through co-occurrence statistics, is then used to weight the Denoising Text Embedding similarity, so we can also find some synonyms. Results of the Denoising Text Embedding method:

The advantages are that we do not need to store as much node information in the database, so the graph is smaller and saves space, and we can mine synonyms this way.

When NER, phrase mining, and similar methods surface new words that have no exact match in the database, we can drop surface-level similarity measures such as BM25, edit distance, and Jaccard distance, and instead compute similarity on vector representations, which makes the model more robust and increases recall. A quick look at the comparison in the paper shows the results are decent.
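The switch from surface similarity to vector similarity can be sketched as follows. The three-dimensional vectors are toy values standing in for encoder outputs, and the `link` function, its threshold, and the knowledge-base entries are assumptions for illustration rather than part of MADAE.

```python
import math

def jaccard(a, b):
    """Character-level Jaccard similarity, a typical surface measure."""
    sa, sb = set(a), set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def link(mention_vec, kb_vectors, threshold=0.8):
    """Return the best-matching standard name, or None below the threshold."""
    best_name, best_score = None, threshold
    for name, vec in kb_vectors.items():
        score = cosine(mention_vec, vec)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Toy embeddings (assumption): standard forms stored in the knowledge base.
kb_vectors = {
    "Unstable angina": [0.9, 0.1, 0.0],
    "Pneumonia": [0.0, 0.2, 0.9],
}
print(link([0.8, 0.2, 0.1], kb_vectors))  # a differently written mention
print(link([0.0, 1.0, 0.0], kb_vectors))  # nothing similar enough
```

With real encoder outputs, a mention written with homophones or in a different word order can land close to its standard form even when its character overlap with that form is low.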

This method has many extensions, and it brings a large improvement to entity linking. I am very grateful to the paper's first author, Yizhou Zhang, for answering some of my questions.

Summary

In the medical domain and other vertical domains, structured extraction from unstructured data is important and fundamental; it determines how well your downstream tasks perform. If entities are extracted incorrectly, the relations built on them must be wrong, and the resulting knowledge graph is meaningless. There is still a long way to go in structuring text. Time cost also matters a great deal in industry: rules are genuinely useful and fast, but their recall is very low. BERT performs well but is expensive. The methods covered in this article are fairly basic and very applicable in industry. To broaden our approach later, we will certainly give BERT a try; after all, its results are really good.

References

[1] Meta Pattern-driven Attribute Discovery from Massive Text Corpora

[2] TruePIE: Discovering Reliable Patterns in Pattern-Based Information Extraction

[3] A User-Centered Concept Mining System for Query and Document Understanding at Tencent

[4] https://github.com/BangLiu/ConcepT

[5] [ICACI 2018] Chinese Symptom Component Recognition via Bidirectional LSTM-CRF

[6] [ICACI 2018] Using a Knowledge Graph for Hypernymy Detection between Chinese Symptoms

[7] [ACL 2019] Modeling Semantic Compositionality with Sememe Knowledge

[8] [ACL 2017] Improved Word Representation Learning with Sememes

[9] [IEEE 2018] Chinese Medical Concept Normalization by Using Text and Comorbidity Network Embedding

[10] Deep Neural Models for Medical Concept Normalization in User-Generated Texts

边栏推荐

- Win:使用 Shadow Mode 查看远程用户的桌面会话

- Data guard -- theoretical explanation (III)

- PowerShell: use PowerShell behind the proxy server

- 增量备份 ?db full

- Exploration and Practice of Stream Batch Integration in JD

- MATLB | multi micro grid and distributed energy trading

- Phpstrom setting function annotation description

- Timescaledb 2.5.2 release, time series database based on PostgreSQL

- [swagger]-swagger learning

- Limited query of common SQL operations

猜你喜欢

CAM Pytorch

Missile interception -- UPC winter vacation training match

Write a thread pool by hand, and take you to learn the implementation principle of ThreadPoolExecutor thread pool

MySQL regexp: Regular Expression Query

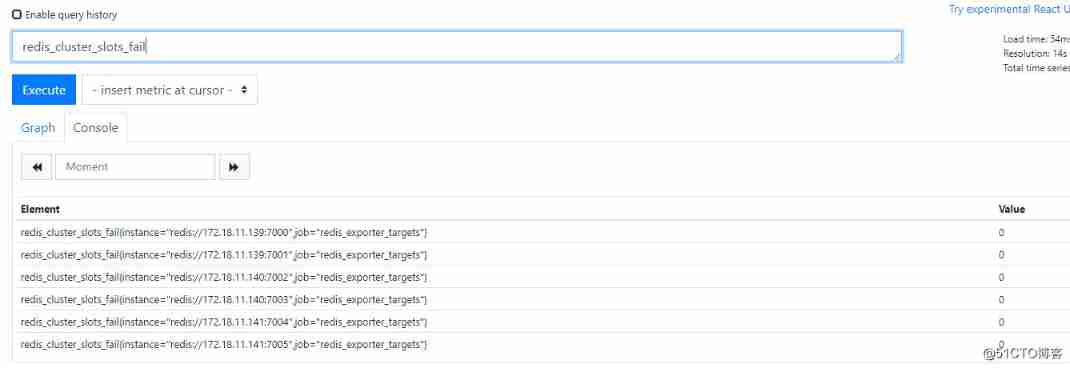

Prometheus monitors the correct posture of redis cluster

A label colorful navigation bar

Application and Optimization Practice of redis in vivo push platform

![[source code attached] Intelligent Recommendation System Based on knowledge map -sylvie rabbit](/img/3e/ab14f3a0ddf31c7176629d891e44b4.png)

[source code attached] Intelligent Recommendation System Based on knowledge map -sylvie rabbit

Binary tree traversal - middle order traversal (golang)

R语言用logistic逻辑回归和AFRIMA、ARIMA时间序列模型预测世界人口

随机推荐

The steering wheel can be turned for one and a half turns. Is there any difference between it and two turns

Use the difference between "Chmod a + X" and "Chmod 755" [closed] - difference between using "Chmod a + X" and "Chmod 755" [closed]

Flutter 2.10 update details

The application and Optimization Practice of redis in vivo push platform is transferred to the end of metadata by

Pgadmin 4 V6.5 release, PostgreSQL open source graphical management tool

Introduce reflow & repaint, and how to optimize it?

Advanced learning of MySQL -- Application -- Introduction

Application and development trend of image recognition technology

Exploration and Practice of Stream Batch Integration in JD

PHP wechat official account development

Security level

Interesting practice of robot programming 14 robot 3D simulation (gazebo+turtlebot3)

Phpstrom setting function annotation description

Lsblk command - check the disk of the system. I don't often use this command, but it's still very easy to use. Onion duck, like, collect, pay attention, wait for your arrival!

Five ways to query MySQL field comments!

phpstrom设置函数注释说明

Practice of tdengine in TCL air conditioning energy management platform

R语言用logistic逻辑回归和AFRIMA、ARIMA时间序列模型预测世界人口

如何做一个炫酷的墨水屏电子钟?

Do you know the eight signs of a team becoming agile?