
Knowledge Graph Construction Techniques: A Survey and Practice

2022-07-05 02:02:00 【Necther】

Preface

A knowledge graph is a special kind of semantic network. It uses entities, relations, and attributes as its basic units to describe, symbolically, the concepts of the physical world and the relationships among them. Why are knowledge graphs so important for information retrieval, recommender systems, and question answering? Let's illustrate with an example.

Suppose that, in a search scenario, we type the following into the search box:

Can I take a shower during postpartum confinement?

This query is a complete question. If the retrieval system has a large question-answer corpus (as in an FAQ scenario) or a sufficiently large article database (with high coverage of article titles), using semantic matching to compute the similarity between the query and FAQ questions or article titles may be a good solution (see our previous article [quote]). Reality is rarely that generous, however: the content to be retrieved is seldom expressed completely. So for this case, what does the retrieval baseline look like?

In a traditional search pipeline, we first segment the query into words, so the original sentence becomes

sit / confinement / can / take a shower / (question particle) / ?

The articles in the database are indexed in advance with an inverted index, and the terms of the segmented query are used to recall and rank articles with the BM25 algorithm.
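A minimal Python sketch of this baseline, assuming the query and documents are already segmented into tokens (the toy documents are made up). It uses the Lucene-style IDF variant `log(1 + (N - df + 0.5) / (df + 0.5))` and the common defaults `k1 = 1.5`, `b = 0.75`. Production search engines differ in many details.

```python
import math
from collections import Counter, defaultdict


class BM25Index:
    """Minimal inverted index with Okapi BM25 scoring."""

    def __init__(self, docs, k1=1.5, b=0.75):
        self.docs = docs                          # list of token lists (already segmented)
        self.k1, self.b = k1, b
        self.avgdl = sum(len(d) for d in docs) / len(docs)
        self.postings = defaultdict(dict)         # term -> {doc_id: term frequency}
        for doc_id, doc in enumerate(docs):
            for term, tf in Counter(doc).items():
                self.postings[term][doc_id] = tf

    def idf(self, term):
        n, df = len(self.docs), len(self.postings.get(term, {}))
        return math.log(1 + (n - df + 0.5) / (df + 0.5))

    def search(self, query_terms, top_k=10):
        scores = defaultdict(float)
        for term in query_terms:
            # recall step: only documents in this term's posting list are scored
            for doc_id, tf in self.postings.get(term, {}).items():
                dl = len(self.docs[doc_id])
                norm = tf + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
                scores[doc_id] += self.idf(term) * tf * (self.k1 + 1) / norm
        # ranking step: highest BM25 score first
        return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
```

With stop words such as "can" already removed, a query like `["confinement", "shower"]` ranks a document containing both terms first, but a document that talks about "bathing after childbirth" without these literal words is never recalled, which is exactly the limitation discussed next.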

Of course, we can build a business dictionary of domain keywords for the scenario; in this example, "postpartum confinement" is a compound noun and should be kept as one term. We can also discard stop words such as "can" and the question particle at indexing time, then adjust term weights by part of speech (for example, weighting disease terms above other words), hoping to improve the relevance of the final article ranking.

So far, however, retrieval still hinges on keywords: an article must literally contain words such as "confinement" and "take a shower". Moreover, removing stop words and verbs causes semantic loss in the query, so "confinement" and "take a shower" become two disconnected concepts.

As humans, however, we understand this query as follows:

Postpartum confinement -> puerperal women

Take a shower -> activities of daily living

The whole sentence can be understood as "Can puerperal women perform daily activities such as bathing?", and we can naturally infer further that an appropriate response to this query might be content such as "daily taboos during the puerperium".

When humans interpret natural language, they think in terms of concepts and clearly distinguish broader (superordinate) concepts from narrower (subordinate) ones. These concepts come from a knowledge system accumulated over many years, from which we can reason, analyze, and make associations; computers lack this. Traditional corpus-based NLP tries to reconstruct a picture of the whole real world from large amounts of text. This approach is easy to implement and works well in some small scenarios, but it exposes the problems described above: the learned knowledge representation is tightly bound to its scenario and hard to transfer, the knowledge lacks systematic organization, and the results cannot be explained.

To solve such problems, the author believes the knowledge graph may be the key breakthrough. Compared with long text, units of knowledge (entities and relations) are easier to organize, extend, and update. Natural language is humanity's abstraction of the world it perceives; for computers to understand natural language, they too need a brain filled with knowledge.

Dingxiangyuan (DXY) is also building its own medical-brain knowledge graph. In future articles we will share technical research notes on knowledge extraction, knowledge representation, knowledge fusion, and knowledge reasoning, as well as our experience deploying them in search, recommendation, and other scenarios. This article shares our research notes on knowledge graph construction.

Concept

As most knowledge graph overviews explain, a knowledge graph is essentially a semantic network: nodes represent entities or concepts, and edges represent the semantic relations between entities (or concepts). At the level of the knowledge system, knowledge graphs fall into three organizational types: Ontology, Taxonomy, and Folksonomy.

These three types can be understood as three degrees of strictness in how a knowledge graph treats hierarchy. An Ontology is a tree structure with the strictest IsA relations between nodes on different levels (for example, Human activities -> sports -> football); such graphs make knowledge reasoning convenient but cannot express the diversity of conceptual relations. A Taxonomy is also a tree structure, but its hierarchy is less strict: nodes are connected by hypernym-hyponym relations. This allows richer conceptual relations but easily introduces ambiguity, which makes effective reasoning difficult. A Folksonomy is non-hierarchical: all nodes are organized by tags. Apart from flexibility, it gives up semantic precision and reasoning ability.

At present, the Taxonomy is the most popular type in the Internet industry: it balances hypernym-hyponym hierarchy and a tag system to some extent, which makes it the most flexible across applications. This article focuses on techniques for building a Taxonomy.

Construction

Data for building a large-scale knowledge base can come from open semi-structured and unstructured sources as well as third-party structured databases. Acquiring data from structured databases is the easiest; most of the work is unifying concepts, aligning entities, and so on. Next comes acquiring knowledge from semi-structured data, for example from Wikipedia:

Encyclopedia entries are a good data source: they provide rich context for entity words, and each entry has a detailed table of contents and clear paragraph divisions:

We can extract the corresponding structured fields from different paragraphs. Of course, some information is buried in the running text, which calls for NLP algorithms such as named entity recognition, relation extraction, attribute extraction, and coreference resolution. The related techniques and model optimizations will be discussed in detail in later articles, so I won't elaborate here.

As mentioned above, a Taxonomy-type knowledge graph is an IsA tree structure. From structured or semi-structured sources we can easily obtain specific relation types, but data on hierarchical hypernym-hyponym relations is relatively scarce. To expand this category of data, or to add relation types missing from the original graph, we need to extract from unstructured text or from the text paragraphs of semi-structured sources.

For example, from the text above we can extract "gout Is-A purine metabolism disorder", "proteoglycan Is-A extracellular matrix", "collagen Is-A extracellular matrix", "chondroitin sulfate Is-A extracellular matrix", and so on.

As knowledge graphs have gained attention, research in this field has grown in recent years, including construction methods for Chinese. We surveyed Taxonomy construction techniques and introduce below some concrete methods for building taxonomies in English and Chinese.

Taxonomy construction techniques

Although we have described the benefits a perfect Taxonomy would bring to downstream NLP applications, we should soberly recognize that research in this field is far from mature. The main difficulties are threefold. First, text data varies enormously in domain, topic, and quality, so hand-designed extraction templates (for example, regular expressions) rarely generalize across domains. Second, because language can be expressed in so many ways, complete extraction is hard, which also greatly affects final accuracy. Third, again because of domain differences, disambiguating the extracted knowledge is a headache.

Below we introduce existing research from academia and industry. These methods use algorithms from different angles to improve accuracy, and they cover several subtasks of Taxonomy construction: hyponym acquisition, hypernym prediction, and taxonomy induction.

Current taxonomy construction from free text can be summarized in two main steps: i) extract Is-A relations from text with pattern-based or distributional methods; ii) induce a complete taxonomy structure from the extracted relations.

Pattern-based Method

Pattern-based methods, as the name suggests, match fixed templates against raw text; the most intuitive form is writing regular expressions. Hearst pioneered this line of work by designing several simple templates such as "[C] such as [E]" and "[E] and other [C]", which extract hypernym-hyponym pairs from sentences matching these patterns. The templates look simple, yet many successful applications are built on them, most famously Microsoft's Probase dataset.
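A minimal Python sketch of two Hearst-style patterns, "X such as A, B and C" and "A, B and other X". As a simplifying assumption, every noun phrase is a single word; a real system would run a noun-phrase chunker first and support many more patterns.

```python
import re

# Simplification: a noun phrase is a single \w+ token.
SUCH_AS = re.compile(r"(\w+) such as ((?:\w+, )*\w+(?: (?:and|or) \w+)?)")
AND_OTHER = re.compile(r"((?:\w+, )*\w+) (?:and|or) other (\w+)")


def split_list(text):
    """'gout, diabetes and obesity' -> ['gout', 'diabetes', 'obesity']"""
    return [t for t in re.split(r",\s*|\s+(?:and|or)\s+", text) if t]


def extract_isa(sentence):
    """Return (hyponym, hypernym) pairs matched by the two patterns."""
    pairs = []
    for m in SUCH_AS.finditer(sentence):          # hypernym comes first
        for hyponym in split_list(m.group(2)):
            pairs.append((hyponym, m.group(1)))
    for m in AND_OTHER.finditer(sentence):        # hypernym comes last
        for hyponym in split_list(m.group(1)):
            pairs.append((hyponym, m.group(2)))
    return pairs
```

For "metabolic diseases such as gout, diabetes and obesity", the sketch yields `("gout", "diseases")`, `("diabetes", "diseases")`, and `("obesity", "diseases")`. Note that it drops the modifier "metabolic", a small example of the incomplete-extraction errors discussed next.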

Precisely because they are simple, pattern-based methods have obvious drawbacks. The biggest is low recall, for a simple reason: natural language offers many ways to say the same thing, while templates are finite and can hardly cover every sentence structure. Second, the flexibility of language also hurts extraction accuracy; common errors include uncommon words, incorrect expressions, incomplete extraction, and ambiguity. Academia has produced many results on improving recall and accuracy within the pattern-based approach.

a) How to improve recall?

The first way to improve recall is pattern generalization: for example, designing templates for different types of entity words, or allowing articles and auxiliary words inside a template to be substituted flexibly.

Other work extends templates automatically. For example, Rion Snow's group, in "Learning syntactic patterns for automatic hypernym discovery", uses dependency paths from syntactic parses to acquire new templates automatically.

However, automatic template generation brings new problems: with a very large corpus, the features of any single template are very sparse (the feature sparsity problem). Another idea is to focus on the features of the patterns themselves and make them more general, which raises recall. Related work includes the star pattern concept proposed by Navigli's group: low-frequency entity words in a sentence are replaced with a wildcard, yielding more general patterns.
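A minimal Python sketch of the star-pattern idea: tokens below a frequency threshold are replaced with `*`, so sentences that share a frame collapse into one general pattern. Whitespace tokenization and the `min_freq` cutoff are assumptions for illustration, not the exact settings of Navigli's group.

```python
from collections import Counter


def star_patterns(sentences, min_freq=2):
    """Replace infrequent tokens with '*' so sentences sharing a frame merge into one pattern."""
    tokenized = [s.split() for s in sentences]
    counts = Counter(tok for toks in tokenized for tok in toks)
    frequent = {tok for tok, c in counts.items() if c >= min_freq}
    return [" ".join(tok if tok in frequent else "*" for tok in toks) for toks in tokenized]
```

"gout is a metabolic disease" and "asthma is a respiratory disease" both become `* is a * disease`. A single generalized pattern then accumulates the evidence that was previously split across two sparse ones.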

Using syntactic dependencies as features follows a similar idea: the PATTY system randomly replaces items in a dependency path with POS tags, ontological types, or entity words, and then selects the final patterns.

The second approach is iterative extraction. Its main assumption is that erroneous relations caused by language ambiguity or semantic drift are extracted repeatedly by a few overly general patterns, so a verification mechanism may be able to filter them out. For example, "Semantic Class Learning from the Web with Hyponym Pattern Linkage Graphs" designs a "doubly-anchored" pattern and extracts with a bootstrapping loop.
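A minimal Python sketch of bootstrapping with a doubly-anchored pattern of the form "<class> such as <known member> and <new member>". Both the class name and a known member must match, which guards against drift. Only this one pattern direction and single-word members are handled here; both are simplifying assumptions.

```python
import re


def bootstrap(corpus, class_name, seed, max_iters=5):
    """Grow a set of class members from one seed with '<class> such as <anchor> and (new)'."""
    members = {seed}
    frontier = [seed]
    for _ in range(max_iters):
        new_frontier = []
        for anchor in frontier:
            pattern = re.compile(
                rf"{re.escape(class_name)} such as {re.escape(anchor)} and (\w+)"
            )
            for sentence in corpus:
                for found in pattern.findall(sentence):
                    if found not in members:
                        members.add(found)
                        new_frontier.append(found)   # newly found members become anchors
        if not new_frontier:                         # nothing new: the loop has converged
            break
        frontier = new_frontier
    return members
```

Starting from "France" with the class "countries", the loop picks up "Italy" and then "Spain" through chained sentences, while a sentence such as "cities such as France and Paris" is ignored because its class anchor does not match.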

The third approach is hypernym inference. Another factor limiting the recall of pattern-based extraction is that templates operate on complete sentences, so the hypernym and the hyponym must appear in the same sentence. A natural idea is to exploit transitivity: if y is a hypernym of x and x is very similar to x', the hypernym relation can be transferred to x'. Some work has trained an HMM to make such cross-sentence predictions. Beyond relations between nouns, some studies make syntactic inferences from the modifiers of hyponyms; for example, a "grizzly bear" is also a kind of "bear".

The work "Revisiting Taxonomy Induction over Wikipedia" uses the head word of a phrase as a feature and builds a heuristic extraction pipeline on top of it.
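A minimal Python sketch of the head-word heuristic for English noun phrases. It assumes the head is the last token, or the last token before "of" in phrases like "capital of France". This is a common rule of thumb, not the exact heuristic of the cited paper.

```python
def head_word(phrase):
    """Return the (assumed) syntactic head of an English noun phrase."""
    tokens = phrase.lower().split()
    if "of" in tokens:                       # "capital of France" -> head is "capital"
        tokens = tokens[: tokens.index("of")]
    return tokens[-1] if tokens else None


def infer_isa(phrase):
    """Propose (phrase, head) as an Is-A pair, e.g. ('grizzly bear', 'bear')."""
    head = head_word(phrase)
    if head is None or head == phrase.lower():   # a bare head word gives no new relation
        return None
    return (phrase, head)
```

`infer_isa("grizzly bear")` returns `("grizzly bear", "bear")`, while `infer_isa("bear")` returns `None`.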

b) How to improve accuracy?

For a problem like graph construction, how do we evaluate accuracy? The most common approaches are statistical. Given a candidate is-a pair (x, y), the KnowItAll system uses search engine hit counts to compute the pointwise mutual information (PMI) between x and y; Probase uses likelihood to express the probability that y is a hypernym of x and keeps the most probable candidate. Other approaches verify predictions with Bayesian classifiers, external data, or expert evaluation.
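A minimal Python sketch of textbook PMI computed from raw counts, where `total` stands for the number of documents or hits in the collection. KnowItAll's actual scoring is a hit-count variant that differs in details, so treat this as the general idea rather than that system's formula.

```python
import math


def pmi(count_xy, count_x, count_y, total):
    """Pointwise mutual information log2( p(x,y) / (p(x) * p(y)) ) from raw counts."""
    if count_xy == 0:
        return float("-inf")          # never co-occur: no evidence of association
    p_xy = count_xy / total
    p_x = count_x / total
    p_y = count_y / total
    return math.log2(p_xy / (p_x * p_y))
```

If x and y each occur in 100 of 10,000 documents and co-occur in 10, PMI is log2(10), about 3.32. If they co-occur only once, exactly as often as chance predicts, PMI is 0.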

Beyond the extraction step itself, most research improves accuracy by choosing a verification metric and then building a classifier that is optimized iteratively. Accuracy from templates alone is still generally low, however, and most work that introduces classifiers does so in hybrid pattern + distributional solutions. Next we introduce distributional extraction.

Distributional Method

In NLP, distributional approaches include learned representations such as word vectors and sentence vectors. A major advantage of distributional representations is that they turn discrete NLP data into continuous, computable quantities. This idea carries over to graph construction: computability means word vectors stand in relations to one another, and these relations can include the hypernym-hyponym relation of Is-A pairs. Another advantage is that distributional methods can predict Is-A relations directly instead of extracting them. The main steps are: i) obtain a seed dataset (key terms); ii) use unsupervised or supervised models to obtain more candidate Is-A pairs.

a) Key term extraction

There are many ways to obtain a seed dataset. The most intuitive is to design strict patterns, which keeps key-term accuracy high and works well with large corpora; with little data, however, there may be too few samples, and the downstream models overfit during training. Besides pattern extraction, some studies pre-extract with sequence labeling models or NER tools and then filter the results with a few rules.

Some studies on building taxonomies for vertical domains add domain-specific post-processing (domain filtering), mostly thresholds on statistics such as TF, TF-IDF, or other domain-relevance scores. Other studies weight sentences during selection and extract key terms from sentences with high domain weight.
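A minimal Python sketch of TF-IDF-based domain filtering. Each term keeps its best TF-IDF score over the domain documents, and terms below a threshold are dropped. The toy documents and the threshold value are made up; real systems tune the score and cutoff per domain.

```python
import math
from collections import Counter


def tfidf_filter(docs, threshold):
    """docs: list of token lists. Keep terms whose maximum TF-IDF over docs reaches threshold."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))   # document frequency
    best = {}
    for doc in docs:
        for term, count in Counter(doc).items():
            score = (count / len(doc)) * math.log(n / df[term])
            best[term] = max(best.get(term, 0.0), score)
    return {term for term, score in best.items() if score >= threshold}
```

A word like "the" that appears in every document gets IDF 0 and is always filtered, whereas a domain-specific word like "insulin" survives.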

After obtaining key terms, the next step is to expand new relation pairs from these seeds.

b) Unsupervised models

The first direction is clustering, where the core research question is which distance metric to use. Simple metrics such as cosine, Jaccard, and Jensen-Shannon divergence are worth trying; slightly more complex ones compare the features and weights of an entity pair (x, y), such as the LIN measure:

$$\text{Lin}(x, y) = \frac{\sum_{f \in F_x \cap F_y} \left( w_x(f) + w_y(f) \right)}{\sum_{f \in F_x} w_x(f) + \sum_{f \in F_y} w_y(f)}$$

where F_x and F_y are the extracted feature sets of x and y, and w_x(f) is the weight of feature f for x.
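A minimal Python sketch of the LIN measure, Lin(x, y) = sum over shared features f of (w_x(f) + w_y(f)), divided by the total feature weight of x plus that of y. Features are assumed to be given as `{feature: weight}` dictionaries, for example context words weighted by PMI.

```python
def lin(fx, fy):
    """LIN similarity between two weighted feature dicts (feature -> weight)."""
    shared = fx.keys() & fy.keys()
    numerator = sum(fx[f] + fy[f] for f in shared)          # weight of shared features
    denominator = sum(fx.values()) + sum(fy.values())       # total weight of both words
    return numerator / denominator if denominator else 0.0
```

LIN is symmetric, Lin(x, y) = Lin(y, x), so on its own it measures relatedness but cannot tell which word is the hypernym.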

Some researchers also noticed an asymmetry. In Wikipedia entry pages, for example, a hyponym appears in only some of the contexts that describe its hypernym, whereas the hypernym may appear in all contexts of the hyponym. To reflect this asymmetry, the distance measure was adjusted accordingly, for example with WeedsPrec:

$$\text{WeedsPrec}(x \rightarrow y) = \frac{\sum_{f \in F_x \cap F_y} w_x(f)}{\sum_{f \in F_x} w_x(f)}$$

This assumption is called the Distributional Inclusion Hypothesis (DIH); similar measures include WeedsRec, BalAPInc, ClarkeDE, cosWeeds, and invCL.
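A minimal Python sketch of WeedsPrec, the share of x's feature weight that also appears among y's features, with x as the candidate hyponym. The toy feature dictionaries are made up to show the asymmetry that the DIH relies on.

```python
def weeds_prec(fx, fy):
    """WeedsPrec(x -> y): fraction of x's feature weight covered by y's features."""
    total = sum(fx.values())
    covered = sum(w for f, w in fx.items() if f in fy)
    return covered / total if total else 0.0
```

With `dog = {"bark": 1, "eat": 1}` and `animal = {"bark": 1, "eat": 1, "fly": 1, "swim": 1}`, `weeds_prec(dog, animal)` is 1.0 but `weeds_prec(animal, dog)` is 0.5. The higher direction suggests "dog" is the hyponym.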

Besides the distance measure, another question is how to obtain the features; common choices include co-occurrence frequency in a text corpus, pointwise mutual information, and LMI (local mutual information).

c) Supervised models

After key term extraction and clustering, accuracy can be improved further with a supervised model, framed either as classification or as ranking.

On the classification side, the most popular solution is to pre-train a language model such as Word2Vec, map each candidate pair (x, y) to its two vectors, concatenate them, and train a binary classifier such as an SVM. Many later studies use this method as a baseline. It is simple and effective, but recent work has pointed out its problems: in practice the classifier learns semantic relatedness rather than the hypernym-hyponym relation we want, which means it overfits very easily. Alternatives take the difference of vector x and vector y, or combine sum, element-wise product, and similar operations into a composite feature.
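A minimal Python sketch of the pair features described above, built from the word vectors v(x) and v(y). Plain lists stand in for real embeddings; the resulting vectors can be passed to any binary classifier, for example scikit-learn's `LinearSVC`.

```python
def pair_features(vx, vy, mode="concat"):
    """Build a feature vector for a candidate pair (x, y) from word vectors v(x), v(y)."""
    if len(vx) != len(vy):
        raise ValueError("vectors must have the same dimension")
    diff = [b - a for a, b in zip(vx, vy)]        # v(y) - v(x)
    if mode == "concat":
        return list(vx) + list(vy)                 # the classic baseline
    if mode == "diff":
        return diff
    if mode == "combined":
        total = [a + b for a, b in zip(vx, vy)]    # element-wise sum
        product = [a * b for a, b in zip(vx, vy)]  # element-wise product
        return diff + total + product
    raise ValueError(f"unknown mode: {mode}")
```

The `concat` mode lets a classifier score y on its own ("is y a typical hypernym?"), which is one way the relatedness overfitting shows up. `diff` and `combined` force the features to depend on how x and y relate to each other.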

Later researchers argued that word vector training is strongly shaped by linguistic context, which makes it hard to capture the hypernym-hyponym relation in word embeddings. So on top of the word vectors, they built an additional embedding layer for x and y to represent the relation; experiments showed clear metric gains for graph construction in specific domains.

Besides classifiers, hypernym generation is another option, and currently the best-performing one. Roughly, it builds a piecewise linear projection model that maps vector x to a point, and selects as hypernym the word whose vector y lies closest to that point, also using ranking techniques. We tried related work for Chinese; see the following sections for details.

Taxonomy Induction

The previous section introduced techniques for extracting Is-A pairs from text. The last step is merging these relations into a complete graph. Most methods follow an incremental learning pattern: initialize a seed taxonomy, then add new Is-A data to the graph. Research in this direction uses evaluation metrics as the criterion for inserting new data.

A common approach treats construction as a clustering problem and merges similar subtrees by clustering. For example, "Unsupervised Learning of an IS-A Taxonomy from a Limited Domain-Specific Corpus" uses K-Medoids clustering to find the lowest common ancestor.

Graph algorithms are another direction, since a Taxonomy is naturally a graph. For example, "A Semi-Supervised Method to Learn and Construct Taxonomies using the Web" proposes the following idea: nodes with in-degree 0 are probably at the top of the taxonomy, nodes with out-degree 0 are probably bottom-level instances, and finding the longest path from root to instance yields a more reasonable structure.
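A minimal Python sketch of this idea. Edges point from hypernym to hyponym, roots are nodes with in-degree 0, leaves are nodes with out-degree 0, and dynamic programming over a topological order finds the longest root-to-leaf path. It assumes the graph is already acyclic; how the cited paper scores and prunes paths is not reproduced here.

```python
from collections import defaultdict, deque


def longest_root_to_leaf_paths(edges):
    """edges: (hypernym, hyponym) pairs of a DAG. Returns {leaf: longest path from a root}."""
    children = defaultdict(list)
    indegree = defaultdict(int)
    nodes = set()
    for parent, child in edges:
        children[parent].append(child)
        indegree[child] += 1
        nodes.update((parent, child))

    roots = sorted(n for n in nodes if indegree[n] == 0)   # likely top-level concepts
    leaves = sorted(n for n in nodes if not children[n])   # likely instances

    best = {r: [r] for r in roots}      # best[n] = longest path (node list) from a root to n
    pending = {n: indegree[n] for n in nodes}
    queue = deque(roots)
    while queue:                        # Kahn's algorithm gives a topological order
        node = queue.popleft()
        for child in children[node]:
            candidate = best[node] + [child]
            if len(candidate) > len(best.get(child, [])):
                best[child] = candidate
            pending[child] -= 1
            if pending[child] == 0:
                queue.append(child)
    return {leaf: best[leaf] for leaf in leaves}
```

With the edges entity->organism, organism->animal, entity->animal, and animal->dog, the path kept for "dog" goes through "organism". The longer chain preserves the intermediate level that the shortcut edge entity->animal would skip.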

Other work attaches domain-related weights to the edges of the graph and then searches for the optimal structure with an optimization algorithm, such as the optimal branching algorithm.

The final construction step is cleaning the taxonomy by removing erroneous Is-A relations. The first key property is that a taxonomy's hierarchy contains no cycles: when Probase was built, removing cycles cleaned up about 74K erroneous Is-A pairs.
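A minimal Python sketch of cycle removal, assuming the simple rule "drop every back edge found by depth-first search". This guarantees an acyclic result, but which edge of a cycle is the wrong one depends on traversal order. Probase's actual cleaning procedure is not described here, and a real system would prefer to drop the lowest-confidence edge in each cycle.

```python
from collections import defaultdict


def remove_cycles(edges):
    """Drop DFS back edges so the remaining hypernym graph is acyclic."""
    graph = defaultdict(list)
    for parent, child in edges:
        graph[parent].append(child)

    WHITE, GRAY, BLACK = 0, 1, 2        # unvisited / on the current DFS path / finished
    color = defaultdict(int)
    removed = set()

    def visit(node):
        color[node] = GRAY
        for child in graph[node]:
            if color[child] == GRAY:
                removed.add((node, child))  # back edge: it closes a cycle
            elif color[child] == WHITE:
                visit(child)
        color[node] = BLACK

    for node in list(graph):
        if color[node] == WHITE:
            visit(node)
    kept = [e for e in edges if e not in removed]
    return kept, removed
```

For the edges animal->dog, dog->animal, and animal->cat, the sketch removes dog->animal and keeps the other two.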

Another major problem is the ambiguity of entity words, for which there is still no particularly effective solution. In automated graph construction systems especially, using the "transitivity" mentioned above to expand data often brings a greater risk of dirty data. For example, consider two Is-A pairs:

(Albert Einstein, is-a, professor)

(professor, is-a, position)

But we cannot infer by transitivity that

(Albert Einstein, is-a, position)
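A minimal Python sketch of naive transitive closure over Is-A pairs. It shows how the wrong triple is produced mechanically: "professor" means a person in the first pair and a job title in the second, and the closure cannot tell the two senses apart.

```python
def transitive_closure(pairs):
    """Naive closure: keep adding (a, d) whenever (a, b) and (b, d) are both present."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return closure
```

Running it on the two pairs above adds ("Albert Einstein", "position"), the error the text warns about.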

Some work tries to learn multiple senses for an entity word, but having several candidate senses does not tell you which one is right. Most of the time, disambiguating entity words requires more contextual evidence, which means you first need rich background knowledge; yet that knowledge is exactly what we are trying to build with the graph, a chicken-and-egg problem. Academically, building a fully disambiguated taxonomy still has a long way to go. Fortunately, applications offer many other tricks, such as collecting users' search and click logs and analyzing UGC (user-generated content), to obtain information that helps with disambiguation and feed it back into the knowledge graph.

Above we gave a brief overview of Taxonomy construction techniques. Next, let's look at complete graph construction pipelines for English and Chinese.

Construction of Probase

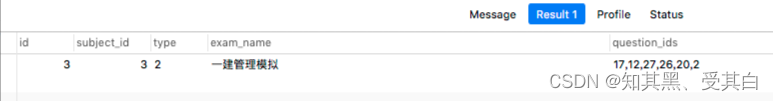

Starting with Microsoft's Probase, graph construction has emphasized the probabilistic taxonomy: knowledge is not black and white but holds with a certain probability. Retaining this uncertainty reduces the impact of noise in the data and helps subsequent knowledge computation. In Probase, each hypernym-hyponym pair keeps its co-occurrence frequency as a measure of certainty, as follows:

For example, company and google were associated 7,816 times during graph construction, and this value can later be used to compute confidence.
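A minimal Python sketch of turning co-occurrence counts n(c, i) into conditional probabilities by simple normalization, in the spirit of Probase's typicality scores. Only the 7,816 count comes from the text; the other counts are hypothetical, and Probase's published scoring includes refinements not shown here.

```python
from collections import defaultdict


def probase_probabilities(counts):
    """counts: {(concept, instance): co-occurrence frequency}.
    Returns P(concept | instance) and P(instance | concept) by normalizing the counts."""
    per_instance = defaultdict(int)
    per_concept = defaultdict(int)
    for (concept, instance), n in counts.items():
        per_instance[instance] += n
        per_concept[concept] += n
    p_concept_given_instance = {(c, i): n / per_instance[i] for (c, i), n in counts.items()}
    p_instance_given_concept = {(c, i): n / per_concept[c] for (c, i), n in counts.items()}
    return p_concept_given_instance, p_instance_given_concept
```

With the hypothetical counts {(company, google): 7816, (search engine, google): 2184, (company, ibm): 5000}, P(company | google) is 0.7816, so "google" is more likely to be read as a company than as a search engine.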

Probase's construction process can be understood in two steps. The first uses Hearst patterns (see the figure below) to obtain candidate hypernym-hyponym noun-phrase pairs from the raw corpus.

The candidate pairs are then merged into a tree according to their parent-child relations. The whole process is relatively simple:

Candidate entity pairs (such as (company, IBM), (company, Nokia), etc.) are mined from the original sentences to form small subtrees;

then the subtrees are merged into a complete graph according to horizontal or vertical merging rules. The later part of the paper gives the complete construction procedure.

Subtrees are not merged blindly: a similarity score Sim(Child(T1), Child(T2)) decides whether to merge them. The similarity computation is simple; Jaccard similarity is enough.

Suppose there are three subtrees :

A = {Microsoft, IBM, HP}

B = {Microsoft, IBM, Intel}

C = {Microsoft, IBM, HP, EMC, Intel, Google, Apple}

This gives J(A, B) = 2/4 = 0.5 and J(A, C) = 3/7 ≈ 0.43. A threshold is set during construction; if it is 0.5, A and B can be merged horizontally, while A and C cannot.
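A minimal Python sketch of the horizontal-merge test on the example sets, with the threshold of 0.5 from the text. Following the example, a pair counts as mergeable when the similarity is greater than or equal to the threshold.

```python
def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two collections."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def can_merge_horizontally(children1, children2, threshold=0.5):
    """Merge two subtrees when their child sets are similar enough."""
    return jaccard(children1, children2) >= threshold


A = {"Microsoft", "IBM", "HP"}
B = {"Microsoft", "IBM", "Intel"}
C = {"Microsoft", "IBM", "HP", "EMC", "Intel", "Google", "Apple"}
```

Here `jaccard(A, B)` is 0.5 and `jaccard(A, C)` is about 0.43, so only A and B are merged.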

Problems in building Chinese knowledge graphs

Overall, the Probase method is simple: it offers a framework-level idea for construction, but many problems remain in the details. The paper "Learning Semantic Hierarchies via Word Embeddings" discusses them in detail. For Chinese in particular, the first problem is that Chinese grammar is more flexible than English: Chinese Hearst-style lexical patterns achieve very high precision, but their recall is very low compared with other methods, so their F1 is also poor. Manually enumerating every sentence structure is difficult and inefficient, as the comparison of several methods in the following figure shows:

On the other hand, pattern-only methods are also weak at semantic hierarchy construction:

Simple construction methods like Probase's easily miss relations or introduce wrong ones.

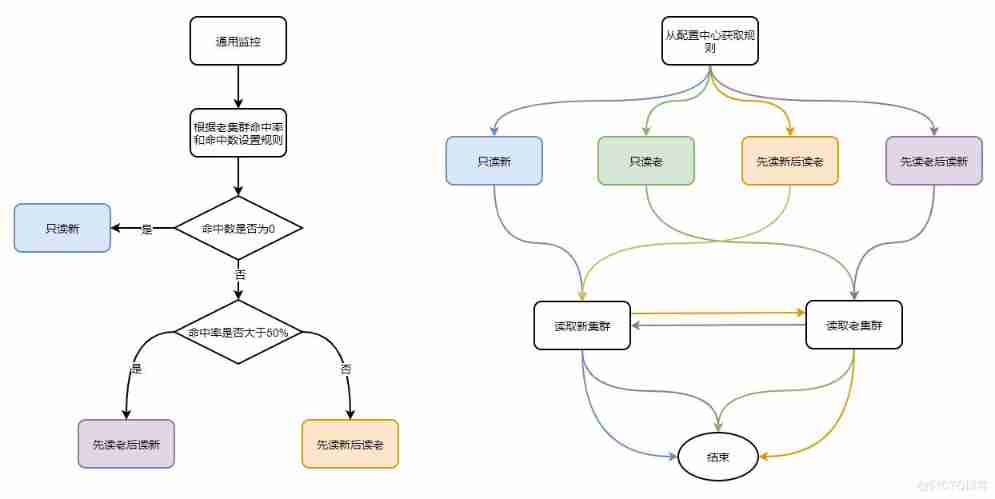

A hybrid framework combining projection models and patterns

Besides Hearst patterns, current mainstream construction schemes commonly use distributional representations, for example computing PMI or Standard IR Average Precision between hypernym and hyponym, or computing the offset between their word vectors. Building on the work of the Harbin Institute of Technology team, a team from East China Normal University developed a Taxonomy construction method that combines word vectors, projection models, and Hearst patterns. This section introduces the work discussed in "Predicting hypernym-hyponym relations for Chinese taxonomy learning".

Problem definition

First, based on the background knowledge and data of the vertical domain, we build a basic knowledge graph framework called a Taxonomy, denoted T = (V, R), where V is the set of entities (nodes) and R is the set of relations.

Next, the is-a relations sampled from T are also denoted R. Taking their transitive closure gives the relation set R*, whose relations can be regarded as highly reliable.

Taking Baidu Baike as an example, the set of entities obtained from the external data source is denoted E; for each element x in E, its parent categories are denoted Cat(x). The whole crawled dataset U then consists of the candidate pairs formed by each entity x and its categories Cat(x).

The whole problem can then be defined as follows: learn an algorithm F from R* that identifies the is-a relations among the unlabeled data in U and integrates them into T.

Basic framework

The paper "Learning Semantic Hierarchies via Word Embeddings" exploits the word-vector property v(king) - v(queen) ≈ v(man) - v(woman) to help predict hierarchical hypernym-hyponym relations between entities during construction (for example, diabetes and glucose metabolism disorder, or gastric ulcer and gastrointestinal disease). The present work keeps the use of word vectors and the idea of training projection models in the word vector space. The overall framework is as follows:

The process works as follows. First, extract some initial data from the existing Taxonomy, map it into the word embedding space, and train a piecewise linear projection model there, which gives a prior representation of the space. Then obtain new relation pairs from the data source, and use model prediction plus rule-based filtering to select a new batch of relations that updates the training set. This new batch refines the projection model again, and the cycle repeats.

Model definition

As described above, the first step is to train reliable word vectors on a large corpus. The authors used the Skip-gram model on a corpus of 1 billion words; the method is not repeated here.

With the word vectors in hand, for a given word x, the conditional probability of word u can be expressed as:

$$p(u \mid x) = \frac{\exp\left(v(u)^\top v(x)\right)}{\sum_{w \in V} \exp\left(v(w)^\top v(x)\right)}$$

where v(·) maps a word to its vector and V is the vocabulary built from the whole corpus.
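A minimal Python sketch of this full-softmax probability with toy two-dimensional vectors (made up for illustration). For simplicity it uses a single vector per word as in the formula; real Skip-gram implementations keep separate input and output embeddings and approximate the softmax with negative sampling or a hierarchical softmax.

```python
import math


def skipgram_prob(u, x, vectors):
    """p(u | x) under a full softmax over the vocabulary; vectors: word -> list of floats."""
    def dot(a, b):
        return sum(p * q for p, q in zip(a, b))

    scores = {w: dot(v, vectors[x]) for w, v in vectors.items()}
    m = max(scores.values())                          # subtract the max for numerical stability
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[u] - m) / z
```

With `{"diabetes": [1.0, 0.0], "disease": [0.9, 0.1], "bank": [0.0, 1.0]}`, "disease" receives a higher probability given "diabetes" than "bank" does, and the probabilities over the vocabulary sum to 1.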

The second step builds a projection model, which is also simple: for a relation pair (x_i, y_i), the model assumes that v(x_i) can be mapped close to v(y_i) through a transformation matrix M and an offset vector b, i.e., M v(x_i) + b ≈ v(y_i).

Drawing on earlier work, the authors also found experimentally that a single projection model cannot learn the mappings in this space well. In an open-domain dataset, for example, the spatial representation of knowledge about living organisms differs too much from that of knowledge about finance and economics for one model to cover both. The remedy is to build several models that handle them separately. The authors did not introduce prior knowledge for this, however; they used K-means to find the clusters directly:

After clustering, animals and professions fall into different clusters, and a separate projection model can be built for each cluster.
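A minimal Python sketch of this clustering step. Following Fu et al.'s "Learning Semantic Hierarchies via Word Embeddings", we assume the clustered objects are the offset vectors v(y) - v(x) of training pairs; what exactly this paper clusters is not stated in the text above. The deterministic farthest-point initialization and the toy offsets are also assumptions.

```python
import math


def kmeans(points, k, iters=20):
    """Plain K-means with deterministic farthest-point initialization."""
    centers = [list(points[0])]
    while len(centers) < k:
        # next center: the point farthest from all centers chosen so far
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(list(far))
    labels = [0] * len(points)
    for _ in range(iters):
        # assignment step: each point joins its nearest center
        labels = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]
        # update step: move each center to the mean of its members
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers
```

If the offsets of (dog, animal) and (cat, animal) point one way while those of (teacher, profession) and (doctor, profession) point another, the two groups land in different clusters, and each cluster then gets its own projection model.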

The optimization objective is intuitive: after the vector of each x is transformed, it should be as close as possible to the vector of the corresponding y:

$$\min_{M_k, b_k} \sum_{(x_i, y_i) \in C_k} \left\lVert M_k v(x_i) + b_k - v(y_i) \right\rVert^2$$

where k indexes the clusters, C_k is the set of relation pairs in cluster k, and the model is optimized with stochastic gradient descent (SGD).
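A minimal pure-Python sketch of fitting one cluster's projection (M_k, b_k) with plain SGD on the squared error ||M v(x) + b - v(y)||². The learning rate, epoch count, and identity initialization are assumptions for illustration, and the toy pairs are generated from a known shift so that the fit can be checked.

```python
import random


def train_projection(pairs, dim, lr=0.05, epochs=500, seed=0):
    """Fit M, b minimizing sum ||M v(x) + b - v(y)||^2 over (v(x), v(y)) pairs with SGD."""
    rng = random.Random(seed)
    M = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]  # start from identity
    b = [0.0] * dim
    data = list(pairs)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            err = [sum(M[i][j] * x[j] for j in range(dim)) + b[i] - y[i] for i in range(dim)]
            # gradients of the squared error: dL/dM = 2 * err * x^T, dL/db = 2 * err
            for i in range(dim):
                for j in range(dim):
                    M[i][j] -= lr * 2 * err[i] * x[j]
                b[i] -= lr * 2 * err[i]
    return M, b


def project(M, b, x):
    """Apply the learned projection: M v(x) + b."""
    return [sum(M[i][j] * x[j] for j in range(len(x))) + b[i] for i in range(len(b))]
```

At prediction time, a candidate y is accepted as a hypernym of x when v(y) lies close to `project(M_k, b_k, v(x))` for the cluster k that x belongs to. The distance threshold would be another tuned parameter.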

Training methods

The system is trained iteratively. The core idea is to expand the training set R(t) (t = 1, 2, ..., T) dynamically through clustering and the projection models, retraining the models repeatedly so that they generalize better and better to the target data source.

First, the initialization step defines some notation:

The loop then proceeds as follows:

Step 1.

Step 2.

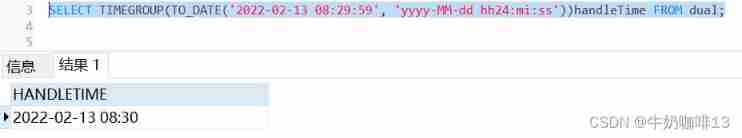

After model prediction, the candidates are further filtered with patterns; usable Chinese patterns include:

After filtering, we obtain a set of highly reliable relations:

Note that f here is not a simple pattern match; the three patterns above, "Is-A", "Such-As", and "Co-Hyponym", are analyzed separately:

Based on this analysis, an algorithm decides which relations in U(t)- are moved into U(t)+. For each relation in the candidate set U(t)-, two credibility scores, positive and negative, are defined. The positive score is defined as:

where a, in the range (0, 1), is a tuning factor and gamma is a smoothing coefficient; the paper uses the empirical values a = 0.5 and gamma = 1.

A high negative score NS(t) means that xi and yi are more likely to be co-hyponyms, and therefore less likely to stand in an Is-A relation.
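The exact definitions of the positive and negative scores are not reproduced in this text. The functions below are assumed forms that only follow the description above:

- a weights Is-A evidence against Such-As evidence;
- gamma smooths zero counts;
- NS grows with co-hyponym evidence and shrinks with hypernymy evidence.

Treat them as placeholders for the paper's formulas.

```python
def positive_score(n_isa, n_such_as, max_isa, max_such_as, a=0.5, gamma=1.0):
    """ASSUMED form: a-weighted mix of Is-A and Such-As template counts, each
    normalised by the largest count in the candidate set (gamma avoids division by zero)."""
    return a * n_isa / (max_isa + gamma) + (1 - a) * n_such_as / (max_such_as + gamma)

def negative_score(n_co_hyponym, n_isa, n_such_as, gamma=1.0):
    """ASSUMED form: smoothed ratio of co-hyponym evidence to hypernymy evidence."""
    return (n_co_hyponym + gamma) / (n_isa + n_such_as + gamma)

print(positive_score(1, 1, 1, 1))  # 0.5
print(negative_score(2, 0, 0))     # 3.0: mostly co-hyponym evidence
print(negative_score(0, 1, 1))     # about 0.333: mostly hypernymy evidence
```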

Next, selecting U(t)+ is formulated as a constrained optimization problem. The total positive score of the selected relations is maximized while their total negative score is kept under a threshold.

Here, m is the size of U(t)+ and theta is the constraint threshold. This problem is a special case of the budgeted maximum coverage problem, which is NP-hard, so a greedy algorithm is used to solve it approximately.

Finally, besides updating U(t)+, the algorithm merges U(t)+ into the existing training data.
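A simple greedy heuristic for the selection step is sketched below. It assumes non-negative negative scores and a budget of theta on their sum, and it ranks candidates by positive score. The textbook greedy for budgeted maximum coverage ranks by gain per unit cost instead, so this is an illustrative variant, not necessarily the paper's algorithm.

```python
def greedy_select(candidates, m, theta):
    """Pick at most m pairs with a large total positive score while the summed
    negative score stays <= theta.

    candidates: list of (pair, ps, ns) tuples, with ns >= 0 assumed.
    """
    selected, ns_total = [], 0.0
    # best positive score first; skip any pair that would exceed the budget
    for pair, ps, ns in sorted(candidates, key=lambda c: c[1], reverse=True):
        if len(selected) == m:
            break
        if ns_total + ns <= theta:
            selected.append(pair)
            ns_total += ns
    return selected

cands = [("a", 0.9, 2.0), ("b", 0.8, 0.5), ("c", 0.7, 0.25), ("d", 0.1, 0.25)]
print(greedy_select(cands, m=2, theta=1.0))  # ['b', 'c']: "a" alone exceeds the budget
```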

Step 3.

Step 4.

Model prediction
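The text does not detail the prediction step. A minimal sketch follows, assuming the piecewise-projection scheme of Fu et al. (reference 6):

1. Assign the candidate pair to the cluster whose centroid is nearest to its offset y - x.
2. Project x with that cluster's matrix.
3. Accept the pair if the projection lands within a distance threshold delta of y.

Here delta is a hypothetical hyperparameter that would be tuned on held-out data.

```python
import numpy as np

def predict_is_a(x, y, centroids, projections, delta):
    """Decide whether y is a hypernym of x with a piecewise linear projection.

    centroids:   (k, d) cluster centres of offset vectors y - x
    projections: list of k projection matrices Phi_k
    delta:       hypothetical acceptance threshold on ||Phi_k x - y||
    """
    # cluster whose centroid is nearest to this pair's offset
    k = int(((centroids - (y - x)) ** 2).sum(axis=1).argmin())
    return bool(np.linalg.norm(projections[k] @ x - y) < delta)
```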

Summary

The knowledge graph construction method surveyed here is one of the few works targeting Chinese. Compared with English, both the pattern design and the data sources differ greatly. The second half of the paper also discusses problems found along the way.

First, the system relies on clustering, and during parameter tuning the results turned out to be insensitive to the number of cluster centers K. For small K the differences are minor, with K around 10 giving roughly the best results, but if K is set too large the final results become very poor.

However, the authors built an open-domain knowledge graph, whereas we work on a vertical domain (healthcare). In practice, we instead tried splitting the data into sub-datasets manually based on prior knowledge and training a separate mapping model on each.

Second, some hypernym-hyponym errors appear in specific cases. For example, "herbs" is identified as the parent of "traditional Chinese medicine". Most traditional Chinese medicines are indeed made from herbs, but the relation is unreasonable from a taxonomic point of view. Errors like this may stem from how things are expressed in Chinese in the data source, and they are hard to fix without external knowledge.

In our own practice, we design targeted patterns for specific data sources. For example, in the book "Practical Diagnostics", common sentence patterns for describing attribute classifications are:

Depending on *, it is divided into a1, a2, a3

x has * types: y1, y2, y3, etc.

Observing the data manually at an early stage helps improve both the recall and the precision of information extraction.

In addition, some word vectors turned out to be poorly trained. In the paper's experiments, for example, the vectors of "plant" and "monocotyledon" are very similar, possibly because some words are too infrequent in the corpus. This is a fundamental weakness, and better pre-training for Chinese is needed to improve the results.
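Directed patterns like these translate naturally into regular expressions. The Chinese forms below are hypothetical reconstructions of the two sentence patterns, since the book's exact wording is not given here. The example sentences are made up.

```python
import re

# Hypothetical Chinese forms of the two sentence patterns:
#   "根据*的不同，可分为a1、a2、a3"  (depending on *, it is divided into a1, a2, a3)
#   "x有*种类型：y1、y2、y3等"       (x has * types: y1, y2, y3, etc.)
DIVIDED_BY = re.compile(r"根据(?P<criterion>[^，。]+?)(?:的)?不同[，,](?:可)?分为(?P<items>[^。]+)")
HAS_TYPES = re.compile(r"(?P<parent>[^，。：:]+?)有[^，。：:]+?种类型[：:](?P<items>[^。]+?)等?(?:。|$)")
ITEM_SEP = re.compile(r"[、，,]")

def split_items(text):
    """Split an enumeration such as 'a1、a2、a3' into its items."""
    return [item.strip() for item in ITEM_SEP.split(text) if item.strip()]

def extract_classification(sentence):
    """Return the parent (or criterion) plus child concepts if a pattern matches, else None."""
    m = HAS_TYPES.search(sentence)
    if m:
        return {"parent": m.group("parent"), "children": split_items(m.group("items"))}
    m = DIVIDED_BY.search(sentence)
    if m:
        # this pattern names the classification criterion; the parent concept
        # is implicit and has to be taken from the surrounding context
        return {"criterion": m.group("criterion"), "children": split_items(m.group("items"))}
    return None

print(extract_classification("贫血有三种类型：缺铁性贫血、巨幼细胞贫血、再生障碍性贫血等。"))
# {'parent': '贫血', 'children': ['缺铁性贫血', '巨幼细胞贫血', '再生障碍性贫血']}
```

Each extracted (parent, child) pair can be fed in as a high-precision Is-A candidate. The first pattern additionally needs the parent concept resolved from the surrounding context.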

References

1. Web Scale Taxonomy Cleansing

2. Probase: A Probabilistic Taxonomy for Text Understanding

3. An Inference Approach to Basic Level of Categorization

4. Improving Hypernymy Detection with an Integrated Path-based and Distributional Method

5. A Short Survey on Taxonomy Learning from Text Corpora: Issues, Resources and Recent Advances

6. Learning Semantic Hierarchies via Word Embeddings

7. Chinese Hypernym-Hyponym Extraction from User Generated Categories

8. Learning Fine-grained Relations from Chinese User Generated Categories

9. Predicting Hypernym-Hyponym Relations for Chinese Taxonomy Learning

10. Unsupervised Learning of an IS-A Taxonomy from a Limited Domain-Specific Corpus

11. Supervised Distributional Hypernym Discovery via Domain Adaptation

12. Semantic Class Learning from the Web with Hyponym Pattern Linkage Graphs

13. What Is This, Anyway: Automatic Hypernym Discovery

14. Learning Word-Class Lattices for Definition and Hypernym Extraction

15. Taxonomy Construction Using Syntactic Contextual Evidence

16. Learning Syntactic Patterns for Automatic Hypernym Discovery

17. A Semi-Supervised Method to Learn and Construct Taxonomies Using the Web

18. Entity Linking with a Knowledge Base: Issues, Techniques, and Solutions

19. Incorporating Trustiness and Collective Synonym/Contrastive Evidence into Taxonomy Construction

20. Semantic Class Learning from the Web with Hyponym Pattern Linkage Graphs

21. https://www.shangyexinzhi.com/Article/details/id-23935/

22. In Depth | Xu Bo: Construction of Encyclopedic Knowledge Graphs (Zhihu)
