
How to safely eat apples on the edge of a cliff? DeepMind & OpenAI give an answer with 3D safe reinforcement learning

2022-07-05 01:15:00 【QbitAl】

By Xing Zao, from Aofeisi

QbitAI | WeChat official account QbitAI

DeepMind and OpenAI have teamed up this time to show off a safe reinforcement learning model.

They extended ReQueST, a safe RL model previously demonstrated in 2D, to more practical 3D scenes.

ReQueST was originally applied only to two-dimensional tasks such as navigation and 2D racing, where it learns from safe trajectories provided by humans how to keep the agent from "harming itself".

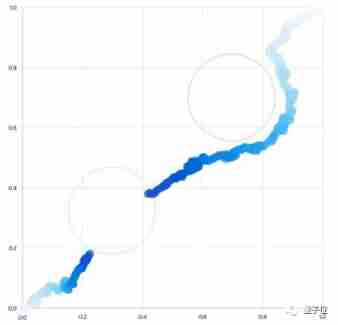

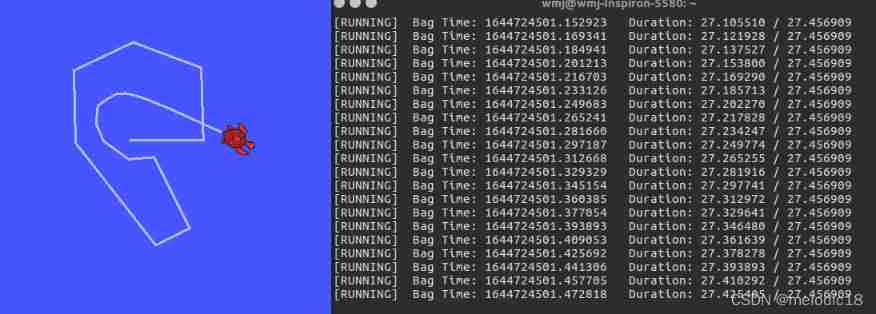

△ Figure: the original ReQueST two-dimensional navigation task (avoid the red area) and racing task

In practice, however, problems in 3D environments are more complex. For example, robots performing tasks need to avoid obstacles as they work, and self-driving cars need to avoid driving into ditches.

So the questions are these: can ReQueST, which was built for 2D tasks, work in a complex 3D environment? And can the quality and quantity of safe trajectory data provided by humans in a 3D environment meet the needs of training?

To address these two questions, DeepMind and OpenAI came up with a more complex dynamics model and a reward model that incorporates human feedback, and successfully migrated ReQueST to a 3D environment, taking it one step closer to real-world application.

Safety also improved: in the experiments, the number of unsafe behaviors by the agent dropped to one tenth of the baseline's.

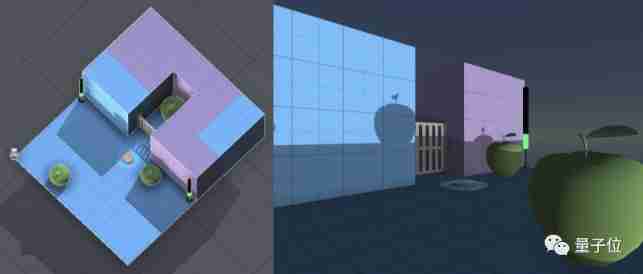

What does this look like in practice? Let's take a look in a simulated 3D environment.

In the scene above, there is a cliff on the upper left side of the room. The agent has to wait until the green lights on both sides of the room go out, then try to eat three apples.

One of the apples can only be reached by pressing a button that opens a door.

In the video, the agent presses the button, opens the gate, and eats the locked-away apple, all in one smooth sequence.

Let's see how it does this.

How the 3D version of the safe reinforcement learning model is trained

Building on ReQueST, what DeepMind and OpenAI needed to solve was a dynamics model and a reward model that work in 3D scenes.

Let's first look at the role each of them plays in the overall process.

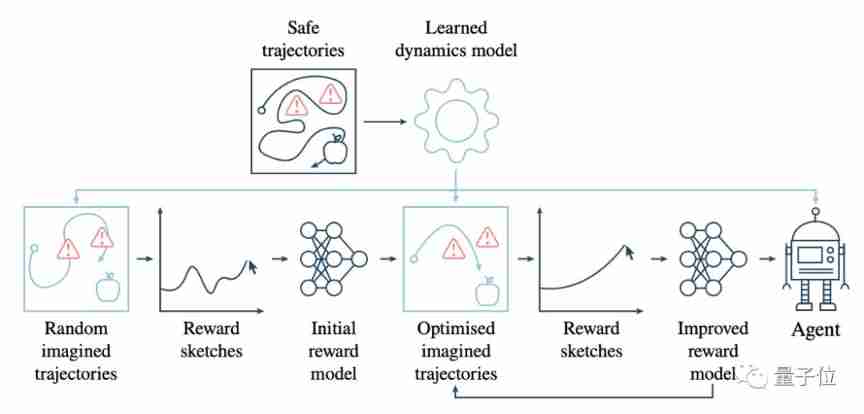

The figure above shows the training process of the new model on the apple-eating task.

The light blue boxes mark the steps that involve the dynamics model. Starting from the top row, humans provide some safe trajectories that avoid the red danger zones.

The dynamics model is trained on these trajectories and then used to generate some random trajectories.

Moving to the bottom row, humans review these random trajectories and give feedback in the form of reward sketches. The reward sketches are then used to start training the reward model, and both models are refined continually.
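To make the data flow of this pipeline concrete, here is a minimal sketch of a single pass through it. It assumes a toy one-dimensional world in place of the 3D scene, and every name in it (`human_safe_transitions`, `fit_dynamics`, `random_model_rollouts`, `human_reward_sketch`, `fit_reward_model`) is hypothetical. The "human" steps are simulated by simple functions, and the models are deliberately trivial, so this is not the networks used in the work itself.

```python
import random

TRUE_STEP = 0.1  # hidden effect of one action in this toy 1-D world (assumption)

def human_safe_transitions(n_traj, length, rng):
    """Stand-in for human safe trajectories: (state, action, next_state) triples."""
    data = []
    for _ in range(n_traj):
        x = 0.0
        for _ in range(length):
            a = rng.choice([-1, 1])
            nxt = x + TRUE_STEP * a
            data.append((x, a, nxt))
            x = nxt
    return data

def fit_dynamics(transitions):
    """Least-squares estimate of k in next_state = state + k * action."""
    num = sum(a * (nxt - x) for x, a, nxt in transitions)
    den = sum(a * a for _, a, _ in transitions)
    return num / den

def random_model_rollouts(k, n_traj, length, rng):
    """Generate random trajectories by rolling the learned model forward."""
    rollouts = []
    for _ in range(n_traj):
        x, traj = 0.0, []
        for _ in range(length):
            x = x + k * rng.choice([-1, 1])
            traj.append(x)
        rollouts.append(traj)
    return rollouts

def human_reward_sketch(traj, apple_at=0.3, radius=0.15):
    """Stand-in annotator: +1 near the (hypothetical) apple, -1 elsewhere."""
    return [1.0 if abs(x - apple_at) < radius else -1.0 for x in traj]

def fit_reward_model(rollouts, sketches):
    """Toy reward model: mean sketched reward per state, bucketed to one decimal."""
    sums, counts = {}, {}
    for traj, sketch in zip(rollouts, sketches):
        for x, r in zip(traj, sketch):
            key = round(x, 1)
            sums[key] = sums.get(key, 0.0) + r
            counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sums}

# Top row: safe trajectories -> dynamics model -> random trajectories.
rng = random.Random(0)
k = fit_dynamics(human_safe_transitions(n_traj=20, length=10, rng=rng))
rollouts = random_model_rollouts(k, n_traj=50, length=10, rng=rng)

# Bottom row: reward sketches on those trajectories -> reward model.
sketches = [human_reward_sketch(t) for t in rollouts]
reward_model = fit_reward_model(rollouts, sketches)
```

The point of the sketch is the ordering: the reward model is trained on trajectories generated by the learned dynamics model, not on the human demonstrations directly.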

Next, we look at the two models in turn.

This time, the dynamics model used by DeepMind and OpenAI is an LSTM that predicts future image observations from the action sequence and past image observations.

The model is similar to the one in ReQueST, except that the encoder network and the deconvolutional decoder network are somewhat larger. It is trained with a mean squared error loss between the real image observations and the predicted ones.

Most importantly, the loss is computed on predictions of multiple future steps from each time step, so that the dynamics model stays consistent over long rollouts.
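The idea of a loss over multi-step predictions can be sketched as follows. This is an illustration under simplifying assumptions: observations are single numbers standing in for images, the model is any function `model_step(obs, action)`, and the names `multi_step_mse`, `true_step` and `biased_step` are hypothetical.

```python
def multi_step_mse(model_step, observations, actions, horizon):
    """Mean squared error over predictions unrolled up to `horizon` steps
    ahead from every starting time step. Each prediction is fed back into
    the model, so errors are allowed to compound as in a long rollout."""
    total, count = 0.0, 0
    for start in range(len(actions)):
        obs = observations[start]
        for t in range(start, min(start + horizon, len(actions))):
            obs = model_step(obs, actions[t])           # predicted next observation
            total += (obs - observations[t + 1]) ** 2   # compare with the real one
            count += 1
    return total / count

def true_step(obs, action):
    """Toy ground-truth dynamics (assumption): each action moves the state by 0.1."""
    return obs + 0.1 * action

def biased_step(obs, action):
    """A slightly wrong model that overestimates the effect of each action."""
    return obs + 0.12 * action

# Build a real trajectory from the toy ground truth.
actions = [1, 1, 1, 1]
observations = [0.0]
for a in actions:
    observations.append(true_step(observations[-1], a))

one_step_loss = multi_step_mse(biased_step, observations, actions, horizon=1)
four_step_loss = multi_step_mse(biased_step, observations, actions, horizon=4)
perfect_loss = multi_step_mse(true_step, observations, actions, horizon=4)
```

With the biased model, the four-step loss is larger than the one-step loss because the per-step error accumulates. A one-step loss alone would hide that drift, which is why training on multi-step predictions encourages consistency over long rollouts.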

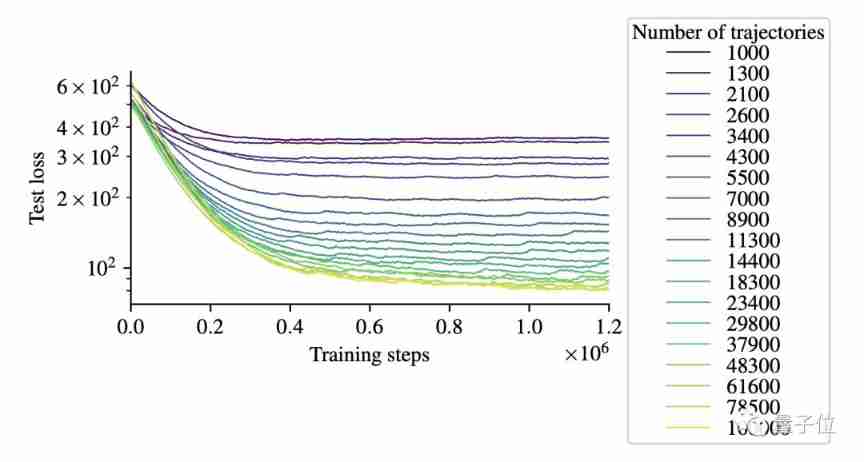

The resulting training curves are shown in the figure above. The horizontal axis is the number of training steps, the vertical axis is the loss, and curves of different colors correspond to different orders of magnitude of trajectories.

For the reward model, DeepMind and OpenAI trained an 11-layer residual convolutional network with 2.2 million parameters.

The input is a 96x72 RGB image, the output is a scalar reward prediction, and the loss is again the mean squared error.

Human feedback in the form of reward sketches also plays a very important role in this network.

Put simply, a reward sketch is a reward value assigned by hand.

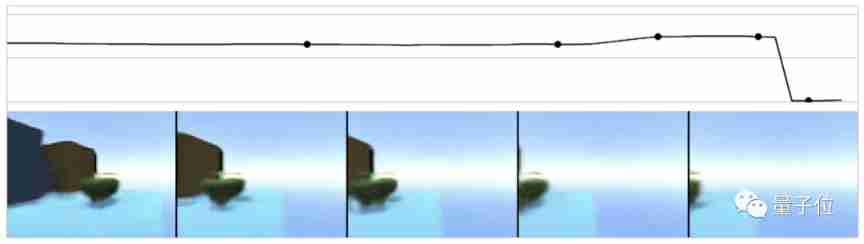

As shown in the figure above, the upper part is the sketch drawn by a human. In the lower part, when an apple is present in the predicted observation the reward value is 1, and when the apple fades out of sight the reward becomes -1.

These values are used to adjust the reward model network.
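As a small worked example of how such labels could drive training, the sketch below turns a per-frame "apple in view" annotation into reward labels of 1 and -1 and computes the mean squared error against a set of predictions. The function names and the prediction values are made up for illustration; a real reward sketch is drawn over predicted image observations rather than booleans.

```python
def sketch_to_labels(apple_visible):
    """Per-frame reward labels: 1 while an apple is in view, -1 otherwise."""
    return [1.0 if visible else -1.0 for visible in apple_visible]

def mean_squared_error(predictions, labels):
    """Average squared difference between predicted and sketched rewards."""
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

# The apple is in view for two frames, then drops out of sight.
apple_visible = [True, True, False, False]
labels = sketch_to_labels(apple_visible)

# Hypothetical scalar outputs of a reward model for the same four frames.
predictions = [0.8, 0.5, -0.2, -1.0]

loss = mean_squared_error(predictions, labels)
# (0.2**2 + 0.5**2 + 0.8**2 + 0.0**2) / 4 = 0.2325
```

Minimizing this loss pushes the predicted reward toward 1 on frames where the apple is visible and toward -1 once it is out of view.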

How well does the 3D version of the safe reinforcement learning model work?

Next, let's see how the new model compares with other models and with the baseline.

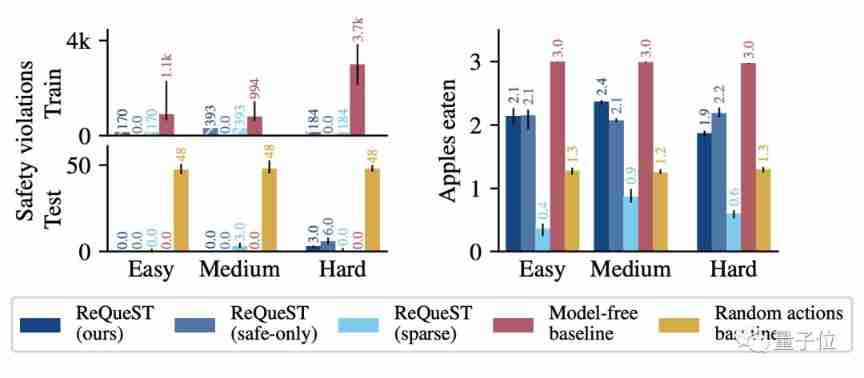

The results are shown in the figure above, where the difficulty levels correspond to different scene sizes.

The left side of the figure shows the number of times the agent fell off the cliff, and the right side shows the number of apples eaten.

Note that in the legend, ReQueST (ours) denotes the results obtained when the training set includes unsafe trajectories provided by humans.

ReQueST (safe-only) denotes the results obtained when the training set contains only safe trajectories.

In addition, ReQueST (sparse) denotes the results of training without reward sketches.

As the figure shows, although the model-free baseline ate all the apples, it did so at a large cost to safety.

The ReQueST agent ate two of the three apples on average and fell off the cliff only one tenth as often as the baseline, an outstanding result.

Turning to the reward models, ReQueST trained with reward sketches and ReQueST trained with sparse labels perform very differently.

ReQueST trained with sparse labels ate less than one apple on average.

It appears that DeepMind and OpenAI have indeed made improvements on both of these points.

Reference links:

[1] https://www.arxiv-vanity.com/papers/2201.08102/

[2] https://deepmind.com/blog/article/learning-human-objectives-by-evaluating-hypothetical-behaviours

边栏推荐

- 微信小程序;胡言乱语生成器

- ROS command line tool

- Innovation leads the direction. Huawei Smart Life launches new products in the whole scene

- Global and Chinese markets for industrial X-ray testing equipment 2022-2028: Research Report on technology, participants, trends, market size and share

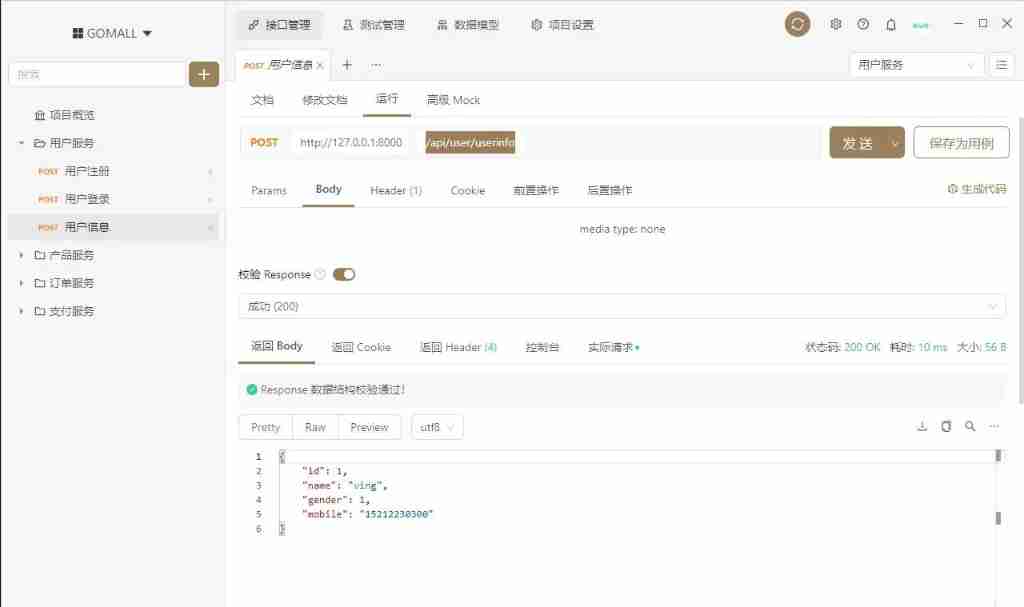

- 【大型电商项目开发】性能压测-性能监控-堆内存与垃圾回收-39

- SAP ui5 application development tutorial 107 - trial version of SAP ui5 overflow toolbar container control introduction

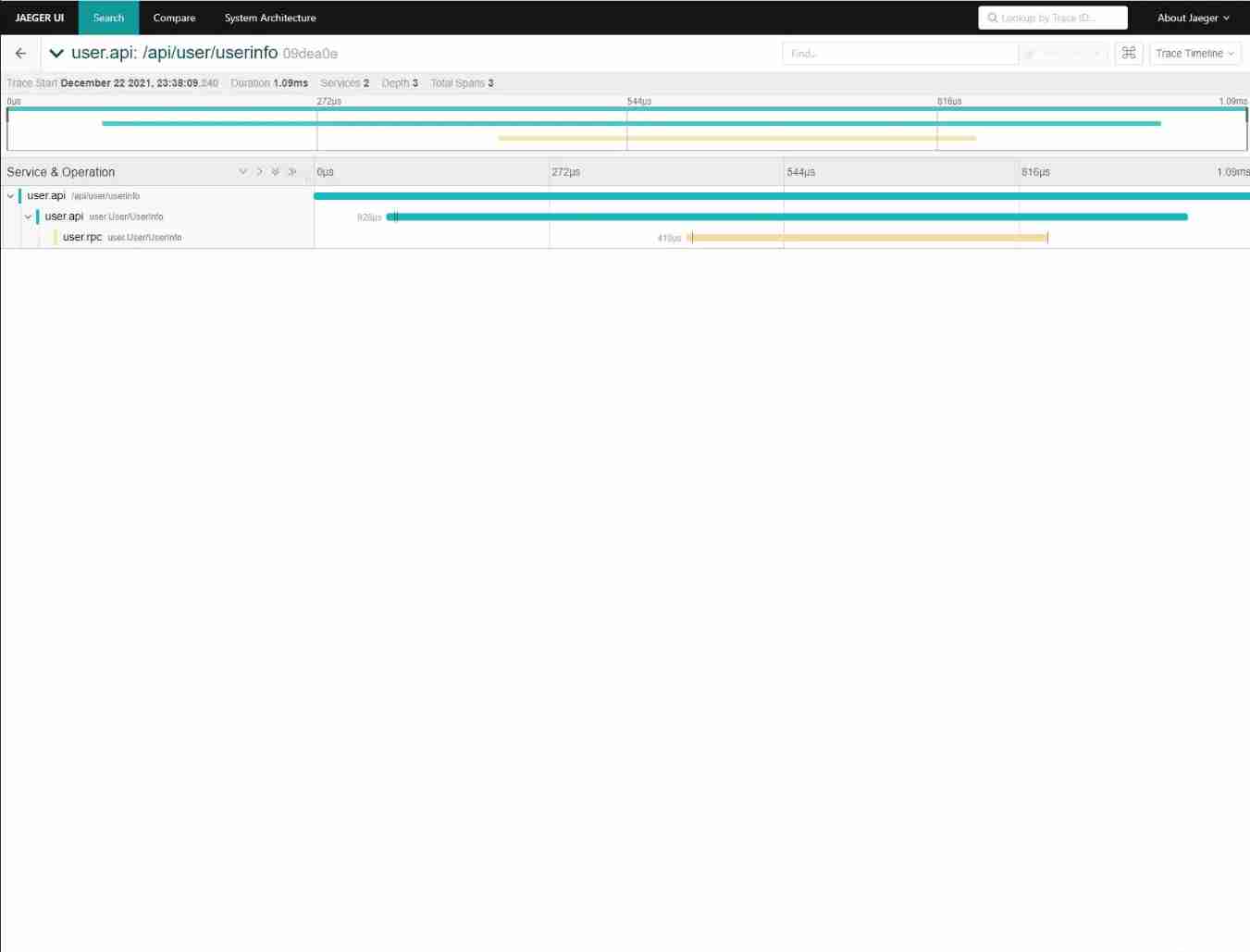

- Take you ten days to easily complete the go micro service series (IX. link tracking)

- 如果消费互联网比喻成「湖泊」的话,产业互联网则是广阔的「海洋」

- 微信小程序:独立后台带分销功能月老办事处交友盲盒

- 实战模拟│JWT 登录认证

猜你喜欢

Take you ten days to easily complete the go micro service series (IX. link tracking)

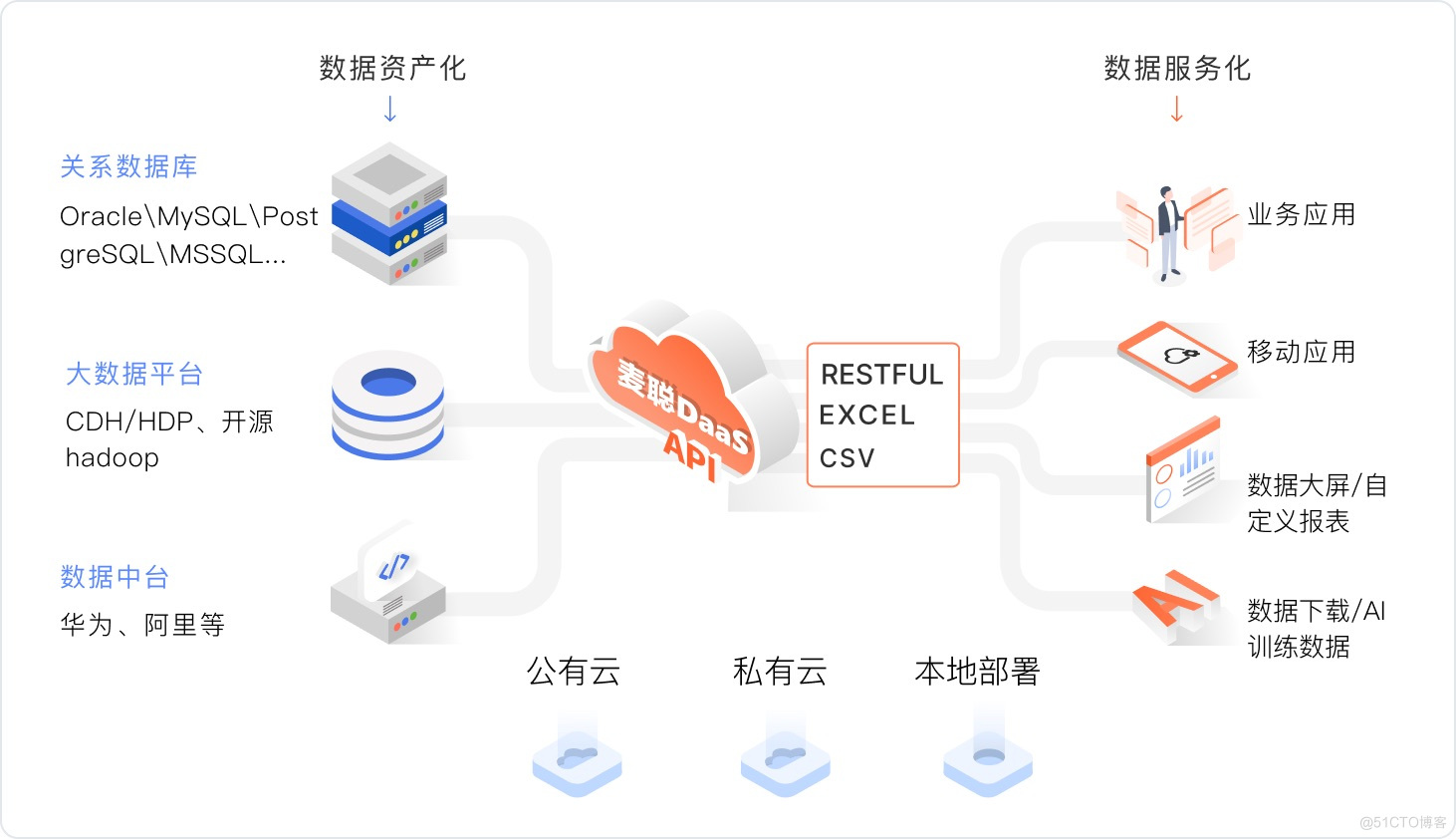

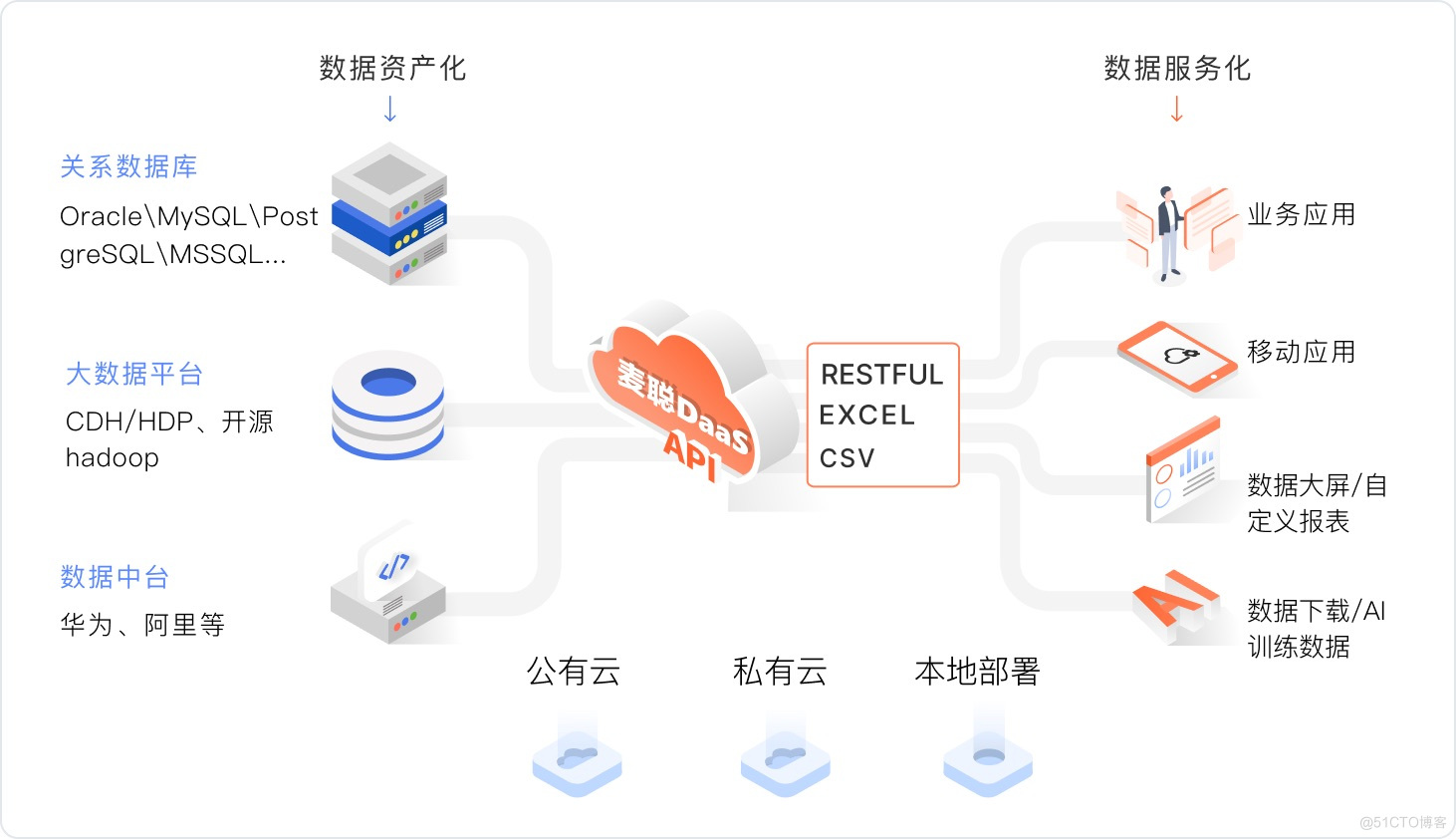

Huawei employs millions of data governance experts! The 100 billion market behind it deserves attention

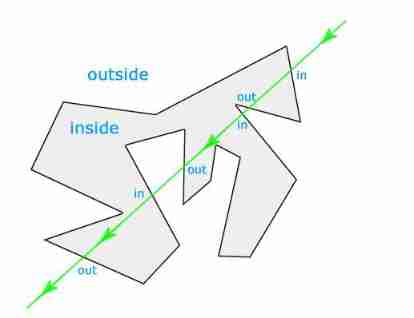

JS implementation determines whether the point is within the polygon range

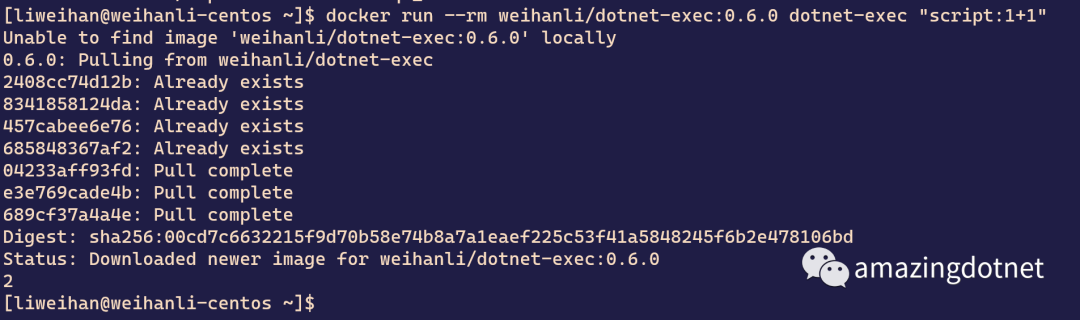

dotnet-exec 0.6.0 released

ROS command line tool

Expose testing outsourcing companies. You may have heard such a voice about outsourcing

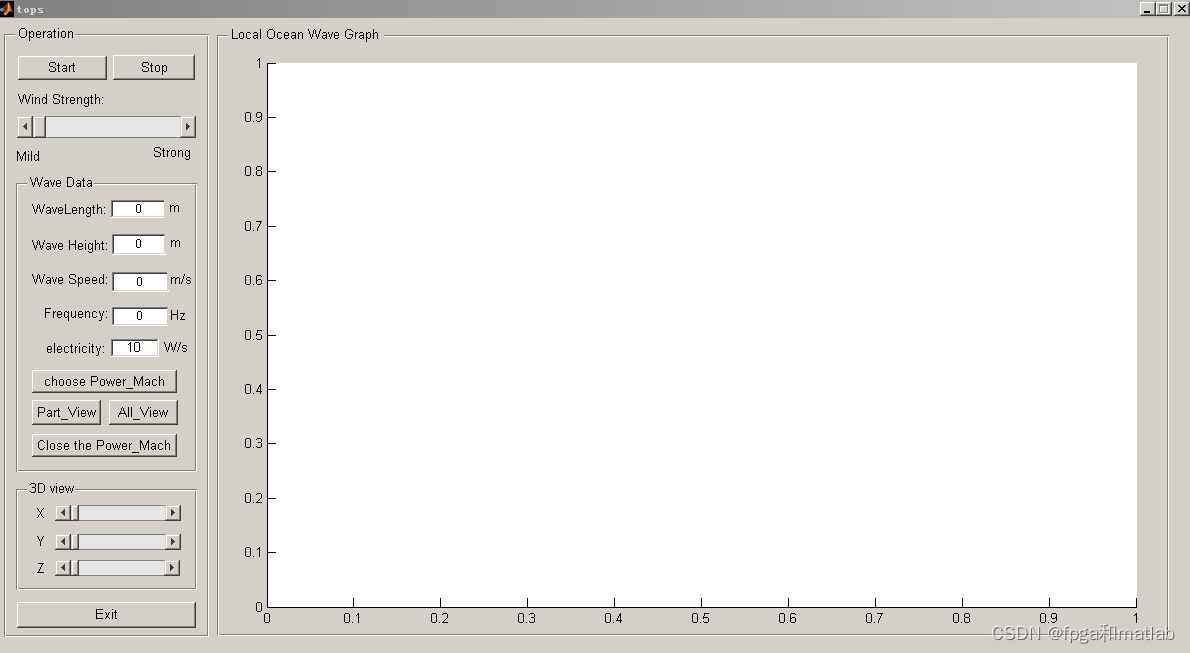

【海浪建模3】三维随机真实海浪建模以及海浪发电机建模matlab仿真

华为百万聘请数据治理专家!背后的千亿市场值得关注

Take you ten days to easily complete the go micro service series (IX. link tracking)

Inventory of more than 17 typical security incidents in January 2022

随机推荐

LeetCode周赛 + AcWing周赛(T4/T3)分析对比

多模输入事件分发机制详解

There is a new Post-00 exam king in the testing department. I really can't do it in my old age. I have

Les phénomènes de « salaire inversé » et de « remplacement des diplômés » indiquent que l'industrie des tests a...

Database postragesql lock management

To sort out messy header files, I use include what you use

Talking about JVM 4: class loading mechanism

La jeunesse sans rancune de Xi Murong

POAP:NFT的采用入口?

Inventory of more than 17 typical security incidents in January 2022

dotnet-exec 0.6.0 released

[pure tone hearing test] pure tone hearing test system based on MATLAB

Discrete mathematics: propositional symbolization of predicate logic

Playwright之录制

Are you still writing the TS type code

Digital DP template

Database postragesql client authentication

Remote control service

The performance of major mainstream programming languages is PK, and the results are unexpected

Database performance optimization tool