Importing tables with Sqoop

2022-07-02 06:36:00 【lucky乐琪】

#------ hdfs -> mysql ------

# Create the target table in MySQL before exporting
create table sqp_order(
  create_date date,
  user_name varchar(20),
  total_volume decimal(10,2)
);

sqoop export \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_order \
-m 1 \
--export-dir /kb12/hive/orderinfo \
--fields-terminated-by '\t'
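Export parse failures usually mean that the files under --export-dir do not split into the three columns of sqp_order with the given delimiter. The sketch below checks the field count before running the export. It assumes the part files have been copied locally, for example with `hdfs dfs -cat '/kb12/hive/orderinfo/*' > /tmp/orderinfo.txt`. The function name `check_fields` is hypothetical.

```bash
#!/usr/bin/env bash
# check_fields FILE EXPECTED_COUNT [SEPARATOR]
# Prints every line whose field count differs from EXPECTED_COUNT
# (with its line number) and returns 1 if at least one such line exists.
# SEPARATOR defaults to a tab, matching --fields-terminated-by '\t'.
check_fields() {
  local file=$1 expected=$2 sep=${3:-$'\t'}
  awk -F "$sep" -v n="$expected" '
    NF != n { printf "line %d: %d fields\n", NR, NF; bad = 1 }
    END { exit bad }
  ' "$file"
}
```

Example usage: `check_fields /tmp/orderinfo.txt 3 && echo "layout matches sqp_order"`.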

# If the export fails with a ClassNotFoundException for the generated class sqp_order,
# locate the class and jar that Sqoop generated for the table:
[root@singlelucky ~]# find / -name 'sqp_order.class'
/tmp/sqoop-root/compile/e24fd4ec30c24475569ee1569d0873a7/sqp_order.class
/tmp/sqoop-root/compile/04c79abd0daca46e7a2a116716d2eb1f/sqp_order.class
[root@singlelucky ~]# cd /tmp/sqoop-root/compile/
[root@singlelucky compile]# ls
04c79abd0daca46e7a2a116716d2eb1f e24fd4ec30c24475569ee1569d0873a7
[root@singlelucky compile]# vim /etc/profile.d/myenv.sh
[root@singlelucky compile]# cd 04c79abd0daca46e7a2a116716d2eb1f
[root@singlelucky 04c79abd0daca46e7a2a116716d2eb1f]# ls
sqp_order.class sqp_order.jar sqp_order.java
# Append sqp_order.jar to the JDK CLASSPATH (in /etc/profile.d/myenv.sh).
# This entry expects sqp_order.jar to be in $JAVA_HOME/lib, so copy it there first;
# then reload the file with: source /etc/profile.d/myenv.sh
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/sqp_order.jar
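The listing above shows two hash-named directories for the same table. Each code-generation run writes a new one, so a jar copied once can go stale. The sketch below picks the most recently modified `TABLE.jar` under the compile directory instead. It assumes GNU `find` (for `-printf`), and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# latest_codegen_jar COMPILE_DIR TABLE
# Prints the newest TABLE.jar found below COMPILE_DIR, or returns 1 if none exists.
latest_codegen_jar() {
  local dir=$1 table=$2 jar
  # "%T@ %p" = modification time in epoch seconds, then the path; newest sorts last.
  jar=$(find "$dir" -type f -name "$table.jar" -printf '%T@ %p\n' \
        | LC_ALL=C sort -n | tail -n 1 | cut -d' ' -f2-)
  [ -n "$jar" ] || return 1
  printf '%s\n' "$jar"
}
```

Example usage: `export CLASSPATH=$CLASSPATH:$(latest_codegen_jar /tmp/sqoop-root/compile sqp_order)`.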

#------ mysql -> hdfs ------

# Full import
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_order \
-m 1 \
--delete-target-dir \
--target-dir /kb12/sqoop/m2h_all \
--fields-terminated-by '\t' \
--lines-terminated-by '\n'
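A single missing trailing `\` silently cuts a long Sqoop command short. The sketch below keeps the options of the full import in a bash array, one option per element, so that mistake cannot happen. It swaps `--password` for Sqoop's `-P`, which prompts for the password on the console. `DRY_RUN` and `run_full_import` are hypothetical names.

```bash
#!/usr/bin/env bash
# run_full_import TABLE TARGET_DIR
# Runs the full import above; with DRY_RUN=1 it only prints the command.
run_full_import() {
  local table=$1 target_dir=$2
  # -P: Sqoop asks for the password instead of taking it on the command line.
  local -a args=(
    import
    --connect jdbc:mysql://singlelucky:3306/test
    --username root -P
    --table "$table"
    -m 1
    --delete-target-dir
    --target-dir "$target_dir"
    --fields-terminated-by '\t'
    --lines-terminated-by '\n'
  )
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%q ' sqoop "${args[@]}"
    printf '\n'
  else
    sqoop "${args[@]}"
  fi
}
```

Example usage: `DRY_RUN=1 run_full_import sqp_order /kb12/sqoop/m2h_all` shows the command, and dropping `DRY_RUN=1` runs it.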

# Column pruning: import only the listed columns
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_order \
--columns user_name,total_volume \
-m 1 \
--delete-target-dir \
--target-dir /kb12/sqoop/m2h_colcut \
--fields-terminated-by '\t' \
--lines-terminated-by '\n'

# Row + column pruning with several mappers. Sqoop imports are map-only, so -m sets the
# number of map tasks (not reducers); --split-by names the column used to divide the rows among them
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_order \
--columns user_name,total_volume \
--where "total_volume>=200" \
-m 2 \
--split-by user_name \
--delete-target-dir \
--target-dir /kb12/sqoop/m2h_rowcut \
--fields-terminated-by ',' \
--lines-terminated-by '\n'
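With `-m 2`, Sqoop first queries the MIN and MAX of the --split-by column and then gives each mapper its own range. The sketch below is a simplified model of that step for an integer column such as stuId; Sqoop's own splitter differs in details. Text columns like user_name are split differently. In some Sqoop versions (1.4.7, as far as I know), they are refused unless `-Dorg.apache.sqoop.splitter.allow_text_splitter=true` is passed. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# split_conditions COLUMN MIN MAX MAPPERS
# Prints one WHERE predicate per mapper, cutting [MIN, MAX] into roughly
# equal ranges; the last range is closed so that MAX itself is included.
split_conditions() {
  local col=$1 min=$2 max=$3 m=$4 i lo hi
  local size=$(( (max - min) / m ))
  (( size > 0 )) || size=1
  lo=$min
  for (( i = 1; i <= m; i++ )); do
    if (( i == m || lo + size >= max )); then
      echo "$col >= $lo AND $col <= $max"
      return
    fi
    hi=$(( lo + size ))
    echo "$col >= $lo AND $col < $hi"
    lo=$hi
  done
}
```

Example usage: `split_conditions stuId 1 100 2` prints one range per mapper, `stuId >= 1 AND stuId < 50` and `stuId >= 50 AND stuId <= 100`.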

# Free-form query import: the query must contain $CONDITIONS (written \$CONDITIONS inside
# double quotes); with more than one mapper, --split-by is required
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--query "select user_name,total_volume from sqp_order where total_volume>=300 and \$CONDITIONS" \
-m 2 \
--split-by user_name \
--delete-target-dir \
--target-dir /kb12/sqoop/m2h_mgt2 \
--fields-terminated-by ',' \
--lines-terminated-by '\n'
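The backslash in `\$CONDITIONS` stops the shell from expanding `$CONDITIONS` (normally an unset, empty variable). Sqoop therefore receives the literal token and replaces it with each mapper's split predicate. The sketch below shows both halves. `fill_conditions` is a hypothetical, illustrative stand-in for Sqoop's substitution, and the predicate used with it is made up.

```bash
#!/usr/bin/env bash
# Double quotes + \$ keep the literal text "$CONDITIONS" in the string.
query="select user_name,total_volume from sqp_order where total_volume>=300 and \$CONDITIONS"

# fill_conditions QUERY PREDICATE
# Replaces every $CONDITIONS token with "(PREDICATE)".
# Note: in bash 5.2+, a "&" inside PREDICATE would be expanded to the match.
fill_conditions() {
  local q=$1 pred=$2
  printf '%s\n' "${q//\$CONDITIONS/($pred)}"
}
```

Example usage: `fill_conditions "$query" "user_name >= 'a' AND user_name < 'm'"` prints the query one mapper might run. With plain `$CONDITIONS` inside the double quotes, the token would already have vanished before Sqoop saw it.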

# Incremental import (append | merge). In append mode only rows whose --check-column (stuId)
# is greater than --last-value are imported
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--query "select * from studentinfo where \$CONDITIONS" \
-m 1 \
--target-dir /kb12/sqoop/m2h_incr_append \
--fields-terminated-by ',' \
--lines-terminated-by '\n' \
--check-column stuId \
--incremental append \
--last-value 0

# Add new rows in MySQL (stuId 49-53), then rerun the import with --last-value 48
insert into studentinfo values
(49,'蔡1',32,'男','14568758132',25201,6),
(50,'焦1',28,'男','15314381033',23489,7),
(51,'庞1',23,'男','13892411574',25578,2),
(52,'吴1',27,'男','13063638045',22617,4),
(53,'孟1',32,'男','13483741056',26284,2);

sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--query "select * from studentinfo where \$CONDITIONS" \
-m 1 \
--target-dir /kb12/sqoop/m2h_incr_append \
--fields-terminated-by ',' \
--lines-terminated-by '\n' \
--check-column stuId \
--incremental append \
--last-value 48
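The value 48 above was set by hand. The sketch below instead derives the next --last-value from data already in HDFS, as the largest value in the stuId column. It assumes stuId is the first comma-separated field of the imported files. Sqoop also reports the value to use next at the end of an incremental run, and a saved job tracks it automatically. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# next_last_value COLUMN_NUMBER SEPARATOR [FILE...]
# Prints the largest numeric value in the 1-based COLUMN_NUMBER of the
# input (files or stdin); returns 1 if there is no row to read.
next_last_value() {
  local col=$1 sep=$2
  shift 2
  awk -F "$sep" -v c="$col" '
    NF >= c && (max == "" || $c + 0 > max) { max = $c + 0 }
    END { if (max == "") exit 1; print max }
  ' "$@"
}
```

Example usage: `hdfs dfs -cat '/kb12/sqoop/m2h_incr_append/part-*' | next_last_value 1 ,`.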

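# Incremental import, lastmodified mode
# incrTime is set to the current time on insert and update, so rows inserted without
# an explicit time are still picked up (this default is not implicit in newer MySQL versions)
create table sqp_incr_time(
  incrName varchar(20),
  incrTime timestamp default current_timestamp on update current_timestamp
);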

insert into sqp_incr_time(incrName) values('henry'),('pola'),('ariel'),('john'),('mike'),('jerry'),('mary');
insert into sqp_incr_time(incrName,incrTime) values
('jack','2021-06-29 13:21:40.0'),
('rose','2021-06-29 14:21:40.0'),
('xiaoming','2021-06-29 15:21:40.0'),
('anglea','2021-06-29 16:21:40.0'),
('licheng','2021-06-29 17:21:40.0');

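# lastmodified imports rows whose incrTime is >= --last-value (up to the time the import starts);
# --merge-key must name a column that identifies a row, so an updated row replaces its old version
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--query "select * from sqp_incr_time where \$CONDITIONS" \
-m 1 \
--target-dir /kb12/sqoop/m2h_incr_lastmodified \
--fields-terminated-by ',' \
--lines-terminated-by '\n' \
--check-column incrTime \
--incremental lastmodified \
--merge-key incrName \
--last-value '2021-06-29 13:21:40.0'
# For a first run that should pick up every row, use instead:
# --last-value '0000-00-00 00:00:00'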
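The sketch below is a simplified, local model of the lastmodified window and of --merge-key. It is meant only to show which rows are selected and how merged rows replace each other; Sqoop itself does this with a SQL predicate and a MapReduce merge job. Timestamps are compared as strings, which only works when every value uses the same zero-padded `YYYY-MM-DD HH:MM:SS.f` layout. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# lastmodified_rows LAST_VALUE NOW COLUMN SEP [FILE...]
# Prints rows whose COLUMN lies in [LAST_VALUE, NOW): rows equal to the
# previous last-value are selected again, which is why merging matters.
lastmodified_rows() {
  local last=$1 now=$2 col=$3 sep=$4
  shift 4
  awk -F "$sep" -v c="$col" -v last="$last" -v now="$now" \
    '$c >= last && $c < now' "$@"
}

# merge_by_key KEY_COLUMN SEP OLD_FILE NEW_FILE
# A row from NEW_FILE replaces the row with the same key from OLD_FILE;
# the output is sorted so that it is stable.
merge_by_key() {
  local key=$1 sep=$2
  awk -F "$sep" -v k="$key" '{ row[$k] = $0 } END { for (x in row) print row[x] }' "$3" "$4" | sort
}
```

Merging on incrName keeps one row per name. Merging on incrTime would keep both the old and the updated version of a row, because the update changes the key.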

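# Import a single partition of a partitioned table
# (Dynamic partitioning, when enabled, writes one table's data into several partitions of another
# partitioned table in one go; the Sqoop command below loads one partition at a time.)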

# MySQL source table
create table sqp_partition(
  id int,
  name varchar(20),
  dotime datetime
);

insert into sqp_partition(id,name,dotime) values
(1,'henry','2021-06-01 12:13:14'),
(2,'pola','2021-06-01 12:55:32'),
(3,'ariel','2021-06-01 13:02:55'),
(4,'rose','2021-06-01 13:22:46'),
(5,'jack','2021-06-01 14:15:12');
insert into sqp_partition(id,name,dotime) values
(6,'henry','2021-06-29 12:13:14'),
(7,'pola','2021-06-29 12:55:32'),
(8,'ariel','2021-06-29 13:02:55'),
(9,'rose','2021-06-29 13:22:46'),
(10,'jack','2021-06-29 14:15:12');

# Hive target table (database kb12), partitioned by day
create table sqp_partition(
  id int,
  name string,
  dotime timestamp
)
partitioned by (dodate date)
row format delimited
fields terminated by ','
lines terminated by '\n'
stored as textfile;

# Import one day's rows directly into that day's partition directory
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_partition \
--where "cast(dotime as date)='2021-06-01'" \
-m 1 \
--delete-target-dir \
--target-dir /user/hive/warehouse/kb12.db/sqp_partition/dodate=2021-06-01 \
--fields-terminated-by ',' \
--lines-terminated-by '\n'
# Then register the partition in Hive
alter table sqp_partition add partition(dodate='2021-06-01');
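The three values that must agree for each daily load are the Sqoop --where filter, the partition directory and the ALTER TABLE statement. The helpers below derive all three from one date. Names and paths follow the example above, and `/user/hive/warehouse` is the default Hive warehouse location, which may differ on another cluster. `add if not exists` makes a rerun harmless. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# partition_dir DATE: HDFS directory Hive expects for partition dodate=DATE
partition_dir() {
  printf '/user/hive/warehouse/kb12.db/sqp_partition/dodate=%s\n' "$1"
}

# where_for_date DATE: source-side filter for sqoop --where
where_for_date() {
  printf "cast(dotime as date)='%s'\n" "$1"
}

# add_partition_sql DATE: HiveQL that registers the partition (safe to rerun)
add_partition_sql() {
  printf "alter table kb12.sqp_partition add if not exists partition(dodate='%s');\n" "$1"
}
```

Example usage: `hive -e "$(add_partition_sql 2021-06-01)"`. After several directories have been written, `msck repair table kb12.sqp_partition` is another way to let Hive discover them.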

# Shell script (sq_m2hv_par.sh): load yesterday's rows into their partition
#!/bin/bash
# Stop if the import fails, so that no empty partition gets registered
set -e
DATE=$(date -d '-1 day' +%F)
sqoop import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--table sqp_partition \
--where "cast(dotime as date)='$DATE'" \
-m 1 \
--delete-target-dir \
--target-dir /user/hive/warehouse/kb12.db/sqp_partition/dodate=$DATE \
--fields-terminated-by ',' \
--lines-terminated-by '\n'
hive -e "alter table kb12.sqp_partition add partition(dodate='$DATE')"
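The script always loads yesterday. The sketch below lets it take an optional date argument so that a missed day can be reloaded by hand, and it rejects malformed input before Sqoop starts. It relies on GNU `date -d`, as the script already does. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# resolve_load_date [DATE]
# Prints DATE if given, otherwise yesterday's date (YYYY-MM-DD);
# returns 1 for anything that is not a real YYYY-MM-DD date.
resolve_load_date() {
  local d=${1:-$(date -d '-1 day' +%F)}
  if [[ ! $d =~ ^[0-9]{4}-[0-9]{2}-[0-9]{2}$ ]] || ! date -d "$d" +%F >/dev/null 2>&1; then
    echo "invalid date: $d" >&2
    return 1
  fi
  printf '%s\n' "$d"
}
```

Example usage inside the script: `DATE=$(resolve_load_date "${1:-}") || exit 1`. The existing `./sq_m2hv_par.sh` call still loads yesterday, and `./sq_m2hv_par.sh 2021-06-01` reloads that day.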

=====================================================================

[root@singlelucky sqoop_job]# vim sq_m2hv_par.sh
[root@singlelucky sqoop_job]# chmod u+x sq_m2hv_par.sh
[root@singlelucky sqoop_job]# ./sq_m2hv_par.sh

=====================================================================

#sqoop job

# In sqoop-site.xml, uncomment the following property so that saved jobs can store the password:
<property>
<name>sqoop.metastore.client.record.password</name>
<value>true</value>
<description>If true, allow saved passwords in the metastore.
</description>
</property>

# List saved jobs
sqoop job --list
# Delete a job
sqoop job --delete jobname
# Show a job's definition
sqoop job --show jobname

# Create a job (the space in "-- import" separates the job options from the tool and its arguments)
sqoop job \
--create job_m2hv_par \
-- import \
--connect jdbc:mysql://singlelucky:3306/test \
--username root \
--password kb12kb12 \
--query "select * from sqp_incr_time where \$CONDITIONS" \
-m 1 \
--target-dir /kb12/sqoop/m2h_incr_lastmodified \
--fields-terminated-by ',' \
--lines-terminated-by '\n' \
--check-column incrTime \
--incremental lastmodified \
--append \
--last-value '2021-06-29 13:21:40.0'
# Run the job; for incremental jobs Sqoop saves the new last-value after each successful run
sqoop job --exec job_m2hv_par

#------ mysql -> hive --------

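# Import into a new Hive table (--create-hive-table makes the job fail if kb12.user_info already exists)
sqoop import \
--connect jdbc:mysql://192.168.19.130:3306/test \
--username root \
--password kb12kb12 \
--table user_info \
-m 1 \
--hive-import \
--hive-table kb12.user_info \
--create-hive-table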

sqoop import \
--connect jdbc:mysql://192.168.19.130:3306/test \
--username root \
--password kb12kb12 \
--table studentinfo \
--where 'stuId>=50' \
-m 1 \
--hive-import \
--hive-table kb12.studentinfo

# Trying a partitioned import: Hive table partitioned by user_id range
create table sqp_user_par(
  user_id int,
  user_name string,
  user_account string,
  user_pass string,
  user_phone string,
  user_gender string,
  user_pid string,
  user_province string,
  user_city string,
  user_district string,
  user_address string,
  user_balance decimal(10,2)
)
partitioned by (id_rang string)
row format delimited
fields terminated by ','
lines terminated by '\n'
stored as textfile;

sqoop import \
--connect jdbc:mysql://192.168.19.130:3306/test \
--username root \
--password kb12kb12 \
--table user_info \
--where "user_id between 1 and 19" \
-m 1 \
--hive-import \
--hive-table kb12.sqp_user_par \
--hive-partition-key id_rang \
--hive-partition-value '1-19' \
--fields-terminated-by ',' \
--lines-terminated-by '\n'

#!/bin/bash
# Script version: pass the id range as two arguments; e.g. "20 39" loads user_id 20..39
# into partition id_rang='20-39'
B=$1
E=$2
sqoop import \
--connect jdbc:mysql://192.168.19.130:3306/test \
--username root \
--password kb12kb12 \
--table user_info \
--where "user_id between $B and $E" \
-m 1 \
--hive-import \
--hive-table kb12.sqp_user_par \
--hive-partition-key id_rang \
--hive-partition-value "$B-$E" \
--fields-terminated-by ',' \
--lines-terminated-by '\n'
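If the script is started without arguments, `$B` and `$E` are empty. Sqoop then runs with the broken filter `user_id between  and ` and loads into a partition named `-`. The guard below checks the arguments first. It is a sketch, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# check_id_range B E
# Succeeds only if B and E are non-negative integers with B <= E;
# otherwise prints a message to stderr and returns 1.
check_id_range() {
  local b=${1:-} e=${2:-}
  if [[ ! $b =~ ^[0-9]+$ || ! $e =~ ^[0-9]+$ ]]; then
    echo "expected two non-negative integers: BEGIN_ID END_ID" >&2
    return 1
  fi
  # 10# forces base 10, so an argument such as 007 is not read as octal.
  if (( 10#$b > 10#$e )); then
    echo "BEGIN_ID ($b) is greater than END_ID ($e)" >&2
    return 1
  fi
}
```

Example usage, right after `E=$2` in the script: `check_id_range "$B" "$E" || exit 1`.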

#------ mysql -> hbase -------

sqoop import \
--connect jdbc:mysql://192.168.19.130:3306/test \
--username root \
--password kb12kb12 \
--table studentinfo \
--where "stuid between 20 and 38" \
--hbase-table test:studentinfo \
--column-family base \
--hbase-create-table \
--hbase-row-key stuid
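In the HBase import above, the stuid value of each row becomes the HBase row key, and every other column becomes a cell `base:<column>` in that row. The sketch below models that layout locally. It turns a CSV export of the table into HBase shell `put` commands, which is handy for checking what a row should look like in `test:studentinfo`. It assumes a header line and values without commas or single quotes, since nothing is escaped. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# rows_to_hbase_puts TABLE FAMILY KEY_COLUMN CSV_FILE
# Prints one HBase shell put per non-key cell; returns 1 if KEY_COLUMN
# is not in the header line.
rows_to_hbase_puts() {
  local table=$1 family=$2 key=$3 file=$4
  awk -F, -v t="$table" -v f="$family" -v key="$key" -v q="'" '
    NR == 1 {
      for (i = 1; i <= NF; i++) { name[i] = $i; if ($i == key) k = i }
      next
    }
    k {
      for (i = 1; i <= NF; i++)
        if (i != k)
          print "put " q t q ", " q $k q ", " q f ":" name[i] q ", " q $i q
    }
    END { if (!k) exit 1 }
  ' "$file"
}
```

Example usage: for a file with the header `stuid,name,age` and the row `20,Alice,23`, `rows_to_hbase_puts test:studentinfo base stuid students.csv` prints one `put 'test:studentinfo', '20', 'base:...', '...'` line each for name and age.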

# Alternative: MySQL (JDBC) -> HBase client API -> HBase, with a custom Java program
# Java code: java2hbase
java -jar prolog-1.0-jar-with-dependencies.jar /root/data/flume/ logconf/logger.properties
java -jar java2hbase-1.0-jar-with-dependencies.jar mysqlToHbaseConfig/datasources.properties
# Same program in the background, detached from the terminal, with all output discarded
nohup java -jar java2hbase-1.0-jar-with-dependencies.jar mysqlToHbaseConfig/datasources.properties > /dev/null 2>&1 &

边栏推荐

- Blender volume fog

- Blender model import UE, collision settings

- Introduction and Principle notes of UE4 material

- ERROR 1118 (42000): Row size too large (&gt; 8126)

- [MySQL] an exception occurs when connecting to MySQL: connection must be valid and open

- allure--常用配置项

- Alibaba cloud SLS log service

- Nonlinear optimization: establishment of slam model

- 【虚幻】武器插槽:拾取武器

- [ue5] animation redirection: how to import magic tower characters into the game

猜你喜欢

2837xd code generation module learning (1) -- GPIO module

Blender石头雕刻

2.14 is it Valentine's day or Valentine's day when the mainstream market continues to fluctuate and wait for changes?

Blender ocean production

This article takes you to learn in detail what is fiber to home FTTH

Large neural networks may be beginning to realize: the chief scientist of openai leads to controversy, and everyone quarrels

Beautiful and intelligent, Haval H6 supreme+ makes Yuanxiao travel safer

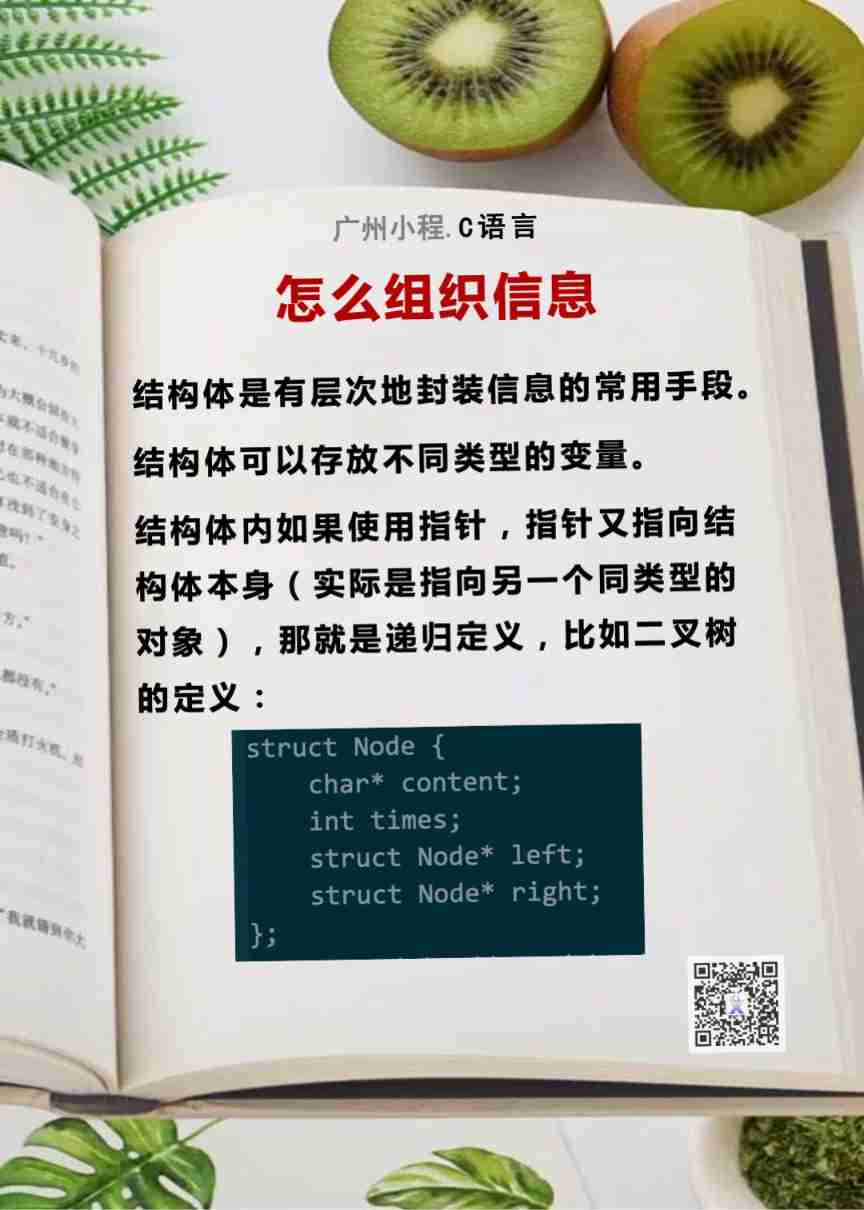

Feature (5): how to organize information

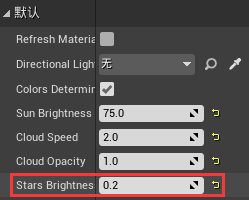

UE4 night lighting notes

【Unity3D】嵌套使用Layout Group制作拥有动态子物体高度的Scroll View

随机推荐

VLAN experiment

It is the most difficult to teach AI to play iron fist frame by frame. Now arcade game lovers have something

[illusory] automatic door blueprint notes

虛幻AI藍圖基礎筆記(萬字整理)

A model can do two things: image annotation and image reading Q & A. VQA accuracy is close to human level | demo can be played

Allure -- common configuration items

AutoCAD - layer Linetype

Blender体积雾

[illusory] weapon slot: pick up weapons

Following nym, the new project Galaxy token announced by coinlist is gal

Brief analysis of edgedb architecture

UE4 night lighting notes

2837xd code generation module learning (1) -- GPIO module

[ue5] animation redirection: how to import magic tower characters into the game

测试--面试题总结

Introduction and prevention of penetration test

2021-09-12

UE5——AI追逐(藍圖、行為樹)

How to judge the quality of primary market projects when the market is depressed?

Message mechanism -- getting to know messages and message queues for the first time