
Reference frame generation based on deep learning

2022-07-06 20:56:00 【Dillon2015】

This article is based on proposals JVET-T0058 and JVET-U0087. The method generates a virtual reference frame for inter prediction by frame interpolation. The overall model consists of several sub-models, which perform optical flow estimation, compensation, and detail enhancement respectively.

Overall architecture

The overall architecture is shown in Fig. 1. During video coding, the DPB holds the reference frames used for motion estimation, and depending on the GOP structure the current frame has one or more forward and backward reference frames. By default, the proposal generates a virtual reference frame from the two reference frames whose POC is closest to that of the current frame. In Fig. 1, for example, the current frame has POC 5, so the frames with POC 4 and 6 are used to generate the reference frame. The generated virtual reference frame is placed in the DPB for reference, and its POC is set equal to that of the current frame. To avoid interfering with the POC-distance-based MV scaling in temporal MVP, the MVs of the virtual reference frame are all set to 0 and the frame is used as a long-term reference frame. In the proposal, the virtual reference frame is removed from the DPB once the current frame has been decoded.
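The DPB handling described above can be sketched roughly as follows. This is only an illustration, not codec reference software: the `Frame` class and the functions `pick_nearest_refs`, `add_virtual_ref`, and `remove_virtual_refs` are hypothetical names, and the DPB is modelled as a plain Python list.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    # Hypothetical, simplified stand-in for a DPB entry.
    poc: int
    is_long_term: bool = False
    is_virtual: bool = False


def pick_nearest_refs(dpb, cur_poc):
    """Return the two real DPB frames whose POC is closest to cur_poc."""
    candidates = [f for f in dpb if not f.is_virtual]
    if len(candidates) < 2:
        raise ValueError("need at least two reference frames")
    # Sort by POC distance; ties are broken by the smaller POC.
    nearest = sorted(candidates, key=lambda f: (abs(f.poc - cur_poc), f.poc))[:2]
    return sorted(nearest, key=lambda f: f.poc)


def add_virtual_ref(dpb, cur_poc):
    """Insert a virtual reference frame for the current frame into the DPB."""
    ref_a, ref_b = pick_nearest_refs(dpb, cur_poc)
    # The virtual frame takes the current POC and is marked long-term,
    # so no POC-distance-based MV scaling applies to it.
    virtual = Frame(poc=cur_poc, is_long_term=True, is_virtual=True)
    dpb.append(virtual)
    return ref_a, ref_b, virtual


def remove_virtual_refs(dpb):
    """Drop virtual frames once the current frame has been decoded."""
    dpb[:] = [f for f in dpb if not f.is_virtual]


dpb = [Frame(0), Frame(4), Frame(6), Frame(8)]
ref_a, ref_b, virtual = add_virtual_ref(dpb, cur_poc=5)
print(ref_a.poc, ref_b.poc, virtual.poc)
remove_virtual_refs(dpb)
```

With the POC values from the Fig. 1 example, the frames with POC 4 and 6 are selected for a current frame with POC 5.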

For high-resolution sequences (4K or 8K), resource constraints prevent the neural network from processing the whole frame directly. In this case the virtual reference frame is divided into multiple regions, each region is generated by the network separately, and the regions are then stitched together into one reference frame.
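A minimal sketch of this region-wise generation is shown below. The tile size, the absence of overlap between regions, and the `average_stub` function (a trivial placeholder for the actual network) are all assumptions made for illustration; the proposals may partition the frame differently.

```python
import numpy as np


def generate_in_tiles(ref_a, ref_b, tile_h, tile_w, generate_fn):
    """Generate a frame region by region and stitch the regions together."""
    if ref_a.shape != ref_b.shape:
        raise ValueError("reference frames must have the same shape")
    height, width = ref_a.shape[:2]
    out = np.empty_like(ref_a)
    for y in range(0, height, tile_h):
        for x in range(0, width, tile_w):
            # Edge tiles are smaller when the frame size is not a multiple
            # of the tile size.
            ys = slice(y, min(y + tile_h, height))
            xs = slice(x, min(x + tile_w, width))
            out[ys, xs] = generate_fn(ref_a[ys, xs], ref_b[ys, xs])
    return out


def average_stub(tile_a, tile_b):
    # Placeholder for the neural network: simply averages the two inputs.
    return (tile_a + tile_b) / 2


ref_a = np.arange(35, dtype=np.float64).reshape(5, 7)
ref_b = ref_a + 2
virtual = generate_in_tiles(ref_a, ref_b, tile_h=2, tile_w=3, generate_fn=average_stub)
```

Because the stub is purely per-pixel, the stitched result equals the whole-frame result; a real network with a spatial receptive field may produce visible seams unless the regions overlap or are padded.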

Network model

General video frame interpolation mostly relies on optical flow estimation and compensation. It typically uses bidirectional optical flow, and the two flows are then combined into one through a linear model. The proposal uses only a single optical flow model.

As shown in Fig. 2, optical flow is first generated by the optical flow estimation model, whose inputs are the two reference frames nearest in POC. The flow is then processed by backward warping, and the processed flow and the two reference frames are combined by a fusion step to synthesize the intermediate frame. The intermediate frame then passes through the detail enhancement model to improve its quality. The detail enhancement model consists of two parts: PCD (Pyramid, Cascading and Deformable) for spatio-temporal optimization, and TSA (Temporal and Spatial Attention) for strengthening attention on important features.
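To make the warping and fusion steps more concrete, here is a small NumPy sketch. It rests on several assumptions that the source does not confirm: the single flow field is taken to point from the intermediate frame to the first reference, the negated flow is used for the second reference (a linear-motion assumption), and the learned fusion is replaced by a plain average. The function names `backward_warp` and `synthesize_intermediate` are hypothetical.

```python
import numpy as np


def backward_warp(src, flow):
    """Sample src at (x + dx, y + dy) for every output pixel, bilinearly.

    src:  (H, W) float array.
    flow: (H, W, 2) array holding (dx, dy) per output pixel.
    """
    h, w = src.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    # Clamp sampling positions to the frame border.
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = sx - x0
    wy = sy - y0
    top = (1 - wx) * src[y0, x0] + wx * src[y0, x1]
    bottom = (1 - wx) * src[y1, x0] + wx * src[y1, x1]
    return (1 - wy) * top + wy * bottom


def synthesize_intermediate(ref_a, ref_b, flow_to_a):
    """Warp both references toward the middle and fuse them.

    Assumption: motion is linear, so the flow toward ref_b is -flow_to_a.
    The average below is only a stand-in for the learned fusion step.
    """
    warped_a = backward_warp(ref_a, flow_to_a)
    warped_b = backward_warp(ref_b, -flow_to_a)
    return 0.5 * (warped_a + warped_b)


# Horizontal ramp whose content shifts by two pixels between the references.
ref_a = np.tile(np.arange(8.0), (4, 1))
ref_b = ref_a + 2
flow = np.zeros((4, 8, 2))
flow[..., 0] = 1.0  # one pixel along x
middle = synthesize_intermediate(ref_a, ref_b, flow)
```

In this toy case both warped references agree away from the border, so the average reproduces the halfway-shifted ramp there. The detail enhancement stage (PCD and TSA) is not modelled.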

Experimental results


边栏推荐

- R语言可视化两个以上的分类(类别)变量之间的关系、使用vcd包中的Mosaic函数创建马赛克图( Mosaic plots)、分别可视化两个、三个、四个分类变量的关系的马赛克图

- 7、数据权限注解

- 7. Data permission annotation

- Event center parameter transfer, peer component value transfer method, brother component value transfer

- What is the difference between procedural SQL and C language in defining variables

- [weekly pit] positive integer factorization prime factor + [solution] calculate the sum of prime numbers within 100

- 使用.Net分析.Net达人挑战赛参与情况

- [weekly pit] calculate the sum of primes within 100 + [answer] output triangle

- 正则表达式收集

- 每个程序员必须掌握的常用英语词汇(建议收藏)

猜你喜欢

![[DSP] [Part 1] start DSP learning](/img/81/051059958dfb050cb04b8116d3d2a8.png)

[DSP] [Part 1] start DSP learning

1500万员工轻松管理,云原生数据库GaussDB让HR办公更高效

![[weekly pit] positive integer factorization prime factor + [solution] calculate the sum of prime numbers within 100](/img/d8/a367c26b51d9dbaf53bf4fe2a13917.png)

[weekly pit] positive integer factorization prime factor + [solution] calculate the sum of prime numbers within 100

![Mécanisme de fonctionnement et de mise à jour de [Widget Wechat]](/img/cf/58a62a7134ff5e9f8d2f91aa24c7ac.png)

Mécanisme de fonctionnement et de mise à jour de [Widget Wechat]

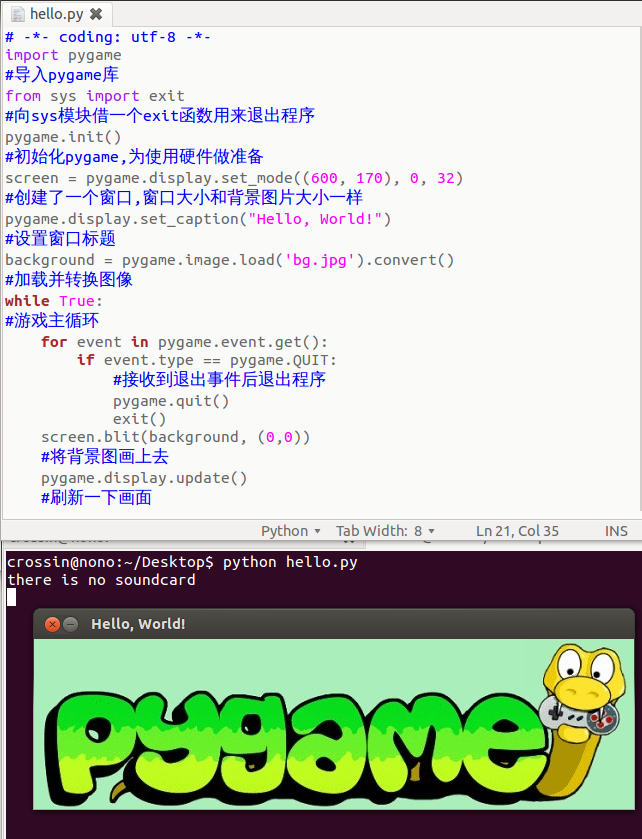

电子游戏的核心原理

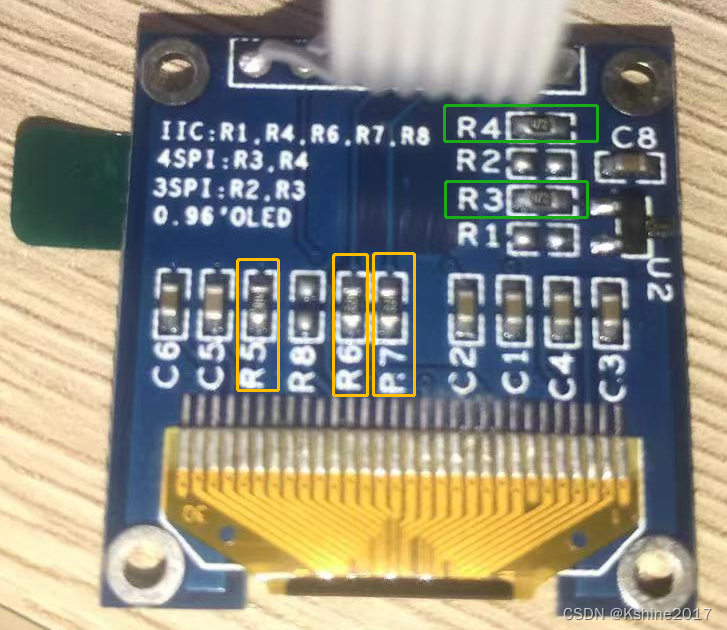

OLED屏幕的使用

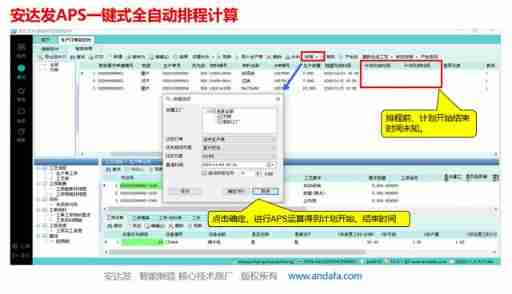

Value of APS application in food industry

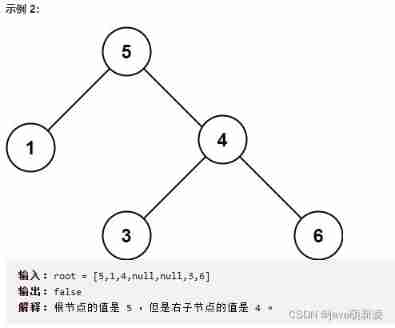

Force deduction brush question - 98 Validate binary search tree

![[weekly pit] output triangle](/img/d8/a367c26b51d9dbaf53bf4fe2a13917.png)

[weekly pit] output triangle

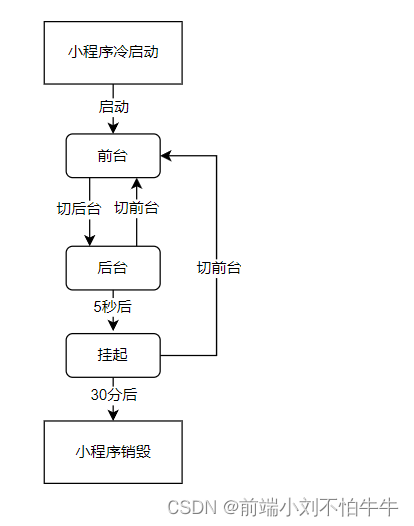

【微信小程序】運行機制和更新機制

随机推荐

Redis insert data garbled solution

C language games - minesweeping

Rhcsa Road

Yyds dry goods count re comb this of arrow function

审稿人dis整个研究方向已经不仅仅是在审我的稿子了怎么办?

[asp.net core] set the format of Web API response data -- formatfilter feature

What programming do children learn?

[wechat applet] operation mechanism and update mechanism

“罚点球”小游戏

Utilisation de l'écran OLED

(work record) March 11, 2020 to March 15, 2021

华为设备命令

Entity alignment two of knowledge map

Trends of "software" in robotics Engineering

基于STM32单片机设计的红外测温仪(带人脸检测)

强化学习-学习笔记5 | AlphaGo

Simple continuous viewing PTA

Build your own application based on Google's open source tensorflow object detection API video object recognition system (IV)

Review questions of anatomy and physiology · VIII blood system

What is the difference between procedural SQL and C language in defining variables