
Use mitmproxy to cache 360 degree panoramic web pages offline

2022-07-06 23:11:00 【Xiaoming - code entity】

Blog home page: https://blog.csdn.net/as604049322

Likes, bookmarks, and comments are welcome. Feel free to join the discussion!

This article is an original work by Xiaoming - code entity and first appeared on CSDN.

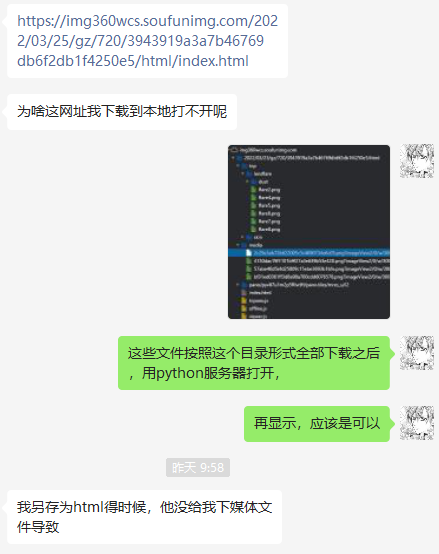

I ran into a problem yesterday:

Some web pages load their resources dynamically, so the browser's built-in "save page" function cannot save everything they need.

Saving the files one by one by hand is impractical: there are too many of them, and too many folders nested layer after layer.

To cache the target page offline, I thought of a good approach: use a proxy that can be scripted in Python, and have it save the response to every request as a local file named after the request URL.

MitmProxy Installation

I recommend MitmProxy. To install it, run the following on the command line:

    pip install mitmproxy

MitmProxy provides three commands: mitmproxy, mitmdump, and mitmweb. Of these, mitmdump can process every request with a Python script that you specify with the -s parameter.

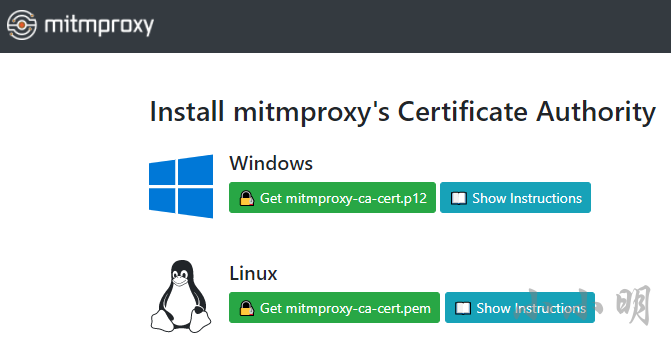

After installation, we need the installation certificate MitmProxy Corresponding certificate , visit :http://mitm.it/

Direct access will show :If you can see this, traffic is not passing through mitmproxy.

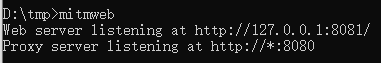

So we first run mitmweb to start a web proxy server:

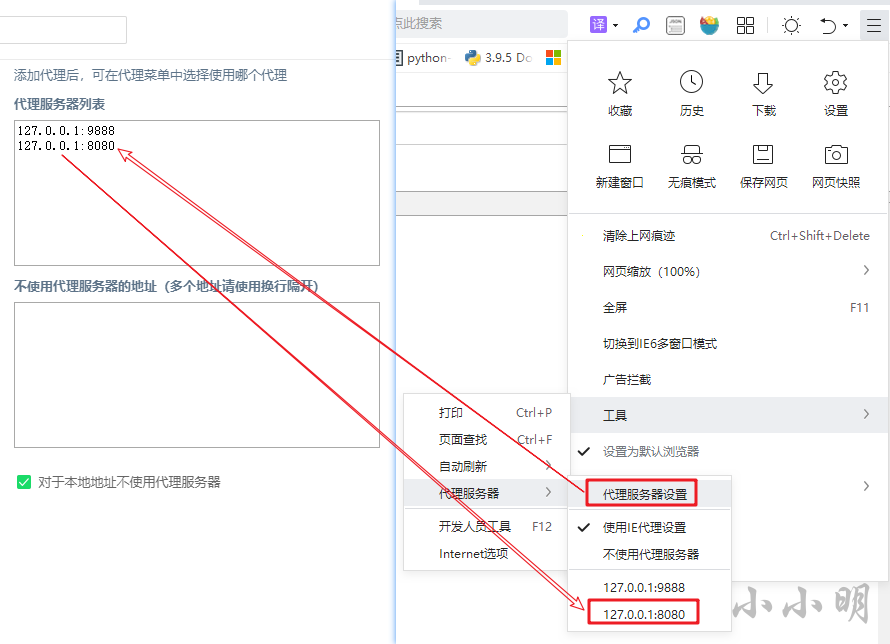

Next, set the proxy server address in the browser you use. Taking 360 Secure Browser as an example:

Once the browser is using the proxy server provided by MitmProxy, visit http://mitm.it/ again to download and install the certificate:

After downloading, open the certificate and click Next through the wizard to complete the installation.

Now visit Baidu, and you can see MitmProxy's certificate information:

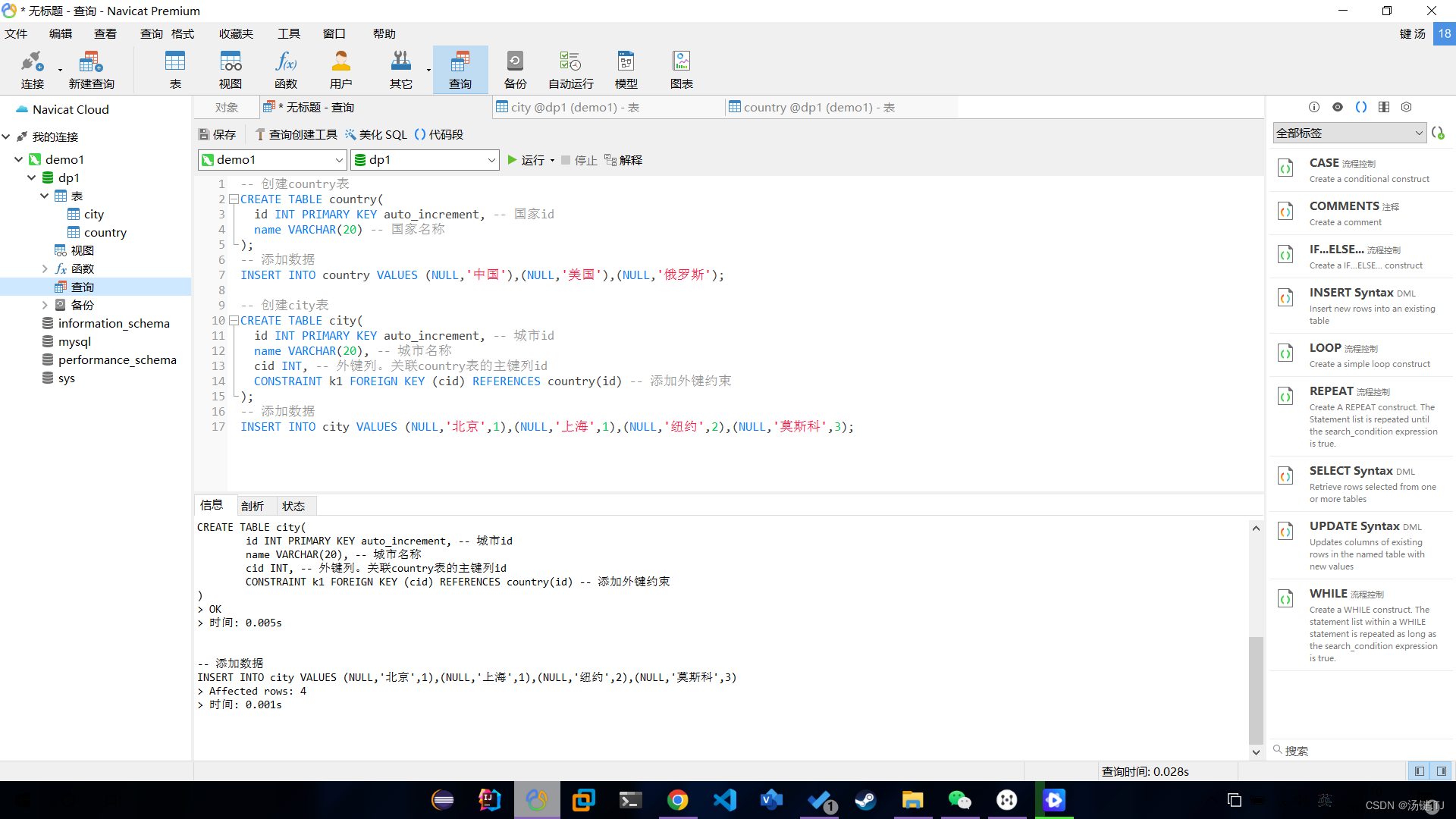

Writing the script for mitmdump

The template for a script that mitmdump can load looks like this:

    # Every outgoing request passes through this function
    def request(flow):
        # Get the request object
        request = flow.request


    # Every server response passes through this function
    def response(flow):
        # Get the response object
        response = flow.response

The request and response objects are used in much the same way as the corresponding objects in the requests library.
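As a minimal sketch of what these objects expose, the hook below prints one summary line per response. It relies only on the `method`, `url`, `status_code`, `headers`, and `content` attributes; the `describe` helper and the `fake_flow` stand-in are hypothetical names added here so the logic can be tried without running a proxy.

```python
from types import SimpleNamespace


def describe(flow):
    """Summarize one intercepted HTTP exchange as a single line of text."""
    req, resp = flow.request, flow.response
    content_type = resp.headers.get("Content-Type", "unknown")
    size = len(resp.content or b"")
    return f"{req.method} {req.url} -> {resp.status_code} {content_type} ({size} bytes)"


def response(flow):
    # mitmdump calls this hook once for every response it receives.
    print(describe(flow))


# Hypothetical stand-in for a real flow, for trying the helper offline.
fake_flow = SimpleNamespace(
    request=SimpleNamespace(method="GET", url="https://www.baidu.com/"),
    response=SimpleNamespace(
        status_code=200,
        headers={"Content-Type": "text/html"},
        content=b"<html></html>",
    ),
)
print(describe(fake_flow))
```

Loaded with `mitmdump -s`, only the `response` hook matters; the last lines simply show the summary format on made-up data.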

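Our requirement is to save files according to their URL, so we only need to handle responses. Let's try caching the Baidu home page first:

    import os
    import re

    dest_url = "https://www.baidu.com/"


    def response(flow):
        url = flow.request.url
        response = flow.response
        # Only cache successful responses that belong to the target site
        if response.status_code != 200 or not url.startswith(dest_url):
            return
        # Drop the query string and map directory URLs to index.html
        r_pos = url.rfind("?")
        url = url if r_pos == -1 else url[:r_pos]
        url = url if url[-1] != "/" else url + "index.html"
        # Root folder: dest_url without the scheme, with characters that are
        # invalid in file names replaced by "_". (str.strip("htps:/") would
        # also eat the start of host names beginning with h, t, p or s.)
        host_path = re.sub(r"^https?://", "", dest_url).strip("/")
        path = re.sub(r'[/\\:*?<>|"\s]', "_", host_path)
        file = path + "/" + url.replace(dest_url, "").strip("/")
        # Create the parent folders before writing the file
        r_pos = file.rfind("/")
        if r_pos != -1:
            path, file_name = file[:r_pos], file[r_pos+1:]
            os.makedirs(path, exist_ok=True)
        with open(file, "wb") as f:
            f.write(response.content)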

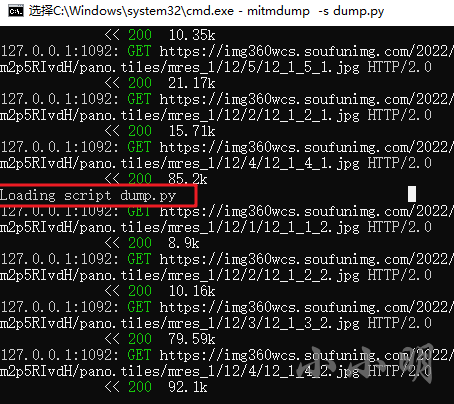

Save the above script as dump.py, close the previously started mitmweb, and then start the proxy with the following command:

    > mitmdump -s dump.py
    Loading script dump.py
    Proxy server listening at http://*:8080

After refreshing the page, the Baidu home page has been cached successfully:

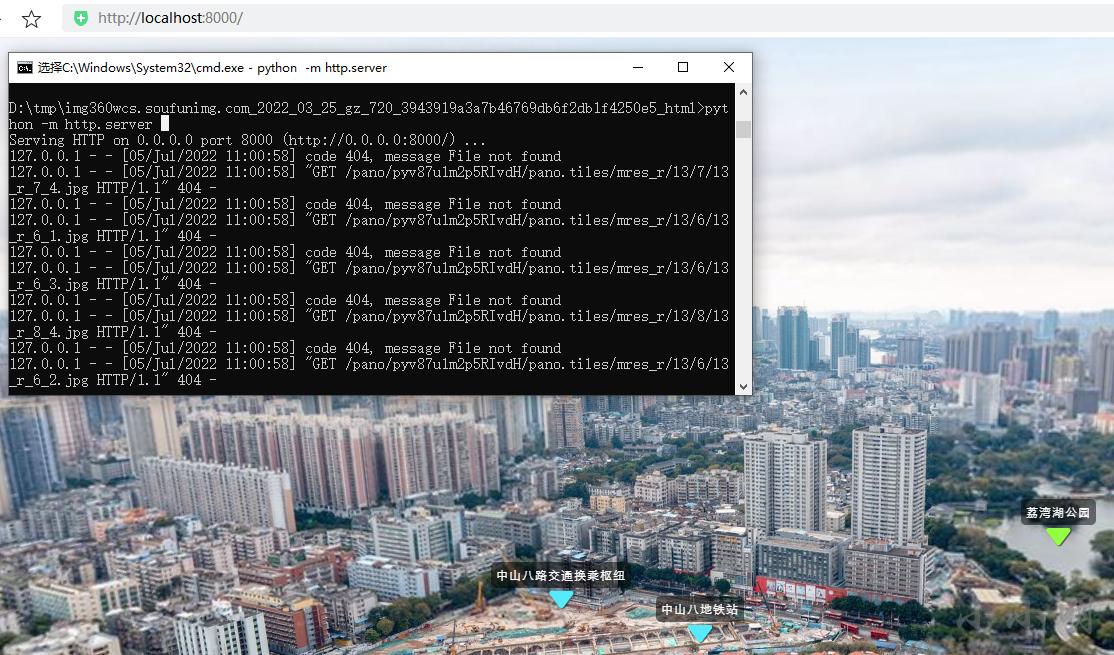
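The URL-to-path mapping used by dump.py can also be checked without a proxy. The sketch below pulls the same idea into a pure function; `url_to_local_path` is a hypothetical name, the example.com URLs are made up for illustration, and the `index.html` fallback for an empty relative path is an assumption that the original script does not make.

```python
import re


def url_to_local_path(url, dest_url):
    """Map a request URL under dest_url to a relative local file path.

    Returns None when the URL does not belong to dest_url.
    """
    if not url.startswith(dest_url):
        return None
    url = url.split("?", 1)[0]  # drop the query string
    if url.endswith("/"):
        url += "index.html"  # directory URLs are stored as index.html
    # Root folder: dest_url without the scheme, unsafe characters replaced.
    root = re.sub(r"^https?://", "", dest_url).strip("/")
    root = re.sub(r'[/\\:*?<>|"\s]', "_", root)
    relative = url[len(dest_url):].strip("/")
    # Assumption: a request for dest_url itself is stored as index.html.
    return f"{root}/{relative}" if relative else f"{root}/index.html"


print(url_to_local_path("https://example.com/", "https://example.com/"))
print(url_to_local_path("https://example.com/img/a.png?v=1", "https://example.com/"))
print(url_to_local_path("https://example.com/pano/html/index.html", "https://example.com/pano/html"))
```

Keeping the mapping separate from the file writing makes it easy to see which folder and file a given URL ends up in before any traffic is captured.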

Start Python's built-in HTTP server in the cache folder to test it, then visit the local address:

You can see that the local copy of Baidu opens successfully.

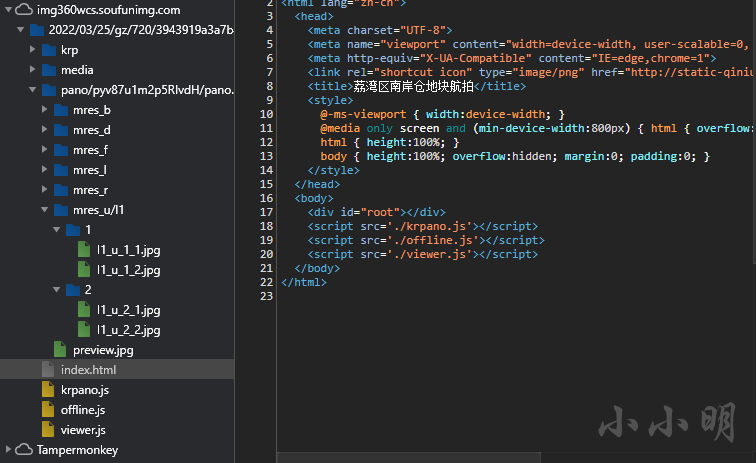
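One possible way to run that local test from Python is sketched below, using only the standard library. The `make_cache_server` name, the port 8000 default, and the folder name in the comment are assumptions for illustration; the article does not show the exact command it used.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def make_cache_server(directory, port=8000):
    """Build an HTTP server that serves the files in the cache folder."""
    handler = partial(SimpleHTTPRequestHandler, directory=directory)
    # Bind to localhost only; the cache is meant for local viewing.
    return ThreadingHTTPServer(("127.0.0.1", port), handler)


# Example (blocks until interrupted with Ctrl+C):
# make_cache_server("www.baidu.com").serve_forever()
```

With the example line uncommented, opening http://127.0.0.1:8000/ in a browser that is not using the proxy should show the cached index.html.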

Caching the 360-degree panoramic web page offline

Change dest_url to the following address and save the script:

    dest_url = "https://img360wcs.soufunimg.com/2022/03/25/gz/720/3943919a3a7b46769db6f2db1f4250e5/html"

Then revisit: https://img360wcs.soufunimg.com/2022/03/25/gz/720/3943919a3a7b46769db6f2db1f4250e5/html/index.html

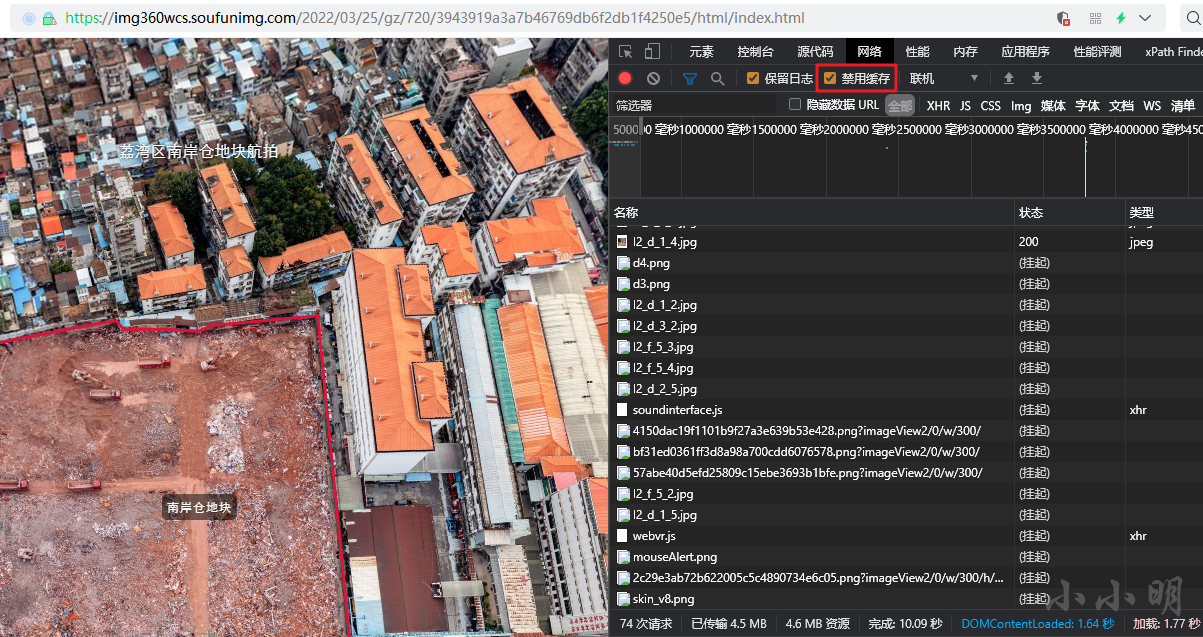

If you find that the saved files are incomplete, open the developer tools, check "Disable cache" on the Network tab, and refresh the page again:

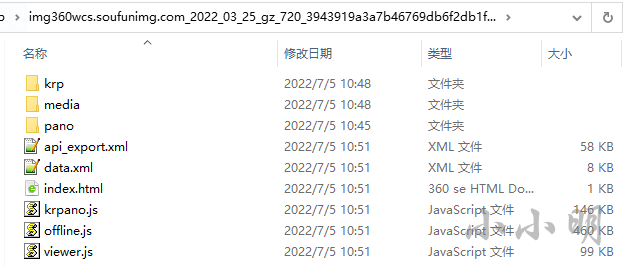
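A possible alternative to the browser setting, not used in the original article, is to let the proxy script remove the conditional request headers, so the server sends a full response instead of 304 Not Modified. This is only a sketch under that assumption; `CONDITIONAL_HEADERS` is a hypothetical name, and the hook would sit next to `response` in dump.py.

```python
# Request headers that let a server answer "304 Not Modified" with no body.
CONDITIONAL_HEADERS = ("If-None-Match", "If-Modified-Since")


def request(flow):
    # Drop the validators so the server returns the complete file,
    # which the response hook can then write to disk.
    for name in CONDITIONAL_HEADERS:
        flow.request.headers.pop(name, None)
```

This only affects requests that actually reach the proxy. Resources the browser serves from its own cache without any request still need the "Disable cache" option or a cleared browser cache.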

At this point the main files have been cached:

Now look in every direction on the original page as thoroughly as you can, and zoom in and out, so that as many high-definition detail images as possible are cached.

Testing with the local server shows that the cached page can be accessed successfully:

However, the original script only caches ordinary files returned with response code 200. The site above also returns music files with response code 206 (partial content). Caching those as well is a little more complicated, so let's look at how to cache the music files.

Caching 206 partial-content files

After some research, I modified the above code into the following form:

    import os
    import re

    dest_url = "https://img360wcs.soufunimg.com/2022/03/25/gz/720/3943919a3a7b46769db6f2db1f4250e5/html"


    def response(flow):
        url = flow.request.url
        response = flow.response
        # Cache full (200) and partial (206) responses from the target site
        if response.status_code not in (200, 206) or not url.startswith(dest_url):
            return
        # Drop the query string and map directory URLs to index.html
        r_pos = url.rfind("?")
        url = url if r_pos == -1 else url[:r_pos]
        url = url if url[-1] != "/" else url + "index.html"
        # Root folder: dest_url without the scheme, unsafe characters replaced
        host_path = re.sub(r"^https?://", "", dest_url).strip("/")
        path = re.sub(r'[/\\:*?<>|"\s]', "_", host_path)
        file = path + "/" + url.replace(dest_url, "").strip("/")
        # Create the parent folders before writing the file
        r_pos = file.rfind("/")
        if r_pos != -1:
            path, file_name = file[:r_pos], file[r_pos+1:]
            os.makedirs(path, exist_ok=True)
        if response.status_code == 206:
            # Content-Range looks like "bytes 0-1023/4096": start-end/total
            s, e, length = map(int, re.fullmatch(
                r"bytes (\d+)-(\d+)/(\d+)", response.headers['Content-Range']).groups())
            # Create an empty file first so it can be opened in "rb+" mode
            if not os.path.exists(file):
                with open(file, "wb") as f:
                    pass
            # Write this chunk at its own offset inside the file
            with open(file, "rb+") as f:
                f.seek(s)
                f.write(response.content)
        elif response.status_code == 200:
            with open(file, "wb") as f:
                f.write(response.content)

After you save the modified script, mitmdump reloads it automatically:

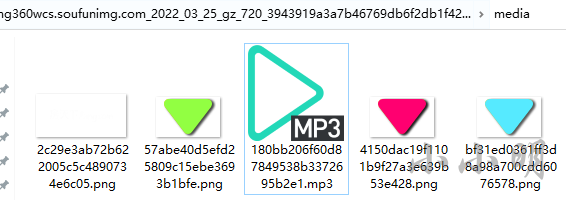
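The 206 handling comes down to parsing the Content-Range header and writing each chunk at its own offset. The sketch below isolates that step so it can be tried on made-up data; `write_range` is a hypothetical helper, and the length check is an extra safeguard that the script above does not perform.

```python
import os
import re

# Matches headers such as "bytes 0-1023/4096" (start-end/total).
CONTENT_RANGE = re.compile(r"bytes (\d+)-(\d+)/(\d+)")


def write_range(path, content_range, body):
    """Write one 206 chunk at its offset and return the declared total size."""
    match = CONTENT_RANGE.fullmatch(content_range)
    if match is None:
        raise ValueError(f"unsupported Content-Range: {content_range!r}")
    start, end, total = map(int, match.groups())
    if len(body) != end - start + 1:
        raise ValueError("body length does not match the declared range")
    # "rb+" keeps chunks written earlier; "wb" creates the file on first use.
    mode = "rb+" if os.path.exists(path) else "wb"
    with open(path, mode) as f:
        f.seek(start)
        f.write(body)
    return total
```

Because every chunk is written at its declared offset, chunks may arrive in any order. A file whose size has reached the returned total can still contain gaps if a middle chunk was never requested, so playing the whole track on the original page remains the simplest way to fetch everything.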

After clearing the browser cache and visiting the page again, the music files are downloaded successfully:

Summary

With mitmdump we have successfully cached a specified website. To cache another website locally in the future, you only need to change dest_url to that site's address.

边栏推荐

- Is the more additives in food, the less safe it is?

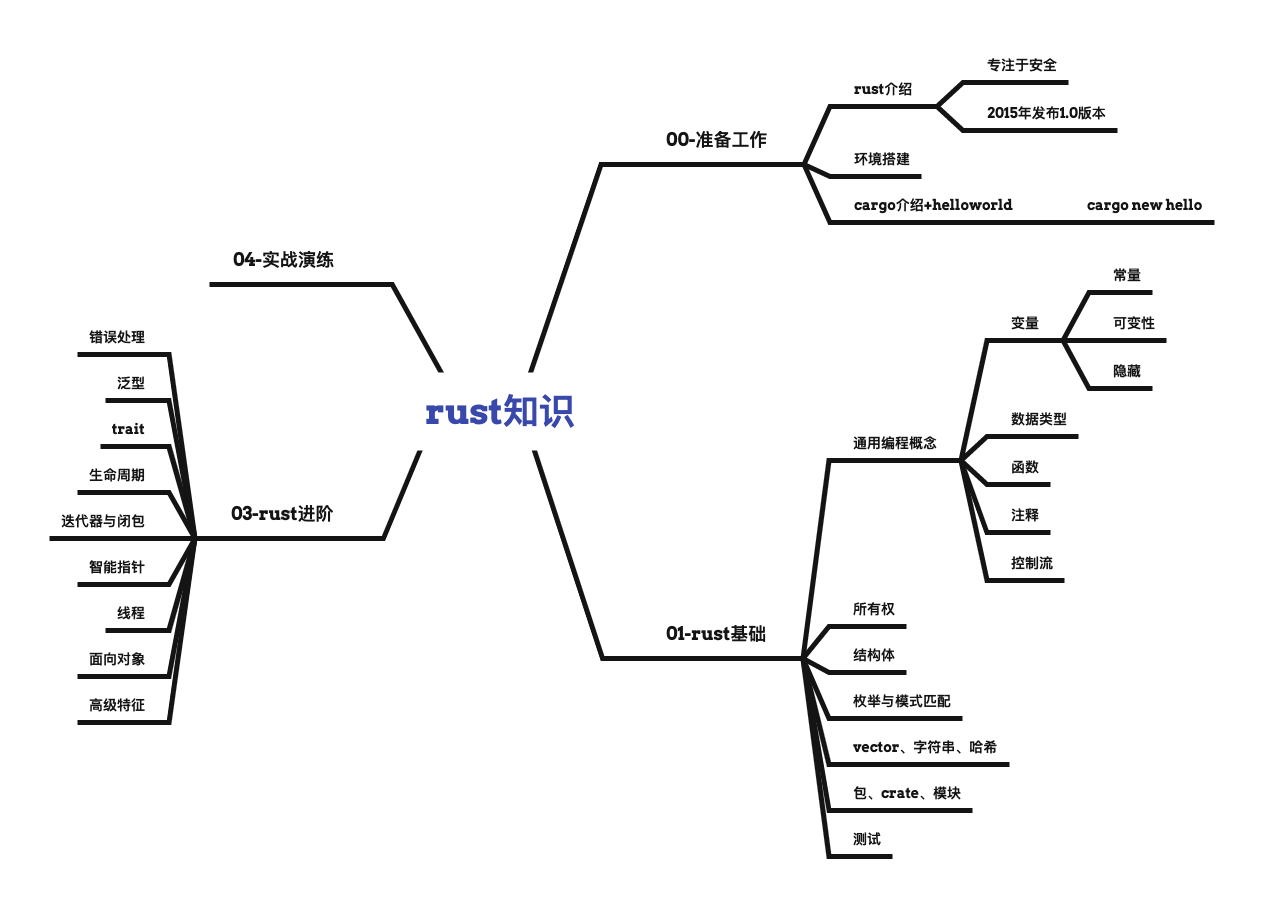

- Rust knowledge mind map XMIND

- PDF批量拆分、合并、书签提取、书签写入小工具

- [compilation principle] LR (0) analyzer half done

- Two week selection of tdengine community issues | phase II

- The application of machine learning in software testing

- 2014 Alibaba web pre intern project analysis (1)

- 机器人材料整理中的套-假-大-空话

- Children's pajamas (Australia) as/nzs 1249:2014 handling process

- 让 Rust 库更优美的几个建议!你学会了吗?

猜你喜欢

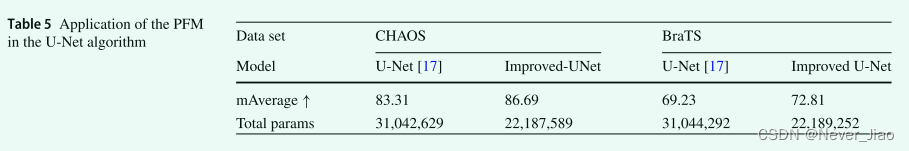

DR-Net: dual-rotation network with feature map enhancement for medical image segmentation

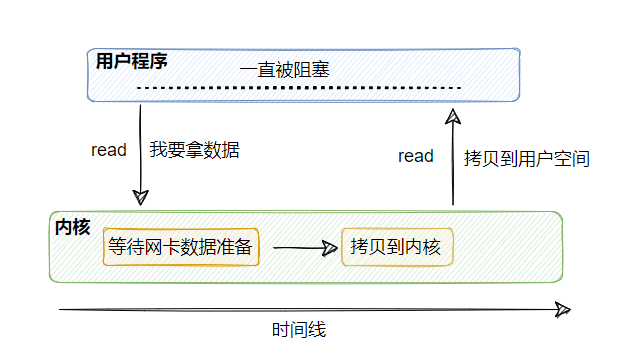

Let's see through the network i/o model from beginning to end

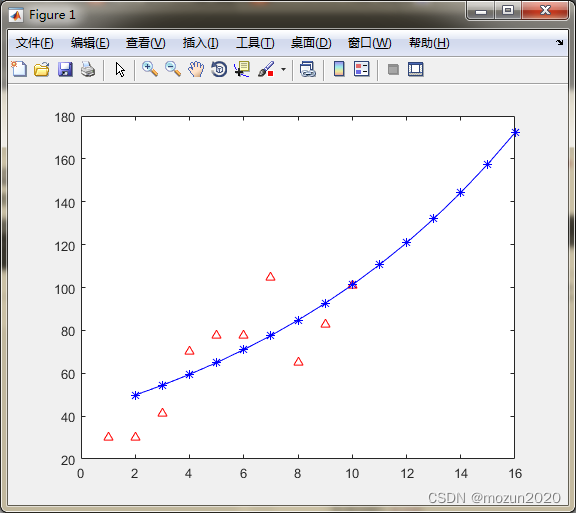

Matlab tips (27) grey prediction

Rust knowledge mind map XMIND

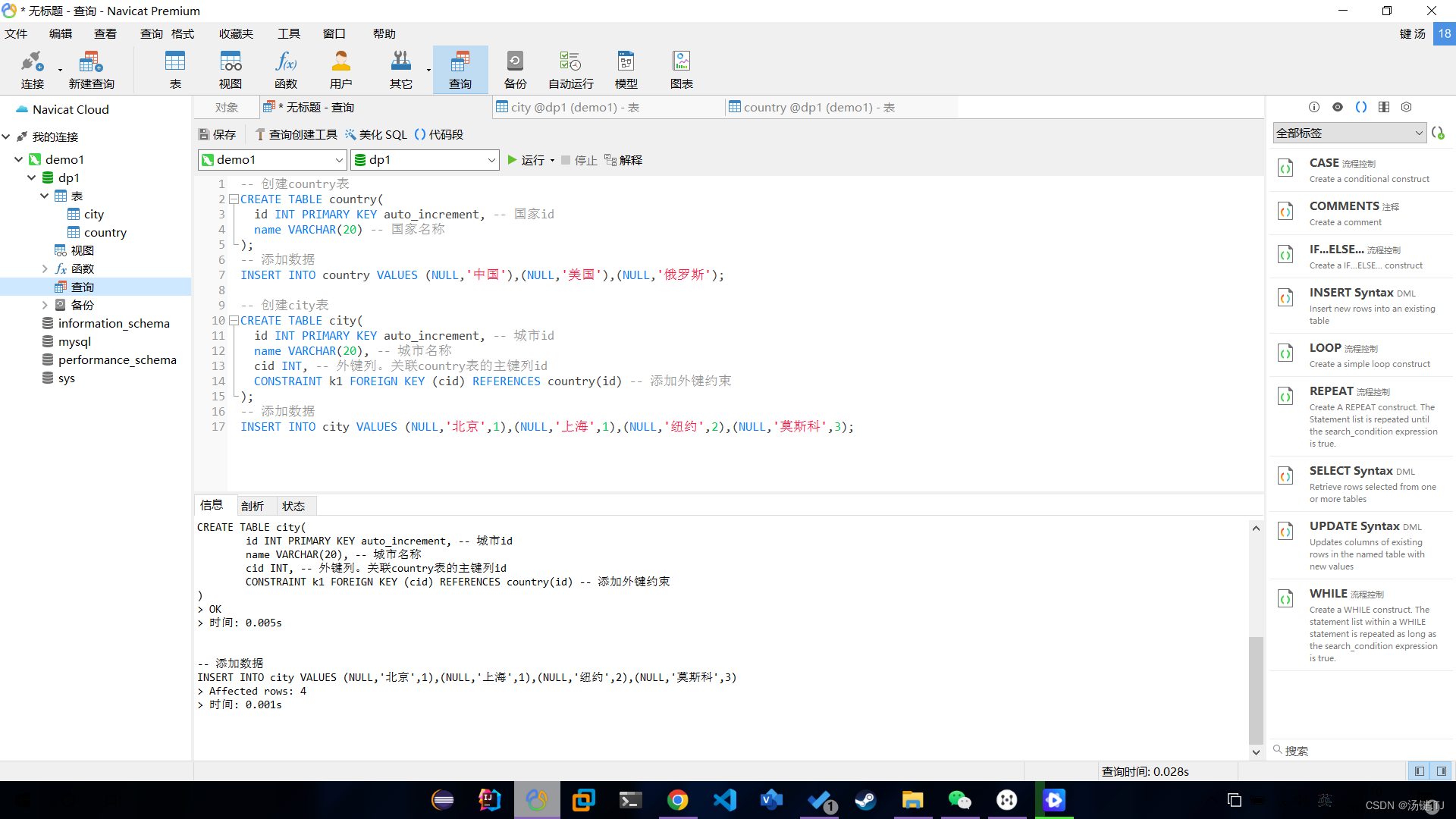

视图(view)

MySQL中正则表达式(REGEXP)使用详解

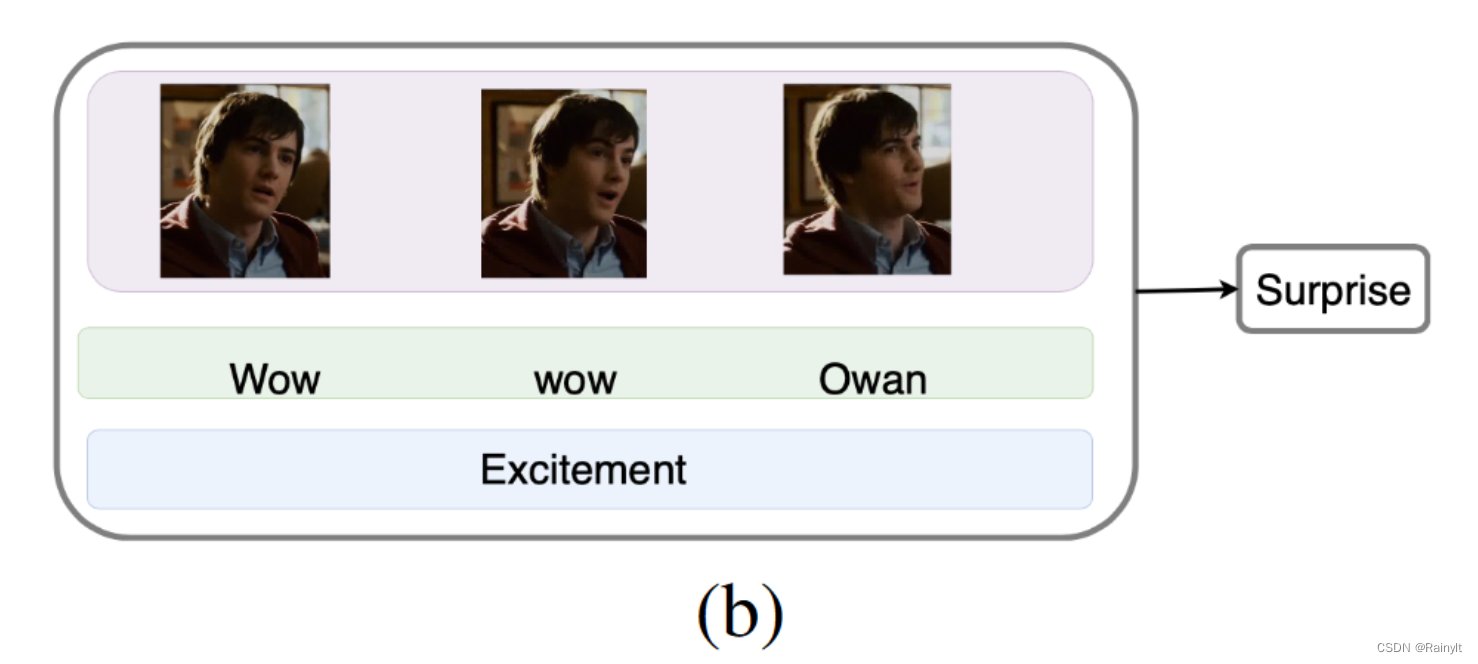

Improving Multimodal Accuracy Through Modality Pre-training and Attention

View

![[compilation principle] LR (0) analyzer half done](/img/ec/b13913b5d5c5a63980293f219639a4.png)

[compilation principle] LR (0) analyzer half done

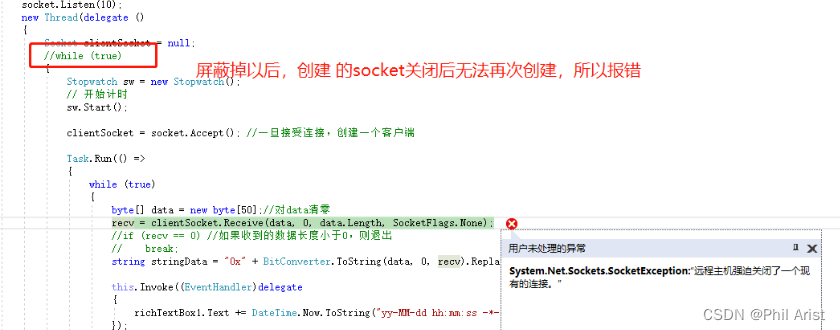

C three ways to realize socket data reception

随机推荐

Les entreprises ne veulent pas remplacer un système vieux de dix ans

View

MySQL authentication bypass vulnerability (cve-2012-2122)

OpenSSL:适用TLS与SSL协议的全功能工具包,通用加密库

使用MitmProxy离线缓存360度全景网页

Graphite document: four countermeasures to solve the problem of enterprise document information security

MySQL中正则表达式(REGEXP)使用详解

Mysql 身份认证绕过漏洞(CVE-2012-2122)

项目复盘模板

HDU 5077 NAND (violent tabulation)

Children's pajamas (Australia) as/nzs 1249:2014 handling process

Chapter 19 using work queue manager (2)

存币生息理财dapp系统开发案例演示

Typescript get function parameter type

Custom swap function

Windows auzre background operation interface of Microsoft's cloud computing products

docker中mysql开启日志的实现步骤

QT信号和槽

【全网首发】Redis系列3:高可用之主从架构的

COSCon'22 社区召集令来啦!Open the World,邀请所有社区一起拥抱开源,打开新世界~