
Importing tables with Sqoop

2022-07-02 10:31:00 【Lucky lucky】

#------ hdfs -> mysql ------

create table sqp_order(

create_date date,

user_name varchar(20),

total_volume decimal(10,2)

);

sqoop export \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_order \

-m 1 \

--export-dir /kb12/hive/orderinfo \

--fields-terminated-by '\t'
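
The export splits each line under `--export-dir` on the given delimiter and maps the fields onto the table columns, so a line with the wrong number of fields is a likely cause of a failed export. A minimal sketch of a pre-check, assuming the three-column `sqp_order` table above; the function name `count_bad_rows` is made up for illustration and is not part of Sqoop:

```shell
# Hypothetical helper: count the lines on stdin that do not have exactly
# $1 tab-separated fields.
count_bad_rows() {
  awk -F'\t' -v n="$1" 'NF != n { bad++ } END { print bad + 0 }'
}

# Local demonstration: the second line has only two fields, so this prints 1.
printf '2021-06-01\thenry\t12.50\nbroken\trow\n' | count_bad_rows 3
```

Against the real data this could be run as `hdfs dfs -cat /kb12/hive/orderinfo/* | count_bad_rows 3`; a result of 0 means every line has three fields.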

# If the export fails because the class generated for the table (sqp_order) cannot be found, locate the class that Sqoop compiled

[root@singlelucky ~]# find / -name 'sqp_order.class'

/tmp/sqoop-root/compile/e24fd4ec30c24475569ee1569d0873a7/sqp_order.class

/tmp/sqoop-root/compile/04c79abd0daca46e7a2a116716d2eb1f/sqp_order.class

[root@singlelucky ~]# cd /tmp/sqoop-root/compile/

[root@singlelucky compile]# ls

04c79abd0daca46e7a2a116716d2eb1f e24fd4ec30c24475569ee1569d0873a7

[root@singlelucky compile]# vim /etc/profile.d/myenv.sh

[root@singlelucky compile]# cd 04c79abd0daca46e7a2a116716d2eb1f

[root@singlelucky 04c79abd0daca46e7a2a116716d2eb1f]# ls

sqp_order.class sqp_order.jar sqp_order.java

# Add sqp_order.jar to the JDK CLASSPATH in /etc/profile.d/myenv.sh (the path below assumes the jar has been copied to $JAVA_HOME/lib)

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/sqp_order.jar
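
The `find` output above shows two compile directories, each with its own copy of the generated files, so more than one jar can exist. A small sketch for picking the newest one, assuming the directory layout shown above; `newest_sqoop_jar` is a hypothetical helper, not part of Sqoop:

```shell
# Hypothetical helper: print the most recently modified <table>.jar found
# one level below a Sqoop compile directory.
# $1 = compile directory, $2 = table name
newest_sqoop_jar() {
  # ls -t lists the newest file first; head keeps only that entry.
  # Nothing is printed when no jar matches.
  ls -t "$1"/*/"$2".jar 2>/dev/null | head -n 1
}
```

To match the CLASSPATH line above, the jar could then be copied with `cp "$(newest_sqoop_jar /tmp/sqoop-root/compile sqp_order)" "$JAVA_HOME/lib/"`.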

#------ mysql -> hdfs ------

# Full import

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_order \

-m 1 \

--delete-target-dir \

--target-dir /kb12/sqoop/m2h_all \

--fields-terminated-by '\t' \

--lines-terminated-by '\n'

# Column subset: import only selected columns

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_order \

--columns user_name,total_volume \

-m 1 \

--delete-target-dir \

--target-dir /kb12/sqoop/m2h_colcut \

--fields-terminated-by '\t' \

--lines-terminated-by '\n'

# Row and column filtering + multiple mappers (-m 2 with --split-by)

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_order \

--columns user_name,total_volume \

--where "total_volume>=200" \

-m 2 \

--split-by user_name \

--delete-target-dir \

--target-dir /kb12/sqoop/m2h_rowcut \

--fields-terminated-by ',' \

--lines-terminated-by '\n'

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--query "select user_name,total_volume from sqp_order where total_volume>=300 and \$CONDITIONS" \

-m 2 \

--split-by user_name \

--delete-target-dir \

--target-dir /kb12/sqoop/m2h_mgt2 \

--fields-terminated-by ',' \

--lines-terminated-by '\n'
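
In a free-form `--query` import, Sqoop expects the literal token `$CONDITIONS` in the query text and replaces it with a condition for each mapper. That is why the dollar sign is escaped inside the double quotes above. A short sketch of the two equivalent quoting styles; the variable names are illustrative only:

```shell
# Inside double quotes the shell would expand $CONDITIONS, so it is escaped.
q_double="select user_name,total_volume from sqp_order where total_volume>=300 and \$CONDITIONS"

# Inside single quotes nothing is expanded, so no escape is needed.
q_single='select user_name,total_volume from sqp_order where total_volume>=300 and $CONDITIONS'

# Both variables hold the same text, including the literal token.
if [ "$q_double" = "$q_single" ]; then
  echo "identical"
fi
```

Either string can be passed as `--query "$q_double"`; the single-quoted form is easier to read when the query itself contains no single quotes.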

# Incremental import append|merge

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--query "select * from studentinfo where \$CONDITIONS" \

-m 1 \

--target-dir /kb12/sqoop/m2h_incr_append \

--fields-terminated-by ',' \

--lines-terminated-by '\n' \

--check-column stuId \

--incremental append \

--last-value 0

insert into studentinfo values

(49,' CAI 1',32,' male ','14568758132',25201,6),

(50,' coke 1',28,' male ','15314381033',23489,7),

(51,' Pang 1',23,' male ','13892411574',25578,2),

(52,' Wu 1',27,' male ','13063638045',22617,4),

(53,' Meng 1',32,' male ','13483741056',26284,2);

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--query "select * from studentinfo where \$CONDITIONS" \

-m 1 \

--target-dir /kb12/sqoop/m2h_incr_append \

--fields-terminated-by ',' \

--lines-terminated-by '\n' \

--check-column stuId \

--incremental append \

--last-value 48
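
The second run above passes `--last-value 48`, presumably the largest `stuId` already present after the first run, so that only the newly inserted rows are appended. One way to look that value up is to scan the first field of the files already imported. A minimal sketch, assuming `stuId` is the first comma-separated field as in the `select *` import above; `max_first_field` is a hypothetical helper:

```shell
# Hypothetical helper: print the largest numeric value found in the first
# comma-separated field of the lines on stdin (nothing for empty input).
max_first_field() {
  awk -F',' 'NR == 1 || $1 + 0 > max { max = $1 + 0 } END { if (NR > 0) print max }'
}

# Local demonstration with three fake rows; prints 48.
printf '47,a\n48,b\n12,c\n' | max_first_field
```

On the cluster the input could come from `hdfs dfs -cat /kb12/sqoop/m2h_incr_append/* | max_first_field`, and the result would be supplied as the next `--last-value`.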

# Incremental import lastmodified

create table sqp_incr_time(

incrName varchar(20),

incrTime timestamp

);

insert into sqp_incr_time(incrName) values('henry'),('pola'),('ariel'),('john'),('mike'),('jerry'),('mary');

insert into sqp_incr_time(incrName,incrTime) values

('jack','2021-06-29 13:21:40.0'),

('rose','2021-06-29 14:21:40.0'),

('xiaoming','2021-06-29 15:21:40.0'),

('anglea','2021-06-29 16:21:40.0'),

('licheng','2021-06-29 17:21:40.0');

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--query "select * from sqp_incr_time where \$CONDITIONS" \

-m 1 \

--target-dir /kb12/sqoop/m2h_incr_lastmodified \

--fields-terminated-by ',' \

--lines-terminated-by '\n' \

--check-column incrTime \

--incremental lastmodified \

--merge-key incrTime \

--last-value '2021-06-29 13:21:40.0'

# For an initial full load, use this value instead:

# --last-value '0000-00-00 00:00:00'

# Partitioned-table import

# Dynamic partitioning: write the data from one table into multiple partitions of a partitioned table in a single pass

# MySQL: source table

create table sqp_partition(

id int,

name varchar(20),

dotime datetime

);

insert into sqp_partition(id,name,dotime) values

(1,'henry','2021-06-01 12:13:14'),

(2,'pola','2021-06-01 12:55:32'),

(3,'ariel','2021-06-01 13:02:55'),

(4,'rose','2021-06-01 13:22:46'),

(5,'jack','2021-06-01 14:15:12');

insert into sqp_partition(id,name,dotime) values

(6,'henry','2021-06-29 12:13:14'),

(7,'pola','2021-06-29 12:55:32'),

(8,'ariel','2021-06-29 13:02:55'),

(9,'rose','2021-06-29 13:22:46'),

(10,'jack','2021-06-29 14:15:12');

# Hive: partitioned target table

create table sqp_partition(

id int,

name string,

dotime timestamp

)

partitioned by (dodate date)

row format delimited

fields terminated by ','

lines terminated by '\n'

stored as textfile;

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_partition \

--where "cast(dotime as date)='2021-06-01'" \

-m 1 \

--delete-target-dir \

--target-dir /user/hive/warehouse/kb12.db/sqp_partition/dodate=2021-06-01 \

--fields-terminated-by ',' \

--lines-terminated-by '\n'

alter table sqp_partition add partition(dodate='2021-06-01');

# Shell script (sq_m2hv_par.sh)

#!/bin/bash

DATE=`date -d '-1 day' +%F`

sqoop import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--table sqp_partition \

--where "cast(dotime as date)='$DATE'" \

-m 1 \

--delete-target-dir \

--target-dir /user/hive/warehouse/kb12.db/sqp_partition/dodate=$DATE \

--fields-terminated-by ',' \

--lines-terminated-by '\n'

hive -e "alter table kb12.sqp_partition add partition(dodate='$DATE')"
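
The script above always loads yesterday's data. A hedged sketch of how the date could be made overridable for backfills while rejecting malformed input; the helper names are hypothetical, and `date -d` assumes GNU date, as in the original script:

```shell
# Return success only for strings shaped like YYYY-MM-DD
# (this checks the shape, not whether the date exists).
is_iso_date() {
  case "$1" in
    [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Build the partition directory used as --target-dir for a given date.
partition_dir() {
  printf '/user/hive/warehouse/kb12.db/sqp_partition/dodate=%s\n' "$1"
}

# Use the first script argument if given, otherwise yesterday.
DATE="${1:-$(date -d '-1 day' +%F)}"
if is_iso_date "$DATE"; then
  partition_dir "$DATE"
else
  echo "invalid date: $DATE" >&2
fi
```

With this in place, a call such as `./sq_m2hv_par.sh 2021-06-01` would reload a specific day, and the output of `partition_dir` would replace the hard-coded `--target-dir` path.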

=====================================================================

[root@singlelucky sqoop_job]# vim sq_m2hv_par.sh

[root@singlelucky sqoop_job]# chmod u+x sq_m2hv_par.sh

[root@singlelucky sqoop_job]# ./sq_m2hv_par.sh

=====================================================================

# sqoop job

# In sqoop-site.xml, uncomment the following property so that saved jobs can store the password

<property>

<name>sqoop.metastore.client.record.password</name>

<value>true</value>

<description>If true, allow saved passwords in the metastore.

</description>

</property>

# List saved jobs

sqoop job --list

# Delete a job

sqoop job --delete jobname

# Show a job definition

sqoop job --show jobname

# Create a job

sqoop job \

--create job_m2hv_par \

-- import \

--connect jdbc:mysql://singlelucky:3306/test \

--username root \

--password kb12kb12 \

--query "select * from sqp_incr_time where \$CONDITIONS" \

-m 1 \

--target-dir /kb12/sqoop/m2h_incr_lastmodified \

--fields-terminated-by ',' \

--lines-terminated-by '\n' \

--check-column incrTime \

--incremental lastmodified \

--append \

--last-value '2021-06-29 13:21:40.0'

# Execute a job

sqoop job --exec job_m2hv_par

#------ mysql -> hive --------

sqoop import \

--connect jdbc:mysql://192.168.19.130:3306/test \

--username root \

--password kb12kb12 \

--table user_info \

-m 1 \

--hive-import \

--hive-table kb12.user_info \

--create-hive-table

sqoop import \

--connect jdbc:mysql://192.168.19.130:3306/test \

--username root \

--password kb12kb12 \

--table studentinfo \

--where 'stuId>=50' \

-m 1 \

--hive-import \

--hive-table kb12.studentinfo

# Partitioned import into Hive

create table sqp_user_par(

user_id int,

user_name string,

user_account string,

user_pass string,

user_phone string,

user_gender string,

user_pid string,

user_province string,

user_city string,

user_district string,

user_address string,

user_balance decimal(10,2)

)

partitioned by (id_rang string)

row format delimited

fields terminated by ','

lines terminated by '\n'

stored as textfile;

sqoop import \

--connect jdbc:mysql://192.168.19.130:3306/test \

--username root \

--password kb12kb12 \

--table user_info \

--where "user_id between 1 and 19" \

-m 1 \

--hive-import \

--hive-table kb12.sqp_user_par \

--hive-partition-key id_rang \

--hive-partition-value '1-19' \

--fields-terminated-by ',' \

--lines-terminated-by '\n'

#!/bin/bash

B=$1

E=$2

sqoop import \

--connect jdbc:mysql://192.168.19.130:3306/test \

--username root \

--password kb12kb12 \

--table user_info \

--where "user_id between $B and $E" \

-m 1 \

--hive-import \

--hive-table kb12.sqp_user_par \

--hive-partition-key id_rang \

--hive-partition-value "$B-$E" \

--fields-terminated-by ',' \

--lines-terminated-by '\n'
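
The script above loads one id range per call. To cover a larger span of ids, the ranges could be generated in a loop. A minimal sketch; `id_ranges` is a hypothetical helper, and the script name `sq_user_par.sh` in the usage note is assumed, since the original does not name the file:

```shell
# Hypothetical helper: print "begin end" pairs that cover the ids
# from $1 to $2 in chunks of $3 ids each.
# Note: _b and _e are plain (global) shell variables.
id_ranges() {
  _b="$1"
  while [ "$_b" -le "$2" ]; do
    _e=$((_b + $3 - 1))
    # Clamp the last chunk to the upper bound.
    if [ "$_e" -gt "$2" ]; then
      _e="$2"
    fi
    echo "$_b $_e"
    _b=$((_e + 1))
  done
}

# Prints three lines: "1 19", "20 38", "39 50".
id_ranges 1 50 19
```

Each pair could then be handed to the script, for example `id_ranges 1 50 19 | while read -r B E; do ./sq_user_par.sh "$B" "$E"; done`, which would create one `id_rang` partition per range.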

#------ mysql -> hbase -------

sqoop import \

--connect jdbc:mysql://192.168.19.130:3306/test \

--username root \

--password kb12kb12 \

--table studentinfo \

--where "stuid between 20 and 38" \

--hbase-table test:studentinfo \

--column-family base \

--hbase-create-table \

--hbase-row-key stuid

Alternative route: MySQL + JDBC -> hbase-client -> HBase

Java program: java2hbase

java -jar prolog-1.0-jar-with-dependencies.jar /root/data/flume/ logconf/logger.properties

java -jar java2hbase-1.0-jar-with-dependencies.jar mysqlToHbaseConfig/datasources.properties

nohup java -jar java2hbase-1.0-jar-with-dependencies.jar mysqlToHbaseConfig/datasources.properties>/dev/null 2>&1 &

边栏推荐

- Delivery mode design of Spartacus UI of SAP e-commerce cloud

- [unity3d] nested use layout group to make scroll view with dynamic sub object height

- Translation d30 (with AC code POJ 28:sum number)

- Brief analysis of edgedb architecture

- Large neural networks may be beginning to realize: the chief scientist of openai leads to controversy, and everyone quarrels

- 虚幻AI蓝图基础笔记(万字整理)

- A model can do two things: image annotation and image reading Q & A. VQA accuracy is close to human level | demo can be played

- UE illusory engine programmed plant generator setup -- how to quickly generate large forests

- Vscode set JSON file to format automatically after saving

- 2021-10-04

猜你喜欢

【虚幻】过场动画笔记

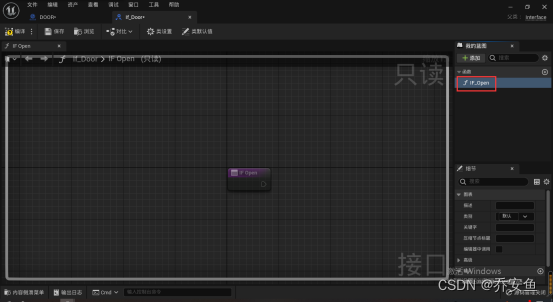

![[unreal] key to open the door blueprint notes](/img/28/f2b00d84dc05183ce93e2a8ee3555f.png)

[unreal] key to open the door blueprint notes

Sum the two numbers to find the target value

Blender model import UE, collision settings

Beautiful and intelligent, Haval H6 supreme+ makes Yuanxiao travel safer

![[Yu Yue education] University Physics (Electromagnetics) reference materials of Taizhou College of science and technology, Nanjing University of Technology](/img/a9/ffd5d8000fc811f958622901bf408d.png)

[Yu Yue education] University Physics (Electromagnetics) reference materials of Taizhou College of science and technology, Nanjing University of Technology

UE4 night lighting notes

pytest--之测试报告allure配置

Applet development summary

【虚幻】按键开门蓝图笔记

随机推荐

【虚幻】过场动画笔记

Pytest-- test report allure configuration

Applet development summary

Bookmark collection management software suspension reading and data migration between knowledge base and browser bookmarks

[illusory] weapon slot: pick up weapons

Feature (5): how to organize information

Introduction and Principle notes of UE4 material

【避坑指南】使用UGUI遇到的坑:Text组件无法首行缩进两格

Project practice, redis cluster technology learning (16)

ue4材质的入门和原理笔记

A model can do two things: image annotation and image reading Q & A. VQA accuracy is close to human level | demo can be played

[pit avoidance guide] pit encountered using ugui: the text component cannot indent the first line by two spaces

2021-10-04

传输优化抽象

pytest学习--base

2021-10-02

Blender volume fog

Database -- acid of transaction -- introduction / explanation

Vscode set JSON file to format automatically after saving

两数之和,求目标值