The previous note introduced Sarsa, which can be used to train the action-value function \(Q_\pi\). This note covers Q-learning, another TD algorithm, which learns the optimal action-value function \(Q^*\). It is the algorithm that was used to train DQN in the earlier note on value-based learning.

8. Q-learning

Picking up the question left open in the previous note, we start by comparing the two algorithms.

8.1 Sarsa VS Q-Learning

Both are TD algorithms, but they solve different problems.

Sarsa

- Sarsa trains the action-value function \(Q_\pi(s,a)\).

- TD target: \(y_t = r_t + \gamma \cdot Q_\pi(s_{t+1},a_{t+1})\)

- A value network is a function approximation of \(Q_\pi\). In the Actor-Critic method, Sarsa is used to update the value network (the critic).

Q-Learning

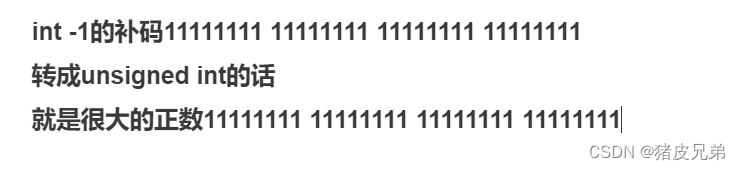

Q-learning trains the optimal action-value function \(Q^*(s,a)\).

TD target: \(y_t = r_t + \gamma \cdot \mathop{max}\limits_{a} Q^*(s_{t+1},a)\), which maximizes \(Q^*\) over the action \(a\).

Note that this is where the two algorithms differ.

Q-learning is the algorithm used to train DQN.

My own summary of the difference: Sarsa bootstraps from the next action \(a_{t+1}\), which is sampled randomly from the policy, whereas Q-learning takes the maximum of \(Q^*\) over all actions.
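The contrast between the two TD targets can be sketched in a few lines of Python. The function names and the numbers below are made up for illustration and are not part of the course material.

```python
def sarsa_target(q_row_next, a_next, r, gamma):
    """Sarsa: bootstrap from the action a_next that the policy actually sampled."""
    return r + gamma * q_row_next[a_next]


def q_learning_target(q_row_next, r, gamma):
    """Q-learning: bootstrap from the highest-valued action in the next state."""
    return r + gamma * max(q_row_next)


# Hypothetical Q-values of the three actions available in s_{t+1}.
q_row_next = [1.0, 3.0, 2.0]
r, gamma = 1.0, 0.5

y_sarsa = sarsa_target(q_row_next, a_next=2, r=r, gamma=gamma)  # 1 + 0.5 * 2 = 2.0
y_q = q_learning_target(q_row_next, r=r, gamma=gamma)           # 1 + 0.5 * 3 = 2.5
print(y_sarsa, y_q)
```

With the same transition, the two targets agree only when the sampled action happens to be the maximizing one.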

The Q-learning algorithm is derived below.

8.2 Derive TD target

Note that the TD targets of Q-learning and Sarsa are different.

The Sarsa note proved this equation: \(Q_\pi(s_t,a_t)=\mathbb{E}[R_t + \gamma \cdot Q_\pi(S_{t+1},A_{t+1})]\)

The equation says that \(Q_\pi\) can be written as the expectation of the reward plus the discounted value of \(Q_\pi\) at the next time step.

Both sides of the equation contain \(Q_\pi\), and the equation holds for every policy \(\pi\).

In particular, it holds for the optimal policy, which we denote \(\pi^*\):

\(Q_{\pi^*}({s_t},{a_t}) = \mathbb{E}[{R_t} + \gamma \cdot Q_{\pi^*}({S_{t+1}},{A_{t+1}})]\)

\(Q_{\pi^*}\) is usually written as \(Q^*\), the optimal action-value function, which gives:

\(Q^*({s_t},{a_t})=\mathbb{E}[{R_t} + \gamma \cdot Q^*({S_{t+1}},{A_{t+1}})]\)

Next, rewrite the \(Q^*\) inside the expectation on the right-hand side in maximized form.

Under the optimal policy, \(A_{t+1} = \mathop{argmax}\limits_{a} Q^*(S_{t+1},a)\); that is, \(A_{t+1}\) must be the action that maximizes \(Q^*\).

Explanation: given the state \(S_{t+1}\), \(Q^*\) scores every action, and the agent executes the action with the highest score.

Therefore \(Q^*(S_{t+1},A_{t+1}) = \mathop{max}\limits_{a} Q^*(S_{t+1},a)\): since \(A_{t+1}\) is the optimal action, it attains the maximum of \(Q^*\).

Substituting this into the expectation gives: \(Q^*(s_t,a_t)=\mathbb{E}[R_t + \gamma \cdot \mathop{max}\limits_{a} Q^*(S_{t+1},a)]\)

The left-hand side is the prediction at time \(t\). It equals the expectation on the right, which contains a maximization. The expectation is hard to compute exactly, so we use a Monte Carlo approximation, replacing the random variables \(R_t\) and \(S_{t+1}\) with the observed values \(r_t\) and \(s_{t+1}\).

The Monte Carlo approximation is \(Q^*(s_t,a_t) \approx r_t + \gamma \cdot \mathop{max}\limits_{a} Q^*(s_{t+1},a)\); the right-hand side is called the TD target \(y_t\).

Because \(y_t\) contains a real observation, it is more reliable than \(Q^*(s_t,a_t)\) on the left, which is a pure guess. So we try to move \(Q^*(s_t,a_t)\) closer to \(y_t\).
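To see why one sampled TD target is a reasonable stand-in for the expectation, here is a minimal sketch. It assumes a toy setting in which, from a fixed \((s_t,a_t)\), the environment moves to one of two next states with equal probability. The table `Q_STAR`, the list `OUTCOMES`, and all numbers are hypothetical.

```python
import random

GAMMA = 0.5
# Hypothetical optimal Q-values: Q_STAR[state] is a list with one entry per action.
Q_STAR = {0: [1.0, 2.0], 1: [4.0, 0.0]}
# Hypothetical outcomes of taking a_t in s_t: (probability, reward, next state).
OUTCOMES = [(0.5, 0.0, 0), (0.5, 2.0, 1)]


def td_target(r, s_next):
    """y_t = r_t + gamma * max_a Q*(s_{t+1}, a) for one observed outcome."""
    return r + GAMMA * max(Q_STAR[s_next])


# Exact expectation over R_t and S_{t+1}: the right-hand side of the equation.
expected = sum(p * td_target(r, s_next) for p, r, s_next in OUTCOMES)

rng = random.Random(0)  # fixed seed so the run is repeatable


def sample_transition():
    """Draw one (r_t, s_{t+1}) pair according to the outcome probabilities."""
    weights = [p for p, _, _ in OUTCOMES]
    _, r, s_next = rng.choices(OUTCOMES, weights=weights)[0]
    return r, s_next


# Each sample is a single-observation TD target; their average approaches `expected`.
samples = [td_target(*sample_transition()) for _ in range(10_000)]
sample_mean = sum(samples) / len(samples)
print(expected, sample_mean)
```

Each individual target is either 1.0 or 4.0 here, so a single sample is noisy, but it is unbiased: the average over many samples approaches the exact expectation of 2.5.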

8.3 The algorithm process

a. Tabular form

- observation One transition \(({s_t},{a_t},{r_t},{s_{t+1}})\)

- use \(s_{t+1} \ r_t\) Calculation TD target:\({r_t} + \gamma \cdot \mathop{max}\limits_{a} Q^*({s_{t+1}},{a})\)

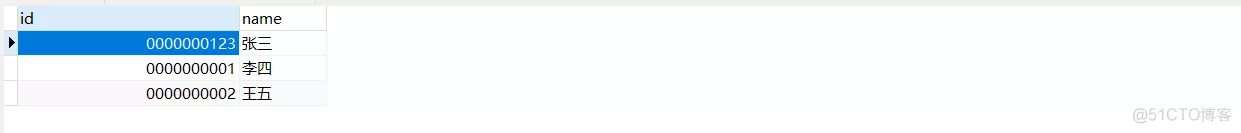

- Q-star This is a table like the following figure :

Find the status \(s_{t+1}\) Corresponding That's ok , Find the biggest element , Namely \(Q^*\) About a The maximum of .

- Calculation TD error: \(\delta_t = Q^*({s_t},{a_t}) - y_t\)

- to update \(Q^*({s_t},{a_t}) \leftarrow Q^*({s_t},{a_t}) - \alpha \cdot \delta_t\), to update \((s_{t},a_t)\) Location , Give Way Q-star It's closer to \(y_t\)
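The steps above can be sketched as a single update function. This is a minimal illustration that assumes states and actions are integer indices and the table is a list of rows; the name `q_learning_update` and the example values are hypothetical.

```python
def q_learning_update(q_table, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the TD error."""
    # TD target: the largest element in the row of the next state.
    y = r + gamma * max(q_table[s_next])
    # TD error: current estimate minus target.
    delta = q_table[s][a] - y
    # Move the (s, a) entry toward the target.
    q_table[s][a] -= alpha * delta
    return delta


# Two states, two actions; row index = state, column index = action.
q_table = [[0.0, 0.0],
           [1.0, 2.0]]

delta = q_learning_update(q_table, s=0, a=1, r=1.0, s_next=1, alpha=0.5, gamma=0.5)
print(delta, q_table)  # y = 1 + 0.5 * 2 = 2, delta = -2, entry (0, 1) becomes 1.0
```

Only the entry at \((s_t,a_t)\) changes; every other entry of the table is left untouched.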

b. DQN form

The DQN \(Q^*(s,a;w)\) approximates \(Q^*(s,a)\). Its input is the current state \(s\), and its output is a score for every action.

The agent then chooses the action with the largest value, \(a_t = \mathop{argmax}\limits_{a} Q^*(s_t,a;w)\), and executes \(a_t\). The collected transitions are used to learn the parameters \(w\), so that the q values predicted by the DQN become more accurate.

The process of training DQN with Q-learning:

- Observe a transition \((s_t,a_t,r_t,s_{t+1})\).

- TD target: \(y_t = r_t + \gamma \cdot \mathop{max}\limits_{a} Q^*(s_{t+1},a;w)\)

- TD error: \(\delta_t = Q^*(s_t,a_t;w) - y_t\)

- Gradient descent, update the parameters: \(w \leftarrow w -\alpha \cdot \delta_t \cdot \frac{\partial Q^*(s_t,a_t;w)}{\partial w}\)
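The same four steps can be sketched without a deep-learning library by replacing the neural network with a linear model, \(Q(s,a;w) = w_a \cdot s\). This is an assumption made only to keep the example short: a real DQN uses a neural network and automatic differentiation. The function names are hypothetical. The target \(y_t\) is treated as a constant when taking the gradient.

```python
def q_values(w, s):
    """Score every action: Q(s, a; w) = dot(w[a], s) for a linear stand-in model."""
    return [sum(wi * si for wi, si in zip(row, s)) for row in w]


def greedy_action(w, s):
    """a_t = argmax_a Q(s_t, a; w)."""
    q = q_values(w, s)
    return max(range(len(q)), key=q.__getitem__)


def dqn_step(w, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """One Q-learning gradient step on the parameters w; returns the TD error."""
    y = r + gamma * max(q_values(w, s_next))  # TD target, held constant
    delta = q_values(w, s)[a] - y             # TD error
    # For the linear model, dQ(s, a; w)/dw[a] = s and the gradient of other rows is 0.
    w[a] = [wi - alpha * delta * si for wi, si in zip(w[a], s)]
    return delta


# Two actions, two state features, parameters initialised to zero.
w = [[0.0, 0.0], [0.0, 0.0]]
s, s_next = [1.0, 0.0], [0.0, 1.0]

delta = dqn_step(w, s, a=0, r=1.0, s_next=s_next, alpha=0.5, gamma=0.5)
print(delta, w, greedy_action(w, s))
```

After this single step the score of action 0 in state `s` rises from 0 to 0.5, so the greedy action in `s` is action 0.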

x. Reference tutorial

- Video course: Deep Reinforcement Learning (complete), on bilibili

- Original video address: https://www.youtube.com/user/wsszju

- Courseware address: https://github.com/wangshusen/DeepLearning
