当前位置:网站首页>What are the implementations of distributed locks?

What are the implementations of distributed locks?

2020-11-09 12:17:00 【osc_8hwmnuba】

One 、 Business scenario

The same jvm Multiple threads operate on the same stateful variable , Can pass JVM Internal lock ensures thread safety .

If more than one JVM Operate on the same stateful variable , How to ensure thread safety ?

At this time, we need distributed lock to play its role

Two 、 characteristic

Distributed systems tend to have large traffic 、 High concurrency , There are higher requirements for high availability and high performance of distributed locks . The general distributed lock scheme needs to meet the following requirements :

There are highly available lock acquisition and release functions

The performance of acquiring lock and releasing lock is better

If this lock can be reentered ( Avoid deadlock )

This lock is better to be a blocking lock ( Consider this one according to business needs )

This lock is better to be a fair lock ( Consider this one according to business needs )

3、 ... and 、 Distributed lock scheme based on Database

1、 Only do distributed locks based on the table primary key

Take advantage of the unique features of the primary key , If there are multiple requests submitted to the database at the same time , The database will ensure that only one insert operation can succeed , Then we can think that the successful thread obtained the lock of the method , When the method is finished , If you want to release the lock , Delete this database record

1.1、 shortcoming

Database single point

There is no lock timeout mechanism

Do not reenter

Not fair lock

Non blocking lock

1.2、 Optimization point

Database master-slave backup , Solve a single problem . Because there is a delay in master-slave synchronization , Data may be inconsistent

Timed task detection locks automatically release or pass when timeout

connection.commit()Operation to release the lockLock up plus machine and thread information , Check before locking , Support reentry

In the middle of table , Record machine threads that failed to lock , Sort by creation time

Spin for blocking effect

1.3、 principle

General database use innodb Storage engine , Row level lock will be added when inserting data . So as to achieve the effect of sequential execution of concurrent requests

2、 Through the database mvcc Achieve optimistic lock

Update the data with the specified version number , If the version number is updated in advance by other threads , Then this update failed

2.1、 shortcoming

Intrusion into database tables is large , Each watch needs to add version Field

There are a lot of update failures in high and post

3、 The limitations of databases

Use exclusive locks for distributed locks lock, So an exclusive lock does not submit for a long time , It will take up the database connection . Once there are more connections like this , It is possible to burst the database connection pool .

Database writes are disks io, Poor performance

Database can support the largest qps There are also restrictions , It's hard to meet the needs of high concurrency

Four 、 be based on redis Implement distributed locks

1、 principle

1.1、 Lock

Atomic command :SET key value NX PX milliseconds

PX milliseconds Expiration time , Prevent lock thread from dying and cannot be unlocked . Expiration time is set too short , Maybe the lock thread has not finished executing the normal logic , It's time to expire

NX If you don't have this key Is set , There is key Return failed

value Random value ( It's usually used UUID), Can only be unlocked by the lock thread

1.2、 Unlock

lua Script implementation get value,delete The operation of . It's set when locking value It's a random value that doesn't repeat , You have to UUID The same can unlock

2、 shortcoming

Get lock is non blocking

Not fair lock , Does not support scenarios that require fair locks

redis There is a delay , stay master When the master-slave switch occurs during downtime , May cause lock failure

5、 ... and 、 be based on Redlock The algorithm implements distributed lock .redisson Yes Redlock The algorithm is encapsulated

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.3.2</version>

</dependency>

1、 principle

stay Redis In the distributed environment of , We assume that there is N individual Redis master. These nodes Completely independent of each other , There is no master-slave replication or other cluster coordination mechanism . We make sure that we will be in N Examples are used in Redis The same way to acquire and release locks in a single instance . Now let's assume that there is 5 individual Redis master node , At the same time we need to be in 5 Run these on servers Redis example , This ensures that they don't all go down at the same time .

1.1、 Lock

Suppose there is cluster-1,cluster-2,cluster-3 A total of 3 individual cluster Pattern clusters . If you want to get distributed locks , Then I need to ask 3 individual cluster Cluster adoption EVAL Command execution LUA Script , need 3/2+1=2, At least 2 individual cluster Cluster response successful .set Of value To be unique ,redisson Of value adopt UUID+threadId Guarantee value Uniqueness

1. Get the current time ( In milliseconds ).

2. Take turns using the same key And random values in N Lock requested on nodes , In this step , Clients in each master When the lock is requested , There will be a much smaller timeout than the total lock release time . For example, if the automatic release time of lock is 10 Second , The timeout for each node lock request may be 5-50 Millisecond range , This can prevent a client from going down master Blocking on nodes for too long , If one master Node not available , We should try the next one as soon as possible master node .

3. The client calculates the time taken to acquire the lock in the second step , Only when the client is in most master Successfully acquired lock on node ( Here it is. 3 individual ), And the total time consumed does not exceed the lock release time , This lock is considered to be a success .

4. If the lock is successful , Now the automatic lock release time is the initial lock release time minus the time consumed to acquire the lock .

5. If the lock acquisition fails , Whether it's because there are no more than half of the locks that succeed (N/2+1) Or because the total consumption time exceeds the lock release time , The client will go to every master Release lock on node , Even the locks that he didn't think were successful .

1.2、 Release the lock

You need to release the lock on all nodes , Whether or not the lock has been successfully acquired at this node before .

If the client does not acquire the lock at most nodes , Be sure to release the lock as soon as possible on the node that obtains the lock successfully , So there's no need to wait for key The lock can only be retrieved after the timeout

2、 Safety demonstration

Before the start , Let's assume that the client can acquire the lock at most nodes , In this way, all nodes will contain one with the same lifetime key. But here's the thing , This key It's set at different time points , So these key It will also time out at different times , But let's assume the worst is the first key Is in T1 Time setting ( When the client connects to the first server ), the last one key Is in T2 Time setting ( The time when the client received the result from the last server ), from T2 Time begins , We can confirm the earliest overtime key At least it will exist for MIN_VALIDITY=TTL-(T2-T1)-CLOCK_DRIFT,TTL It's lock timeout 、(T2-T1) It's the last time to acquire the lock ,CLOCK_DRIFT It's the clock difference between different processes , This is to compensate for the previous (T2-T1). Other key It will be after this time point , So we can make sure that key Before this point in time, at least all exist at the same time .

If the time taken by a client to acquire most node locks is close to or even exceeds the maximum effective time of locks ( Is that we SET Operation settings TTL value ), Then the system will consider the lock invalid and release the locks on these nodes , So we just need to consider the situation that the time to acquire most node locks is less than the effective time . under these circumstances , According to our previous proof , stay MIN_VALIDITY Within time , No client can retrieve the lock successfully , So multiple clients can obtain the result of lock successfully at the same time , It will only take more time for most nodes to acquire locks than TTL In the case of time , In fact, these locks will fail in this case

6、 ... and 、 be based on zookeeper Implement distributed locks

1、 Basic exclusive lock ( Not fair lock )

1.1、 principle

Use temporary nodes and watch Mechanism . Each lock occupies a normal node /lock, When it is necessary to acquire the lock /lock Create a temporary node in the directory , A successful creation indicates a successful lock acquisition , Failure watch/lock node , Remove lock after deletion . The advantage of temporary nodes is that the nodes that can be locked automatically after the process is hung are automatically deleted and the lock is cancelled .

1.2、 shortcoming

All processes that fail to acquire locks listen to the parent node , It's easy to herd , That is, when the lock is released, all waiting processes create nodes together , There's a lot of concurrency .

2、 Optimized exclusive lock ( Fair lock )

2.1、 principle

Lock to create temporary ordered nodes , Every locked node can create a node successfully , It's just that the serial number is different . Only the one with the smallest number can have a lock , If the node sequence number is not the smallest then watch The previous node whose sequence number is smaller than itself ( Fair lock ).

3、 Shared lock

3.1、 principle

Create a temporary sequence node under the lock node . Read node is R+ Serial number , Write the node as W+ Serial number . After creating the node , Get all child nodes , Register the change of the child node of the lock node watcher monitor , Determine the position of your serial number in all child nodes . For read requests , There is no write node smaller than its serial number , It means that the shared lock is obtained , Execute read logic . For write requests , If you are not the lowest number of child nodes , You need to enter and wait . Received watcher Upon receipt of the notice , Repeatedly acquire the lock .

3.2、 shortcoming

Shared lock herding . a large number of watcher Notification and child node list get , Two operations run repeatedly . When the cluster scale is relatively large , Would be right zookeeper Servers cause huge performance impact and network impact

3.3、 Optimize

Read request , Listen to write nodes smaller than yourself . Write requests , Listen to the last node smaller than yourself .

4、zookeeper limited

Performance may not be as high as cache service , Because every time you create a lock and release a lock , All need to be created dynamically 、 Destroy the temporary node to implement the lock function .

ZK You can only create and delete nodes through Leader Server to execute , Then synchronize the data to all Follower On the machine .

Concurrency support is not as good as redis

If you think the article is good , At the end of the article ???? It's back , Remember to give it to me 「 give the thumbs-up 」 and 「 Looking at 」 Oh ~

版权声明

本文为[osc_8hwmnuba]所创,转载请带上原文链接,感谢

边栏推荐

- 真正拖垮你的,是沉没成本

- 理解 OC 中 RunLoop

- Gather in Beijing! Openi / O 2020 Qizhi Developer Conference enters countdown

- Jsliang job series - 08 - handwritten promise

- Kubernetes业务日志收集与监控

- 嗯,查询滑动窗口最大值的这4种方法不错....

- AndroidStudio导入定制化的framework classess.jar AS 4.0.1版本亲测有效

- Tidb x micro banking reduces time consumption by 58%, and distributed architecture helps to realize inclusive finance

- SQL statement to achieve the number of daffodils

- Pay attention to the request forwarding problem of. Net core

猜你喜欢

配置交换机Trunk接口流量本地优先转发(集群/堆叠)

Kubernetes business log collection and monitoring

Stack & queue (go) of data structure and algorithm series

inet_ Pton () and INET_ Detailed explanation of ntop() function

From coding, network transmission, architecture design, Tencent cloud high quality, high availability real-time audio and video technology practice

libssl对CentOS登录的影响

Solve the problem of idea shortcut key Alt + insert invalid

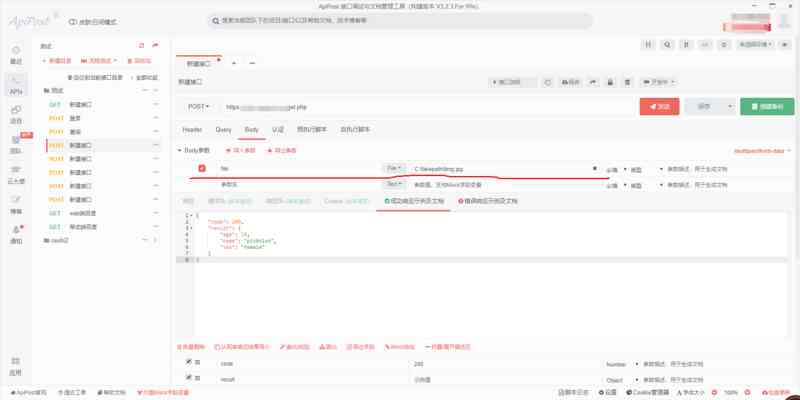

Interface tests how to pass files in post requests

通配符SSL证书应该去哪里注册申请

使用TreeView树型菜单栏(递归调用数据库自动创建菜单)

随机推荐

Dynamo: a typical distributed system analysis

Show profile analysis of SQL statement performance overhead

jsliang 求职系列 - 08 - 手写 Promise

Handwriting Koa.js Source code

On the calculation of non interaction polarizability

Adobe experience design / XD 2020 software installation package (with installation tutorial)

050_ object-oriented

Gather in Beijing! Openi / O 2020 Qizhi Developer Conference enters countdown

服务应用 ClockService安卓实现闹钟

Android check box and echo

Where should wild card SSL certificate register and apply

An attempt to read or write to protected memory occurred using the CopyMemory API. This usually indicates that other memory is corrupted.

From coding, network transmission, architecture design, Tencent cloud high quality, high availability real-time audio and video technology practice

JVM learning (6) - memory model and thread

Flink 系例 之 Reduce

Glsb involves load balancing algorithm

手写Koa.js源码

Setting up a proxy for the WGet command

阿里、腾讯、百度、网易、美团Android面试经验分享,拿到了百度、腾讯offer

技美那么贵,不如找顾问 | AALab企业顾问业务