
2019 Alibaba cluster dataset Usage Summary

2022-07-06 18:03:00 【5-StarrySky】


Some details in the dataset

In the offline workload instance table (batch_instance), the start and end times of instances are problematic. If you check carefully, you will find instances whose start time is later than their end time, so subtracting the two gives a negative duration. I did not catch this while debugging at first; only when I noticed one day that my workload values were negative did I track down the cause. For reference, this is how I define the workload of a task:

    cloudlet_total = self.cpu_avg * (self.finished_time_data_set - self.start_time_data_set)
    cloudmem_total = self.mem_avg * (self.finished_time_data_set - self.start_time_data_set)

The second pitfall is the CPU count used above. For offline workloads, the CPU value in the instance table is the number of cores multiplied by 100, so it needs to be normalized, because the CPU count of a machine node is not scaled this way (it is simply 96). The same applies to the CPU values in container_meta: a value such as 400 has to be divided by 100.
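The two pitfalls can be handled together in a small helper. This is only a sketch: the function names `normalize_cpu` and `workload` are hypothetical, and returning `None` for a record whose start time is later than its end time is one possible policy (dropping the record), not necessarily what the original project does.

```python
def normalize_cpu(cpu_raw, scale=100.0):
    """Convert a CPU value stored as cores * 100 into cores."""
    return cpu_raw / scale


def workload(avg_usage, start_time, end_time):
    """Return avg_usage * duration, or None when the interval is invalid."""
    duration = end_time - start_time
    if duration < 0:
        # Start later than end: flag the record instead of
        # silently producing a negative workload.
        return None
    return avg_usage * duration


cpu_cores = normalize_cpu(400)         # 400 in the trace means 4 cores
good = workload(cpu_cores, 100, 160)   # valid interval of 60 time units
bad = workload(cpu_cores, 160, 100)    # start > end, flagged as invalid
```

Callers can then filter out `None` results before summing workloads, which makes the bad records visible instead of letting them cancel out valid ones.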

Creating JOBs and APPs

    # Assemble the APPs; one APP contains multiple containers
    def create_app(self):
        container_dao = ContainerDao(self.a)
        container_data_list = container_dao.get_container_list()
        container_data_groupby_list = container_dao.get_container_data_groupby()
        for app_du, count in container_data_groupby_list:
            self.app_num += 1
            num = 0
            app = Apps(app_du)
            index_temp = -1
            for container_data in container_data_list:
                if container_data.app_du == app_du:
                    index_temp += 1
                    container = Container(app_du)
                    container.set_container(container_data)
                    container.index = index_temp
                    app.add_container(container)
                    num += 1
                    # Stop scanning once every container of this APP is found
                    if num == count:
                        # print(app.app_du + "")
                        # print(num)
                        app.set_container_num(num)
                        self.App_list.append(app)
                        break

    # Create the JOBs
    def create_job(self):
        instance_dao = InstanceDao(self.b)
        task_dao = TaskDao(self.b)
        task_data_list = task_dao.get_task_list()
        job_group_by = task_dao.get_job_group_by()
        instance_data_list = instance_dao.get_instance_list()
        for job_name, count in job_group_by:
            self.job_num += 1
            job = Job(job_name)
            for task_data in task_data_list:
                if task_data.job_name == job_name:
                    task = Task(job_name)
                    task.set_task(task_data)
                    # Attach every instance that belongs to this task
                    for instance_data in instance_data_list:
                        if instance_data.job_name == job_name and instance_data.task_name == task_data.task_name:
                            instance = Instance(task_data.task_name, job_name)
                            instance.set_instance(instance_data)
                            task.add_instance(instance)
                    task.prepared()
                    job.add_task(task)
            # The following statement can be deleted; removing it drops the DAG relationships
            job.DAG()
            self.job_list.append(job)
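Both create_app and create_job rescan the full record list for every group, which gets slow on larger slices of the trace. A possible alternative is to index the records once by their key and then look each group up directly. The sketch below uses plain dictionaries as stand-ins for the instance records, and `index_instances` is a hypothetical helper name, not part of the original code.

```python
from collections import defaultdict


def index_instances(instance_rows):
    """Group instance records by (job_name, task_name) in a single pass."""
    index = defaultdict(list)
    for row in instance_rows:
        index[(row["job_name"], row["task_name"])].append(row)
    return index


# Stand-in records; the real code would use the objects from InstanceDao.
instance_rows = [
    {"job_name": "j_1", "task_name": "M1", "instance_name": "ins_1"},
    {"job_name": "j_1", "task_name": "R2_1", "instance_name": "ins_2"},
    {"job_name": "j_1", "task_name": "M1", "instance_name": "ins_3"},
    {"job_name": "j_2", "task_name": "M1", "instance_name": "ins_4"},
]
index = index_instances(instance_rows)
names = [row["instance_name"] for row in index[("j_1", "M1")]]
```

With such an index, the innermost loop of create_job becomes a single lookup per task instead of a scan over all instances.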

    # Build the DAG relationships
    def DAG(self):
        for task in self.task_list:
            # Strip the leading type letter, e.g. "M2_1" -> "2_1"
            str_list = task.get_task_name()[1:]
            num_list = str_list.split('_')
            # Sequence number of the current task, e.g. "1" for M1
            start = num_list[0]
            del num_list[0]
            # The trailing numbers are dependencies: in M2_1, task 1 points to task 2,
            # so 1 is the predecessor of 2 and 2 is the successor of 1
            for task_1 in self.task_list:
                str_list_1 = task_1.get_task_name()[1:]
                num_list_1 = str_list_1.split('_')
                del num_list_1[0]
                # Check whether task_1 depends on the current task
                for num in num_list_1:
                    if num == start:
                        # Save the successor
                        task.add_subsequent(task_1.get_task_name())
                        # Save the predecessor
                        task_1.add_precursor(task.get_task_name())
                        # task_1.add_subsequent(task.get_task_name())
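The naming rule that DAG relies on can be tried out in isolation. The following sketch assumes every task name is one type letter followed by the task's own number and then the numbers of the tasks it depends on, separated by underscores (as in M2_1); names that do not follow this pattern are not handled. `parse_task_name` and `build_dag` are hypothetical helpers written for illustration.

```python
def parse_task_name(task_name):
    """Split a name such as "R3_1_2" into (own_id, [dependency ids])."""
    parts = task_name[1:].split("_")
    return parts[0], parts[1:]


def build_dag(task_names):
    """Return (predecessors, successors) dicts keyed by task name."""
    id_to_name = {parse_task_name(name)[0]: name for name in task_names}
    predecessors = {name: [] for name in task_names}
    successors = {name: [] for name in task_names}
    for name in task_names:
        _, deps = parse_task_name(name)
        for dep_id in deps:
            dep_name = id_to_name.get(dep_id)
            if dep_name is None:
                continue  # dependency is not among the given tasks
            predecessors[name].append(dep_name)
            successors[dep_name].append(name)
    return predecessors, successors


preds, succs = build_dag(["M1", "M2_1", "R3_1_2"])
```

Here `preds["R3_1_2"]` lists M1 and M2_1, and `succs["M1"]` lists M2_1 and R3_1_2, which mirrors what add_precursor and add_subsequent record in the method above.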

How to read data and create corresponding instances

    import pandas as pd

    from data.containerData import ContainerData

    """
    Read container data from the dataset.

    Attribute:
        a: selects which dataset to use
            = 1: dataset 1, 124 containers in 20 groups
            = 2: dataset 2, 500 containers in 66 groups
            = 3: dataset 3, 1002 containers in 122 groups
    """

    # Only the container DAO is shown here as an example
    class ContainerDao:
        def __init__(self, a):
            # Which dataset to load (see the docstring above)
            self.a = a

        # Read the container data from the dataset and store it in container_data_list
        def get_container_list(self):
            # Columns: 0 container ID, 1 timestamp, 2 deployment unit, 3 status,
            # 4 CPU request, 5 CPU limit, 6 memory size, 7 container type, 8 finished time
            columns = ['container_id','time_stamp','app_du','status','cpu_request','cpu_limit','mem_size','container_type','finished_time']
            filepath_or_buffer = "G:\\experiment\\data_set\\container_meta\\app_"+str(self.a)+".csv"
            container_dao = pd.read_csv(filepath_or_buffer, names=columns)
            temp_list = container_dao.values.tolist()
            # print(temp_list)
            container_data_list = list()
            for data in temp_list:
                # print(data)
                temp_container = ContainerData()
                temp_container.set_container_data(data)
                container_data_list.append(temp_container)
                # print(temp_container)
            return container_data_list

        # Read the precomputed (app_du, count) pairs for the selected dataset
        def get_container_data_groupby(self):
            """
            columns = ['container_id', 'machine_id ', 'time_stamp', 'app_du', 'status', 'cpu_request', 'cpu_limit', 'mem_size']
            filepath_or_buffer = "D:\\experiment\\data_set\\container_meta\\app_" + str(self.a) + ".csv"
            container_dao = pd.read_csv(filepath_or_buffer, names=columns)
            temp_container_dao = container_dao
            temp_container_dao['count'] = 0
            temp_container_dao.groupby(['app_du']).count()['count']
            :return:
            """
            temp_filepath = "G:\\experiment\\data_set\\container_meta\\app_" + str(self.a) + "_groupby.csv"
            container_dao_groupby = pd.read_csv(temp_filepath)
            container_data_groupby_list = container_dao_groupby.values.tolist()
            return container_data_groupby_list
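The post does not show how the `_groupby.csv` files were produced, only that create_app unpacks each of their rows as an (app_du, count) pair. As a rough illustration of how such pairs could be derived from the container file, here is a sketch using only the standard library. The sample rows, the constant `APP_DU_COLUMN`, and the function `count_by_app` are all made up for the example.

```python
import csv
import io
from collections import Counter

# Hypothetical sample rows in the column order used by get_container_list.
SAMPLE = """c_1,0,app_A,started,400,400,1.56,type_1,100
c_2,0,app_B,started,800,800,3.13,type_1,200
c_3,5,app_A,started,400,400,1.56,type_1,300
"""

APP_DU_COLUMN = 2  # position of app_du in the column list


def count_by_app(csv_file):
    """Return [app_du, count] pairs in order of first appearance."""
    counts = Counter(row[APP_DU_COLUMN] for row in csv.reader(csv_file))
    return [[app_du, n] for app_du, n in counts.items()]


groupby_rows = count_by_app(io.StringIO(SAMPLE))
```

The same function works on a real file by passing an open file handle instead of the `io.StringIO` wrapper.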

The corresponding ContainerData class:

    class ContainerData:
        def __init__(self):
            pass

        # Container ID in the dataset
        def set_container_id(self, container_id):
            self.container_id = container_id

        # Machine ID in the dataset
        def set_machine_id(self, machine_id):
            self.machine_id = machine_id

        # Deployment unit (container group) in the dataset, used to build clusters
        def set_deploy_unit(self, app_du):
            self.app_du = app_du

        # Timestamp in the dataset
        def set_time_stamp(self, time_stamp):
            self.time_stamp = time_stamp

        # CPU limit in the dataset
        def set_cpu_limit(self, cpu_limit):
            self.cpu_limit = cpu_limit

        # CPU request in the dataset
        def set_cpu_request(self, cpu_request):
            self.cpu_request = cpu_request

        # Requested memory size in the dataset
        def set_mem_size(self, mem_size):
            self.mem_request = mem_size

        # Container status in the dataset
        def set_state(self, state):
            self.state = state

        # Container type in the dataset
        def set_container_type(self, container_type):
            self.container_type = container_type

        # Completion time in the dataset
        def set_finished_time(self, finished_time):
            self.finished_time = finished_time

        # Initialize the object from one row of the dataset
        def set_container_data(self, data):
            # Container ID
            self.set_container_id(data[0])
            # self.set_machine_id(data[1])
            # Container timestamp (arrival time)
            self.set_time_stamp(data[1])
            # Container group
            self.set_deploy_unit(data[2])
            # Status
            self.set_state(data[3])
            # CPU request
            self.set_cpu_request(data[4])
            # CPU limit
            self.set_cpu_limit(data[5])
            # Memory size
            self.set_mem_size(data[6])
            # Container type
            self.set_container_type(data[7])
            # Completion time
            self.set_finished_time(data[8])
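For comparison, the same record could also be written as a dataclass, which replaces the individual setters with typed fields. This is a different technique from the class above, shown only as a sketch: the class name `ContainerRecord`, the field types, and the sample row are assumptions, while the field order follows the nine columns read by get_container_list.

```python
from dataclasses import dataclass


@dataclass
class ContainerRecord:
    """Hypothetical dataclass variant of ContainerData."""
    container_id: str
    time_stamp: int
    app_du: str
    state: str
    cpu_request: float
    cpu_limit: float
    mem_request: float
    container_type: str
    finished_time: int

    @classmethod
    def from_row(cls, row):
        """Build a record from a 9-element row in the CSV column order."""
        return cls(*row)


# Made-up row for illustration only.
record = ContainerRecord.from_row(
    ["c_1", 0, "app_A", "started", 400, 400, 1.56, "type_1", 100]
)
cpu_cores = record.cpu_request / 100  # CPU values are stored as cores * 100
```

A row with the wrong number of columns raises a `TypeError` in `from_row`, so malformed input is caught when the record is built.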


边栏推荐

- 模板于泛型编程之declval

- Wechat applet obtains mobile number

- Pytest learning ----- detailed explanation of the request for interface automation test

- HMS core machine learning service creates a new "sound" state of simultaneous interpreting translation, and AI makes international exchanges smoother

- RB157-ASEMI整流桥RB157

- Video fusion cloud platform easycvr adds multi-level grouping, which can flexibly manage access devices

- VR panoramic wedding helps couples record romantic and beautiful scenes

- 容器里用systemctl运行服务报错:Failed to get D-Bus connection: Operation not permitted(解决方法)

- The shell generates JSON arrays and inserts them into the database

- Compilation principle - top-down analysis and recursive descent analysis construction (notes)

猜你喜欢

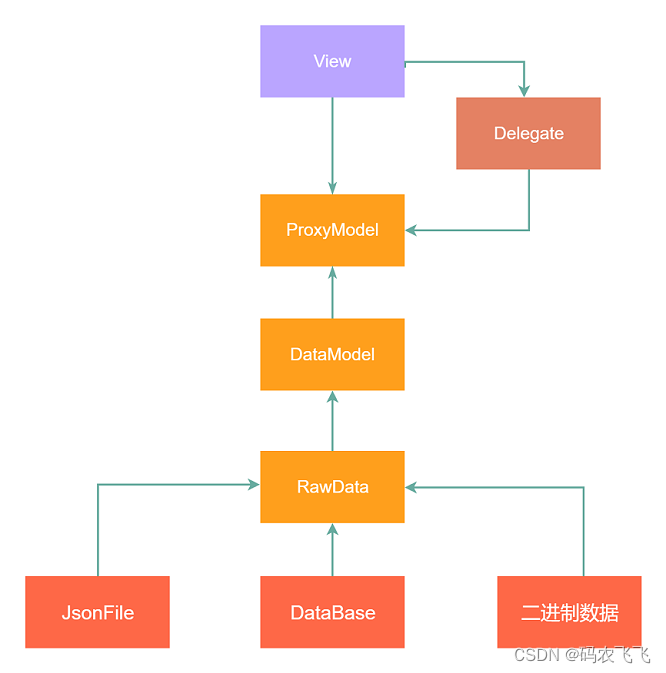

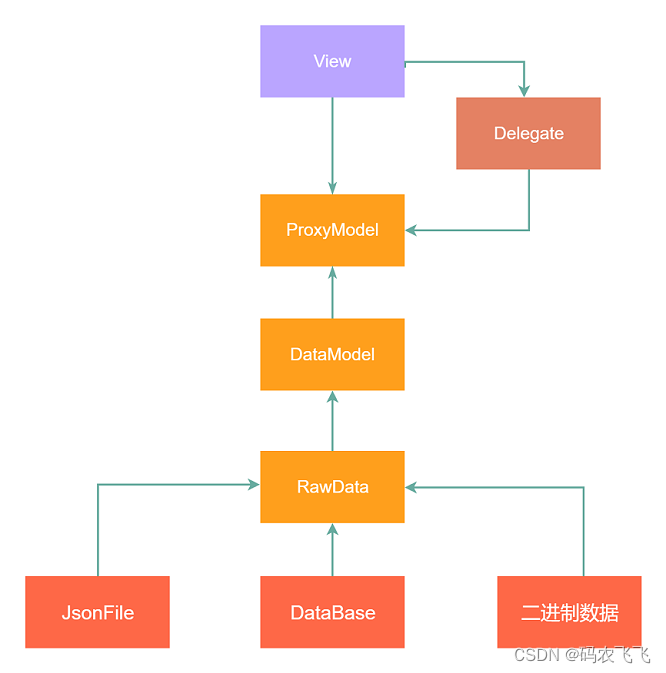

QT中Model-View-Delegate委托代理机制用法介绍

ASEMI整流桥DB207的导通时间与参数选择

Introduction to the usage of model view delegate principal-agent mechanism in QT

78 岁华科教授逐梦 40 载,国产数据库达梦冲刺 IPO

Pytest learning ----- detailed explanation of the request for interface automation test

FMT开源自驾仪 | FMT中间件:一种高实时的分布式日志模块Mlog

Establishment of graphical monitoring grafana

F200——搭载基于模型设计的国产开源飞控系统无人机

Alibaba brand data bank: introduction to the most complete data bank

kivy教程之在 Kivy 中支持中文以构建跨平台应用程序(教程含源码)

随机推荐

一体化实时 HTAP 数据库 StoneDB,如何替换 MySQL 并实现近百倍性能提升

Shell input a string of numbers to determine whether it is a mobile phone number

QT中Model-View-Delegate委托代理机制用法介绍

历史上的今天:Google 之母出生;同一天诞生的两位图灵奖先驱

Appium automated test scroll and drag_ and_ Drop slides according to element position

Why should Li Shufu personally take charge of building mobile phones?

Cool Lehman has a variety of AI digital human images to create a vr virtual exhibition hall with a sense of technology

Transfer data to event object in wechat applet

[introduction to MySQL] the first sentence · first time in the "database" Mainland

How to use scroll bars to dynamically adjust parameters in opencv

node の SQLite

1700C - Helping the Nature

微信小程序获取手机号

开源与安全的“冰与火之歌”

OpenCV中如何使用滚动条动态调整参数

2022年大厂Android面试题汇总(二)(含答案)

分布式不来点网关都说不过去

Alibaba brand data bank: introduction to the most complete data bank

How to solve the error "press any to exit" when deploying multiple easycvr on one server?

After entering Alibaba for the interview and returning with a salary of 35K, I summarized an interview question of Alibaba test engineer