Crawler career from scratch (II): crawling photos of pretty girls ② (the website has since been disabled)

2022-07-03 09:18:00 【fishfuck】


Preface

Starting with this article, we will spend several consecutive articles crawling all the photos of pretty girls on https://imoemei.com/. Along the way, we will use this example to learn how to write a simple Python crawler.

Related articles

Crawler career from scratch (I): crawling photos of pretty girls ①

Crawler career from scratch (III): crawling photos of pretty girls ③

Analysis

1. Page source analysis

Last time we already downloaded all the photos on a single page, so now we only need to collect the URL of every page and then crawl each of those pages in turn.

Let's get to it!

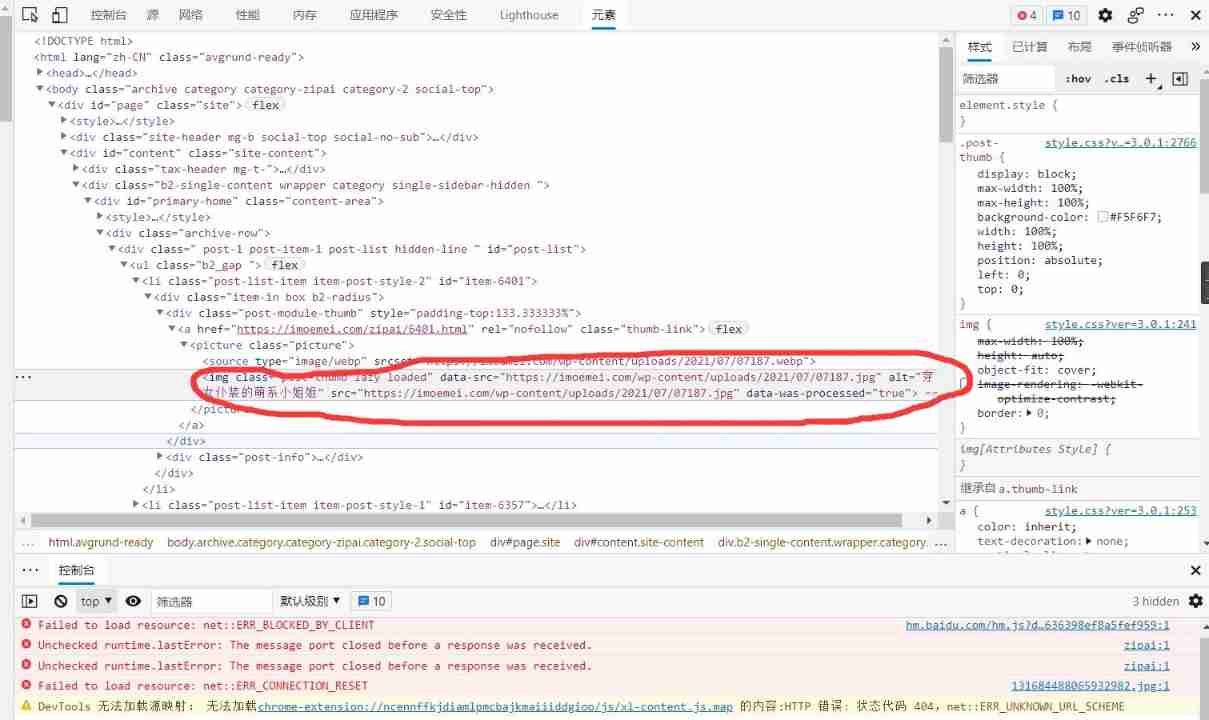

First, let's look at the page source.

Pick one of the URLs and open it.

It turns out to be just the cover image... Looking again, the link we want is right there on the cover! Woohoo!

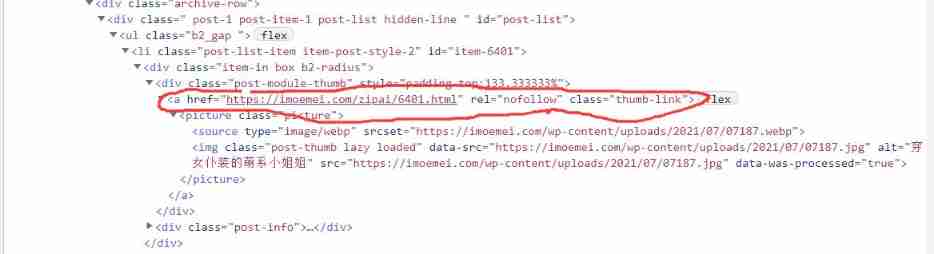

Looking at the code of the whole page, we can see that the URLs of all the pages sit inside these li blocks.

So all we need to do is extract the URL of each page!
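As a rough, dependency-free illustration of this step, the sketch below uses Python's built-in `html.parser` (instead of BeautifulSoup, which the crawler code below uses) to pull the `href` out of each `<a class="thumb-link">` inside `<ul class="b2_gap">`. The two class names come from the article's code; the HTML fragment and the example.com URLs are made up, since the real site is offline.

```python
from html.parser import HTMLParser


class ThumbLinkCollector(HTMLParser):
    """Collect href values of <a class="thumb-link"> tags inside <ul class="b2_gap">."""

    def __init__(self):
        super().__init__()
        self.in_list = False  # True while inside <ul class="b2_gap">
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get('class') or '').split()
        if tag == 'ul' and 'b2_gap' in classes:
            self.in_list = True
        elif tag == 'a' and self.in_list and 'thumb-link' in classes:
            self.urls.append(attrs.get('href'))

    def handle_endtag(self, tag):
        # Assumes the gallery list contains no nested <ul>
        if tag == 'ul':
            self.in_list = False


# Hypothetical fragment shaped like the listing page described above
sample = """
<ul class="b2_gap">
  <li><a class="thumb-link" href="https://example.com/a.html"><img src="a.jpg"></a></li>
  <li><a class="thumb-link" href="https://example.com/b.html"><img src="b.jpg"></a></li>
</ul>
<a class="thumb-link" href="https://example.com/outside.html">not in the list</a>
"""

parser = ThumbLinkCollector()
parser.feed(sample)
print(parser.urls)  # ['https://example.com/a.html', 'https://example.com/b.html']
```

The link outside the `ul` is skipped, which mirrors how the real script first narrows the search with `html.find('ul', class_='b2_gap')` before calling `find_all`.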

2. Crawler approach

Use requests to fetch the whole listing page, parse it with BeautifulSoup, extract all the page links, then loop over those links and save the photos the same way as in the previous article.
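The overall flow can be sketched with the network calls swapped out. In this hypothetical version, `fetch` stands in for `requests.get(...)` and `save` for writing the file, so the logic can be exercised against a small fake site (a dict mapping URL to content). The helper names `extract` and `crawl` are illustrative, not from the original script; parsing again uses `html.parser` rather than BeautifulSoup, and the listing step simply takes every link, without the class filtering the real script does.

```python
from html.parser import HTMLParser


class AttrCollector(HTMLParser):
    """Collect the value of one attribute from every element with a given tag."""

    def __init__(self, target_tag, target_attr):
        super().__init__()
        self.target_tag = target_tag
        self.target_attr = target_attr
        self.values = []

    def handle_starttag(self, tag, attrs):
        if tag == self.target_tag:
            value = dict(attrs).get(self.target_attr)
            if value:
                self.values.append(value)


def extract(html, tag, attr):
    collector = AttrCollector(tag, attr)
    collector.feed(html)
    collector.close()
    return collector.values


def crawl(listing_url, fetch, save):
    """Listing page -> gallery pages -> images; fetch and save are injected."""
    for page_url in extract(fetch(listing_url), 'a', 'href'):
        page_html = fetch(page_url)
        for num, img_url in enumerate(extract(page_html, 'img', 'src')):
            save(page_url, num, fetch(img_url))


# Offline stand-in for the real site (hypothetical data)
fake_site = {
    'list': '<a href="p1">g1</a><a href="p2">g2</a>',
    'p1': '<img src="i1"><img src="i2">',
    'p2': '<img src="i3">',
    'i1': b'\x01', 'i2': b'\x02', 'i3': b'\x03',
}
saved = []
crawl('list', lambda url: fake_site[url],
      lambda page, num, data: saved.append((page, num, data)))
print(saved)  # [('p1', 0, b'\x01'), ('p1', 1, b'\x02'), ('p2', 0, b'\x03')]
```

Passing `fetch` and `save` in as parameters is only a design choice for the sketch: it keeps the "collect links, then visit each one" structure testable without a network connection.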

The crawler code

1. Development environment

Environment: Windows 10, Python 3.6.8

IDE: PyCharm

Libraries: requests, os (standard library), BeautifulSoup (bs4); the 'html5lib' parser passed to BeautifulSoup also requires the html5lib package to be installed

2. Code breakdown

(1) Import libraries

```python
import requests
import os
from bs4 import BeautifulSoup
```

(2) Get the URL of each page

```python
target_url = "https://imoemei.com/zipai/"
r = requests.get(url=target_url)
html = BeautifulSoup(r.text, 'html5lib')

# every gallery link is an <a class="thumb-link"> inside <ul class="b2_gap">
b2_gap = html.find('ul', class_='b2_gap')
print("Page 1 is OK")
img_main = b2_gap.find_all('a', class_='thumb-link')

img_main_urls = []
for img in img_main:
    img_main_url = img.get('href')
    img_main_urls.append(img_main_url)
```

(3) Get the URL of each picture

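```python
# visit each gallery page collected in step (2)
for img_main_url in img_main_urls:
    print(img_main_url)
    r = requests.get(url=img_main_url)
    html = BeautifulSoup(r.text, 'html5lib')
    # the pictures of one gallery are the <img> tags in its article body
    entry_content = html.find('div', class_='entry-content')
    img_list = entry_content.find_all('img')
    num = 0  # picture counter, used in the file names
    name = html.find('h1').text.strip()  # gallery title
    print(name)
    for img in img_list:
        img_url = img.get('src')
        result = requests.get(img_url).content  # raw bytes of the picture
```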
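`img.get('src')` is used as-is, which only works if the page stores absolute image URLs. A minimal sketch, assuming some pages might use relative or protocol-relative paths, or keep the real address in a lazy-loading attribute such as `data-src` (an assumption; the article does not say whether this site does either), would normalize the address with `urllib.parse.urljoin`. The helper name `image_url` is hypothetical.

```python
from urllib.parse import urljoin


def image_url(page_url, attrs):
    """Pick an image address from an <img> tag's attributes and make it absolute.

    Assumption: a lazy-loading page may keep the real address in `data-src`;
    otherwise fall back to `src`.
    """
    src = attrs.get('data-src') or attrs.get('src')
    return urljoin(page_url, src) if src else None


page = 'https://example.com/zipai/123.html'  # hypothetical gallery URL
print(image_url(page, {'src': '/uploads/a.jpg'}))           # https://example.com/uploads/a.jpg
print(image_url(page, {'src': '//cdn.example.com/b.jpg'}))  # https://cdn.example.com/b.jpg
print(image_url(page, {'src': 'placeholder.gif', 'data-src': 'c.jpg'}))  # https://example.com/zipai/c.jpg
print(image_url(page, {}))                                  # None
```

Because a BeautifulSoup `Tag` also supports `.get()`, the same helper could be called as `image_url(img_main_url, img)` inside the loop; absolute URLs pass through `urljoin` unchanged.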

(4) Save the pictures to the target folder

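```python
        # still inside the `for img in img_list:` loop from step (3)
        path = 'picture'
        if not os.path.exists(path):
            os.mkdir(path)
        # save as <title><number>.jpg; `with` closes the file after writing
        with open(path + '/' + name + str(num) + '.jpg', 'wb') as f:
            f.write(result)
        num += 1
        print('Downloading {}: picture {}'.format(name, num))
```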
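One practical snag on Windows 10 (the environment listed above): the gallery title taken from `<h1>` becomes part of the file name, and a title containing characters such as `/`, `:` or `?` would make `open()` fail. A minimal sketch, using a hypothetical helper `picture_path`, that replaces those characters and builds the path with `os.path.join`:

```python
import os
import re
import tempfile

# Characters Windows does not allow in file names, plus control whitespace
INVALID_CHARS = re.compile(r'[\\/:*?"<>|\r\n\t]')


def picture_path(folder, title, num, ext='.jpg'):
    """Return folder/<cleaned title><num><ext>, creating the folder if needed."""
    safe_title = INVALID_CHARS.sub('_', title.strip()) or 'untitled'
    os.makedirs(folder, exist_ok=True)
    return os.path.join(folder, '{}{}{}'.format(safe_title, num, ext))


# Demo in a throwaway directory instead of the real 'picture' folder
demo_dir = os.path.join(tempfile.mkdtemp(), 'picture')
p = picture_path(demo_dir, ' Album: 1/2? \n', 0)
with open(p, 'wb') as f:
    f.write(b'fake image bytes')
print(os.path.basename(p))  # Album_ 1_2_0.jpg
```

In the crawler this would replace the `path + '/' + name + str(num) + '.jpg'` expression, e.g. `open(picture_path('picture', name, num), 'wb')`.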

3. Full code

```python
import os

import requests
from bs4 import BeautifulSoup

# (2) collect the URL of every gallery on the first listing page
target_url = "https://imoemei.com/zipai/"
r = requests.get(url=target_url)
html = BeautifulSoup(r.text, 'html5lib')
b2_gap = html.find('ul', class_='b2_gap')
print("Page 1 is OK")
img_main = b2_gap.find_all('a', class_='thumb-link')

img_main_urls = []
for img in img_main:
    img_main_url = img.get('href')
    img_main_urls.append(img_main_url)

# (3) visit each gallery and download every picture in its article body
for img_main_url in img_main_urls:
    print(img_main_url)
    r = requests.get(url=img_main_url)
    html = BeautifulSoup(r.text, 'html5lib')
    entry_content = html.find('div', class_='entry-content')
    img_list = entry_content.find_all('img')
    num = 0
    name = html.find('h1').text.strip()
    print(name)
    for img in img_list:
        img_url = img.get('src')
        result = requests.get(img_url).content
        # (4) save the picture as picture/<title><number>.jpg
        path = 'picture'
        if not os.path.exists(path):
            os.mkdir(path)
        with open(path + '/' + name + str(num) + '.jpg', 'wb') as f:
            f.write(result)
        num += 1
        print('Downloading {}: picture {}'.format(name, num))
```
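The full script has no error handling, so a single failed request (a timeout or a dropped connection) aborts the whole run. A sketch of a small retry wrapper (the helper `fetch_with_retry` is hypothetical, not part of the original) that could wrap the download calls, for example `fetch_with_retry(lambda u: requests.get(u, timeout=10).content, img_url)`:

```python
import time


def fetch_with_retry(fetch, url, retries=3, delay=1.0, errors=(OSError,)):
    """Call fetch(url); on one of `errors`, wait `delay` seconds and try again.

    requests' exceptions derive from OSError (IOError), so the default also
    catches them.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except errors:
            if attempt == retries:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay)


# Offline demo: a fake fetch that fails twice, then succeeds
calls = []


def flaky_fetch(url):
    calls.append(url)
    if len(calls) < 3:
        raise ConnectionError('temporary failure')
    return b'image bytes'


print(fetch_with_retry(flaky_fetch, 'https://example.com/a.jpg', delay=0))  # b'image bytes'
print(len(calls))  # 3
```

The fixed `delay` between attempts also keeps the crawler from hammering the server; a longer pause or a backoff that grows per attempt would be equally reasonable choices.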

Crawling results

This time I only crawled the first page of the selfie (zipai) category. In the next article we will crawl every page of every category. Stay tuned.

边栏推荐

- Use of sort command in shell

- We have a common name, XX Gong

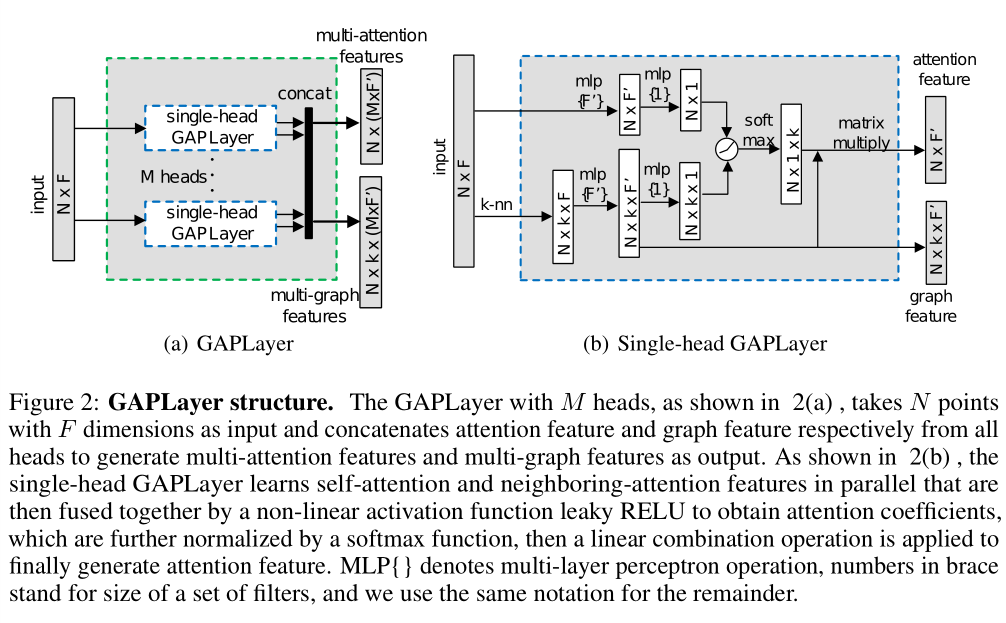

- [point cloud processing paper crazy reading cutting-edge version 12] - adaptive graph revolution for point cloud analysis

- AcWing 787. Merge sort (template)

- Computing level network notes

- What is the difference between sudo apt install and sudo apt -get install?

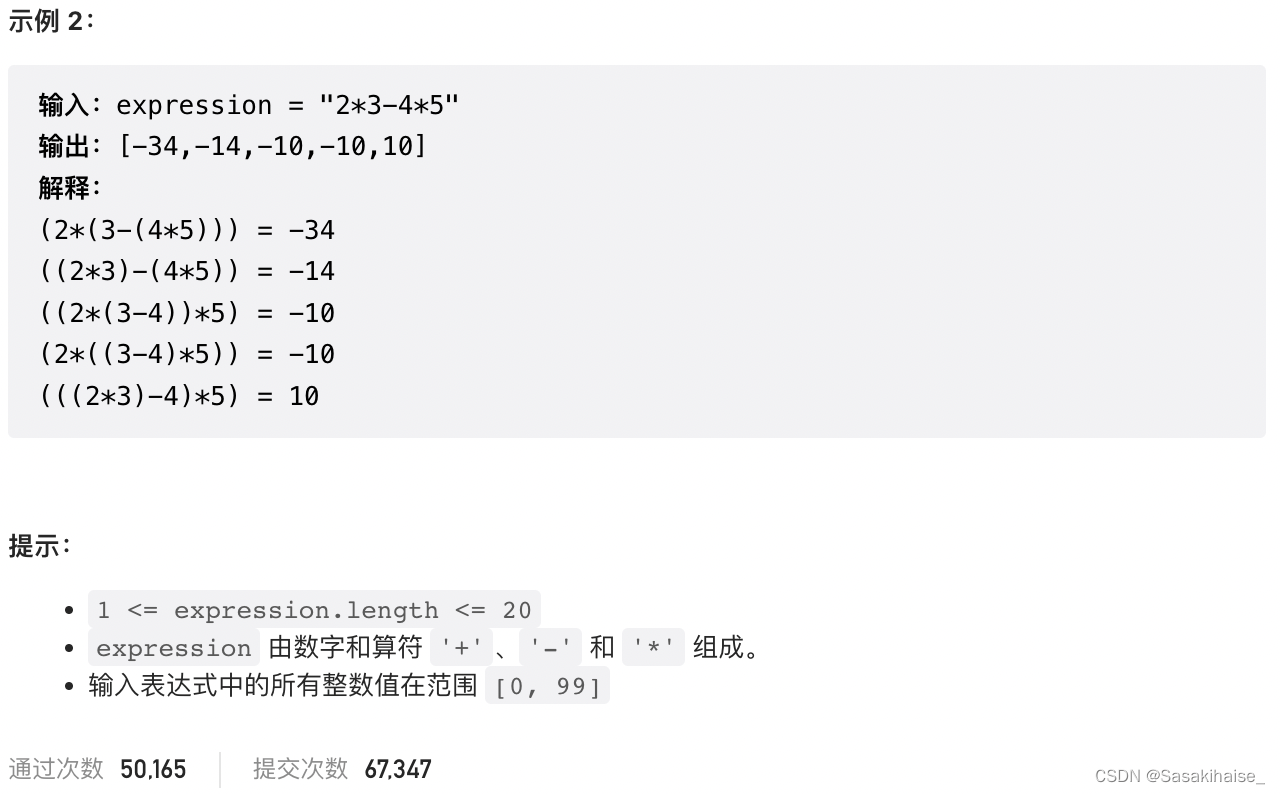

- LeetCode 241. 为运算表达式设计优先级

- CSDN markdown editor help document

- 【点云处理之论文狂读前沿版11】—— Unsupervised Point Cloud Pre-training via Occlusion Completion

- How to check whether the disk is in guid format (GPT) or MBR format? Judge whether UEFI mode starts or legacy mode starts?

猜你喜欢

AcWing 786. Number k

LeetCode 30. Concatenate substrings of all words

![[set theory] order relation (chain | anti chain | chain and anti chain example | chain and anti chain theorem | chain and anti chain inference | good order relation)](/img/fd/c0f885cdd17f1d13fdbc71b2aea641.jpg)

[set theory] order relation (chain | anti chain | chain and anti chain example | chain and anti chain theorem | chain and anti chain inference | good order relation)

Digital statistics DP acwing 338 Counting problem

【点云处理之论文狂读前沿版13】—— GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature

2022-2-13 learning the imitation Niuke project - home page of the development community

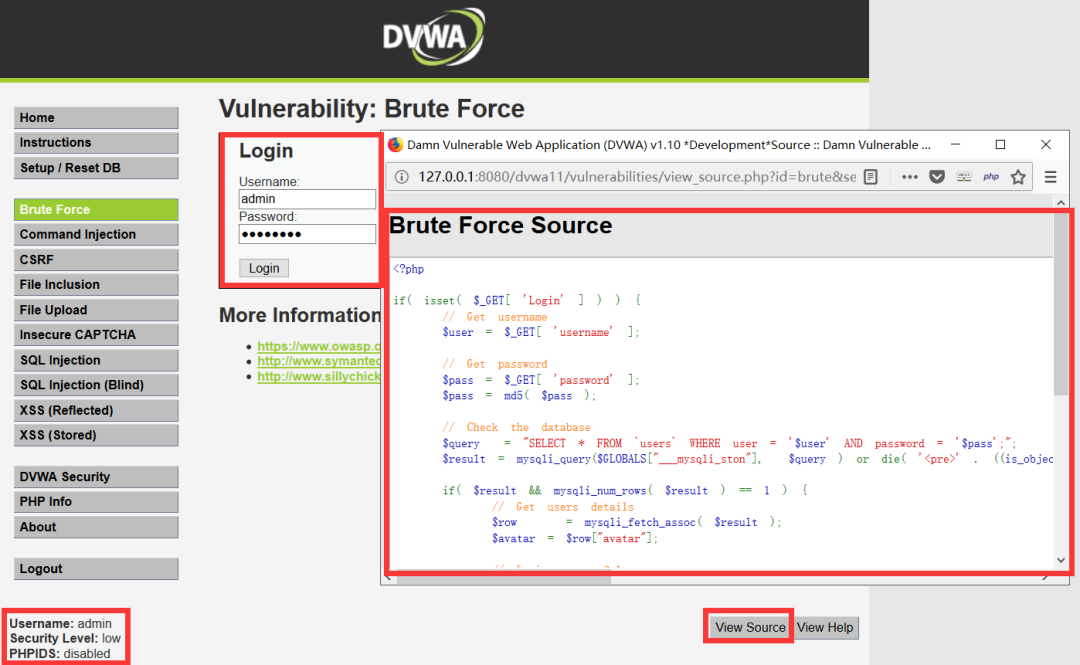

常见渗透测试靶场

【Kotlin学习】类、对象和接口——带非默认构造方法或属性的类、数据类和类委托、object关键字

LeetCode 241. Design priorities for operational expressions

【点云处理之论文狂读前沿版8】—— Pointview-GCN: 3D Shape Classification With Multi-View Point Clouds

随机推荐

Severity code description the project file line prohibits the display of status error c2440 "initialization": unable to convert from "const char [31]" to "char *"

Use the interface colmap interface of openmvs to generate the pose file required by openmvs mvs

Go language - IO project

State compression DP acwing 91 Shortest Hamilton path

浅谈企业信息化建设

Methods of checking ports according to processes and checking processes according to ports

2022-1-6 Niuke net brush sword finger offer

20220630 learning clock in

[set theory] order relation (chain | anti chain | chain and anti chain example | chain and anti chain theorem | chain and anti chain inference | good order relation)

On February 14, 2022, learn the imitation Niuke project - develop the registration function

我們有個共同的名字,XX工

【点云处理之论文狂读经典版7】—— Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

【点云处理之论文狂读经典版11】—— Mining Point Cloud Local Structures by Kernel Correlation and Graph Pooling

The less successful implementation and lessons of RESNET

LeetCode 30. Concatenate substrings of all words

Temper cattle ranking problem

LeetCode 871. 最低加油次数

[point cloud processing paper crazy reading classic version 13] - adaptive graph revolutionary neural networks

干货!零售业智能化管理会遇到哪些问题?看懂这篇文章就够了

Digital management medium + low code, jnpf opens a new engine for enterprise digital transformation