当前位置:网站首页>Overview of video self supervised learning

Overview of video self supervised learning

2022-07-05 18:40:00 【Zhiyuan community】

https://arxiv.org/abs/2207.00419

The remarkable success of deep learning in various fields depends on the availability of large-scale annotation data sets . However , Using artificially generated annotations will lead to biased learning of the model 、 Poor domain generalization ability and robustness . Getting comments is also expensive , It takes a lot of effort , This is particularly challenging for video . As an alternative , Self supervised learning provides a way to express learning without annotations , It shows prospects in the field of image and video . Different from image domain , Learning video presentation is more challenging , Because the time dimension , Introduced motion and other environmental dynamics . This also provides an opportunity for the exclusive idea of Promoting Self-regulated Learning in the field of video and multimodality . In this review , We provide an existing method for self supervised learning in the field of video . We summarize these methods into three different categories according to their learning objectives : 1) Text preset task ,2) Generative modeling , and 3) Comparative learning . These methods are also different in the way they are used ; 1) video, 2) video-audio, 3) video-text, 4) video-audio-text. We further introduce the commonly used data sets 、 Downstream assessment tasks 、 Limitations of existing work and potential future directions in this field .

The requirement of large-scale labeled samples limits the use of deep network in the problem of limited data and difficult annotation , For example, medical imaging Dargan et al. [2020]. Although in ImageNet Krizhevsky wait forsomeone [2012a] and Kinetics Kay wait forsomeone [2017] Pre training on large-scale labeled data sets can indeed improve performance , But this method has some defects , For example, note the cost Yang et al. [2017], Cai et al. [2021], Annotation deviation Chen and Joo [2021], Rodrigues and Pereira[2018], Lack of domain generalization Wang wait forsomeone [2021a], Hu wait forsomeone [2020],Kim wait forsomeone [2021], And lack of robustness Hendrycks and Dietterich[2019].Hendrycks etc. [2021]. Self supervised learning (SSL) It has become a successful method of pre training depth model , To overcome some of these problems . It is a promising alternative , Models can be trained on large data sets , You don't need to mark Jing and Tian[2020], And it has better generalization .SSL Use some learning objectives from the training sample itself to train the model . then , This pre trained model is used as the initialization of the target data set , Then fine tune it with the available marker samples . chart 1 Shows an overview of this approach .

边栏推荐

- The easycvr platform reports an error "ID cannot be empty" through the interface editing channel. What is the reason?

- ICML2022 | 长尾识别中分布外检测的部分和非对称对比学习

- [QNX hypervisor 2.2 user manual]6.3.2 configuring VM

- Is it safe for Apple mobile phone to speculate in stocks? Is it a fraud to get new debts?

- Record a case of using WinDbg to analyze memory "leakage"

- 常见时间复杂度

- Use of websocket tool

- 【pm2详解】

- FCN: Fully Convolutional Networks for Semantic Segmentation

- ConvMAE(2022-05)

猜你喜欢

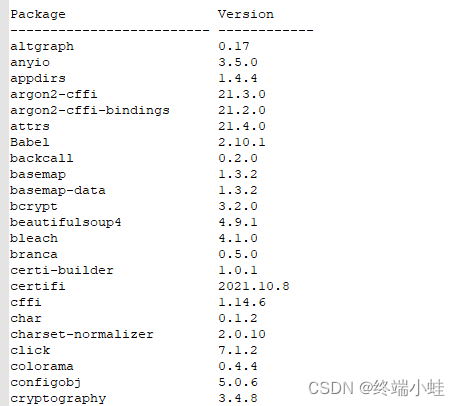

怎么自动安装pythn三方库

How to automatically install pythn third-party libraries

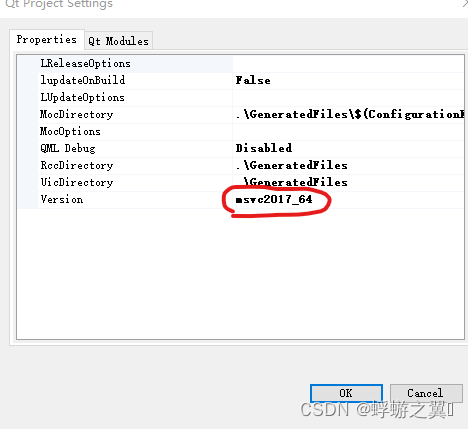

vs2017 qt的各种坑

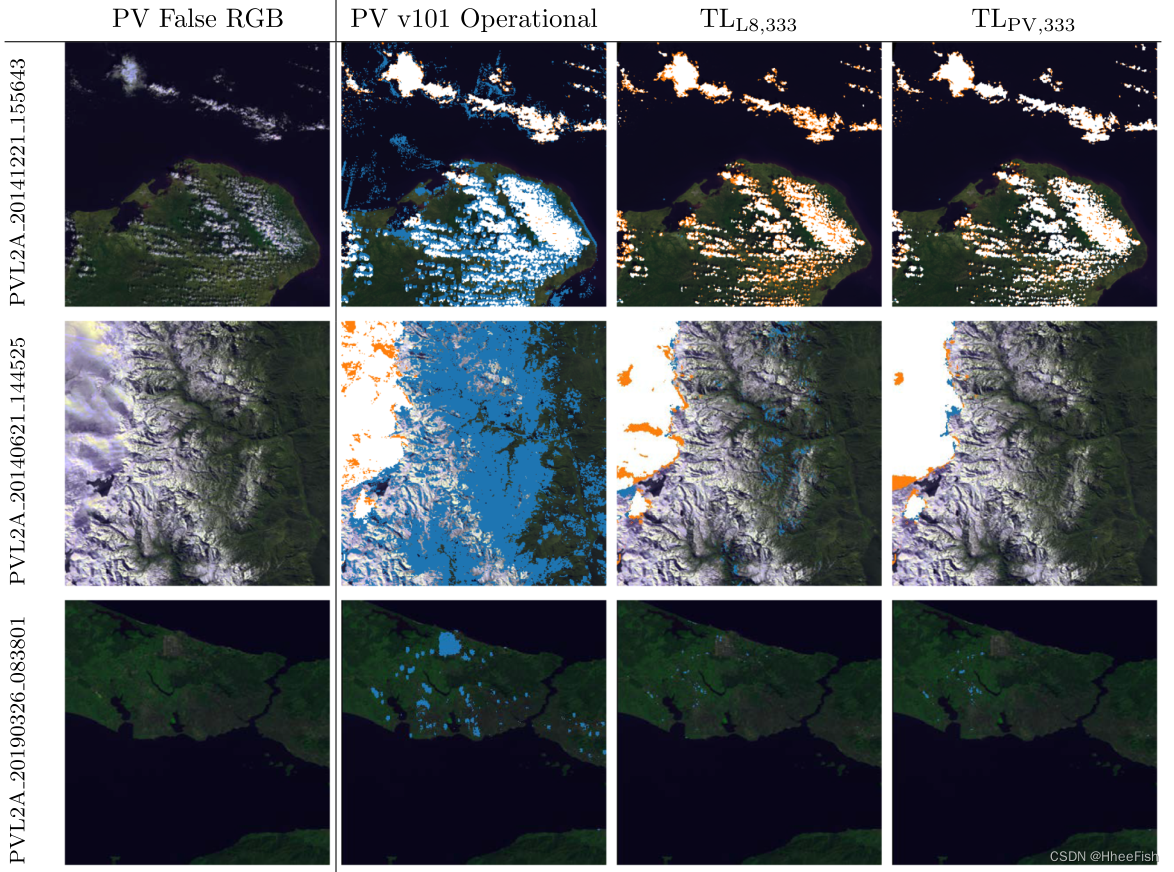

Isprs2020/ cloud detection: transferring deep learning models for cloud detection between landsat-8 and proba-v

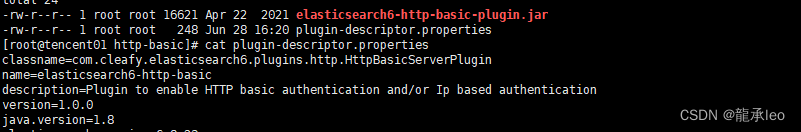

Fix vulnerability - mysql, ES

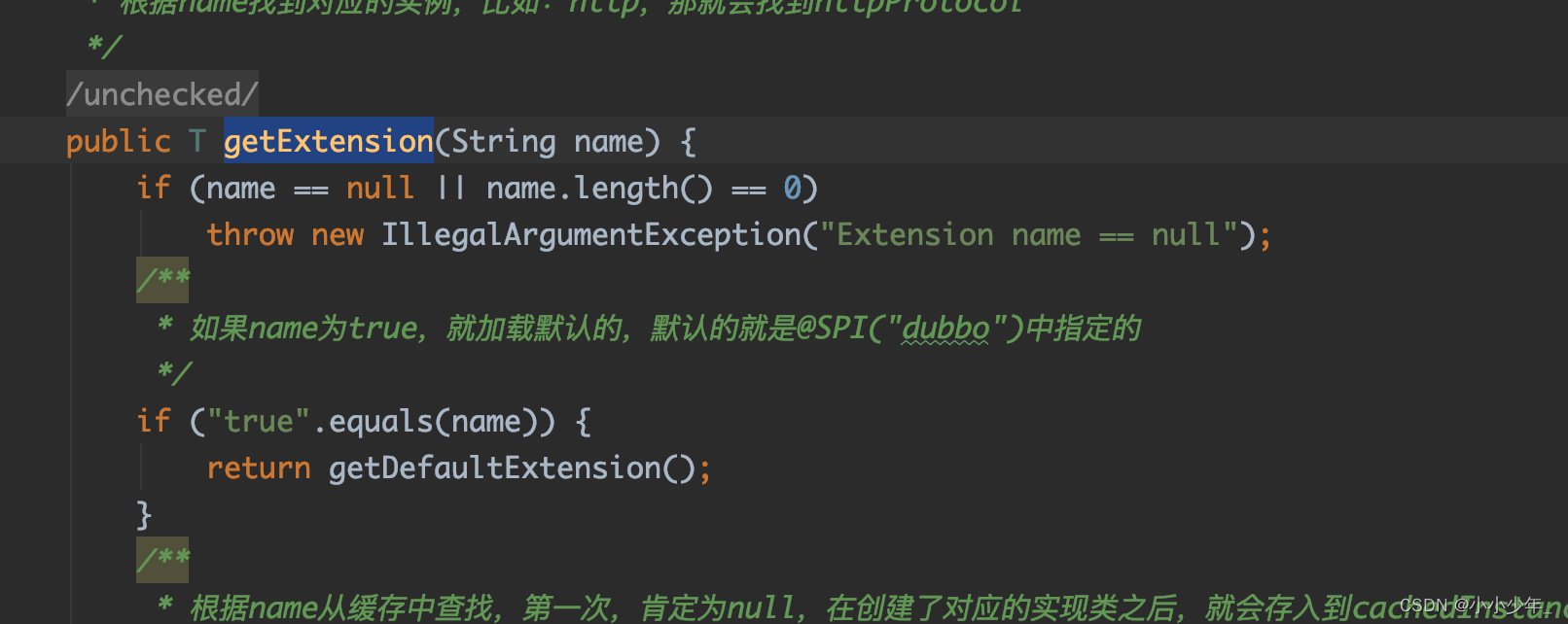

@Extension、@SPI注解原理

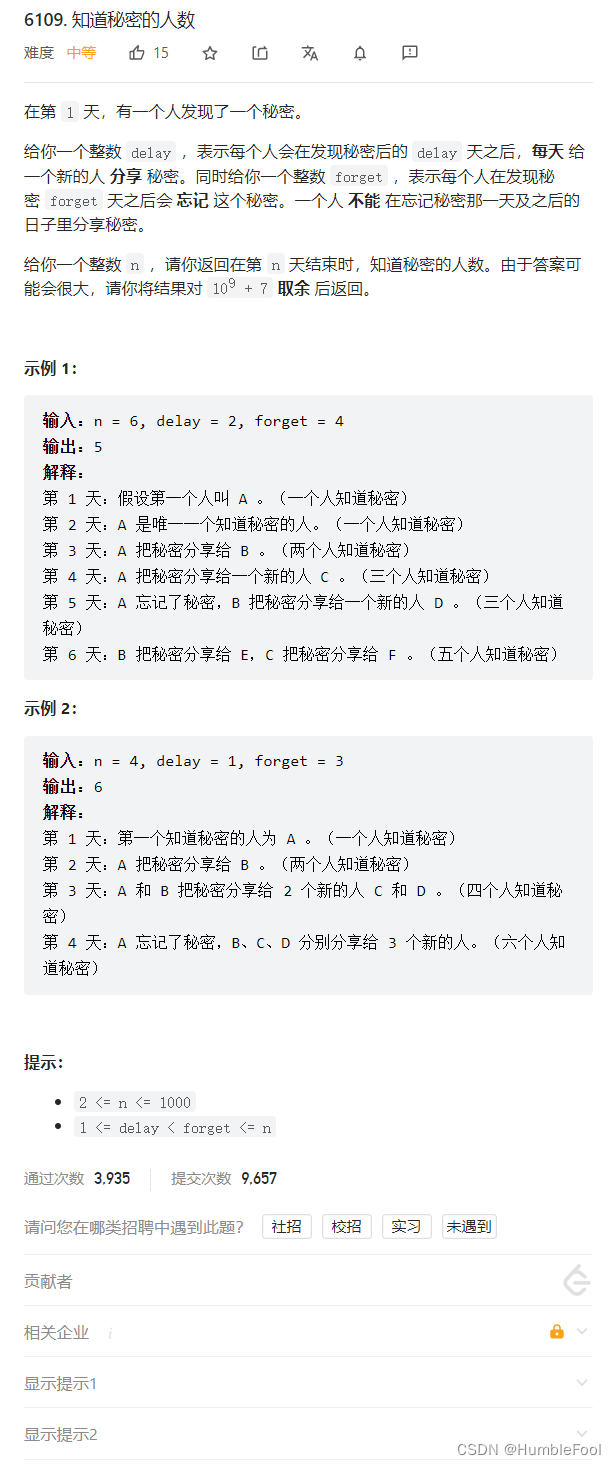

LeetCode 6109. 知道秘密的人数

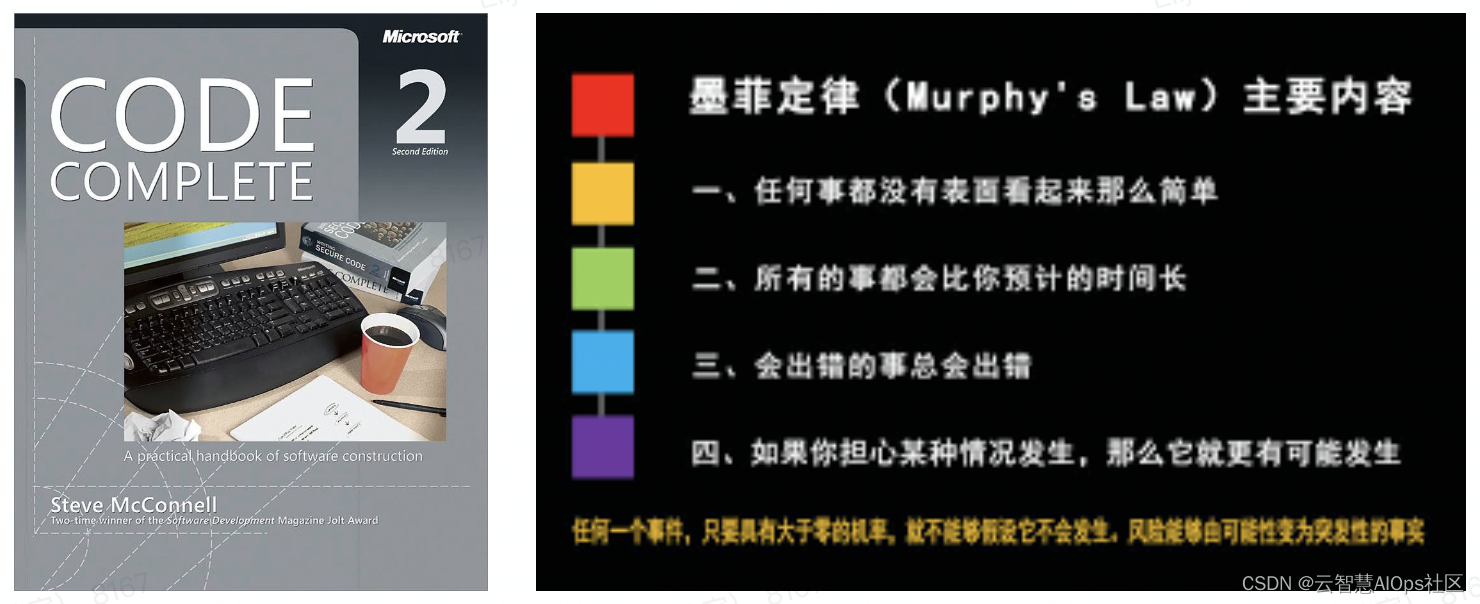

How to write good code defensive programming

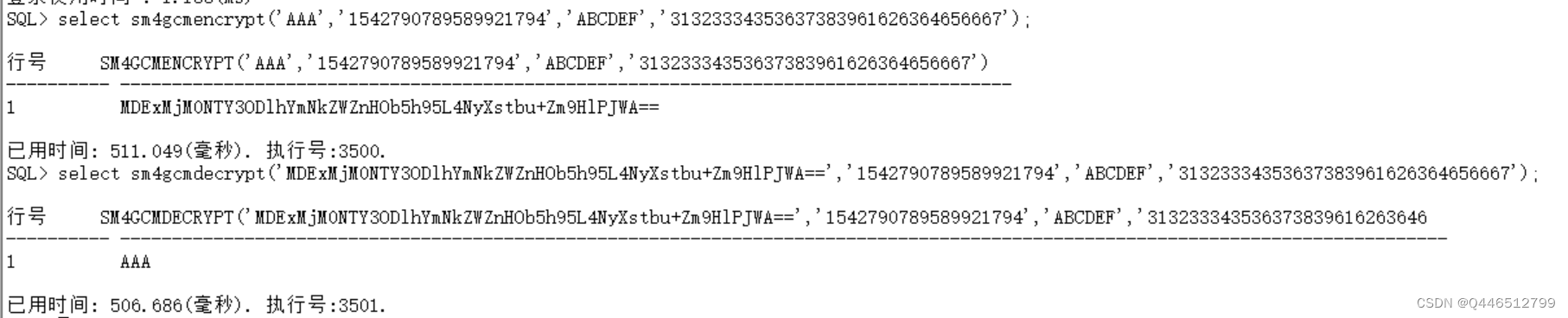

达梦数据库udf实现

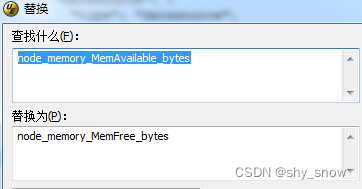

node_ Exporter memory usage is not displayed

随机推荐

How to write good code defensive programming

node_exporter内存使用率不显示

Precautions for RTD temperature measurement of max31865 module

Quickly generate IPA package

音视频包的pts,dts,duration的由来.

爬虫01-爬虫基本原理讲解

Thoroughly understand why network i/o is blocked?

The 11th China cloud computing standards and Applications Conference | China cloud data has become the deputy leader unit of the cloud migration special group of the cloud computing standards working

《2022中国信创生态市场研究及选型评估报告》发布 华云数据入选信创IT基础设施主流厂商!

LeetCode 6109. 知道秘密的人数

2022最新Android面试笔试,一个安卓程序员的面试心得

金太阳开户安全吗?万一免5开户能办理吗?

小程序 修改样式 ( placeholder、checkbox的样式)

Sibling components carry out value transfer (there is a sequence displayed)

Problems encountered in the project u-parse component rendering problems

MYSQL中 find_in_set() 函数用法详解

Login and connect CDB and PDB

Einstein sum einsum

Use of websocket tool

c语言简便实现链表增删改查「建议收藏」