当前位置:网站首页>Solve the cache breakdown problem

Solve the cache breakdown problem

2022-07-07 13:29:00 【Bug Commander】

Text

Current defects

First , Why is it that the current scheme circulating on the Internet , Poor landing performance , Because they all lack a person who can communicate with SpringBoot Combined with the real scene , Basically separated from SpringBoot, Just stand on the ground Java Analyze at this level . That's the question , Now there are only SpringMvc, But not SpringBoot Your company ? therefore , This paper attempts to combine this scheme with SpringBoot Combine , Talk about a practical , A plan that can be landed !

Of course , Let's first talk about several schemes circulating on the Internet , Where the hell is it !

(1) The bloon filter

About the bloon filter , I won't introduce too much , It is understood here as a filter , Used to quickly retrieve whether an element is in a collection ; So when a request comes , Quickly determine the of this request key Whether in the specified set ! If in , The description is valid , The release . If not , Then it is invalid to intercept . As for realization , The major blogs also said it was useful google Provided

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>19.0</version>

</dependency>

There are ready-made ones in this bag java Class used for you , Of course demo I won't post the code , A handful ! Of course , It seems perfect ! Everything is so appropriate !

But here , I really ask , Did you really use this scheme ?

If I'm right , Few people should have experienced cache breakdown ~

What's more? , Prove the correctness of this statement ~

One of the biggest problems with this scheme is Bloom filter does not support reverse deletion , For example, what is active in your project key There are only 1000w individual , But all key The quantity is 5000w individual , What about this 5000w individual key It'll all be in the bloom filter !

Until one day , You'll find the filter too crowded , The misjudgment rate is too high , Have to rebuild !

so, Do you think this is really reliable ?

So where does the term bloom filter come from ? ( You must be curious, aren't you !)

Certainly xx Institutions ~~ Protect your dog's head here ~~ remember , They cut leeks , I will definitely choose something that looks extremely high-end , But the landing plan is unreliable ( This is also a benchmark to distinguish whether an organization is cutting leeks or has a real level , Xiaobai doesn't understand , It's easy to get stuck )~~ See here , It's a shame , My first article also wrote this plan , But in the process of landing , Something's wrong ( A review of 10000 words is omitted here , Smoke brother garbage ~~).

(2) Cuckoo filter

that , In order to solve the problem of weak query performance of Bloom filter 、 The efficiency of space utilization is low 、 Problems such as reverse operation are not supported , Another article was born , It is advocated to use cuckoo filter to solve the cache breakdown problem !

however , There's something magical coming , Basically all the articles are talking about how awesome the cuckoo filter is , But there is no plan to land ~

remember , We usually write code , It must be convenient ! Remember again , An interview is one thing , Code landing is another matter ~

that , What is the really simple solution ? Come on , Let's go step by step ~

Real scheme

hypothesis , You're using springboot-2.x Version of , In order to be able to connect redis, you are here pom Add the following dependencies to the file

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

so what , We modify application.yml

spring:

datasource:

...

redis:

database: ...

host: ...

port: ...

( Save trouble , Not all posted )

ok, Speaking of this , I have to say spring-cache 了 ,Spring3.1 after , The annotation caching technology is introduced , In essence, it is not a concrete cache implementation scheme , It's an abstraction of the use of caching , By adding a small amount of custom various annotation, That is, it can achieve the effect of using cache objects and cache methods to return objects .Spring The cache technology of is quite flexible , Not only can you use SpEL(Spring Expression Language) To define cached key And all kinds of condition, It also provides out of the box cache temporary storage scheme , It also supports integration with mainstream professional cache .

for example : We often have such a piece of logic in our code , The method is ahead of the goal , Will be based on key First go to the cache to check whether there is data , If yes, it will be returned directly to the cache key Corresponding value value , The target method is no longer executed ; If not, execute the target method , Go to the database and find the corresponding value, And in the form of key value pairs stored in the cache .

If we don't use, for example spring-cache The annotation framework of , Your code will be full of redundant code , And with this framework , With @Cacheable Note for example , The annotation is on the method , Indicates that the return result of this method can be cached .

in other words , The returned result of this method will be put in the cache , So that when the method is called later with the same parameters , Returns the value in the cache , The method is not actually executed .

that , That's all you need to write your code

@Override

@Cacheable("menu")

public Menu findById(String id) {

Menu menu = this.getById(id);

if (menu != null){

System.out.println("menu.name = " + menu.getName());

}

return menu;

}

In this case ,findById Method with a named menu Associated with the cache . When the method is called , Will check the menu cache , If there are results in the cache , You won't execute the method .

ok, Speaking of this , In fact, they are all things we know !! Now let's start our topic : How to solve the problem of cache breakdown ! By the way, penetration and avalanche problems !

Come on, come on , Let's recall cache breakdown , Penetration and cache avalanche concept !

Cache penetration

At high level , When querying for a non-existent value , Cache will not be hit , Result in a large number of requests directly to the database , For example, query an activity that does not exist in the activity system . Say more : Cache penetration means , The requested data is not in the cache or database !

For cache penetration problems , There is a very simple solution , It's cache. NULL value ~ Data not available from cache , In the database, there is no access to , Return null value directly .

that spring-cache in , There is a configuration like this

spring.cache.redis.cache-null-values=true

After the configuration , Can be cached null The value of , It is worth mentioning that , This cache time should be set less , for example 15 Seconds is enough , If the setting is too long , It will make the normal cache unusable .

Cache breakdown

At high level , Query a specific value , But this time the cache just expired , Cache miss , Result in a large number of requests directly to the database , Such as querying activity information in the activity system , But during the activity, the activity cache suddenly expired . Say more : Cache breakdown means , The requested cache is not , And some data in the database !

remember , The simplest way to solve breakdown , only one , It's current limiting ! As for how to limit , In fact, they can show their magic powers ! For example, the bloom filter mentioned in other articles , Cuckoo filter, etc , It's just one of the current limiting methods ! even to the extent that , You can also use some other current limiting components !

Here we are going to say spring-cahce Another configuration of !

After the cache has expired , If multiple threads request access to a certain data at the same time , Will go to the database at the same time , This leads to an increase in the instantaneous load of the database .Spring4.3 by @Cacheable The annotation provides a new parameter “sync”(boolean type , Default is false), When set to true when , Only one thread's request will go to the database , Other threads wait until the cache is available . This setting can reduce instantaneous concurrent access to the database .

See here !! Isn't this a current limiting scheme ?

So the solution is , Add an attribute sync=true, Just go . The code looks like this

@Cacheable(cacheNames="menu", sync="true")

After using this attribute , You can instruct the underlying layer to lock the cache , So that only one thread can enter the calculation , Other threads are blocked , Until the returned result is updated to the cache .

Of course , See here , Someone will argue with me ! His problem is that !

You only limit the current of a single machine , It is not the current limit of the whole cluster ! in other words , Suppose your cluster is built 3000 individual pod, The worst case is ,3000 individual pod On , Every pod Will initiate a request to query the database , Still, it will lead to insufficient database connections , And so on !

I can only say about this question ! juvenile , Whenever your company's products reach this traffic scale , You won't be reading my article at the moment ! What are your concerns at the moment :

(1) Ah , Buy Shenzhen Bay No. 1 or Shenzhen Bay residence , tangle !

(2) US stocks fell again yesterday , Lost two more suites

(3) The order was cancelled in advance yesterday , Tens of thousands less

....( Omit ten thousand words )

Of course , If you have to solve , There are ways. .spring Of aop There is a routine , such as @Transactional Of Advice yes TransactionInterceptor, that cache Also for dealing with a CacheInterceptor, We just have to change CacheInterceptor, This section can solve . Make a distributed lock in it ! The pseudocode is as follows

flag := Take the distributed lock

if flag {

Go to database query , And cache the results

}{

Sleep for a while , Try again to get key Value

}

however , I still want to say more , There's no need to ~~ Remember a word , Based on reality ~ When your business reaches that level , Regional deployment can be achieved , This kind of problem can be avoided completely .

Cache avalanche

At high level , A lot of caching key Lapse at the same time , Cause a lot of requests to fall on the database , For example, there are many activities in the activity system at the same time , But at a certain point in time all active caches expire .

So to solve this problem , The simplest solution is , Expiration time plus random value !

But the trouble is , We are using @Cacheable When annotating , The native function cannot directly set the random expiration time .

Well, to be honest , There's no good way , Can only inherit RedisCache, Enhance it , Rewrite it put Method , Bring random time !

边栏推荐

猜你喜欢

Centso7 OpenSSL error Verify return code: 20 (unable to get local issuer certificate)

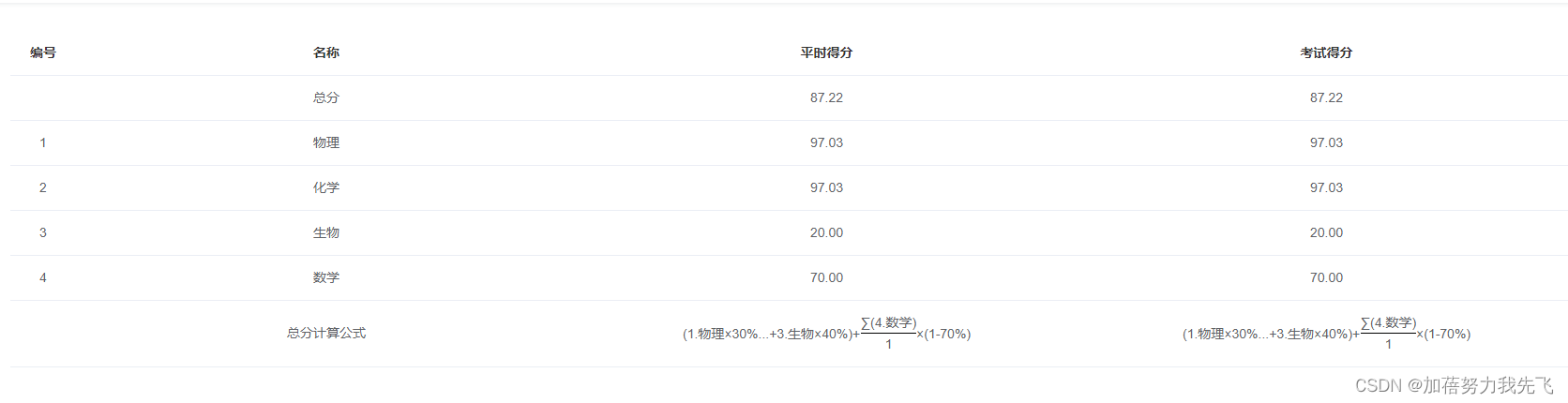

Show the mathematical formula in El table

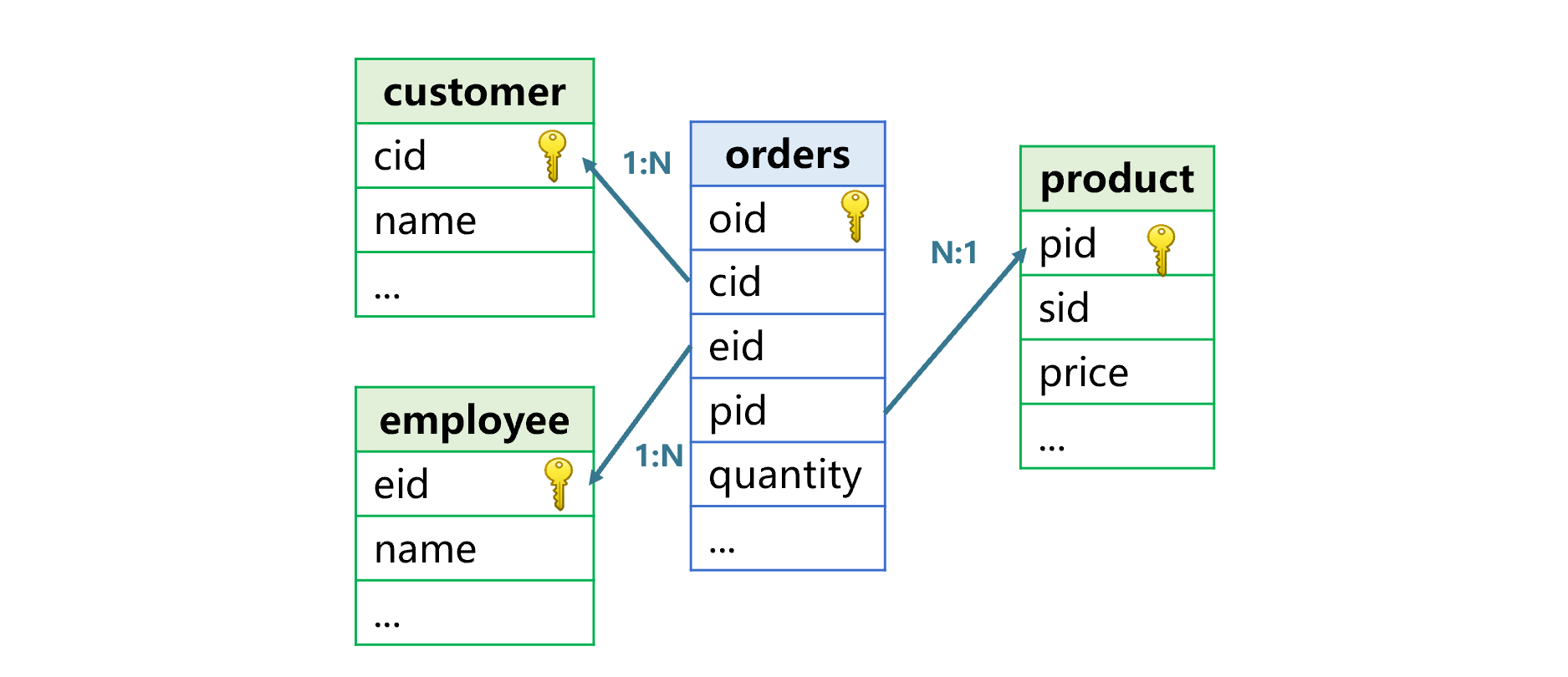

如何让join跑得更快?

Practical example of propeller easydl: automatic scratch recognition of industrial parts

Isprs2021/ remote sensing image cloud detection: a geographic information driven method and a new large-scale remote sensing cloud / snow detection data set

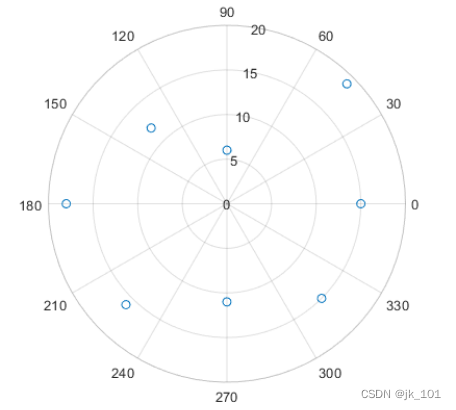

Use of polarscatter function in MATLAB

MATLAB中polarscatter函数使用

Milkdown control icon

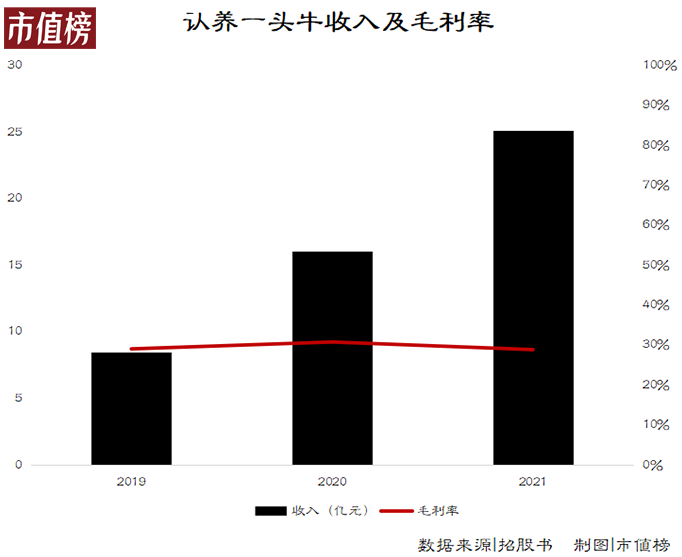

How far can it go to adopt a cow by selling the concept to the market?

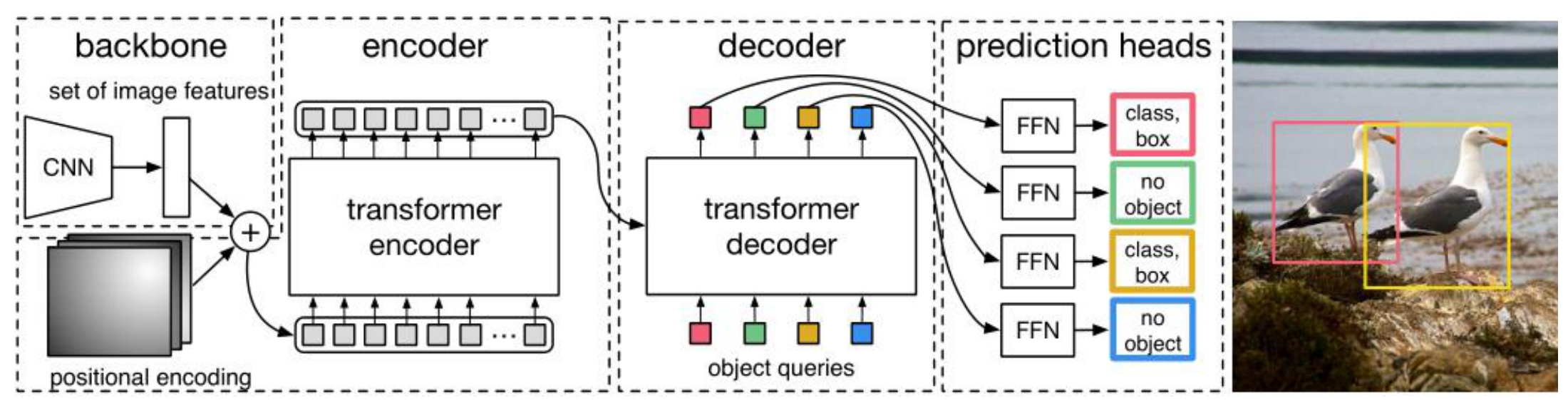

DETR介绍

随机推荐

基于鲲鹏原生安全,打造安全可信的计算平台

About the problem of APP flash back after appium starts the app - (solved)

Show the mathematical formula in El table

mysql 局域网内访问不到的问题

MongoDB 分片总结

自定义线程池拒绝策略

Signal strength (RSSI) knowledge sorting

存储过程的介绍与基本使用

PAcP learning note 3: pcap method description

Cinnamon 任务栏网速

RealBasicVSR测试图片、视频

RecyclerView的数据刷新

Read PG in data warehouse in one article_ stat

MongoDB的导入导出、备份恢复总结

记一次 .NET 某新能源系统 线程疯涨 分析

数字ic设计——SPI

PHP - laravel cache

[QNX Hypervisor 2.2用户手册]6.3.4 虚拟寄存器(guest_shm.h)

Go language learning notes - structure

Ogre introduction