当前位置:网站首页>From then on, I understand convolutional neural network (CNN)

From then on, I understand convolutional neural network (CNN)

2022-07-05 07:34:00 【sukhoi27smk】

Convolutional neural networks It's something I couldn't figure out anyway , Mainly the name is too “ senior ” 了 , Various articles on the Internet to introduce “ What is convolution ” Especially unbearable . After listening to Wu Enda's online class , Be suddenly enlightened , Finally, I understand what this thing is and why . I will probably use 6~7 An article to explain CNN And realize some interesting applications . After reading it, you should be able to do something you like by yourself .

One 、 Introduction : boundary detection

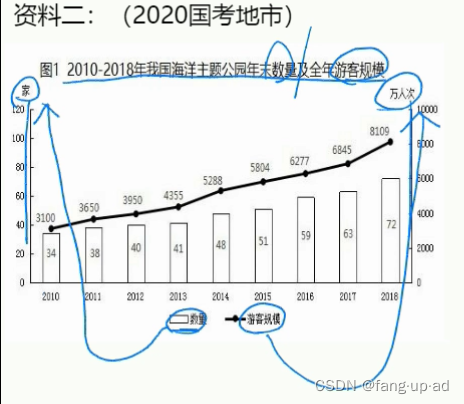

Let's look at the simplest example :“ boundary detection (edge detection)”, Suppose we have such a picture , size 8×8:

The number in the picture represents the pixel value of the position , We know , The larger the pixel value , The brighter the color , So in order to signal , Let's paint the small pixel on the right dark . The dividing line between the two colors in the middle of the figure is the boundary we want to detect .

How to detect this boundary ? We can design such a filter (filter, Also known as kernel), size 3×3:

then , We use this filter, On our pictures “ cover ”, Cover a piece of heel filter After the same large area , Multiply the corresponding elements , Then sum it . After calculating a region , Just move to other areas , Then calculate , Until every corner of the original image is covered . This process is “ Convolution ”.

( We don't care what convolution means in Mathematics , We only know in CNN How to calculate in .)

there “ Move ”, It involves a step , Suppose our step size is 1, After covering a place , Just move one space , It's easy to know , In total, it can cover 6×6 Different areas .

that , We will 6×6 Convolution results of regions , Put together a matrix :

EH ?! What was found ?

This picture , Light in the middle , Dark on both sides , This shows the boundary in the middle of our original picture , It is reflected here !

From the example above , We found that , We can design specific filter, Let it convolute with the picture , You can recognize some features in the picture , Like the border .

The above example is to detect the vertical boundary , We can also design to detect the horizontal boundary , Just put the just filter rotate 90° that will do . For other features , In theory, as long as we go through fine design , You can always design the right filter Of .

our CNN(convolutional neural network), Mainly through one by one filter, Constantly extract features , From local characteristics to general characteristics , So as to carry out image recognition and other functions .

So here comes the question , How can we design so many kinds of filter ah ? First , We may not know about a big push of pictures , What features do we need to identify , secondly , Even if you know the characteristics , Want to really design the corresponding filter, I'm afraid it's not easy , Need to know , The number of features can be thousands .

In fact, after learning neural network , We knew , these filter, We don't need to design at all , Every filter The numbers in , It's just a parameter , We can use a lot of data , Come on Let the machine go by itself “ Study ” These parameters Well . this , Namely CNN Principle .

Two 、CNN Basic concepts of

1.padding White filling

From the introduction above , We can know , The original image is passing filter After convolution , It's getting smaller , from (8,8) Turned into (6,6). Suppose we roll it again , Then the size becomes (4,4) 了 .

What's the problem with this ?

There are two main problems :

Every convolution , The images are all reduced , I can't roll it for several times ;

Compared to the middle of the picture , The number of times the edge points of the image are calculated in convolution is very small . In this case , Information on the edge is easy to lose .

To solve this problem , We can use padding Methods . Every time we convolute , Fill in the blanks around the picture first , Let the convoluted image be the same size as before , meanwhile , The original edge is also calculated more times .

such as , We put (8,8) Make up the picture of (10,10), So after (3,3) Of filter after , Namely (8,8), No change .

Let's put the above “ Let the size after convolution remain the same ” Of padding The way , be called “Same” The way ,

Fill in the blank without any filling , be called “Valid” The way . This is when we use some frameworks , Super parameters to be set .

2.stride step

The convolution we introduced earlier , All default steps are 1, But actually , We can set the steps to other values .

such as , about (8,8) The input of , We use it (3,3) Of filter,

If stride=1, The output of (6,6);

If stride=2, The output of (3,3);( The example here is not very good , Divide and keep rounding down )

3.pooling Pooling

This pooling, It is to extract the main features of a certain region , And reduce the number of parameters , Prevent model over fitting .

Like the following MaxPooling, A 2×2 The window of , And take stride=2:

except MaxPooling, also AveragePooling, As the name suggests, it is to take the average value of that area .

4. For multichannel (channels) Volume of pictures product ( important !)

This needs to be mentioned separately . Color images , It's usually RGB Three channels (channel) Of , So there are three dimensions of input data :( Long , wide , passageway ).

For example, a 28×28 Of RGB picture , Dimension is (28,28,3).

In the front Introduction , The input picture is 2 Dimensional (8,8),filter yes (3,3), The output is 2 Dimensional (6,6).

If the input image is three-dimensional ( That is to say, one more channels), For example (8,8,3), This is the time , our filter The dimension of is going to be (3,3,3) 了 , its The last dimension is going to follow the input channel The dimensions are the same .

Convolution at this time , It's the three one. channel All the elements of the corresponding multiplication sum , That's before 9 The sum of products , Now it is 27 The sum of products . therefore , The dimensions of output do not change . still (6,6).

however , In general , We will More use filters Simultaneous convolution , such as , If we use 4 individual filter Words , that The dimension of the output changes to (6,6,4).

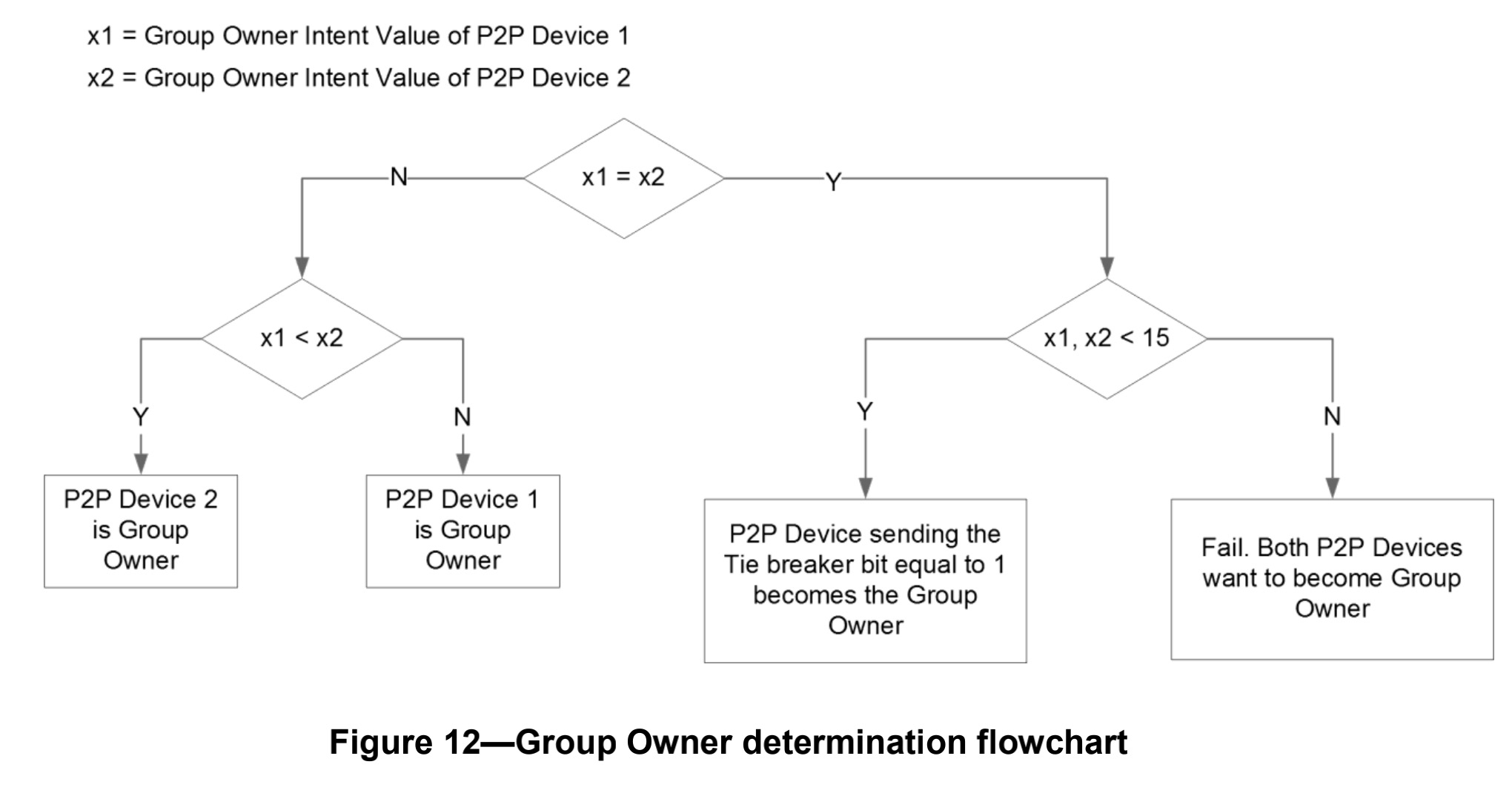

I specially drew the following picture , To show the above process :

The input image is (8,8,3),filter Yes 4 individual , All sizes are (3,3,3), The output is (6,6,4).

I think this picture is very clear , And gives 3 and 4 How did these two key figures come from , So I won't be wordy ( This picture made me at least 40 minute ).

Actually , If we apply the neural network symbols we learned before to look at CNN Words ,

Our input image is X,shape=(8,8,3);

4 individual filters In fact, it is the parameters of the first layer Shenjin network W1,,shape=(3,3,3,4), This 4 It means having 4 individual filters;

Our output , Namely Z1,shape=(6,6,4);

There should also be an activation function , such as relu, After activation ,Z1 Turn into A1,shape=(6,6,4);

therefore , In the picture above , I add an activation function , Mark the corresponding part , That's it :

【 Personally feel , Such a good picture is not collected , What a pity 】

3、 ... and 、CNN Structure of

We already know convolution above (convolution)、 Pooling (pooling) And white filling (padding) How it works , Let's take a look CNN Overall structure , It contains 3 Seed layer (layer):

1. Convolutional layer( Convolution layer —CONV)

By filter filters And the activation function .

The general parameters to be set include filters The number of 、 size 、 step , as well as padding yes “valid” still “same”. Of course , It also includes choosing what activation function .

2. Pooling layer ( Pooling layer —POOL)

There are no parameters to learn , Because the parameters here are all set by us , Or Maxpooling, Or Averagepooling.

You need to specify a super parameter , It includes Max still average, Window size and step size .

Usually , What we use more is Maxpooling, And the general size is (2,2) In steps of 2 Of filter, such , after pooling after , The length and width of the input will be reduced 2 times ,channels unchanged .

3. Fully Connected layer( Fully connected layer —FC)

This is not mentioned before , Because this is the guy we are most familiar with , It is the most common layer of neural network we learned before , It's a row of neurons . Because every unit in this layer is connected with every unit in the previous layer , So it's called “ Full connection ”.

Super parameter to be specified here , It's just the number of neurons , And activation functions .

Next , Let's look at one CNN The appearance of , To get the right CNN Some perceptual knowledge :

Above this CNN It's the one I casually patted on the forehead . Its structure can be used :

X→CONV(relu)→MAXPOOL→CONV(relu)→FC(relu)→FC(softmax)→Y

To express .

Here's the thing to note , After several convolutions and pooling , We Finally, the multi-dimensional data will be processed first “ flat ”, That is the (height,width,channel) The data is compressed to a length of height × width × channel One dimensional array of , And then with FC layer Connect , After that, it is the same as the ordinary neural network .

You can see from the figure , With the development of Internet , Our image ( Strictly speaking, those in the middle can't be called images , But for convenience , Let's say so ) It's getting smaller , however channels But it's getting bigger and bigger . The representation in the figure is that the area of the cuboid facing us is getting smaller and smaller , But the length is getting longer .

Four 、 Convolutional neural networks VS. Traditional neural networks

In fact, looking back ,CNN Like the neural network we learned before , There is no big difference .

Traditional neural networks , In fact, there are many FC Layers are superimposed .

CNN, It's nothing more than putting FC Changed to CONV and POOL, It's made up of neurons layer, It's changed from filters Composed of layer.

that , Why do you want to change like this ? What are the benefits ?

Specifically speaking, there are two points :

1. Parameter sharing mechanism (parameters sharing)

Let's compare the layers of traditional neural networks with filters Composed of CONV layer :

Suppose our image is 8×8 size , That is to say 64 Pixel , Suppose we use a with 9 Full connection layer of units :

How many parameters do we need in this layer ? need 64×9 = 576 Parameters ( Let's not consider the offset term b). Because every link needs a weight w.

So let's see There are also 9 Unit filter What is it like :

In fact, you don't need to look , There are only a few parameters for a few units , So the total is 9 Parameters !

because , For different areas , We all share the same filter, So share the same set of parameters .

And that makes sense , Through the previous explanation, we know ,filter It is used to detect features , Generally, that feature is likely to appear in more than one place , such as “ Vertical boundary ”, It may appear more in one picture , that We share the same filter Not only is it reasonable , And it should be done .

thus it can be seen , Parameter sharing mechanism , Let us greatly reduce the number of network parameters . such , We can use fewer parameters , Train better models , The typical way is to get twice the result with half the effort , And it can effectively Avoid overfitting .

Again , because filter Parameter sharing , Even if the picture has some translation operation , We can still recognize features , It's called “ Translation invariance ”. therefore , The model is more robust .

2. Sparsity of connections (sparsity of connections)

It can be seen from the operation of convolution , Output any unit in the image , It is only related to a part of the input image system :

And in the traditional neural network , Because it's all connected , So any unit of output , It's influenced by all the input units . In this way, the recognition effect of the image will be greatly reduced . Compare , Each area has its own characteristics , We don't want it to be affected by other regions .

It's because of these two advantages , bring CNN Beyond the traditional NN, It opens a new era of neural networks

边栏推荐

- Cookie operation

- Daily Practice:Codeforces Round #794 (Div. 2)(A~D)

- 2022年PMP项目管理考试敏捷知识点(7)

- Don't confuse the use difference between series / and / *

- What does soda ash do?

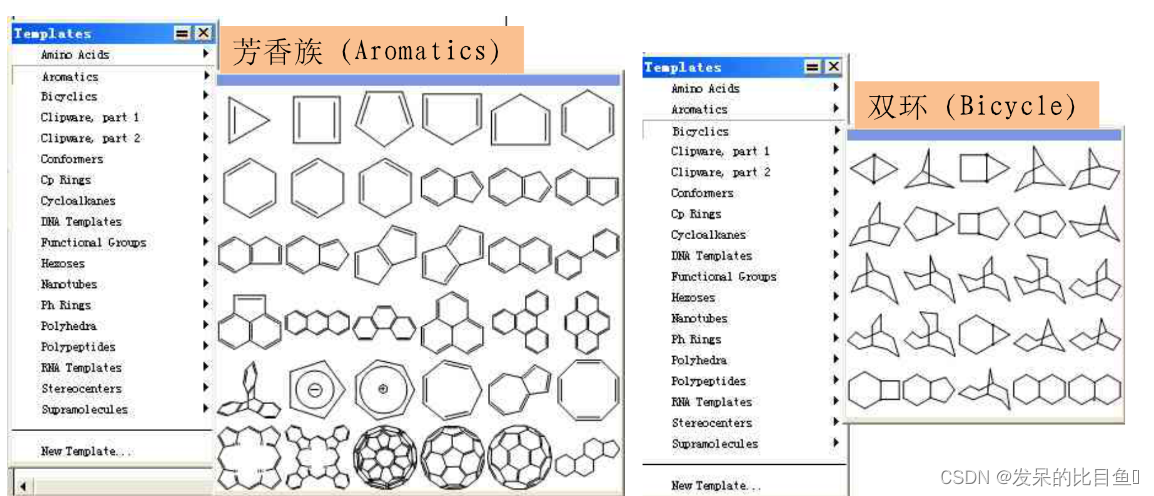

- CADD course learning (5) -- Construction of chemosynthesis structure with known target (ChemDraw)

- Altimeter data knowledge point 2

- Anaconda pyhton multi version switching

- GBK error in web page Chinese display (print, etc.), solution

- What is Bezier curve? How to draw third-order Bezier curve with canvas?

猜你喜欢

随机推荐

Ue5 hot update - remote server automatic download and version detection (simplehotupdate)

C learning notes

纯碱是做什么的?

String alignment method, self use, synthesis, newrlcjust

Install deeptools in CONDA mode

[tf1] save and load parameters

Unconventional ending disconnected from the target VM, address: '127.0.0.1:62635', transport: 'socket‘

selenium 元素定位

The SQL implementation has multiple records with the same ID, and the latest one is taken

R language learning notes 1

UE5热更新-远端服务器自动下载和版本检测(SimpleHotUpdate)

Qu'est - ce que l'hydroxyde de sodium?

Apple animation optimization

使用go语言读取txt文件写入excel中

Line test -- data analysis -- FB -- teacher Gao Zhao

Basic series of SHEL script (I) variables

arcgis_ spatialjoin

Light up the running light, rough notes for beginners (1)

How to modify the file path of Jupiter notebook under miniconda

What if the DataGrid cannot see the table after connecting to the database