Playing with gRPC: a deep dive into concepts and principles

2022-07-05 07:24:00 【Barry Yan】

This article is a gap-filling review. Its main purpose is to revisit the concepts behind gRPC and analyze how it works. For related background and usage, see the earlier articles:

《Playing with gRPC: gRPC communication in practice with Go》

《Playing with gRPC: communication between different programming languages》

《Understanding the similarities and differences between the HTTP and RPC protocols》

《Starting from 1: extending a Go backend business system with RPC》

The articles above all cover the RPC protocol and gRPC, Google's open-source RPC framework, but they are fairly simple and unsystematic. This article therefore aims to describe gRPC systematically and in depth. Let's start:

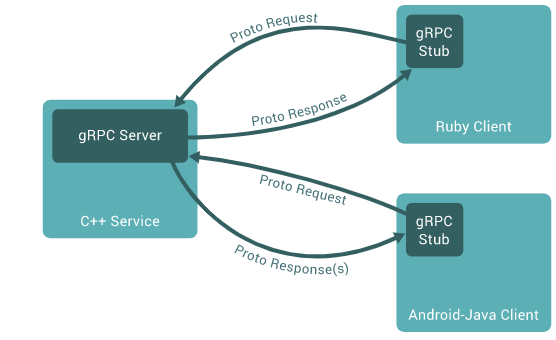

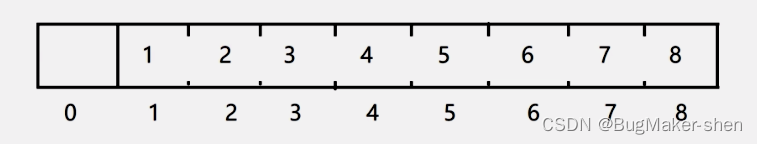

1 Basic architecture of gRPC

As the figure above shows, the basic architecture of gRPC communication has five parts:

- Service: the service being provided

- Client: the gRPC client

- gRPC Server: the gRPC server-side interface

- gRPC Stub: the gRPC client-side interface

- Proto Request/Proto Response(s): the intermediate messages (encoding/protocol) exchanged between the two sides

2 Protocol Buffers

2.1 What is Protocol Buffers?

Protocol Buffers, abbreviated protobuf, is a data description language developed by Google.

Features:

- Support for multiple programming languages

- Serialized data is small

- Fast deserialization

- Serialization and deserialization code are automatically generated

2.2 What is the relationship between Protocol Buffers and gRPC?

First, gRPC is an implementation of the RPC protocol, that is, a framework. Protocol Buffers is a data description language developed by Google.

The relationship between gRPC and Protocol Buffers is like that between a browser and HTML: neither depends on the other, but they work best when used together.

2.3 Basic Protocol Buffers syntax

Protocol Buffers definitions live in an ordinary text file with a .proto extension.

Protocol buffer data is structured as messages. Each message is a small logical record of information containing a series of name-value pairs called fields. Here is a simple example:

    message Person {
      string name = 1;
      int32 id = 2;
      bool has_ponycopter = 3;
    }

Once you have specified your data structures, you can use the protocol buffer compiler protoc to generate data access classes in your preferred language from your proto definition. These classes provide simple accessors for each field, such as name() and set_name(), as well as methods to serialize the whole structure to raw bytes and parse it back.

gRPC services are defined in ordinary proto files, with RPC method parameters and return types specified as protocol buffer messages:
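To make "serialize the whole structure to raw bytes" concrete, here is a minimal hand-written sketch of how the Person message above would look on the wire. It is not the code protoc generates. It assumes proto3 semantics (fields holding their default value are omitted), only handles non-negative integers, and the function names are made up for illustration.

```python
VARINT, LEN = 0, 2  # protobuf wire types used by this message


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field key is (field_number << 3) | wire_type, itself a varint."""
    return encode_varint((field_number << 3) | wire_type)


def encode_person(name: str, person_id: int, has_ponycopter: bool) -> bytes:
    """Hypothetical helper: serialize the Person fields by hand."""
    out = bytearray()
    if name:  # string name = 1;
        raw = name.encode("utf-8")
        out += encode_tag(1, LEN) + encode_varint(len(raw)) + raw
    if person_id:  # int32 id = 2;
        out += encode_tag(2, VARINT) + encode_varint(person_id)
    if has_ponycopter:  # bool has_ponycopter = 3;
        out += encode_tag(3, VARINT) + encode_varint(1)
    return bytes(out)
```

Each field costs one tag byte plus its value, and the field names never appear in the output, which is one reason serialized protobuf data stays small.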

    // The greeter service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

gRPC uses protoc with a special gRPC plugin to generate code from your proto file. You get the generated gRPC client and server code, as well as the regular protocol buffer code for populating, serializing, and retrieving your message types.

3 The four kinds of gRPC service method

3.1 Unary RPC

Unary RPC: the client sends a single request to the server and gets a single response back, just like a normal function call.

    rpc SayHello(HelloRequest) returns (HelloResponse);

3.2 Server streaming RPC

Server streaming RPC: the client sends a request to the server and gets a stream from which it reads a sequence of messages. The client reads from the returned stream until there are no more messages. gRPC guarantees message ordering within an individual RPC call.

    rpc LotsOfReplies(HelloRequest) returns (stream HelloResponse);

3.3 Client streaming RPC

Client streaming RPC: the client writes a sequence of messages and sends them to the server, again using a provided stream. Once the client has finished writing the messages, it waits for the server to read them and return its response. Again, gRPC guarantees message ordering within an individual RPC call.

    rpc LotsOfGreetings(stream HelloRequest) returns (HelloResponse);

3.4 Bidirectional streaming RPC

Bidirectional streaming RPC: both sides send a sequence of messages using a read-write stream. The two streams operate independently, so clients and servers can read and write in whatever order they like. For example, the server could wait to receive all the client messages before writing its responses, or it could alternately read a message and then write a message, or use some other combination of reads and writes. The order of messages in each stream is preserved.

    rpc BidiHello(stream HelloRequest) returns (stream HelloResponse);
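The four call shapes can be mimicked locally with plain functions and generators. This is only a sketch of the shapes, with no network and no gRPC library involved. The function names mirror the rpc names above, and the `count` parameter and the greeting text are made up for illustration.

```python
from typing import Iterable, Iterator


def say_hello(name: str) -> str:
    """Unary: one request in, one response out."""
    return f"Hello, {name}"


def lots_of_replies(name: str, count: int = 3) -> Iterator[str]:
    """Server streaming: one request in, a stream of responses out."""
    for i in range(1, count + 1):
        yield f"Hello, {name} (reply {i})"


def lots_of_greetings(names: Iterable[str]) -> str:
    """Client streaming: a stream of requests in, one response out."""
    return "Hello, " + ", ".join(names)


def bidi_hello(names: Iterable[str]) -> Iterator[str]:
    """Bidirectional streaming: here each request is answered as it arrives,
    which is only one of the read/write orderings the server may choose."""
    for name in names:
        yield f"Hello, {name}"
```

In every streaming variant the messages come out in the order they were produced, which matches the ordering guarantee within a single RPC.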

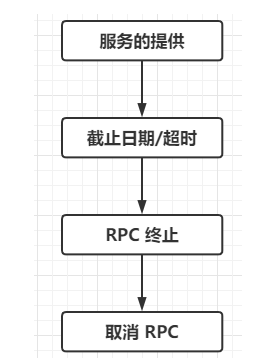

4 The gRPC life cycle

4.1 Service methods

An RPC is served through one of the four service methods described above.

4.2 Deadlines / timeouts

gRPC allows clients to specify how long they are willing to wait for an RPC to complete before the RPC is terminated with a DEADLINE_EXCEEDED error. On the server side, the server can query whether a particular RPC has timed out, or how much time is left to complete it.

Specifying a deadline or timeout is language specific: some language APIs work in terms of timeouts (a duration), while others work in terms of a deadline (a fixed point in time).

4.3 RPC termination

In gRPC, both the client and the server make independent, local determinations of whether the call succeeded, and their conclusions may not match. This means, for example, that an RPC can finish successfully on the server side but fail on the client side. The server can also decide to complete before the client has sent all of its requests.

4.4 Cancelling an RPC

Either the client or the server can cancel an RPC at any time. A cancellation terminates the RPC immediately so that no further work is done.
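The difference between a timeout and a deadline, and the server-side "how much time is left" query, can be sketched as follows. This is not a gRPC API. The `Deadline` class and `call_with_deadline` function are hypothetical, and the clock is injectable so the behavior can be checked without real waiting.

```python
import time
from typing import Callable, Iterable


class Deadline:
    """Turns a timeout (duration) into a deadline (fixed point in time)."""

    def __init__(self, timeout_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._expires_at = clock() + timeout_seconds

    def remaining(self) -> float:
        """Seconds left before the deadline, never negative."""
        return max(0.0, self._expires_at - self._clock())

    def exceeded(self) -> bool:
        return self._clock() >= self._expires_at


def call_with_deadline(work_steps: Iterable[Callable[[], None]],
                       deadline: Deadline) -> str:
    """Run the steps in order, checking the deadline before each one."""
    done = 0
    for step in work_steps:
        if deadline.exceeded():
            return f"DEADLINE_EXCEEDED after {done} step(s)"
        step()
        done += 1
    return "OK"
```

A deadline has one practical advantage in this sketch: it can be handed from one step to the next without recomputing, whereas a timeout would have to be reduced by the time already spent.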

5 How gRPC communicates

As is well known, gRPC is built on HTTP/2, and HTTP/2 is a relatively new concept compared with HTTP/1.1. Before exploring how gRPC works, it is therefore necessary to understand what HTTP/2 is.

5.1 HTTP/2

The HTTP/2 specification was published in May 2015. It aims to solve some of the scalability problems of its predecessor and improves on the design of HTTP/1.1 in many ways, most importantly by providing a semantic mapping over connections.

Creating HTTP connections is expensive. You have to establish a TCP connection, secure that connection with TLS, and exchange headers and settings. HTTP/1.1 simplified this process by treating connections as long-lived, reusable objects. HTTP/1.1 connections are kept idle so that new requests to the same destination can be sent over an existing idle connection. Although connection reuse mitigates the problem, a connection can only handle one request at a time: requests and connections are coupled 1:1. If one large message is being sent, a new request must either wait for it to complete (resulting in head-of-line blocking) or, more often, pay the price of spinning up another connection.

HTTP/2 takes the concept of persistent connections further by providing a semantic layer above connections: streams. A stream can be thought of as a series of semantically connected messages, called frames. A stream may be short-lived, such as a unary stream that requests the status of a user (in HTTP/1.1, this might equate to GET /users/1234/status). Increasingly often, though, streams are long-lived. A receiver may establish a long-lived stream and thereby receive user status messages continuously in real time, rather than making individual requests to the /users/1234/status endpoint. The primary advantage of streams is connection concurrency, that is, the ability to interleave messages on a single connection.

Flow control

Concurrent streams, however, harbor some subtle pitfalls. Consider two streams, A and B, on the same connection. Stream A receives a massive amount of data, far more than it can process in a short time. Eventually the receiver's buffer fills up and the TCP receive window limits the sender. This is fairly standard behavior for TCP, but it is bad for streams, because neither stream will receive any more data. Ideally, stream B should be unaffected by stream A's slow processing.

HTTP/2 solves this problem by providing a flow control mechanism as part of the stream specification. Flow control limits the amount of outstanding data per stream (and per connection). It operates as a credit system in which the receiver allocates a certain "budget" and the sender "spends" that budget. More specifically, the receiver allocates some buffer size (the "budget") and the sender fills ("spends") the buffer by sending data. The receiver advertises additional buffer to the sender as it becomes available, using WINDOW_UPDATE frames. When the receiver stops advertising additional buffer, the sender must stop sending messages once the buffer (its "budget") is exhausted.

With flow control, concurrent streams are guaranteed independent buffer allocation. Coupled with round-robin request sending, streams of all sizes, processing speeds, and durations can be multiplexed on a single connection without having to care about cross-stream problems.

Smarter proxies

The concurrency properties of HTTP/2 allow proxies to be more performant. As an example, consider an HTTP/1.1 load balancer that accepts and forwards spiky traffic: when a spike occurs, the proxy either spins up more connections to handle the load or queues the requests. The former, new connections, is usually preferred (up to a point). The downside of these new connections is paid not just in time spent waiting on system calls and sockets, but also in time spent underutilizing the connection while TCP slow start occurs.

In contrast, consider an HTTP/2 proxy configured to multiplex 100 streams per connection. A spike of requests will still cause new connections to be spun up, but only 1/100 as many as its HTTP/1.1 counterpart would need. More generally: if n HTTP/1.1 requests are sent to a proxy, n HTTP/1.1 requests must go out; each request is a single meaningful request/payload of data, and requests are 1:1 with connections. With HTTP/2, in contrast, n requests sent to the proxy require n streams, but not n connections!
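The credit system can be sketched for a single stream as follows. This is a simplified model rather than an HTTP/2 implementation: it tracks only one per-stream window (the per-connection window is not modeled), and the class and method names are made up for illustration. The default of 65,535 bytes is HTTP/2's initial window size.

```python
class FlowControlledSender:
    """Sender side of one stream's credit-based flow control window."""

    def __init__(self, initial_window: int = 65_535):
        self.window = initial_window  # remaining "budget" in bytes
        self.pending = bytearray()    # data waiting for credit

    def queue(self, data: bytes) -> None:
        self.pending += data

    def send_available(self) -> bytes:
        """Send as much pending data as the window allows, spending credit."""
        n = min(self.window, len(self.pending))
        chunk = bytes(self.pending[:n])
        del self.pending[:n]
        self.window -= n
        return chunk

    def on_window_update(self, increment: int) -> None:
        """The receiver advertised more buffer (a WINDOW_UPDATE frame)."""
        if increment <= 0:
            raise ValueError("window increment must be positive")
        self.window += increment
```

Once the window reaches zero, `send_available` returns nothing until the receiver grants more credit, so a slow consumer on one stream stalls only that stream's sender.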
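The interleaving described above can be sketched as a round-robin scheduler that takes one frame from each stream in turn and puts it on the shared connection. The `multiplex` function and the stream identifiers are hypothetical. Real implementations also take flow control windows and priorities into account.

```python
from collections import deque
from typing import Dict, List, Tuple


def multiplex(streams: Dict[str, List[bytes]]) -> List[Tuple[str, bytes]]:
    """Interleave the frames of several streams onto one connection,
    round-robin, preserving the frame order within each stream."""
    queues = deque(
        (stream_id, deque(frames))
        for stream_id, frames in streams.items()
        if frames
    )
    wire = []
    while queues:
        stream_id, frames = queues.popleft()
        wire.append((stream_id, frames.popleft()))
        if frames:  # stream still has frames: back of the line
            queues.append((stream_id, frames))
    return wire
```

With a long stream A and a short stream B, B's single frame goes out right after A's first frame instead of waiting for all of A, which is exactly what avoids head-of-line blocking at the HTTP layer.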
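The arithmetic behind the 1/100 figure is a simple ceiling division. The helper below is a hypothetical illustration that treats HTTP/1.1 as "one in-flight request per connection".

```python
def connections_needed(concurrent_requests: int,
                       streams_per_connection: int = 1) -> int:
    """Connections required to carry the given number of concurrent requests.

    streams_per_connection=1 models HTTP/1.1; a larger value models an
    HTTP/2 proxy multiplexing that many streams per connection.
    """
    if concurrent_requests < 0 or streams_per_connection < 1:
        raise ValueError("invalid request or stream count")
    # Ceiling division without floating point.
    return -(-concurrent_requests // streams_per_connection)
```

For a spike of 1,000 concurrent requests this gives 1,000 connections in the HTTP/1.1 model and 10 connections with 100 streams per connection.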

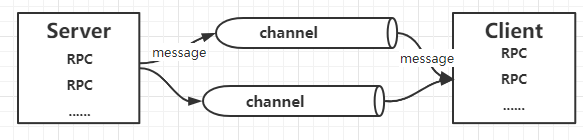

5.2 gRPC and HTTP/2

gRPC introduces three new concepts: channels, remote procedure calls (RPCs), and messages. The relationship between the three is simple: each channel may have many RPCs, and each RPC may have many messages.

Channels are a key concept in gRPC. Streams in HTTP/2 enable multiple concurrent conversations on a single connection; **channels extend this concept by enabling multiple streams over multiple concurrent connections.** On the surface, a channel gives users a simple interface for sending messages. Under the hood, however, a great deal of engineering goes into keeping these connections alive, healthy, and utilized.

Channels represent virtual connections to an endpoint, which in reality may be backed by many HTTP/2 connections. RPCs are associated with a connection (the gRPC blog post listed in the references describes this association further). RPCs are in practice plain HTTP/2 streams. Messages are associated with RPCs and are sent as HTTP/2 data frames. More specifically, messages are *layered* on top of data frames: a data frame may carry many gRPC messages, or, if a gRPC message is very large, it may span multiple data frames.

6 Summary

That more or less wraps up the explanation of gRPC. In the final analysis, gRPC is a network protocol, and a network protocol cannot escape network I/O. I/O multiplexing is what makes HTTP/2 possible, and HTTP/2 in turn is what makes gRPC possible. In the next article, we will explain network I/O models in simple terms!
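The layering of messages on top of data frames can be sketched with gRPC's length-prefixed message format: each message is preceded by a 1-byte compressed flag and a 4-byte big-endian length. The sketch below is not a gRPC or HTTP/2 implementation. It models a data frame as a bare chunk of payload bytes (the HTTP/2 frame header is left out), and the function names are made up for illustration.

```python
import struct
from typing import Iterable, List

PREFIX = ">BI"  # 1-byte compressed flag + 4-byte big-endian length = 5 bytes


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Prefix one gRPC message with its flag and length."""
    return struct.pack(PREFIX, int(compressed), len(payload)) + payload


def split_into_data_frames(byte_stream: bytes, max_frame_size: int) -> List[bytes]:
    """Cut the byte stream of an RPC into data frame payloads."""
    return [
        byte_stream[i:i + max_frame_size]
        for i in range(0, len(byte_stream), max_frame_size)
    ]


def read_messages(data_frames: Iterable[bytes]) -> List[bytes]:
    """Reassemble the messages, wherever the frame boundaries fell."""
    buffer = b"".join(data_frames)
    messages = []
    offset = 0
    while offset < len(buffer):
        _compressed, length = struct.unpack_from(PREFIX, buffer, offset)
        offset += struct.calcsize(PREFIX)
        messages.append(buffer[offset:offset + length])
        offset += length
    return messages
```

Because the receiver relies on the length prefix rather than on frame boundaries, two small messages can share one data frame and one large message can be spread over several.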

References:

https://www.cncf.io/blog/2018/07/03/http-2-smarter-at-scale/

https://grpc.io/blog/grpc-on-http2/

边栏推荐

- Delayqueue usage and scenarios of delay queue

- Basic series of SHEL script (III) for while loop

- PostMessage communication

- Simple operation of running water lamp (keil5)

- 借助 Navicat for MySQL 软件 把 不同或者相同数据库链接中的某数据库表数据 复制到 另一个数据库表中

- Graduation thesis project local deployment practice

- What is soda?

- Three body goal management notes

- Energy conservation and creating energy gap

- Pytorch has been installed in anaconda, and pycharm normally runs code, but vs code displays no module named 'torch‘

猜你喜欢

![When jupyter notebook is encountered, erroe appears in the name and is not output after running, but an empty line of code is added downward, and [] is empty](/img/fe/fb6df31c78551d8908ba7964c16180.jpg)

When jupyter notebook is encountered, erroe appears in the name and is not output after running, but an empty line of code is added downward, and [] is empty

Docker installs MySQL and uses Navicat to connect

Basic series of SHEL script (III) for while loop

Solve tensorfow GPU modulenotfounderror: no module named 'tensorflow_ core. estimator‘

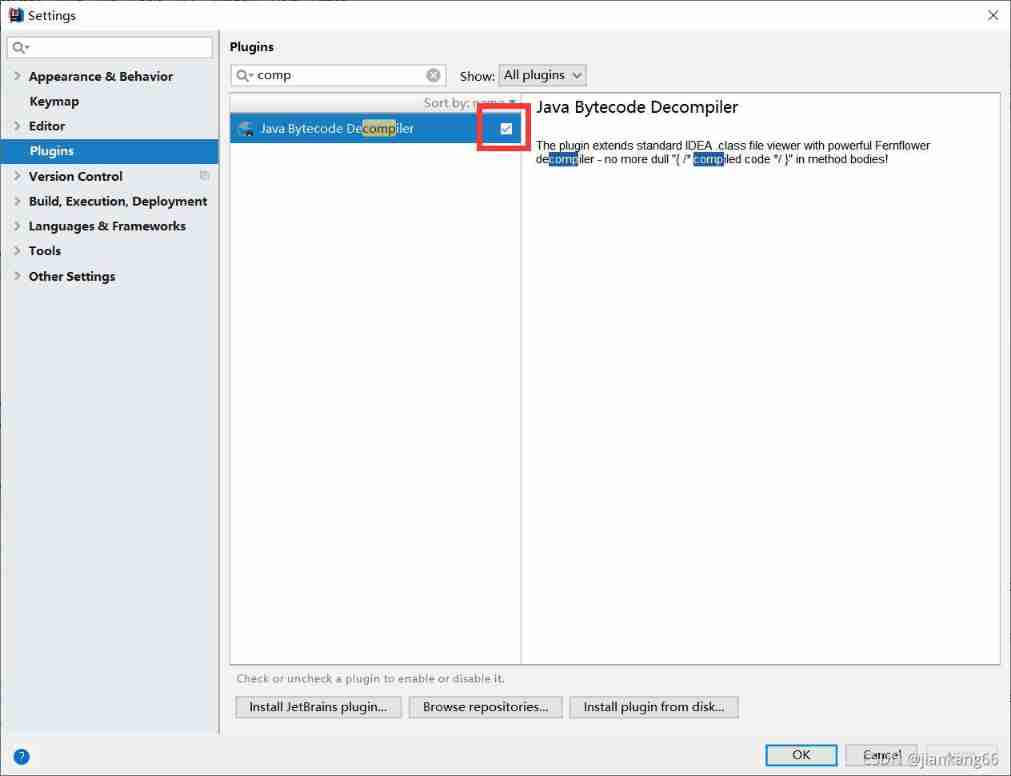

Idea to view the source code of jar package and some shortcut keys (necessary for reading the source code)

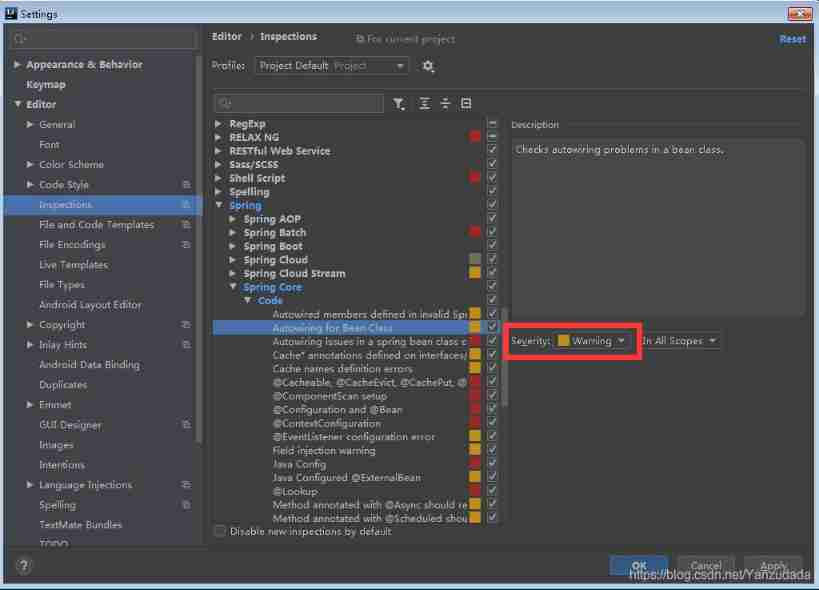

【idea】Could not autowire. No beans of xxx type found

并查集理论讲解和代码实现

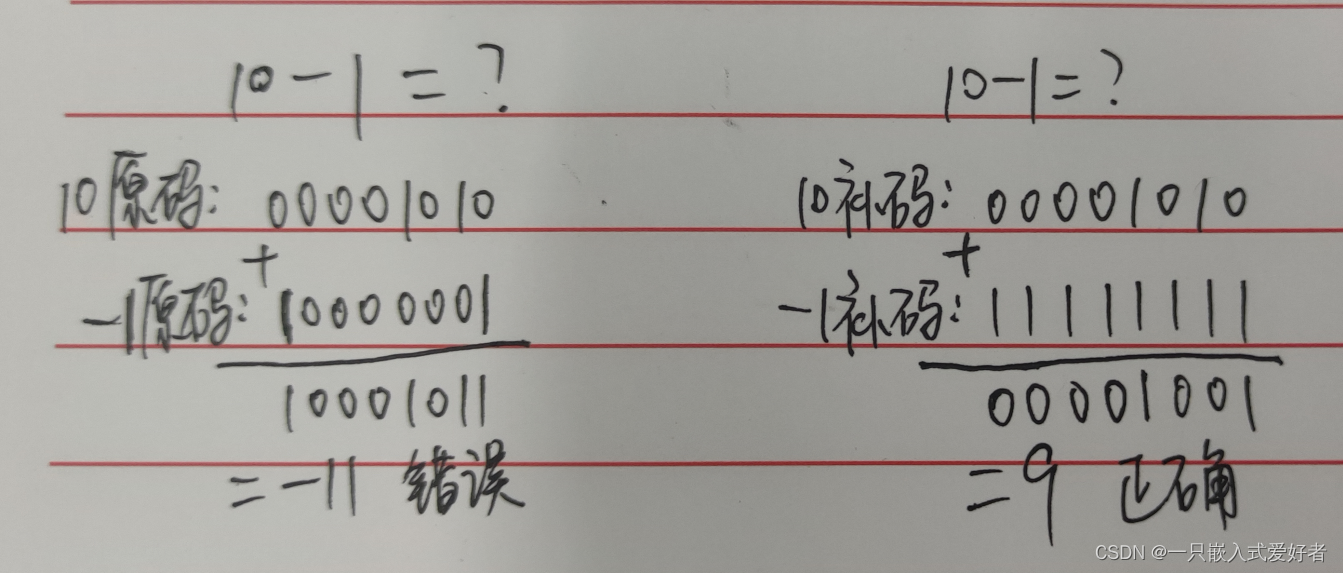

Negative number storage and type conversion in programs

Ugnx12.0 initialization crash, initialization error (-15)

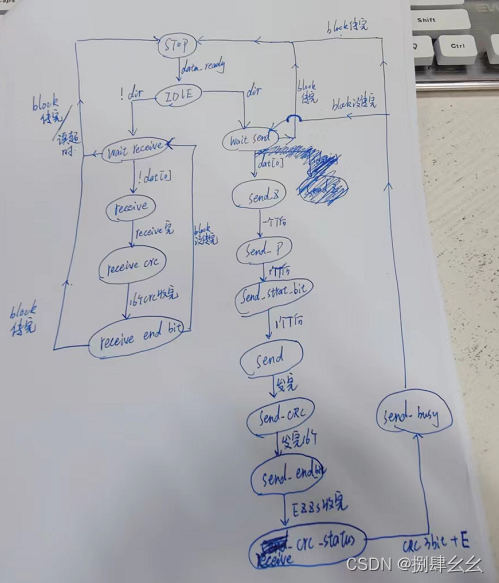

SOC_ SD_ DATA_ FSM

随机推荐

Graduation thesis project local deployment practice

[vscode] recommended plug-ins

[vscode] search using regular expressions

The difference between NPM install -g/-save/-save-dev

The SQL implementation has multiple records with the same ID, and the latest one is taken

[solved] there is something wrong with the image

DelayQueue延迟队列的使用和场景

M2DGR 多源多场景 地面机器人SLAM数据集

SOC_ SD_ DATA_ FSM

I 用c I 实现队列

Microservice registry Nacos introduction

iNFTnews | 喝茶送虚拟股票?浅析奈雪的茶“发币”

list. files: List the Files in a Directory/Folder

Tshydro tool

氫氧化鈉是什麼?

Three body goal management notes

ImportError: No module named ‘Tkinter‘

Binary search (half search)

借助 Navicat for MySQL 软件 把 不同或者相同数据库链接中的某数据库表数据 复制到 另一个数据库表中

Matrix and TMB package version issues in R