Writing to the cache and writing to the database are two separate operations, not one atomic step, so they can leave the data inconsistent. Should we write the database first or operate on the cache first? And should the cache operation be an update or a delete?

Any design that ignores the business context is irresponsible. Imagine this scenario: service A updates the cache, and for a long time nobody reads it; then service B updates the cache as well. Service A's cache update was wasted. Worse, if computing the cached value is extremely complex, time-consuming, and resource-intensive, and requires several extra database operations, that wasted update is expensive. So we choose to delete the cache instead: the cached value is computed only when it is actually read, and nothing is computed while nobody uses it. The drawback is that the first read after a delete may be slow.
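The delete-instead-of-update choice can be sketched as follows. This is a minimal illustration, not a definitive implementation: `db` and `cache` are plain dictionaries standing in for a real database and cache, and `expensive_compute`, `write`, and `read` are hypothetical names introduced only for this example.

```python
from typing import Dict

db: Dict[str, int] = {"stock": 100}   # stand-in for the database (assumption)
cache: Dict[str, int] = {}            # stand-in for the cache (assumption)
compute_calls = 0                     # counts how often the costly path runs


def expensive_compute(key: str) -> int:
    """Hypothetical costly derivation of a cached value from the database."""
    global compute_calls
    compute_calls += 1
    return db[key]


def write(key: str, value: int) -> None:
    """Write the database, then delete (not update) the cached value."""
    db[key] = value
    cache.pop(key, None)   # nothing is computed at write time


def read(key: str) -> int:
    """Compute and cache the value only when somebody actually reads it."""
    if key not in cache:
        cache[key] = expensive_compute(key)
    return cache[key]


write("stock", 99)       # service A
write("stock", 98)       # service B: A's write cost no cache computation
first = read("stock")    # first read is slow: it runs expensive_compute
second = read("stock")   # later reads are served from the cache
```

Two writes followed by two reads trigger the expensive computation once, on the first read, which is the trade-off described above.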

That leaves two options:

- Write the database first, then delete the cache.

- Delete the cache first, then write the database.

For each option, we also need to consider concurrency and failure.

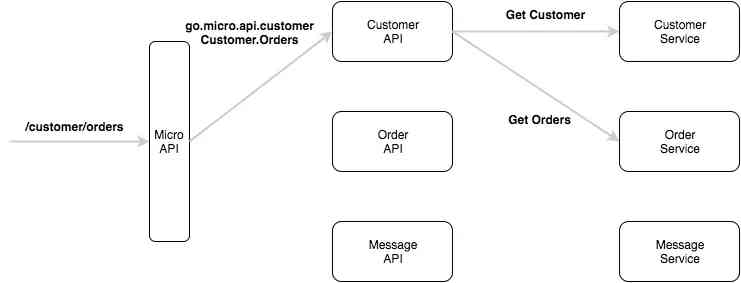

Database first

Concurrency

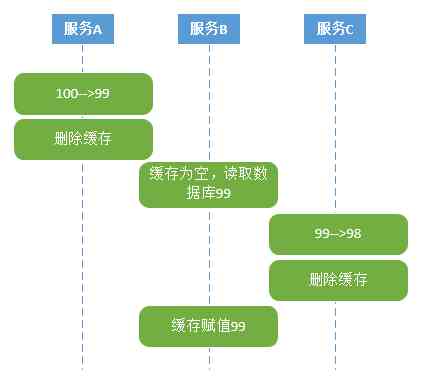

As shown in the figure:

- Service A updates the data, changing 100 to 99, then deletes the cache.

- Service B reads the cache. The cache is empty at this point, so it reads 99 from the database.

- Service C updates the data, changing 99 to 98, then deletes the cache.

- Service B writes 99 into the cache.

Now the database holds 98 but the cache holds 99: the data is inconsistent.
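The interleaving above can be replayed step by step. The sketch below runs the four steps in a single thread, in exactly the listed order, with dictionaries standing in for the database and the cache; the key name `"x"` is an assumption made for illustration.

```python
db = {"x": 100}   # stand-in for the database
cache = {}        # stand-in for the cache, initially empty

# 1. Service A updates 100 -> 99, then deletes the cache.
db["x"] = 99
cache.pop("x", None)

# 2. Service B misses the cache and reads 99 from the database.
b_value = cache.get("x")
if b_value is None:
    b_value = db["x"]

# 3. Service C updates 99 -> 98, then deletes the cache.
db["x"] = 98
cache.pop("x", None)

# 4. Only now does service B write the value it read earlier into the cache.
cache["x"] = b_value

inconsistent = cache["x"] != db["x"]
```

The stale value survives because service B's cache write lands after service C's delete, so nothing removes it afterwards.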

Failure

The sequence is as follows:

- Service A updates the data, changing 100 to 99.

- Service A fails to delete the cache.

- Service B reads the cache and gets the stale value 100.

The cache (100) is now inconsistent with the database (99).
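A small simulation of this failure, under stated assumptions: `CacheUnavailable`, `delete_cache`, `update`, and `read` are hypothetical names, and the `fail` flag is only a way to inject the cache outage for the demonstration.

```python
class CacheUnavailable(Exception):
    """Hypothetical error raised when the cache cannot be reached."""


db = {"x": 100}      # stand-in for the database
cache = {"x": 100}   # the cache already holds the old value


def delete_cache(key: str, fail: bool = False) -> None:
    if fail:                       # injected outage, for illustration only
        raise CacheUnavailable(key)
    cache.pop(key, None)


def update(key: str, value: int, cache_fails: bool) -> bool:
    """Database first, then cache delete. Returns False if the delete failed."""
    db[key] = value                # the database write succeeds
    try:
        delete_cache(key, fail=cache_fails)
    except CacheUnavailable:
        return False               # database changed, stale cache entry remains
    return True


def read(key: str) -> int:
    if key not in cache:
        cache[key] = db[key]
    return cache[key]


deleted = update("x", 99, cache_fails=True)   # service A
seen_by_b = read("x")                         # service B gets the stale 100
```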

Cache first

Concurrency

The sequence is as follows:

- Service A deletes the cache.

- Service B reads the cache.

- Service B misses the cache, so it reads the database, which still holds 100, and writes 100 back into the cache.

- Service A updates the database.

The cache now holds the old value 100 while the database holds the new value: the data is inconsistent.
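Replaying this order in a single thread makes the stale write visible. The new value 99 and the key name `"x"` are assumptions for illustration; the text only states that the old value is 100.

```python
db = {"x": 100}      # stand-in for the database
cache = {"x": 100}   # stand-in for the cache

# 1. Service A deletes the cache first.
cache.pop("x", None)

# 2-3. Service B misses the cache, reads the old value from the database,
#      and repopulates the cache with it.
b_value = cache.get("x")
if b_value is None:
    b_value = db["x"]
    cache["x"] = b_value

# 4. Service A finally updates the database (99 is an assumed new value).
db["x"] = 99

inconsistent = cache["x"] != db["x"]
```

Unlike the database-first race, this one needs only a single writer and a single reader, because the gap between the delete and the database write is open to any read.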

Failure

The sequence is as follows:

- Service A deletes the cache.

- Service A fails to update the database.

- Service B reads the cache and misses.

- Service B reads the database.

Because the database was never changed, the value that service B reads and caches matches the database, so the data stays consistent.
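The same kind of simulation shows why this failure is harmless. `DatabaseUnavailable`, `write_db`, `update`, and `read` are hypothetical names, and the `fail` flag only injects the database error for the demonstration.

```python
class DatabaseUnavailable(Exception):
    """Hypothetical error raised when the database write fails."""


db = {"x": 100}      # stand-in for the database
cache = {"x": 100}   # stand-in for the cache


def write_db(key: str, value: int, fail: bool = False) -> None:
    if fail:                       # injected failure, for illustration only
        raise DatabaseUnavailable(key)
    db[key] = value


def update(key: str, value: int, db_fails: bool) -> bool:
    """Cache delete first, then database write. Returns False if the write failed."""
    cache.pop(key, None)           # the cache entry is already gone
    try:
        write_db(key, value, fail=db_fails)
    except DatabaseUnavailable:
        return False               # the database still holds the old value
    return True


def read(key: str) -> int:
    if key not in cache:           # miss: reload from the unchanged database
        cache[key] = db[key]
    return cache[key]


written = update("x", 99, db_fails=True)   # service A
seen_by_b = read("x")                      # service B
```

The only cost of the failed write is one extra cache miss.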

Solutions

Business

Again, no design should ignore the business context. If the business can tolerate this inconsistency, use the Cache Aside Pattern. If it cannot, one option is to let the operations that require strong consistency read the database directly, but performance will be very poor. That is why we have the serialization solutions below.

Serialization

In Java, multithreading raises data-safety problems, which we can solve with `synchronized` and CAS. The core idea is to turn parallel execution into serial execution.

Approach one: message queues

Push all requests into queues. Because there are too many distinct keys to give each one its own queue, hash each key and take the result modulo the number of queues, so that keys are spread over a fixed, limited set of queues. For example, with 3 queues, a key with hash(key) mod 3 = 0 goes into the first queue.

If the operations are enqueued as read, read, write, read, read, write, they are executed in that same order. Because operations on the same key run serially, data consistency is guaranteed.
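A minimal in-process sketch of this routing, assuming Python's standard `queue` and `threading` modules in place of a real message queue. `zlib.crc32` is used as the hash because Python's built-in `hash()` for strings differs between processes; `queue_index`, `submit`, and `worker` are hypothetical names.

```python
import queue
import threading
import zlib

NUM_QUEUES = 3
queues = [queue.Queue() for _ in range(NUM_QUEUES)]
store = {"a": 100}   # stand-in for the data behind the queues
results = []         # values observed by reads, in execution order


def queue_index(key: str) -> int:
    """Route a key to one of the fixed queues: hash(key) mod NUM_QUEUES."""
    return zlib.crc32(key.encode("utf-8")) % NUM_QUEUES


def submit(kind: str, key: str, value=None) -> None:
    queues[queue_index(key)].put((kind, key, value))


def worker(q: queue.Queue) -> None:
    """One consumer per queue, so operations on a key run one at a time."""
    while True:
        op = q.get()
        if op is None:             # sentinel: no more work
            break
        kind, key, value = op
        if kind == "write":
            store[key] = value
        else:
            results.append((key, store[key]))


threads = [threading.Thread(target=worker, args=(q,)) for q in queues]
for t in threads:
    t.start()

# Enqueue read, read, write, read, read, write for the same key.
submit("read", "a")
submit("read", "a")
submit("write", "a", 99)
submit("read", "a")
submit("read", "a")
submit("write", "a", 98)

for q in queues:
    q.put(None)
for t in threads:
    t.join()
```

Every operation on `"a"` lands in the same queue and is consumed by a single worker, so the reads observe 100, 100, 99, 99 in that order and the final value is 98.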

Approach two: distributed locks

With the queue approach, too many queues are hard to manage, while too few queues force many keys to wait in line, which hurts performance. A distributed lock avoids this. The method is similar to `synchronized` and CAS: an operation may proceed only after it has acquired the lock, which also guarantees data consistency.
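The locking idea can be sketched in a single process. This is only an analogue: `threading.Lock` stands in for a distributed lock, which in a real deployment would have to come from a lock service shared by all service instances. `lock_for`, `update`, and `read` are hypothetical names.

```python
import threading

db = {"x": 100}   # stand-in for the database
cache = {}        # stand-in for the cache

_registry_guard = threading.Lock()
_locks = {}       # one lock per key (in-process stand-in for a distributed lock)


def lock_for(key: str) -> threading.Lock:
    with _registry_guard:
        return _locks.setdefault(key, threading.Lock())


def update(key: str, value: int) -> None:
    with lock_for(key):            # database write + cache delete as one unit
        db[key] = value
        cache.pop(key, None)


def read(key: str) -> int:
    with lock_for(key):            # miss handling cannot interleave with update
        if key not in cache:
            cache[key] = db[key]
        return cache[key]


def writer() -> None:
    for value in range(99, 49, -1):   # 99, 98, ..., 50
        update("x", value)


def reader() -> None:
    for _ in range(200):
        read("x")


threads = [threading.Thread(target=writer)]
threads += [threading.Thread(target=reader) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

final = read("x")
```

Taking the lock on every read, including cache hits, keeps the sketch simple but serializes all access to a key, which is the performance cost discussed in the closing paragraph.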

Either way, data consistency and performance trade off against each other: the stricter the consistency requirement, the lower the performance; the looser the requirement, the higher the performance.