【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》

2022-07-02 07:45:00 【bryant_ meng】

arXiv-2017

1 Background and Motivation

With the development of deep learning, CNNs have excelled in many computer vision tasks, but increased representational power also brings an increased probability of overfitting, leading to poor generalization.

To improve generalization and to simulate object occlusion, the authors propose Cutout, a data augmentation method that randomly masks out square regions of the input during training, so that the network takes more of the image context into consideration when making decisions.

This technique encourages the network to better utilize the full context of the image, rather than relying on the presence of a small set of specific visual features (which may not always be present).

2 Related Work

- Data Augmentation for Images

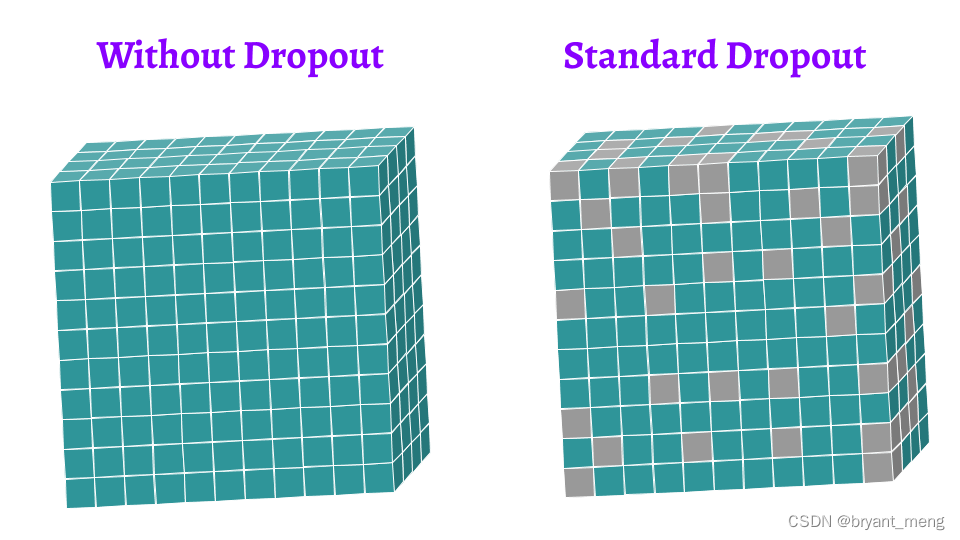

- Dropout in Convolutional Neural Networks

- Denoising Autoencoders & Context Encoders (self-supervised: part of the input is removed and the network fills it back in, which strengthens the learned features)

3 Advantages / Contributions

Proposes Cutout, a data augmentation method for supervised learning (a form of dropout; similar methods already exist in self-supervised learning).

4 Method

Initial version: remove maximally activated features.

Final version: a square patch around a randomly chosen center point (the patch may extend outside the image; once clipped by the image boundary it is no longer square).

The data must be centered before use (that is, subtract the mean):

The dataset should be normalized about zero so that modified images will not have a large effect on the expected batch statistics.
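The final version described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation (their code is linked in section 6); the function name `cutout`, the `length` parameter, and the (H, W, C) array layout are assumptions made for this example, and the image is assumed to be mean-subtracted already so that a fill value of 0 corresponds to the dataset mean.

```python
import numpy as np


def cutout(image, length, rng=None):
    """Zero out one square patch of side `length` centred at a random pixel.

    `image` is an (H, W, C) float array, assumed to be mean-subtracted
    already, so filling with 0 corresponds to the dataset mean.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]

    # The centre is sampled anywhere inside the image, so the patch
    # may extend past the border.
    cy = int(rng.integers(0, h))
    cx = int(rng.integers(0, w))

    # Clip the patch to the image; near a border it is no longer square.
    y1, y2 = max(cy - length // 2, 0), min(cy + length // 2, h)
    x1, x2 = max(cx - length // 2, 0), min(cx + length // 2, w)

    out = image.copy()  # leave the caller's array untouched
    out[y1:y2, x1:x2] = 0.0
    return out


# Usage: a CIFAR-sized dummy image of ones with a 16-pixel patch.
rng = np.random.default_rng(0)
img = np.ones((32, 32, 3), dtype=np.float32)
masked = cutout(img, length=16, rng=rng)
num_masked = int((masked[..., 0] == 0).sum())
```

Because the center may fall near a border, the number of masked pixels varies from sample to sample; with `length=16` on a 32x32 image it lies between 64 (center in a corner) and 256 (patch fully inside).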

5 Experiments

5.1 Datasets and Metrics

- CIFAR-10(32x32)

- CIFAR-100(32x32)

- SVHN(Street View House Numbers,32x32)

- STL-10(96x96)

The evaluation metric is top-1 error.
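For reference, top-1 error is the fraction of samples whose highest-scoring class differs from the true label. The sketch below uses made-up scores and labels purely for illustration; the helper name `top1_error` is an assumption of this example and does not come from the paper.

```python
import numpy as np


def top1_error(logits, labels):
    """Fraction of samples whose highest-scoring class is not the true label."""
    predictions = np.argmax(logits, axis=1)
    return float(np.mean(predictions != labels))


# Four hypothetical samples over three classes.
logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.3, 1.5],
                   [0.9, 0.8, 0.1],
                   [0.1, 2.2, 0.3]])
labels = np.array([0, 2, 1, 1])
error = top1_error(logits, labels)  # only the third sample is misclassified
```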

5.2 Experiments

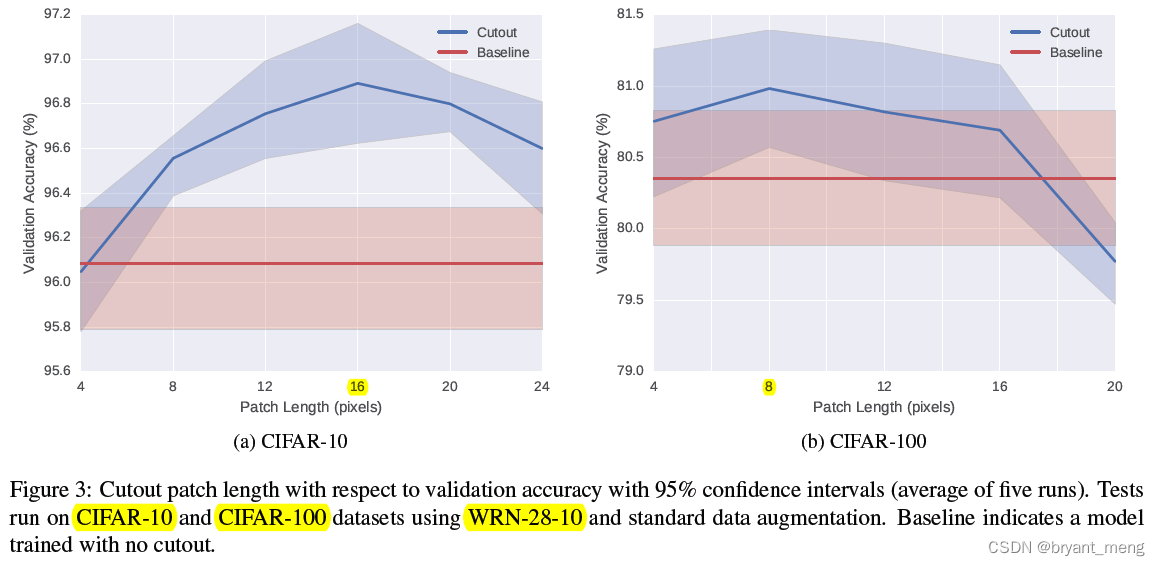

1)CIFAR10 and CIFAR100

Each experiment is repeated 5 times, with results reported as ± x.

The effect of different Cutout patch lengths is also explored.

2)STL-10

3)Analysis of Cutout’s Effect on Activations

With Cutout, activations in the shallow layers increase overall, while in the deep layers the increase shows up in the tail end of the distribution.

The latter observation illustrates that Cutout is indeed encouraging the network to take into account a wider variety of features when making predictions, rather than relying on the presence of a smaller number of features.

The analysis then looks at individual samples.

6 Conclusion (own) / Future work

code:https://github.com/uoguelph-mlrg/Cutout

Vocabulary note: memory footprint (the amount of memory used).

When the related work section introduces dropout, this sentence appears in the paper: "All activations are kept when evaluating the network, but the resulting output is scaled according to the dropout probability."

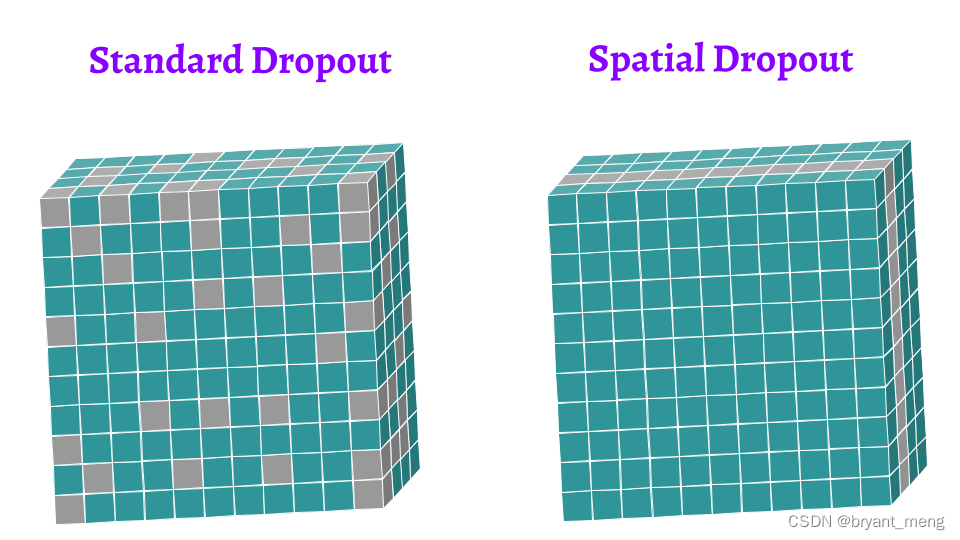
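The quoted sentence about evaluation-time scaling can be made concrete with a small NumPy sketch. It shows the classic formulation, in which activations are dropped during training and the output is scaled by the keep probability at evaluation; the function names `dropout_train` and `dropout_eval` are made up for this example. Note that some frameworks, PyTorch among them, instead use "inverted" dropout, which rescales during training and leaves evaluation untouched.

```python
import numpy as np


def dropout_train(x, drop_prob, rng):
    """Training: zero each activation independently with probability drop_prob."""
    mask = rng.random(x.shape) >= drop_prob
    return x * mask


def dropout_eval(x, drop_prob):
    """Evaluation: keep every activation, scaled by the keep probability."""
    return x * (1.0 - drop_prob)


rng = np.random.default_rng(0)
x = np.ones(100_000)
train_mean = dropout_train(x, 0.5, rng).mean()  # close to 0.5 on average
eval_mean = dropout_eval(x, 0.5).mean()         # exactly 0.5
```

The scaling keeps the expected activation at evaluation time equal to its expected value during training.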

Dropout works better on FC layers than on Conv layers. The authors' explanation: 1) convolutional layers already have much fewer parameters than fully-connected layers; 2) neighbouring pixels in images share much of the same information (so losing some of it does little harm).

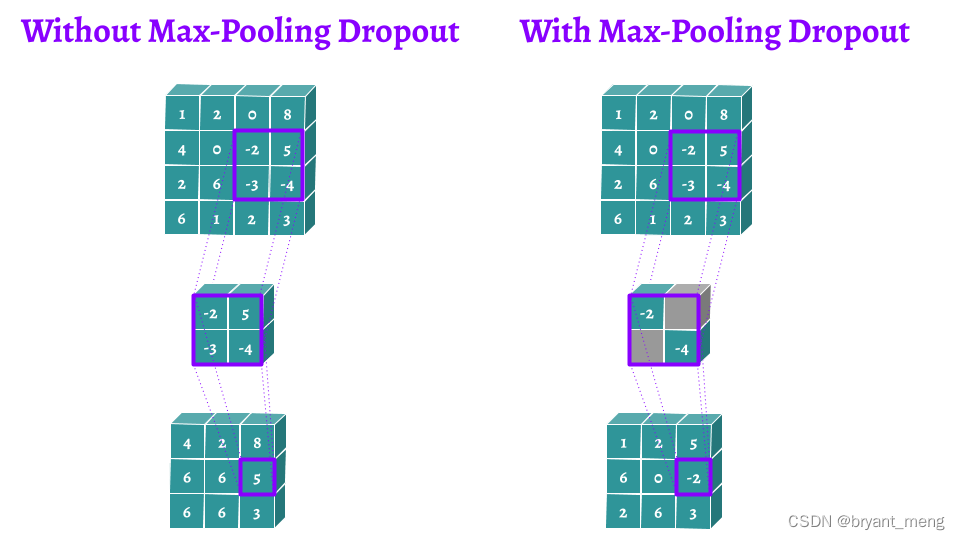

Cutout: a dropout technique that removes a contiguous region and acts only on the input layer.

Further reading: Dropout, a visual explanation and its application in DNN/CNN/RNN.

边栏推荐

- TimeCLR: A self-supervised contrastive learning framework for univariate time series representation

- latex公式正体和斜体

- [mixup] mixup: Beyond Imperial Risk Minimization

- 【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

- Implementation of yolov5 single image detection based on onnxruntime

- Interpretation of ernie1.0 and ernie2.0 papers

- 【深度学习系列(八)】:Transoform原理及实战之原理篇

- Huawei machine test questions-20190417

- MMDetection安装问题

- label propagation 标签传播

猜你喜欢

![[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization](/img/24/09ae6baee12edaea806962fc5b9a1e.png)

[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization

![[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table](/img/3f/09f040baf11ccab82f0fc7cf1e1d20.png)

[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table

机器学习理论学习:感知机

label propagation 标签传播

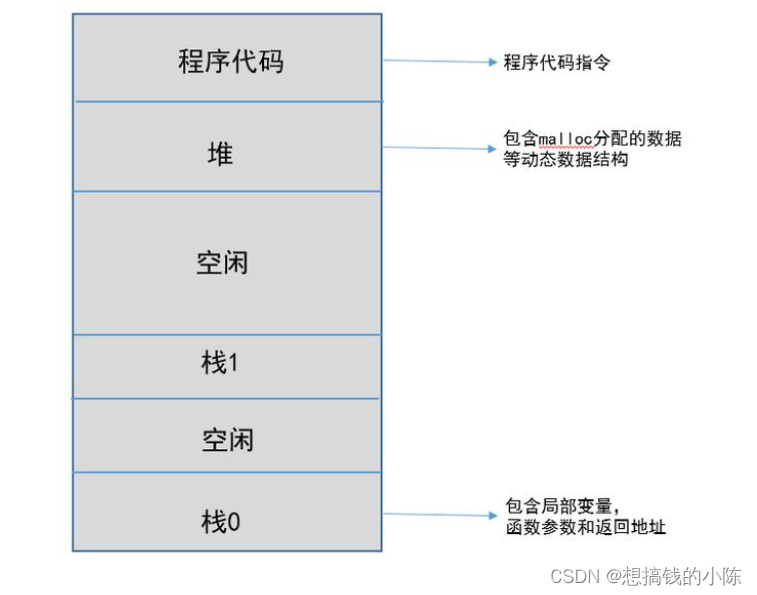

Memory model of program

【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

【Paper Reading】

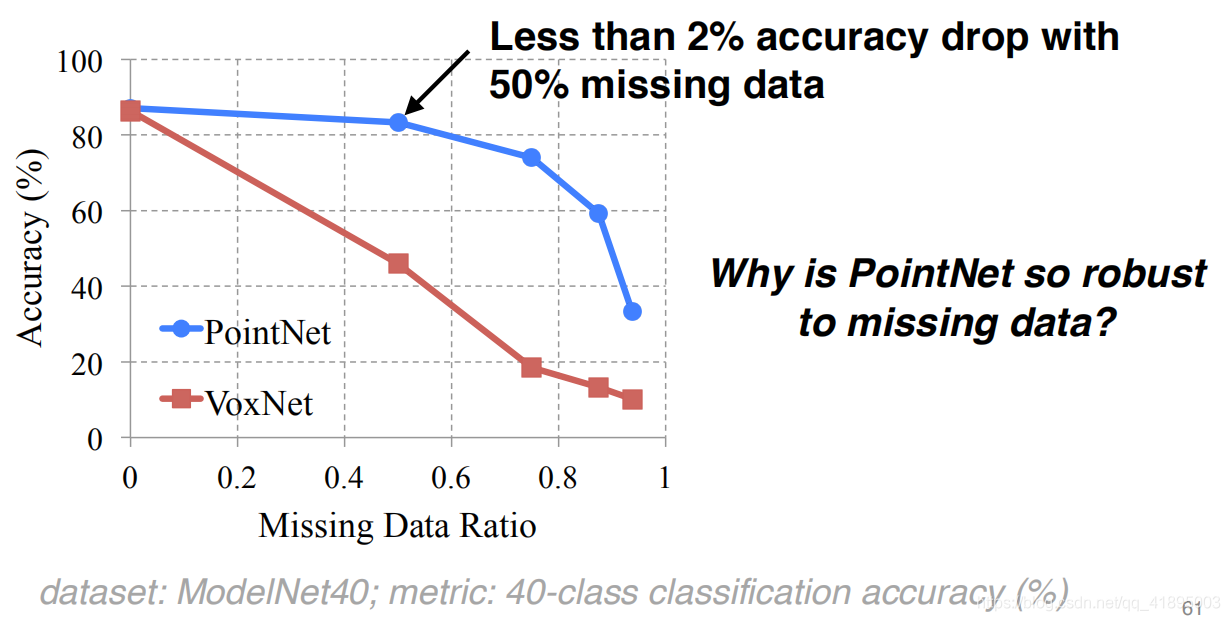

PointNet理解(PointNet实现第4步)

![[introduction to information retrieval] Chapter 6 term weight and vector space model](/img/42/bc54da40a878198118648291e2e762.png)

[introduction to information retrieval] Chapter 6 term weight and vector space model

【Programming】

随机推荐

程序的内存模型

Handwritten call, apply, bind

Generate random 6-bit invitation code in PHP

Tencent machine test questions

【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization

Get the uppercase initials of Chinese Pinyin in PHP

聊天中文语料库对比(附上各资源链接)

Conversion of numerical amount into capital figures in PHP

[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table

How to efficiently develop a wechat applet

【MEDICAL】Attend to Medical Ontologies: Content Selection for Clinical Abstractive Summarization

One field in thinkphp5 corresponds to multiple fuzzy queries

[introduction to information retrieval] Chapter 7 scoring calculation in search system

Faster-ILOD、maskrcnn_benchmark安装过程及遇到问题

Classloader and parental delegation mechanism

传统目标检测笔记1__ Viola Jones

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

[Bert, gpt+kg research] collection of papers on the integration of Pretrain model with knowledge

Huawei machine test questions-20190417