
Convolutional neural network -- convolutional layer

2022-07-05 05:33:00 【Li Junfeng】

Convolutional layer

In a convolutional neural network, the most important component is the convolutional layer, which extracts feature information from images.

Consider the simplest case first: a two-dimensional image, for which the convolution kernel is also two-dimensional.

Convolution operation

It sounds sophisticated, but it is really just a sum of products.

For example:

Suppose the image is:

| 1 | 2 | 4 |
|---|---|---|
| 3 | 5 | 4 |

The convolution kernel is:

| 6 | 1 |
|---|---|
| 4 | 4 |

Then the result of the convolution operation is:

$$
\begin{aligned}
6\times 1 + 1\times 2 + 4\times 3 + 4\times 5 &= 40 \\
6\times 2 + 1\times 4 + 4\times 5 + 4\times 4 &= 52
\end{aligned}
$$
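The arithmetic above can be reproduced with a few loops. This is only a minimal sketch: the function name `conv2d_naive` is made up for illustration, and it assumes a stride of 1 and no padding, as in the example.

```python
import numpy as np


def conv2d_naive(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products."""
    h, w = image.shape
    fh, fw = kernel.shape
    out = np.zeros((h - fh + 1, w - fw + 1), dtype=image.dtype)
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Window of the image currently covered by the kernel.
            window = image[i:i + fh, j:j + fw]
            out[i, j] = np.sum(window * kernel)
    return out


image = np.array([[1, 2, 4],
                  [3, 5, 4]])
kernel = np.array([[6, 1],
                   [4, 4]])

result = conv2d_naive(image, kernel)
print(result)  # [[40 52]]
```

The 2 × 3 image shrinks to a 1 × 2 result, which is the size change discussed next.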

Padding

The simple example above shows that convolution changes the size of the matrix. This causes a serious problem: after repeated convolutions the image becomes smaller and smaller, until the convolution can no longer be applied.

To solve this problem, we want the image size to stay the same after convolution, which requires padding the edges of the image with suitable values.
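As a small illustration, the example image can be padded with `np.pad`. Zero as the fill value and a padding width of 1 are assumptions made for this sketch; the text above does not fix either choice.

```python
import numpy as np

image = np.array([[1, 2, 4],
                  [3, 5, 4]])

pad = 1  # assumed padding width, for illustration only
# Add `pad` rows/columns of zeros on every side of the image.
padded = np.pad(image, pad, mode="constant", constant_values=0)

print(padded.shape)  # (4, 5)
print(padded)
# [[0 0 0 0 0]
#  [0 1 2 4 0]
#  [0 3 5 4 0]
#  [0 0 0 0 0]]
```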

Matrix size

How much padding the image edges need is closely tied to the size of the convolution kernel. Is there a fixed relationship behind this change in size? The answer is yes.

Let $H, W$ denote the height and width of the input matrix, $OH, OW$ the height and width of the convolution result, $FH, FW$ the height and width of the convolution kernel, $P$ the padding, and $S$ the stride.

$$
\begin{aligned}
OH &= \frac{H + 2\cdot P - FH}{S} + 1 \\
OW &= \frac{W + 2\cdot P - FW}{S} + 1
\end{aligned}
$$
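The formula is easy to check numerically. The helper name `conv_output_size` is hypothetical, and the sketch uses integer (floor) division, which assumes the division by the stride is meant to round down when it is not exact.

```python
def conv_output_size(input_size, filter_size, pad=0, stride=1):
    """Output length along one axis: (input + 2*pad - filter) // stride + 1."""
    return (input_size + 2 * pad - filter_size) // stride + 1


# The 2 x 3 image and 2 x 2 kernel from the example (no padding, stride 1).
oh = conv_output_size(2, 2)
ow = conv_output_size(3, 2)
print(oh, ow)  # 1 2

# A 3 x 3 kernel with padding 1 and stride 1 keeps the size unchanged.
same = conv_output_size(28, 3, pad=1, stride=1)
print(same)  # 28
```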

Code implementation

The principle of the convolution operation is simple, and so is the code.

A few for loops are enough, but there is a more efficient way.

We can flatten the image values covered by each position of the convolution kernel into one row, and flatten the convolution kernel into a column. A matrix multiplication then performs the convolution, and a single multiplication computes the result for the whole image.

The code for flattening the image into rows is given below.
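The idea can be checked by hand on the 2 × 3 image and 2 × 2 kernel from the earlier example. This is a simplified two-dimensional sketch (stride 1, no padding, one channel), not the general routine; the variable names are chosen for illustration.

```python
import numpy as np

image = np.array([[1, 2, 4],
                  [3, 5, 4]])
kernel = np.array([[6, 1],
                   [4, 4]])

fh, fw = kernel.shape
out_h = image.shape[0] - fh + 1
out_w = image.shape[1] - fw + 1

# One row per kernel position: the flattened window it covers.
rows = np.array([image[i:i + fh, j:j + fw].ravel()
                 for i in range(out_h)
                 for j in range(out_w)])
print(rows)
# [[1 2 3 5]
#  [2 4 5 4]]

# The kernel flattened into a single column.
column = kernel.reshape(-1, 1)

# A single matrix multiplication gives every output value at once.
result = (rows @ column).reshape(out_h, out_w)
print(result)  # [[40 52]]
```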

    import numpy as np


    def im2col(input_data, filter_h, filter_w, stride=1, pad=0):
        """Unfold the image patches covered by the filter into rows.

        Parameters
        ----------
        input_data : 4-D array of shape (batch size, channels, height, width)
        filter_h : height of the filter
        filter_w : width of the filter
        stride : stride
        pad : padding

        Returns
        -------
        col : 2-D array
        """
        N, C, H, W = input_data.shape
        out_h = (H + 2*pad - filter_h)//stride + 1
        out_w = (W + 2*pad - filter_w)//stride + 1

        # Zero-pad only the two spatial axes.
        img = np.pad(input_data, [(0,0), (0,0), (pad, pad), (pad, pad)], 'constant')
        col = np.zeros((N, C, filter_h, filter_w, out_h, out_w))

        # For each offset (y, x) inside the filter, copy the strided slice
        # of the image that this filter element touches.
        for y in range(filter_h):
            y_max = y + stride*out_h
            for x in range(filter_w):
                x_max = x + stride*out_w
                col[:, :, y, x, :, :] = img[:, :, y:y_max:stride, x:x_max:stride]

        # One row per output position, one column per (channel, y, x) filter element.
        col = col.transpose(0, 4, 5, 1, 2, 3).reshape(N*out_h*out_w, -1)
        return col


    def col2im(col, input_shape, filter_h, filter_w, stride=1, pad=0):
        """Fold a 2-D array of patch rows back into image form.

        Parameters
        ----------
        col : 2-D array with the row layout produced by im2col
        input_shape : shape of the input data (example: (10, 1, 28, 28))
        filter_h : height of the filter
        filter_w : width of the filter
        stride : stride
        pad : padding

        Returns
        -------
        img : 4-D array of shape input_shape
        """
        N, C, H, W = input_shape
        out_h = (H + 2*pad - filter_h)//stride + 1
        out_w = (W + 2*pad - filter_w)//stride + 1
        col = col.reshape(N, out_h, out_w, C, filter_h, filter_w).transpose(0, 3, 4, 5, 1, 2)

        # Buffer for the padded image, with a margin of stride - 1.
        img = np.zeros((N, C, H + 2*pad + stride - 1, W + 2*pad + stride - 1))
        for y in range(filter_h):
            y_max = y + stride*out_h
            for x in range(filter_w):
                x_max = x + stride*out_w
                # Overlapping patches write to the same pixels, so values are accumulated.
                img[:, :, y:y_max:stride, x:x_max:stride] += col[:, :, y, x, :, :]

        # Crop the padding off again.
        return img[:, :, pad:H + pad, pad:W + pad]

边栏推荐

- [speed pointer] 142 circular linked list II

- Haut OJ 1241: League activities of class XXX

- Add level control and logger level control of Solon logging plug-in

- YOLOv5添加注意力机制

- 剑指 Offer 53 - II. 0~n-1中缺失的数字

- Introduction to tools in TF-A

- 全国中职网络安全B模块之国赛题远程代码执行渗透测试 //PHPstudy的后门漏洞分析

- Pointnet++ learning

- Reflection summary of Haut OJ freshmen on Wednesday

- [binary search] 34 Find the first and last positions of elements in a sorted array

猜你喜欢

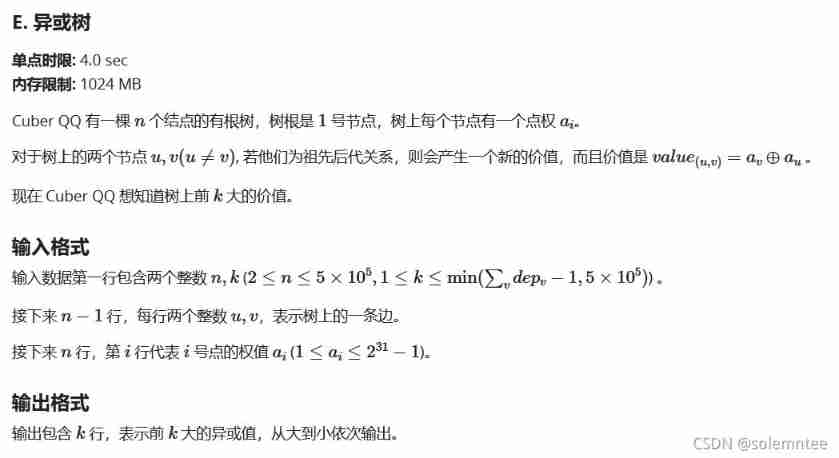

EOJ 2021.10 E. XOR tree

Corridor and bridge distribution (csp-s-2021-t1) popular problem solution

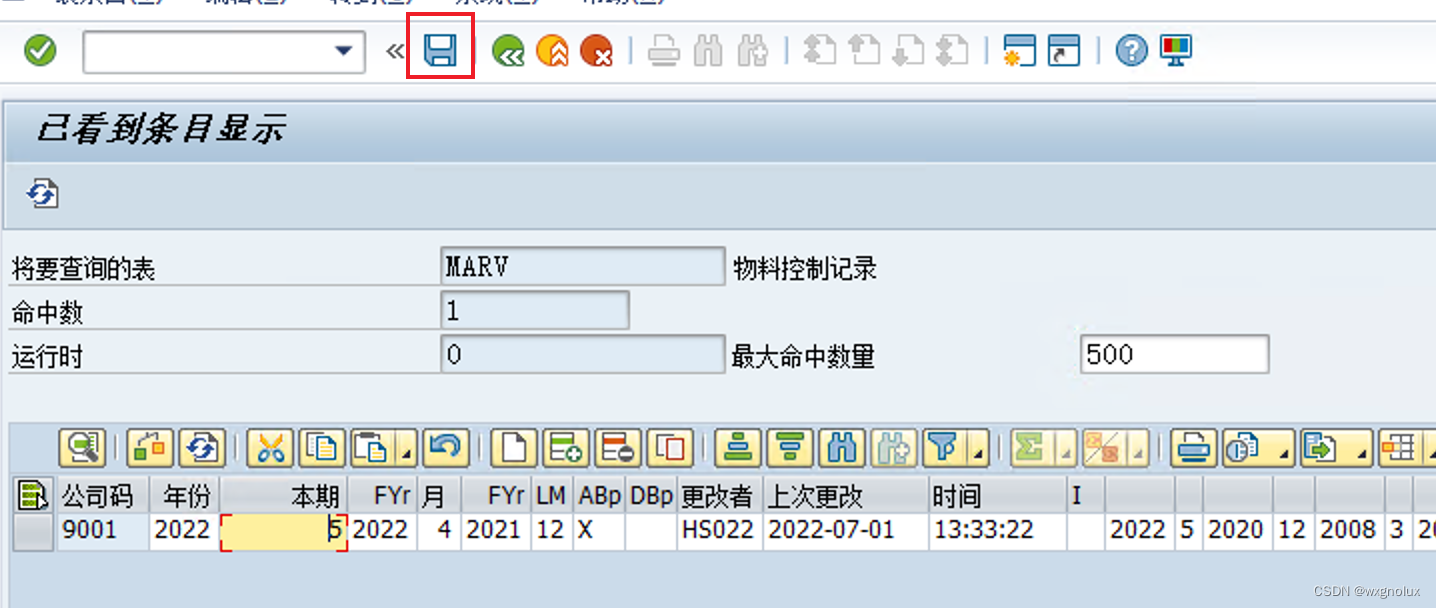

SAP method of modifying system table data

Double pointer Foundation

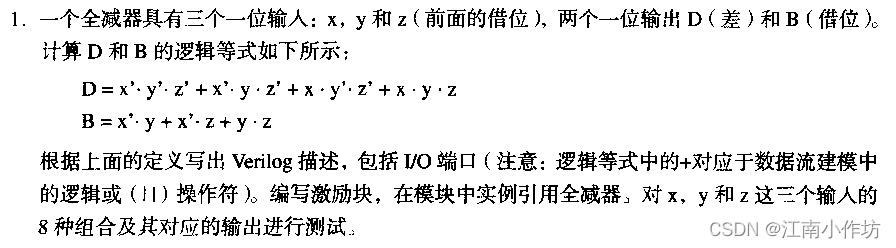

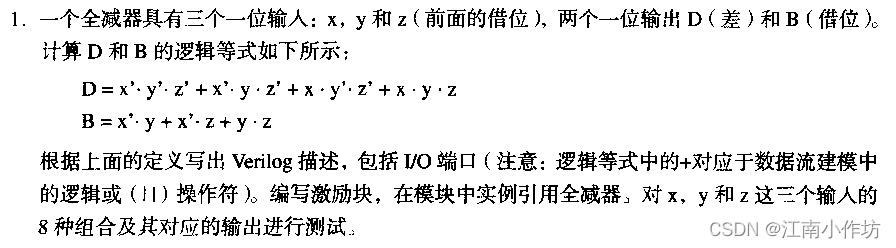

第六章 数据流建模—课后习题

Chapter 6 data flow modeling - after class exercises

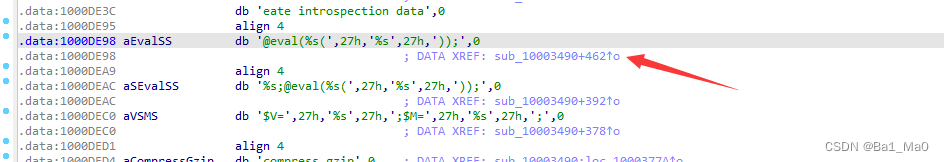

全国中职网络安全B模块之国赛题远程代码执行渗透测试 //PHPstudy的后门漏洞分析

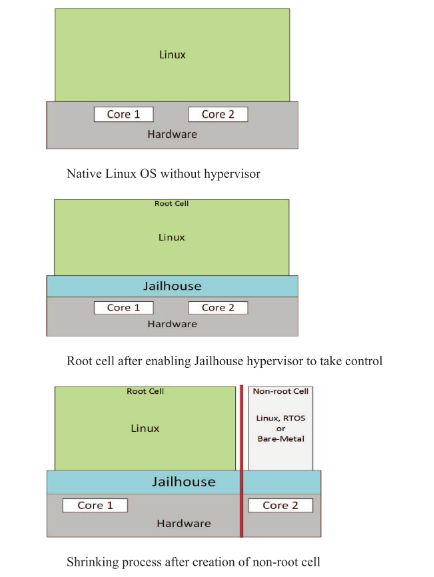

【Jailhouse 文章】Performance measurements for hypervisors on embedded ARM processors

![[to be continued] [depth first search] 547 Number of provinces](/img/c4/b4ee3d936776dafc15ac275d2059cd.jpg)

[to be continued] [depth first search] 547 Number of provinces

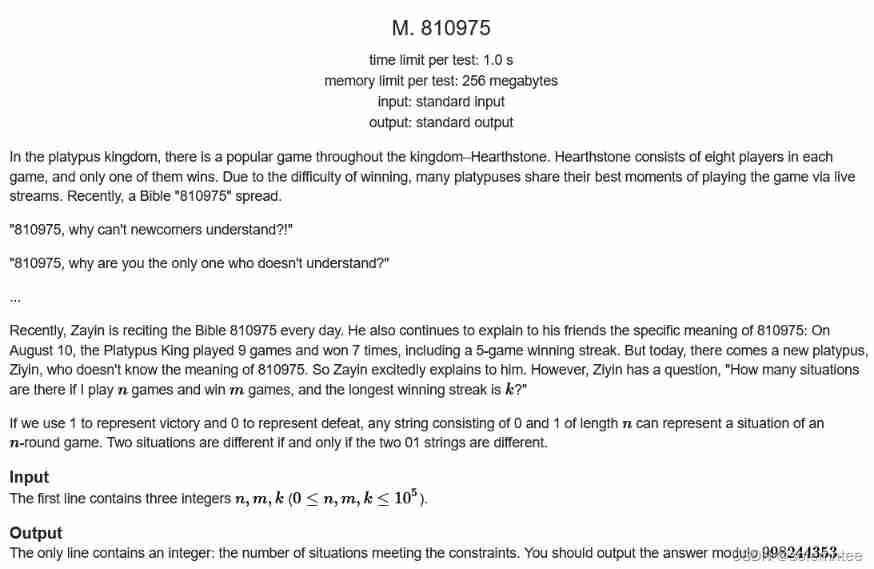

CCPC Weihai 2021m eight hundred and ten thousand nine hundred and seventy-five

随机推荐

Remote upgrade afraid of cutting beard? Explain FOTA safety upgrade in detail

Sword finger offer 09 Implementing queues with two stacks

Zzulioj 1673: b: clever characters???

Talking about JVM (frequent interview)

lxml. etree. XMLSyntaxError: Opening and ending tag mismatch: meta line 6 and head, line 8, column 8

Web APIs DOM node

Maximum number of "balloons"

[merge array] 88 merge two ordered arrays

【Jailhouse 文章】Jailhouse Hypervisor

软件测试 -- 0 序

Pointnet++学习

剑指 Offer 35.复杂链表的复制

Palindrome (csp-s-2021-palin) solution

Fragment addition failed error lookup

Introduction to tools in TF-A

Hang wait lock vs spin lock (where both are used)

2020ccpc Qinhuangdao J - Kingdom's power

YOLOv5添加注意力机制

Support multi-mode polymorphic gbase 8C database continuous innovation and heavy upgrade

智慧工地“水电能耗在线监测系统”