U.S. Air Force Research Laboratory: "Exploring the Vulnerability and Robustness of Deep Learning Systems", an 85-page technical report (2022)

2022-07-07 03:24:00 【Zhiyuan community】

Deep neural networks have pushed the performance of modern computer vision systems to a new level on a variety of challenging tasks. Although they bring great gains in accuracy and efficiency, the highly parameterized, nonlinear nature of deep networks makes them very hard to interpret and prone to failure in the presence of adversaries or anomalous data. This vulnerability makes it worrying to integrate these models into real-world systems. The project has two main thrusts: (1) exploring the vulnerability of deep neural networks by developing state-of-the-art adversarial attacks; and (2) improving model robustness in challenging operating environments (for example, open-world target recognition and federated learning scenarios). The effort produced nine publications in total, each of which advanced the state of the art in its field.

Deep neural networks (DNNs) have driven great progress in machine learning, and in computer vision in particular. Most recent research on these models aims to improve task accuracy and efficiency, while the robustness of deep networks remains poorly understood. The highly parameterized nature of deep networks is both a blessing and a curse. On one hand, it enables performance far beyond that of traditional machine learning models. On the other hand, DNNs are very difficult to interpret and cannot provide an accurate notion of uncertainty. It is therefore important to keep studying and probing the vulnerabilities of these models before integrating them into our most trusted systems.

Our first main line of research explores the fragility of DNNs by crafting powerful adversarial attacks against a variety of models. From the attacker's perspective, adversarial attacks are not only attention-grabbing; they are also a tool for better understanding and explaining complex model behavior. Adversarial attacks also provide a challenging robustness benchmark against which future work can be tested. Our philosophy is that, to create highly robust models, we must first try to fully understand all the ways in which they can currently fail. Section 3.1 gives the motivation and explanation for each work. In Section 3.1.1, we first discuss an early project on efficient model poisoning attacks, which highlights a key weakness of models whose training pipeline is exposed. Next, we present a series of research projects that introduce and build on the new idea of feature space attacks. Such attacks prove to be much more powerful than existing output space attacks in the more realistic black-box attack setting. These papers are covered in Sections 3.1.2-3.2.4. In Section 3.1.5, we consider a previously unconsidered attack setting in which there is no class distribution overlap between the black-box target model and the attacker's source model. We show that, even in this challenging case, an adaptation of our feature distribution attack can pose a major threat to the black-box model. Finally, Section 3.1.6 covers a new class of black-box adversarial attacks against reinforcement learning agents, an unexplored area that is becoming increasingly popular in control-based applications. Note that the experiments, results, and analysis for these projects are presented in the corresponding parts of Section 4.0.

The goal of our second research direction is to directly enhance the robustness of DNNs. As detailed in the first thrust, adversarial attacks currently pose a major risk to DNN-based systems. Before we can trust these models enough to integrate them into our most trusted systems (such as defense technology), we must make sure we account for all possible forms of data corruption and variation. In Section 3.2.1, the first case we consider is formulating a principled defense against data inversion attacks in a distributed learning setting. Then, in Section 3.2.2, we greatly improve the accuracy and robustness of automatic target recognition (ATR) models in open-world environments, where we cannot guarantee that incoming data will belong to the categories in the training distribution. In Section 3.2.3, we go a step further and develop a memory-limited online learning algorithm that uses samples from the deployment environment to enhance the robustness of ATR models in open-world settings. Again, the experiments, results, and discussion for these works are included in Section 4.0.

https://apps.dtic.mil/sti/pdfs/AD1170105.pdf

边栏推荐

- Sub pixel corner detection opencv cornersubpix

- 美国空军研究实验室《探索深度学习系统的脆弱性和稳健性》2022年最新85页技术报告

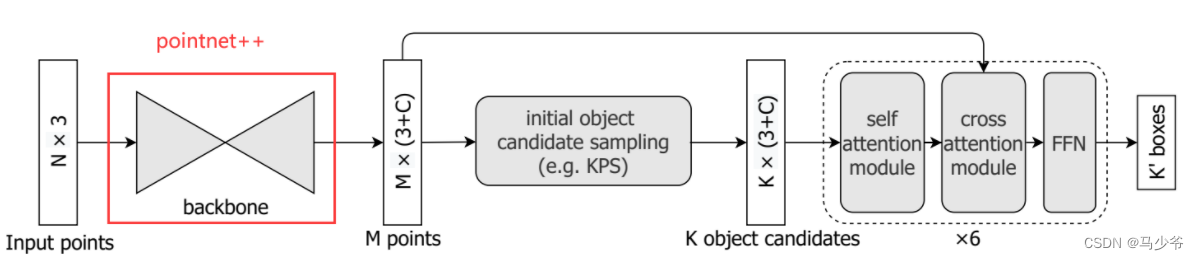

- How to replace the backbone of the model

- Centerx: open centernet in the way of socialism with Chinese characteristics

- 【colmap】已知相机位姿情况下进行三维重建

- leetcode

- 【C语言】 题集 of Ⅸ

- Another million qubits! Israel optical quantum start-up company completed $15million financing

- Jerry's phonebook acquisition [chapter]

- 从0开始创建小程序

猜你喜欢

![Jericho is in non Bluetooth mode. Do not jump back to Bluetooth mode when connecting the mobile phone [chapter]](/img/ce/baa4acb1b4bfc19ccf8982e1e320b2.png)

Jericho is in non Bluetooth mode. Do not jump back to Bluetooth mode when connecting the mobile phone [chapter]

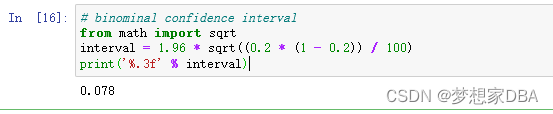

About Confidence Intervals

Centerx: open centernet in the way of socialism with Chinese characteristics

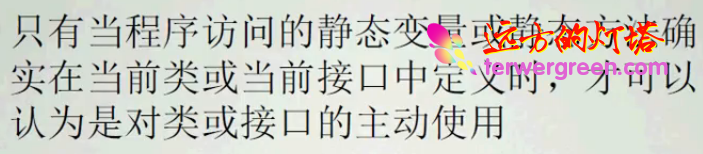

Depth analysis of compilation constants, classloader classes, and system class loaders

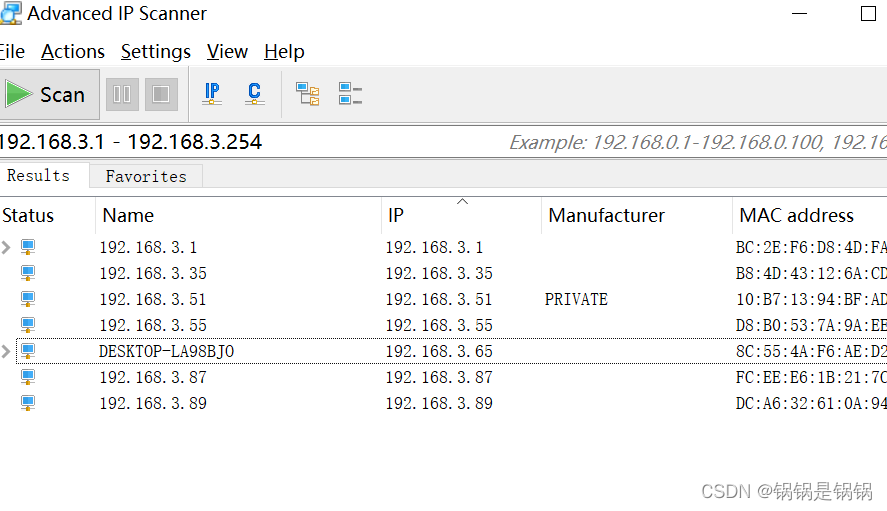

树莓派设置wifi自动连接

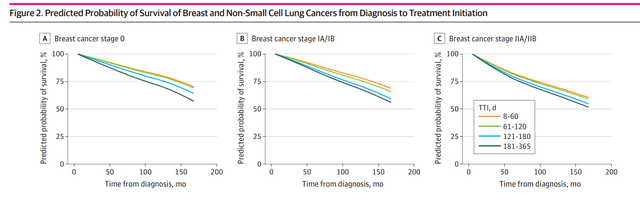

R数据分析:cox模型如何做预测,高分文章复现

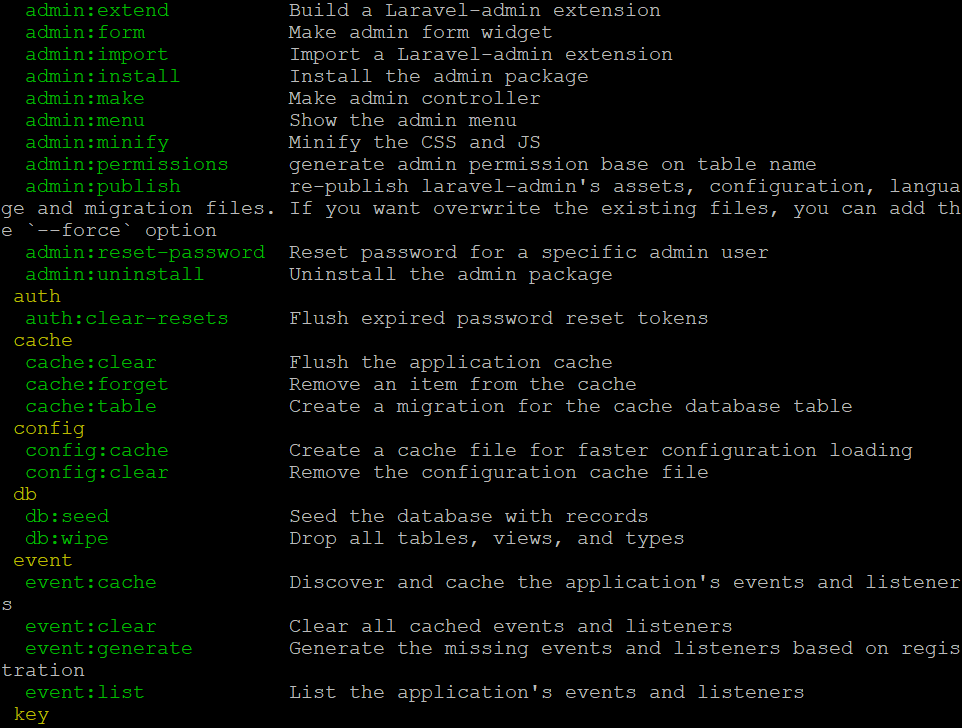

Lavel PHP artisan automatically generates a complete set of model+migrate+controller commands

![Jerry's broadcast has built-in flash prompt tone to control playback pause [chapter]](/img/8c/e8f7e667e4762a4815e97c36a2759f.png)

Jerry's broadcast has built-in flash prompt tone to control playback pause [chapter]

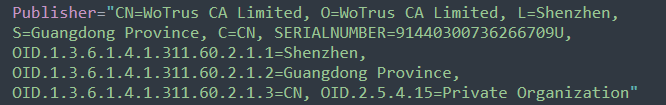

Appx code signing Guide

如何替换模型的骨干网络(backbone)

随机推荐

Jerry's ble exiting Bluetooth mode card machine [chapter]

CVPR 2022 最佳论文候选 | PIP: 6个惯性传感器实现全身动捕和受力估计

函数重入、函数重载、函数重写自己理解

23.(arcgis api for js篇)arcgis api for js椭圆采集(SketchViewModel)

Jerry's transmitter crashed after the receiver shut down [chapter]

Variables, process control and cursors (MySQL)

数学归纳与递归

About Confidence Intervals

Codeforces Round #264 (Div. 2) C Gargari and Bishops 【暴力】

unrecognized selector sent to instance 0x10b34e810

Jericho turns on the display icon of the classic Bluetooth hid mobile phone to set the keyboard [chapter]

Create applet from 0

迷失在MySQL的锁世界

Codeforces round 264 (Div. 2) C gargari and Bishop [violence]

源代码保密的意义和措施

Hazel engine learning (V)

美国空军研究实验室《探索深度学习系统的脆弱性和稳健性》2022年最新85页技术报告

VHDL实现单周期CPU设计

The solution of unable to create servlet file after idea restart

25.(arcgis api for js篇)arcgis api for js线修改线编辑(SketchViewModel)