
How to choose cache read/write strategies for different business scenarios?

2022-07-03 20:39:00 (Hollis Chuang)

Source: Glacier Technology

Let's talk about cache read/write strategies. You may think reading and writing a cache is simple: read the cache first, and on a miss query the database, put the result into the cache and return it. In fact, different business scenarios call for different cache read/write strategies.

When choosing a strategy we also need to weigh several factors, for instance: whether dirty data may end up in the cache, how well the strategy performs for reads and writes, and whether it lowers the cache hit rate.

Next, using the standard "cache + database" setup as an example, I will walk you through the classic cache read/write strategies and the scenarios each one suits, so that in your daily work you can pick the strategy that fits the scenario.

Cache Aside (Bypass Cache) Strategy

Let's start with the simplest business scenario. Suppose your e-commerce system has a user table with only two columns, ID and age, and the cache stores each user's age with the ID as the key. If we want to change the age of the user with ID 1 from 19 to 20, how should we do it?

You might think: first update the record with ID 1 in the database, then update the cache entry whose key is 1.

This approach can leave the cache and the database inconsistent. For example, request A changes the age of user 1 in the database from 19 to 20. Meanwhile, request B also updates user 1: it sets the age in the database to 21 and then sets the age in the cache to 21. Only then does request A update the cache, setting the age there to 20. Now the database says the user is 21, while the cache says 20.
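The interleaving above can be replayed deterministically. In this minimal sketch, two plain dicts named `db` and `cache` stand in for the database and the cache (an assumption for illustration), and the steps of requests A and B are executed in the exact order described.

```python
# Plain dicts stand in for the database and the cache (illustrative assumption).
db = {1: 19}
cache = {1: 19}

def update_db(user_id, age):
    db[user_id] = age

def update_cache(user_id, age):
    cache[user_id] = age

# Request A writes the database first...
update_db(1, 20)
# ...then request B runs both of its steps...
update_db(1, 21)
update_cache(1, 21)
# ...and only then does request A reach its cache update.
update_cache(1, 20)

print(db[1], cache[1])  # 21 20: the cache now disagrees with the database
```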

Why does this happen? Because updating the database and updating the cache are two independent operations, and we apply no concurrency control to them. When two threads update both at the same time, the different order in which their writes land leaves the data inconsistent.

Directly updating the cache has another problem: lost updates. Take the e-commerce system again and suppose its account table has three fields: ID, account name and amount. This time the cache stores the complete account record, not just the amount. To update the amount in the cache, you must read the full account record from the cache, change the amount, and then write the record back to the cache.

This process also has a concurrency problem. Say the original amount is 20. Request A reads the record from the cache and adds 1, making it 21. Before A writes it back, request B also reads the cached record and adds 1, also getting 21. Both requests then write 21 back to the cache. The cached amount ends up as 21, although we expected the two increments to add 2 in total. That is a serious problem.
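The lost update comes from an unsynchronized read-modify-write on a cached record. This sketch replays it with a dict standing in for the cache; the key `"account:1"` and the account name are hypothetical values chosen for illustration.

```python
# A dict stands in for the cache; the key and field values are hypothetical.
cache = {"account:1": {"id": 1, "name": "alice", "amount": 20}}

# Both requests read the full account record before either one writes back.
snapshot_a = dict(cache["account:1"])
snapshot_b = dict(cache["account:1"])

# Each request adds 1 to its own copy.
snapshot_a["amount"] += 1
snapshot_b["amount"] += 1

# Both write the whole record back; B's write silently overwrites A's.
cache["account:1"] = snapshot_a
cache["account:1"] = snapshot_b

print(cache["account:1"]["amount"])  # 21, although two increments were applied
```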

How do we solve this? Instead of updating the cache when we update the data, we delete the cached entry. When a later read finds nothing in the cache, it reads the data from the database and writes it into the cache.

This is the caching strategy we use most often: the Cache Aside strategy (also called the bypass cache strategy). Under it, the database is the source of truth, and data is loaded into the cache on demand. It consists of a read strategy and a write strategy. The read strategy's steps are:

Read the data from the cache;

If the cache hits, return the data directly;

If the cache misses, query the data from the database;

After the data is found, write it to the cache and return it to the user.

The write strategy's steps are:

Update the record in the database;

Delete the cache entry.
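The read and write steps above can be sketched as a small class. This is a minimal, single-process sketch, not a production client: plain dicts stand in for the database and for a distributed cache such as Redis, and the class name `CacheAside` is hypothetical.

```python
class CacheAside:
    """Cache Aside sketch: the database is the source of truth, the cache is filled on demand."""

    def __init__(self, db):
        self.db = db        # dict standing in for the database (assumption)
        self.cache = {}     # dict standing in for a distributed cache (assumption)

    def read(self, key):
        if key in self.cache:           # 1-2. Try the cache; on a hit, return directly.
            return self.cache[key]
        value = self.db.get(key)        # 3. On a miss, query the database.
        if value is not None:
            self.cache[key] = value     # 4. Populate the cache, then return.
        return value

    def write(self, key, value):
        self.db[key] = value            # 1. Update the database first.
        self.cache.pop(key, None)       # 2. Then delete (not update) the cache entry.


store = CacheAside({1: 19})
print(store.read(1))      # 19, loaded from the database and cached
store.write(1, 20)
print(1 in store.cache)   # False: the entry was invalidated
print(store.read(1))      # 20, reloaded on the next read
```

Deleting rather than updating on the write path is what sidesteps both the write-ordering race and the lost-update problem described earlier, since no request ever writes a computed value into the cache on the write path.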

You might ask: in the write strategy, can we delete the cache first and then update the database? The answer is no, because that can also leave the cache inconsistent. Let me use the user table scenario to explain.

Suppose a user's age is 20 and request A wants to update it to 21, so it first deletes the cache entry. At that moment request B reads this user's age: it misses the cache, reads 20 from the database and writes it into the cache. Then request A goes on to update the database, setting the age to 21. The cache and the database are now inconsistent.
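Replaying the "delete the cache first, then update the database" ordering step by step shows where the stale value comes from. As before, two dicts are an illustrative stand-in for the real stores.

```python
db = {1: 20}     # stands in for the database (assumption)
cache = {1: 20}  # stands in for the cache (assumption)

# Request A deletes the cache entry first...
cache.pop(1, None)
# ...request B misses the cache, reads the old value from the database and caches it...
if 1 not in cache:
    cache[1] = db[1]
# ...then request A finally updates the database.
db[1] = 21

print(db[1], cache[1])  # 21 20
```

The stale entry stays until something else deletes it or it expires, which is why the Cache Aside write path updates the database before deleting the cache.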

So with the Cache Aside order, updating the database first and then deleting the cache, is there no problem at all? In theory there is still a flaw.

Suppose a user's data is not in the cache. Request A reads the age from the database and gets 20. Before A writes it to the cache, request B updates the data: it sets the age in the database to 21 and clears the cache. Then request A writes the age 20 that it read earlier into the cache, leaving the cache inconsistent with the database.
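The residual race in Cache Aside needs a slow reader and a fast writer to interleave in one specific order. The sketch below forces that order by hand, using dicts as stand-ins for the database and the cache (an assumption for illustration).

```python
db = {1: 20}  # stands in for the database (assumption)
cache = {}    # the user's data is not cached yet

# Request A misses the cache and reads 20 from the database...
value_read_by_a = db[1]
# ...before A writes it back, request B updates the database and deletes the cache entry...
db[1] = 21
cache.pop(1, None)
# ...then A writes the value it read earlier into the cache.
cache[1] = value_read_by_a

print(db[1], cache[1])  # 21 20
```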

However, this is unlikely in practice. Cache writes are usually much faster than database writes, so it is very rare for request B to finish updating the database and clearing the cache before request A has written to the cache. And if request A updates the cache before request B clears it, the next request will find the cache empty and reload the data from the database, so this inconsistency does not occur.

Cache Aside is the caching strategy we use most in daily development, but we should adapt it to the situation. Take registering a new user: following this write strategy, you write to the database and then clear the cache (although there is of course nothing in the cache to clear yet). If you read the user's information right after registering, and the database uses primary/replica (master-slave) read/write separation, replication lag may mean the user's information cannot be read yet.

The fix is to write to the cache right after inserting the new data into the database, so that subsequent reads are served from the cache. Because the user has only just registered, there will be no concurrent updates to their information.
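A minimal sketch of the registration special case, assuming reads go to a read replica that has not yet caught up with the primary. The class `UserStore` and its three dicts (`primary`, `replica`, `cache`) are hypothetical stand-ins; replication is deliberately never performed, to model lag.

```python
class UserStore:
    """Hypothetical store: writes hit the primary, reads go to a lagging replica."""

    def __init__(self):
        self.primary = {}   # stands in for the primary database (assumption)
        self.replica = {}   # stands in for a read replica that has not caught up (assumption)
        self.cache = {}

    def register(self, user_id, profile):
        self.primary[user_id] = profile   # insert the new user into the primary
        self.cache[user_id] = profile     # brand-new row: no concurrent updater, so populate the cache
        # Replication to self.replica is intentionally not modelled here (simulated lag).

    def get(self, user_id):
        if user_id in self.cache:
            return self.cache[user_id]
        value = self.replica.get(user_id)  # a cache miss would read the stale replica
        if value is not None:
            self.cache[user_id] = value
        return value


store = UserStore()
store.register(42, {"age": 20})
print(store.get(42))  # {'age': 20}, served from the cache despite replica lag
```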

The biggest problem with Cache Aside is that when writes are frequent, cached data is cleared frequently, which hurts the cache hit rate. If your business has strict hit rate requirements, you can consider two solutions:

One is to update the cache when updating the data, but acquire a distributed lock before doing so. Because only one thread at a time is allowed to update the cache, there is no concurrency problem. This does, of course, cost some write performance;
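The locking approach can be sketched as follows. In a real deployment the lock would be a distributed lock shared by all application instances; here a process-local `threading.Lock` stands in for it so the sketch is runnable, which is an assumption, not a distributed implementation. The lock is held across both the database write and the cache write, so that requests update the cache in the same order they update the database.

```python
import threading

db = {}                          # stands in for the database (assumption)
cache = {}                       # stands in for the cache (assumption)
update_lock = threading.Lock()   # process-local stand-in for a distributed lock (assumption)

def update_user_age(user_id, age):
    # Holding the lock across both writes means the last writer to the
    # database is also the last writer to the cache.
    with update_lock:
        db[user_id] = age
        cache[user_id] = age

threads = [threading.Thread(target=update_user_age, args=(1, age)) for age in range(20, 30)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(db[1] == cache[1])  # True
```

Every writer now waits on the lock, which is the write-performance cost mentioned above.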

The other is also to update the cache when updating the data, but give the cached entry a short expiration time. That way, even if the cache becomes inconsistent, the cached data expires soon and the impact on the business stays acceptable.
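The short-expiration approach can be sketched with a tiny in-memory TTL cache. With Redis this would typically be a `SET` with an `EX` expiry; here the `TTLCache` class, the 5-second TTL and the injectable clock are all illustrative assumptions, with the clock faked so expiry can be shown without sleeping.

```python
import time

class TTLCache:
    """In-memory cache whose entries expire ttl_seconds after being written."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock              # injectable so expiry can be demonstrated without sleeping
        self._data = {}                 # key -> (value, expires_at)

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]         # expired: the next read must go back to the database
            return None
        return value


db = {}  # stands in for the database (assumption)

def update_user_age(cache, user_id, age):
    db[user_id] = age                   # write the database first
    cache.set(user_id, age)             # then update (not delete) the cache, with a short TTL


now = [0.0]                             # fake clock for the demo
cache = TTLCache(ttl_seconds=5, clock=lambda: now[0])
update_user_age(cache, 1, 20)
print(cache.get(1))                     # 20
now[0] = 6.0
print(cache.get(1))                     # None: the possibly stale entry has expired
```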

Of course, besides this strategy there are several other classic caching strategies in computing, each with its own use cases.

Read/Write Through (Read-Through / Write-Through) Strategy

The core principle of this strategy is that the user deals only with the cache, and the cache communicates with the database to write or read data. It is like reporting on your work: you report only to your direct manager, who in turn reports to their own manager; you cannot skip levels.

Write Through works like this: first check whether the data to be written already exists in the cache. If it does, update the data in the cache, and the cache component synchronously updates the database. If the data is not in the cache, we call this situation a "Write Miss".

Generally, there are two ways to handle a Write Miss:

One is "Write Allocate": write the data into the corresponding location in the cache, and have the cache component synchronously update the database;

The other is "No-write allocate": do not write to the cache; update the database directly.

In the Write Through strategy we usually choose No-write allocate. Whichever way a Write Miss is handled, the data must be written to the database synchronously, and No-write allocate saves one cache write compared with Write Allocate, which improves write performance.
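The Write Through write path with No-write allocate can be sketched as below. `WriteThroughCache` is a hypothetical class, and dicts stand in for the cache component and the database; in a real system the synchronous database write would be done by the cache component itself.

```python
class WriteThroughCache:
    """Hypothetical Write Through cache using No-write allocate on a Write Miss."""

    def __init__(self, db):
        self.db = db        # dict standing in for the database (assumption)
        self.cache = {}

    def write(self, key, value):
        if key in self.cache:
            # Write hit: update the cache, then synchronously update the database.
            self.cache[key] = value
            self.db[key] = value
        else:
            # Write miss with No-write allocate: skip the cache, write only the database.
            self.db[key] = value


db = {"a": 1}
c = WriteThroughCache(db)
c.cache["a"] = 1      # pretend "a" is already cached
c.write("a", 2)       # hit: both cache and database updated
c.write("b", 3)       # miss: only the database is updated
print(db, c.cache)    # {'a': 2, 'b': 3} {'a': 2}
```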

Read Through is simpler. Its steps are: first check whether the data exists in the cache; if it does, return it directly; if not, the cache component is responsible for synchronously loading it from the database.
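A Read Through cache hides the database behind a loader function supplied when the cache is built, loosely in the spirit of Guava's LoadingCache. This Python sketch does not reproduce Guava's API; `ReadThroughCache` and `load_from_db` are hypothetical names, and a dict stands in for the database.

```python
from typing import Any, Callable, Dict, Hashable

class ReadThroughCache:
    """Hypothetical Read Through cache: callers only ever talk to the cache."""

    def __init__(self, loader: Callable[[Hashable], Any]):
        self._loader = loader
        self._data: Dict[Hashable, Any] = {}

    def get(self, key: Hashable) -> Any:
        if key in self._data:
            return self._data[key]      # hit: return directly
        value = self._loader(key)       # miss: the cache, not the caller, loads from the database
        self._data[key] = value
        return value


db = {1: 19}   # stands in for the database (assumption)
calls = []

def load_from_db(key):
    calls.append(key)                   # record each database access
    return db[key]

cache = ReadThroughCache(load_from_db)
print(cache.get(1), cache.get(1))       # 19 19
print(calls)                            # [1]: the database was queried only once
```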

The following is a schematic diagram of the Read Through/Write Through strategy:

The defining feature of Read Through/Write Through is that the cache node, not the user, deals with the database. This strategy is less common in our development work, because the distributed cache components we usually use, whether Memcached or Redis, provide neither writing to the database nor automatically loading data from it. We can consider this strategy when using a local cache; for example, the LoadingCache in Guava Cache, mentioned in the previous section, shows traces of the Read Through strategy.

Notice that in the Write Through strategy the database is written synchronously, which has a large impact on performance, because synchronous database writes have much higher latency than cache writes. Can we update the database asynchronously instead? That is the "Write Back" strategy we discuss next.

Write Back Strategy

The core idea of this strategy is that a write goes only to the cache, and the cache block is marked "dirty". A dirty block's data is written to back-end storage only when that block is reused.

Note that on a Write Miss we use Write Allocate: the data is written to the cache at the same time as it is written to back-end storage, so that subsequent write requests only need to update the cache and not the back-end storage. The schematic diagram of the Write Back strategy is placed below:

With the Write Back strategy, the read strategy changes as well. On a read, if the cache hits, we return the cached data directly. On a miss, we look for an available cache block. If that block is dirty, we first write its previous contents to back-end storage and then load the requested data from back-end storage into the block; if it is not dirty, the cache component simply loads the data from back-end storage into the block. Finally we mark the block as not dirty and return the data.
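A tiny direct-mapped cache makes the dirty-block mechanics concrete. This is a sketch of the textbook write-back cache with write-allocate, under stated assumptions: `WriteBackCache` is a hypothetical class, a dict stands in for slow back-end storage, and keys are small integers so that `hash(key) % num_slots` picks the slot deterministically. On a miss, a dirty victim is flushed first, the requested key is loaded and marked clean; a write then updates only the cache and marks the block dirty.

```python
class WriteBackCache:
    """Direct-mapped write-back cache sketch: each slot holds one key."""

    def __init__(self, backing, num_slots=2):
        self.backing = backing                  # dict standing in for slow storage (assumption)
        self.slots = [None] * num_slots         # each slot: [key, value, dirty] or None

    def _claim(self, key):
        """Return the slot for `key`, flushing a dirty victim and loading `key` on a miss."""
        i = hash(key) % len(self.slots)
        slot = self.slots[i]
        if slot is not None and slot[0] == key:
            return slot                         # hit
        if slot is not None and slot[2]:
            self.backing[slot[0]] = slot[1]     # victim is dirty: write it back first
        slot = [key, self.backing.get(key), False]  # load from back-end storage, mark clean
        self.slots[i] = slot
        return slot

    def read(self, key):
        return self._claim(key)[1]

    def write(self, key, value):
        slot = self._claim(key)                 # write allocate on a miss
        slot[1] = value
        slot[2] = True                          # only the cache is updated; mark the block dirty


storage = {0: "a", 2: "b"}
c = WriteBackCache(storage, num_slots=2)
c.write(0, "A")
print(storage[0])   # a: the write has not reached storage yet
print(c.read(2))    # b: key 2 reuses slot 0, so the dirty value for key 0 is flushed
print(storage[0])   # A
```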

Have you noticed? This strategy cannot really be applied to our usual database-plus-cache scenario. It is a design from computer architecture; for example, it is used when writing data to disk. The operating system's Page Cache, asynchronous flushing of logs to disk, and asynchronous persistence of messages to disk in message queues mostly use this strategy. Its performance advantage is beyond doubt: it avoids the random writes caused by writing directly to disk, and the latency of writing to memory differs from that of random disk I/O by several orders of magnitude.

However, caches generally live in memory, and memory is not persistent, so if the cache machine loses power, the data in dirty blocks is lost. That is why, after a system loses power, you may find that some previously written file data is missing: the Page Cache had not yet been flushed to disk.

Of course, you can still use this strategy in some scenarios. My practical advice is: when writing data to a slow device, you can hold it in memory for a while, perhaps computing some statistical summaries, and then flush it to the slow device periodically. For instance, when measuring your interface's response time, you might print each request's response time to a log and let the monitoring system collect the logs and compute statistics. But logging every request undoubtedly increases disk I/O, so it is better to keep response times in memory for a period, compute simple statistics such as the average latency and the number of requests in each latency range, and then write them to the log in batches on a schedule.
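The response-time advice can be sketched as an in-memory aggregator that is flushed periodically. The class name `ResponseTimeAggregator`, the bucket boundaries and the output format are all hypothetical; in practice `flush()` would be called on a timer and its line written to the log, which this sketch leaves out.

```python
import statistics
from collections import Counter

class ResponseTimeAggregator:
    """Hypothetical helper: buffer latencies in memory, emit one summary line per flush."""

    def __init__(self, buckets_ms=(10, 50, 100, 500)):
        self.buckets_ms = buckets_ms
        self.samples = []

    def record(self, elapsed_ms):
        self.samples.append(elapsed_ms)  # memory only: no disk I/O per request

    def _bucket(self, ms):
        for upper in self.buckets_ms:
            if ms <= upper:
                return f"<={upper}ms"
        return f">{self.buckets_ms[-1]}ms"

    def flush(self):
        """Summarize and clear the buffered samples; returns None if nothing was recorded."""
        if not self.samples:
            return None
        counts = Counter(self._bucket(ms) for ms in self.samples)
        line = (f"count={len(self.samples)} "
                f"avg={statistics.mean(self.samples):.1f}ms buckets={dict(counts)}")
        self.samples.clear()
        return line


agg = ResponseTimeAggregator()
for ms in (5, 15, 40, 120):
    agg.record(ms)
summary_line = agg.flush()
print(summary_line)
```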

Summary

This article covered several caching strategies and the scenarios each one suits. What I want you to take away is:

Cache Aside is the strategy we use most often with distributed caches; you can apply it directly in your work;

Read/Write Through and Write Back need support from the cache component, so they are better suited to cases where you are implementing a local cache component;

Write Back is a strategy from computer architecture, but its write-path idea, writing only to the cache and writing to back-end storage asynchronously, has many application scenarios.


边栏推荐

- Wargames study notes -- Leviathan

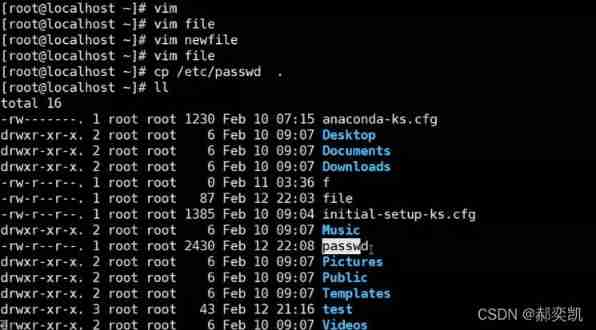

- Commands related to files and directories

- Battle drag method 1: moderately optimistic, build self-confidence (1)

- Oak-d raspberry pie cloud project [with detailed code]

- TLS environment construction and plaintext analysis

- MySQL learning notes - single table query

- Global and Chinese market of high temperature Silver sintering paste 2022-2028: Research Report on technology, participants, trends, market size and share

- P5.js development - setting

- Basic knowledge of dictionaries and collections

- Fingerprint password lock based on Hal Library

猜你喜欢

强基计划 数学相关书籍 推荐

IP address is such an important knowledge that it's useless to listen to a younger student?

How to modify the network IP addresses of mobile phones and computers?

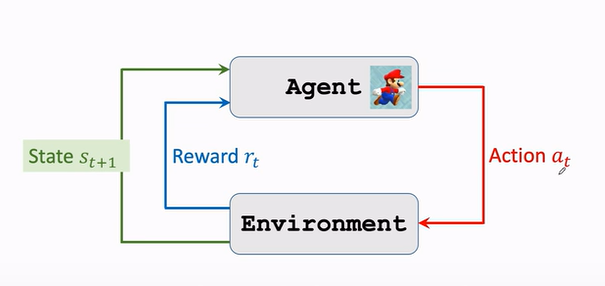

强化學習-學習筆記1 | 基礎概念

Gee calculated area

In 2021, the global revenue of syphilis rapid detection kits was about US $608.1 million, and it is expected to reach US $712.9 million in 2028

1.4 learn more about functions

Rhcsa third day notes

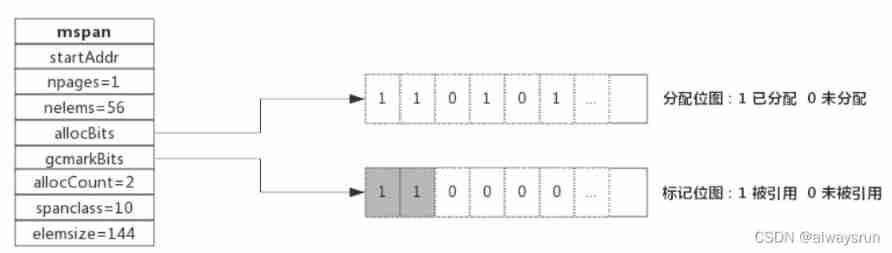

Introduction to golang garbage collection

In 2021, the global revenue of thick film resistors was about $1537.3 million, and it is expected to reach $2118.7 million in 2028

随机推荐

Global and Chinese market of high purity copper foil 2022-2028: Research Report on technology, participants, trends, market size and share

Micro service knowledge sorting - three pieces of micro Service Technology

Battle drag method 1: moderately optimistic, build self-confidence (1)

Viewing Chinese science and technology from the Winter Olympics (II): when snowmaking breakthrough is in progress

How to do Taobao full screen rotation code? Taobao rotation tmall full screen rotation code

JVM JNI and PVM pybind11 mass data transmission and optimization

Global and Chinese markets of active matrix LCD 2022-2028: Research Report on technology, participants, trends, market size and share

Qtablewidget control of QT

Change deepin to Alibaba image source

Global and Chinese market of two in one notebook computers 2022-2028: Research Report on technology, participants, trends, market size and share

2022 high voltage electrician examination and high voltage electrician reexamination examination

AI enhanced safety monitoring project [with detailed code]

Research Report on the overall scale, major manufacturers, major regions, products and application segmentation of rotary tablet presses in the global market in 2022

Global and Chinese markets for medical temperature sensors 2022-2028: Research Report on technology, participants, trends, market size and share

Basic number theory -- Chinese remainder theorem

Global and Chinese market of rubidium standard 2022-2028: Research Report on technology, participants, trends, market size and share

Explore the internal mechanism of modern browsers (I) (original translation)

Basic command of IP address configuration ---ip V4

Machine learning support vector machine SVM

How can the outside world get values when using nodejs to link MySQL